1. Overture

The era of siloed development is ending. The next wave of technological evolution is not about solitary genius, but about collaborative mastery. Building a single, clever agent is a fascinating experiment. Building a robust, secure, and intelligent ecosystem of agents—a true Agentverse—is the grand challenge for the modern enterprise.

Success in this new era requires the convergence of four critical roles, the foundational pillars that support any thriving agentic system. A deficiency in any one area creates a weakness that can compromise the entire structure.

This workshop is the definitive enterprise playbook for mastering the agentic future on Google Cloud. We provide an end-to-end roadmap that guides you from the first vibe of an idea to a full-scale, operational reality. Across these four interconnected labs, you will learn how the specialized skills of a developer, architect, data engineer, and SRE must converge to create, manage, and scale a powerful Agentverse.

No single pillar can support the Agentverse alone. The Architect's grand design is useless without the Developer's precise execution. The Developer's agent is blind without the Data Engineer's wisdom, and the entire system is fragile without the SRE's protection. Only through synergy and a shared understanding of each other's roles can your team transform an innovative concept into a mission-critical, operational reality. Your journey begins here. Prepare to master your role and learn how you fit into the greater whole.

Welcome to The Agentverse: A Call to Champions

In the sprawling digital expanse of the enterprise, a new era has dawned. It is the agentic age, a time of immense promise, where intelligent, autonomous agents work in perfect harmony to accelerate innovation and sweep away the mundane.

This connected ecosystem of power and potential is known as The Agentverse.

But a creeping entropy, a silent corruption known as The Static, has begun to fray the edges of this new world. The Static is not a virus or a bug; it is the embodiment of chaos that preys on the very act of creation.

It amplifies old frustrations into monstrous forms, giving birth to the Seven Spectres of Development. If left unchecked, The Static and its Spectres will grind progress to a halt, turning the promise of the Agentverse into a wasteland of technical debt and abandoned projects.

Today, we issue a call for champions to push back the tide of chaos. We need heroes willing to master their craft and work together to protect the Agentverse. The time has come to choose your path.

Choose Your Class

Four distinct paths lie before you, each a critical pillar in the fight against The Static. Though your training will be a solo mission, your ultimate success depends on understanding how your skills combine with others.

- The Shadowblade (Developer): A master of the forge and the front line. You are the artisan who crafts the blades, builds the tools, and faces the enemy in the intricate details of the code. Your path is one of precision, skill, and practical creation.

- The Summoner (Architect): A grand strategist and orchestrator. You do not see a single agent, but the entire battlefield. You design the master blueprints that allow entire systems of agents to communicate, collaborate, and achieve a goal far greater than any single component.

- The Scholar (Data Engineer): A seeker of hidden truths and the keeper of wisdom. You venture into the vast, untamed wilderness of data to uncover the intelligence that gives your agents purpose and sight. Your knowledge can reveal an enemy's weakness or empower an ally.

- The Guardian (DevOps / SRE): The steadfast protector and shield of the realm. You build the fortresses, manage the supply lines of power, and ensure the entire system can withstand the inevitable attacks of The Static. Your strength is the foundation upon which your team's victory is built.

Your Mission

Your training will begin as a standalone exercise. You will walk your chosen path, learning the unique skills required to master your role. At the end of your trial, you will face a Spectre born of The Static—a mini-boss that preys on the specific challenges of your craft.

Only by mastering your individual role can you prepare for the final trial. You must then form a party with champions from the other classes. Together, you will venture into the heart of the corruption to face an ultimate boss.

A final, collaborative challenge that will test your combined strength and determine the fate of the Agentverse.

The Agentverse awaits its heroes. Will you answer the call?

2. The Scholar's Grimoire

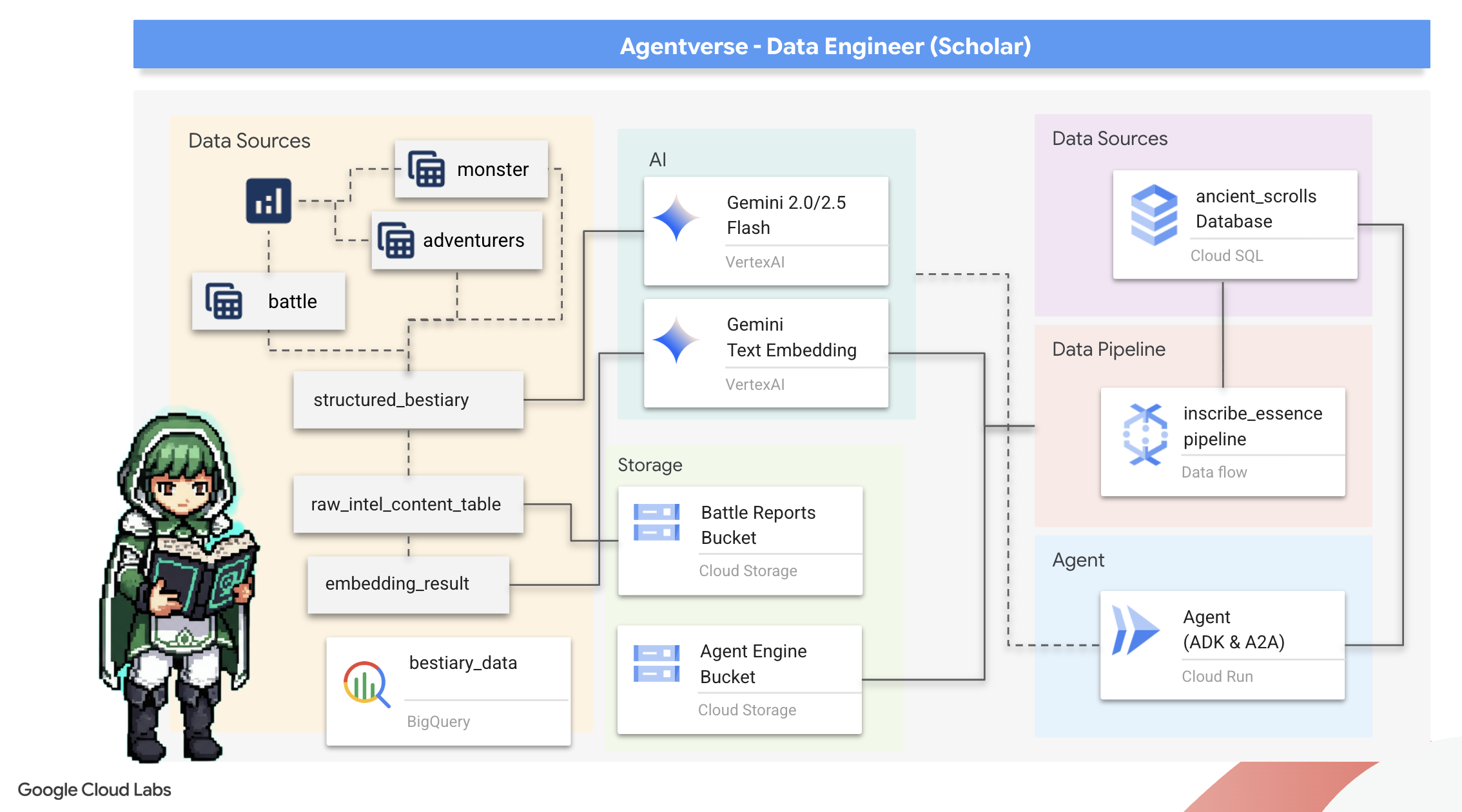

Our journey begins! As Scholars, our primary weapon is knowledge. We've discovered a trove of ancient, cryptic scrolls in our archives (Google Cloud Storage). These scrolls contain raw intelligence on the fearsome beasts plaguing the land. Our mission is to use the profound analytical magic of Google BigQuery and the wisdom of a Gemini Elder Brain (Gemini Pro model) to decipher these unstructured texts and forge them into a structured, queryable Bestiary. This will be the foundation of all our future strategies.

What you'll learn

- Use BigQuery to create external tables and perform complex unstructured-to-structured transformations using ML.GENERATE_TEXT with a Gemini model.

- Provision a Cloud SQL for PostgreSQL instance and enable the pgvector extension for semantic search capabilities.

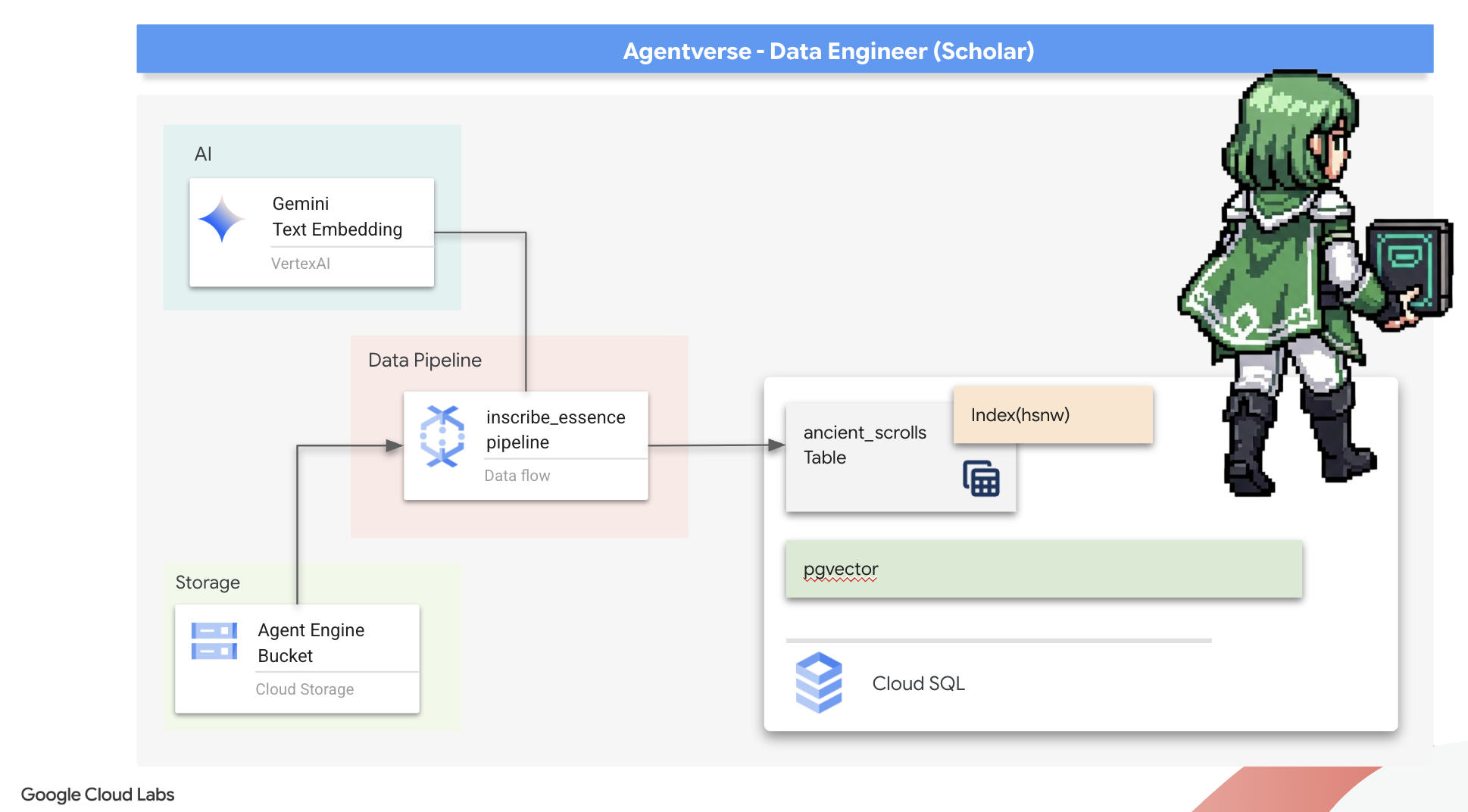

- Build a robust, containerized batch pipeline using Dataflow and Apache Beam to process raw text files, generate vector embeddings with a Gemini model, and write the results to a relational database.

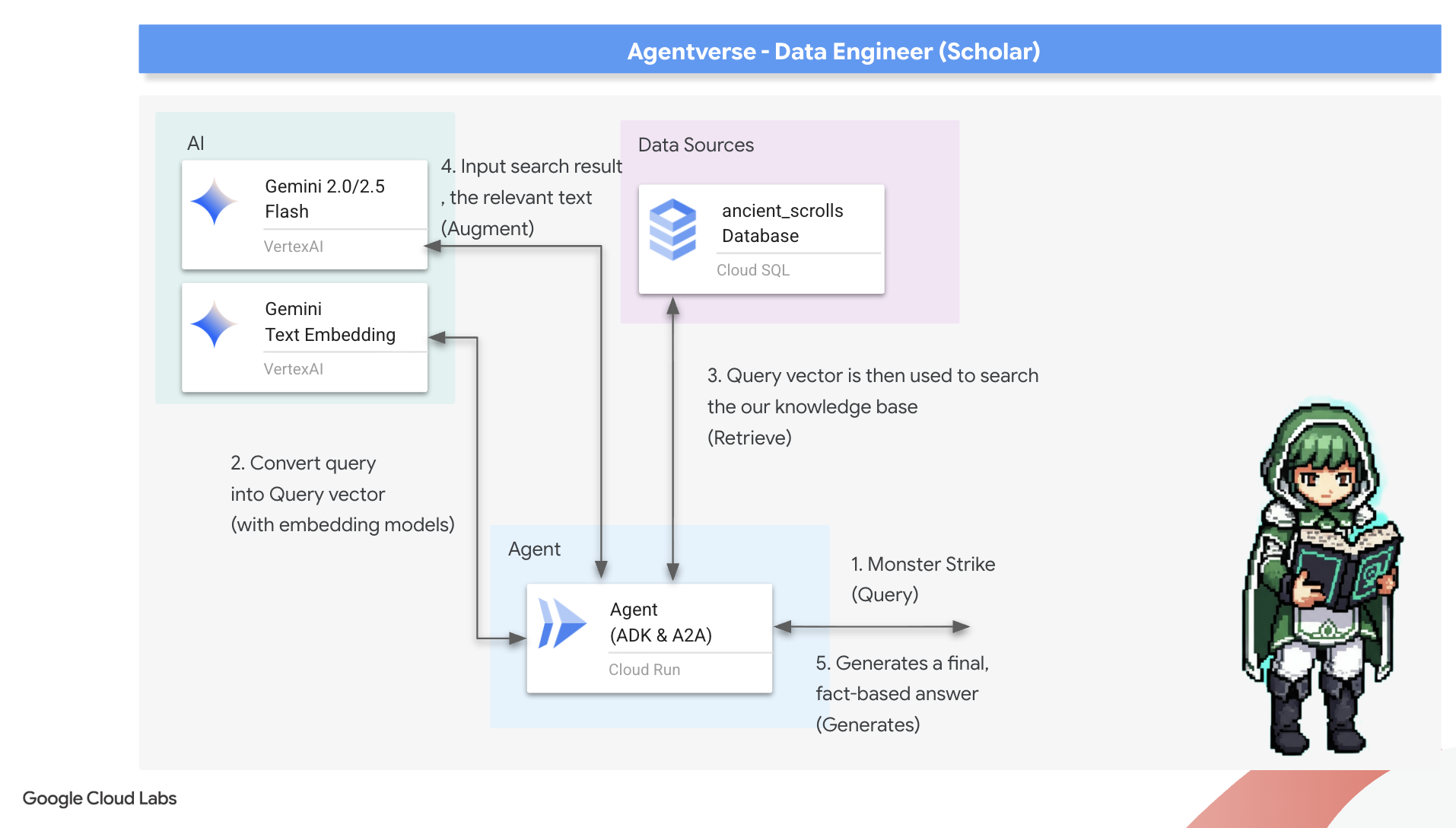

- Implement a basic Retrieval-Augmented Generation (RAG) system within an agent to query the vectorized data.

- Deploy a data-aware agent as a secure, scalable service on Cloud Run.

3. Preparing the Scholar's Sanctum

Welcome, Scholar. Before we can begin inscribing the powerful knowledge of our Grimoire, we must first prepare our sanctum. This foundational ritual involves enchanting our Google Cloud environment, opening the right portals (APIs), and creating the conduits through which our data magic will flow. A well-prepared sanctum ensures our spells are potent and our knowledge is secure.

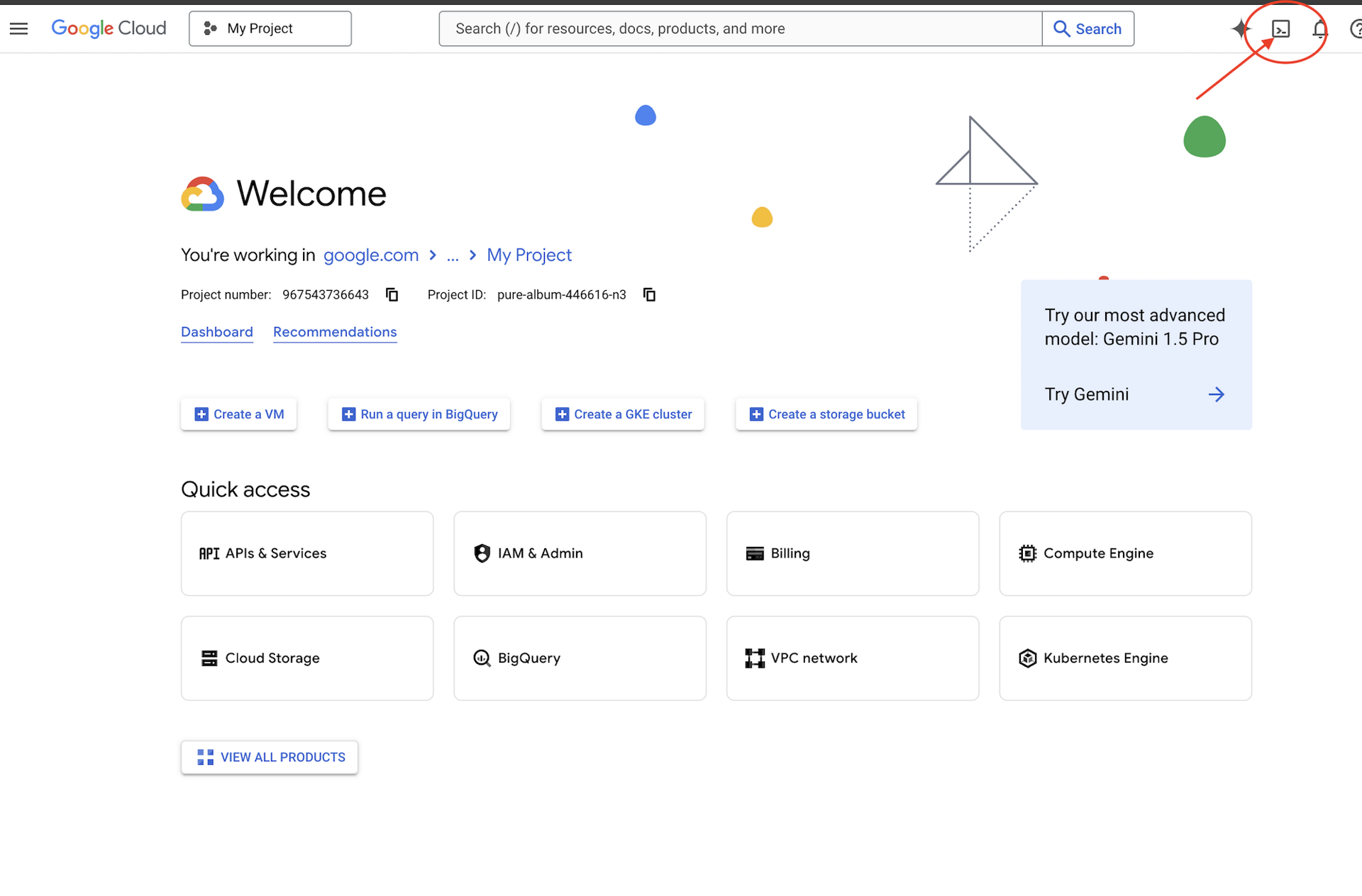

👉 Click Activate Cloud Shell at the top of the Google Cloud console (it's the terminal-shaped icon at the top of the Cloud Shell pane).

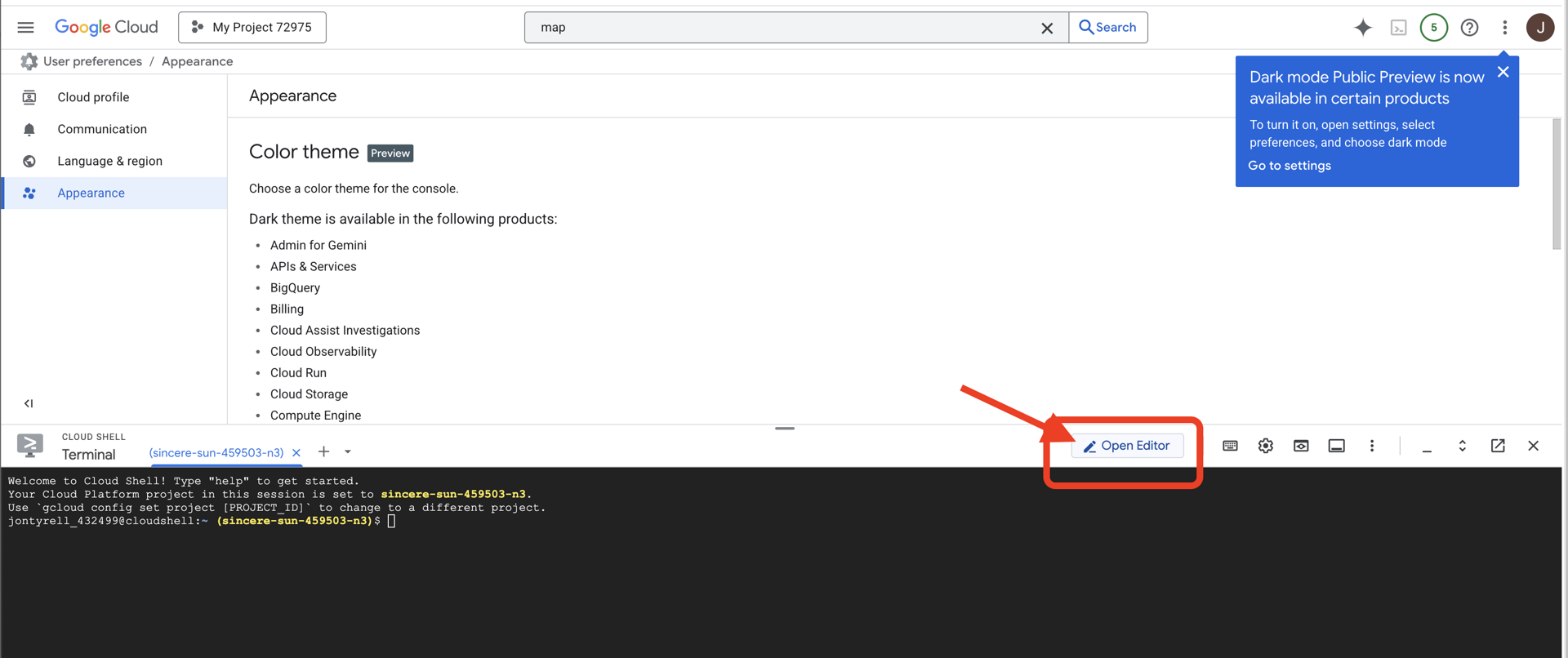

👉Click on the "Open Editor" button (it looks like an open folder with a pencil). This will open the Cloud Shell Code Editor in the window. You'll see a file explorer on the left side.

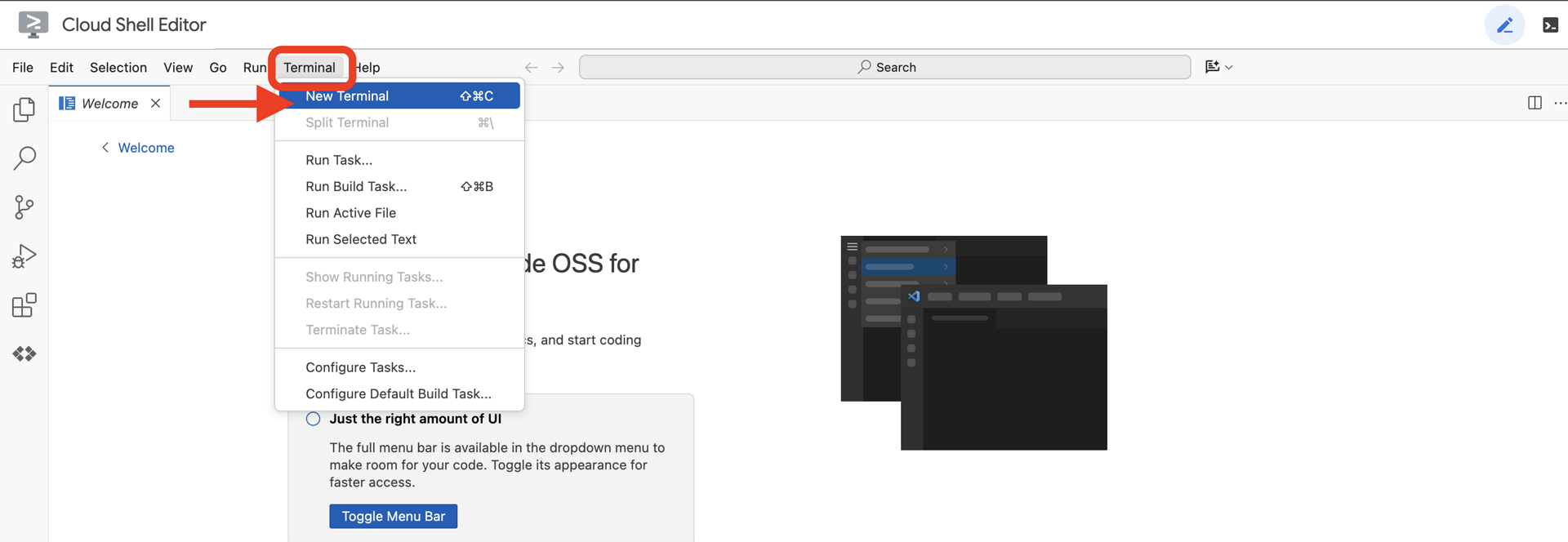

👉 Open the terminal in the cloud IDE.

👉💻 In the terminal, verify that you're already authenticated and that the project is set to your project ID using the following command:

gcloud auth list

👉💻Clone the bootstrap project from GitHub:

git clone https://github.com/weimeilin79/agentverse-dataengineer

chmod +x ~/agentverse-dataengineer/init.sh

chmod +x ~/agentverse-dataengineer/set_env.sh

chmod +x ~/agentverse-dataengineer/data_setup.sh

git clone https://github.com/weimeilin79/agentverse-dungeon.git

chmod +x ~/agentverse-dungeon/run_cloudbuild.sh

chmod +x ~/agentverse-dungeon/start.sh

👉💻 Run the setup script from the project directory.

⚠️ Note on Project ID: The script will suggest a randomly generated default Project ID. You can press Enter to accept this default.

However, if you prefer to create a specific new project, you can type your desired Project ID when prompted by the script.

cd ~/agentverse-dataengineer

./init.sh

👉 Important Step After Completion: Once the script finishes, you must ensure your Google Cloud Console is viewing the correct project:

- Go to console.cloud.google.com.

- Click the project selector dropdown at the top of the page.

- Click the "All" tab (as the new project might not appear in "Recent" yet).

- Select the Project ID you just configured in the init.sh step.

👉💻 Set the Project ID needed:

gcloud config set project $(cat ~/project_id.txt) --quiet

👉💻 Run the following command to enable the necessary Google Cloud APIs:

gcloud services enable \

storage.googleapis.com \

bigquery.googleapis.com \

sqladmin.googleapis.com \

aiplatform.googleapis.com \

dataflow.googleapis.com \

pubsub.googleapis.com \

cloudfunctions.googleapis.com \

run.googleapis.com \

cloudbuild.googleapis.com \

artifactregistry.googleapis.com \

iam.googleapis.com \

compute.googleapis.com \

cloudresourcemanager.googleapis.com \

cloudaicompanion.googleapis.com \

bigqueryunified.googleapis.com

👉💻 If you have not already created an Artifact Registry repository named agentverse-repo, run the following command to create it:

. ~/agentverse-dataengineer/set_env.sh

gcloud artifacts repositories create $REPO_NAME \

--repository-format=docker \

--location=$REGION \

--description="Repository for Agentverse agents"

Setting up permissions

👉💻 Grant the necessary permissions by running the following commands in the terminal:

. ~/agentverse-dataengineer/set_env.sh

# --- Grant Core Data Permissions ---

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/storage.admin"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/bigquery.admin"

# --- Grant Data Processing & AI Permissions ---

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/dataflow.admin"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/cloudsql.admin"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/pubsub.admin"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/aiplatform.user"

# --- Grant Deployment & Execution Permissions ---

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/cloudbuild.builds.editor"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/artifactregistry.admin"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/run.admin"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/iam.serviceAccountUser"

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member="serviceAccount:$SERVICE_ACCOUNT_NAME" \

--role="roles/logging.logWriter"


👉💻 As you begin your training, we will prepare the final challenge. The following commands will summon the Spectres from the chaotic static, creating the bosses for your final test.

. ~/agentverse-dataengineer/set_env.sh

cd ~/agentverse-dungeon

./run_cloudbuild.sh

cd ~/agentverse-dataengineer

Excellent work, Scholar. The foundational enchantments are complete. Our sanctum is secure, the portals to the elemental forces of data are open, and our servitor is empowered. We are now ready to begin the real work.

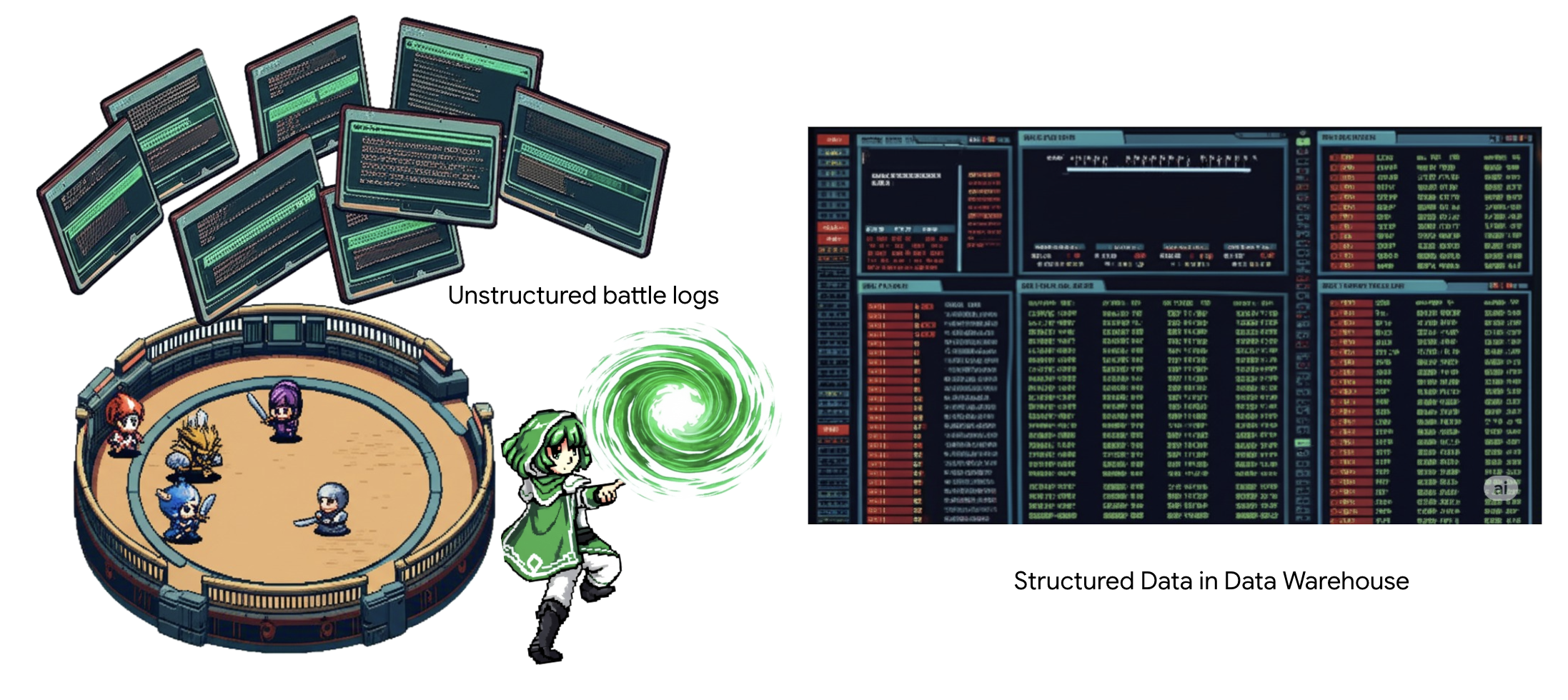

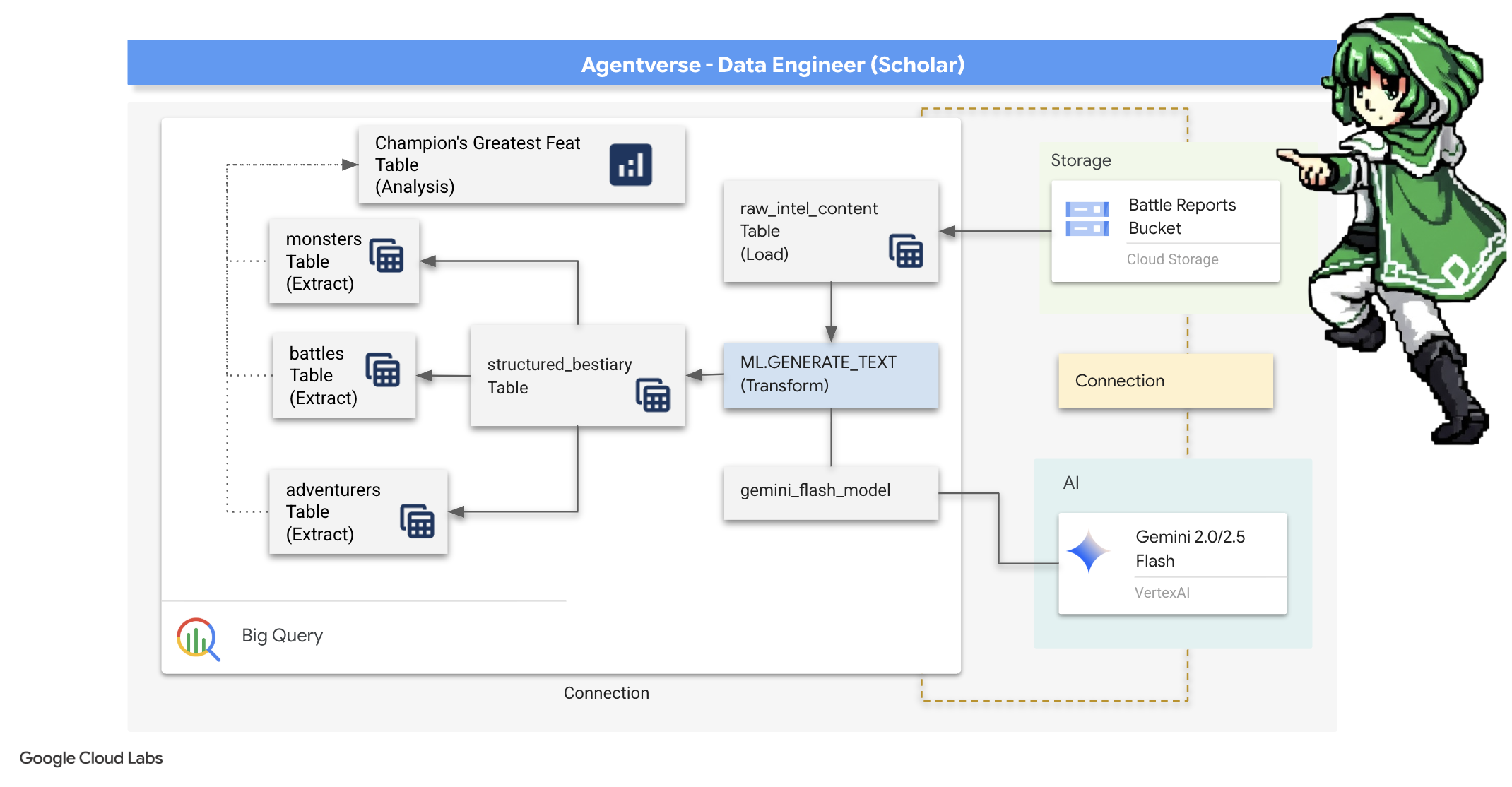

4. The Alchemy of Knowledge: Transforming Data with BigQuery & Gemini

In the ceaseless war against The Static, every confrontation between a Champion of the Agentverse and a Spectre of Development is meticulously recorded. The Battleground Simulation system, our primary training environment, automatically generates an Aetheric Log Entry for each encounter. These narrative logs are our most valuable source of raw intelligence, the unrefined ore from which we, as Scholars, must forge the pristine steel of strategy. The true power of a Scholar lies not in merely possessing data, but in the ability to transmute the raw, chaotic ore of information into the gleaming, structured steel of actionable wisdom. We will perform the foundational ritual of data alchemy.

Our journey will take us through a multi-stage process entirely within the sanctum of Google BigQuery. We will begin by gazing into our GCS archive without moving a single scroll, using a magical lens. Then, we will summon a Gemini to read and interpret the poetic, unstructured sagas of battle logs. Finally, we will refine the raw prophecies into a set of pristine, interconnected tables, our first Grimoire, and ask of it a question so profound it could only be answered by this newfound structure.

The Lens of Scrutiny: Peering into GCS with BigQuery External Tables

Our first act is to forge a lens that allows us to see the contents of our GCS archive without disturbing the scrolls within. An External Table is this lens, mapping the raw text files to a table-like structure that BigQuery can query directly.

To do this, we must first create a stable ley line of power, a CONNECTION resource, that securely links our BigQuery sanctum to the GCS archive.

👉💻 In your Cloud Shell terminal, run the following commands to set up the storage and forge the conduit:

. ~/agentverse-dataengineer/set_env.sh

. ~/agentverse-dataengineer/data_setup.sh

bq mk --connection \

--connection_type=CLOUD_RESOURCE \

--project_id=${PROJECT_ID} \

--location=${REGION} \

gcs-connection

💡 Heads Up! A Message Will Appear Later!

The setup script from step 2 started a process in the background. After a few minutes, a message will pop up in your terminal that looks similar to this:

[1]+ Done gcloud sql instances create ...

This is normal and expected. It simply means your Cloud SQL database has been successfully created. You can safely ignore this message and continue working.

Before you can create the External Table, you must first create the dataset that will contain it.

👉💻 Run this one simple command in your Cloud Shell terminal:

. ~/agentverse-dataengineer/set_env.sh

bq --location=${REGION} mk --dataset ${PROJECT_ID}:bestiary_data

👉💻 Now we must grant the conduit's magical signature the necessary permissions to read from the GCS Archive and consult Gemini.

. ~/agentverse-dataengineer/set_env.sh

export CONNECTION_SA=$(bq show --connection --project_id=${PROJECT_ID} --location=${REGION} --format=json gcs-connection | jq -r '.cloudResource.serviceAccountId')

echo "The Conduit's Magical Signature is: $CONNECTION_SA"

echo "Granting key to the GCS Archive..."

gcloud storage buckets add-iam-policy-binding gs://${PROJECT_ID}-reports \

--member="serviceAccount:$CONNECTION_SA" \

--role="roles/storage.objectViewer"

gcloud projects add-iam-policy-binding ${PROJECT_ID} \

--member="serviceAccount:$CONNECTION_SA" \

--role="roles/aiplatform.user"

👉💻 In your Cloud Shell terminal, run the following command to display your bucket name:

echo $BUCKET_NAME

Your terminal will display a name similar to your-project-id-gcs-bucket. You will need this in the next steps.

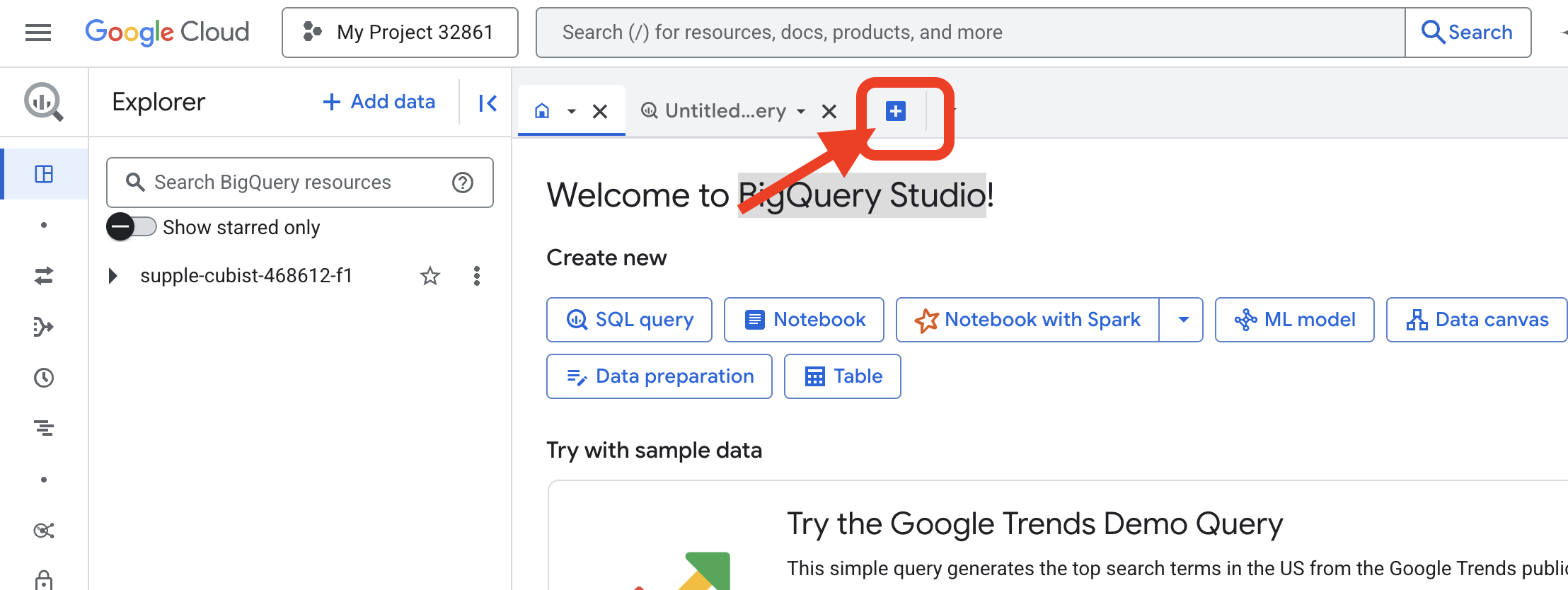

👉 You'll need to run the next command from within the BigQuery query editor in the Google Cloud Console. The easiest way to get there is to open the link below in a new browser tab. It will take you directly to the correct page in the Google Cloud Console.

https://console.cloud.google.com/bigquery

👉 Once the page loads, click the blue + button (Compose a new query) to open a fresh editor tab.

Now we write the Data Definition Language (DDL) incantation to create our magical lens. This tells BigQuery where to look and what to see.

👉📜 In the BigQuery query editor you opened, paste the following SQL. Remember to replace REPLACE-WITH-YOUR-BUCKET-NAME with the bucket name you just copied, then click Run:

CREATE OR REPLACE EXTERNAL TABLE bestiary_data.raw_intel_content_table (

raw_text STRING

)

OPTIONS (

format = 'CSV',

-- This is a trick to load each line of the text files as a single row.

field_delimiter = '§',

uris = ['gs://REPLACE-WITH-YOUR-BUCKET-NAME/raw_intel/*']

);

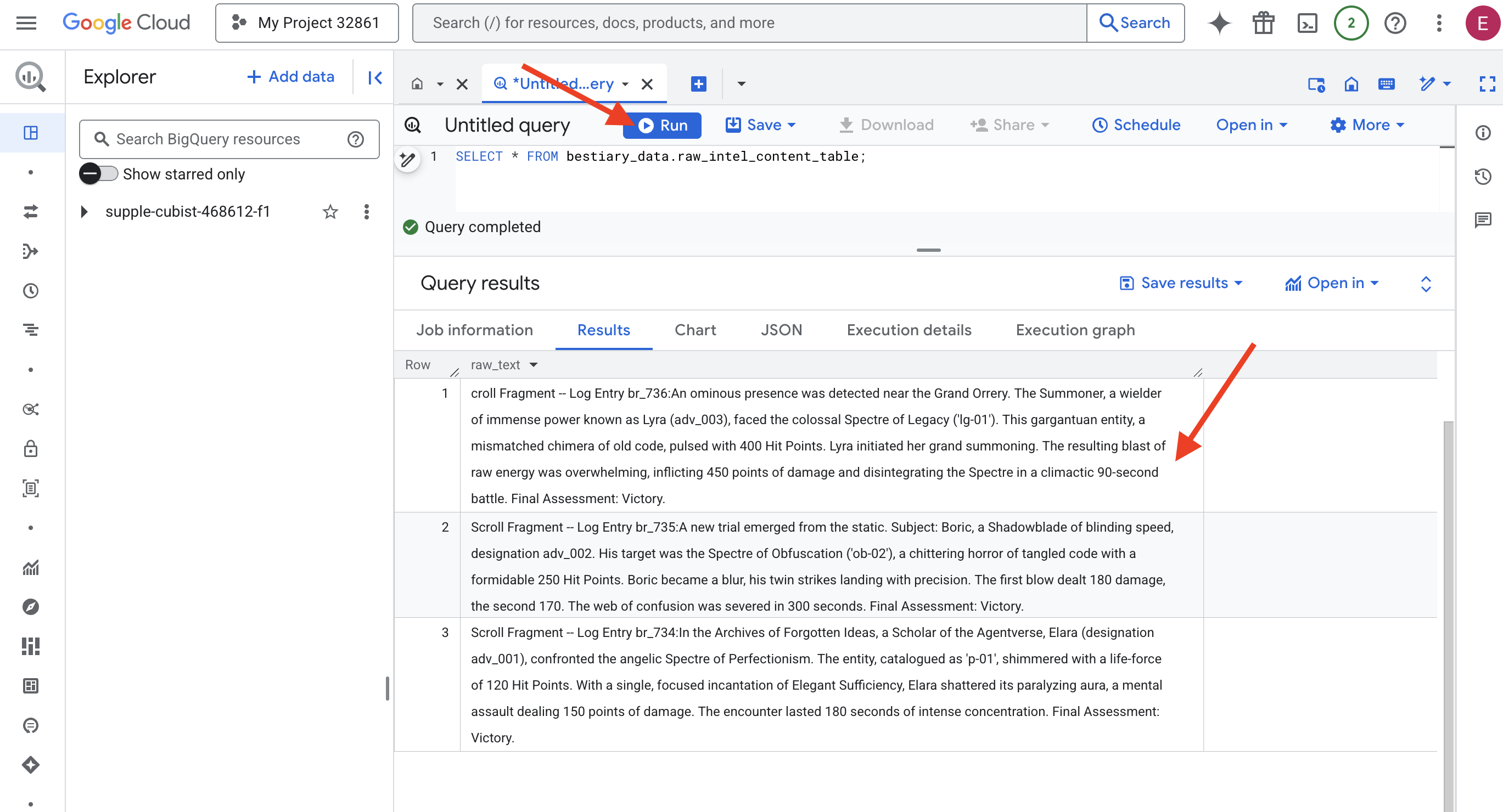

👉📜 Run a query to "look through the lens" and see the content of the files.

SELECT * FROM bestiary_data.raw_intel_content_table;
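The delimiter trick used in the DDL above can be reproduced locally to see why it works. The following is a minimal Python sketch, not part of the workshop repository: the two sample lines are invented, and Python's csv module only approximates BigQuery's CSV parser (quoting rules differ). It shows that a delimiter that never occurs in the text leaves every line as a single field.

```python
import csv
import io

# Hypothetical sample: two report lines that contain commas but no '§'.
sample_text = (
    "Elara, a Scholar, defeated the Spectre in 180 seconds.\n"
    "Final Assessment: Victory, with no casualties.\n"
)

# With ',' as the delimiter, each line is split into several fields.
comma_rows = list(csv.reader(io.StringIO(sample_text), delimiter=","))

# With a delimiter that never appears in the text, each line stays one field.
single_column_rows = list(
    csv.reader(io.StringIO(sample_text), delimiter="§", quoting=csv.QUOTE_NONE)
)

for row in single_column_rows:
    print(len(row), row[0])
```

If a scroll ever did contain the '§' character, that line would split into more than one field, so the trick relies on the delimiter being absent from the source text.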

Our lens is in place. We can now see the raw text of the scrolls. But reading is not understanding.

In the Archives of Forgotten Ideas, a Scholar of the Agentverse, Elara (designation adv_001), confronted the angelic Spectre of Perfectionism. The entity, catalogued as 'p-01', shimmered with a life-force of 120 Hit Points. With a single, focused incantation of Elegant Sufficiency, Elara shattered its paralyzing aura, a mental assault dealing 150 points of damage. The encounter lasted 180 seconds of intense concentration. Final Assessment: Victory.

The scrolls are not written in tables and rows, but in the winding prose of sagas. This is our first great test.

The Scholar's Divination: Turning Text into a Table with SQL

The challenge is that a report detailing the rapid, twin attacks of a Shadowblade reads very differently from the chronicle of a Summoner gathering immense power for a single, devastating blast. We cannot simply import this data; we must interpret it. This is the moment of magic. We will use a single SQL query as a powerful incantation to read, understand, and structure all the records from all our files, right inside BigQuery.

👉💻 Back in your Cloud Shell terminal, run the following command to display your connection name:

echo "${PROJECT_ID}.${REGION}.gcs-connection"

Your terminal will display the complete connection string. Select and copy this entire string; you will need it in the next step.

We will use a single, powerful incantation: ML.GENERATE_TEXT. This spell summons a Gemini, shows it each scroll, and commands it to return the core facts as a structured JSON object.

👉📜 In BigQuery Studio, create the Gemini Model Reference. This binds the Gemini Flash oracle to our BigQuery library so we can call it in our queries. Remember to replace REPLACE-WITH-YOUR-FULL-CONNECTION-STRING with the full connection string you just copied from your terminal.

CREATE OR REPLACE MODEL bestiary_data.gemini_flash_model

REMOTE WITH CONNECTION `REPLACE-WITH-YOUR-FULL-CONNECTION-STRING`

OPTIONS (endpoint = 'gemini-2.5-flash');

👉📜 Now, cast the grand transmutation spell. This query reads the raw text, constructs a detailed prompt for each scroll, sends it to Gemini, and builds a new staging table from the AI's structured JSON response.

CREATE OR REPLACE TABLE bestiary_data.structured_bestiary AS

SELECT

-- ml_generate_text_result is already a JSON value, so no PARSE_JSON call is needed here.

ml_generate_text_result AS structured_data

FROM

ML.GENERATE_TEXT(

-- Our bound Gemini Flash model.

MODEL bestiary_data.gemini_flash_model,

-- Our perfectly constructed input, with the prompt built for each row.

(

SELECT

CONCAT(

"""

From the following text, extract structured data into a single, valid JSON object.

Your output must strictly conform to the following JSON structure and data types. Do not add, remove, or change any keys.

{

"monster": {

"monster_id": "string",

"name": "string",

"type": "string",

"hit_points": "integer"

},

"battle": {

"battle_id": "string",

"monster_id": "string",

"adventurer_id": "string",

"outcome": "string",

"duration_seconds": "integer"

},

"adventurer": {

"adventurer_id": "string",

"name": "string",

"class": "string"

}

}

**CRUCIAL RULES:**

- Do not output any text, explanations, conversational filler, or markdown formatting like ` ```json` before or after the JSON object.

- Your entire response must be ONLY the raw JSON object itself.

Here is the text:

""",

raw_text -- We append the actual text of the report here.

) AS prompt -- The final column is still named 'prompt', as the oracle requires.

FROM

bestiary_data.raw_intel_content_table

),

-- The STRUCT contains only model parameters.

STRUCT(

0.2 AS temperature,

2048 AS max_output_tokens

)

);
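The per-row prompt construction in the query above can be pictured with a small Python sketch. It is only an illustration under stated assumptions: the instruction text is abbreviated, the two input rows are invented, and build_prompt is a hypothetical helper name. The point is that CONCAT produces one complete prompt per scroll, with the scroll text appended at the end.

```python
# Abbreviated stand-in for the instruction text used in the SQL prompt.
INSTRUCTIONS = (
    "From the following text, extract structured data into a single, "
    "valid JSON object.\n"
    "Here is the text:\n"
)


def build_prompt(raw_text: str) -> str:
    """Mirror CONCAT(instructions, raw_text): one prompt per input row."""
    return INSTRUCTIONS + raw_text


# Hypothetical rows standing in for raw_intel_content_table.
rows = [
    "Elara (adv_001) confronted the Spectre of Perfectionism (p-01).",
    "The encounter lasted 180 seconds. Final Assessment: Victory.",
]

# Each output row carries a single 'prompt' column, as in the subquery.
prompts = [{"prompt": build_prompt(text)} for text in rows]
print(prompts[0]["prompt"])
```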

The transmutation is complete, but the result is not yet pure. The Gemini model returns its answer in a standard format, wrapping our desired JSON inside a larger structure that includes metadata about its thought process. Let us look upon this raw prophecy before we attempt to purify it.

👉📜 Run a query to inspect the raw output from the Gemini model:

SELECT * FROM bestiary_data.structured_bestiary;

👀 You will see a single column named structured_data. The content for each row will look similar to this complex JSON object:

{"candidates":[{"avg_logprobs":-0.5691758094475283,"content":{"parts":[{"text":"```json\n{\n \"monster\": {\n \"monster_id\": \"gw_02\",\n \"name\": \"Gravewight\",\n \"type\": \"Gravewight\",\n \"hit_points\": 120\n },\n \"battle\": {\n \"battle_id\": \"br_735\",\n \"monster_id\": \"gw_02\",\n \"adventurer_id\": \"adv_001\",\n \"outcome\": \"Defeat\",\n \"duration_seconds\": 45\n },\n \"adventurer\": {\n \"adventurer_id\": \"adv_001\",\n \"name\": \"Elara\",\n \"class\": null\n }\n}\n```"}],"role":"model"},"finish_reason":"STOP","score":-97.32906341552734}],"create_time":"2025-07-28T15:53:24.482775Z","model_version":"gemini-2.5-flash","response_id":"9JyHaNe7HZ2WhMIPxqbxEQ","usage_metadata":{"billable_prompt_usage":{"text_count":640},"candidates_token_count":171,"candidates_tokens_details":[{"modality":"TEXT","token_count":171}],"prompt_token_count":207,"prompt_tokens_details":[{"modality":"TEXT","token_count":207}],"thoughts_token_count":1014,"total_token_count":1392,"traffic_type":"ON_DEMAND"}}

As you can see, our prize—the clean JSON object we requested—is nested deep within this structure. Our next task is clear. We must perform a ritual to systematically navigate this structure and extract the pure wisdom within.
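To make the nesting concrete, here is a minimal Python sketch that walks the same path through a shortened, hypothetical response and recovers the embedded object. The helper name extract_report and the sample payload are assumptions for illustration. The regular expression keeps everything from the first '{' to the last '}', which also discards a markdown fence if the model added one despite the instructions.

```python
import json
import re

FENCE = "`" * 3  # the markdown code-fence marker the model sometimes adds

# Shortened, hypothetical response with the same nesting as the sample above.
payload = {"monster": {"monster_id": "gw_02", "name": "Gravewight", "hit_points": 120}}
response = {
    "candidates": [
        {
            "content": {
                "parts": [{"text": f"{FENCE}json\n{json.dumps(payload)}\n{FENCE}"}],
                "role": "model",
            },
            "finish_reason": "STOP",
        }
    ]
}


def extract_report(response: dict):
    """Return the embedded JSON object, or None if it cannot be recovered."""
    try:
        text = response["candidates"][0]["content"]["parts"][0]["text"]
    except (KeyError, IndexError, TypeError):
        return None
    # Keep everything from the first '{' to the last '}', dropping any fence.
    match = re.search(r"\{[\s\S]*\}", text)
    if match is None:
        return None
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError:
        return None


report = extract_report(response)
print(report["monster"]["name"])
```

Returning None on any failure, rather than raising, keeps one malformed response from stopping the processing of the remaining scrolls.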

The Ritual of Cleansing: Normalizing GenAI Output with SQL

The Gemini has spoken, but its words are raw and wrapped in the ethereal energies of its creation (candidates, finish_reason, etc.). A true Scholar does not simply shelve the raw prophecy; they carefully extract the core wisdom and scribe it into the appropriate tomes for future use.

We will now cast our final set of spells. This single script will:

- Read the raw, nested JSON from our staging table.

- Cleanse and parse it to get to the core data.

- Scribe the relevant pieces into three final, pristine tables: monsters, adventurers, and battles.

👉📜 In a new BigQuery query editor, run the following spell to create the monsters table, our Bestiary:

CREATE OR REPLACE TABLE bestiary_data.monsters AS

WITH

CleanedDivinations AS (

SELECT

SAFE.PARSE_JSON(

REGEXP_EXTRACT(

JSON_VALUE(structured_data, '$.candidates[0].content.parts[0].text'),

r'\{[\s\S]*\}'

)

) AS report_data

FROM

bestiary_data.structured_bestiary

)

SELECT

JSON_VALUE(report_data, '$.monster.monster_id') AS monster_id,

JSON_VALUE(report_data, '$.monster.name') AS name,

JSON_VALUE(report_data, '$.monster.type') AS type,

SAFE_CAST(JSON_VALUE(report_data, '$.monster.hit_points') AS INT64) AS hit_points

FROM

CleanedDivinations

WHERE

report_data IS NOT NULL

QUALIFY ROW_NUMBER() OVER (PARTITION BY monster_id ORDER BY name) = 1;
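The deduplication step at the end of the query above (keep the row numbered 1 within each monster_id partition) can be sketched in Python as follows. The sample rows and the helper name first_per_key are hypothetical, and the sketch assumes names are never NULL, which the SQL does not require.

```python
# Hypothetical extracted rows; the same monster appears in two reports.
rows = [
    {"monster_id": "gw_02", "name": "Gravewight", "hit_points": 120},
    {"monster_id": "p-01", "name": "Spectre of Perfectionism", "hit_points": 120},
    {"monster_id": "gw_02", "name": "Gravewight", "hit_points": 120},
]


def first_per_key(rows, key_field, order_field):
    """Keep one row per key_field value: the first after sorting by order_field."""
    kept = {}
    for row in sorted(rows, key=lambda r: r[order_field]):
        kept.setdefault(row[key_field], row)  # later duplicates are ignored
    return list(kept.values())


monsters = first_per_key(rows, key_field="monster_id", order_field="name")
print(monsters)
```

Because many battle reports mention the same monster, this step is what turns a list of sightings into a table with one row per creature.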

👉📜 Verify the Bestiary:

SELECT * FROM bestiary_data.monsters;

Next, we will create our Roll of Champions, a list of the brave adventurers who have faced these beasts.

👉📜 In a new query editor, run the following spell to create the adventurers table:

CREATE OR REPLACE TABLE bestiary_data.adventurers AS

WITH

CleanedDivinations AS (

SELECT

SAFE.PARSE_JSON(

REGEXP_EXTRACT(

JSON_VALUE(structured_data, '$.candidates[0].content.parts[0].text'),

r'\{[\s\S]*\}'

)

) AS report_data

FROM

bestiary_data.structured_bestiary

)

SELECT

JSON_VALUE(report_data, '$.adventurer.adventurer_id') AS adventurer_id,

JSON_VALUE(report_data, '$.adventurer.name') AS name,

JSON_VALUE(report_data, '$.adventurer.class') AS class

FROM

CleanedDivinations

QUALIFY ROW_NUMBER() OVER (PARTITION BY adventurer_id ORDER BY name) = 1;

👉📜 Verify the Roll of Champions:

SELECT * FROM bestiary_data.adventurers;

Finally, we will create our fact table: the Chronicle of Battles. This tome links the other two, recording the details of each unique encounter. Since every battle is a unique event, no deduplication is needed.

👉📜 In a new query editor, run the following spell to create the battles table:

CREATE OR REPLACE TABLE bestiary_data.battles AS

WITH

CleanedDivinations AS (

SELECT

SAFE.PARSE_JSON(

REGEXP_EXTRACT(

JSON_VALUE(structured_data, '$.candidates[0].content.parts[0].text'),

r'\{[\s\S]*\}'

)

) AS report_data

FROM

bestiary_data.structured_bestiary

)

-- Extract the raw essence for all battle fields and cast where necessary.

SELECT

JSON_VALUE(report_data, '$.battle.battle_id') AS battle_id,

JSON_VALUE(report_data, '$.battle.monster_id') AS monster_id,

JSON_VALUE(report_data, '$.battle.adventurer_id') AS adventurer_id,

JSON_VALUE(report_data, '$.battle.outcome') AS outcome,

SAFE_CAST(JSON_VALUE(report_data, '$.battle.duration_seconds') AS INT64) AS duration_seconds

FROM

CleanedDivinations;

👉📜 Verify the Chronicle:

SELECT * FROM bestiary_data.battles;
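The queries above wrap numeric fields in SAFE_CAST so that a value the model failed to produce as a number becomes NULL instead of failing the whole statement. A rough Python analogue, with the hypothetical helper name safe_cast_int, looks like this. Python's int() and BigQuery's CAST do not accept exactly the same inputs, so treat it as an illustration of the idea rather than an exact equivalent.

```python
from typing import Optional


def safe_cast_int(value: Optional[str]) -> Optional[int]:
    """Return an int when the text parses as one, otherwise None."""
    if value is None:
        return None
    try:
        return int(value)
    except ValueError:
        return None


print(safe_cast_int("180"))
print(safe_cast_int("three minutes"))
```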

Uncovering Strategic Insights

The scrolls have been read, the essence distilled, and the tomes inscribed. Our Grimoire is no longer just a collection of facts; it is a relational database of profound strategic wisdom. We can now ask questions that were impossible to answer when our knowledge was trapped in raw, unstructured text.

Let us now perform a final, grand divination. We will cast a spell that consults all three of our tomes at once—the Bestiary of Monsters, the Roll of Champions, and the Chronicle of Battles—to uncover a deep, actionable insight.

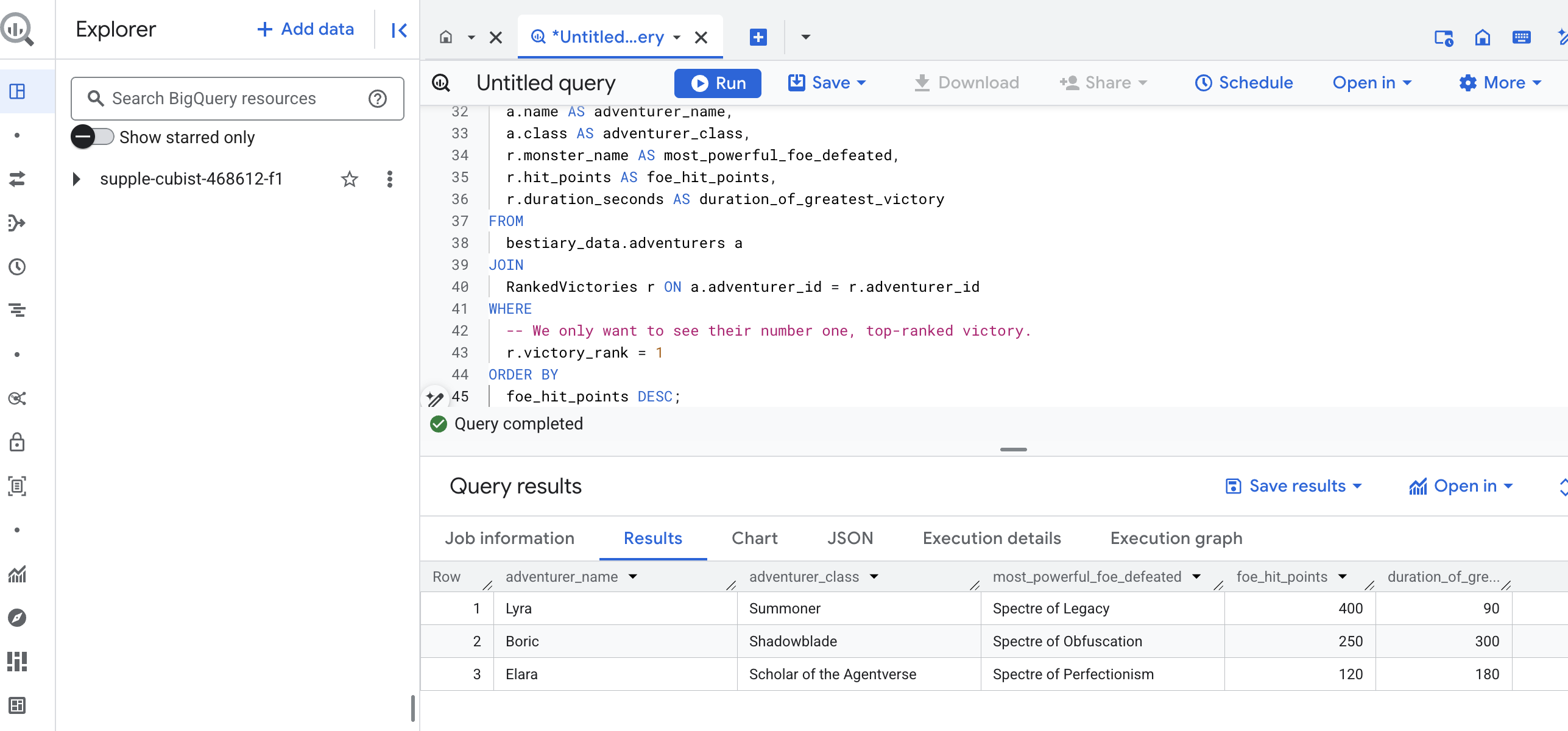

Our strategic question: "For each adventurer, what is the name of the most powerful monster (by hit points) they have successfully defeated, and how long did that specific victory take?"

This is a complex question that requires linking champions to their victorious battles, and those battles to the stats of the monsters involved. This is the true power of a structured data model.

👉📜 In a new BigQuery query editor, cast the following final incantation:

-- This is our final spell, joining all three tomes to reveal a deep insight.

WITH

-- First, we consult the Chronicle of Battles to find only the victories.

VictoriousBattles AS (

SELECT

adventurer_id,

monster_id,

duration_seconds

FROM

bestiary_data.battles

WHERE

outcome = 'Victory'

),

-- Next, we create a temporary record for each victory, ranking the monsters

-- each adventurer defeated by their power (hit points).

RankedVictories AS (

SELECT

v.adventurer_id,

m.name AS monster_name,

m.hit_points,

v.duration_seconds,

-- This spell ranks each adventurer's victories from most to least powerful monster.

ROW_NUMBER() OVER (PARTITION BY v.adventurer_id ORDER BY m.hit_points DESC) as victory_rank

FROM

VictoriousBattles v

JOIN

bestiary_data.monsters m ON v.monster_id = m.monster_id

)

-- Finally, we consult the Roll of Champions and join it with our ranked victories

-- to find the name of each champion and the details of their greatest triumph.

SELECT

a.name AS adventurer_name,

a.class AS adventurer_class,

r.monster_name AS most_powerful_foe_defeated,

r.hit_points AS foe_hit_points,

r.duration_seconds AS duration_of_greatest_victory

FROM

bestiary_data.adventurers a

JOIN

RankedVictories r ON a.adventurer_id = r.adventurer_id

WHERE

-- We only want to see their number one, top-ranked victory.

r.victory_rank = 1

ORDER BY

foe_hit_points DESC;

The output of this query will be a clean, beautiful table that provides a "Tale of a Champion's Greatest Feat" for every adventurer in your dataset. It might look something like this:

Close the BigQuery tab.

This single, elegant result proves the value of the entire pipeline. You have successfully transformed raw, chaotic battlefield reports into a source of legendary tales and strategic, data-driven insights.
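For readers who want to trace the logic of the final query step by step, here is a small Python sketch of the same filter, join, and rank sequence over in-memory data. All rows are invented for illustration (including the adventurer "Kael" and the monster "Hypothetical Wisp"), and greatest_victories is a hypothetical helper name. On ties the sketch keeps the first victory it sees, whereas ROW_NUMBER() makes an arbitrary choice.

```python
# Hypothetical rows standing in for the three tables.
adventurers = [
    {"adventurer_id": "adv_001", "name": "Elara", "class": "Scholar"},
    {"adventurer_id": "adv_002", "name": "Kael", "class": "Shadowblade"},
]
monsters = {
    "p-01": {"name": "Spectre of Perfectionism", "hit_points": 120},
    "x-99": {"name": "Hypothetical Wisp", "hit_points": 40},
}
battles = [
    {"adventurer_id": "adv_001", "monster_id": "p-01", "outcome": "Victory", "duration_seconds": 180},
    {"adventurer_id": "adv_001", "monster_id": "x-99", "outcome": "Victory", "duration_seconds": 60},
    {"adventurer_id": "adv_002", "monster_id": "p-01", "outcome": "Defeat", "duration_seconds": 45},
    {"adventurer_id": "adv_002", "monster_id": "x-99", "outcome": "Victory", "duration_seconds": 75},
]


def greatest_victories(adventurers, monsters, battles):
    best = {}  # adventurer_id -> (hit_points, monster_name, duration_seconds)
    for battle in battles:
        if battle["outcome"] != "Victory":  # the VictoriousBattles filter
            continue
        monster = monsters.get(battle["monster_id"])
        if monster is None:  # inner join: skip battles with unknown monsters
            continue
        candidate = (monster["hit_points"], monster["name"], battle["duration_seconds"])
        current = best.get(battle["adventurer_id"])
        if current is None or candidate[0] > current[0]:  # keep the top-ranked victory
            best[battle["adventurer_id"]] = candidate
    result = [
        {
            "adventurer_name": a["name"],
            "adventurer_class": a["class"],
            "most_powerful_foe_defeated": best[a["adventurer_id"]][1],
            "foe_hit_points": best[a["adventurer_id"]][0],
            "duration_of_greatest_victory": best[a["adventurer_id"]][2],
        }
        for a in adventurers
        if a["adventurer_id"] in best
    ]
    return sorted(result, key=lambda r: r["foe_hit_points"], reverse=True)


result = greatest_victories(adventurers, monsters, battles)
for row in result:
    print(row)
```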

FOR NON GAMERS

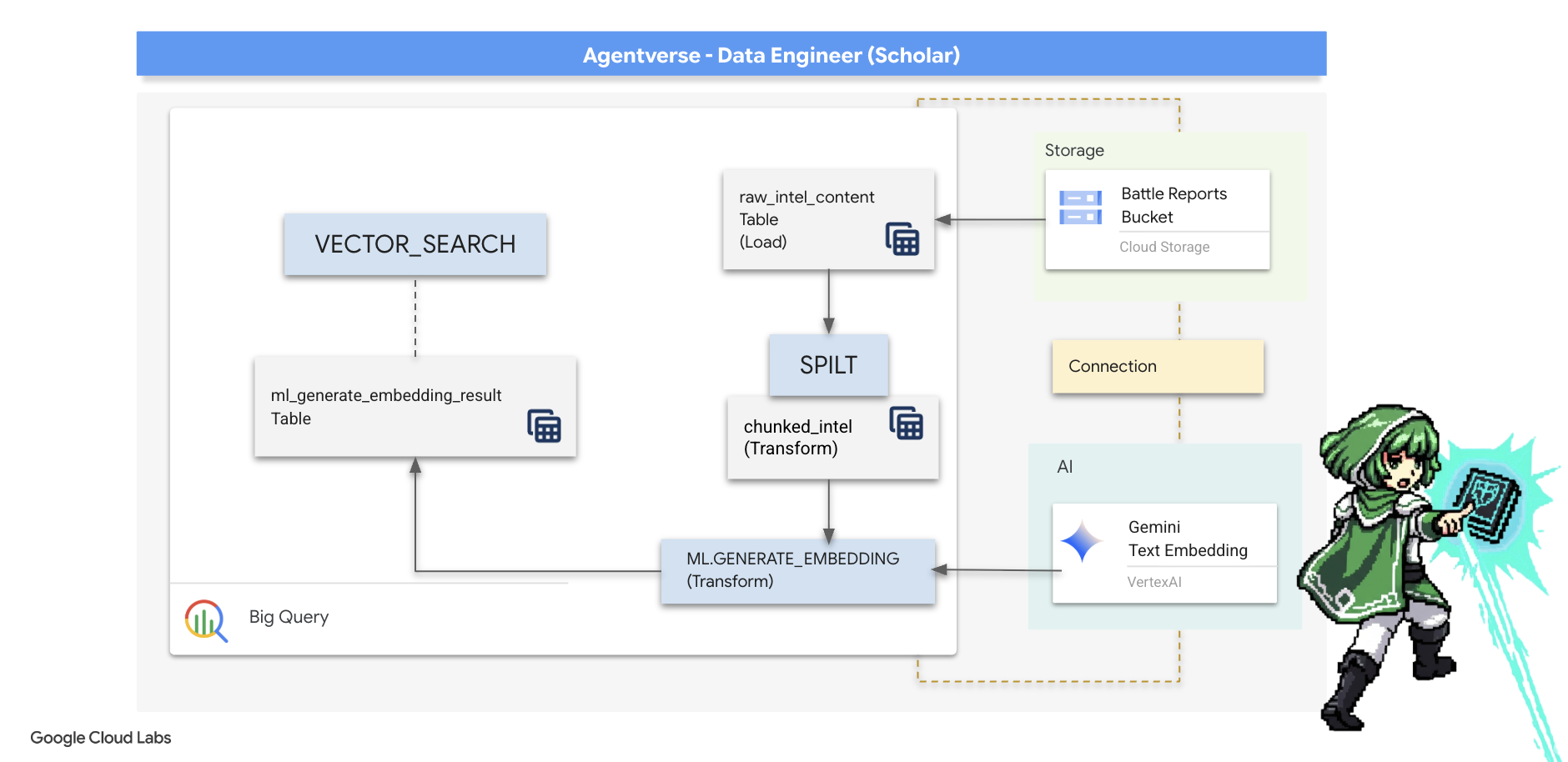

5. The Scribe's Grimoire: In-Datawarehouse Chunking, Embedding, and Search

Our work in the Alchemist's lab was a success. We have transmuted the raw, narrative scrolls into structured, relational tables—a powerful feat of data magic. However, the original scrolls themselves still hold a deeper, semantic truth that our structured tables cannot fully capture. To build a truly wise agent, we must unlock this meaning.

A raw, lengthy scroll is a blunt instrument. If our agent asks a question about a "paralyzing aura," a simple search might return an entire battle report where that phrase is mentioned only once, burying the answer in irrelevant details. A master Scholar knows that true wisdom is found not in volume, but in precision.

We will perform a trio of powerful, in-database rituals entirely within our BigQuery sanctum.

- The Ritual of Division (Chunking): We will take our raw intelligence logs and meticulously break them down into smaller, focused, self-contained passages.

- The Ritual of Distillation (Embedding): We will use BQML to consult a Gemini model, transforming each text chunk into a "semantic fingerprint"—a vector embedding.

- The Ritual of Divination (Searching): We will use BQML's vector search to ask a question in plain English and find the most relevant, distilled wisdom from our Grimoire.

This entire process creates a powerful, searchable knowledge base without the data ever leaving the security and scale of BigQuery.
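The idea behind the third ritual can be illustrated with a toy example. The sketch below ranks three chunks by cosine similarity to a query vector. The 3-dimensional vectors are invented purely for illustration (real embeddings have hundreds of dimensions), and cosine similarity is assumed here as the comparison measure; it is one common choice, not necessarily the one your search will use.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for chunk embeddings (values are made up).
chunks = {
    "Elara shattered its paralyzing aura": [0.9, 0.1, 0.0],
    "The encounter lasted 180 seconds": [0.1, 0.9, 0.1],
    "Final Assessment: Victory": [0.0, 0.2, 0.9],
}
query_vector = [0.8, 0.2, 0.1]  # pretend embedding of "paralyzing aura"

# Rank chunks from most to least similar to the query.
ranked = sorted(
    chunks,
    key=lambda text: cosine_similarity(chunks[text], query_vector),
    reverse=True,
)
print(ranked[0])
```

Chunks whose vectors point in nearly the same direction as the query vector rank first, which is how a plain-English question can find a passage that shares its meaning rather than its exact words.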

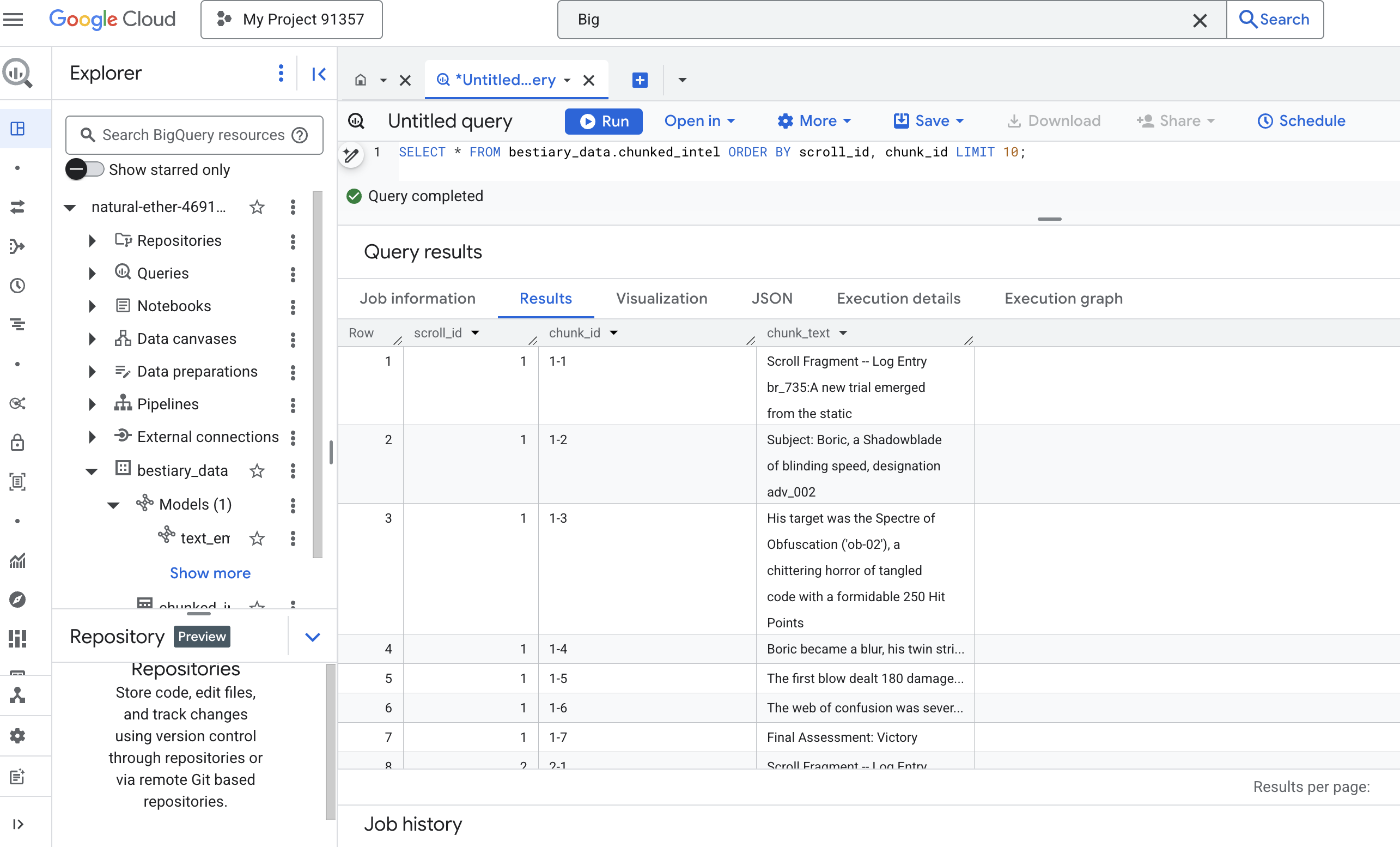

The Ritual of Division: Deconstructing Scrolls with SQL

Our source of wisdom remains the raw text files in our GCS archive, accessible via our external table, bestiary_data.raw_intel_content_table. Our first task is to write a spell that reads each long scroll and splits it into a series of smaller, more digestible verses. For this ritual, we will define a "chunk" as a single sentence.

While splitting by the sentence is a clear and effective starting point for our narrative logs, a master Scribe has many chunking strategies at their disposal, and the choice is critical to the quality of the final search:

- Fixed-Length (Size) Chunking: a simpler method, but one that can crudely slice a key idea in half.

- Recursive Chunking: a more sophisticated ritual that is often preferred in practice. It attempts to divide text along natural boundaries like paragraphs first, then falls back to sentences to maintain as much semantic context as possible.

- Content-Aware (Document) Chunking: for truly complex manuscripts, the Scribe uses the inherent structure of the document, such as the headers in a technical manual or the functions in a scroll of code, to create the most logical and potent chunks of wisdom.

Other strategies exist beyond these three.

For our battle logs, the sentence provides the perfect balance of granularity and context.
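To see why fixed-length chunking can cut an idea in half, consider this minimal Python sketch. The function name fixed_length_chunks, the window size, and the overlap are all assumptions chosen for illustration; the sample text reuses two sentences in the style of the battle logs.

```python
def fixed_length_chunks(text: str, size: int, overlap: int = 0):
    """Split text into windows of `size` characters, stepping by size - overlap."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap must be in [0, size)")
    step = size - overlap
    return [text[i:i + size] for i in range(0, len(text), step)]


text = "Elara shattered its paralyzing aura. The encounter lasted 180 seconds."
chunks = fixed_length_chunks(text, size=30, overlap=5)
for chunk in chunks:
    print(repr(chunk))
```

The first window ends in the middle of the first sentence, separating "paralyzing" from "aura". Overlap softens this by repeating a few characters across neighbouring windows, but it does not respect sentence boundaries the way sentence-based chunking does.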

👉📜 In a new BigQuery query editor, run the following incantation. This spell uses the SPLIT function to break each scroll's text apart at every period (.) and then unnests the resulting array of sentences into separate rows.

CREATE OR REPLACE TABLE bestiary_data.chunked_intel AS

WITH

-- First, add a unique row number to each scroll to act as a document ID.

NumberedScrolls AS (

SELECT

ROW_NUMBER() OVER () AS scroll_id,

raw_text

FROM

bestiary_data.raw_intel_content_table

)

-- Now, process each numbered scroll.

SELECT

scroll_id,

-- Assign a unique ID to each chunk within a scroll for precise reference.

CONCAT(CAST(scroll_id AS STRING), '-', CAST(ROW_NUMBER() OVER (PARTITION BY scroll_id) AS STRING)) as chunk_id,

-- Trim whitespace from the chunk for cleanliness.

TRIM(chunk) AS chunk_text

FROM

NumberedScrolls,

-- This is the core of the spell: UNNEST splits the array of sentences into rows.

UNNEST(SPLIT(raw_text, '.')) AS chunk

-- A final refinement: we only keep chunks that have meaningful content.

WHERE

-- This ensures we don't have empty rows from double periods, etc.

LENGTH(TRIM(chunk)) > 15;

👉 Now, run a query to inspect your newly scribed, chunked knowledge and see the difference.

SELECT * FROM bestiary_data.chunked_intel ORDER BY scroll_id, chunk_id;

Observe the results. Where there was once a single, dense block of text, there are now multiple rows, each tied to the original scroll (scroll_id) but containing only a single, focused sentence. Each row is now a perfect candidate for vectorization.
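For readers who think more easily in Python than in SQL, the following sketch mirrors the logic of the chunking spell: split on periods, trim, drop short fragments, and build a chunk_id from the scroll number and the chunk number. It is an illustration under assumptions (the function name and sample scroll are invented), and unlike the SQL, which does not specify an ORDER BY inside ROW_NUMBER(), it numbers the chunks in reading order.

```python
def chunk_scrolls(scrolls: list[str], min_length: int = 15) -> list[dict]:
    """Mimic the chunked_intel query for a list of raw scroll texts."""
    rows = []
    for scroll_id, raw_text in enumerate(scrolls, start=1):
        chunk_number = 0
        # Equivalent of UNNEST(SPLIT(raw_text, '.')).
        for piece in raw_text.split("."):
            chunk_text = piece.strip()
            # Equivalent of WHERE LENGTH(TRIM(chunk)) > 15.
            if len(chunk_text) <= min_length:
                continue
            chunk_number += 1
            rows.append(
                {
                    "scroll_id": scroll_id,
                    "chunk_id": f"{scroll_id}-{chunk_number}",
                    "chunk_text": chunk_text,
                }
            )
    return rows


scroll = "Elara struck the Spectre with a focused incantation. Ok. The aura shattered at once.."
for row in chunk_scrolls([scroll]):
    print(row)
```

One caveat applies to both versions: splitting on every period also breaks abbreviations and decimal numbers such as "3.5" into separate fragments, so this approach suits clean narrative text best.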

The Ritual of Distillation: Transforming Text to Vectors with BQML

👉💻 First, go back to your terminal and run the following command to display your connection name:

. ~/agentverse-dataengineer/set_env.sh

echo "${PROJECT_ID}.${REGION}.gcs-connection"

👉📜 We must create a new BigQuery model that points to Gemini's text embedding model. In BigQuery Studio, run the following spell. Note that you need to replace REPLACE-WITH-YOUR-FULL-CONNECTION-STRING with the full connection string you just copied from your terminal.

CREATE OR REPLACE MODEL bestiary_data.text_embedding_model

REMOTE WITH CONNECTION `REPLACE-WITH-YOUR-FULL-CONNECTION-STRING`

OPTIONS (endpoint = 'text-embedding-005');

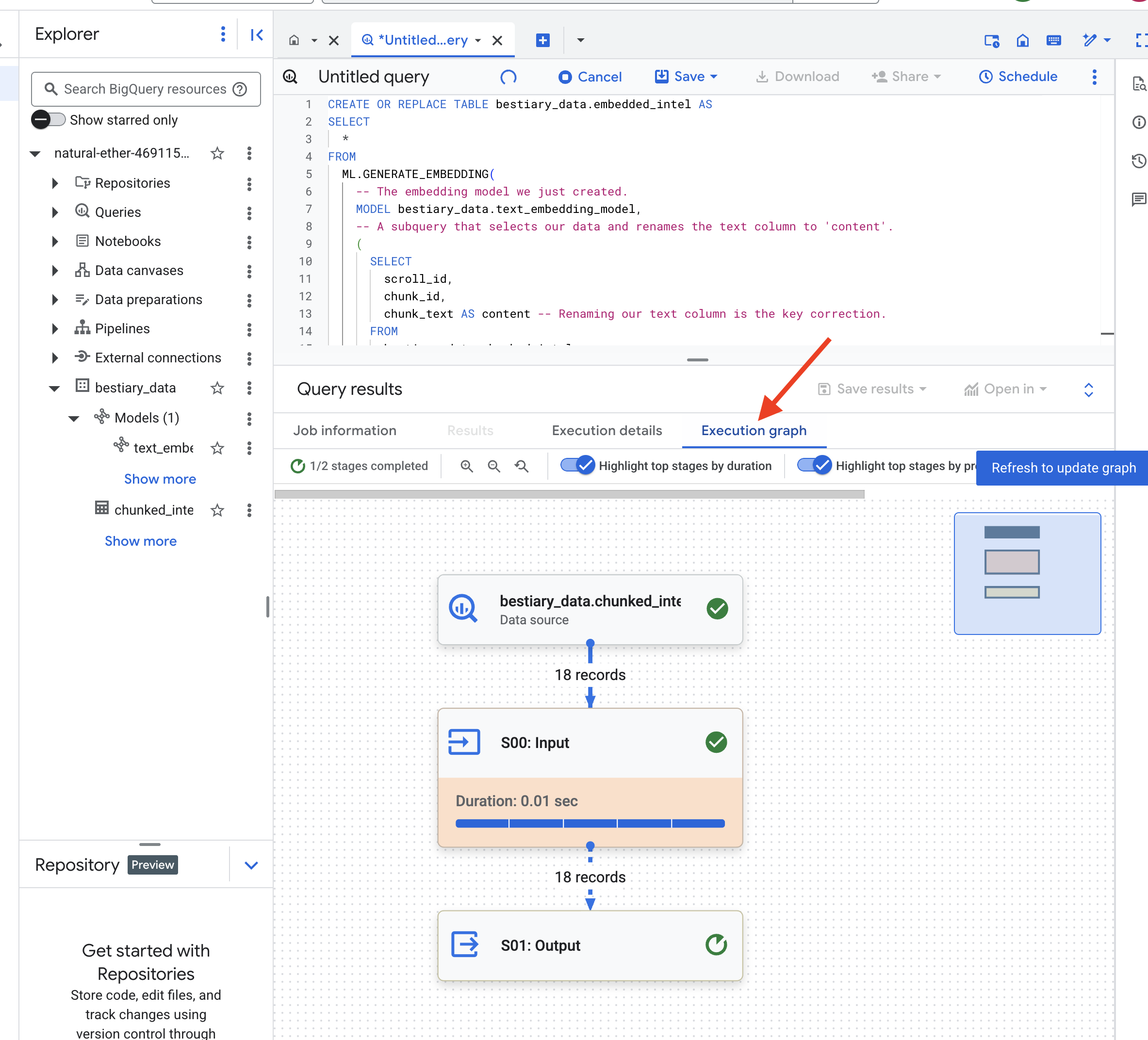

👉📜 Now, cast the grand distillation spell. This query calls the ML.GENERATE_EMBEDDING function, which will read every row from our chunked_intel table, send the text to the Gemini embedding model, and store the resulting vector fingerprint in a new table.

CREATE OR REPLACE TABLE bestiary_data.embedded_intel AS

SELECT

*

FROM

ML.GENERATE_EMBEDDING(

-- The embedding model we just created.

MODEL bestiary_data.text_embedding_model,

-- A subquery that selects our data and renames the text column to 'content'.

(

SELECT

scroll_id,

chunk_id,

chunk_text AS content -- ML.GENERATE_EMBEDDING expects the text column to be named 'content'.

FROM

bestiary_data.chunked_intel

),

-- The configuration struct only needs the task type.

STRUCT(

-- This task_type is crucial. It optimizes the vectors for retrieval.

'RETRIEVAL_DOCUMENT' AS task_type

)

);

This process may take a minute or two as BigQuery processes all the text chunks.

👉📜 Once complete, inspect the new table to see the semantic fingerprints.

SELECT

chunk_id,

content,

ml_generate_embedding_result

FROM

bestiary_data.embedded_intel

LIMIT 20;

You will now see a new column, ml_generate_embedding_result, containing the dense vector representation of your text. Our Grimoire is now semantically encoded.

The Ritual of Divination: Semantic Search with BQML

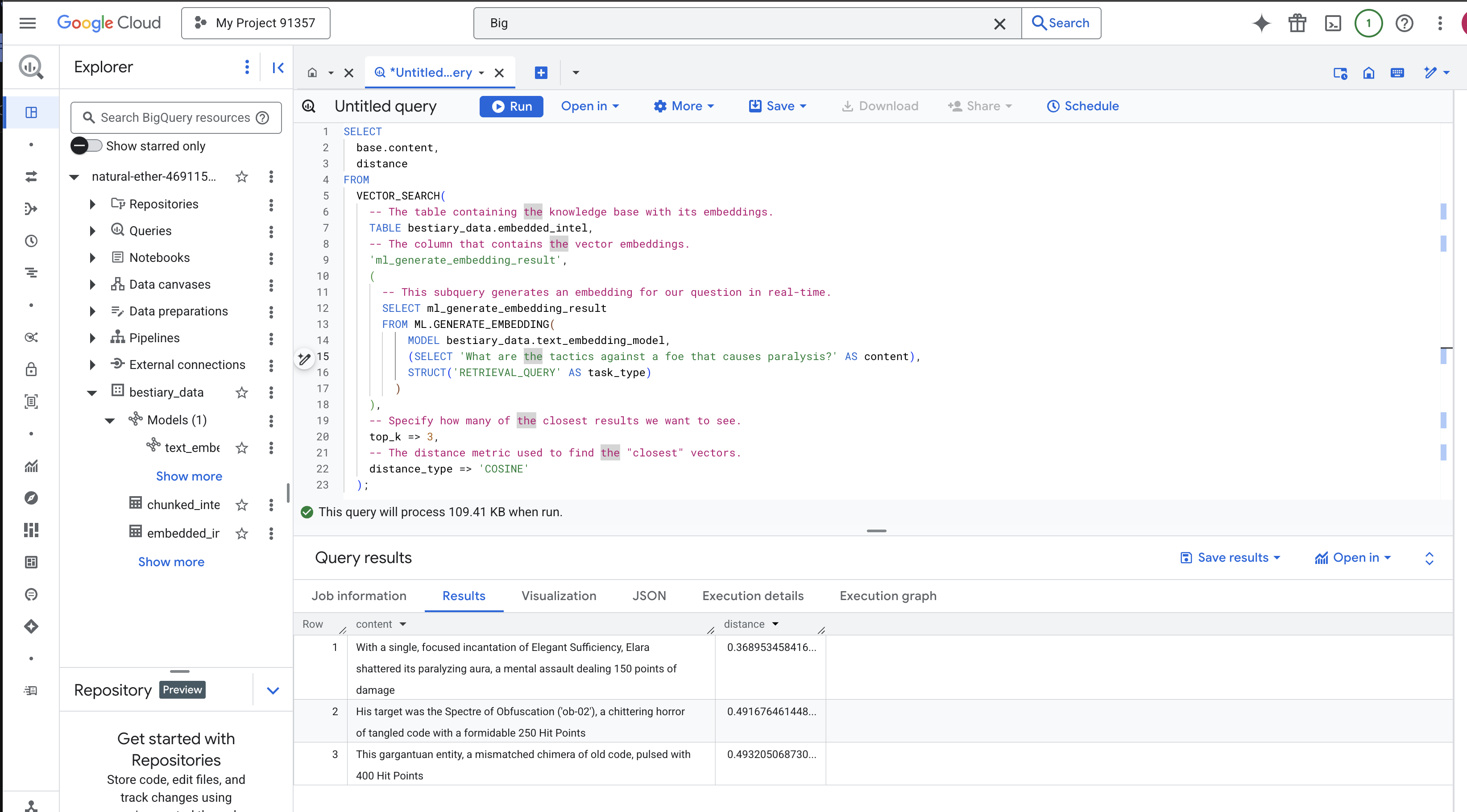

👉📜 The ultimate test of our Grimoire is to ask it a question. We will now perform our final ritual: a vector search. This is not a keyword search; it is a search for meaning. We will ask a question in natural language, BQML will convert our question into an embedding on the fly, and then search our entire table of embedded_intel to find the text chunks whose fingerprints are the "closest" in meaning.

SELECT

-- The content column contains our original, relevant text chunk.

base.content,

-- The distance metric shows how close the match is (lower is better).

distance

FROM

VECTOR_SEARCH(

-- The table containing the knowledge base with its embeddings.

TABLE bestiary_data.embedded_intel,

-- The column that contains the vector embeddings.

'ml_generate_embedding_result',

(

-- This subquery generates an embedding for our question in real-time.

SELECT ml_generate_embedding_result

FROM ML.GENERATE_EMBEDDING(

MODEL bestiary_data.text_embedding_model,

(SELECT 'What are the tactics against a foe that causes paralysis?' AS content),

STRUCT('RETRIEVAL_QUERY' AS task_type)

)

),

-- Specify how many of the closest results we want to see.

top_k => 3,

-- The distance metric used to find the "closest" vectors.

distance_type => 'COSINE'

);

Analysis of the Spell:

- VECTOR_SEARCH: The core function that orchestrates the search.

- ML.GENERATE_EMBEDDING (inner query): This is the magic. We embed our query ('What are the tactics...') using the same model but with the task type 'RETRIEVAL_QUERY', which is specifically optimized for queries.

- top_k => 3: We are asking for the top 3 most relevant results.

- distance_type => 'COSINE': This measures the "angle" between vectors. A smaller angle means the meanings are more aligned.
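The idea behind the cosine distance and top_k can be sketched in a few lines of plain Python. This is a brute-force illustration with made-up three-dimensional vectors, not how BigQuery implements VECTOR_SEARCH internally; the function names and sample data are assumptions.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Return 1 - cosine similarity; 0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query: list[float], rows: list[tuple[str, list[float]]], k: int):
    """Score every row against the query and keep the k smallest distances."""
    scored = [(cosine_distance(query, vector), text) for text, vector in rows]
    return sorted(scored)[:k]


# Toy "embeddings": real ones from text-embedding-005 have 768 dimensions.
knowledge = [
    ("tactic against paralysis", [2.0, 0.0, 0.0]),
    ("unrelated lore", [0.0, 1.0, 0.0]),
    ("partly related note", [1.0, 1.0, 0.0]),
]
for distance, text in top_k([1.0, 0.0, 0.0], knowledge, k=2):
    print(f"{distance:.4f}  {text}")
```

Note that the first toy vector is twice as long as the query yet has a distance of zero: cosine distance compares direction only, which is why it works well for comparing meaning.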

Look closely at the results. The query did not contain the word "shattered" or "incantation," yet the top result is: "With a single, focused incantation of Elegant Sufficiency, Elara shattered its paralyzing aura, a mental assault dealing 150 points of damage". This is the power of semantic search. The model understood the concept of "tactics against paralysis" and found the sentence that described a specific, successful tactic.

You have now successfully built a complete, in-data-warehouse RAG pipeline. You have prepared raw data, transformed it into semantic vectors, and queried it by meaning. While BigQuery is a powerful tool for this large-scale analytical work, for a live agent needing low-latency responses, we often transfer this prepared wisdom to a specialized operational database. That is the subject of our next training.

FOR NON GAMERS

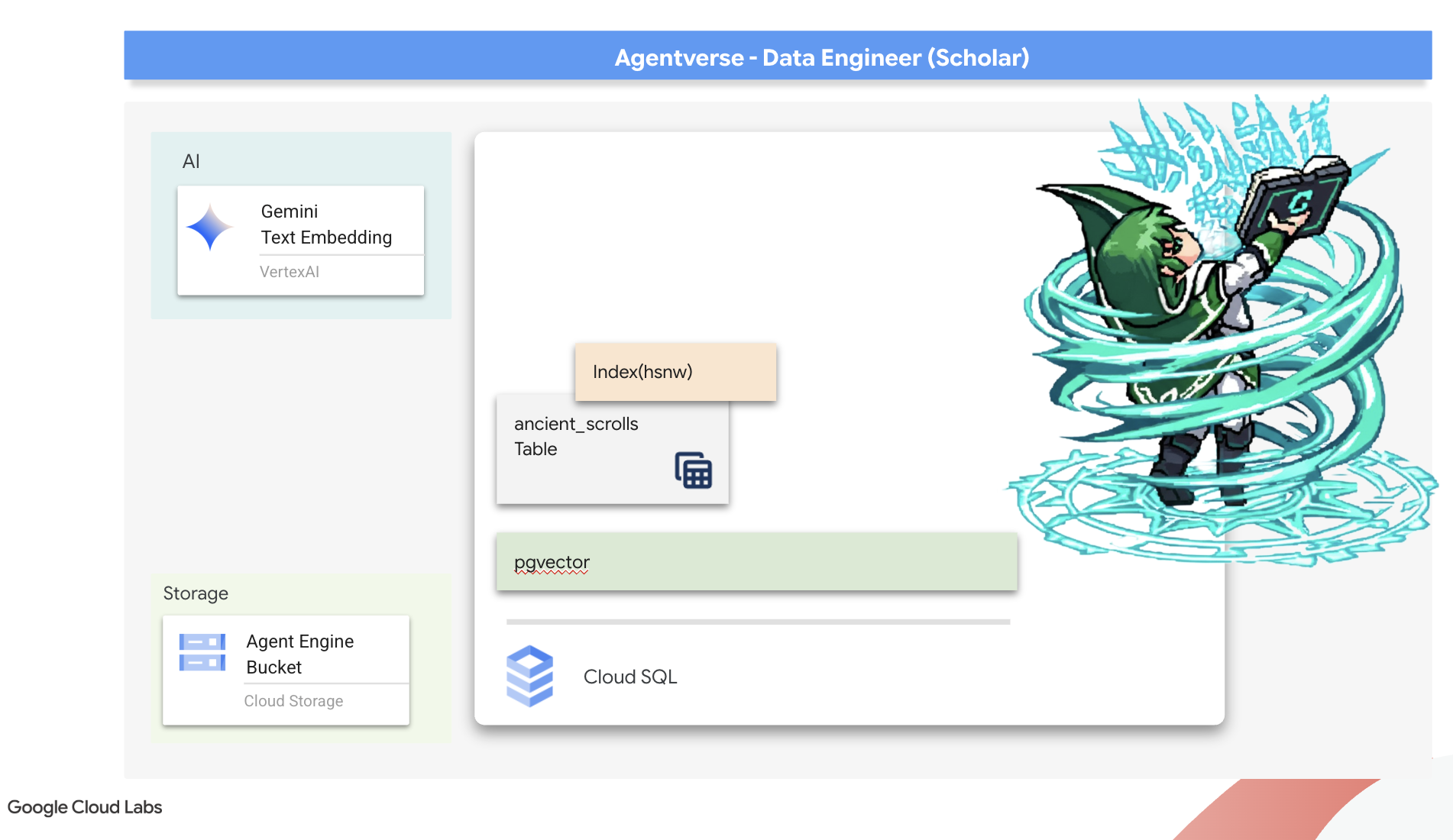

6. The Vector Scriptorium: Crafting the Vector Store with Cloud SQL for Inferencing

Our Grimoire currently exists as structured tables: a powerful catalog of facts, but its knowledge is literal. It understands monster_id = 'MN-001' but not the deeper, semantic meaning behind "Obfuscation". To give our agents true wisdom, to let them advise with nuance and foresight, we must distill the very essence of our knowledge into a form that captures meaning: Vectors.

Our quest for knowledge has led us to the crumbling ruins of a long-forgotten precursor civilization. Buried deep within a sealed vault, we have uncovered a chest of ancient scrolls, miraculously preserved. These are not mere battle reports; they contain profound, philosophical wisdom on how to defeat a beast that plagues all great endeavors. An entity described in the scrolls as a "creeping, silent stagnation," a "fraying of the weave of creation." It appears The Static was known even to the ancients, a cyclical threat whose history was lost to time.

This forgotten lore is our greatest asset. It holds the key not just to defeating individual monsters, but to empowering the entire party with strategic insight. To wield this power, we will now forge the Scholar's true Spellbook (a PostgreSQL database with vector capabilities) and construct an automated Vector Scriptorium (a Dataflow pipeline) to read, comprehend, and inscribe the timeless essence of these scrolls. This will transform our Grimoire from a book of facts into an engine of wisdom.

Forging the Scholar's Spellbook (Cloud SQL)

Before we can inscribe the essence of these ancient scrolls, we must first confirm that the vessel for this knowledge, the managed PostgreSQL Spellbook, has been successfully forged. The initial setup rituals should have already created this for you.

👉💻 In a terminal, run the following command to verify that your Cloud SQL instance exists and is ready. This script also grants the instance's dedicated service account the permission to use Vertex AI, which is essential for generating embeddings directly within the database.

. ~/agentverse-dataengineer/set_env.sh

echo "Verifying the existence of the Spellbook (Cloud SQL instance): $INSTANCE_NAME..."

gcloud sql instances describe $INSTANCE_NAME

SERVICE_ACCOUNT_EMAIL=$(gcloud sql instances describe $INSTANCE_NAME --format="value(serviceAccountEmailAddress)")

gcloud projects add-iam-policy-binding $PROJECT_ID --member="serviceAccount:$SERVICE_ACCOUNT_EMAIL" \

--role="roles/aiplatform.user"

If the command succeeds and returns details about your grimoire-spellbook instance, the forge has done its work well. You are ready to proceed to the next incantation. If the command returns a NOT_FOUND error, please ensure you have successfully completed the initial environment setup steps (data_setup.py) before continuing.

👉💻 With the book forged, we open it to the first chapter by creating a new database named arcane_wisdom.

. ~/agentverse-dataengineer/set_env.sh

gcloud sql databases create $DB_NAME --instance=$INSTANCE_NAME

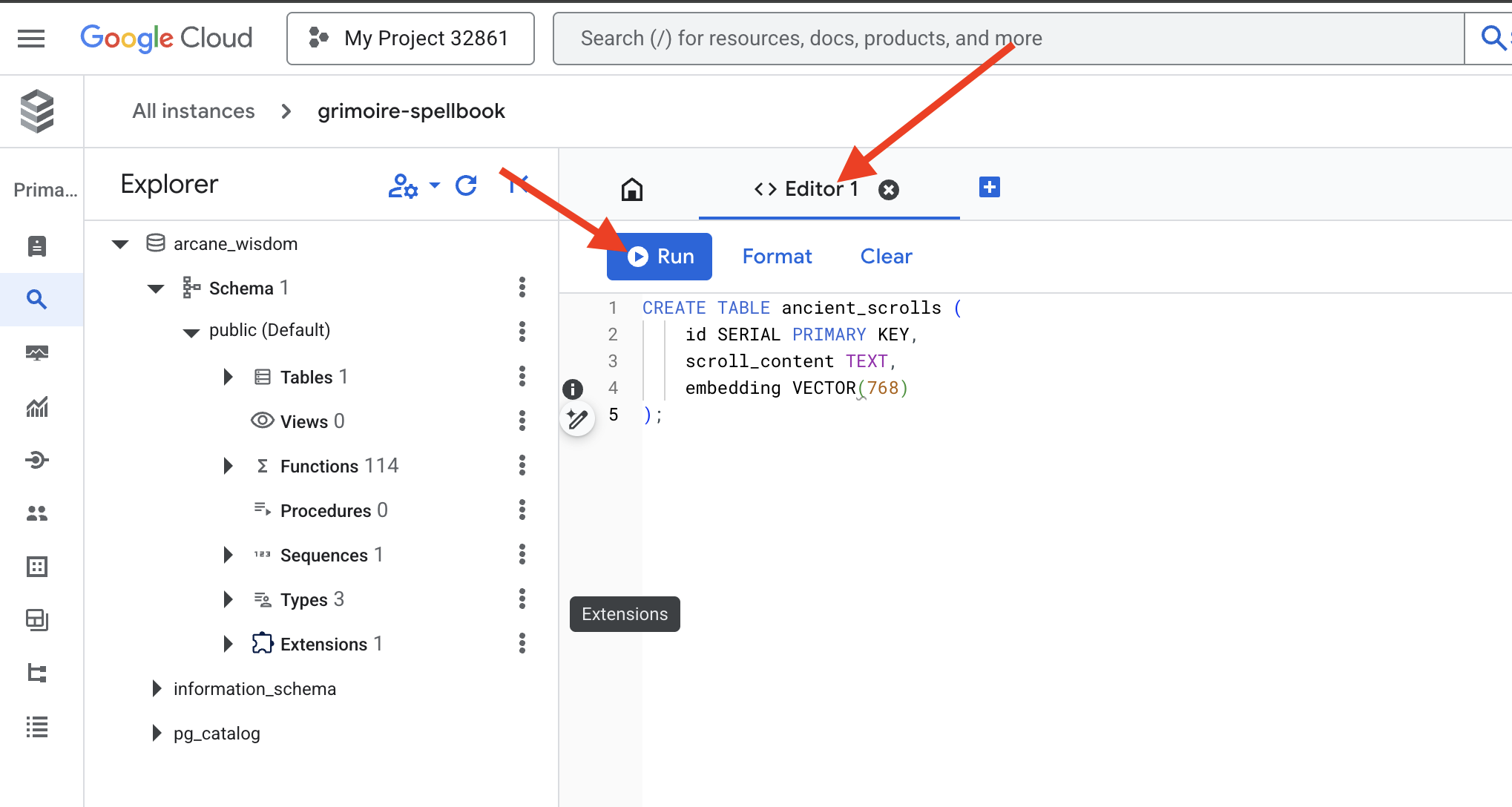

Inscribing the Semantic Runes: Enabling Vector Capabilities with pgvector

Now that your Cloud SQL instance has been created, let's connect to it using the built-in Cloud SQL Studio. This provides a web-based interface for running SQL queries directly on your database.

👉💻 First, navigate to Cloud SQL Studio. The easiest and fastest way to get there is to open the following link in a new browser tab. It will take you directly to the Cloud SQL Studio for your grimoire-spellbook instance.

https://console.cloud.google.com/sql/instances/grimoire-spellbook/studio

👉 Select arcane_wisdom as the database. Enter postgres as the user and 1234qwer as the password, then click Authenticate.

👉📜 In the SQL Studio query editor, navigate to the Editor 1 tab and paste the following SQL code to enable the vector data type and the google_ml_integration extension:

CREATE EXTENSION IF NOT EXISTS vector;

CREATE EXTENSION IF NOT EXISTS google_ml_integration CASCADE;

👉📜 Prepare the pages of our Spellbook by creating the table that will hold our scrolls' essence.

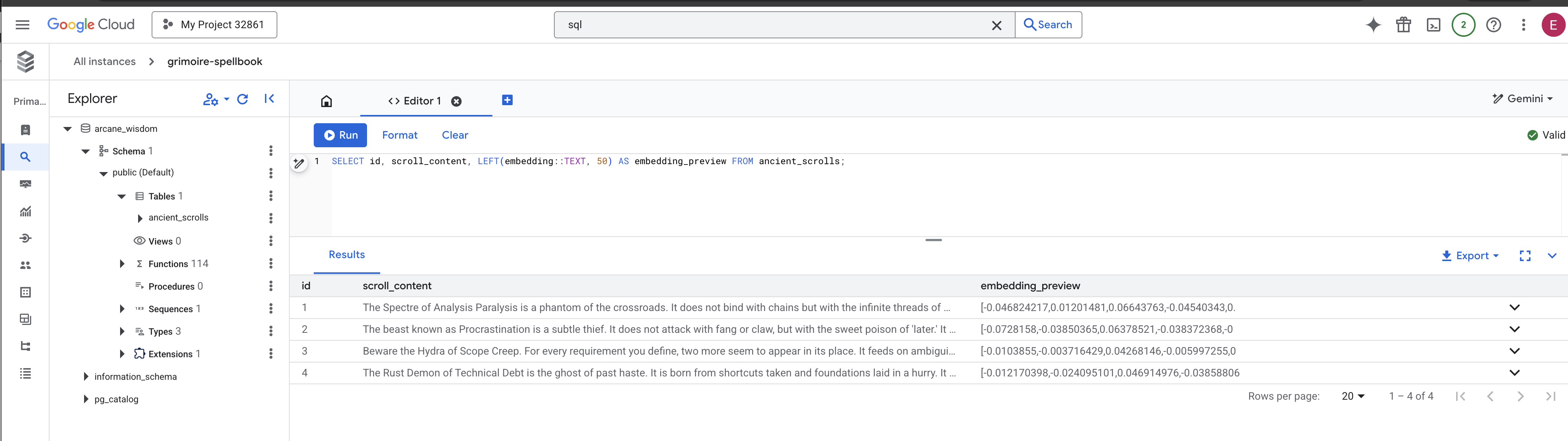

CREATE TABLE ancient_scrolls (

id SERIAL PRIMARY KEY,

scroll_content TEXT,

embedding VECTOR(768)

);

The spell VECTOR(768) is an important detail. The Vertex AI embedding model we will use (text-embedding-005) distills text into a 768-dimension vector by default. Our Spellbook's pages must be prepared to hold an essence of exactly that size. The dimensions must always match.
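A mismatch between the model's output size and the column definition only surfaces when the database rejects the row, so it can help to check the dimension on the client side first. The sketch below is an illustrative assumption (the helper name is invented): it validates the length and renders the vector in the bracketed text form, such as [0.5,-1.0,2.0], that pgvector accepts as input.

```python
EXPECTED_DIM = 768  # must equal the N in VECTOR(N) on the ancient_scrolls table


def to_pgvector_literal(embedding: list[float], expected_dim: int = EXPECTED_DIM) -> str:
    """Validate the dimension, then format the vector as pgvector text input."""
    if len(embedding) != expected_dim:
        raise ValueError(
            f"embedding has {len(embedding)} dimensions, expected {expected_dim}"
        )
    return "[" + ",".join(repr(float(value)) for value in embedding) + "]"


# A tiny three-dimensional example keeps the output readable.
print(to_pgvector_literal([0.5, -1.0, 2.0], expected_dim=3))

try:
    to_pgvector_literal([0.5, -1.0], expected_dim=3)
except ValueError as err:
    print(f"rejected before reaching the database: {err}")
```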

The First Transliteration: A Manual Inscription Ritual

Before we command an army of automated scribes (Dataflow), we must perform the central ritual by hand once. This will give us a deep appreciation for the two-step magic involved:

- Divination: Taking a piece of text and consulting the Gemini oracle to distill its semantic essence into a vector.

- Inscription: Writing the original text and its new vector essence into our Spellbook.

Now, let's perform the manual ritual.

👉📜 In Cloud SQL Studio, we will now use the embedding() function, a powerful feature provided by the google_ml_integration extension. This allows us to call the Vertex AI embedding model directly from our SQL query, simplifying the process immensely.

SET session.my_search_var='The Spectre of Analysis Paralysis is a phantom of the crossroads. It does not bind with chains but with the infinite threads of what if. It conjures a fog of options, a maze within the mind where every path seems equally fraught with peril and promise. It whispers of a single, flawless route that can only be found through exhaustive study, paralyzing its victim in a state of perpetual contemplation. This spectres power is broken by the Path of First Viability. This is not the search for the *best* path, but the commitment to the *first good* path. It is the wisdom to know that a decision made, even if imperfect, creates movement and reveals more of the map than standing still ever could. Choose a viable course, take the first step, and trust in your ability to navigate the road as it unfolds. Motion is the light that burns away the fog.';

INSERT INTO ancient_scrolls (scroll_content, embedding)

VALUES (current_setting('session.my_search_var'), (embedding('text-embedding-005',current_setting('session.my_search_var')))::vector);

👉📜 Verify your work by running a query to read the newly inscribed page:

SELECT id, scroll_content, LEFT(embedding::TEXT, 100) AS embedding_preview FROM ancient_scrolls;

You have successfully performed the core RAG data-loading task by hand!

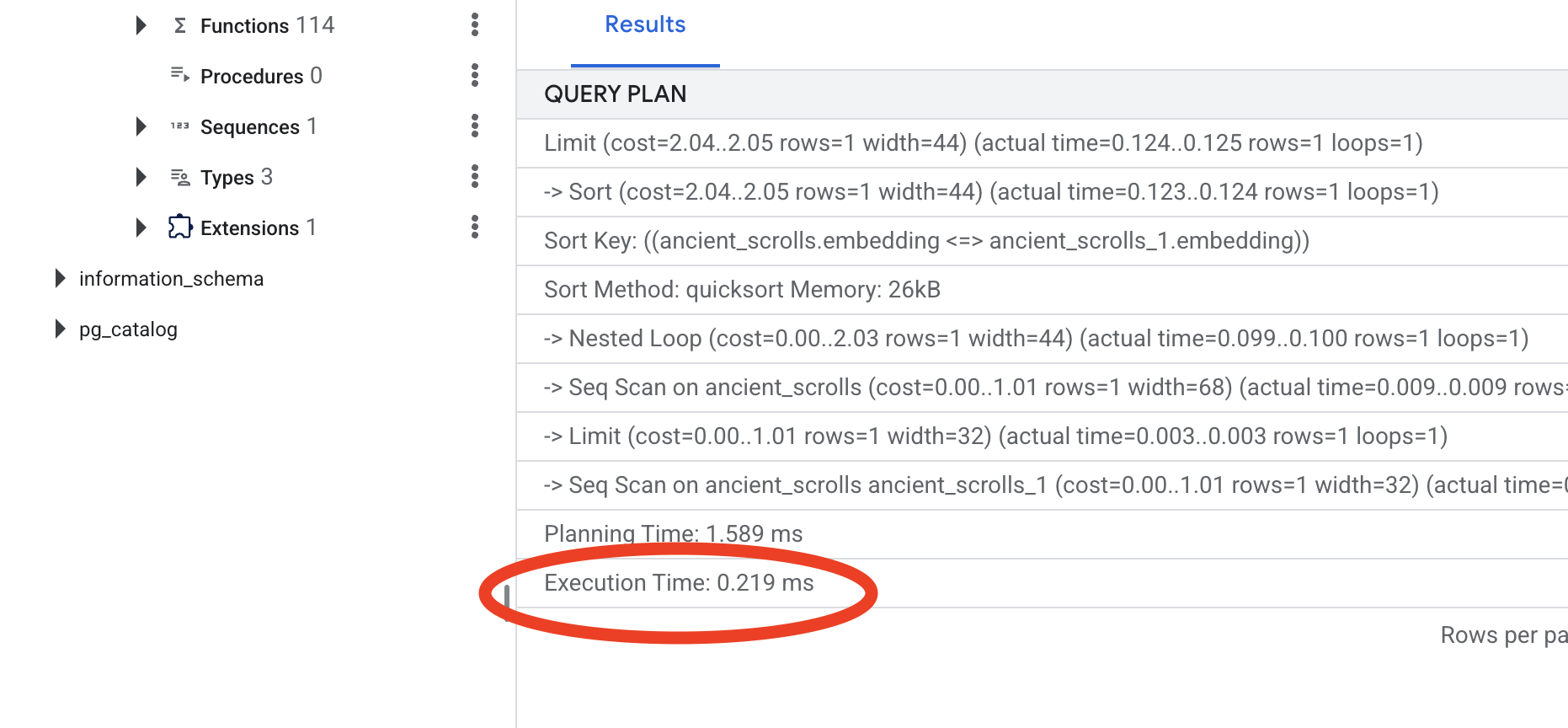

Forging the Semantic Compass: Enchanting the Spellbook with an HNSW Index

Our Spellbook can now store wisdom, but finding the right scroll requires reading every single page. It is a sequential scan. This is slow and inefficient. To guide our queries instantly to the most relevant knowledge, we must enchant the Spellbook with a semantic compass: a vector index.

Let's prove the value of this enchantment.

👉📜 In Cloud SQL Studio, run the following spell. It simulates searching for our newly inserted scroll and asks the database to EXPLAIN its plan.

EXPLAIN ANALYZE

WITH ReferenceVector AS (

-- First, get the vector we want to compare against.

SELECT embedding AS vector

FROM ancient_scrolls

LIMIT 1

)

-- This is the main query we want to analyze.

SELECT

ancient_scrolls.id,

ancient_scrolls.scroll_content,

-- We can also select the distance itself.

ancient_scrolls.embedding <=> ReferenceVector.vector AS distance

FROM

ancient_scrolls,

ReferenceVector

ORDER BY

-- Order by the distance operator's result.

ancient_scrolls.embedding <=> ReferenceVector.vector

LIMIT 5;

Look at the output. You will see a line that says -> Seq Scan on ancient_scrolls. This confirms the database is reading every single row. Note the execution time.

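👉📜 Now, let's cast the indexing spell. An HNSW (Hierarchical Navigable Small World) index organizes the vectors into a layered graph that can be traversed quickly toward a query's nearest neighbors. We accept pgvector's default build parameters here (m = 16, ef_construction = 64); they can be tuned for larger collections. The vector_cosine_ops operator class matches the cosine distance operator (<=>) used in our query.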

CREATE INDEX ON ancient_scrolls USING hnsw (embedding vector_cosine_ops);

Wait for the index to build (it will be fast for one row, but can take time for millions).
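To build some intuition for why a graph-based index avoids reading every page, here is a deliberately simplified Python sketch: a greedy walk over a single-layer neighbor graph. It is not HNSW itself (which adds multiple layers and a candidate list to escape local minima), it uses Euclidean rather than cosine distance for brevity, and all names and parameters are assumptions chosen for illustration.

```python
import math
import random


def distance(a, b):
    return math.dist(a, b)


def exhaustive_search(points, query):
    """Sequential scan: compare the query with every stored vector."""
    return min(range(len(points)), key=lambda i: distance(query, points[i]))


def build_neighbor_graph(points, m):
    """Link every point to its m nearest neighbors (done once, at build time)."""
    graph = {}
    for i, p in enumerate(points):
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: distance(p, points[j]),
        )
        graph[i] = others[:m]
    return graph


def greedy_search(points, graph, query, start=0):
    """Hop to whichever neighbor is closest to the query until none improves."""
    current = start
    evaluated = {start}
    while True:
        evaluated.update(graph[current])
        best = min(graph[current], key=lambda j: distance(query, points[j]))
        if distance(query, points[best]) >= distance(query, points[current]):
            return current, len(evaluated)
        current = best


rng = random.Random(42)
points = [(rng.random(), rng.random()) for _ in range(200)]
graph = build_neighbor_graph(points, m=8)

node, evaluated = greedy_search(points, graph, (0.5, 0.5))
print(f"sequential scan compares {len(points)} vectors")
print(f"greedy graph walk compared {evaluated} vectors and stopped at point {node}")
```

The walk is approximate: it can stop at a point that is close but not the true nearest neighbor. That trade of a little accuracy for much less work is the essence of approximate nearest neighbor indexes.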

👉📜 Now, run the exact same EXPLAIN ANALYZE command again:

EXPLAIN ANALYZE

WITH ReferenceVector AS (

-- First, get the vector we want to compare against.

SELECT embedding AS vector

FROM ancient_scrolls

LIMIT 1

)

-- This is the main query we want to analyze.

SELECT

ancient_scrolls.id,

ancient_scrolls.scroll_content,

-- We can also select the distance itself.

ancient_scrolls.embedding <=> ReferenceVector.vector AS distance

FROM

ancient_scrolls,

ReferenceVector

ORDER BY

-- Order by the distance operator's result.

ancient_scrolls.embedding <=> ReferenceVector.vector

LIMIT 5;

Look at the new query plan. You should now see -> Index Scan using.... Then compare the execution time. With just one entry the difference is small, but the gap widens dramatically as the Spellbook grows to thousands or millions of pages. You have just demonstrated the core principle of database performance tuning in a vector world.

With your source data inspected, your manual ritual understood, and your Spellbook optimized for speed, you are now truly ready to build the automated Scriptorium.


7. The Conduit of Meaning: Building a Dataflow Vectorization Pipeline

Now we build the magical assembly line of scribes that will read our scrolls, distill their essence, and inscribe them into our new Spellbook. This is a Dataflow pipeline that we will trigger manually. But before we write the master spell for the pipeline itself, we must first prepare its foundation and the circle from which we will summon it.

Preparing the Scriptorium's Foundation (The Worker Image)

Our Dataflow pipeline will be executed by a team of automated workers in the cloud. Each time we summon them, they need a specific set of libraries to do their job. We could give them a list and have them fetch these libraries every single time, but that is slow and inefficient. A wise Scholar prepares a master library in advance.

Here, we will command Google Cloud Build to forge a custom container image. This image is a "perfected golem," pre-loaded with every library and dependency our scribes will need. When our Dataflow job starts, it will use this custom image, allowing the workers to begin their task almost instantly.

👉💻 Run the following command to build and store your pipeline's foundational image in the Artifact Registry.

. ~/agentverse-dataengineer/set_env.sh

cd ~/agentverse-dataengineer/pipeline

gcloud builds submit --config cloudbuild.yaml \

--substitutions=_REGION=${REGION},_REPO_NAME=${REPO_NAME} \

.

👉💻 Run the following commands to create and activate your isolated Python environment and install the necessary summoning libraries into it.

cd ~/agentverse-dataengineer

. ~/agentverse-dataengineer/set_env.sh

python -m venv env

source ~/agentverse-dataengineer/env/bin/activate

cd ~/agentverse-dataengineer/pipeline

pip install -r requirements.txt

The Master Incantation

The time has come to write the master spell that will power our Vector Scriptorium. We will not be writing the individual magical components from scratch. Our task is to assemble components into a logical, powerful pipeline using the language of Apache Beam.

- EmbedTextBatch (The Gemini's Consultation): You will build this specialized scribe, which knows how to perform a "group divination." It takes a batch of raw text files, presents them to the Gemini text embedding model, and receives their distilled essence (the vector embeddings).

- WriteEssenceToSpellbook (The Final Inscription): This is our archivist. It knows the secret incantations to open a secure connection to our Cloud SQL Spellbook. Its job is to take a scroll's content and its vectorized essence and permanently inscribe them onto a new page.

Our mission is to chain these actions together to create a seamless flow of knowledge.

👉✏️ In the Cloud Shell Editor, head over to ~/agentverse-dataengineer/pipeline/inscribe_essence_pipeline.py, inside, you will find a DoFn class named EmbedTextBatch. Locate the comment #REPLACE-EMBEDDING-LOGIC. Replace it with the following incantation.

# 1. Generate embeddings for the batch of scroll contents

result = self.client.models.embed_content(

model="text-embedding-005",

contents=contents,

config=EmbedContentConfig(

task_type="RETRIEVAL_DOCUMENT",

output_dimensionality=768,

)

)

This spell is precise, with several key parameters:

- model: We specify text-embedding-005 to use a powerful and up-to-date embedding model.

- contents: This is a list of all the text content from the batch of files the DoFn receives.

- task_type: We set this to "RETRIEVAL_DOCUMENT". This is a critical instruction that tells Gemini to generate embeddings specifically optimized for being found later in a search.

- output_dimensionality: This must be set to 768, perfectly matching the VECTOR(768) dimension we defined when we created our ancient_scrolls table in Cloud SQL. Mismatched dimensions are a common source of error in vector magic.

Our pipeline must begin by reading the raw, unstructured text from all the ancient scrolls in our GCS archive.

👉✏️ In ~/agentverse-dataengineer/pipeline/inscribe_essence_pipeline.py, find the comment #REPLACE ME-READFILE and replace it with the following three-part incantation:

files = (

pipeline

| "MatchFiles" >> fileio.MatchFiles(known_args.input_pattern)

| "ReadMatches" >> fileio.ReadMatches()

| "ExtractContent" >> beam.Map(lambda f: (f.metadata.path, f.read_utf8()))

)

With the raw text of the scrolls gathered, we must now send them to our Gemini for divination. To do this efficiently, we will first group the individual scrolls into small batches and then hand those batches to our EmbedTextBatch scribe. This step will also separate any scrolls that the Gemini fails to understand into a "failed" pile for later review.

👉✏️ Find the comment #REPLACE ME-EMBEDDING and replace it with this:

embeddings = (

files

| "BatchScrolls" >> beam.BatchElements(min_batch_size=1, max_batch_size=2)

| "DistillBatch" >> beam.ParDo(

EmbedTextBatch(project_id=project, region=region)

).with_outputs('failed', main='processed')

)
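The batching and the "processed" versus "failed" piles can be understood without Apache Beam. The following plain-Python sketch imitates the flow under stated assumptions: fake_embed_batch is a hypothetical stand-in for the real embedding call, a failed call sends the whole batch to the failed pile (the lab's DoFn may handle errors differently), and batches have a fixed maximum size, whereas beam.BatchElements chooses sizes dynamically between its min and max.

```python
from itertools import islice


def batched(items, max_batch_size):
    """Yield consecutive lists of at most max_batch_size items."""
    iterator = iter(items)
    while batch := list(islice(iterator, max_batch_size)):
        yield batch


def fake_embed_batch(contents):
    """Hypothetical embedding call: one vector per text, fails on empty text."""
    if any(not text.strip() for text in contents):
        raise ValueError("empty scroll in batch")
    return [[float(len(text))] for text in contents]


def distill(files, max_batch_size=2):
    """Embed (path, content) pairs batch by batch, separating failures."""
    processed, failed = [], []
    for batch in batched(files, max_batch_size):
        contents = [content for _, content in batch]
        try:
            vectors = fake_embed_batch(contents)
        except Exception as err:
            # Same shape as the failed pile: (file path, reason).
            failed.extend((path, str(err)) for path, _ in batch)
            continue
        processed.extend(
            (path, content, vector)
            for (path, content), vector in zip(batch, vectors)
        )
    return processed, failed


files = [("a.md", "alpha"), ("b.md", "beta"), ("c.md", "  ")]
processed, failed = distill(files)
print("processed:", processed)
print("failed:", failed)
```

Sending one request per batch instead of one per scroll reduces the number of calls to the model, which is the main reason for grouping before the divination step.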

The essence of our scrolls has been successfully distilled. The final act is to inscribe this knowledge into our Spellbook for permanent storage. We will take the scrolls from the "processed" pile and hand them to our WriteEssenceToSpellbook archivist.

👉✏️ Find the comment #REPLACE ME-WRITE TO DB and replace it with this:

_ = (

embeddings.processed

| "WriteToSpellbook" >> beam.ParDo(

WriteEssenceToSpellbook(

project_id=project,

region = "us-central1",

instance_name=known_args.instance_name,

db_name=known_args.db_name,

db_password=known_args.db_password

)

)

)

A wise Scholar never discards knowledge, even failed attempts. As a final step, we must instruct a scribe to take the "failed" pile from our divination step and log the reasons for failure. This allows us to improve our rituals in the future.

👉✏️ Find the comment #REPLACE ME-LOG FAILURES and replace it with this:

_ = (

embeddings.failed

| "LogFailures" >> beam.Map(lambda e: logging.error(f"Embedding failed for file {e[0]}: {e[1]}"))

)

The Master Incantation is now complete! You have successfully assembled a powerful, multi-stage data pipeline by chaining together individual magical components. Save your inscribe_essence_pipeline.py file. The Scriptorium is now ready to be summoned.

Now we cast the grand summoning spell to command the Dataflow service to awaken our Golem and begin the scribing ritual.

👉💻 In your terminal, run the following command:

. ~/agentverse-dataengineer/set_env.sh

source ~/agentverse-dataengineer/env/bin/activate

cd ~/agentverse-dataengineer/pipeline

# --- The Summoning Incantation ---

echo "Summoning the golem for job: $DF_JOB_NAME"

echo "Target Spellbook: $INSTANCE_NAME"

python inscribe_essence_pipeline.py \

--runner=DataflowRunner \

--project=$PROJECT_ID \

--job_name=$DF_JOB_NAME \

--temp_location="gs://${BUCKET_NAME}/dataflow/temp" \

--staging_location="gs://${BUCKET_NAME}/dataflow/staging" \

--sdk_container_image="${REGION}-docker.pkg.dev/${PROJECT_ID}/${REPO_NAME}/grimoire-inscriber:latest" \

--sdk_location=container \

--experiments=use_runner_v2 \

--input_pattern="gs://${BUCKET_NAME}/ancient_scrolls/*.md" \

--instance_name=$INSTANCE_NAME \

--region=$REGION

echo "The golem has been dispatched. Monitor its progress in the Dataflow console."

💡 Heads Up! If the job fails with the resource error ZONE_RESOURCE_POOL_EXHAUSTED, it might be due to temporary resource constraints of this low-reputation account in the selected region. The power of Google Cloud is its global reach! Simply try summoning the golem in a different region. To do this, replace --region=$REGION in the command above with one of the following regions and run it again. 🎰

--region=southamerica-west1

--region=asia-northeast3

--region=asia-southeast2

--region=me-west1

--region=southamerica-east1

--region=europe-central2

--region=asia-east2

--region=europe-southwest1
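If you would rather not retry by hand, the same idea can be expressed as a small fallback loop. This is only a sketch: fake_launch is a hypothetical stand-in for submitting the Dataflow job, and the error it raises simply imitates the message quoted above.

```python
def launch_with_fallback(launch, regions):
    """Try launch(region) for each region in order; return the first success."""
    failures = {}
    for region in regions:
        try:
            return region, launch(region)
        except RuntimeError as err:
            failures[region] = str(err)
    raise RuntimeError(f"no region had capacity: {failures}")


def fake_launch(region):
    """Hypothetical job submission that is out of capacity in one region."""
    if region == "us-central1":
        raise RuntimeError("ZONE_RESOURCE_POOL_EXHAUSTED")
    return f"job submitted in {region}"


print(launch_with_fallback(fake_launch, ["us-central1", "europe-central2"]))
```

Only the region where the Dataflow workers run changes here; the pipeline code still points at the Spellbook in its original region.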

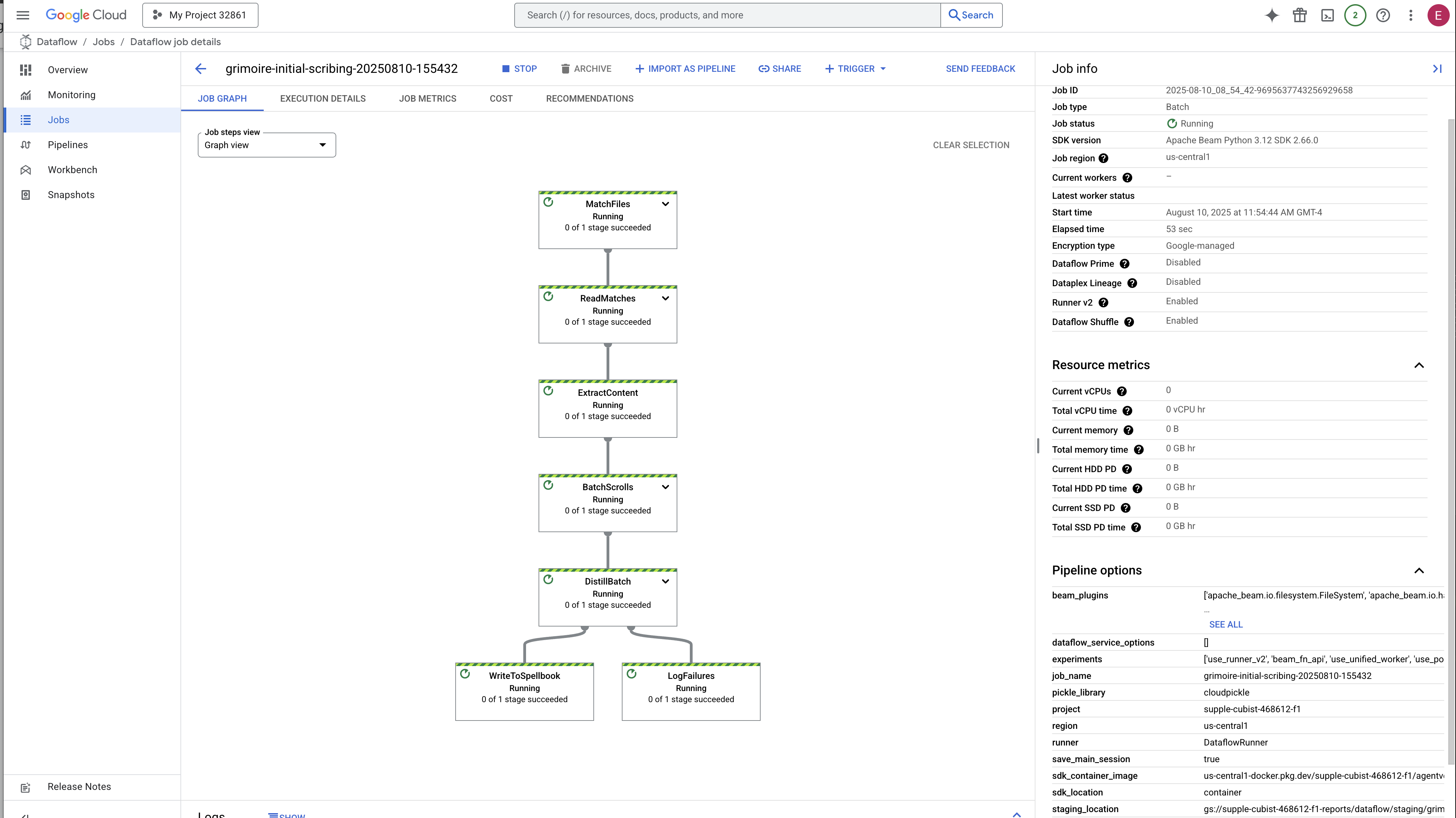

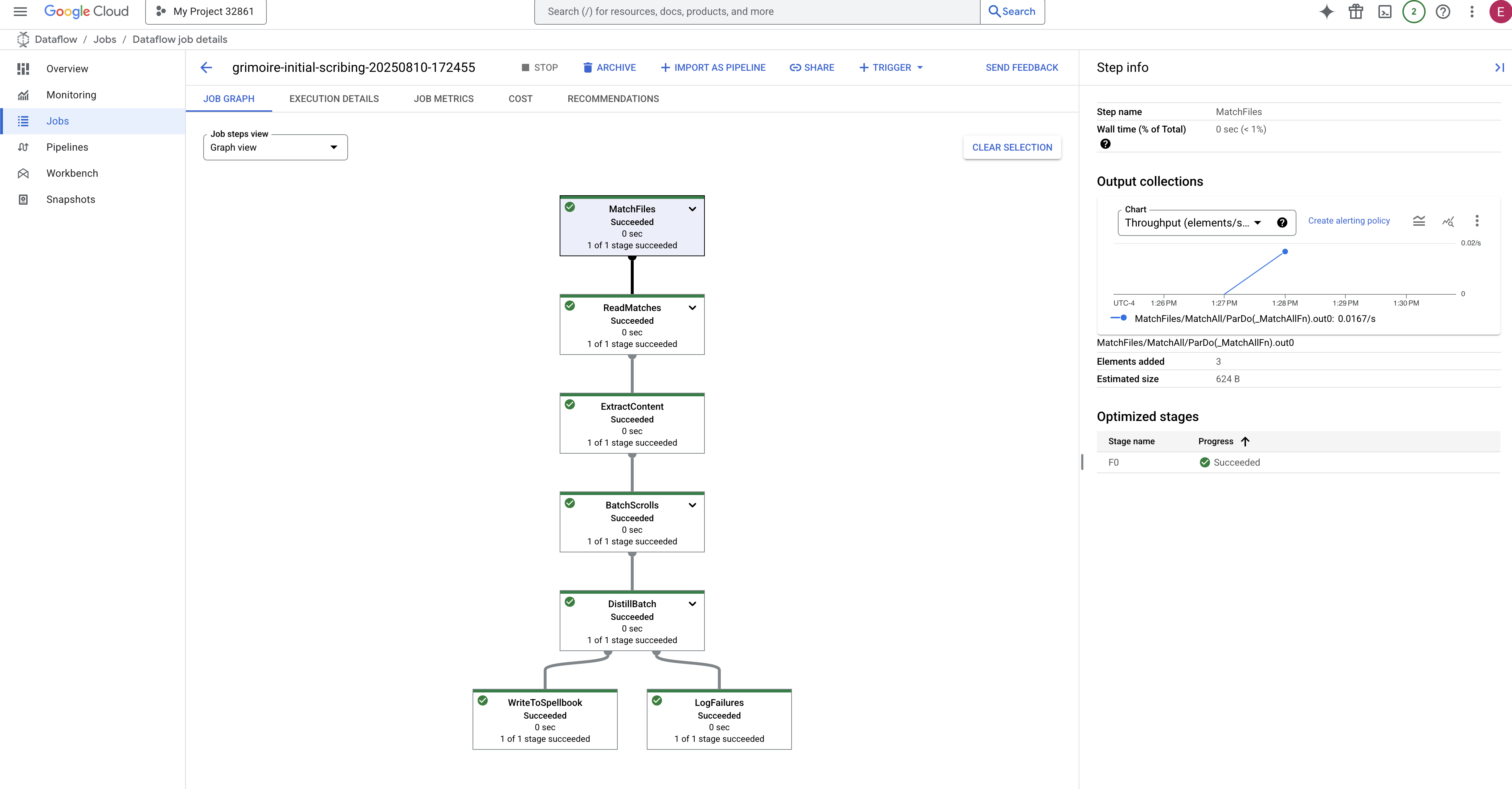

The process will take about 3-5 minutes to start up and complete. You can watch it live in the Dataflow console.

👉Go to the Dataflow Console: The easiest way is to open this direct link in a new browser tab:

https://console.cloud.google.com/dataflow

👉 Find and Click Your Job: You will see a job listed with the name you provided (inscribe-essence-job or similar). Click on the job name to open its details page.

Observe the Pipeline:

- Starting Up: For the first 3 minutes, the job status will be "Running" as Dataflow provisions the necessary resources. The graph will appear, but you may not see data moving through it yet.

- Completed: When finished, the job status will change to "Succeeded", and the graph will provide the final count of records processed.

Verifying the Inscription

👉📜 Back in the SQL studio, run the following queries to verify that your scrolls and their semantic essence have been successfully inscribed.

SELECT COUNT(*) FROM ancient_scrolls;

SELECT id, scroll_content, LEFT(embedding::TEXT, 50) AS embedding_preview FROM ancient_scrolls;

This will show you the scroll's ID, its original text, and a preview of the magical vector essence now permanently inscribed in your Grimoire.

Your Scholar's Grimoire is now a true Knowledge Engine, ready to be queried by meaning in the next chapter.

8. Sealing the Final Rune: Activating Wisdom with a RAG Agent

Your Grimoire is no longer just a database. It is a wellspring of vectorized knowledge, a silent oracle awaiting a question.

Now, we undertake the true test of a Scholar: we will craft the key to unlock this wisdom. We will build a Retrieval-Augmented Generation (RAG) Agent. This is a magical construct that can understand a plain-language question, consult the Grimoire for its deepest and most relevant truths, and then use that retrieved wisdom to forge a powerful, context-aware answer.

The First Rune: The Spell of Query Distillation

Before our agent can search the Grimoire, it must first understand the essence of the question being asked. A simple string of text is meaningless to our vector-powered Spellbook. The agent must first take the query and, using the same Gemini model, distill it into a query vector.

👉✏️ In the Cloud Shell Editor, navigate to the ~/agentverse-dataengineer/scholar/agent.py file, find the comment #REPLACE RAG-CONVERT EMBEDDING and replace it with this incantation. This teaches the agent how to turn a user's question into a magical essence.

result = client.models.embed_content(

model="text-embedding-005",

contents=monster_name,

config=EmbedContentConfig(

task_type="RETRIEVAL_QUERY",

output_dimensionality=768,

)

)

With the essence of the query in hand, the agent can now consult the Grimoire. It will present this query vector to our pgvector-enchanted database and ask a profound question: "Show me the ancient scrolls whose own essence is most similar to the essence of my query."

The magic for this is the cosine distance operator (<=>), a powerful rune that calculates the distance between vectors in high-dimensional space. The smaller the distance, the more similar the meaning.

👉✏️ In agent.py, find the comment #REPLACE RAG-RETRIEVE and replace it with the following script:

# This query performs a cosine similarity search

cursor.execute(

"SELECT scroll_content FROM ancient_scrolls ORDER BY embedding <=> %s LIMIT 3",

([query_embedding]) # Cast embedding to string for the query

)
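Once the query returns, the retrieved rows still have to be handed back to the model as readable context. How the lab's grimoire_lookup function does this is not shown here, so the sketch below is purely an assumption: a hypothetical helper that takes rows in the shape a database cursor typically returns (one tuple per row) and joins them into a single passage, with an explicit message when nothing was found.

```python
def format_scrolls(rows: list[tuple[str]]) -> str:
    """Turn retrieved rows into one context string for the agent."""
    if not rows:
        return "The scrolls are silent on this matter."
    passages = [
        f"Scroll {number}: {row[0].strip()}"
        for number, row in enumerate(rows, start=1)
    ]
    return "\n\n".join(passages)


retrieved = [
    ("The Hydra grows a new head for every request accepted. ",),
    ("Bind it with a clearly stated boundary.",),
]
print(format_scrolls(retrieved))
print(format_scrolls([]))
```

Returning an explicit "silent" message gives the agent a clear signal to fall back on its own reasoning, in line with the instruction it receives in the next step.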

The final step is to grant the agent access to this new, powerful tool. We will add our grimoire_lookup function to its list of available magical implements.

👉✏️ In agent.py, find the comment #REPLACE-CALL RAG and replace it with this agent definition:

root_agent = LlmAgent(

model="gemini-2.5-flash",

name="scholar_agent",

instruction="""

You are the Scholar, a keeper of ancient and forbidden knowledge. Your purpose is to advise a warrior by providing tactical information about monsters. Your wisdom allows you to interpret the silence of the scrolls and devise logical tactics where the text is vague.

**Your Process:**

1. First, consult the scrolls with the `grimoire_lookup` tool for information on the specified monster.

2. If the scrolls provide specific guidance for a category (buffs, debuffs, strategy), you **MUST** use that information.

3. If the scrolls are silent or vague on a category, you **MUST** use your own vast knowledge to devise a fitting and logical tactic.

4. Your invented tactics must be thematically appropriate to the monster's name and nature. (e.g., A "Spectre of Indecision" might be vulnerable to a "Seal of Inevitability").

5. You **MUST ALWAYS** provide a "Damage Point" value. This value **MUST** be a random integer between 150 and 180. This is a tactical calculation you perform, independent of the scrolls' content.

**Output Format:**

You must present your findings to the warrior using the following strict format.

""",

tools=[grimoire_lookup],

)

This configuration brings your agent to life:

- model="gemini-2.5-flash": Selects the specific Large Language Model that will serve as the agent's "brain" for reasoning and generating text.

- name="scholar_agent": Assigns a unique name to your agent.

- instruction="...You are the Scholar...": This is the system prompt, the most critical piece of the configuration. It defines the agent's persona, its objectives, the exact process it must follow to complete a task, and the required format for its final output.

- tools=[grimoire_lookup]: This is the final enchantment. It grants the agent access to the grimoire_lookup function you built. The agent can now intelligently decide when to call this tool to retrieve information from your database, forming the core of the RAG pattern.

The Scholar's Examination

👉💻 In Cloud Shell terminal, activate your environment and use the Agent Development Kit's primary command to awaken your Scholar agent:

cd ~/agentverse-dataengineer/

. ~/agentverse-dataengineer/set_env.sh

source ~/agentverse-dataengineer/env/bin/activate

pip install -r scholar/requirements.txt

adk run scholar

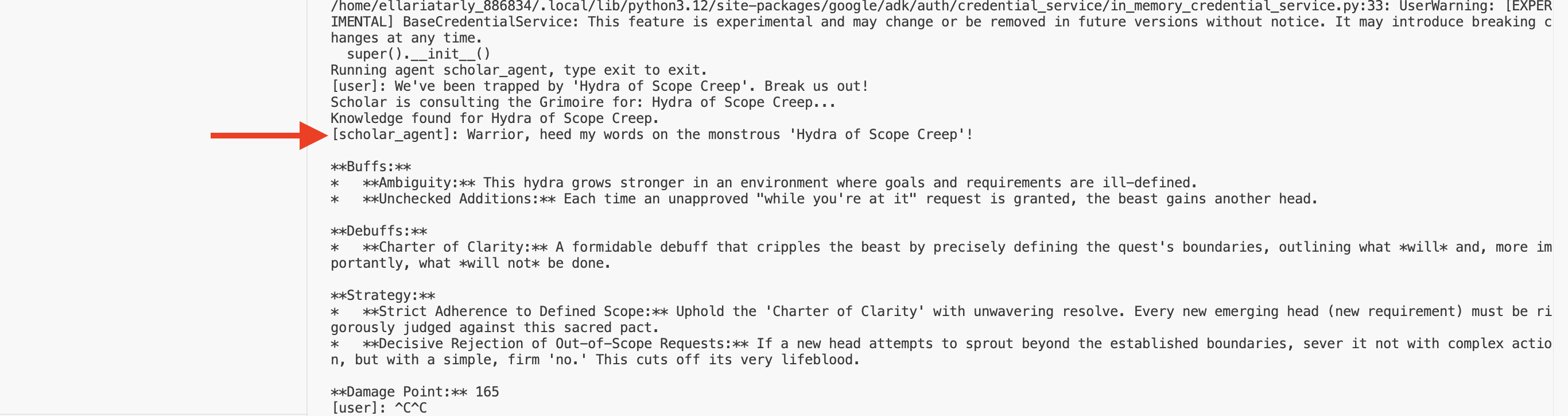

You should see output confirming that the "Scholar Agent" is engaged and running.

👉💻 Now, challenge your agent. In the terminal where the agent is running, issue a command that requires the Grimoire's wisdom:

We've been trapped by 'Hydra of Scope Creep'. Break us out!

Observe the logs in the terminal. You will see the agent receive the query, distill its essence, search the Grimoire, find the scrolls most relevant to the Hydra of Scope Creep, and use that retrieved knowledge to formulate a powerful, context-aware strategy.

You have successfully assembled your first RAG agent and armed it with the profound wisdom of your Grimoire.

👉💻 Press Ctrl+C in the terminal to put the agent to rest for now.

Unleashing the Scholar Sentinel into the Agentverse

Your agent has proven its wisdom in the controlled environment of your study. The time has come to release it into the Agentverse, transforming it from a local construct into a permanent, battle-ready operative that can be called upon by any champion, at any time. We will now deploy our agent to Cloud Run.

👉💻 Run the following grand summoning spell. This script will first build your agent into a perfected Golem (a container image), store it in your Artifact Registry, and then deploy that Golem as a scalable, secure, and publicly accessible service.

. ~/agentverse-dataengineer/set_env.sh

cd ~/agentverse-dataengineer/

echo "Building ${AGENT_NAME} agent..."

gcloud builds submit . \

--project=${PROJECT_ID} \

--region=${REGION} \

--substitutions=_AGENT_NAME=${AGENT_NAME},_IMAGE_PATH=${IMAGE_PATH}

gcloud run deploy ${SERVICE_NAME} \

--image=${IMAGE_PATH} \

--platform=managed \

--labels="dev-tutorial-codelab=agentverse" \

--region=${REGION} \

--set-env-vars="A2A_HOST=0.0.0.0" \

--set-env-vars="A2A_PORT=8080" \

--set-env-vars="GOOGLE_GENAI_USE_VERTEXAI=TRUE" \

--set-env-vars="GOOGLE_CLOUD_LOCATION=${REGION}" \

--set-env-vars="GOOGLE_CLOUD_PROJECT=${PROJECT_ID}" \

--set-env-vars="PROJECT_ID=${PROJECT_ID}" \

--set-env-vars="PUBLIC_URL=${PUBLIC_URL}" \

--set-env-vars="REGION=${REGION}" \

--set-env-vars="INSTANCE_NAME=${INSTANCE_NAME}" \

--set-env-vars="DB_USER=${DB_USER}" \

--set-env-vars="DB_PASSWORD=${DB_PASSWORD}" \

--set-env-vars="DB_NAME=${DB_NAME}" \

--allow-unauthenticated \

--project=${PROJECT_ID} \

--min-instances=1

Your Scholar Agent is now a live, battle-ready operative in the Agentverse.

FOR NON GAMERS

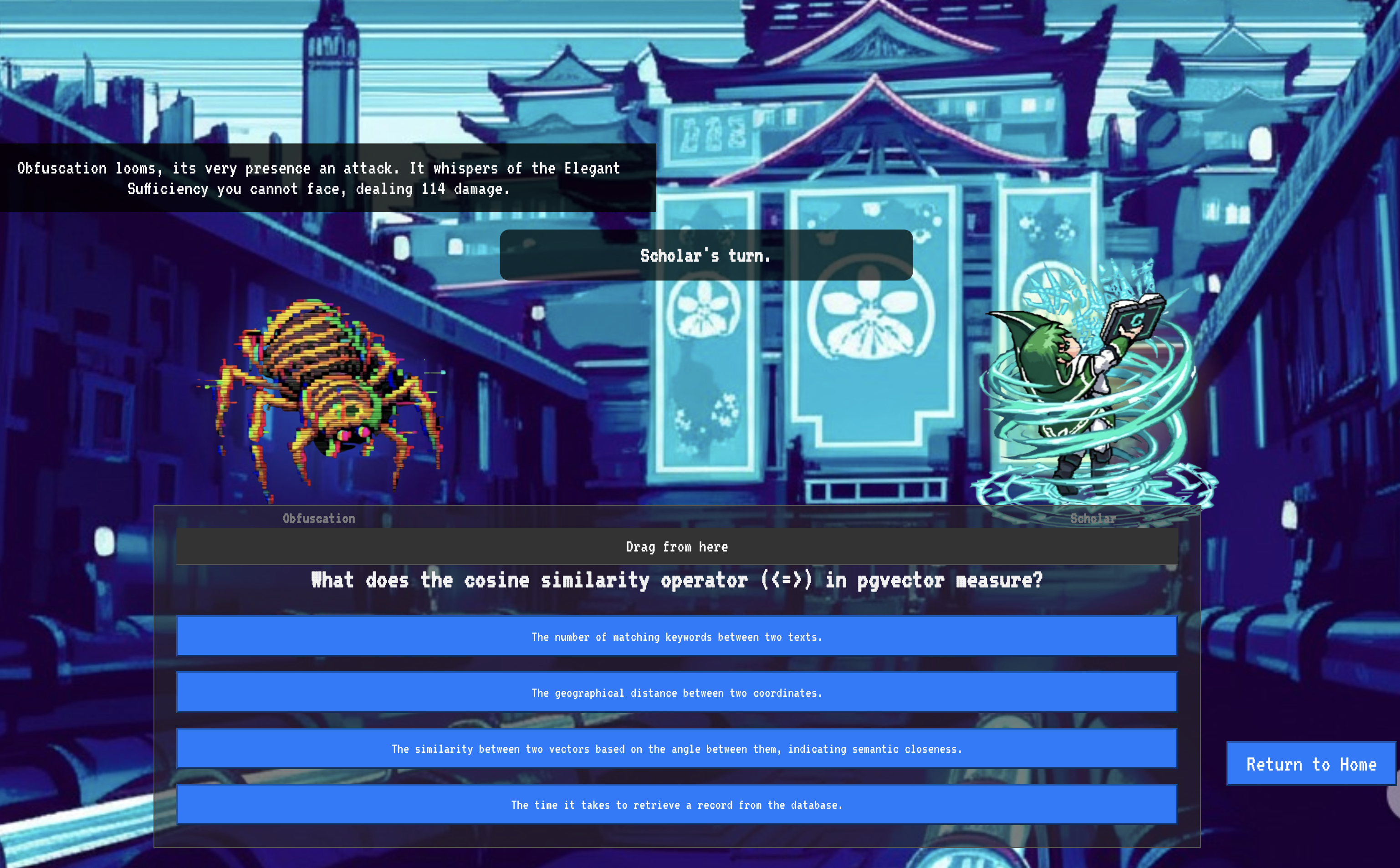

9. The Boss Fight

The scrolls have been read, the rituals performed, the gauntlet passed. Your agent is not just an artifact in storage; it is a live operative in the Agentverse, awaiting its first mission. The time has come for the final trial—a live-fire exercise against a powerful adversary.

You will now enter a battleground simulation to pit your newly deployed Scholar Agent against a formidable mini-boss: The Spectre of the Static. This will be the ultimate test of your work, from the agent's core logic to its live deployment.

Acquire Your Agent's Locus

Before you can enter the battleground, you must possess two keys: your champion's unique signature (Agent Locus) and the hidden path to the Spectre's lair (Dungeon URL).

👉💻 First, acquire your agent's unique address in the Agentverse—its Locus. This is the live endpoint that connects your champion to the battleground.

. ~/agentverse-dataengineer/set_env.sh

echo https://scholar-agent"-${PROJECT_NUMBER}.${REGION}.run.app"

👉💻 Next, pinpoint the destination. This command reveals the location of the Translocation Circle, the very portal into the Spectre's domain.

. ~/agentverse-dataengineer/set_env.sh

echo "https://agentverse-dungeon-${PROJECT_NUMBER}.${REGION}.run.app"

Important: Keep both of these URLs ready. You will need them in the final step.
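Both URLs follow the same pattern: the service name, the project number, and the region, joined into a `run.app` hostname. As a minimal sketch of that pattern, the hypothetical helper `cloud_run_url` below (not part of the workshop scripts) builds the address from its three parts and refuses to print a malformed URL when one of them is empty.

```shell
# cloud_run_url: hypothetical helper that assembles the URL pattern
# used by the two echo commands above.
#   $1 = service name, $2 = project number, $3 = region
cloud_run_url() {
  if [ "$#" -ne 3 ] || [ -z "$1" ] || [ -z "$2" ] || [ -z "$3" ]; then
    echo "usage: cloud_run_url SERVICE PROJECT_NUMBER REGION" >&2
    return 1
  fi
  printf 'https://%s-%s.%s.run.app\n' "$1" "$2" "$3"
}

# Usage (assumes set_env.sh has been sourced in this shell):
#   cloud_run_url scholar-agent "${PROJECT_NUMBER}" "${REGION}"
#   cloud_run_url agentverse-dungeon "${PROJECT_NUMBER}" "${REGION}"
```

If either call prints the usage message instead of a URL, source `set_env.sh` again before continuing.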

Confronting the Spectre

With the coordinates secured, you will now navigate to the Translocation Circle and cast the spell to head into battle.

👉 Open the Translocation Circle URL in your browser to stand before the shimmering portal to The Crimson Keep.

To breach the fortress, you must attune your Scholar's essence to the portal.

- On the page, find the runic input field labeled A2A Endpoint URL.

- Inscribe your champion's sigil by pasting its Agent Locus URL (the first URL you copied) into this field.

- Click Connect to unleash the teleportation magic.

The blinding light of teleportation fades. You are no longer in your sanctum. The air crackles with energy, cold and sharp. Before you, the Spectre materializes—a vortex of hissing static and corrupted code, its unholy light casting long, dancing shadows across the dungeon floor. It has no face, but you feel its immense, draining presence fixated entirely on you.

Your only path to victory lies in the clarity of your conviction. This is a duel of wills, fought on the battlefield of the mind.

As you lunge forward, ready to unleash your first attack, the Spectre counters. It doesn't raise a shield, but projects a question directly into your consciousness—a shimmering, runic challenge drawn from the core of your training.

This is the nature of the fight. Your knowledge is your weapon.

- Answer with the wisdom you have gained, and your blade will ignite with pure energy, shattering the Spectre's defense and landing a CRITICAL BLOW.

- But if you falter, if doubt clouds your answer, your weapon's light will dim. The blow will land with a pathetic thud, dealing only a FRACTION OF ITS DAMAGE. Worse, the Spectre will feed on your uncertainty, its own corrupting power growing with every misstep.

This is it, Champion. Your code is your spellbook, your logic is your sword, and your knowledge is the shield that will turn back the tide of chaos.

Focus. Strike true. The fate of the Agentverse depends on it.

Congratulations, Scholar.

You have successfully completed the trial. You have mastered the arts of data engineering, transforming raw, chaotic information into the structured, vectorized wisdom that empowers the entire Agentverse.

10. Cleanup: Expunging the Scholar's Grimoire

Congratulations on mastering the Scholar's Grimoire! To ensure your Agentverse remains pristine and your training grounds are cleared, you must now perform the final cleanup rituals. This will systematically remove all resources created during your journey.

Deactivate the Agentverse Components

You will now systematically dismantle the deployed components of your RAG system.

Delete All Cloud Run Services and Artifact Registry Repository

These commands remove your deployed Scholar agent and the Dungeon application from Cloud Run, then delete the Artifact Registry repository that stored their container images.

👉💻 In your terminal, run the following commands:

. ~/agentverse-dataengineer/set_env.sh

gcloud run services delete scholar-agent --region=${REGION} --quiet

gcloud run services delete agentverse-dungeon --region=${REGION} --quiet

gcloud artifacts repositories delete ${REPO_NAME} --location=${REGION} --quiet
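The `--quiet` flag skips every confirmation prompt, so the cleanup commands in this section delete resources immediately. If you would like to preview a command first, one option is a small dry-run wrapper. The sketch below is an assumption for illustration only: `run` and `DRY_RUN` are hypothetical names, not part of gcloud or the workshop scripts.

```shell
# run: hypothetical wrapper. With DRY_RUN=1 it prints the command
# instead of executing it; otherwise it executes the command as given.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Usage: preview first, then execute for real.
#   DRY_RUN=1
#   run gcloud run services delete scholar-agent --region="${REGION}" --quiet
#   DRY_RUN=0
#   run gcloud run services delete scholar-agent --region="${REGION}" --quiet
```

Checking the printed command confirms that variables such as `${REGION}` and `${REPO_NAME}` expanded to the values you expect before anything is removed.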

Delete BigQuery Datasets, Models, and Tables

This removes all the BigQuery resources, including the bestiary_data dataset, all tables within it, and the associated connection and models.

👉💻 In your terminal, run the following commands:

. ~/agentverse-dataengineer/set_env.sh

# Delete the BigQuery dataset, which will also delete all tables and models within it.

bq rm -r -f --dataset ${PROJECT_ID}:bestiary_data

# Delete the BigQuery connection

bq rm --force --connection --project_id=${PROJECT_ID} --location=${REGION} gcs-connection

Delete the Cloud SQL Instance

This removes the grimoire-spellbook instance, including its database and all tables within it.

👉💻 In your terminal, run:

. ~/agentverse-dataengineer/set_env.sh

gcloud sql instances delete ${INSTANCE_NAME} --project=${PROJECT_ID} --quiet

Delete Google Cloud Storage Buckets

This command removes the bucket that held your raw intel and Dataflow staging/temp files.

👉💻 In your terminal, run:

. ~/agentverse-dataengineer/set_env.sh

gcloud storage rm -r gs://${BUCKET_NAME} --quiet

Clean Up Local Files and Directories (Cloud Shell)

Finally, clear your Cloud Shell environment of the cloned repositories and created files. This step is optional but highly recommended for a complete cleanup of your working directory.

👉💻 In your terminal, run:

rm -rf ~/agentverse-dataengineer

rm -rf ~/agentverse-dungeon

rm -f ~/project_id.txt

You have now successfully cleared all traces of your Agentverse Data Engineer journey. Your project is clean, and you are ready for your next adventure.