1. Before you begin

This codelab goes through an example of building an AR web app. It uses JavaScript to render 3D models that appear as if they exist in the real world.

You use the WebXR Device API, which combines AR and virtual-reality (VR) functionality. You focus on the AR extensions to the WebXR Device API to create a simple AR app that runs on the interactive web.

What is AR?

AR is a term usually used to describe the mixing of computer-generated graphics with the real world. In the case of phone-based AR, this means convincingly placing computer graphics over a live camera feed. In order for this effect to remain realistic as the phone moves through the world, the AR-enabled device needs to understand the world it is moving through and determine its pose (position and orientation) in 3D space. This may include detecting surfaces and estimating lighting of the environment.

AR has become widely used in apps since the release of Google's ARCore and Apple's ARKit, whether for selfie filters or AR-based games.

What you'll build

In this codelab, you build a web app that places a model in the real world using augmented reality. Your app will:

- Use the target device's sensors to determine and track its position and orientation in the world

- Render a 3D model composited on top of a live camera view

- Execute hit tests to place objects on top of discovered surfaces in the real world

What you'll learn

- How to use the WebXR Device API

- How to configure a basic AR scene

- How to find a surface using AR hit tests

- How to load and render a 3D model synchronized with the real world camera feed

- How to render shadows based on the 3D model

This codelab is focused on AR APIs. Non-relevant concepts and code blocks are glossed over and are provided for you in the corresponding repository code.

What you'll need

- A workstation for coding and hosting static web content

- ARCore-capable Android device running Android 8.0 Oreo

- Google Chrome

- Google Play Services for AR installed (Chrome automatically prompts you to install it on compatible devices)

- A web server of your choice

- USB cable to connect your AR device to your workstation

- The sample code

- A text editor

- Basic knowledge of HTML, CSS, JavaScript, and Google Chrome Developer Tools

Click Try it on your AR device to try the first step of this codelab. If you get a page with a message displaying "Your browser does not have AR features", check that your Android device has Google Play Services for AR installed.

2. Set up your development environment

Download the code

- Click the following link to download all the code for this codelab on your workstation:

- Unpack the downloaded zip file. This unpacks a root folder (ar-with-webxr-master), which contains directories of several steps of this codelab, along with all the resources you need.

The step-03 and step-04 folders contain the desired end state of the third and fourth steps of this codelab, and the final folder contains the final result. They are there for reference.

You do all of your coding work in the work directory.

Install web server

- You're free to use your own web server. If you don't have one set up already, this section details how to set up Web Server for Chrome.

If you don't have that app installed on your workstation yet, you can install it from the Chrome Web Store.

- After installing the Web Server for Chrome app, go to chrome://apps and click the Web Server icon:


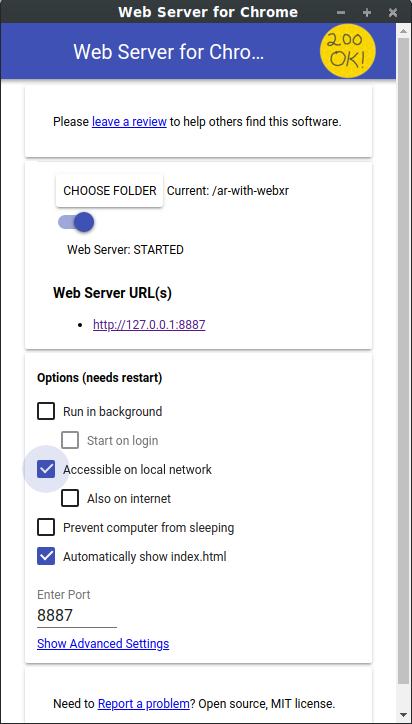

You see this dialog next, which lets you configure your local web server:

- Click choose folder and select the ar-with-webxr-master folder. This lets you serve your work in progress through the URL highlighted in the web-server dialog (in the Web Server URL(s) section).

- Under Options (needs restart), select the Automatically show index.html checkbox.

- Toggle Web server to Stop, then back to Started.

- Verify that at least one URL appears under Web Server URL(s): http://127.0.0.1:8887, the default localhost URL.

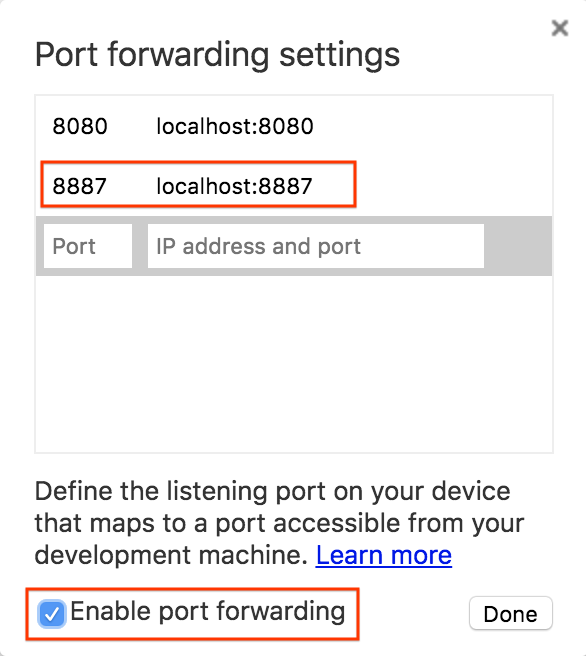

Set up port forwarding

Configure your AR device so that it accesses the same port on your workstation when you visit localhost:8887 on it.

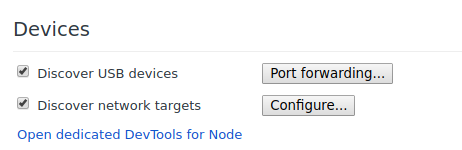

- On your development workstation, go to chrome://inspect and click Port forwarding...:

- Use the Port forwarding settings dialog to forward port 8887 to localhost:8887.

- Select the Enable port forwarding checkbox:

Verify your setup

Test your connection:

- Connect your AR device to your workstation with a USB cable.

- On your AR device in Chrome, enter http://localhost:8887 in the address bar. Your AR device should forward this request to your development workstation's web server. You should see a directory of files.

- On your AR device, click step-03 to load the step-03/index.html file in your browser.

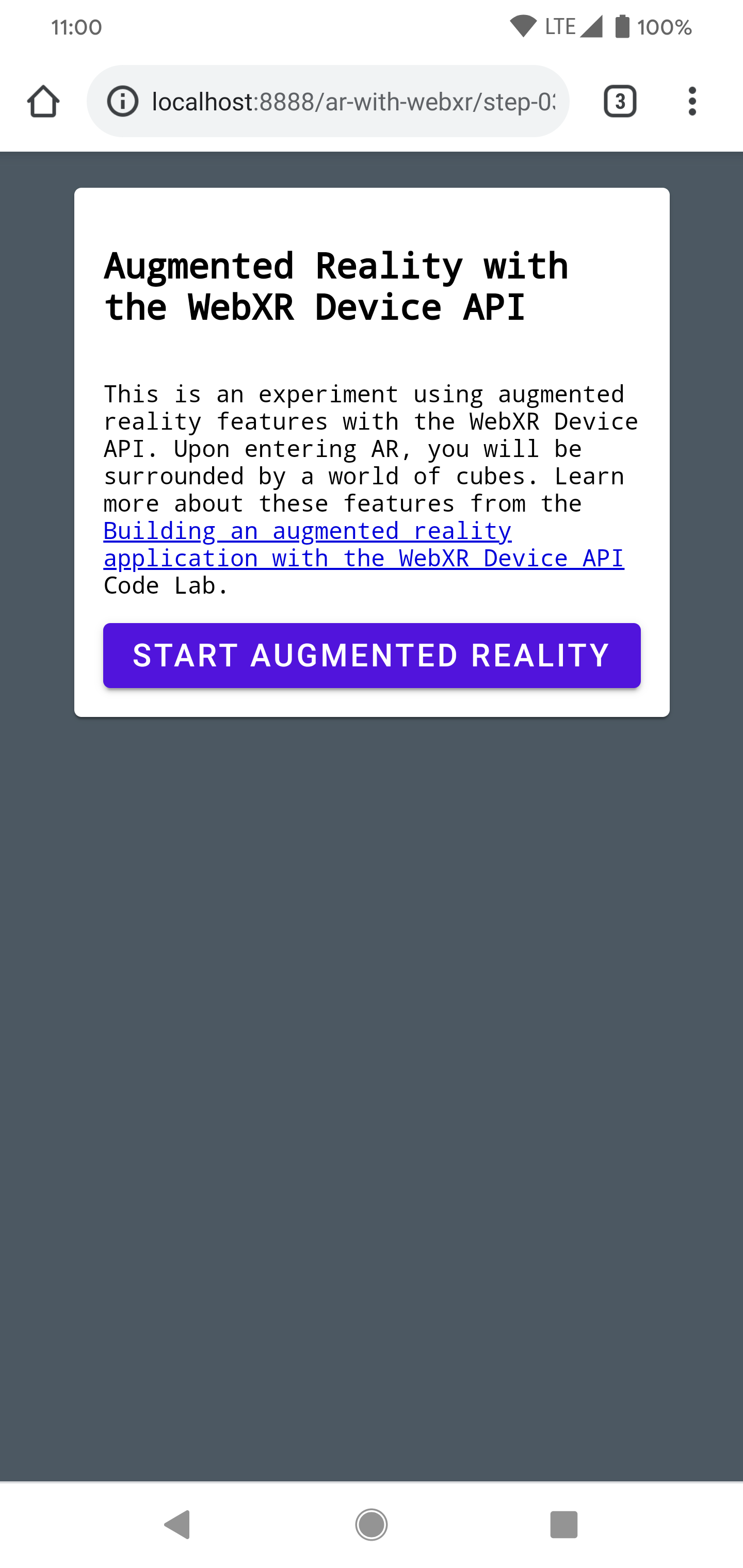

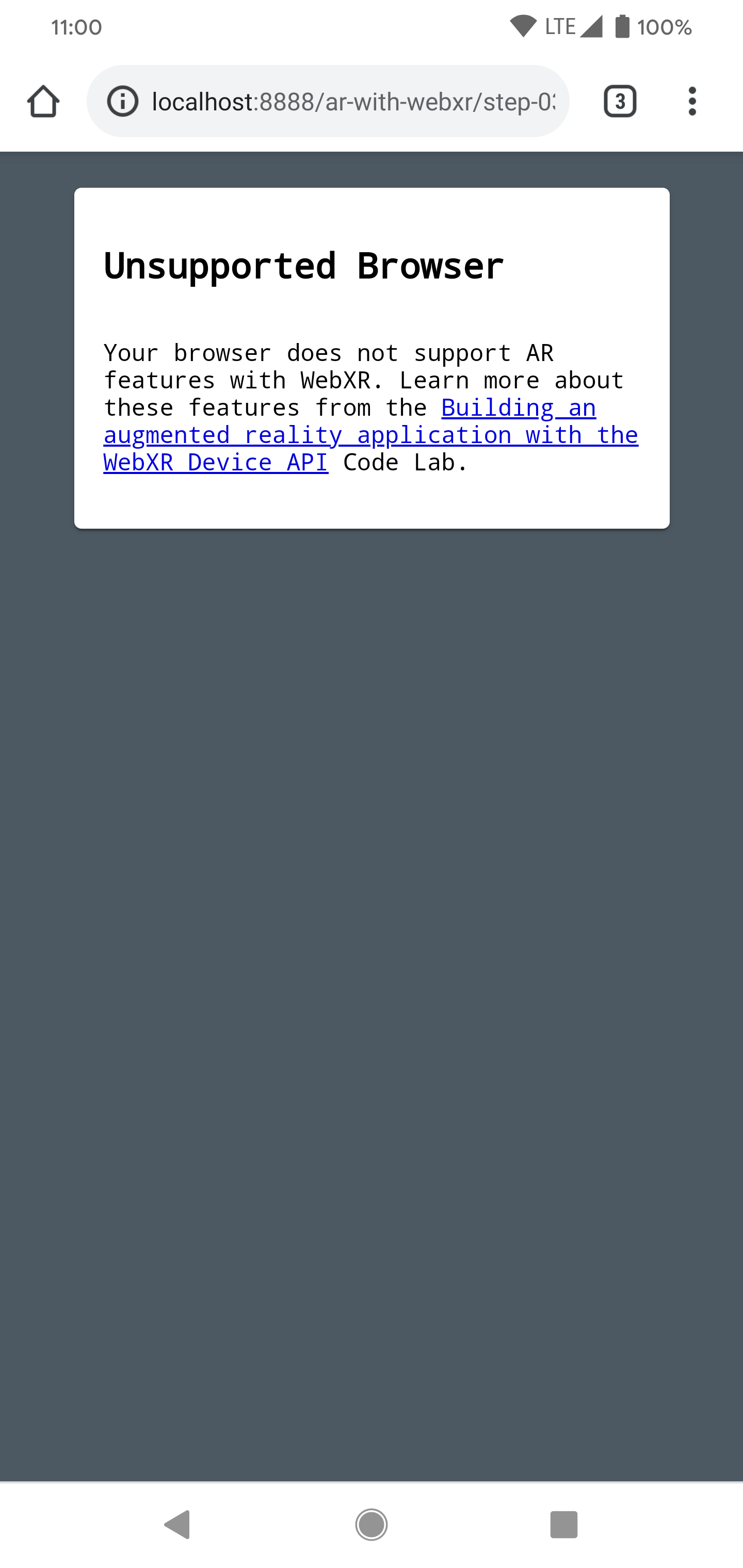

You should see a page that contains a Start augmented reality button. However, if you see an Unsupported browser error page, your device probably isn't compatible.

The connection to your web server should now work with your AR device.

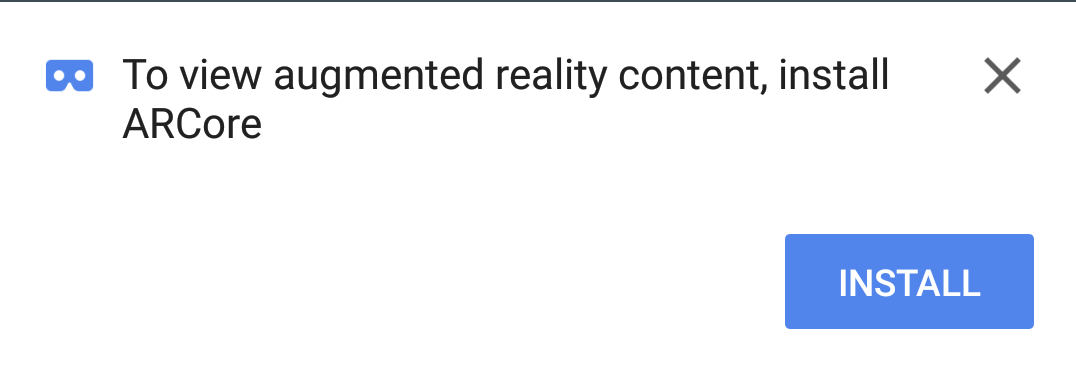

- Click Start augmented reality. You may be prompted to install ARCore.

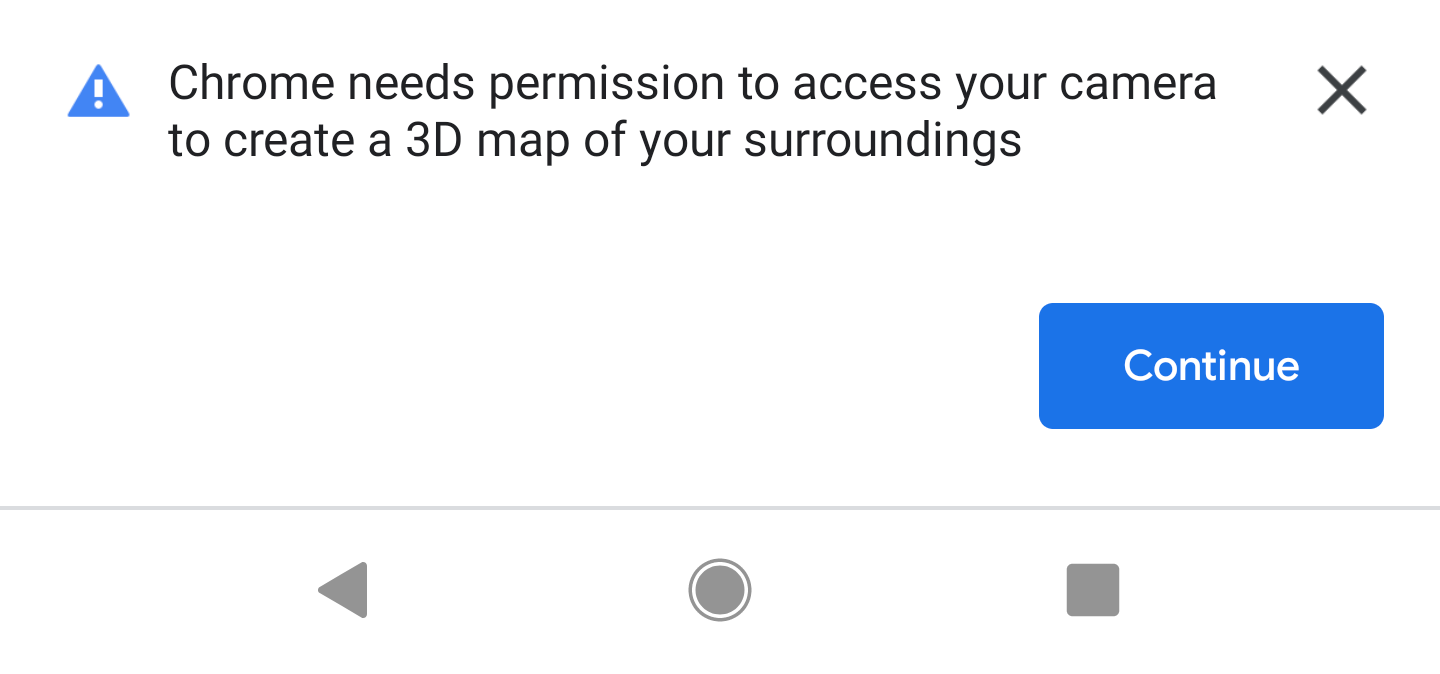

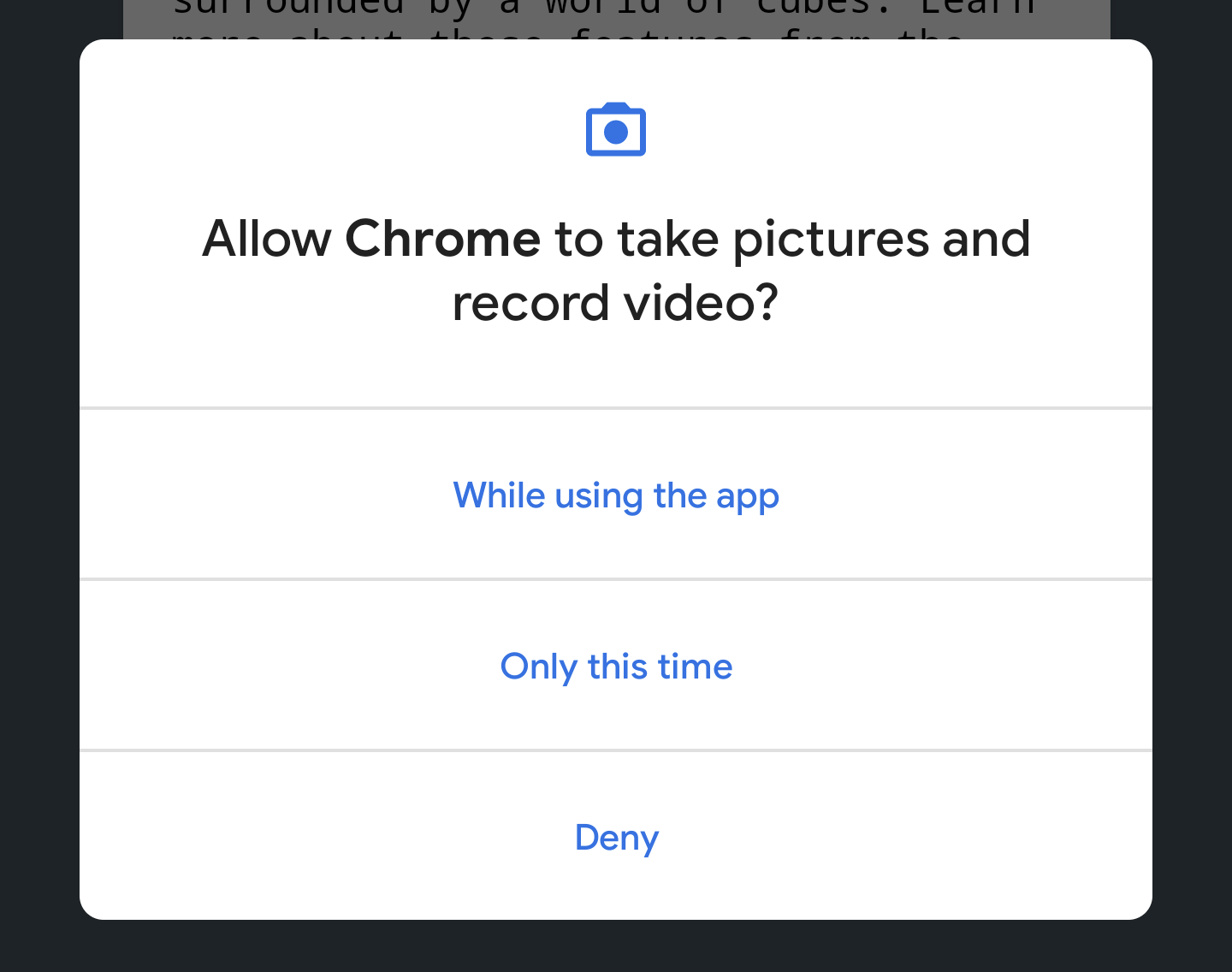

You see a camera permissions prompt the first time you run an AR app.


Once everything is good to go, you should see a scene of cubes overlaid on top of a camera feed. The scene understanding improves as more of the world is parsed by the camera, so moving around can help stabilize things.

3. Configure WebXR

In this step, you learn how to set up a WebXR session and a basic AR scene. The HTML page is provided with CSS styling and JavaScript for enabling basic AR functionality. This speeds up the setup process, allowing the codelab to focus on AR features.

The HTML page

You build an AR experience into a traditional webpage using existing web technologies. In this experience, you use a full-screen rendering canvas, so the HTML file doesn't need to have too much complexity.

AR features require a user gesture to initiate, so there are some Material Design components for displaying the Start AR button and the unsupported browser message.

The index.html file that is already in your work directory should look something like the following. This is a subset of the actual contents; don't copy this code into your file!

<!-- Don't copy this code into your file! -->

<html>

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Building an augmented reality application with the WebXR Device API</title>

<link rel="stylesheet" href="https://unpkg.com/material-components-web@latest/dist/material-components-web.min.css">

<script src="https://unpkg.com/material-components-web@latest/dist/material-components-web.min.js"></script>

<!-- three.js -->

<script src="https://unpkg.com/three@0.123.0/build/three.js"></script>

<script src="https://unpkg.com/three@0.123.0/examples/js/loaders/GLTFLoader.js"></script>

<script src="../shared/utils.js"></script>

<script src="app.js"></script>

</head>

<body>

<!-- Information about AR removed for brevity. -->

<!-- Starting an immersive WebXR session requires user interaction. Start the WebXR experience with a simple button. -->

<a onclick="activateXR()" class="mdc-button mdc-button--raised mdc-button--accent">

Start augmented reality

</a>

</body>

</html>

Open the key JavaScript code

The starting point for your app is app.js, which is already in your work directory. This file provides some boilerplate for setting up an AR experience.

Check for WebXR and AR support

Before a user can work with AR, check for the existence of navigator.xr and the necessary XR features. The navigator.xr object is the entry point for the WebXR Device API, so it should exist if the device is compatible. Also, check that the "immersive-ar" session mode is supported.

If all is well, clicking the Start augmented reality button attempts to create an XR session. Otherwise, onNoXRDevice() (in shared/utils.js) is called, which displays a message indicating a lack of AR support.

This code is already present in app.js, so no change needs to be made.

(async function() {

if (navigator.xr && await navigator.xr.isSessionSupported("immersive-ar")) {

document.getElementById("enter-ar").addEventListener("click", activateXR);

} else {

onNoXRDevice();

}

})();
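The same check can be factored into a small helper that receives the XR entry point as a parameter, which makes it easy to exercise without a device. This is only a sketch and is not part of the codelab repository: the name `isArSupported` is hypothetical, and treating a rejected `isSessionSupported()` promise as "not supported" is an assumption about how you want failures handled.

```javascript
// Hypothetical helper (not part of the codelab's app.js).
// `xr` is expected to be navigator.xr, or undefined on browsers without WebXR.
async function isArSupported(xr) {
  if (!xr || typeof xr.isSessionSupported !== "function") {
    return false;
  }
  try {
    // Resolves to true only when "immersive-ar" sessions can be created.
    return await xr.isSessionSupported("immersive-ar");
  } catch (err) {
    // Assumption: treat any failure of the query as "AR not available".
    return false;
  }
}
```

In the browser, you would call `isArSupported(navigator.xr)` and then either attach the click listener or call `onNoXRDevice()`, as the code above does.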

Request an XRSession

When you click Start augmented reality, the code calls activateXR(). This starts the AR experience.

- Find the activateXR() function in app.js. Some code has been left out:

activateXR = async () => {

// Initialize a WebXR session using "immersive-ar".

this.xrSession = /* TODO */;

// Omitted for brevity

}

The entry point to WebXR is XRSystem.requestSession(). Use the immersive-ar mode to allow rendered content to be viewed in a real-world environment.

- Initialize this.xrSession using the "immersive-ar" mode:

activateXR = async () => {

// Initialize a WebXR session using "immersive-ar".

this.xrSession = await navigator.xr.requestSession("immersive-ar");

// ...

}

Initialize an XRReferenceSpace

An XRReferenceSpace describes the coordinate system used for objects within the virtual world. The 'local' mode is best suited for an AR experience, with a reference space that has an origin near the viewer and stable tracking.

Initialize this.localReferenceSpace in onSessionStarted() with the following code:

this.localReferenceSpace = await this.xrSession.requestReferenceSpace("local");

Define an animation loop

- Use XRSession's requestAnimationFrame to start a rendering loop, similar to window.requestAnimationFrame.

On every frame, onXRFrame is called with a timestamp and an XRFrame.

- Complete the implementation of onXRFrame. When a frame is drawn, queue the next request by adding:

// Queue up the next draw request.

this.xrSession.requestAnimationFrame(this.onXRFrame);

- Add code to set up the graphics environment. Add to the bottom of onXRFrame:

// Bind the graphics framebuffer to the baseLayer's framebuffer.

const framebuffer = this.xrSession.renderState.baseLayer.framebuffer;

this.gl.bindFramebuffer(this.gl.FRAMEBUFFER, framebuffer);

this.renderer.setFramebuffer(framebuffer);

- To determine the viewer pose, use XRFrame.getViewerPose(). This XRViewerPose describes the device's position and orientation in space. It also contains an array of XRViews, which describes every viewpoint the scene should be rendered from in order to properly display on the current device. While stereoscopic VR has two views (one for each eye), AR devices only have one view.

The information in pose.views is most commonly used to configure the virtual camera's view matrix and projection matrix. This affects how the scene is laid out in 3D. When the camera is configured, the scene can be rendered.

- Add to the bottom of onXRFrame:

// Retrieve the pose of the device.

// XRFrame.getViewerPose can return null while the session attempts to establish tracking.

const pose = frame.getViewerPose(this.localReferenceSpace);

if (pose) {

// In mobile AR, we only have one view.

const view = pose.views[0];

const viewport = this.xrSession.renderState.baseLayer.getViewport(view);

this.renderer.setSize(viewport.width, viewport.height);

// Use the view's transform matrix and projection matrix to configure the THREE.camera.

this.camera.matrix.fromArray(view.transform.matrix);

this.camera.projectionMatrix.fromArray(view.projectionMatrix);

this.camera.updateMatrixWorld(true);

// Render the scene with THREE.WebGLRenderer.

this.renderer.render(this.scene, this.camera);

}
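Both `view.transform.matrix` and `view.projectionMatrix` are 16-element arrays in column-major order, which is the layout that the three.js `fromArray()` call above expects. To illustrate that layout, the sketch below reads the translation (elements 12 to 14) out of such an array. The helper name `positionFromMatrix` and the sample values are hypothetical and are not part of the codelab code.

```javascript
// Hypothetical helper for illustration only.
// Reads the translation component from a 4x4 column-major matrix,
// the layout used by XRRigidTransform.matrix.
function positionFromMatrix(m) {
  if (m.length !== 16) {
    throw new RangeError("Expected a 16-element matrix");
  }
  // In column-major order the fourth column occupies indices 12..15.
  return { x: m[12], y: m[13], z: m[14] };
}

// Identity rotation combined with a translation of (1, 1.5, -2).
const exampleMatrix = new Float32Array([
  1, 0, 0, 0,
  0, 1, 0, 0,
  0, 0, 1, 0,
  1, 1.5, -2, 1,
]);
const examplePosition = positionFromMatrix(exampleMatrix);
console.log(examplePosition.x, examplePosition.y, examplePosition.z); // prints "1 1.5 -2"
```

In a real frame, the same values are available directly as `view.transform.position`, so a helper like this is only useful for understanding what the matrix contains.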

Test it

Run the app; on your development device, visit work/index.html. You should see your camera feed with cubes floating in space whose perspective changes as you move your device. Tracking improves the more you move around, so explore what works for you and your device.

If you have issues running the app, check the Before you begin and Set up your development environment sections.

4. Add a targeting reticle

With a basic AR scene set up, it's time to start interacting with the real world using a hit test. In this section, you program a hit test and use it to find a surface in the real world.

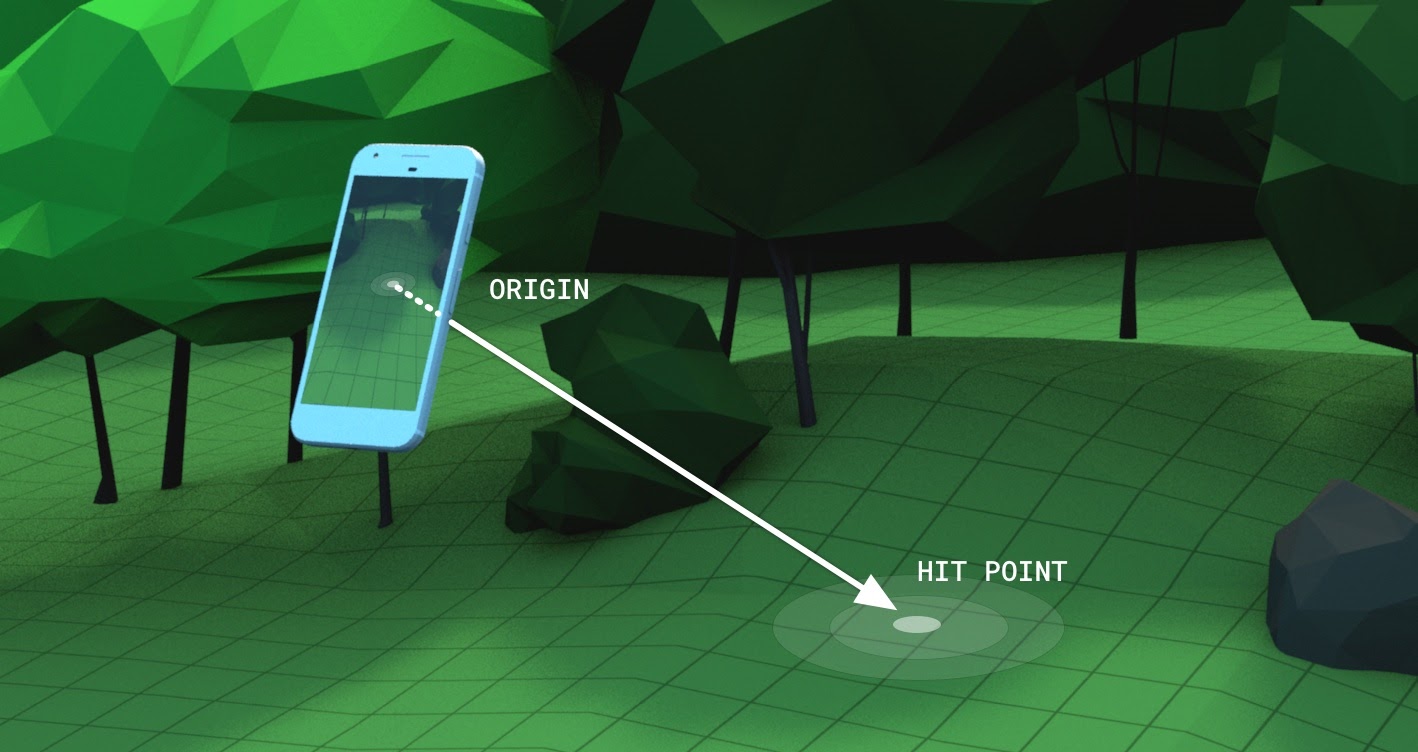

Understand a hit test

A hit test is generally a way to cast out a straight line from a point in space in some direction and determine if it intersects with any objects of interest. In this example, you aim the device at a location in the real world. Imagine a ray traveling from your device's camera and straight into the physical world in front of it.

The WebXR Device API lets you know if this ray intersected any objects in the real world, determined by the underlying AR capabilities and understanding of the world.
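The geometric idea can be sketched without any AR APIs. The example below intersects a ray with a horizontal plane at a known height. This is a simplification: the real hit test is performed by the underlying AR platform against surfaces it has detected, not by your code. The function name `intersectRayWithFloor` and the plain `{x, y, z}` vectors are assumptions made for this illustration.

```javascript
// Illustration only: intersect a ray with the horizontal plane y = floorY.
// Returns the hit point, or null when the ray never reaches the plane.
function intersectRayWithFloor(origin, direction, floorY) {
  // A ray parallel to the plane never intersects it.
  if (direction.y === 0) {
    return null;
  }
  // Solve origin.y + t * direction.y = floorY for t.
  const t = (floorY - origin.y) / direction.y;
  // A negative t means the plane is behind the ray's origin.
  if (t < 0) {
    return null;
  }
  return {
    x: origin.x + t * direction.x,
    y: floorY,
    z: origin.z + t * direction.z,
  };
}

// A camera held 1.5 units above the floor, looking forward (-z) and down.
const floorHit = intersectRayWithFloor(
  { x: 0, y: 1.5, z: 0 },
  { x: 0, y: -1, z: -1 },
  0
);
console.log(floorHit); // the hit point is { x: 0, y: 0, z: -1.5 }
```

When the ray points upward or runs parallel to the floor, the function returns `null`, which corresponds to a frame with no hit test results.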

Request an XRSession with extra features

In order to conduct hit tests, extra features are required when requesting the XRSession.

- In app.js, locate navigator.xr.requestSession.

- Add the features "hit-test" and "dom-overlay" as requiredFeatures as follows:

this.xrSession = await navigator.xr.requestSession("immersive-ar", {

requiredFeatures: ["hit-test", "dom-overlay"]

});

- Configure the DOM overlay. Layer the document.body element over the AR camera view like so:

this.xrSession = await navigator.xr.requestSession("immersive-ar", {

requiredFeatures: ["hit-test", "dom-overlay"],

domOverlay: { root: document.body }

});
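Listing a feature under `requiredFeatures` makes session creation fail on devices that lack it. If you would rather start the session without the overlay in that case, the WebXR API also accepts `optionalFeatures`. The sketch below is a variation on the codelab code, not a replacement for it. The function name `requestArSession` is hypothetical, and `xr` stands in for `navigator.xr`.

```javascript
// Hypothetical variation: "hit-test" stays required, "dom-overlay" becomes optional.
// `xr` is expected to be navigator.xr; `overlayRoot` is the element to show
// over the camera view (document.body in this codelab).
async function requestArSession(xr, overlayRoot) {
  const session = await xr.requestSession("immersive-ar", {
    requiredFeatures: ["hit-test"],
    optionalFeatures: ["dom-overlay"],
    domOverlay: { root: overlayRoot },
  });
  // domOverlayState is only set when the overlay was actually enabled.
  const overlayEnabled = Boolean(session.domOverlayState);
  return { session, overlayEnabled };
}
```

With this variation, any overlay-based UI is only visible when `overlayEnabled` is true. The rest of this codelab relies on the overlay, so it keeps `"dom-overlay"` as a required feature.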

Add a motion prompt

ARCore works best when an adequate understanding of the environment has been built. This is achieved through a process called simultaneous localization and mapping (SLAM) in which visually distinct feature points are used to compute a change in location and environment characteristics.

Use the "dom-overlay" from the previous step to display a motion prompt on top of the camera stream.

Add a <div> to index.html with ID stabilization. This <div> displays an animation to users representing stabilization status and prompts them to move around with their device to enhance the SLAM process. This is displayed once the user is in AR and hidden once the reticle finds a surface, controlled by <body> classes.

<div id="stabilization"></div>

</body>

</html>

Add a reticle

Use a reticle to indicate the location the device's view is pointing at.

- In app.js, replace the DemoUtils.createCubeScene() call in setupThreeJs() with a call to DemoUtils.createLitScene(), which provides a lit scene without the demo cubes.

setupThreeJs() {

// ...

// this.scene = DemoUtils.createCubeScene();

this.scene = DemoUtils.createLitScene();

}

- Populate the new scene with an object that represents the point of collision. The provided Reticle class handles loading the reticle model in shared/utils.js.

- Add the Reticle to the scene in setupThreeJs():

setupThreeJs() {

// ...

// this.scene = DemoUtils.createCubeScene();

this.scene = DemoUtils.createLitScene();

this.reticle = new Reticle();

this.scene.add(this.reticle);

}

To perform a hit test, you use a new XRReferenceSpace. This reference space indicates a new coordinate system from the viewer's perspective to create a ray that is aligned with the viewing direction. This coordinate system is used in XRSession.requestHitTestSource(), which can compute hit tests.

- Add the following to onSessionStarted() in app.js:

async onSessionStarted() {

// ...

// Setup an XRReferenceSpace using the "local" coordinate system.

this.localReferenceSpace = await this.xrSession.requestReferenceSpace("local");

// Add these lines:

// Create another XRReferenceSpace that has the viewer as the origin.

this.viewerSpace = await this.xrSession.requestReferenceSpace("viewer");

// Perform hit testing using the viewer as origin.

this.hitTestSource = await this.xrSession.requestHitTestSource({ space: this.viewerSpace });

// ...

}

- Using this hitTestSource, perform a hit test every frame:

  - If there are no results for the hit test, then ARCore hasn't had enough time to build an understanding of the environment. In this case, prompt the user to move the device using the stabilization <div>.

  - If there are results, move the reticle to that location.

- Modify onXRFrame to move the reticle:

onXRFrame = (time, frame) => {

// ... some code omitted ...

this.camera.updateMatrixWorld(true);

// Add the following:

const hitTestResults = frame.getHitTestResults(this.hitTestSource);

if (!this.stabilized && hitTestResults.length > 0) {

this.stabilized = true;

document.body.classList.add("stabilized");

}

if (hitTestResults.length > 0) {

const hitPose = hitTestResults[0].getPose(this.localReferenceSpace);

// update the reticle position

this.reticle.visible = true;

this.reticle.position.set(hitPose.transform.position.x, hitPose.transform.position.y, hitPose.transform.position.z);

this.reticle.updateMatrixWorld(true);

}

// More code omitted.

}
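The code above leaves the reticle at its last position when a frame has no hit results. One possible refinement, shown here as a sketch, is to hide the reticle in that case. The helper name `updateReticle` is hypothetical. It assumes only that the reticle has `visible`, `position.set()`, and `updateMatrixWorld()`, as three.js objects do, and that each hit result exposes `getPose()` as in the code above.

```javascript
// Hypothetical helper: show the reticle at the first hit, or hide it when there is none.
// Returns true when the reticle was placed on a surface.
function updateReticle(reticle, hitTestResults, referenceSpace) {
  const firstHit = hitTestResults.length > 0 ? hitTestResults[0] : null;
  // getPose() can return null if the pose cannot be determined for this frame.
  const hitPose = firstHit ? firstHit.getPose(referenceSpace) : null;
  if (!hitPose) {
    reticle.visible = false;
    return false;
  }
  const { x, y, z } = hitPose.transform.position;
  reticle.visible = true;
  reticle.position.set(x, y, z);
  reticle.updateMatrixWorld(true);
  return true;
}
```

Inside onXRFrame, this would be called as `updateReticle(this.reticle, hitTestResults, this.localReferenceSpace)` in place of the second `if` block.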

Add behavior on screen tap

An XRSession can emit events based on user interaction through the select event, which represents the primary action. In WebXR on mobile devices, the primary action is a screen tap.

- Add a select event listener at the bottom of onSessionStarted:

this.xrSession.addEventListener("select", this.onSelect);

In this example, a screen tap causes a sunflower to be placed at the reticle.

- Create an implementation for onSelect in the App class:

onSelect = () => {

if (window.sunflower) {

const clone = window.sunflower.clone();

clone.position.copy(this.reticle.position);

this.scene.add(clone);

}

}

Test the app

You created a reticle that you can aim with your device by using hit tests. When you tap the screen, a sunflower should be placed at the location that the reticle designates.

- When running your app, you should be able to see a reticle tracing the surface of the floor. If not, try looking around slowly with your phone.

- Once you see the reticle, tap it. A sunflower should be placed on top of it. You may have to move around a bit so that the underlying AR platform can better detect surfaces in the real world. Low lighting and surfaces without features decrease the quality of scene understanding, and increase the chance no hit will be found. If you run into any issues, check out the step-04/app.js code to see a working example of this step.

5. Add shadows

A realistic scene needs elements such as proper lighting and shadows on digital objects, which add realism and immersion.

Lighting and shadows are handled by three.js. You can specify which lights should cast shadows, which materials should receive and render these shadows, and which meshes can cast shadows. This app's scene contains a light that casts a shadow and a flat surface for rendering only shadows.
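In three.js these choices are expressed as boolean flags: `castShadow` on lights and on meshes, and `receiveShadow` on meshes that should display shadows. As a sketch of the "which meshes can cast shadows" part, the hypothetical helper below marks every mesh under a loaded model as a shadow caster. Whether the codelab's shared utilities already do this for the sunflower model is not shown in this section, so treat it as an illustration only.

```javascript
// Hypothetical helper: mark every mesh below `root` as a shadow caster.
// `root` is expected to be a THREE.Object3D, such as a loaded glTF scene.
// Returns the number of meshes that were updated.
function enableShadowCasting(root) {
  let count = 0;
  // Object3D.traverse() visits the object itself and all of its descendants.
  root.traverse((node) => {
    if (node.isMesh) {
      node.castShadow = true;
      count += 1;
    }
  });
  return count;
}
```

Nodes that are not meshes, such as groups, are left untouched because they have no geometry to cast a shadow.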

- Enable shadows on the three.js WebGLRenderer. After creating the renderer, set the following values on its shadowMap:

setupThreeJs() {

// ...

this.renderer = new THREE.WebGLRenderer(/* ... */);

// ...

this.renderer.shadowMap.enabled = true;

this.renderer.shadowMap.type = THREE.PCFSoftShadowMap;

// ...

}

The example scene created in DemoUtils.createLitScene() contains an object called shadowMesh, a flat, horizontal surface that only renders shadows. This surface initially has a Y position of 10,000 units. Once a sunflower is placed, move the shadowMesh to be the same height as the real-world surface, such that the shadow of the flower is rendered on top of the real-world ground.

- In onSelect, after adding clone to the scene, add code to reposition the shadow plane:

onSelect = () => {

if (window.sunflower) {

const clone = window.sunflower.clone();

clone.position.copy(this.reticle.position);

this.scene.add(clone);

const shadowMesh = this.scene.children.find(c => c.name === "shadowMesh");

shadowMesh.position.y = clone.position.y;

}

}
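`Array.prototype.find()` returns `undefined` when nothing matches, so the lookup above throws if the scene has no child named `shadowMesh`. A defensive variant might look like the following sketch. The function name `moveShadowPlane` is hypothetical, and it relies only on the `children` array and `name` property used above.

```javascript
// Hypothetical helper: move the shadow-receiving plane to height `y` if it exists.
// Returns true when the plane was found and moved.
function moveShadowPlane(scene, y) {
  const shadowMesh = scene.children.find((c) => c.name === "shadowMesh");
  if (!shadowMesh) {
    // Nothing to move; the caller can decide whether this is an error.
    return false;
  }
  shadowMesh.position.y = y;
  return true;
}
```

In onSelect, the last two lines inside the `if` block could then become `moveShadowPlane(this.scene, clone.position.y);`.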

Test it

When placing a sunflower, you should be able to see it casting a shadow. If you run into any issues, check out the final/app.js code to see a working example of this step.

6. Additional resources

Congratulations! You reached the end of this codelab on AR using WebXR.