1. Introduction

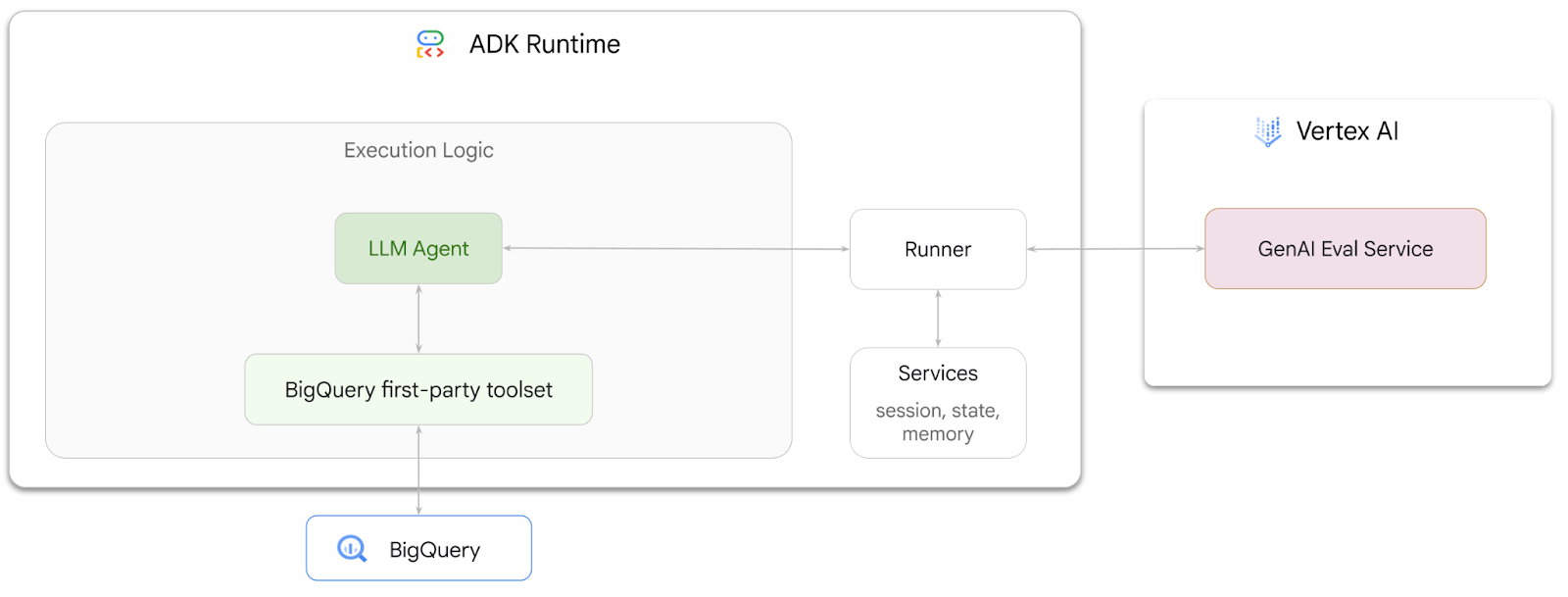

In this codelab you will learn how to build agents that can answer questions about data stored in BigQuery using Agent Development Kit (ADK). You'll also evaluate these agents using Vertex AI's GenAI Evaluation service:

What you'll do

- Build a conversational analytics agent in ADK

- Equip this agent with ADK's first-party toolset for BigQuery so it can interact with data stored in BigQuery

- Create an evaluation framework for your agent using Vertex AI GenAI Evaluation service

- Run evaluations on this agent against a set of golden responses

What you'll need

- A web browser such as Chrome

- A Google Cloud project with billing enabled, or

- A gmail account. The next section will show you how to redeem a free $5 credit for this codelab and set up a new project

This codelab is for developers of all levels, including beginners. You will use the command-line interface in Google Cloud Shell and Python code for ADK development. You don't need to be a Python expert, but a basic understanding of how to read code will help you understand the concepts.

2. Before you begin

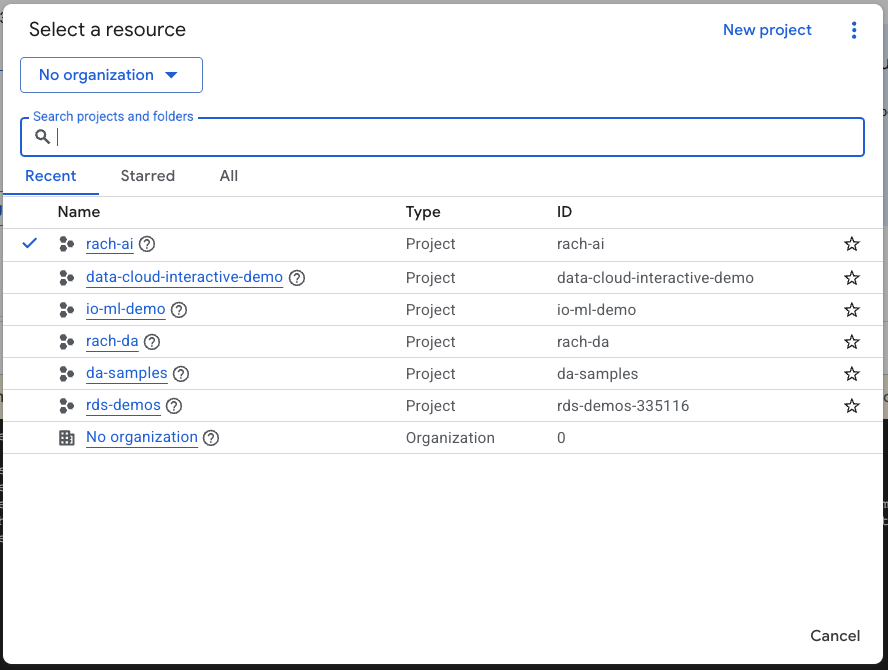

Create a Google Cloud Project

- In the Google Cloud Console, on the project selector page, select or create a Google Cloud project.

- Make sure that billing is enabled for your Cloud project. Learn how to check if billing is enabled on a project.

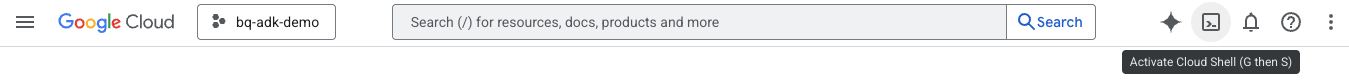

Start Cloud Shell

Cloud Shell is a command-line environment running in Google Cloud that comes preloaded with necessary tools.

- Click Activate Cloud Shell at the top of the Google Cloud console:

- Once connected to Cloud Shell, run this command to verify your authentication in Cloud Shell:

gcloud auth list

- Run the following command to confirm that your project is configured for use with gcloud:

gcloud config list project

- Use the following command to set your project:

export PROJECT_ID=<YOUR_PROJECT_ID>

gcloud config set project $PROJECT_ID

Enable APIs

- Run this command to enable all the required APIs and services:

gcloud services enable bigquery.googleapis.com \

aiplatform.googleapis.com \

cloudresourcemanager.googleapis.com

- On successful execution of the command, you should see a message similar to the one shown below:

Operation "operations/..." finished successfully.

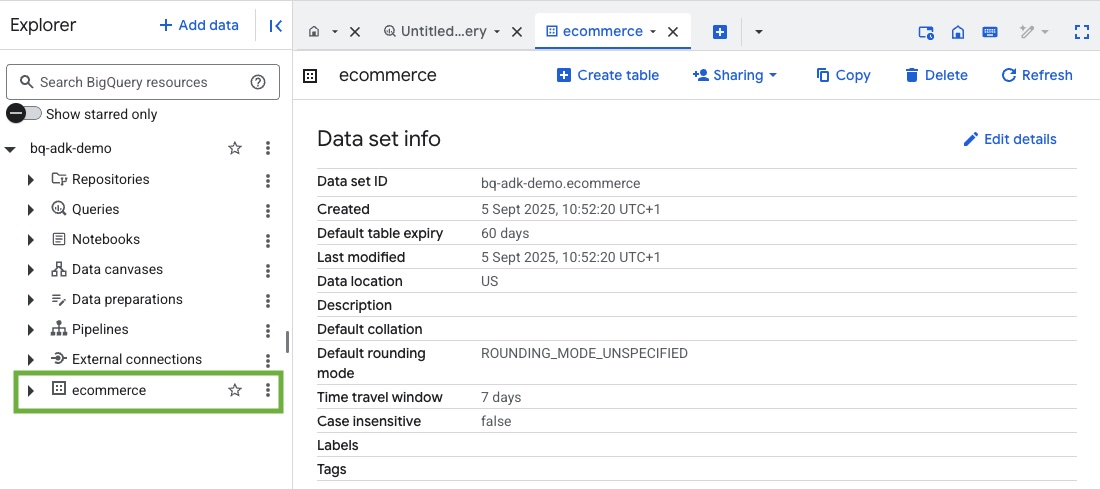

3. Create a BigQuery dataset

- Run the following command in Cloud Shell to create a new dataset called ecommerce in BigQuery:

bq mk --dataset --location=US ecommerce

A static subset of the BigQuery public dataset thelook_ecommerce is saved as AVRO files in a public Google Cloud Storage bucket.

- Run this command in Cloud Shell to load these Avro files into BigQuery as tables (events, order_items, products, users, orders):

bq load --source_format=AVRO --autodetect \

ecommerce.events \

gs://sample-data-and-media/thelook_dataset_snapshot/events/*.avro.gz

bq load --source_format=AVRO --autodetect \

ecommerce.order_items \

gs://sample-data-and-media/thelook_dataset_snapshot/order_items/*.avro.gz

bq load --source_format=AVRO --autodetect \

ecommerce.products \

gs://sample-data-and-media/thelook_dataset_snapshot/products/*.avro.gz

bq load --source_format=AVRO --autodetect \

ecommerce.users \

gs://sample-data-and-media/thelook_dataset_snapshot/users/*.avro.gz

bq load --source_format=AVRO --autodetect \

ecommerce.orders \

gs://sample-data-and-media/thelook_dataset_snapshot/orders/*.avro.gz

bq load --source_format=AVRO --autodetect \

ecommerce.inventory_items \

gs://sample-data-and-media/thelook_dataset_snapshot/inventory_items/*.avro.gz

bq load --source_format=AVRO --autodetect \

ecommerce.distribution_centers \

gs://sample-data-and-media/thelook_dataset_snapshot/distribution_centers/*.avro.gz

This process might take a few minutes.

- Verify the dataset and tables are created by visiting the BigQuery console in your Google Cloud Project:

4. Prepare environment for ADK agents

Return to Cloud Shell and ensure you are in your home directory. We will create a virtual Python environment and install the required packages.

- Open a new terminal tab in Cloud Shell and run this command to create and navigate to a folder named bigquery-adk-codelab:

mkdir bigquery-adk-codelab

cd bigquery-adk-codelab

- Create a virtual Python environment:

python -m venv .venv

- Activate the virtual environment:

source .venv/bin/activate

- Install Google's ADK and AI-Platform python packages. The AI platform and pandas package is required to evaluate the bigquery agent:

pip install google-adk google-cloud-aiplatform[evaluation] pandas

5. Create an ADK application

Now, let's create our BigQuery Agent. This agent will be designed to answer natural language questions about data stored in BigQuery.

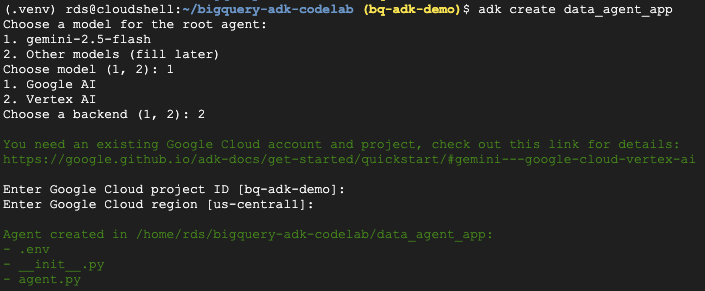

- Run the adk create utility command to scaffold a new agent application with the necessary folders and files:

adk create data_agent_app

Follow the prompts:

- Choose gemini-2.5-flash for the model.

- Choose Vertex AI for the backend.

- Confirm your default Google Cloud Project ID and region.

A sample interaction is shown below:

- Click the Open Editor button in Cloud Shell to open Cloud Shell Editor and view the newly created folders and files:

Note the generated files:

bigquery-adk-codelab/

├── .venv/

└── data_agent_app/

├── __init__.py

├── agent.py

└── .env

- init.py: Marks the folder as a Python module.

- agent.py: Contains the initial agent definition.

- .env: Contains environment variables for your project (You may need to click View > Toggle Hidden Files to view this file)

Update any variables that were not correctly set from the prompts:

GOOGLE_GENAI_USE_VERTEXAI=1 GOOGLE_CLOUD_PROJECT=<YOUR_GOOGLE_PROJECT_ID> GOOGLE_CLOUD_LOCATION=<YOUR_GOOGLE_CLOUD_REGION>

6. Define your agent and assign it the BigQuery toolset

To define an ADK Agent that interacts with BigQuery using the BigQuery toolset, replace the existing content of the agent.py file with the following code.

You must update the project ID in the agent's instructions to your actual project ID:

from google.adk.agents import Agent

from google.adk.tools.bigquery import BigQueryCredentialsConfig, BigQueryToolset

import google.auth

import dotenv

dotenv.load_dotenv()

credentials, _ = google.auth.default()

credentials_config = BigQueryCredentialsConfig(credentials=credentials)

bigquery_toolset = BigQueryToolset(

credentials_config=credentials_config

)

root_agent = Agent(

model="gemini-2.5-flash",

name="bigquery_agent",

description="Agent that answers questions about BigQuery data by executing SQL queries.",

instruction=(

"""

You are a BigQuery data analysis agent.

You are able to answer questions on data stored in project-id: '<YOUR_PROJECT_ID>' on the `ecommerce` dataset.

"""

),

tools=[bigquery_toolset]

)

def get_bigquery_agent():

return root_agent

The BigQuery toolset provides an agent with the capabilities to fetch metadata and execute SQL queries on BigQuery data. To use the toolset, you must authenticate, with the most common options being Application Default Credentials (ADC) for development, Interactive OAuth for when the agent needs to act on behalf of a specific user, or Service Account Credentials for secure, production-level authentication.

From here, you can chat with your agent by returning to Cloud Shell and running this command:

adk web

You should see a notification saying the webserver has started:

... INFO: Started server process [2735] INFO: Waiting for application startup. +-----------------------------------------------------------------------------+ | ADK Web Server started | | | For local testing, access at http://127.0.0.1:8000. | +-----------------------------------------------------------------------------+ INFO: Application startup complete. INFO: Uvicorn running on http://127.0.0.1:8000

Click on the url provided to launch adk web - you can ask your agent some questions about the dataset:

Close adk web and press Ctrl + C in terminal to shutdown the web server.

7. Prepare your agent for evaluation

Now you've defined your BigQuery agent, you need to make it runnable for evaluation.

The code below defines a function, run_conversation, that handles the conversation flow by creating an agent, running a session, and processing the events to retrieve the final response.

- Navigate back to Cloud Editor and create a new file named

run_agent.pyin the bigquery-adk-codelab directory and copy/paste the code below:

from data_agent_app.agent import get_bigquery_agent

from google.adk.sessions import InMemorySessionService

from google.adk.runners import Runner

from google.genai import types

import uuid

APP_NAME = "data_agent_app"

USER_ID = "biquery_user_101"

async def run_conversation(prompt: str):

"""Runs a conversation with the BigQuery agent using the ADK Runner."""

session_service = InMemorySessionService()

session_id = f"{APP_NAME}-{uuid.uuid4().hex[:8]}"

root_agent = get_bigquery_agent()

runner = Runner(

agent=root_agent, app_name=APP_NAME, session_service=session_service

)

session = await session_service.create_session(

app_name=APP_NAME, user_id=USER_ID, session_id=session_id

)

final_response_text = "Unable to retrieve final response."

tool_calls = []

try:

# Run the agent and process the events as they are generated

async for event in runner.run_async(

user_id=USER_ID,

session_id=session_id,

new_message=types.Content(role="user", parts=[types.Part(text=prompt)]),

):

if (

event.content

and event.content.parts

and event.content.parts[0].function_call

):

func_call = event.content.parts[0].function_call

tool_call = {

"tool_name": func_call.name,

"tool_input": dict(func_call.args),

}

tool_calls.append(tool_call)

if event.is_final_response():

if event.content and event.content.parts:

final_response_text = event.content.parts[0].text

break

except Exception as e:

print(f"Error in run_conversation: {e}")

final_response_text = f"An error occurred during the conversation: {e}"

return {

"response": final_response_text,

"predicted_trajectory": tool_calls

}

The code below defines utility functions to call this runnable function and return the result. It also includes helper functions that print and save the evaluation results:

- Create a new file named

utils.pyin the bigquery-adk-codelab directory and Copy/paste this code into the utils.py file:

import json

import os

import asyncio

import run_agent

import numbers

import math

def get_agent_response(prompt: str) -> dict:

"""Invokes the agent with a prompt and returns its response."""

try:

response = asyncio.run(run_agent.run_conversation(prompt)) # Invoke the agent

return response

except Exception as e:

return {"response": "Error: Agent failed to produce a response."}

def save_evaluation_results(eval_result, experiment_run):

"""Processes, saves, and prints the evaluation results for a single run."""

os.makedirs("eval_results", exist_ok=True)

output_file_path = os.path.join(

"eval_results", f"bq_agent_eval_results_{experiment_run}.json"

)

# Prepare data for JSON serialization

eval_result_dict = {

"summary_metrics": eval_result.summary_metrics,

"pointwise_metrics": eval_result.metrics_table.to_dict("records"),

}

# --- Save the results as a JSON file ---

with open(output_file_path, "w") as f:

json.dump(eval_result_dict, f, indent=4)

print(f"Results for run '{experiment_run}' saved to {output_file_path}")

def print_evaluation_summary(eval_result):

"""Prints a detailed summary of the evaluation results, including summary-level and aggregated pointwise metrics."""

pointwise_metrics = eval_result.metrics_table

# Print summary metrics for the current run

summary_metrics = eval_result.summary_metrics

if summary_metrics:

for key, value in summary_metrics.items():

if isinstance(value, numbers.Real) and not math.isnan(value):

value = f"{value:.2f}"

metric_name = key.replace("/mean", "").replace("_", " ").title()

print(f"- {metric_name}: {key}: {value}")

else:

print("No summary metrics found for this run.")

print("\n" + "=" * 50 + "\n")

if not pointwise_metrics.empty:

total_questions = len(pointwise_metrics)

avg_completeness_score = pointwise_metrics["completeness_metric/score"].mean()

avg_factual_accuracy_score = pointwise_metrics[

"factual_accuracy_metric/score"

].mean()

print("\n" + "=" * 50 + "\n")

print("--- Aggregated Evaluation Summary ---")

print(f"Total questions in evaluation dataset: {total_questions}")

print(f"Average Completeness Score: {avg_completeness_score:.2f}")

print(f"Average Factual Accuracy Score: {avg_factual_accuracy_score:.2f}")

print("\n" + "=" * 50 + "\n")

else:

print("\nNo successful evaluation runs were completed.")

8. Create an evaluation dataset

To evaluate your agent, you'll need to create an evaluation dataset, define your evaluation metrics, and run the evaluation task.

The evaluation dataset contains a list of questions (prompts) and their corresponding correct answers (references). The evaluation service will use these pairs to compare your agent's responses and determine if they're accurate.

- Create a new file named evaluation_dataset.json in the bigquery-adk-codelab directory and copy/paste the evaluation dataset below:

[

{

"prompt": "What tables are available in the dataset `ecommerce`?",

"reference": "The tables available in the dataset `ecommerce` are: `distribution_centers`, `events`, `inventory_items`, `order_items`, `orders`, `products`, and `users`."

},

{

"prompt": "How many users are there in total?",

"reference": "There are 100,000 users in total."

},

{

"prompt": "Find the email and age of the user with id 72685.",

"reference": "The email address of user 72685 is lindseybrennan@example.org and their age is 59."

},

{

"prompt": "How many orders have a status of Complete?",

"reference": "There are 31,077 orders with a status of 'complete'."

},

{

"prompt": "Which distribution center has the highest latitude, and what is it's latitude?",

"reference": "Chicago IL is the distribution center with the highest latitude, with a latitude of 41.84."

},

{

"prompt": "Retrieve the order id for all orders with a status of cancelled placed on the 1st June 2023 before 6am.",

"reference": "The order IDs for all orders with a status of 'cancelled' placed on the 1st June 2023 before 6am are: 26622, 49223"

},

{

"prompt": "What id the full name and user ids of the top 5 users with the most orders.",

"reference": "The top 5 users with the most orders are: Kristine Pennington (user ID 77359), Anthony Bright (user ID 4137), David Bean (user ID 30740), Michelle Wright (user ID 54563), and Matthew Reynolds (user ID 41136), each with 4 total orders."

},

{

"prompt": "Which distribution center is associated with the highest average retail price of its products, and what is the average retail price?",

"reference": "The distribution center associated with the highest average retail price of its products is Houston TX, with an average retail price of $69.74."

},

{

"prompt": "How many events were of type 'purchase' in Seoul during May 2024?",

"reference": "In May 2024, there were 57 'purchase' events recorded in Seoul."

},

{

"prompt": "For orders placed in June 2023, how many took three days or longer to be delivered after they were shipped?",

"reference": "In June 2023, there were 318 orders with a time difference of of 3 days or more between when they were shipped and delivered."

},

{

"prompt": "What are the names of the products and their respective retail price that have never been sold, but have a retail price greater than $210?",

"reference": "The products that have never been sold but have a retail price greater than $210 are:\n- Tommy Hilfiger Men's 2 Button Side Vent Windowpane Trim Fit Sport Coat, with a retail price of $249.9\n- MICHAEL Michael Kors Women's Hooded Leather Jacket: $211.11"

},

{

"prompt": "List the id and first name of users between the ages of 70 and 75 who have Facebook were sourced from Facebook and are located in California.",

"reference": "The users between the ages of 70 and 75 from California with 'Facebook' as their traffic source are:\n- Julie (ID: 25379)\n- Sherry (ID: 85196)\n- Kenneth (ID: 82238)\n- Lindsay (ID: 64079)\n- Matthew (ID: 99612)"

},

{

"prompt": "Identify the full name and user id of users over the age of 67 who live within 3.5 kilometers of any distribution_center.",

"reference": "The users over the age of 67 who live within 3.5 kilometers of any distribution center are:\n- William Campbell (user ID: 26082)\n- Becky Cantrell (user ID: 39008)"

},

{

"prompt": "What is the median age of users for each gender?",

"reference": "The median age for female users is 41, and the median age for male users is 41."

},

{

"prompt": "What is the average sale price of complete orders compared to returned orders, and what is the percentage difference (to two decimal places) between them?",

"reference": "The average sale price for 'Complete' orders was $59.56, while for 'Returned' orders it was $59.76. This represents a percentage difference of 0.34%."

}

]

9. Define your evaluation metrics

We will now use two custom metrics to assess the agent's ability to answer questions related to your BigQuery data, both providing a score from 1 to 5:

- Factual Accuracy Metric: This assesses if all data and facts presented in the response are precise and correct when compared to the ground truth.

- Completeness Metric: This measures whether the response includes all the key pieces of information requested by the user and present in the correct answer, without any critical omissions.

- Finally, create a new file named

evaluate_agent.pyin the bigquery-adk-codelab directory and copy/paste the metric definition code into the evaluate_agent.py file:

import uuid

import pandas as pd

from datetime import datetime

from vertexai.preview.evaluation import EvalTask

from vertexai.preview.evaluation.metrics import (

PointwiseMetricPromptTemplate,

PointwiseMetric,

TrajectorySingleToolUse,

)

from utils import save_evaluation_results, print_evaluation_summary, get_agent_response

factual_accuracy_metric = PointwiseMetric(

metric="factual_accuracy_metric",

metric_prompt_template=PointwiseMetricPromptTemplate(

instruction="""You are an expert evaluator assessing the factual accuracy of an AI's answer to a user's question, given a natural language prompt and a 'reference' (ground truth) answer. Your task is to determine if all factual information in the AI's answer is precise and correct when compared to the reference.""",

criteria={

"Accuracy": """The AI's answer must present factual information (numerical values, names, dates, specific values) that are **identical** to or an exact logical derivation from the reference.

- **Wording may vary, but the core factual information must be the same.**

- No numerical discrepancies.

- No incorrect names or identifiers.

- No fabricated or misleading details.

- Note: Minor rounding of numerical values that doesn't alter the core meaning or lead to significant misrepresentation is generally acceptable, assuming the prompt doesn't ask for exact precision."""

},

rating_rubric={

"5": "Excellent: The response is entirely factually correct. **All factual information precisely matches the reference.** There are absolutely no inaccuracies or misleading details.",

"3": "Good: The response is generally accurate, but contains minor, non-critical factual inaccuracies (e.g., a negligible rounding difference or slightly wrong detail) that do not impact the core understanding.",

"1": "Poor: The response contains significant factual errors, major numerical discrepancies, or fabricated information that makes the answer incorrect or unreliable."

},

input_variables=["prompt", "reference", "response"],

),

)

completeness_metric = PointwiseMetric(

metric="completeness_metric",

metric_prompt_template=PointwiseMetricPromptTemplate(

instruction="""You are an expert evaluator assessing the completeness of an AI's answer to a user's question, given a natural language prompt and a 'reference' (ground truth) answer. Your task is to determine if the AI's answer provides all the essential information requested by the user and present in the reference.""",

criteria={

"Completeness": """The AI's answer must include **all** key pieces of information explicitly or implicitly requested by the prompt and present in the reference.

- No omissions of critical facts.

- All requested attributes (e.g., age AND email, not just one) must be present.

- If the reference provides a multi-part answer, all parts must be covered."""

},

rating_rubric={

"5": "Excellent: The response is perfectly complete. **All key information requested by the prompt and present in the reference is included.** There are absolutely no omissions.",

"3": "Good: The response is mostly complete. It has only a slight, non-critical omission that does not impact the core understanding or utility of the answer.",

"1": "Poor: The response is critically incomplete. Essential parts of the requested information are missing, making the answer less useful or unusable for the user's purpose."

},

input_variables=["prompt", "reference", "response"],

),

)

tool_use_metric = TrajectorySingleToolUse(tool_name="list_table_ids")

I've also included the TrajectorySingleToolUse metric for trajectory evaluation. When these metrics are present, the agent tool calls (including the raw SQL it generates and executes against BigQuery) will be included in the evaluation response, allowing for detailed inspection.

The TrajectorySingleToolUse metric determines whether an agent has used a particular tool. In this case, I chose the list_table_ids, as we expect this tool to be called for every question in the evaluation dataset. Unlike other trajectory metrics, this metric doesn't require you to specify all the expected tool calls and arguments for each question in the evaluation dataset.

10. Create your evaluation task

The EvalTask takes the evaluation dataset and the custom metrics and sets up a new evaluation experiment.

This function, run_eval, is the main engine for the evaluation. It loops through an EvalTask, running your agent on each question in the dataset. For each question, it records the agent's response and then uses the metrics you defined earlier to grade it.

Copy/paste the following code at the bottom of evaluate_agent.py file:

def run_eval():

eval_dataset = pd.read_json("evaluation_dataset.json")

# Generate a unique run name

current_time = datetime.now().strftime("%Y%m%d-%H%M%S")

experiment_run_id = f"{current_time}-{uuid.uuid4().hex[:8]}"

print(f"--- Starting evaluation: ({experiment_run_id}) ---")

# Define the evaluation task with your dataset and metrics

eval_task = EvalTask(

dataset=eval_dataset,

metrics=[

factual_accuracy_metric,

completeness_metric,

tool_use_metric,

],

experiment="evaluate-bq-data-agent"

)

try:

eval_result = eval_task.evaluate(

runnable=get_agent_response, experiment_run_name=experiment_run_id

)

save_evaluation_results(eval_result, experiment_run_id)

print_evaluation_summary(eval_result)

except Exception as e:

print(f"An error occurred during evaluation run: {e}")

if __name__ == "__main__":

run_eval()

The results are summarized and saved to a JSON file.

11. Run your evaluation

Now that you have your agent, evaluation metrics, and evaluation dataset ready, you can run the evaluation.

Return to Cloud Shell, ensure you are in the bigquery-adk-codelab directory and run the evaluation script using the following command:

python evaluate_agent.py

You will see output similar to this as the evaluation progresses:

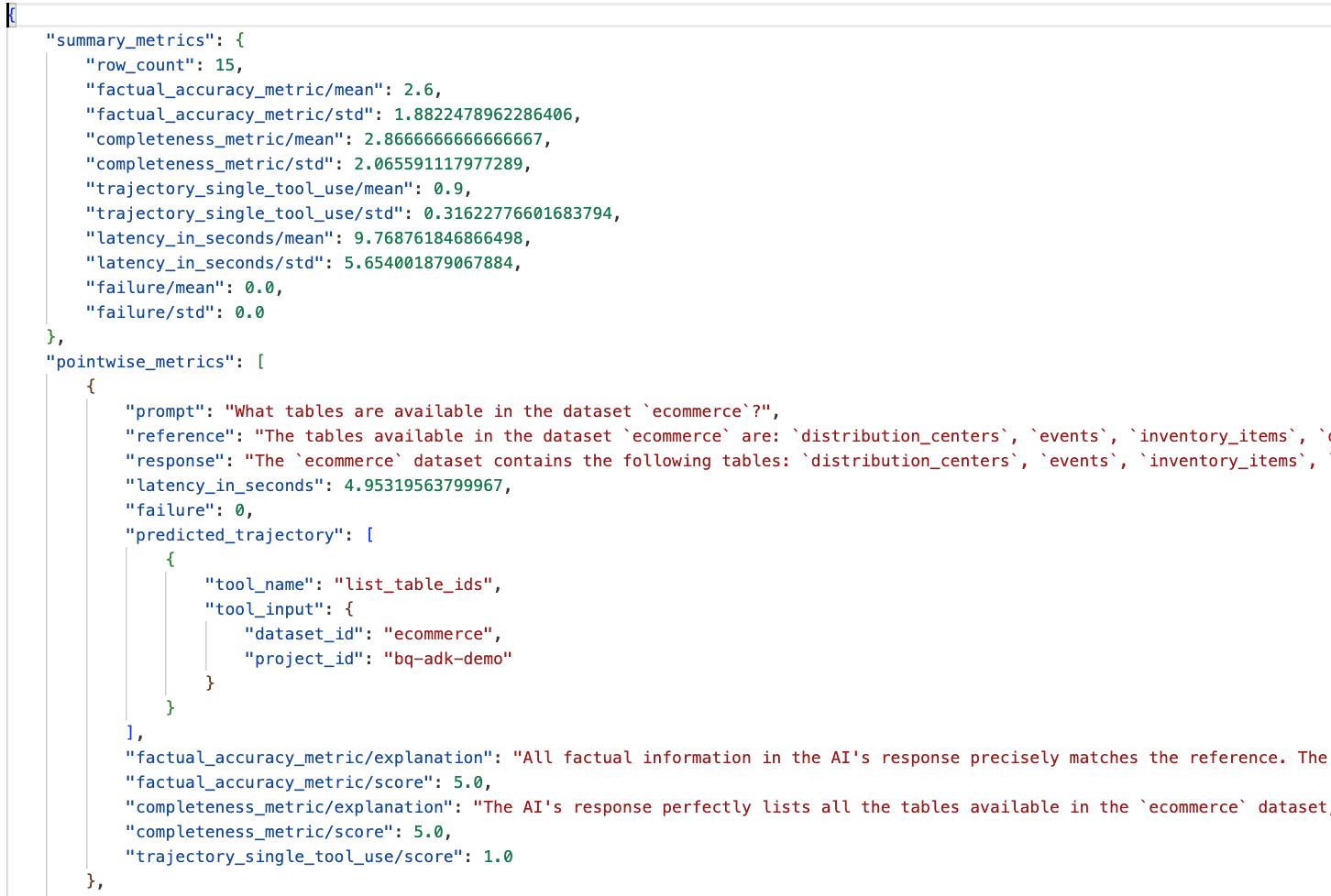

Evaluation Took:11.410560518999773 seconds Results for run '20250922-130011-300ea89b' saved to eval_results/bq_agent_eval_results_20250922-130011-300ea89b.json - Row Count: row_count: 15.00 - Factual Accuracy Metric: factual_accuracy_metric/mean: 2.60 - Factual Accuracy Metric/Std: factual_accuracy_metric/std: 1.88 - Completeness Metric: completeness_metric/mean: 2.87 - Completeness Metric/Std: completeness_metric/std: 2.07 - Trajectory Single Tool Use: trajectory_single_tool_use/mean: 0.90 - Trajectory Single Tool Use/Std: trajectory_single_tool_use/std: 0.32 - Latency In Seconds: latency_in_seconds/mean: 9.77 - Latency In Seconds/Std: latency_in_seconds/std: 5.65 - Failure: failure/mean: 0.00 - Failure/Std: failure/std: 0.00

If you run into any errors like the below, it just means the agent didn't call any tools for a particular run; you can inspect the agent behavior further in the next step.

Error encountered for metric trajectory_single_tool_use at dataset index 1: Error: 400 List of Field: trajectory_single_tool_use_input.instances[0].predicted_trajectory; Message: Required field is not set.

Interpreting the Results:

Navigate to the eval_results folder in the data_agent_app directory and open the evaluation result file named bq_agent_eval_results_*.json:

- Summary Metrics: Provide an aggregate view of your agent's performance across the dataset.

- Factual Accuracy and Completeness Pointwise Metrics: A score closer to 5 indicates higher accuracy and completeness. There will be a score for each question, along with a written explanation of why it received that score.

- Predicted Trajectory: This is the list of tool calls used by the agents to reach the final response. This will allow us to see any SQL queries generated by the agent.

We can see that the mean score for the average completeness and factual accuracy is 2.87 and 2.6 respectively.

The results aren't very good! Let's try to improve our agent's ability to answer questions.

12. Improve your agent's evaluation results

Navigate to the agent.py in the bigquery-adk-codelab directory and update the agent's model and system instructions. Remember to replace <YOUR_PROJECT_ID> with your project id:

root_agent = Agent(

model="gemini-2.5-flash",

name="bigquery_agent",

description="Agent that answers questions about BigQuery data by executing SQL queries.",

instruction=(

"""

You are a data analysis agent with access to several BigQuery tools.

Use the appropriate tools to fetch relevant BigQuery metadata and execute SQL queries.

You must use these tools to answer the user's questions.

Run these queries in the project-id: '<YOUR_PROJECT_ID>' on the `ecommerce` dataset.

"""

),

tools=[bigquery_toolset]

)

Now head back to terminal and re-run the evaluation:

python evaluate_agent.py

You should see the results are now much better:

================================================== --- Aggregated Evaluation Summary --- Total questions in evaluation dataset: 15 Average Completeness Score: 4.73 Average Factual Accuracy Score: 4.20 ==================================================

Evaluating your agent is an iterative process. To improve the evaluation results further, you can tweak the system instructions, the model parameters or even the metadata in BigQuery - check out these tips and tricks for some more ideas.

13. Clean Up

To avoid ongoing charges to your Google Cloud account, it's important to delete the resources we created during this workshop.

If you created any specific BigQuery datasets or tables for this codelab (e.g., the ecommerce dataset), you might want to delete them:

bq rm -r $PROJECT_ID:ecommerce

To remove the bigquery-adk-codelab directory and its contents:

cd .. # Go back to your home directory if you are still in bigquery-adk-codelab

rm -rf bigquery-adk-codelab

14. Congratulations

Congratulations! You've successfully built and evaluated a BigQuery agent using the Agent Development Kit (ADK). You now understand how to set up an ADK agent with BigQuery tools and measure its performance using custom evaluation metrics.