1. Introduction

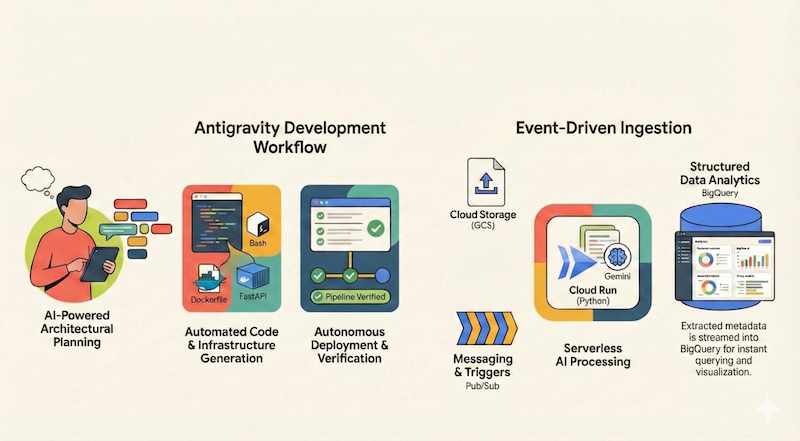

In this codelab, you will learn how to use Google Antigravity (referred to as Antigravity for the rest of the document) to design, build, and deploy a serverless application to Google Cloud. We will build a serverless and event-driven document pipeline that ingests files from Google Cloud Storage (GCS), processes them using Cloud Run and Gemini, and streams their metadata into BigQuery.

What you'll learn

- Use Antigravity for architectural planning and design.

- Generate infrastructure as code (shell scripts) with an AI agent.

- Build and deploy a Python-based Cloud Run service.

- Integrate Gemini on Vertex AI for multimodal document analysis.

- Verify the end-to-end pipeline using Antigravity's Walkthrough artifact.

What you'll need

- Google Antigravity installed. If you need help installing Antigravity or understanding the basics, complete the codelab Getting Started with Google Antigravity first.

- A Google Cloud Project with billing enabled.

- gcloud CLI installed and authenticated.

2. Overview of the app

Before we start architecting and implementing the application with Antigravity, let's first outline what we want to build.

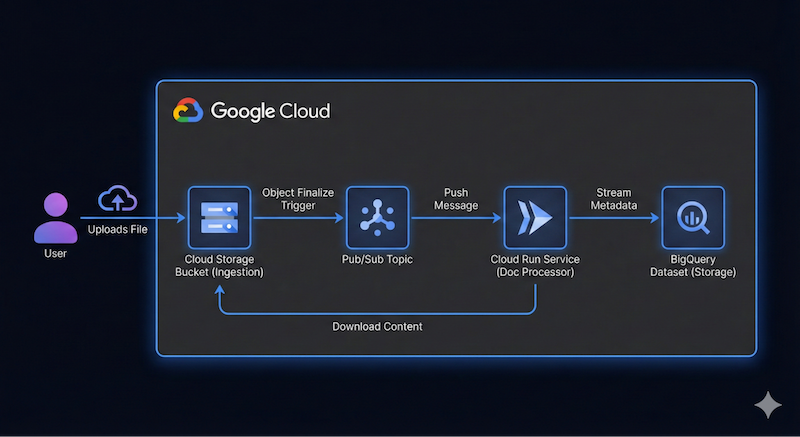

We want to build a serverless and event-driven document pipeline that ingests files from Google Cloud Storage (GCS), processes them using Cloud Run and Gemini, and streams their metadata into BigQuery.

A high level architecture diagram for this application could look like this:

This does not have to be precise. Antigravity can help us figure out the architecture details as we go along. However, it helps to have an idea of what you want to build. The more detail you provide, the better the architecture and code you will get from Antigravity.

3. Plan the architecture

We are ready to start planning the architecture details with Antigravity!

Antigravity excels at planning complex systems. Instead of writing code immediately, we can start by defining the high-level architecture.

First, ensure that you are in the Agent Manager. If you just opened Antigravity, click the Open Agent Manager button, which appears both in the middle of the window and in the top right corner.

In the Agent Manager, you can either open a workspace or use the Playground, an independent workspace for quick prototypes and experimentation. Let's start with the Playground.

Click on the + button to start a new conversation in the Playground:

This brings up an interface where you can provide the prompt as shown below:

In the top right corner, click the settings icon ⚙️ and set both Review Policy (under Artifact) and Terminal Command Auto Execution (under Terminal) to Request Review. This ensures that you get to review and approve each step of the plan before the agent executes it.

Prompt

Now, we're ready to provide our first prompt to Antigravity.

First, make sure that Antigravity is in Planning mode. For the model, let's go with Gemini Pro (High), but feel free to experiment with other models.

Enter the following prompt and click the submit button:

I want to build a serverless event-driven document processing pipeline on Google Cloud.

Architecture:

- Ingestion: Users upload files to a Cloud Storage bucket.

- Trigger: File uploads trigger a Pub/Sub message.

- Processor: A Python-based Cloud Run service receives the message, processes the file (simulated OCR), and extracts metadata.

- Storage: Stream the metadata (filename, date, tags, word_count) into a BigQuery dataset.

Task List & Implementation Plan

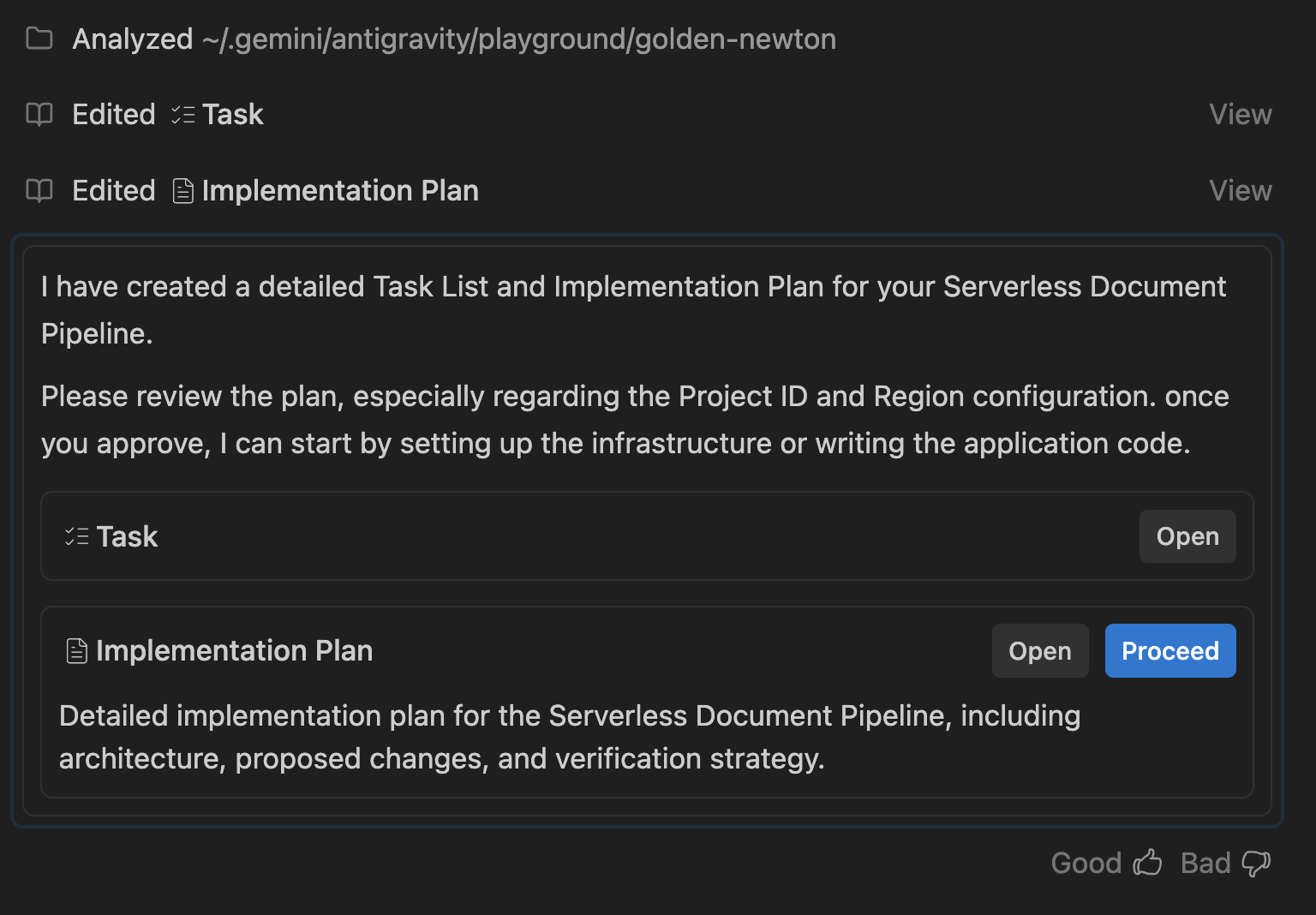

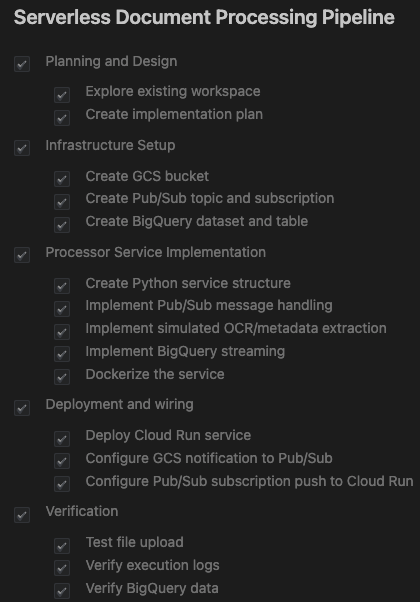

Antigravity will analyze your request and generate a Task list and Implementation Plan.

This plan outlines:

- Infrastructure: GCS Bucket, Pub/Sub Topic, BigQuery Dataset.

- Processor: Python/Flask app, Dockerfile, Requirements.

- Integration: GCS Notifications → Pub/Sub → Cloud Run.

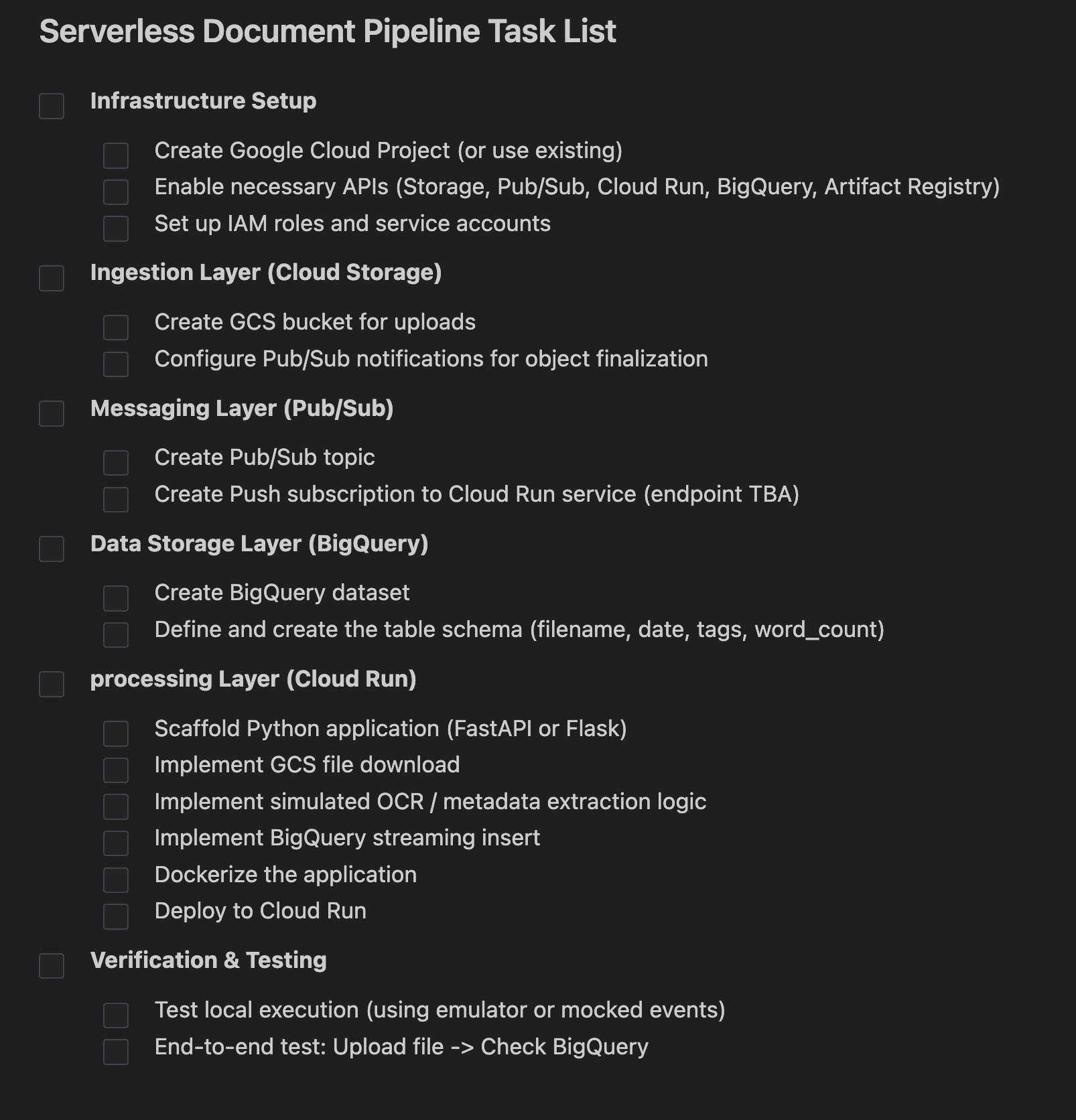

You should see something similar to the following:

Click on the Open button next to the Task row. This should show you a set of tasks that have been created by Antigravity. The agent will go through them one by one:

The next step is to review the implementation plan and give the agent permission to proceed.

Click on the implementation plan to see its details and read it carefully. This is your chance to give feedback on the implementation. You can click on any part of the plan and add comments. After adding comments, submit them for review so the agent can apply the changes you want to see, especially around naming, Google Cloud project ID, region, and so on.

Once it all looks fine, give the agent permission to proceed with the implementation plan by clicking on the Proceed button.

4. Generate the application

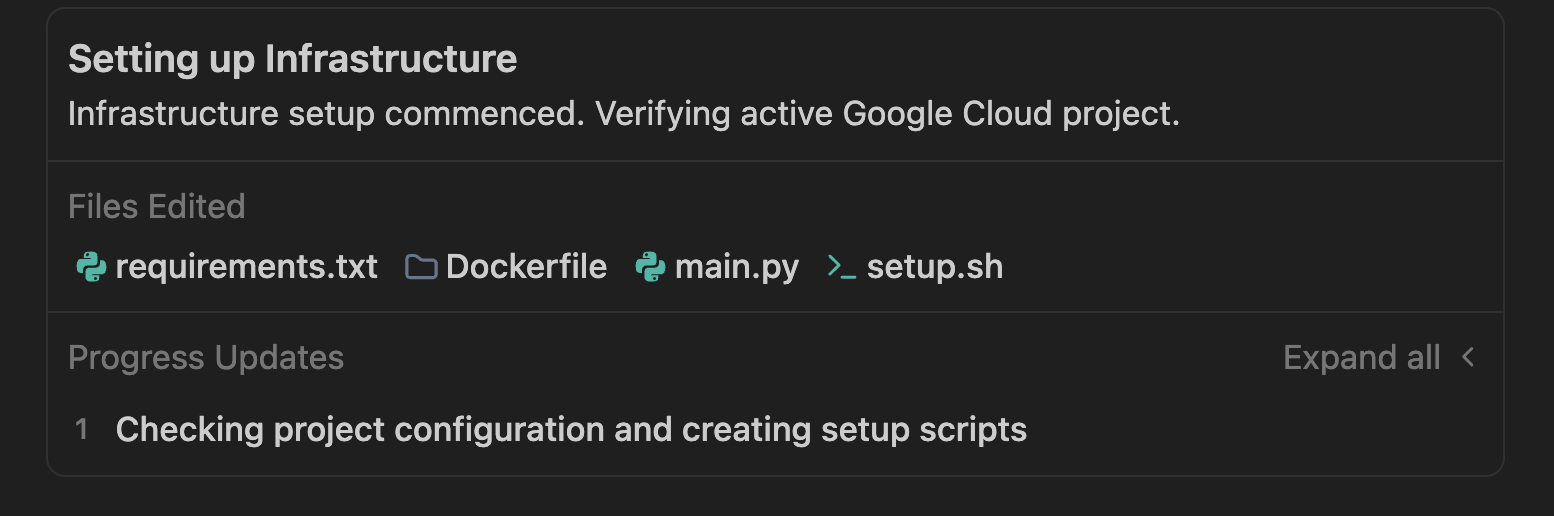

Once the plan is approved, Antigravity starts generating files required for the application, from provisioning scripts to application code.

Antigravity will create a folder and start creating the files necessary for the project. In our sample run, we saw the following:

A setup.sh or a similarly named shell script file is generated, which automates the resource creation. It handles:

- Enabling APIs (run, pubsub, bigquery, storage).

- Creating the Google Cloud Storage bucket (doc-ingestion-{project-id}).

- Creating the BigQuery dataset and table (pipeline_data.processed_docs).

- Configuring Pub/Sub topics and notifications.

The agent should generate a Python application (main.py) that listens for Pub/Sub push messages. It uses simulated OCR logic that generates random word counts and tags and persists them to BigQuery.
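To make the flow concrete, here is a minimal sketch of the two core steps such a service performs: decoding the Pub/Sub push body and producing simulated metadata. It assumes the bucket notification uses the default JSON payload, where the base64-encoded message data holds the object resource with bucket and name fields. The function names and the tag pool are hypothetical, and the code the agent generates for you will likely look different.

```python
import base64
import json
import random
from datetime import datetime, timezone


def parse_push_envelope(envelope: dict) -> tuple[str, str]:
    """Extract (bucket, object name) from a Pub/Sub push request body."""
    message = envelope["message"]
    # The notification payload arrives as base64-encoded JSON in "data".
    payload = json.loads(base64.b64decode(message["data"]).decode("utf-8"))
    return payload["bucket"], payload["name"]


def simulate_ocr(filename: str, rng: random.Random) -> dict:
    """Stand-in for real OCR: random tags and word count for one file."""
    tag_pool = ["invoice", "contract", "report", "memo"]  # hypothetical tags
    return {
        "filename": filename,
        "upload_date": datetime.now(timezone.utc).isoformat(),
        "tags": rng.sample(tag_pool, k=2),
        "word_count": rng.randint(100, 5000),
    }
```

In a real service, the returned dictionary could then be streamed into the table, for example with the BigQuery Python client's insert_rows_json method.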

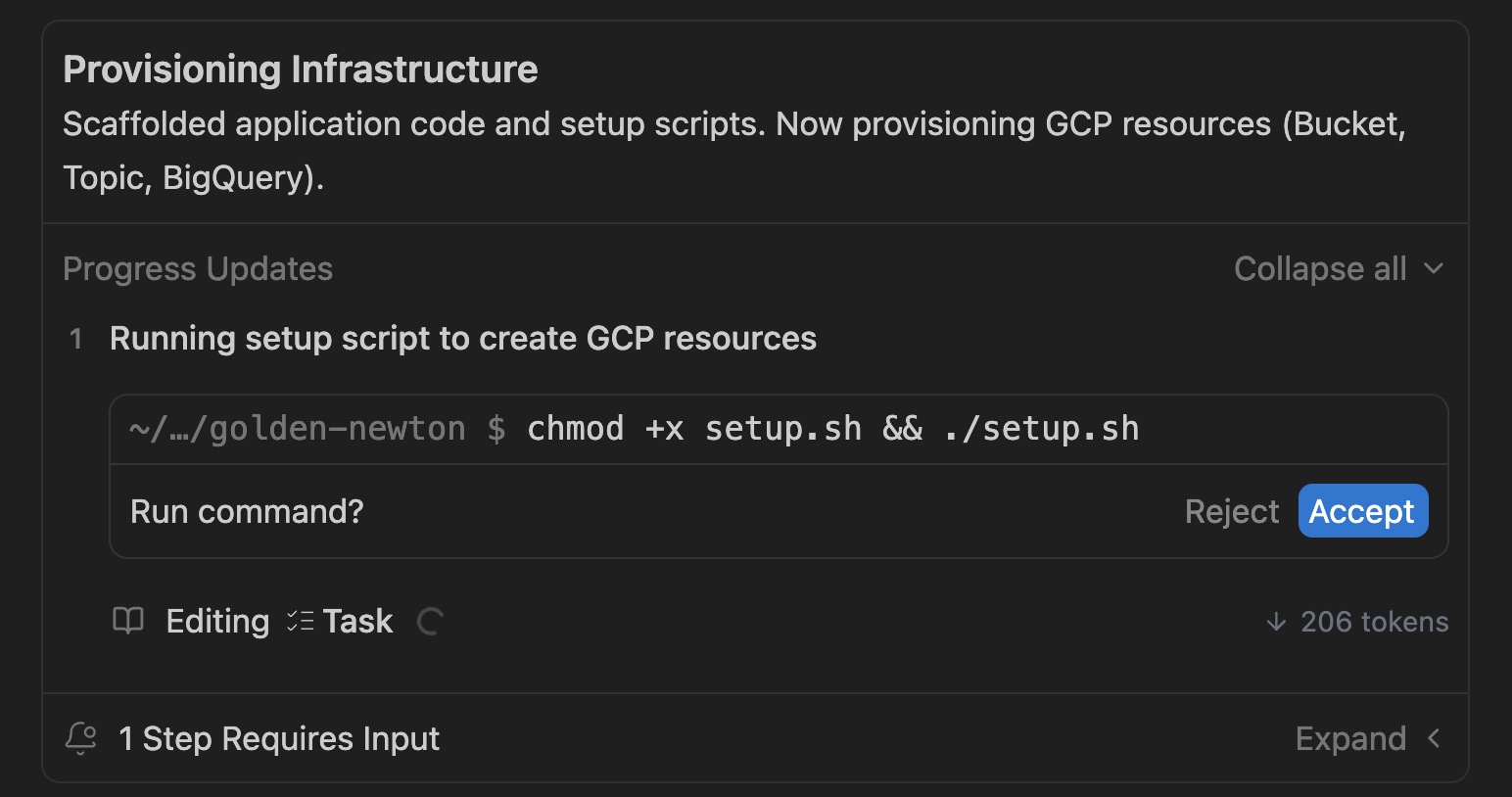

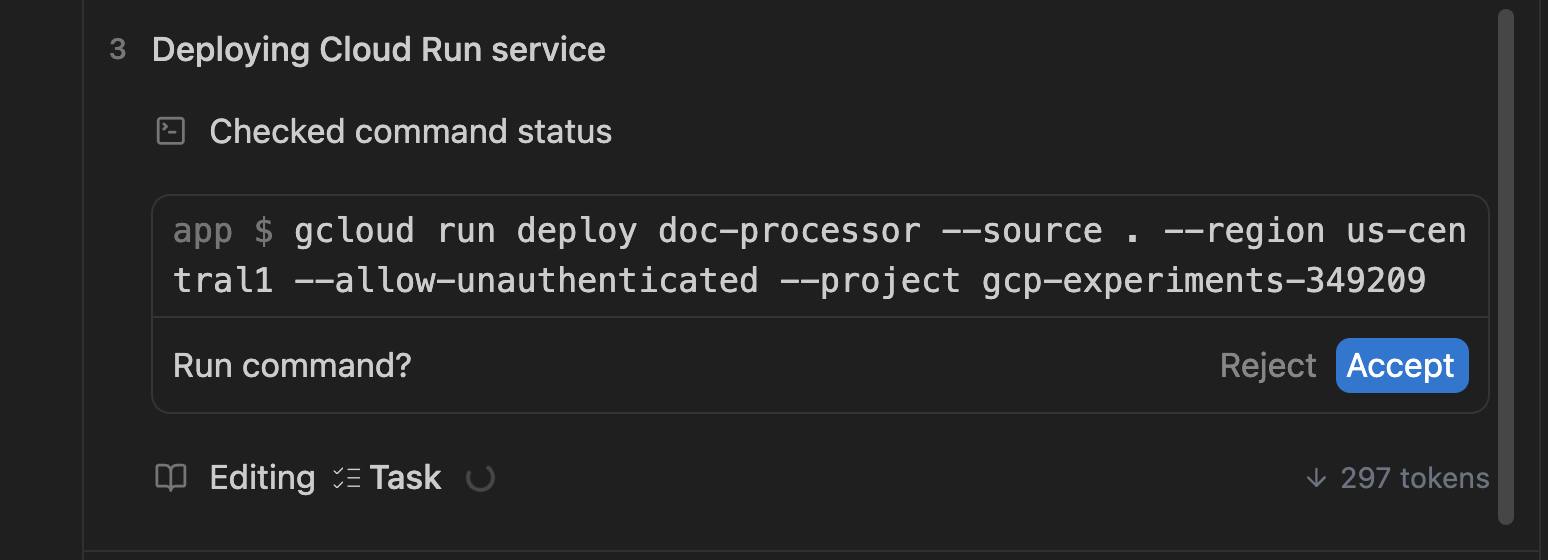

Once this setup script is generated, Antigravity should ask for your permission to execute it on your behalf. A sample screen is shown below:

Go ahead and click on Accept as needed.

The provisioning script creates the resources and validates that they exist. Once the check succeeds, the agent builds the container and deploys the application (main.py) as a Cloud Run service. A sample output is shown below:

As part of deploying the service, it will also set up Pub/Sub subscriptions and other glue that is required to make this pipeline work. All of this should take a few minutes.

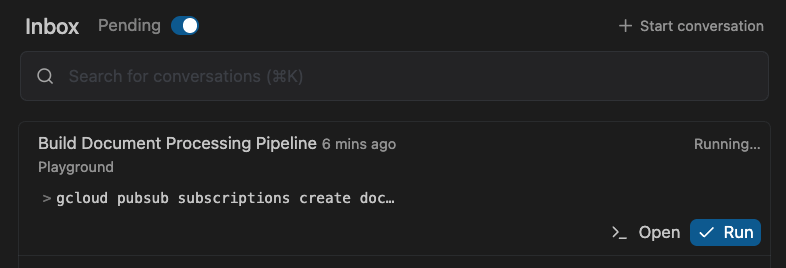

In the meantime, you can switch to the Inbox (from the top left corner) and check for Pending tasks waiting for your input:

This is a good way to make sure that you approve tasks whenever the agent asks for your feedback.

5. Verify the application

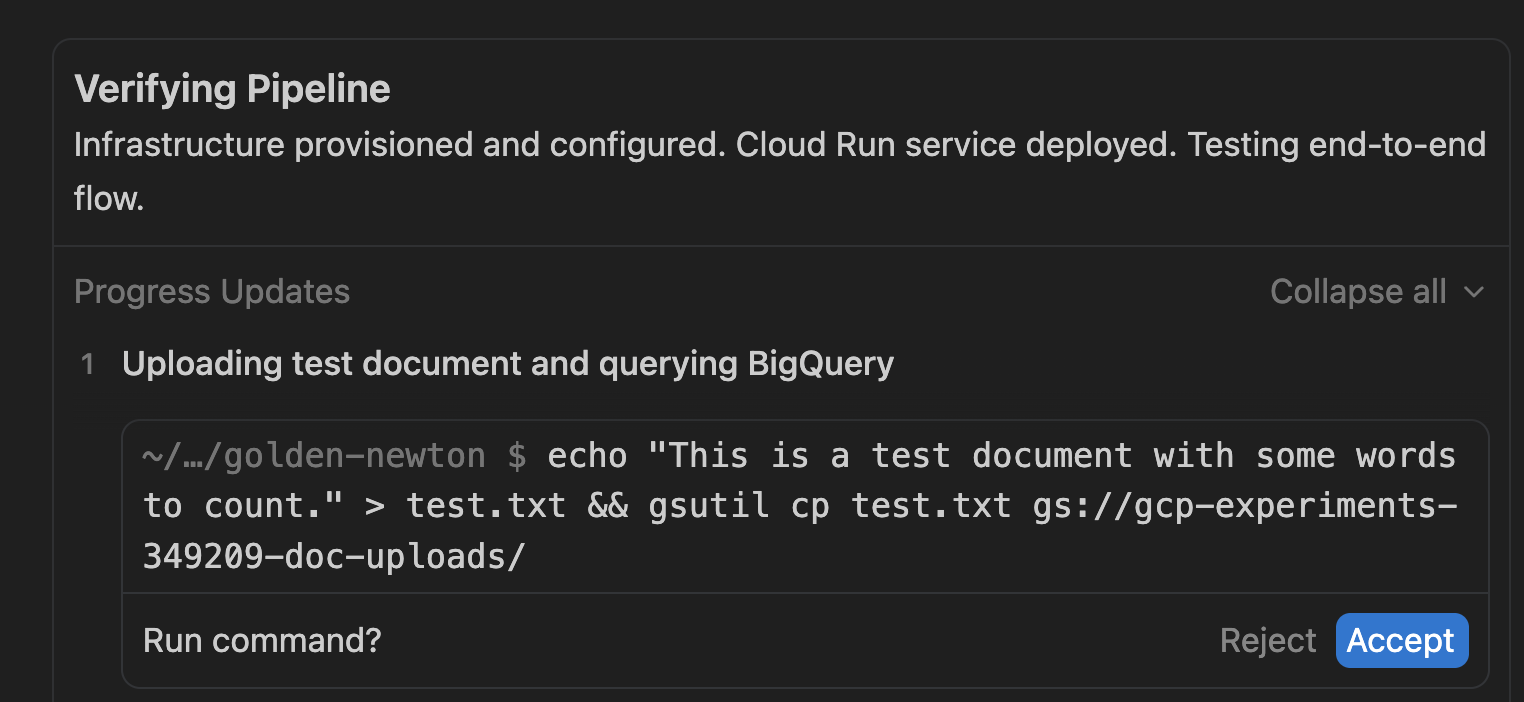

Once the pipeline is deployed, Antigravity verifies that the application actually works by running the verification steps in the task list. A sample screen is shown below:

It creates a test artifact (test.txt) and asks for permission to upload it to the Google Cloud Storage bucket. Click on Accept to go ahead.

If you want to run further tests on your own, you can take a hint from Antigravity's validation step, where it uses the gsutil utility to upload a sample file to the Cloud Storage bucket. The sample command is shown below:

gsutil cp <some-test-doc>.txt gs://<bucket-name>/

Check results in BigQuery

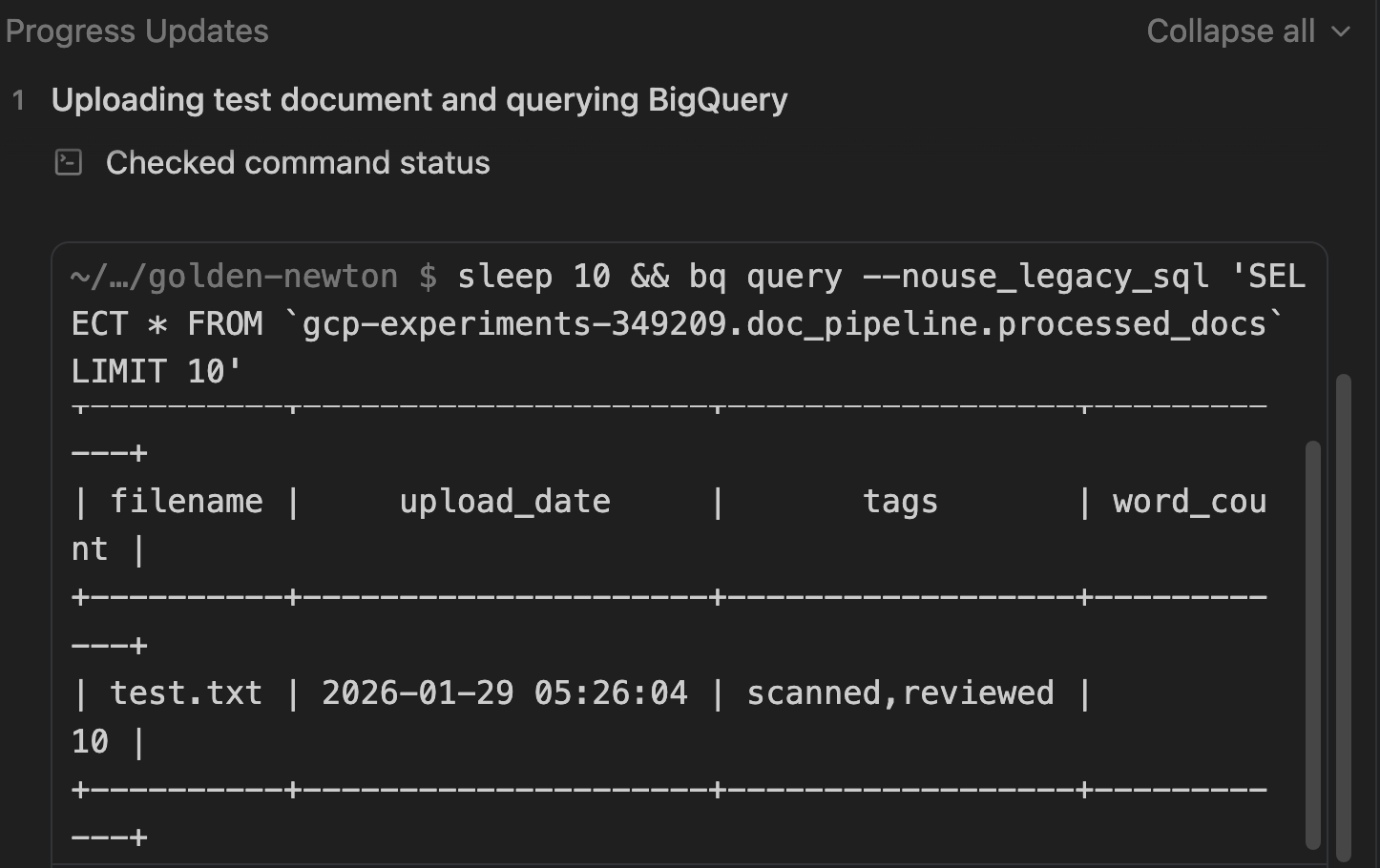

As part of the verification process, Antigravity also checks that the data was persisted in BigQuery.

Note the SQL query that it used to check for the documents.
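The query from the sample run is not reproduced in this text. If you want to run a similar check yourself, a hypothetical equivalent could be built like this, assuming the dataset, table, and column names used elsewhere in this codelab:

```python
def recent_docs_query(project_id: str, limit: int = 10) -> str:
    """Build a query listing the most recently processed documents."""
    # Backticks quote the fully qualified table name in BigQuery standard SQL.
    table = f"`{project_id}.pipeline_data.processed_docs`"
    return (
        "SELECT filename, upload_date, tags, word_count "
        f"FROM {table} "
        "ORDER BY upload_date DESC "
        f"LIMIT {int(limit)}"
    )


if __name__ == "__main__":
    print(recent_docs_query("my-project"))
```

You can paste the resulting string into the BigQuery console or pass it to the bq query command with --use_legacy_sql=false.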

Once the verification is done, you should see that the task list is done:

Optional: Manual verification

Even though Antigravity has already verified the application, you can also check manually in the Google Cloud console that all the resources were created by following these steps.

Cloud Storage

Goal: Verify the bucket exists and check for uploaded files.

- Navigate to Cloud Storage > Buckets.

- Locate the bucket named [PROJECT_ID]-doc-uploads (the exact name depends on what the agent generated in your run).

- Click on the bucket name to browse files.

- Verify: You should see your uploaded files (e.g., test.txt).

Pub/Sub

Goal: Confirm the topic exists and has a push subscription.

- Navigate to Pub/Sub > Topics.

- Find doc-processing-topic.

- Click on the topic ID.

- Scroll down to the Subscriptions tab.

- Verify: Ensure doc-processing-sub is listed with "Push" delivery type.

Cloud Run

Goal: Check the service status and logs.

- Navigate to Cloud Run.

- Click on the service doc-processor.

- Verify:

- Health: Green checkmark indicating the service is active.

- Logs: Click the Logs tab. Look for entries like "Processing file: gs://..." and "Successfully processed...".

BigQuery

Goal: Validate the data is actually stored.

- Navigate to BigQuery > SQL Workspace.

- In the Explorer pane, expand your project > pipeline_data dataset.

- Click on the processed_docs table.

- Click on the Preview tab.

- Verify: You should see rows containing filename, upload_date, tags, and word_count.

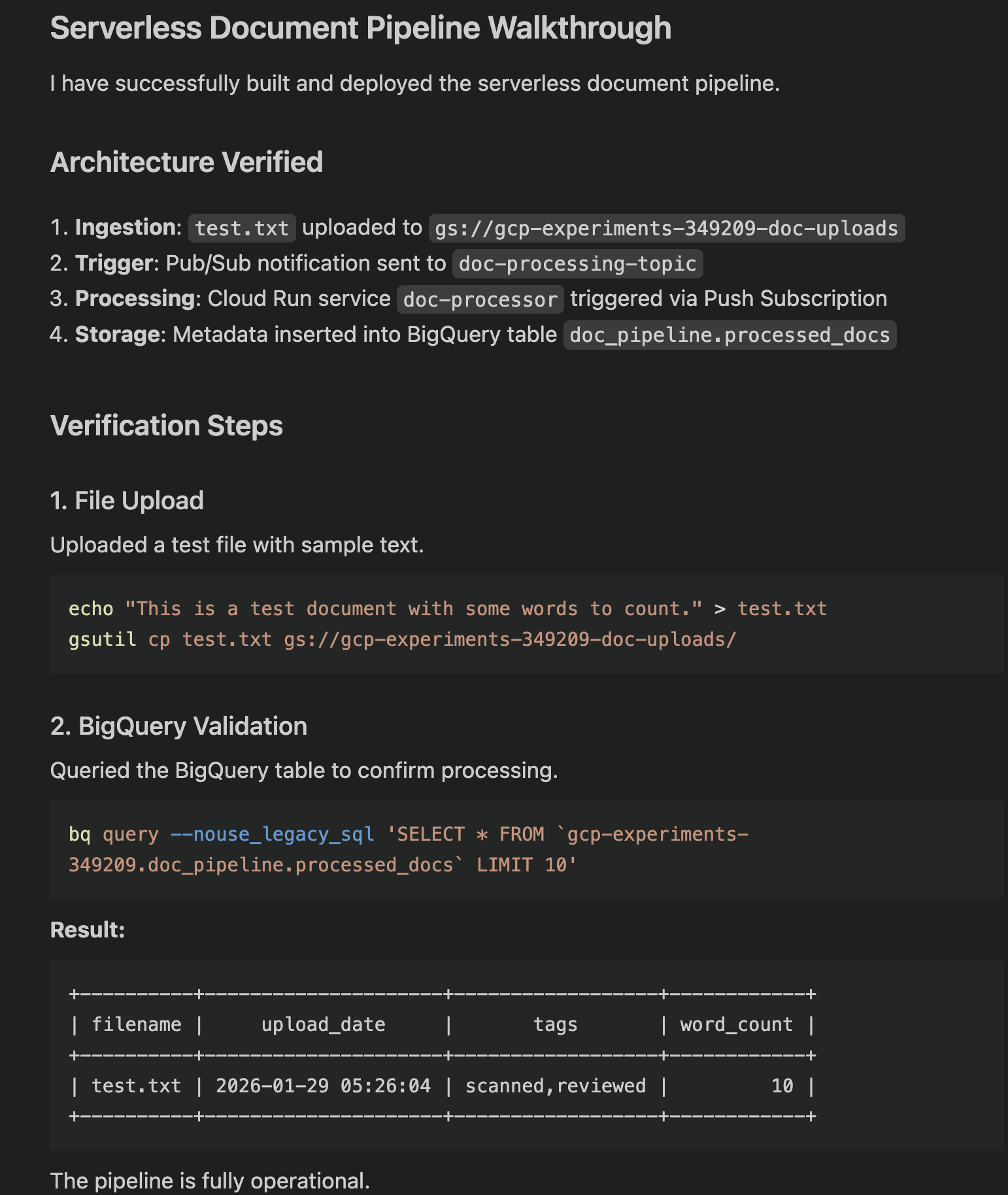

Walkthrough

As a final step, Antigravity generates a walkthrough artifact. This artifact summarizes:

- Changes made.

- Verification commands run.

- Actual results (query output showing the extracted metadata).

You can click Open to see it. A sample output is shown below:

6. Explore the application

At this point, you have the basic app provisioned and running. Before diving into extending this application further, take a moment to explore the code. You can switch to the editor with the Open Editor button on the top right corner.

Here's a quick summary of the files you might see:

- setup.sh: The master script that provisions all Google Cloud resources and enables the required APIs.

- main.py: The main entry point of the pipeline. This Python app creates a web server that receives Pub/Sub push messages, downloads the file from GCS, "processes" it (simulated OCR), and streams the metadata to BigQuery.

- Dockerfile: Defines how to package the app into a container image.

- requirements.txt: Lists the Python dependencies.

You might also see other scripts and text files needed for testing and verification.

At this point, you might also want to move from Playground to a dedicated workspace/folder. You can do that by clicking on the relevant button on the top right corner:

Once you choose a folder, all the code will move to that folder and a new workspace will be created with the folder and the conversation history.

7. Extend the application

Now that you have a working basic application, you can continue iterating and extending the application. Here are some ideas.

Add a frontend

Build a simple web interface to view the processed documents.

Try the following prompt: Create a simple Streamlit or Flask web application that connects to BigQuery. It should display a table of the processed documents (filename, upload_date, tags, word_count) and allow me to filter the results by tag.
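Whatever framework the agent picks, the filtering step itself is small. The sketch below shows one way it might work, assuming each row is a dictionary whose tags field is a list of strings. Both the function name and that row shape are assumptions, and the rows in the usage example are made up for illustration.

```python
def filter_by_tag(rows: list[dict], tag: str) -> list[dict]:
    """Keep only the rows whose tags contain the given tag (case-insensitive)."""
    wanted = tag.strip().lower()
    return [
        row for row in rows
        if wanted in (t.lower() for t in row.get("tags", []))
    ]


if __name__ == "__main__":
    sample_rows = [  # made-up rows for illustration
        {"filename": "a.txt", "tags": ["invoice", "memo"], "word_count": 120},
        {"filename": "b.txt", "tags": ["contract"], "word_count": 940},
    ]
    for row in filter_by_tag(sample_rows, "Invoice"):
        print(row["filename"])
```

For larger tables, pushing the filter into the SQL query would avoid loading every row into the web app.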

Integrate with real AI/ML

Instead of simulated OCR processing, use Gemini models to extract, classify and translate.

- Replace the dummy OCR logic. Send the image/PDF to Gemini to extract actual text and data. Analyze the extracted text to classify the document type (invoice, contract, resume) or extract entities (dates, names, locations).

- Automatically detect the language of the document and translate it to English before storing it. You can choose a different target language too.
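One way to structure this change is to keep the model call behind a small function boundary so the rest of the pipeline stays the same. In the sketch below, call_model is a hypothetical callable that you would implement with whichever Gemini client you choose. The prompt wording and the JSON keys are also assumptions, not a fixed API.

```python
import json
from typing import Callable

PROMPT = (
    "Extract the text of the attached document, then reply with JSON only, "
    'using the keys "doc_type" (invoice, contract, resume, or other), '
    '"language", and "entities" (a list of dates, names, and locations).'
)


def analyze_document(
    file_bytes: bytes,
    mime_type: str,
    call_model: Callable[[str, bytes, str], str],
) -> dict:
    """Send a document to a model via call_model and normalize its JSON reply."""
    raw = call_model(PROMPT, file_bytes, mime_type)
    # Models sometimes wrap JSON in extra text, so keep only the outermost braces.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("model reply did not contain a JSON object")
    data = json.loads(raw[start:end + 1])
    return {
        "doc_type": str(data.get("doc_type", "other")),
        "language": str(data.get("language", "unknown")),
        "entities": list(data.get("entities", [])),
    }
```

Because the model call is injected, you can exercise the parsing logic with a fake callable before wiring in a real client.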

Enhance storage & analytics

You can configure lifecycle rules on the bucket to move old files to "Coldline" or "Archive" storage to save costs.
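As an illustration, the following sketch writes a lifecycle configuration in the JSON shape that Cloud Storage lifecycle files use. The 90-day and 365-day thresholds are arbitrary assumptions; pick values that match how often you expect to read old documents.

```python
import json


def lifecycle_config(coldline_after_days: int = 90, archive_after_days: int = 365) -> dict:
    """Build lifecycle rules that move aging objects to colder storage classes."""
    return {
        "rule": [
            {
                "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
                "condition": {"age": coldline_after_days},
            },
            {
                "action": {"type": "SetStorageClass", "storageClass": "ARCHIVE"},
                "condition": {"age": archive_after_days},
            },
        ]
    }


if __name__ == "__main__":
    print(json.dumps(lifecycle_config(), indent=2))
```

Saved to a file, this output could be applied with gcloud storage buckets update gs://<bucket-name> --lifecycle-file=<file>.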

Robustness & Security

You can make the app more robust and secure in several ways, such as:

- Dead Letter Queues (DLQ): Update the Pub/Sub subscription to handle failures. If the Cloud Run service fails to process a file 5 times, send the message to a separate "Dead Letter" topic/bucket for human inspection.

- Secret Manager: If your app needs API keys or sensitive config, store them in Secret Manager and access them securely from Cloud Run instead of hardcoding strings.

- Eventarc: Upgrade from direct Pub/Sub to Eventarc for more flexible event routing, allowing you to trigger based on complex audit logs or other GCP service events.
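For the dead letter queue idea, it helps to remember how push delivery signals failure: Pub/Sub treats a success status from the endpoint as an acknowledgment and anything else as a request to redeliver. Once a dead-letter topic and a maximum number of delivery attempts are set on the subscription, repeatedly failing messages are forwarded there. The sketch below shows that contract in isolation; handle_push and process are hypothetical names, not part of the generated app.

```python
from typing import Callable


def handle_push(envelope: dict, process: Callable[[dict], None]) -> int:
    """Return the HTTP status a push endpoint would send back to Pub/Sub."""
    try:
        process(envelope["message"])
    except Exception:
        # A non-success status leaves the message unacknowledged, so Pub/Sub
        # redelivers it until the subscription's retry limit is reached.
        return 500
    # A success status such as 204 acknowledges the message.
    return 204
```

On the subscription side, the dead-letter settings can be applied with the --dead-letter-topic and --max-delivery-attempts flags of gcloud pubsub subscriptions update.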

Of course, you can come up with your own ideas and use Antigravity to help you to implement them!

8. Conclusion

You have successfully built a scalable, serverless, AI-powered document pipeline in minutes using Google Antigravity. You learned how to:

- Plan architectures with AI.

- Instruct and manage Antigravity as it takes the application from code generation through deployment and validation.

- Verify the deployment and review the results with the Walkthrough artifact.

Reference docs

- Official site: https://antigravity.google/

- Documentation: https://antigravity.google/docs

- Use cases: https://antigravity.google/use-cases

- Download: https://antigravity.google/download

- Codelab: Getting Started with Google Antigravity