1. Before you begin

ARCore is a platform for building Augmented Reality (AR) apps on mobile devices. Using different APIs, ARCore makes it possible for a user's device to observe and receive information about its environment, and interact with that information.

In this codelab, you'll go through the process of building a simple AR-enabled app that uses the ARCore Depth API.

Prerequisites

This codelab has been written for developers with knowledge of fundamental AR concepts.

What you'll build

You'll build an app that uses the depth image for each frame to visualize the geometry of the scene and to perform occlusion on placed virtual assets. You will go through the specific steps of:

- Checking for Depth API support on the phone

- Retrieving the depth image for each frame

- Visualizing depth information in multiple ways (see above animation)

- Using depth to increase the realism of apps with occlusion

- Learning how to gracefully handle phones that do not support the Depth API

What you'll need

Hardware Requirements

- A supported ARCore device, connected via a USB cable to your development machine. This device also must support the Depth API. Please see this list of supported devices. The Depth API is only available on Android.

- Enable USB debugging for this device.

Software Requirements

- ARCore SDK 1.31.0 or later.

- A development machine with Android Studio (v3.0 or later).

2. ARCore and the Depth API

The Depth API uses a supported device's RGB camera to create depth maps (also called depth images). You can use the information in a depth map to make virtual objects appear accurately in front of or behind real-world objects, enabling immersive and realistic user experiences.

The ARCore Depth API provides access to a depth image matching each frame provided by ARCore's Session. Each pixel holds a distance measurement from the camera to the environment, and you can use these measurements to make your AR app more realistic.

A key capability behind the Depth API is occlusion: the ability of digital objects to appear accurately in front of or behind real-world objects. This makes objects feel as if they're actually in the environment with the user.

This codelab will guide you through the process of building a simple AR-enabled app that uses depth images to perform occlusion of virtual objects behind real-world surfaces and visualize the detected geometry of the space.

3. Get set up

Set up the development machine

- Connect your ARCore device to your computer via the USB cable. Make sure that your device allows USB debugging.

- Open a terminal and run adb devices, as shown below:

adb devices

List of devices attached

<DEVICE_SERIAL_NUMBER>    device

The <DEVICE_SERIAL_NUMBER> will be a string unique to your device. Make sure that you see exactly one device before continuing.

Download and install the code

- You can either clone the repository:

git clone https://github.com/googlecodelabs/arcore-depth

Or download a ZIP file and extract it:

- Launch Android Studio, and click Open an existing Android Studio project.

- Find the directory where you cloned the repository or extracted the ZIP file, and open the depth_codelab_io2020 directory.

This is a single Gradle project with multiple modules. If the Project pane at the top left of Android Studio isn't showing the Project view, select Project from the pane's drop-down menu.

The result should look like this:

This project contains the following modules:

You will work in the part0_work module. There are also complete solutions for each part of the codelab. Each module is a buildable app.

4. Run the Starter App

- Click Run > Run... > 'part0_work'. In the Select Deployment Target dialog that appears, your device should be listed under Connected Devices.

- Select your device and click OK. Android Studio will build the initial app and run it on your device.

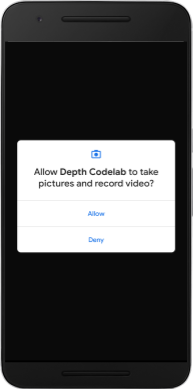

- The app will request camera permissions. Tap Allow to continue.

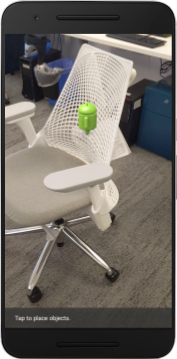

How to use the app

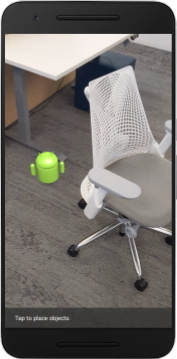

Currently, your app is very simple and doesn't know much about the real-world scene geometry.

If you place an Android figure behind a chair, for example, the rendering will appear to hover in front, since the application doesn't know that the chair is there and should be hiding the Android.

To fix this issue, we will use the Depth API to improve immersiveness and realism in this app.

5. Check if Depth API is supported (Part 1)

The ARCore Depth API runs only on a subset of ARCore-supported devices. Before adding functionality that relies on depth images, you must first check that the app is running on a device that supports depth.

Add a new private member to DepthCodelabActivity that serves as a flag which stores whether the current device supports depth:

private boolean isDepthSupported;

We can populate this flag from inside the onResume() function, where a new Session is created.

Find the existing code:

// Creates the ARCore session.

session = new Session(/* context= */ this);

Update the code to:

// Creates the ARCore session.

session = new Session(/* context= */ this);

Config config = session.getConfig();

isDepthSupported = session.isDepthModeSupported(Config.DepthMode.AUTOMATIC);

if (isDepthSupported) {

config.setDepthMode(Config.DepthMode.AUTOMATIC);

} else {

config.setDepthMode(Config.DepthMode.DISABLED);

}

session.configure(config);

Now the AR Session is configured appropriately, and your app knows whether or not it can use depth-based features.

You should also let the user know whether or not depth is used for this session.

Add another message to the Snackbar. It will appear at the bottom of the screen:

// Add this line at the top of the file, with the other messages.

private static final String DEPTH_NOT_AVAILABLE_MESSAGE = "[Depth not supported on this device]";

Inside onDrawFrame(), you can present this message as needed:

// Add this if-statement above messageSnackbarHelper.showMessage(this, messageToShow).

if (!isDepthSupported) {

messageToShow += "\n" + DEPTH_NOT_AVAILABLE_MESSAGE;

}

If your app runs on a device that doesn't support depth, the message you just added appears at the bottom of the screen.

Next, you'll update the app to call the Depth API and retrieve depth images for each frame.

6. Retrieve the depth images (Part 2)

The Depth API captures 3D observations of the device's environment and returns a depth image with that data to your app. Each pixel in the depth image represents a distance measurement from the device camera to its real-world environment.
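The per-pixel format is easier to see with a CPU example. The following is a minimal plain-Java sketch of reading one sample. It assumes the layout returned by acquireDepthImage16Bits(): a single plane of unsigned 16-bit values, each holding a distance in millimeters. The names DepthSampleReader and millimetersAt are hypothetical. The buffer and strides are passed in directly so the sketch runs without Android.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Sketch: reads one depth sample, in millimeters, from the single plane of a DEPTH16 image. */
final class DepthSampleReader {
  private DepthSampleReader() {}

  /**
   * Hypothetical helper. On Android, planeBuffer, rowStride and pixelStride would come from
   * depthImage.getPlanes()[0] via getBuffer(), getRowStride() and getPixelStride().
   */
  static int millimetersAt(ByteBuffer planeBuffer, int rowStride, int pixelStride, int x, int y) {
    int byteIndex = y * rowStride + x * pixelStride;
    // Assumes little-endian samples, the native byte order on Android devices.
    // duplicate() leaves the caller's buffer position and byte order untouched.
    short raw = planeBuffer.duplicate().order(ByteOrder.LITTLE_ENDIAN).getShort(byteIndex);
    // Samples are unsigned 16-bit values, so widen without sign extension.
    return Short.toUnsignedInt(raw);
  }
}
```

Take a 2x2 image whose rows are 4 bytes apart. In that case, millimetersAt(buffer, 4, 2, 1, 0) reads the two bytes at offset 2. The shaders later in this codelab treat values close to zero as invalid depth.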

Now you'll use these depth images to improve rendering and visualization in the app. The first step is to retrieve the depth image for each frame and bind that texture to be used by the GPU.

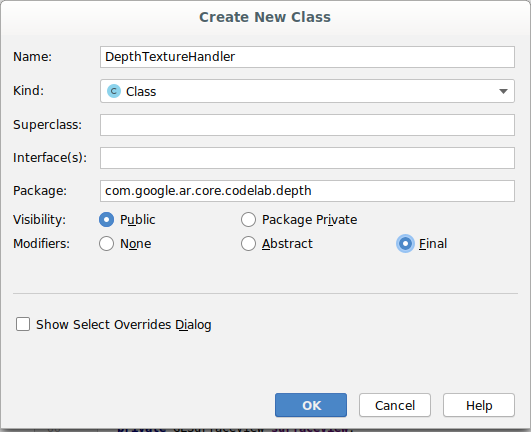

First, add a new class to your project. DepthTextureHandler is responsible for retrieving the depth image for a given ARCore frame.

Add this file:

src/main/java/com/google/ar/core/codelab/depth/DepthTextureHandler.java

package com.google.ar.core.codelab.depth;

import static android.opengl.GLES20.GL_CLAMP_TO_EDGE;

import static android.opengl.GLES20.GL_TEXTURE_2D;

import static android.opengl.GLES20.GL_TEXTURE_MAG_FILTER;

import static android.opengl.GLES20.GL_TEXTURE_MIN_FILTER;

import static android.opengl.GLES20.GL_TEXTURE_WRAP_S;

import static android.opengl.GLES20.GL_TEXTURE_WRAP_T;

import static android.opengl.GLES20.GL_UNSIGNED_BYTE;

import static android.opengl.GLES20.glBindTexture;

import static android.opengl.GLES20.glGenTextures;

import static android.opengl.GLES20.glTexImage2D;

import static android.opengl.GLES20.glTexParameteri;

import static android.opengl.GLES30.GL_LINEAR;

import static android.opengl.GLES30.GL_RG;

import static android.opengl.GLES30.GL_RG8;

import android.media.Image;

import com.google.ar.core.Frame;

import com.google.ar.core.exceptions.NotYetAvailableException;

/** Handle RG8 GPU texture containing a DEPTH16 depth image. */

public final class DepthTextureHandler {

private int depthTextureId = -1;

private int depthTextureWidth = -1;

private int depthTextureHeight = -1;

/**

* Creates and initializes the depth texture. This method needs to be called on a

* thread with an EGL context attached.

*/

public void createOnGlThread() {

int[] textureId = new int[1];

glGenTextures(1, textureId, 0);

depthTextureId = textureId[0];

glBindTexture(GL_TEXTURE_2D, depthTextureId);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

}

/**

* Updates the depth texture with the content from acquireDepthImage16Bits().

* This method needs to be called on a thread with an EGL context attached.

*/

public void update(final Frame frame) {

try {

Image depthImage = frame.acquireDepthImage16Bits();

depthTextureWidth = depthImage.getWidth();

depthTextureHeight = depthImage.getHeight();

glBindTexture(GL_TEXTURE_2D, depthTextureId);

glTexImage2D(

GL_TEXTURE_2D,

0,

GL_RG8,

depthTextureWidth,

depthTextureHeight,

0,

GL_RG,

GL_UNSIGNED_BYTE,

depthImage.getPlanes()[0].getBuffer());

depthImage.close();

} catch (NotYetAvailableException e) {

// This normally means that depth data is not available yet.

}

}

public int getDepthTexture() {

return depthTextureId;

}

public int getDepthWidth() {

return depthTextureWidth;

}

public int getDepthHeight() {

return depthTextureHeight;

}

}
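Why upload 16-bit depth as a two-channel GL_RG8 texture? Each depth sample occupies two bytes. GL_RG with GL_UNSIGNED_BYTE places the first byte of each texel in the red channel and the second byte in the green channel. On a little-endian device (assumed here), the low byte lands in R and the high byte in G. The sampler then returns each channel as a value in [0, 1].

The plain-Java sketch below simulates that round trip. The class name Rg8DepthPacking is hypothetical. It uses the same decoding formula as the shaders later in this codelab.

```java
/** Sketch: simulates how a 16-bit depth value survives upload as a GL_RG8 texel. */
final class Rg8DepthPacking {
  private Rg8DepthPacking() {}

  /** Splits a little-endian 16-bit sample into the normalized R and G values a sampler returns. */
  static float[] toNormalizedRg(int depthMm) {
    int low = depthMm & 0xFF;         // first byte in memory -> red channel
    int high = (depthMm >> 8) & 0xFF; // second byte in memory -> green channel
    return new float[] {low / 255.0f, high / 255.0f};
  }

  /** Same formula as GetDepthMillimeters() in background_show_depth_map.frag. */
  static float depthMillimeters(float r, float g) {
    return 255.0f * (r + g * 256.0f);
  }
}
```

Multiplying by 255 undoes the normalization of each byte. Multiplying the green channel by 256 restores the high byte's place value.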

Now you'll add an instance of this class to DepthCodelabActivity, ensuring you'll have an easy-to-access copy of the depth image for every frame.

In DepthCodelabActivity.java, add an instance of our new class as a private member variable:

private final DepthTextureHandler depthTexture = new DepthTextureHandler();

Next, update the onSurfaceCreated() method to initialize this texture, so it is usable by our GPU shaders:

// Put this at the top of the "try" block in onSurfaceCreated().

depthTexture.createOnGlThread();

Finally, you want to populate this texture in every frame with the latest depth image, which can be done by calling the update() method you created above on the latest frame retrieved from session.

Since depth support is optional for this app, only make this call when depth is supported.

// Add this just after "frame" is created inside onDrawFrame().

if (isDepthSupported) {

depthTexture.update(frame);

}

Now you have a depth image that is updated with every frame. It's ready to be used by your shaders.

However, nothing about the app's behavior has changed yet. Now you'll use the depth image to improve your app.

7. Render the depth image (Part 3)

Now that you have a depth image to play with, you'll want to see what it looks like. In this section, you'll add a button to the app that renders the depth image for each frame.

Add new shaders

There are many ways to view a depth image. The following shaders provide a simple color mapping visualization.

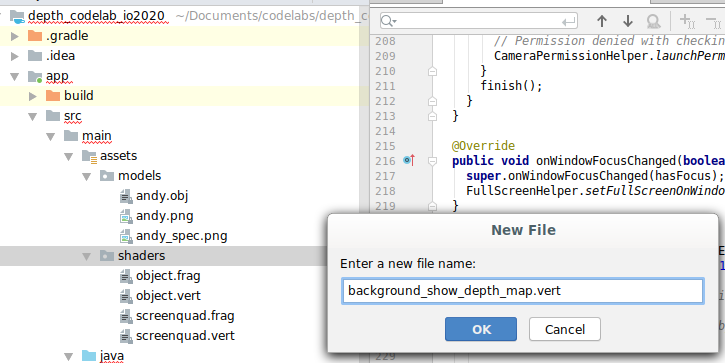

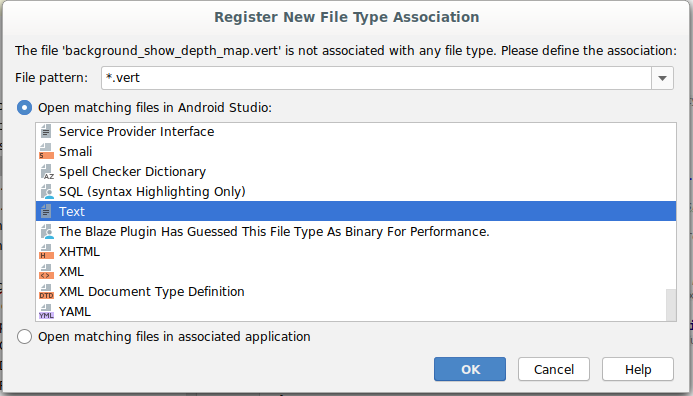

Add a new vertex shader file in Android Studio, in the src/main/assets/shaders directory.

In the new file, add the following code:

src/main/assets/shaders/background_show_depth_map.vert

attribute vec4 a_Position;

attribute vec2 a_TexCoord;

varying vec2 v_TexCoord;

void main() {

v_TexCoord = a_TexCoord;

gl_Position = a_Position;

}

Repeat the steps above to make the fragment shader in the same directory, and name it background_show_depth_map.frag.

Add the following code to this new file:

src/main/assets/shaders/background_show_depth_map.frag

precision mediump float;

uniform sampler2D u_Depth;

varying vec2 v_TexCoord;

const highp float kMaxDepth = 20000.0; // In millimeters.

float GetDepthMillimeters(vec4 depth_pixel_value) {

return 255.0 * (depth_pixel_value.r + depth_pixel_value.g * 256.0);

}

// Returns an interpolated color from a degree-5 polynomial (six coefficients per channel).

vec3 GetPolynomialColor(in float x,

in vec4 kRedVec4, in vec4 kGreenVec4, in vec4 kBlueVec4,

in vec2 kRedVec2, in vec2 kGreenVec2, in vec2 kBlueVec2) {

// Moves the color space a little bit to avoid pure red.

// Remove this line for more contrast.

x = clamp(x * 0.9 + 0.03, 0.0, 1.0);

vec4 v4 = vec4(1.0, x, x * x, x * x * x);

vec2 v2 = v4.zw * v4.z;

return vec3(

dot(v4, kRedVec4) + dot(v2, kRedVec2),

dot(v4, kGreenVec4) + dot(v2, kGreenVec2),

dot(v4, kBlueVec4) + dot(v2, kBlueVec2)

);

}

// Returns a smooth Percept colormap based upon the Turbo colormap.

vec3 PerceptColormap(in float x) {

const vec4 kRedVec4 = vec4(0.55305649, 3.00913185, -5.46192616, -11.11819092);

const vec4 kGreenVec4 = vec4(0.16207513, 0.17712472, 15.24091500, -36.50657960);

const vec4 kBlueVec4 = vec4(-0.05195877, 5.18000081, -30.94853351, 81.96403246);

const vec2 kRedVec2 = vec2(27.81927491, -14.87899417);

const vec2 kGreenVec2 = vec2(25.95549545, -5.02738237);

const vec2 kBlueVec2 = vec2(-86.53476570, 30.23299484);

const float kInvalidDepthThreshold = 0.01;

return step(kInvalidDepthThreshold, x) *

GetPolynomialColor(x, kRedVec4, kGreenVec4, kBlueVec4,

kRedVec2, kGreenVec2, kBlueVec2);

}

void main() {

vec4 packed_depth = texture2D(u_Depth, v_TexCoord.xy);

highp float depth_mm = GetDepthMillimeters(packed_depth);

highp float normalized_depth = depth_mm / kMaxDepth;

vec4 depth_color = vec4(PerceptColormap(normalized_depth), 1.0);

gl_FragColor = depth_color;

}
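You can check which color a given distance produces without running the app by porting the shader's colormap to plain Java. The sketch below uses a hypothetical class named PerceptColormapCpu. It copies three things from background_show_depth_map.frag: the coefficients, the 0.9 * x + 0.03 remapping, and the invalid-depth cutoff. It evaluates in double precision, so its values can differ slightly from the GPU's.

```java
/** Sketch: CPU port of PerceptColormap() from background_show_depth_map.frag. */
final class PerceptColormapCpu {
  private static final double MAX_DEPTH_MM = 20000.0; // Matches kMaxDepth in the shader.
  private static final double INVALID_DEPTH_THRESHOLD = 0.01;

  private static final double[] RED4 = {0.55305649, 3.00913185, -5.46192616, -11.11819092};
  private static final double[] GREEN4 = {0.16207513, 0.17712472, 15.24091500, -36.50657960};
  private static final double[] BLUE4 = {-0.05195877, 5.18000081, -30.94853351, 81.96403246};
  private static final double[] RED2 = {27.81927491, -14.87899417};
  private static final double[] GREEN2 = {25.95549545, -5.02738237};
  private static final double[] BLUE2 = {-86.53476570, 30.23299484};

  private PerceptColormapCpu() {}

  /** c0 + c1*x + c2*x^2 + c3*x^3 + d0*x^4 + d1*x^5, like the two dot() calls in the shader. */
  private static double poly(double x, double[] c, double[] d) {
    double x2 = x * x;
    double x3 = x2 * x;
    return c[0] + c[1] * x + c[2] * x2 + c[3] * x3 + d[0] * x2 * x2 + d[1] * x3 * x2;
  }

  /** Returns {r, g, b} for a depth in millimeters; invalid (near-zero) depth maps to black. */
  static double[] colorForDepthMm(double depthMm) {
    double x = depthMm / MAX_DEPTH_MM;
    if (x < INVALID_DEPTH_THRESHOLD) { // step(kInvalidDepthThreshold, x) == 0.0
      return new double[] {0.0, 0.0, 0.0};
    }
    double t = Math.min(Math.max(x * 0.9 + 0.03, 0.0), 1.0); // Same remapping as the shader.
    return new double[] {poly(t, RED4, RED2), poly(t, GREEN4, GREEN2), poly(t, BLUE4, BLUE2)};
  }
}
```

Two examples of the output:
- A depth of 400 mm (normalized 0.02) has a much stronger red channel than blue.
- Any depth below 200 mm (normalized 0.01) is drawn black.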

Next, update the BackgroundRenderer class, located in src/main/java/com/google/ar/core/codelab/common/rendering/BackgroundRenderer.java, to use these new shaders.

Add the file paths to the shaders at the top of the class:

// Add these under the other shader names at the top of the class.

private static final String DEPTH_VERTEX_SHADER_NAME = "shaders/background_show_depth_map.vert";

private static final String DEPTH_FRAGMENT_SHADER_NAME = "shaders/background_show_depth_map.frag";

Add more member variables to the BackgroundRenderer class, since it will be running two shaders:

// Add to the top of file with the rest of the member variables.

private int depthProgram;

private int depthTextureParam;

private int depthTextureId = -1;

private int depthQuadPositionParam;

private int depthQuadTexCoordParam;

Add a new method to populate these fields:

// Add this method below createOnGlThread().

public void createDepthShaders(Context context, int depthTextureId) throws IOException {

int vertexShader =

ShaderUtil.loadGLShader(

TAG, context, GLES20.GL_VERTEX_SHADER, DEPTH_VERTEX_SHADER_NAME);

int fragmentShader =

ShaderUtil.loadGLShader(

TAG, context, GLES20.GL_FRAGMENT_SHADER, DEPTH_FRAGMENT_SHADER_NAME);

depthProgram = GLES20.glCreateProgram();

GLES20.glAttachShader(depthProgram, vertexShader);

GLES20.glAttachShader(depthProgram, fragmentShader);

GLES20.glLinkProgram(depthProgram);

GLES20.glUseProgram(depthProgram);

ShaderUtil.checkGLError(TAG, "Program creation");

depthTextureParam = GLES20.glGetUniformLocation(depthProgram, "u_Depth");

ShaderUtil.checkGLError(TAG, "Program parameters");

depthQuadPositionParam = GLES20.glGetAttribLocation(depthProgram, "a_Position");

depthQuadTexCoordParam = GLES20.glGetAttribLocation(depthProgram, "a_TexCoord");

this.depthTextureId = depthTextureId;

}

Add this method, which is used to draw with these shaders on each frame:

// Put this at the bottom of the file.

public void drawDepth(@NonNull Frame frame) {

if (frame.hasDisplayGeometryChanged()) {

frame.transformCoordinates2d(

Coordinates2d.OPENGL_NORMALIZED_DEVICE_COORDINATES,

quadCoords,

Coordinates2d.TEXTURE_NORMALIZED,

quadTexCoords);

}

if (frame.getTimestamp() == 0 || depthTextureId == -1) {

return;

}

// Ensure position is rewound before use.

quadTexCoords.position(0);

// No need to test or write depth, the screen quad has arbitrary depth, and is expected

// to be drawn first.

GLES20.glDisable(GLES20.GL_DEPTH_TEST);

GLES20.glDepthMask(false);

GLES20.glActiveTexture(GLES20.GL_TEXTURE0);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, depthTextureId);

GLES20.glUseProgram(depthProgram);

GLES20.glUniform1i(depthTextureParam, 0);

// Set the vertex positions and texture coordinates.

GLES20.glVertexAttribPointer(

depthQuadPositionParam, COORDS_PER_VERTEX, GLES20.GL_FLOAT, false, 0, quadCoords);

GLES20.glVertexAttribPointer(

depthQuadTexCoordParam, TEXCOORDS_PER_VERTEX, GLES20.GL_FLOAT, false, 0, quadTexCoords);

// Draws the quad.

GLES20.glEnableVertexAttribArray(depthQuadPositionParam);

GLES20.glEnableVertexAttribArray(depthQuadTexCoordParam);

GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

GLES20.glDisableVertexAttribArray(depthQuadPositionParam);

GLES20.glDisableVertexAttribArray(depthQuadTexCoordParam);

// Restore the depth state for further drawing.

GLES20.glDepthMask(true);

GLES20.glEnable(GLES20.GL_DEPTH_TEST);

ShaderUtil.checkGLError(TAG, "BackgroundRendererDraw");

}

Add a toggle button

Now that you have the capability to render the depth map, use it! Add a button that toggles this rendering on and off.

At the top of the DepthCodelabActivity file, add an import for the button to use:

import android.widget.Button;

Update the class to add a boolean member indicating whether depth rendering is toggled on (it's off by default):

private boolean showDepthMap = false;

Next, add the button that controls the showDepthMap boolean to the end of the onCreate() method:

final Button toggleDepthButton = (Button) findViewById(R.id.toggle_depth_button);

toggleDepthButton.setOnClickListener(

view -> {

if (isDepthSupported) {

showDepthMap = !showDepthMap;

toggleDepthButton.setText(showDepthMap ? R.string.hide_depth : R.string.show_depth);

} else {

showDepthMap = false;

toggleDepthButton.setText(R.string.depth_not_available);

}

});

Add these strings to res/values/strings.xml:

<string translatable="false" name="show_depth">Show Depth</string>

<string translatable="false" name="hide_depth">Hide Depth</string>

<string translatable="false" name="depth_not_available">Depth Not Available</string>

Add this button at the end of res/layout/activity_main.xml. The layout_alignParentRight and layout_alignParentTop attributes place it in the upper-right corner of the screen:

<Button

android:id="@+id/toggle_depth_button"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_margin="20dp"

android:gravity="center"

android:text="@string/show_depth"

android:layout_alignParentRight="true"

android:layout_alignParentTop="true"/>

The button now controls the value of the boolean showDepthMap. Use this flag to control whether the depth map gets rendered.

Back in the method onDrawFrame() in DepthCodelabActivity, add:

// Add this snippet just under backgroundRenderer.draw(frame);

if (showDepthMap) {

backgroundRenderer.drawDepth(frame);

}

Pass the depth texture to the backgroundRenderer by adding the following line in onSurfaceCreated():

// Add to onSurfaceCreated() after backgroundRenderer.createOnGlThread(/*context=*/ this);

backgroundRenderer.createDepthShaders(/*context=*/ this, depthTexture.getDepthTexture());

Now you can see the depth image of each frame by pressing the button in the upper-right of the screen.

Figure: Running without Depth API support (left) and with Depth API support (right).

[Optional] Fancy depth animation

The app currently shows the depth map directly. Red pixels represent areas that are close. Blue pixels represent areas that are far away.


There are many ways to convey depth information. In this section, you'll modify the shader to pulse depth periodically. It will show depth only within bands that repeatedly move away from the camera.

Start by adding these variables to the top of background_show_depth_map.frag:

uniform float u_DepthRangeToRenderMm;

const float kDepthWidthToRenderMm = 350.0;

Then, use these values in the shader's main() function to filter which pixels get covered with depth colors:

// Add this line at the end of main().

gl_FragColor.a = clamp(1.0 - abs((depth_mm - u_DepthRangeToRenderMm) / kDepthWidthToRenderMm), 0.0, 1.0);
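The new line sets each pixel's alpha with a triangular falloff:
- Alpha is 1.0 when the pixel's depth equals u_DepthRangeToRenderMm.
- It drops linearly to 0.0 at 350 mm on either side, so each band is about 700 mm wide.

The plain-Java sketch below evaluates the same expression on the CPU. The class name DepthBand is hypothetical.

```java
/** Sketch: the band alpha from background_show_depth_map.frag, evaluated on the CPU. */
final class DepthBand {
  static final float DEPTH_WIDTH_TO_RENDER_MM = 350.0f; // Same as kDepthWidthToRenderMm.

  private DepthBand() {}

  /** 1.0 at the band center, falling linearly to 0.0 at +/- DEPTH_WIDTH_TO_RENDER_MM. */
  static float alpha(float depthMm, float depthRangeToRenderMm) {
    float a = 1.0f - Math.abs((depthMm - depthRangeToRenderMm) / DEPTH_WIDTH_TO_RENDER_MM);
    return Math.min(Math.max(a, 0.0f), 1.0f);
  }
}
```

With the band centered at 5000 mm:
- A pixel at 5175 mm gets alpha 0.5.
- A pixel at 5400 mm is fully transparent.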

Next, update BackgroundRenderer.java to maintain these shader params. Add the following fields to the top of the class:

private static final float MAX_DEPTH_RANGE_TO_RENDER_MM = 20000.0f;

private float depthRangeToRenderMm = 0.0f;

private int depthRangeToRenderMmParam;

Inside the createDepthShaders() method, add the following to match these params with the shader program:

depthRangeToRenderMmParam = GLES20.glGetUniformLocation(depthProgram, "u_DepthRangeToRenderMm");

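Finally, you can control this range over time within the drawDepth() method. Add the following code after the call to GLES20.glUseProgram(depthProgram) and before the quad is drawn. The placement matters because glUniform1f() sets a uniform on the program that is currently in use. The code increments the range every time a frame is drawn: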

// Enables alpha blending.

GLES20.glEnable(GLES20.GL_BLEND);

GLES20.glBlendFunc(GLES20.GL_SRC_ALPHA, GLES20.GL_ONE_MINUS_SRC_ALPHA);

// Updates range each time draw() is called.

depthRangeToRenderMm += 50.0f;

if (depthRangeToRenderMm > MAX_DEPTH_RANGE_TO_RENDER_MM) {

depthRangeToRenderMm = 0.0f;

}

// Passes latest value to the shader.

GLES20.glUniform1f(depthRangeToRenderMmParam, depthRangeToRenderMm);

Now the depth is visualized as an animated pulse flowing through your scene.
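How long does one pulse take? The update code adds 50 mm per drawn frame and wraps to 0 only once the range exceeds 20000 mm. The sketch below replays that logic to count the frames in one cycle. The class name PulseTiming is hypothetical.

With the default values it returns 401 frames. At an assumed 30 frames per second, that is roughly 13 seconds, though the real rate depends on the device.

```java
/** Sketch: replays the range update from drawDepth() to count frames per pulse. */
final class PulseTiming {
  private PulseTiming() {}

  /** Number of drawDepth() calls before depthRangeToRenderMm wraps back to 0. */
  static int framesPerCycle(float stepMm, float maxRangeMm) {
    if (stepMm <= 0.0f) {
      throw new IllegalArgumentException("stepMm must be positive, or the range never wraps");
    }
    float range = 0.0f;
    int frames = 0;
    do {
      // Same logic as drawDepth(): advance, then wrap once past the maximum.
      range += stepMm;
      if (range > maxRangeMm) {
        range = 0.0f;
      }
      frames++;
    } while (range != 0.0f);
    return frames;
  }
}
```

Doubling the step to 100 mm roughly halves the cycle, to 201 frames.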

Feel free to change the values provided here to make the pulse slower, faster, wider, narrower, etc. You can also try exploring brand new ways to change the shader to show the depth information!

8. Use the Depth API for occlusion (Part 4)

Now you'll handle object occlusion in your app.

Occlusion happens when a virtual object can't be fully seen because real objects are between it and the camera. Handling occlusion correctly is essential for immersive AR experiences.

Properly rendering virtual objects in real time enhances the realism and believability of the augmented scene. For more examples, please see our video on blending realities with the Depth API.

In this section, you'll update your app to render virtual objects with occlusion when depth is available, and without it otherwise.

Adding new object shaders

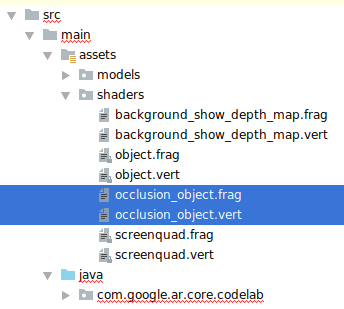

Like in the previous sections, you will add new shaders to support depth information. This time you can copy the existing object shaders and add occlusion functionality.

It's important to keep both versions of the object shaders, so that your app can make a run-time decision whether to support depth.

Make copies of the object.vert and object.frag shader files in the src/main/assets/shaders directory, and name them occlusion_object.vert and occlusion_object.frag.

Inside occlusion_object.vert, add the following variable above main():

varying vec3 v_ScreenSpacePosition;

Set this variable at the bottom of main():

v_ScreenSpacePosition = gl_Position.xyz / gl_Position.w;

Update occlusion_object.frag by adding these variables above main() at the top of the file:

varying vec3 v_ScreenSpacePosition;

uniform sampler2D u_Depth;

uniform mat3 u_UvTransform;

uniform float u_DepthTolerancePerMm;

uniform float u_OcclusionAlpha;

uniform float u_DepthAspectRatio;

Add these helper functions above main() in the shader to make it easier to work with depth information:

float GetDepthMillimeters(in vec2 depth_uv) {

// Depth is packed into the red and green components of its texture.

// The texture is a normalized format, storing millimeters.

vec3 packedDepthAndVisibility = texture2D(u_Depth, depth_uv).xyz;

return dot(packedDepthAndVisibility.xy, vec2(255.0, 256.0 * 255.0));

}

// Returns linear interpolation position of value between min and max bounds.

// E.g., InverseLerp(1100, 1000, 2000) returns 0.1.

float InverseLerp(in float value, in float min_bound, in float max_bound) {

return clamp((value - min_bound) / (max_bound - min_bound), 0.0, 1.0);

}

// Returns a value between 0.0 (not visible) and 1.0 (completely visible)

// which represents how visible or occluded the pixel is in relation to the

// depth map.

float GetVisibility(in vec2 depth_uv, in float asset_depth_mm) {

float depth_mm = GetDepthMillimeters(depth_uv);

// Instead of a hard z-buffer test, allow the asset to fade into the

// background along a 2 * u_DepthTolerancePerMm * asset_depth_mm

// range centered on the background depth.

float visibility_occlusion = clamp(0.5 * (depth_mm - asset_depth_mm) /

(u_DepthTolerancePerMm * asset_depth_mm) + 0.5, 0.0, 1.0);

// Depth close to zero is most likely invalid, do not use it for occlusions.

float visibility_depth_near = 1.0 - InverseLerp(

depth_mm, /*min_depth_mm=*/150.0, /*max_depth_mm=*/200.0);

// Same for very high depth values.

float visibility_depth_far = InverseLerp(

depth_mm, /*min_depth_mm=*/17500.0, /*max_depth_mm=*/20000.0);

float visibility =

max(max(visibility_occlusion, u_OcclusionAlpha),

max(visibility_depth_near, visibility_depth_far));

return visibility;

}

Now update main() in occlusion_object.frag to be depth-aware and apply occlusion. Add the following lines at the end of main():

const float kMToMm = 1000.0;

float asset_depth_mm = v_ViewPosition.z * kMToMm * -1.;

vec2 depth_uvs = (u_UvTransform * vec3(v_ScreenSpacePosition.xy, 1)).xy;

gl_FragColor.a *= GetVisibility(depth_uvs, asset_depth_mm);
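The asset depth is negated because, in OpenGL view space, the camera looks down the negative z axis. Points in front of the camera therefore have negative z.

To see how u_DepthTolerancePerMm and u_OcclusionAlpha shape the result, you can evaluate a plain-Java port of GetVisibility() with sample numbers. The class name OcclusionVisibility is hypothetical.

```java
/** Sketch: CPU port of GetVisibility() from occlusion_object.frag, for reasoning about tuning. */
final class OcclusionVisibility {
  private OcclusionVisibility() {}

  static double clamp01(double v) {
    return Math.min(Math.max(v, 0.0), 1.0);
  }

  /** Same as the shader's InverseLerp(): position of value in [min, max], clamped to [0, 1]. */
  static double inverseLerp(double value, double min, double max) {
    return clamp01((value - min) / (max - min));
  }

  /**
   * @param depthMm real-world depth sampled from the depth texture
   * @param assetDepthMm distance of the virtual fragment, i.e. -v_ViewPosition.z * 1000
   */
  static double visibility(double depthMm, double assetDepthMm,
                           double depthTolerancePerMm, double occlusionAlpha) {
    // Soft test: fade over +/- (tolerance * assetDepth) around the real surface depth.
    double occlusion = clamp01(
        0.5 * (depthMm - assetDepthMm) / (depthTolerancePerMm * assetDepthMm) + 0.5);
    // Very near or very far readings are treated as unreliable, so the asset stays visible.
    double near = 1.0 - inverseLerp(depthMm, 150.0, 200.0);
    double far = inverseLerp(depthMm, 17500.0, 20000.0);
    return Math.max(Math.max(occlusion, occlusionAlpha), Math.max(near, far));
  }
}
```

With a tolerance of 0.015 and an asset 2000 mm away, the fade band spans 2 * 0.015 * 2000 = 60 mm:
- Visibility is 0.5 when the real surface is exactly at 2000 mm.
- It is 1.0 when the real surface is 30 mm behind the asset.
- It is 0.0 when the real surface is 30 mm in front of the asset.

A depth reading below 150 mm is treated as invalid and leaves the asset fully visible.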

Now that you have a new version of your object shaders, you can modify the renderer code.

Rendering object occlusion

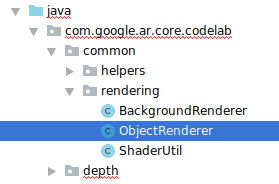

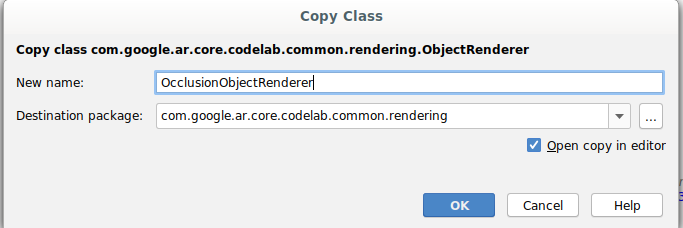

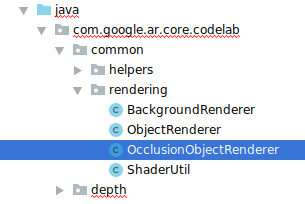

Make a copy of the ObjectRenderer class next, found in src/main/java/com/google/ar/core/codelab/common/rendering/ObjectRenderer.java.

- Select the ObjectRenderer class.

- Right-click > Copy.

- Select the rendering folder.

- Right-click > Paste.

- Rename the class to OcclusionObjectRenderer.

The new, renamed class should now appear in the same folder:

Open the newly created OcclusionObjectRenderer.java, and change the shader paths at the top of the file:

private static final String VERTEX_SHADER_NAME = "shaders/occlusion_object.vert";

private static final String FRAGMENT_SHADER_NAME = "shaders/occlusion_object.frag";

Add these depth-related member variables with the others at the top of the class. They hold the shader locations of the new depth uniforms, plus the depth texture and UV transform to pass to them:

// Shader location: depth texture

private int depthTextureUniform;

// Shader location: transform to depth uvs

private int depthUvTransformUniform;

// Shader location: depth tolerance property

private int depthToleranceUniform;

// Shader location: maximum transparency for the occluded part.

private int occlusionAlphaUniform;

private int depthAspectRatioUniform;

private float[] uvTransform = null;

private int depthTextureId;

Create these member variables with default values at the top of the class:

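// depthAspectRatio is updated by setDepthTexture(). The other two values are constants:

// depthTolerancePerMm sets the softness of the occlusion border, and occlusionsAlpha is the lowest visibility of occluded parts.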

private float depthAspectRatio = 0.0f;

private final float depthTolerancePerMm = 0.015f;

private final float occlusionsAlpha = 0.0f;

Initialize the uniform parameters for the shader in the createOnGlThread() method:

// Occlusions Uniforms. Add these lines before the first call to ShaderUtil.checkGLError

// inside the createOnGlThread() method.

depthTextureUniform = GLES20.glGetUniformLocation(program, "u_Depth");

depthUvTransformUniform = GLES20.glGetUniformLocation(program, "u_UvTransform");

depthToleranceUniform = GLES20.glGetUniformLocation(program, "u_DepthTolerancePerMm");

occlusionAlphaUniform = GLES20.glGetUniformLocation(program, "u_OcclusionAlpha");

depthAspectRatioUniform = GLES20.glGetUniformLocation(program, "u_DepthAspectRatio");

Ensure these values are updated every time the object is drawn by updating the draw() method:

// Add after other GLES20.glUniform calls inside draw().

GLES20.glActiveTexture(GLES20.GL_TEXTURE1);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, depthTextureId);

GLES20.glUniform1i(depthTextureUniform, 1);

GLES20.glUniformMatrix3fv(depthUvTransformUniform, 1, false, uvTransform, 0);

GLES20.glUniform1f(depthToleranceUniform, depthTolerancePerMm);

GLES20.glUniform1f(occlusionAlphaUniform, occlusionsAlpha);

GLES20.glUniform1f(depthAspectRatioUniform, depthAspectRatio);

Add the following lines within draw() to enable blending, so that transparency can be applied to virtual objects when they are occluded:

// Add these lines just below the code-block labeled "Enable vertex arrays"

GLES20.glEnable(GLES20.GL_BLEND);

GLES20.glBlendFunc(GLES20.GL_SRC_ALPHA, GLES20.GL_ONE_MINUS_SRC_ALPHA);

// Add these lines just above the code-block labeled "Disable vertex arrays"

GLES20.glDisable(GLES20.GL_BLEND);

GLES20.glDepthMask(true);

Add the following methods so that callers of OcclusionObjectRenderer can provide the depth information:

// Add these methods at the bottom of the OcclusionObjectRenderer class.

public void setUvTransformMatrix(float[] transform) {

uvTransform = transform;

}

public void setDepthTexture(int textureId, int width, int height) {

depthTextureId = textureId;

depthAspectRatio = (float) width / (float) height;

}

Controlling object occlusion

Now that you have a new OcclusionObjectRenderer, you can add it to your DepthCodelabActivity and choose when and how to employ occlusion rendering.

Enable this logic by adding an instance of OcclusionObjectRenderer to the activity, so that both ObjectRenderer and OcclusionObjectRenderer are members of DepthCodelabActivity:

// Add this import at the top of the file.

import com.google.ar.core.codelab.common.rendering.OcclusionObjectRenderer;

// Add this member just below the existing "virtualObject", so both are present.

private final OcclusionObjectRenderer occludedVirtualObject = new OcclusionObjectRenderer();

You can next control when occludedVirtualObject gets used, based on whether the current device supports the Depth API. Add these lines inside the onSurfaceCreated() method, below where virtualObject is configured:

if (isDepthSupported) {

occludedVirtualObject.createOnGlThread(/*context=*/ this, "models/andy.obj", "models/andy.png");

occludedVirtualObject.setDepthTexture(

depthTexture.getDepthTexture(),

depthTexture.getDepthWidth(),

depthTexture.getDepthHeight());

occludedVirtualObject.setMaterialProperties(0.0f, 2.0f, 0.5f, 6.0f);

}

On devices where depth is not supported, the occludedVirtualObject instance gets created but is unused. On phones with depth, both versions are initialized, and a run-time decision is made which version of the renderer to use when drawing.

Inside the onDrawFrame() method, find the existing code:

virtualObject.updateModelMatrix(anchorMatrix, scaleFactor);

virtualObject.draw(viewmtx, projmtx, colorCorrectionRgba, OBJECT_COLOR);

Replace this code with the following:

if (isDepthSupported) {

occludedVirtualObject.updateModelMatrix(anchorMatrix, scaleFactor);

occludedVirtualObject.draw(viewmtx, projmtx, colorCorrectionRgba, OBJECT_COLOR);

} else {

virtualObject.updateModelMatrix(anchorMatrix, scaleFactor);

virtualObject.draw(viewmtx, projmtx, colorCorrectionRgba, OBJECT_COLOR);

}

Lastly, make sure the depth image is mapped correctly onto the rendered output. The depth image has a different resolution, and potentially a different aspect ratio, than your screen. As a result, its texture coordinates can differ from those of the camera image.

Add the helper method getTextureTransformMatrix() to the bottom of the file. This method returns a transformation matrix that maps screen-space coordinates onto the quad texture coordinates used to render the camera feed. It also takes device orientation into account.

private static float[] getTextureTransformMatrix(Frame frame) {

float[] frameTransform = new float[6];

float[] uvTransform = new float[9];

// XY pairs of coordinates in NDC space that constitute the origin and points along the two

// principal axes.

float[] ndcBasis = {0, 0, 1, 0, 0, 1};

// Temporarily store the transformed points in frameTransform.

frame.transformCoordinates2d(

Coordinates2d.OPENGL_NORMALIZED_DEVICE_COORDINATES,

ndcBasis,

Coordinates2d.TEXTURE_NORMALIZED,

frameTransform);

// Convert the transformed points into an affine transform and transpose it.

float ndcOriginX = frameTransform[0];

float ndcOriginY = frameTransform[1];

uvTransform[0] = frameTransform[2] - ndcOriginX;

uvTransform[1] = frameTransform[3] - ndcOriginY;

uvTransform[2] = 0;

uvTransform[3] = frameTransform[4] - ndcOriginX;

uvTransform[4] = frameTransform[5] - ndcOriginY;

uvTransform[5] = 0;

uvTransform[6] = ndcOriginX;

uvTransform[7] = ndcOriginY;

uvTransform[8] = 1;

return uvTransform;

}

getTextureTransformMatrix() requires the following import at the top of the file:

```java
import com.google.ar.core.Coordinates2d;
```
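To see what the returned matrix does, the following plain-Java sketch (no ARCore dependency) rebuilds the same column-major 3x3 affine matrix from six already-transformed points and applies it to a 2D coordinate, the way a GLSL `mat3` uniform multiplies `vec3(uv, 1.0)`. The class and method names are hypothetical, and the sample `frameTransform` values are made up for illustration; on a device they come from `Frame.transformCoordinates2d()`.

```java
import java.util.Arrays;

/** Hypothetical off-device mirror of the math in getTextureTransformMatrix(). */
final class UvTransformSketch {

  private UvTransformSketch() {}

  /**
   * Builds a column-major 3x3 affine matrix from the images of the NDC points (0,0), (1,0),
   * and (0,1), packed as {x0, y0, x1, y1, x2, y2} like frameTransform.
   */
  static float[] buildUvTransform(float[] frameTransform) {
    float originX = frameTransform[0];
    float originY = frameTransform[1];
    return new float[] {
      // Column 0: where the X axis (1,0) ends up, relative to the transformed origin.
      frameTransform[2] - originX, frameTransform[3] - originY, 0f,
      // Column 1: where the Y axis (0,1) ends up, relative to the transformed origin.
      frameTransform[4] - originX, frameTransform[5] - originY, 0f,
      // Column 2: translation to the transformed origin.
      originX, originY, 1f,
    };
  }

  /** Multiplies the column-major matrix by (x, y, 1), as a GLSL mat3 * vec3 would. */
  static float[] apply(float[] m, float x, float y) {
    return new float[] {
      m[0] * x + m[3] * y + m[6],
      m[1] * x + m[4] * y + m[7],
    };
  }

  public static void main(String[] args) {
    // Made-up transformed points for a mapping that rotates the axes by 90 degrees.
    float[] frameTransform = {1f, 0f, 1f, 1f, 0f, 0f};
    float[] m = buildUvTransform(frameTransform);
    System.out.println(Arrays.toString(apply(m, 0f, 0f))); // [1.0, 0.0]
    System.out.println(Arrays.toString(apply(m, 1f, 0f))); // [1.0, 1.0]
    System.out.println(Arrays.toString(apply(m, 0f, 1f))); // [0.0, 0.0]
  }
}
```

Each basis point maps back onto the transformed point it came from, which is why three points (six floats) are enough to describe the whole affine mapping, including any rotation caused by device orientation.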

You only need to recompute the transform between these texture coordinates when the display geometry changes (for example, when the screen rotates), so gate the computation behind a flag instead of running it every frame.

Add the following flag as a member variable at the top of the class:

```java
// Add this member at the top of the class.
private boolean calculateUVTransform = true;
```

- Inside onDrawFrame(), check whether the stored transformation needs to be recomputed after the frame and camera are created:

```java
// Add these lines inside onDrawFrame() after frame.getCamera().
if (frame.hasDisplayGeometryChanged() || calculateUVTransform) {
  calculateUVTransform = false;
  float[] transform = getTextureTransformMatrix(frame);
  occludedVirtualObject.setUvTransformMatrix(transform);
}
```
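The flag plus `hasDisplayGeometryChanged()` check is a small dirty-flag cache: the transform is computed on the first frame and afterwards only when the display geometry changes. The plain-Java sketch below isolates that pattern so it can be exercised off-device. The class name and the `Supplier`-based API are hypothetical, and the boolean passed to `get()` stands in for `frame.hasDisplayGeometryChanged()`.

```java
import java.util.function.Supplier;

/** Hypothetical dirty-flag cache mirroring the calculateUVTransform gating. */
final class UvTransformCache {
  // Starts dirty so the first frame always computes a transform.
  private boolean needsUpdate = true;
  private float[] cached;
  private int computeCount;

  /**
   * Returns the cached transform, recomputing it only on the first call or when
   * displayGeometryChanged is true (the role of frame.hasDisplayGeometryChanged()).
   */
  float[] get(boolean displayGeometryChanged, Supplier<float[]> compute) {
    if (displayGeometryChanged || needsUpdate) {
      needsUpdate = false;
      cached = compute.get();
      computeCount++;
    }
    return cached;
  }

  int computeCount() {
    return computeCount;
  }

  public static void main(String[] args) {
    UvTransformCache cache = new UvTransformCache();
    Supplier<float[]> identity = () -> new float[] {1, 0, 0, 0, 1, 0, 0, 0, 1};
    // Simulated frames: only the first frame and the "rotation" frame trigger a recompute.
    boolean[] geometryChanged = {false, false, true, false};
    for (boolean changed : geometryChanged) {
      cache.get(changed, identity);
    }
    System.out.println("Recomputed " + cache.computeCount() + " times"); // Recomputed 2 times
  }
}
```

Keeping the cached array between frames avoids calling `transformCoordinates2d()` and re-uploading the uniform on every frame when nothing about the display has changed.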

With these changes in place, you can now run the app with virtual object occlusion!

Your app should now run correctly on all supported phones, and automatically use depth-based occlusion when the Depth API is available.

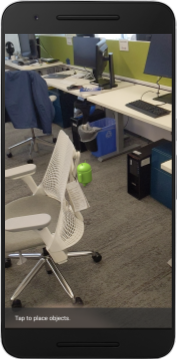

|

| ||

Running app with Depth API support | Running app without Depth API support | ||

9. [Optional] Improve occlusion quality

The method for depth-based occlusion, implemented above, provides occlusion with sharp boundaries. As the camera moves farther away from the object, the depth measurements can become less accurate, which may result in visual artifacts.

You can mitigate this by blurring the occlusion test, which gives partially hidden virtual objects smoother edges.

occlusion_object.frag

Add the following uniform variable at the top of occlusion_object.frag:

```glsl
uniform float u_OcclusionBlurAmount;
```

Add this helper function just above main() in the shader. It applies a weighted 5x5 kernel blur to the occlusion sampling:

```glsl
float GetBlurredVisibilityAroundUV(in vec2 uv, in float asset_depth_mm) {
  // Kernel used:
  // 0  4  7  4  0
  // 4 16 26 16  4
  // 7 26 41 26  7
  // 4 16 26 16  4
  // 0  4  7  4  0
  const float kKernelTotalWeights = 269.0;
  float sum = 0.0;

  vec2 blurriness = vec2(u_OcclusionBlurAmount,
                         u_OcclusionBlurAmount * u_DepthAspectRatio);

  float current = 0.0;

  current += GetVisibility(uv + vec2(-1.0, -2.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+1.0, -2.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(-1.0, +2.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+1.0, +2.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(-2.0, +1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+2.0, +1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(-2.0, -1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+2.0, -1.0) * blurriness, asset_depth_mm);
  sum += current * 4.0;

  current = 0.0;
  current += GetVisibility(uv + vec2(-2.0, -0.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+2.0, +0.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+0.0, +2.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(-0.0, -2.0) * blurriness, asset_depth_mm);
  sum += current * 7.0;

  current = 0.0;
  current += GetVisibility(uv + vec2(-1.0, -1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+1.0, -1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(-1.0, +1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+1.0, +1.0) * blurriness, asset_depth_mm);
  sum += current * 16.0;

  current = 0.0;
  current += GetVisibility(uv + vec2(+0.0, +1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(-0.0, -1.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(-1.0, -0.0) * blurriness, asset_depth_mm);
  current += GetVisibility(uv + vec2(+1.0, +0.0) * blurriness, asset_depth_mm);
  sum += current * 26.0;

  sum += GetVisibility(uv, asset_depth_mm) * 41.0;

  return sum / kKernelTotalWeights;
}
```
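The unrolled shader samples the 21 non-zero entries of a 5x5 kernel whose corners are zero, then divides by the total weight of 269. The following plain-Java sketch expresses the same weighting as a loop over a kernel table, which can be handy for checking the weights or trying other kernels off-device. It is not shader code: the `VisibilityFunction` interface is a hypothetical stand-in for the shader's `GetVisibility()`, and `blurX`/`blurY` correspond to the two components of `blurriness`.

```java
/** Hypothetical CPU reference for GetBlurredVisibilityAroundUV(). */
final class OcclusionBlurSketch {

  /** Stand-in for the shader's GetVisibility(uv, asset_depth_mm); returns 0 (hidden) to 1. */
  interface VisibilityFunction {
    double visibility(double u, double v, double assetDepthMm);
  }

  // Same weights as the shader comment; rows are V offsets -2..+2, columns are U offsets -2..+2.
  static final int[][] KERNEL = {
    {0, 4, 7, 4, 0},
    {4, 16, 26, 16, 4},
    {7, 26, 41, 26, 7},
    {4, 16, 26, 16, 4},
    {0, 4, 7, 4, 0},
  };

  /** Sums all kernel weights; matches kKernelTotalWeights in the shader. */
  static int kernelTotalWeight() {
    int total = 0;
    for (int[] row : KERNEL) {
      for (int weight : row) {
        total += weight;
      }
    }
    return total;
  }

  /** Weighted average of visibility samples around (u, v), spaced by blurX and blurY. */
  static double blurredVisibility(
      VisibilityFunction f, double u, double v, double assetDepthMm, double blurX, double blurY) {
    double sum = 0.0;
    for (int dy = -2; dy <= 2; dy++) {
      for (int dx = -2; dx <= 2; dx++) {
        int weight = KERNEL[dy + 2][dx + 2];
        if (weight == 0) {
          continue; // Zero-weight corners are never sampled, as in the shader.
        }
        sum += weight * f.visibility(u + dx * blurX, v + dy * blurY, assetDepthMm);
      }
    }
    return sum / kernelTotalWeight();
  }

  public static void main(String[] args) {
    System.out.println("Total weight: " + kernelTotalWeight()); // Total weight: 269
    // A hard depth edge at u = 0.5: samples left of it are hidden (visibility 0).
    VisibilityFunction edge = (u, v, depth) -> u < 0.5 ? 0.0 : 1.0;
    // 188/269, roughly 0.70: a soft partial visibility instead of a hard 0/1 step.
    System.out.println(blurredVisibility(edge, 0.5, 0.5, 1000.0, 0.01, 0.01));
  }
}
```

Writing the loop form makes the trade-off visible: the shader unrolls it and groups equal weights to save multiplications, at the cost of 21 texture lookups per fragment either way.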

Replace this existing line in main():

```glsl
gl_FragColor.a *= GetVisibility(depth_uvs, asset_depth_mm);
```

with this line:

```glsl
gl_FragColor.a *= GetBlurredVisibilityAroundUV(depth_uvs, asset_depth_mm);
```

Update the renderer to take advantage of this new shader functionality.

OcclusionObjectRenderer.java

Add the following member variables at the top of the class:

```java
private int occlusionBlurUniform;
private final float occlusionsBlur = 0.01f;
```

Add the following inside the createOnGlThread method:

```java
// Add alongside the other calls to GLES20.glGetUniformLocation.
occlusionBlurUniform = GLES20.glGetUniformLocation(program, "u_OcclusionBlurAmount");
```

Add the following inside the draw method:

```java
// Add alongside the other calls to GLES20.glUniform1f.
GLES20.glUniform1f(occlusionBlurUniform, occlusionsBlur);
```
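`occlusionsBlur` is expressed in normalized texture (UV) units, not pixels, so its visual effect depends on the depth image's resolution. Assuming `u_DepthAspectRatio` holds the depth image's width divided by its height (check how your renderer sets it), scaling the V offset by that ratio makes each kernel step cover the same number of depth texels horizontally and vertically, namely `blur * depthWidth`. The sketch below computes that footprint; the class name is hypothetical, and the 160x120 depth resolution is only an example, since actual depth image sizes vary by device.

```java
/** Hypothetical helper for reasoning about the u_OcclusionBlurAmount tuning value. */
final class BlurFootprintSketch {

  /** Texels covered by one kernel step along U and V, assuming aspect = width / height. */
  static double[] texelsPerStep(double blurAmount, int depthWidth, int depthHeight) {
    double aspectRatio = (double) depthWidth / depthHeight;
    // U offset in texels: blurAmount * width.
    double uStepTexels = blurAmount * depthWidth;
    // V offset is scaled by the aspect ratio, so in texels it is also blurAmount * width.
    double vStepTexels = blurAmount * aspectRatio * depthHeight;
    return new double[] {uStepTexels, vStepTexels};
  }

  public static void main(String[] args) {
    // Example 160x120 depth image with the codelab's blur amount of 0.01.
    double[] step = texelsPerStep(0.01, 160, 120);
    // The 5x5 kernel reaches two steps from the center in each direction.
    System.out.printf(
        "Step: %.2f x %.2f texels, reach: +/-%.2f texels%n", step[0], step[1], 2 * step[0]);
  }
}
```

With these example numbers each step is 1.6 texels, so the kernel reaches about 3.2 texels from the center; doubling `occlusionsBlur` doubles that reach and softens the edge further.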

Visual comparison: the occlusion boundary should now be smoother with these changes.

10. Build-Run-Test

Build and Run your app

- Plug in an Android device via USB.

- In Android Studio, choose Run > Run 'app'.

- Wait for the app to build and deploy to your device.

The first time you attempt to deploy the app to your device:

- Allow USB debugging on the device when prompted. Select OK to continue.

- When the app asks for permission to use the device camera, allow access to continue using AR functionality.

Testing your app

When you run your app, you can test its basic behavior by holding your device, moving around your space, and slowly scanning an area. Try to collect at least 10 seconds of data and scan the area from several directions before moving to the next step.

Troubleshooting

Setting up your Android device for development

- Connect your device to your development machine with a USB cable. If you develop using Windows, you might need to install the appropriate USB driver for your device.

- Perform the following steps to enable USB debugging in the Developer options window:

  - Open the Settings app.
  - If your device uses Android v8.0 or higher, select System. Otherwise, proceed to the next step.
  - Scroll to the bottom and select About phone.
  - Scroll to the bottom and tap Build number 7 times.
  - Return to the previous screen, scroll to the bottom, and tap Developer options.
  - In the Developer options window, scroll down to find and enable USB debugging.

You can find more detailed information about this process on Google's Android Developers website.

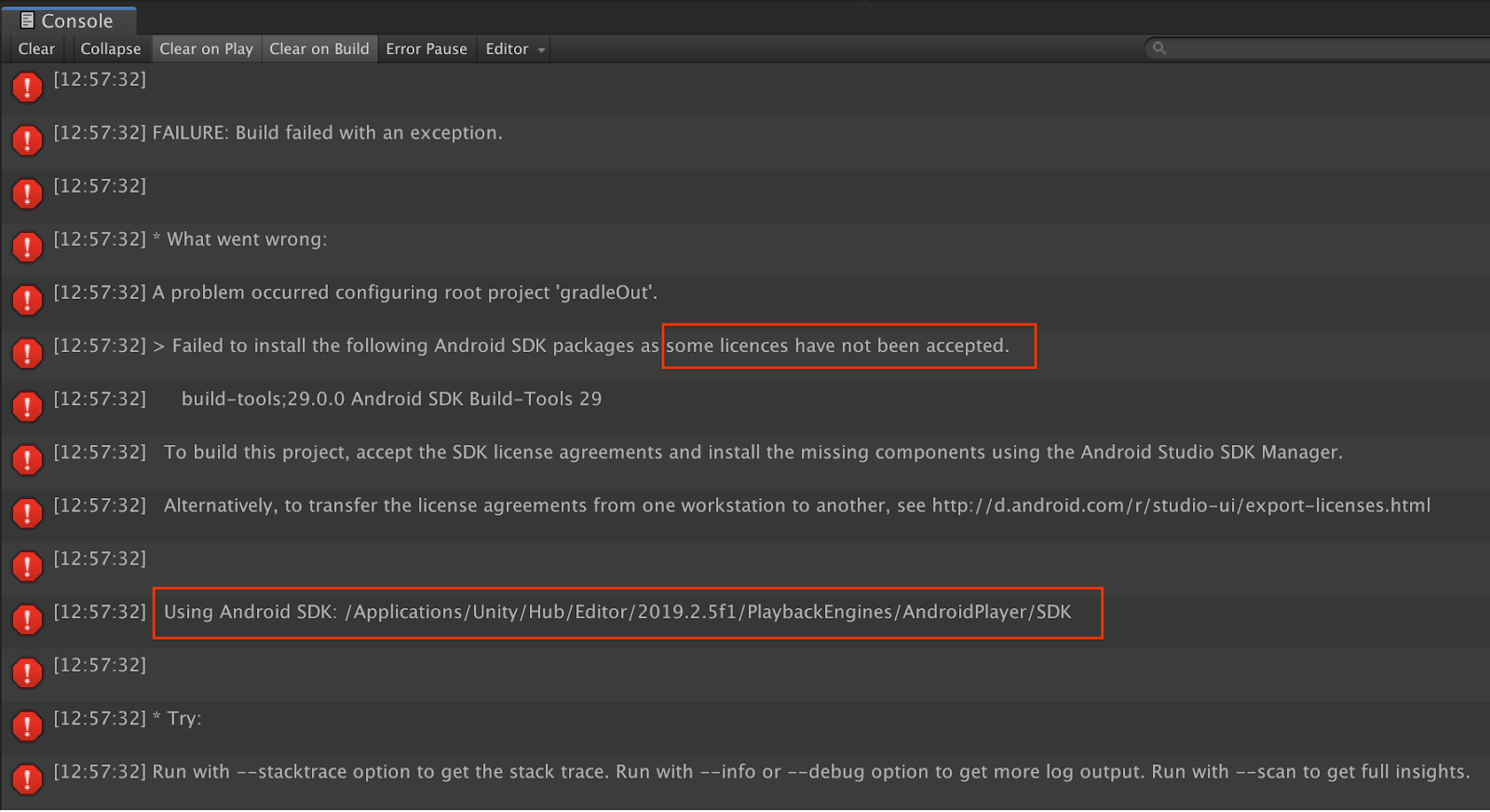

Build failures related to licenses

If you encounter a build failure related to licenses ("Failed to install the following Android SDK packages as some licences have not been accepted"), you can use the following commands to review and accept these licenses:

```shell
cd <path to Android SDK>
tools/bin/sdkmanager --licenses
```

On newer SDK installations, sdkmanager is located in cmdline-tools/latest/bin instead of tools/bin.

11. Congratulations

Congratulations, you've successfully built and run your first depth-based Augmented Reality app using Google's ARCore Depth API!