1. Overview

The Serverless Migration Station series of codelabs (self-paced, hands-on tutorials) and related videos aim to help Google Cloud serverless developers modernize their applications by guiding them through one or more migrations, primarily moving away from legacy services. Doing so makes your apps more portable and gives you more options and flexibility, enabling you to integrate with and access a wider range of Cloud products and more easily upgrade to newer language releases. While initially focusing on the earliest Cloud users, primarily App Engine (standard environment) developers, this series is broad enough to include other serverless platforms like Cloud Functions and Cloud Run, or elsewhere if applicable.

This codelab teaches you how to add App Engine Task Queue pull tasks to the sample app from the Module 1 codelab. This Module 18 tutorial adds the use of pull tasks; Module 19 then migrates that usage to Cloud Pub/Sub. Those using Task Queue for push tasks will migrate to Cloud Tasks and should refer to Modules 7-9 instead.

You'll learn how to

- Use the App Engine Task Queue API/bundled service

- Add pull queue usage to a basic Python 2 Flask App Engine NDB app

What you'll need

- A Google Cloud Platform project with an active GCP billing account

- Basic Python skills

- Working knowledge of common Linux commands

- Basic knowledge of developing and deploying App Engine apps

- A working Module 1 App Engine app (complete its codelab [recommended] or copy the app from the repo)

2. Background

Before you can migrate away from App Engine Task Queue pull tasks, the app has to use them, so this codelab adds pull task usage to the existing Flask and App Engine NDB app resulting from the Module 1 codelab. The sample app displays the most recent visits to the end-user. That's fine, but it's even more interesting to also track the visitors to see who visits the most.

While we could use push tasks for these visitor counts, we want to divide the responsibility between the sample app whose job it is to register visits and immediately respond to users, and a designated "worker" whose job it is to tally up the visitor counts outside the normal request-response workflow.

To implement this design, we're adding use of pull queues to the main application as well as supporting the worker functionality. The worker can run as a separate process (like a backend instance or code running on a VM that's always up), a cron job, or a basic command-line HTTP request using curl or wget. After this integration, you can migrate the app to Cloud Pub/Sub in the next (Module 19) codelab.
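The division of labor described above can be sketched without any App Engine dependency. The block below is a toy, in-memory stand-in for a pull queue, not the App Engine Task Queue API: the class InMemoryPullQueue and its add/lease/delete methods are hypothetical names chosen for illustration, and the IP addresses are made-up sample data. It only aims to show the lifecycle: the web app enqueues and returns immediately, while a worker later leases a batch, processes it, and deletes it.

```python
import time


class InMemoryPullQueue(object):
    """Toy stand-in for a pull queue; NOT the App Engine Task Queue API."""

    def __init__(self):
        self._tasks = []   # each task: {'id', 'payload', 'leased_until'}
        self._next_id = 0

    def add(self, payload):
        """Producer side: enqueue a payload and return immediately."""
        self._tasks.append(
            {'id': self._next_id, 'payload': payload, 'leased_until': 0.0})
        self._next_id += 1

    def lease(self, lease_seconds, max_tasks, now=None):
        """Worker side: claim up to max_tasks tasks not currently leased."""
        now = time.time() if now is None else now
        leased = []
        for task in self._tasks:
            if len(leased) >= max_tasks:
                break
            if task['leased_until'] <= now:
                # Hide the task from other workers until the lease expires.
                task['leased_until'] = now + lease_seconds
                leased.append(task)
        return leased

    def delete(self, tasks):
        """Worker side: remove finished tasks so they are never leased again."""
        done = set(task['id'] for task in tasks)
        self._tasks = [t for t in self._tasks if t['id'] not in done]

    def __len__(self):
        return len(self._tasks)


queue = InMemoryPullQueue()
for ip in ('192.0.2.1', '192.0.2.2', '192.0.2.1'):
    queue.add(ip)                            # the web app only enqueues

batch = queue.lease(3600, 1000, now=0.0)     # the worker claims work later
payloads = [task['payload'] for task in batch]
second_batch = queue.lease(3600, 1000, now=1.0)  # leased tasks stay hidden
queue.delete(batch)                          # processed tasks are removed
```

The explicit now argument exists only to make the sketch deterministic. The real service handles lease expiry itself.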

This tutorial features the following steps:

- Setup/Prework

- Update configuration

- Modify application code

3. Setup/Prework

This section explains how to:

- Set up your Cloud project

- Get baseline sample app

- (Re)Deploy and validate baseline app

These steps ensure you're starting with working code.

1. Setup project

If you completed the Module 1 codelab, reuse that same project (and code). Alternatively, create a brand new project or reuse another existing project. Ensure the project has an active billing account and an enabled App Engine app. Find your project ID, as you will need it several times in this codelab; use it wherever you encounter the PROJECT_ID variable.

2. Get baseline sample app

One of the prerequisites to this codelab is to have a working Module 1 App Engine app. Complete the Module 1 codelab (recommended) or copy the Module 1 app from the repo. Whether you use yours or ours, the Module 1 code is where we'll "START." This codelab walks you through each step, concluding with code that resembles what's in the Module 18 repo folder "FINISH".

- START: Module 1 folder (Python 2)

- FINISH: Module 18 folder (Python 2)

- Entire repo (to clone or download ZIP file)

Regardless which Module 1 app you use, the folder should look like the output below, possibly with a lib folder as well:

$ ls
README.md               appengine_config.py     requirements.txt
app.yaml                main.py                 templates

3. (Re)Deploy baseline app

Execute the following steps to deploy the Module 1 app:

- Delete the lib folder if there is one and run: pip install -t lib -r requirements.txt to repopulate lib. You may need to use the pip2 command instead if you have both Python 2 and 3 installed.

- Ensure you've installed and initialized the gcloud command-line tool and reviewed its usage.

- Set your Cloud project with gcloud config set project PROJECT_ID if you don't want to enter your PROJECT_ID with each gcloud command issued.

- Deploy the sample app with gcloud app deploy

- Confirm the Module 1 app runs as expected and displays the most recent visits (illustrated below)

4. Update configuration

No changes are necessary to the standard App Engine configuration files (app.yaml, requirements.txt, appengine_config.py). Instead, add a new configuration file, queue.yaml, with the following contents, putting it in the same top-level directory:

queue:
- name: pullq
  mode: pull

The queue.yaml file specifies all task queues that exist for your app (except the default [push] queue which is automatically created by App Engine). In this case, there is only one, a pull queue named pullq. App Engine requires the mode directive be specified as pull, otherwise it creates a push queue by default. Learn more about creating pull queues in the documentation. Also see the queue.yaml reference page for other options.

Deploy this file separately from your app. You'll still use gcloud app deploy but also provide queue.yaml on the command line:

$ gcloud app deploy queue.yaml
Configurations to update:

descriptor:      [/tmp/mod18-gaepull/queue.yaml]
type:            [task queues]
target project:  [my-project]

WARNING: Caution: You are updating queue configuration. This will override any changes performed using 'gcloud tasks'. More details at
https://cloud.google.com/tasks/docs/queue-yaml

Do you want to continue (Y/n)?

Updating config [queue]...⠹WARNING: We are using the App Engine app location (us-central1) as the default location. Please use the "--location" flag if you want to use a different location.
Updating config [queue]...done.

Task queues have been updated.

Visit the Cloud Platform Console Task Queues page to view your queues and cron jobs.
$

5. Modify application code

This section features updates to the following files:

- main.py: add use of pull queues to the main application

- templates/index.html: update the web template to display the new data

Imports and constants

The first step is to add one new import and several constants to support pull queues:

- Add an import of the Task Queue library, google.appengine.api.taskqueue.

- Add three constants to support leasing the maximum number of pull tasks (TASKS) for an hour (HOUR) from our pull queue (QUEUE).

- Add a constant for displaying the most recent visits as well as top visitors (LIMIT).

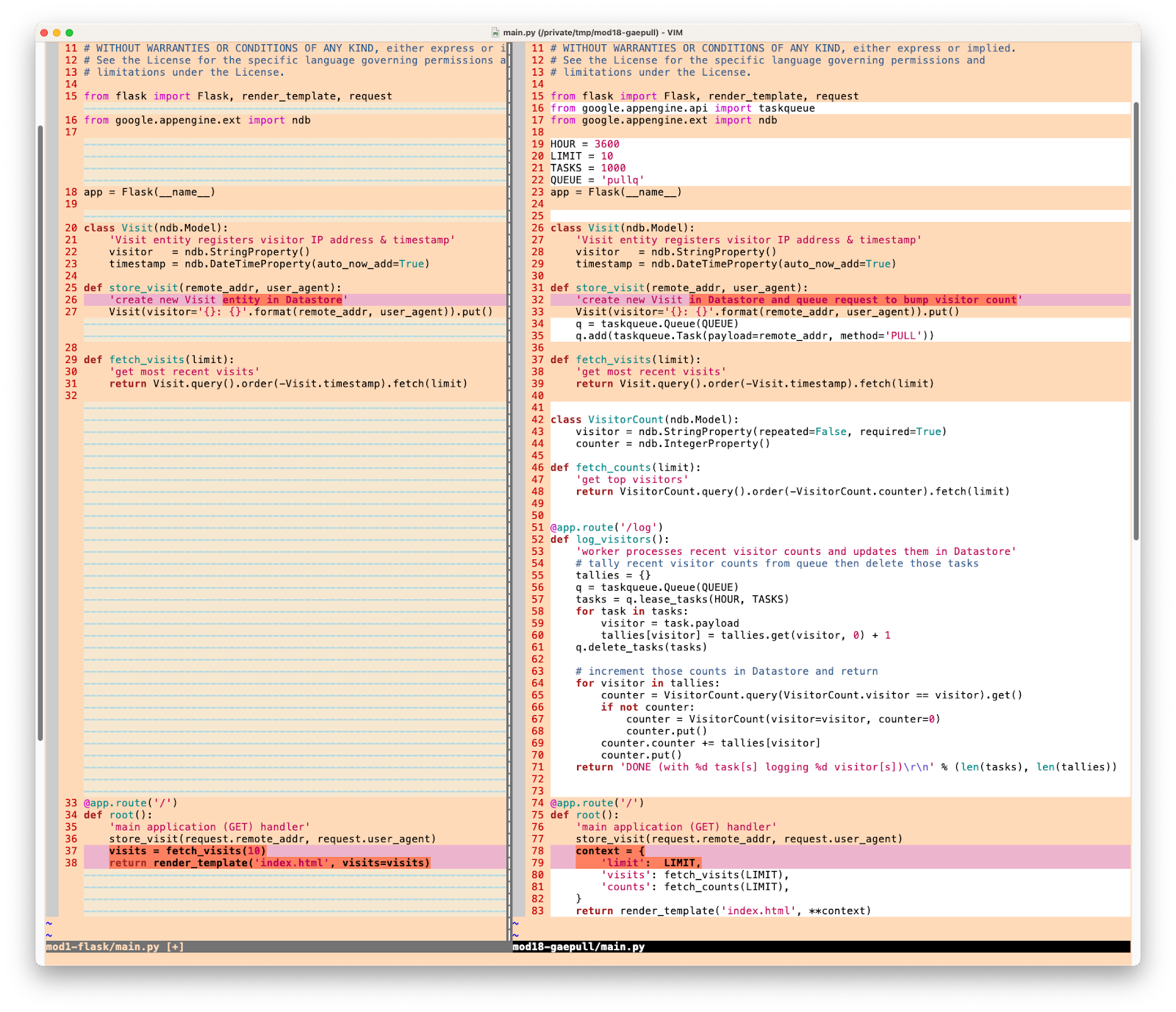

Below is the original code and what it looks like after making these updates:

BEFORE:

from flask import Flask, render_template, request

from google.appengine.ext import ndb

app = Flask(__name__)

AFTER:

from flask import Flask, render_template, request

from google.appengine.api import taskqueue

from google.appengine.ext import ndb

HOUR = 3600

LIMIT = 10

TASKS = 1000

QNAME = 'pullq'

QUEUE = taskqueue.Queue(QNAME)

app = Flask(__name__)

Add a pull task (gather data for task & create task in pull queue)

The data model Visit stays the same, as does querying for visits to display in fetch_visits(). The only change required in this part of the code is in store_visit(). In addition to registering the visit, add a task to the pull queue with the visitor's IP address so the worker can increment the visitor counter.

BEFORE:

class Visit(ndb.Model):
    'Visit entity registers visitor IP address & timestamp'
    visitor = ndb.StringProperty()
    timestamp = ndb.DateTimeProperty(auto_now_add=True)

def store_visit(remote_addr, user_agent):
    'create new Visit entity in Datastore'
    Visit(visitor='{}: {}'.format(remote_addr, user_agent)).put()

def fetch_visits(limit):
    'get most recent visits'
    return Visit.query().order(-Visit.timestamp).fetch(limit)

AFTER:

class Visit(ndb.Model):
    'Visit entity registers visitor IP address & timestamp'
    visitor = ndb.StringProperty()
    timestamp = ndb.DateTimeProperty(auto_now_add=True)

def store_visit(remote_addr, user_agent):
    'create new Visit in Datastore and queue request to bump visitor count'
    Visit(visitor='{}: {}'.format(remote_addr, user_agent)).put()
    QUEUE.add(taskqueue.Task(payload=remote_addr, method='PULL'))

def fetch_visits(limit):
    'get most recent visits'
    return Visit.query().order(-Visit.timestamp).fetch(limit)

Create data model and query function for visitor tracking

Add a data model VisitorCount to track visitors; it should have fields for the visitor itself as well as an integer counter to track the number of visits. Then add a new function (alternatively, it can be a Python classmethod) named fetch_counts() to query for and return the top visitors in most-to-least order. Add the class and function right below the body of fetch_visits():

class VisitorCount(ndb.Model):
    visitor = ndb.StringProperty(repeated=False, required=True)
    counter = ndb.IntegerProperty()

def fetch_counts(limit):
    'get top visitors'
    return VisitorCount.query().order(-VisitorCount.counter).fetch(limit)

Add worker code

Add a new function log_visitors() to log the visitors through a GET request to /log. It uses a dictionary/hash to track the most recent visitor counts, leasing as many tasks as possible for an hour. For each task, it tallies all visits by the same visitor. With tallies in hand, the app then updates all corresponding VisitorCount entities already in Datastore or creates new ones if needed. The last step returns a plain text message indicating how many visitors got registered from how many processed tasks. Add this function to main.py right below fetch_counts():

@app.route('/log')
def log_visitors():
    'worker processes recent visitor counts and updates them in Datastore'
    # tally recent visitor counts from queue then delete those tasks
    tallies = {}
    tasks = QUEUE.lease_tasks(HOUR, TASKS)
    for task in tasks:
        visitor = task.payload
        tallies[visitor] = tallies.get(visitor, 0) + 1
    if tasks:
        QUEUE.delete_tasks(tasks)

    # increment those counts in Datastore and return
    for visitor in tallies:
        counter = VisitorCount.query(VisitorCount.visitor == visitor).get()
        if not counter:
            counter = VisitorCount(visitor=visitor, counter=0)
            counter.put()
        counter.counter += tallies[visitor]
        counter.put()
    return 'DONE (with %d task[s] logging %d visitor[s])\r\n' % (
            len(tasks), len(tallies))
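The tallying and merging logic in the worker can be exercised on its own, away from App Engine. The sketch below is a plain-Python illustration under stated assumptions: a dict stands in for the VisitorCount entities in Datastore, and tally_payloads() and apply_tallies() are hypothetical helper names that do not appear in the sample app. It uses collections.Counter in place of the dictionary loop shown above, which is an equivalent way to count repeated payloads.

```python
from collections import Counter


def tally_payloads(payloads):
    """Count how many times each visitor appears in a batch of payloads."""
    return Counter(payloads)


def apply_tallies(stored_counts, tallies):
    """Return a new dict of stored counts with the batch tallies added in."""
    updated = dict(stored_counts)
    for visitor, visits in tallies.items():
        # A visitor not seen before starts from zero, like a new entity.
        updated[visitor] = updated.get(visitor, 0) + visits
    return updated


# Hypothetical sample data: three leased task payloads, one known visitor.
payloads = ['192.0.2.1', '192.0.2.2', '192.0.2.1']
stored = {'192.0.2.1': 5}

tallies = tally_payloads(payloads)
stored = apply_tallies(stored, tallies)
message = 'DONE (with %d task[s] logging %d visitor[s])' % (
    len(payloads), len(tallies))
```

Tallying first means each distinct visitor needs only one read and one write per batch, regardless of how many tasks that visitor generated.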

Update main handler with new display data

To display the top visitors, update the main handler root() to invoke fetch_counts(). Furthermore, the template will be updated to show the number of top visitors and most recent visits. Package the visitor counts along with the most recent visits from the call to fetch_visits() and drop that into a single context to pass to the web template. Below is the code before as well as after this change has been made:

BEFORE:

@app.route('/')
def root():
    'main application (GET) handler'
    store_visit(request.remote_addr, request.user_agent)
    visits = fetch_visits(10)
    return render_template('index.html', visits=visits)

AFTER:

@app.route('/')
def root():
    'main application (GET) handler'
    store_visit(request.remote_addr, request.user_agent)
    context = {
        'limit':  LIMIT,
        'visits': fetch_visits(LIMIT),
        'counts': fetch_counts(LIMIT),
    }
    return render_template('index.html', **context)

These are all the changes required of main.py. The illustration below summarizes those updates to give you a broad idea of the changes you're making:

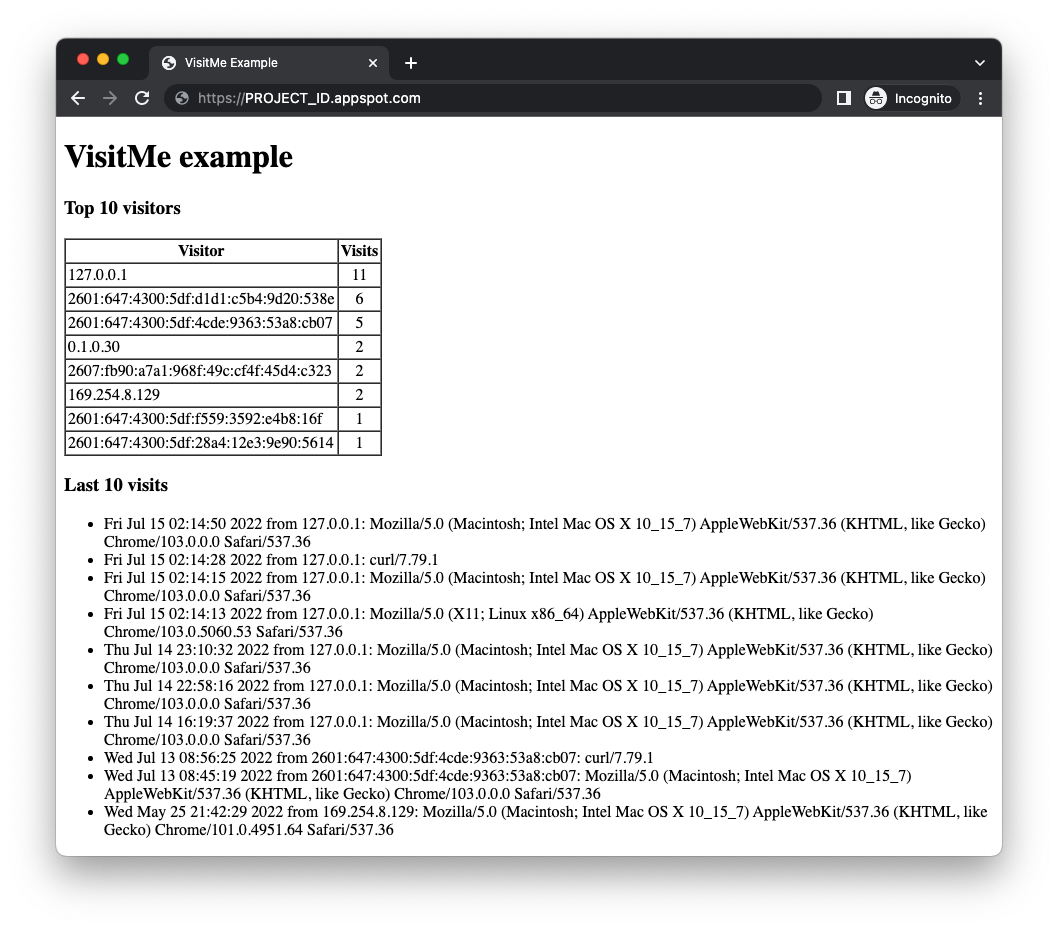

Update web template with new display data

The web template templates/index.html requires an update to display the top visitors in addition to the normal payload of most recent visitors. Drop the top visitors and their counts into a table at the top of the page and continue to render the most recent visits as before. The only other change is to specify the number shown via the limit variable rather than hardcoding the number. Here are the updates you should make to your web template:

BEFORE:

<!doctype html>

<html>

<head>

<title>VisitMe Example</title>

<body>

<h1>VisitMe example</h1>

<h3>Last 10 visits</h3>

<ul>

{% for visit in visits %}

<li>{{ visit.timestamp.ctime() }} from {{ visit.visitor }}</li>

{% endfor %}

</ul>

AFTER:

<!doctype html>

<html>

<head>

<title>VisitMe Example</title>

<body>

<h1>VisitMe example</h1>

<h3>Top {{ limit }} visitors</h3>

<table border=1 cellspacing=0 cellpadding=2>

<tr><th>Visitor</th><th>Visits</th></tr>

{% for count in counts %}

<tr><td>{{ count.visitor|e }}</td><td align="center">{{ count.counter }}</td></tr>

{% endfor %}

</table>

<h3>Last {{ limit }} visits</h3>

<ul>

{% for visit in visits %}

<li>{{ visit.timestamp.ctime() }} from {{ visit.visitor }}</li>

{% endfor %}

</ul>

This concludes the necessary changes adding the use of App Engine Task Queue pull tasks to the Module 1 sample app. Your directory now represents the Module 18 sample app and should contain these files:

$ ls
README.md               appengine_config.py     queue.yaml              templates
app.yaml                main.py                 requirements.txt

6. Summary/Cleanup

This section wraps up this codelab by deploying the app and verifying that it works as intended, including any reflected output. Run the worker separately to process the visitor counts. After app validation, perform any clean-up steps and consider next steps.

Deploy and verify application

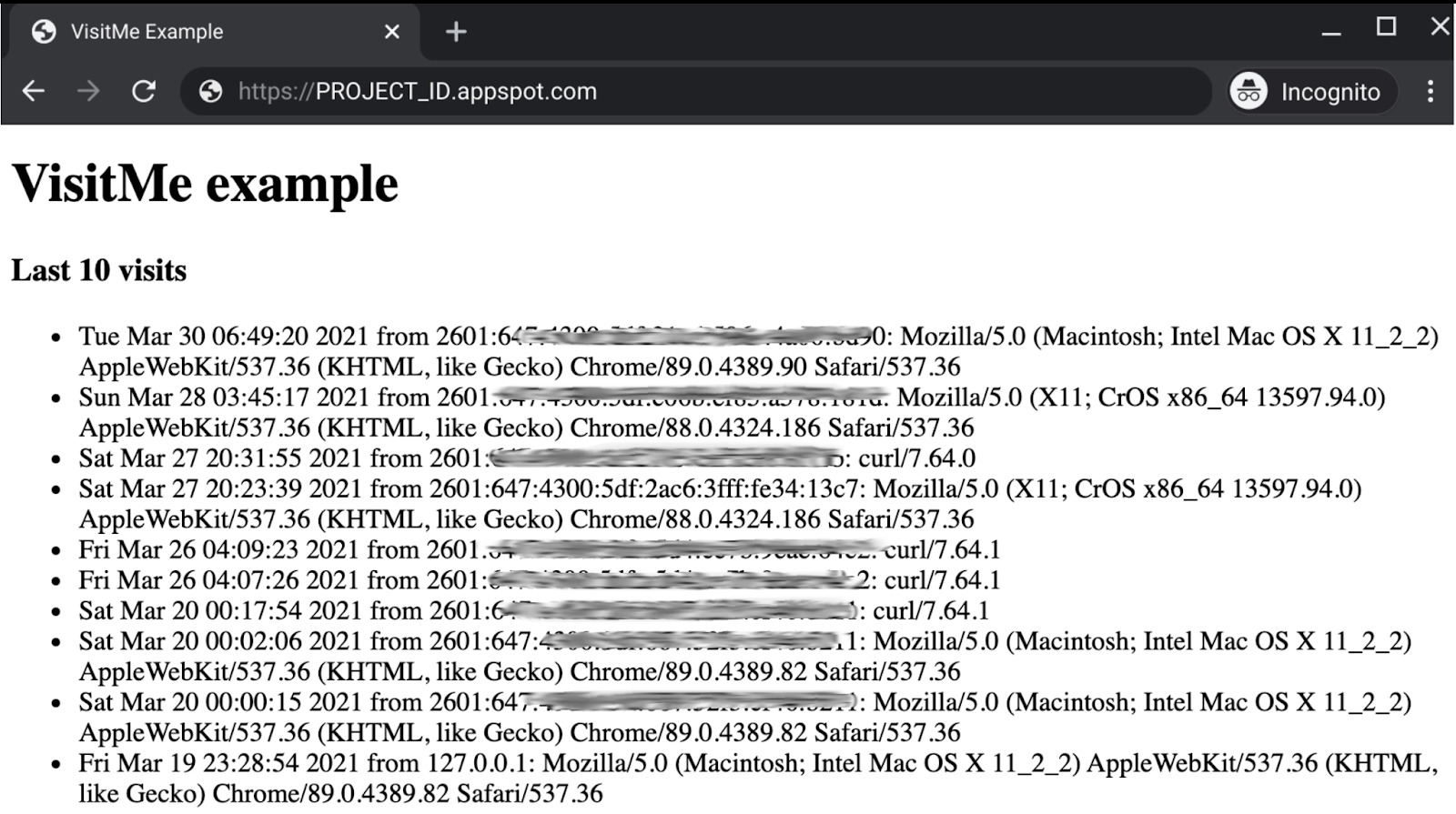

Ensure you've already set up your pull queue as we did earlier in this codelab with gcloud app deploy queue.yaml. If that has been completed and your sample app is ready to go, deploy your app with gcloud app deploy. The output should be identical to the Module 1 app except that it now features a "top visitors" table at the top:

While the updated web frontend displays top visitors and most recent visits, note that the visitor counts do not include the current visit. The app displays the previous visitor counts while adding a new task to the pull queue to increment this visitor's count; that task waits in the queue until a worker processes it.

You can execute the task by calling /log in a variety of ways:

- An App Engine backend service

- A cron job

- A web browser

- A command-line HTTP request (curl, wget, etc.)

For example, if you use curl to send a GET request to /log, your output would look like this, given you provided your PROJECT_ID:

$ curl https://PROJECT_ID.appspot.com/log
DONE (with 1 task[s] logging 1 visitor[s])
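If you would rather trigger the worker from a script than from curl, the same GET request can be sent from Python. This is a minimal sketch using only the standard library; log_url() and run_worker() are hypothetical helper names, and it assumes the app is served from the default PROJECT_ID.appspot.com address shown in the curl example.

```python
try:
    from urllib.request import urlopen   # Python 3
except ImportError:
    from urllib2 import urlopen          # Python 2


def log_url(project_id):
    """Build the worker URL, assuming the default appspot.com domain."""
    return 'https://%s.appspot.com/log' % project_id


def run_worker(project_id, timeout=30):
    """Send a GET request to /log and return the plain-text response."""
    response = urlopen(log_url(project_id), timeout=timeout)
    try:
        return response.read().decode('utf-8')
    finally:
        response.close()


# Example call (makes a real network request, so it is left commented out;
# replace PROJECT_ID with your own project ID):
# print(run_worker('PROJECT_ID'))
```

A script like this could be run by hand or on a schedule, in the same spirit as the other options listed above.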

The updated count will then be reflected on the next website visit. That's it!

Congratulations on completing this codelab and successfully adding the use of the App Engine Task Queue pull queue service to the sample app. It's now ready for migrating to Cloud Pub/Sub, Cloud NDB, and Python 3 in Module 19.

Clean up

General

If you are done for now, we recommend you disable your App Engine app to avoid incurring billing. However if you wish to test or experiment some more, the App Engine platform has a free quota, and so as long as you don't exceed that usage tier, you shouldn't be charged. That's for compute, but there may also be charges for relevant App Engine services, so check its pricing page for more information. If this migration involves other Cloud services, those are billed separately. In either case, if applicable, see the "Specific to this codelab" section below.

For full disclosure, deploying to a Google Cloud serverless compute platform like App Engine incurs minor build and storage costs. Cloud Build has its own free quota as does Cloud Storage. Storage of that image uses up some of that quota. However, you might live in a region that does not have such a free tier, so be aware of your storage usage to minimize potential costs. Specific Cloud Storage "folders" you should review include:

- console.cloud.google.com/storage/browser/LOC.artifacts.PROJECT_ID.appspot.com/containers/images

- console.cloud.google.com/storage/browser/staging.PROJECT_ID.appspot.com

- The storage links above depend on your PROJECT_ID and *LOC*ation, for example, "us" if your app is hosted in the USA.

On the other hand, if you're not going to continue with this application or other related migration codelabs and want to delete everything completely, shut down your project.

Specific to this codelab

The services listed below are unique to this codelab. Refer to each product's documentation for more information:

- The App Engine Task Queue service doesn't incur any additional billing per the pricing page for legacy bundled services like Task Queue.

- The App Engine Datastore service is provided by Cloud Datastore (Cloud Firestore in Datastore mode) which also has a free tier; see its pricing page for more information.

Next steps

In this "migration," you added Task Queue pull queue usage to the Module 1 sample app by adding support for tracking visitors, thereby implementing the Module 18 sample app. In the next migration, you will upgrade App Engine pull tasks to Cloud Pub/Sub. As of late 2021, users are no longer required to migrate to Cloud Pub/Sub when upgrading to Python 3. Read more about this in the next section.

For migrating to Cloud Pub/Sub, refer to Module 19 codelab. Beyond that are additional migrations to consider, such as Cloud Datastore, Cloud Memorystore, Cloud Storage, or Cloud Tasks (push queues). There are also cross-product migrations to Cloud Run and Cloud Functions. All Serverless Migration Station content (codelabs, videos, source code [when available]) can be accessed at its open source repo.

7. Migration to Python 3

In Fall 2021, the App Engine team extended support of many of the bundled services to 2nd generation runtimes (that have a 1st generation runtime). As a result, you are no longer required to migrate from bundled services like App Engine Task Queue to standalone Cloud or 3rd-party services like Cloud Pub/Sub when porting your app to Python 3. In other words, you can continue using Task Queue in Python 3 App Engine apps so long as you retrofit the code to access bundled services from next-generation runtimes.

You can learn more about how to migrate bundled services usage to Python 3 in the Module 17 codelab and its corresponding video. While that topic is out-of-scope for Module 18, linked below are versions of the Module 1 app ported to Python 3 and still using App Engine NDB. (At some point, a Python 3 version of the Module 18 app will also be made available.)

8. Additional resources

Listed below are additional resources for developers further exploring this or related Migration Modules as well as related products. This includes places to provide feedback on this content, links to the code, and various pieces of documentation you may find useful.

Codelab issues/feedback

If you find any issues with this codelab, please search for your issue first before filing. Links to search and create new issues:

Migration resources

Links to the repo folders for Module 1 (START) and Module 18 (FINISH) can be found in the table below. They can also be accessed from the repo for all App Engine codelab migrations; clone it or download a ZIP file.

Codelab | Python 2 | Python 3 |

code (not featured in this tutorial) | ||

Module 18 (this codelab) | N/A |

Online references

Below are resources relevant for this tutorial:

App Engine Task Queue

- App Engine Task Queue overview

- App Engine Task Queue pull queues overview

- App Engine Task Queue pull queue full sample app

- Creating Task Queue pull queues

- Google I/O 2011 pull queue launch video (Votelator sample app)

- queue.yaml reference

- queue.yaml vs. Cloud Tasks

- Pull queues to Pub/Sub migration guide

- App Engine Task Queue pull queues to Cloud Pub/Sub documentation sample

App Engine platform

App Engine documentation

Python 2 App Engine (standard environment) runtime

Python 3 App Engine (standard environment) runtime

Differences between Python 2 & 3 App Engine (standard environment) runtimes

Python 2 to 3 App Engine (standard environment) migration guide

App Engine pricing and quotas information

Second generation App Engine platform launch (2018)

Long-term support for legacy runtimes

Documentation migration samples

Other Cloud information

- Python on Google Cloud Platform

- Google Cloud Python client libraries

- Google Cloud "Always Free" tier

- Google Cloud SDK (gcloud command-line tool)

- All Google Cloud documentation

Videos

- Serverless Migration Station

- Serverless Expeditions

- Subscribe to Google Cloud Tech

- Subscribe to Google Developers

License

This work is licensed under a Creative Commons Attribution 2.0 Generic License.