1. Intro

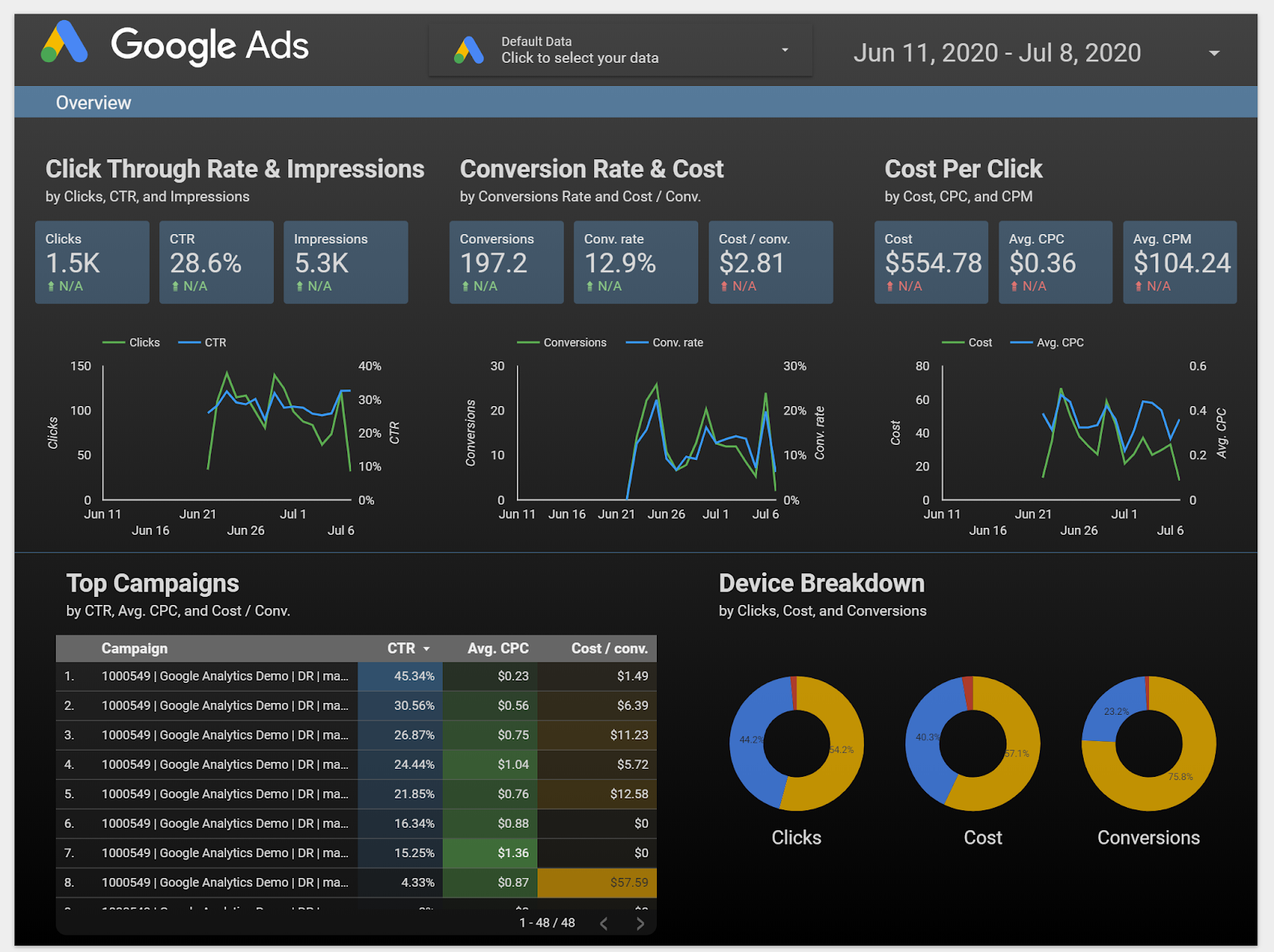

Looker Studio lets you build live, interactive dashboards with beautiful data visualizations, for free. Fetch your data from a variety of sources and create unlimited reports in Looker Studio, with full editing and sharing capabilities. The following screenshot is an example Looker Studio dashboard:

( Click here to view this example report in Looker Studio)

Community Connectors is a feature for Looker Studio that lets you use Apps Script to build connectors to any internet accessible data source. Community Connectors are built by the Looker Studio community. That means anyone can build Community Connectors. You can also share Community Connectors with other people so they can access their own data from within Looker Studio.

You can use Community Connectors in different use cases:

- You are visualizing data from a commercial platform (e.g. social media, marketing, analytics, etc)

- You are visualizing on-premise enterprise data (e.g. sales data from an on-premise MySQL database)

- You are providing a way for your customers to visualize their data from your service

- You are creating a push button reporting platform

- You are visualizing your own data from a web source (e.g. creating your Google Fit dashboard)

What you'll learn

- How a Looker Studio Community Connector works

- How to use Google Apps Script to build a Community Connector

- How to use Community Connectors in Looker Studio

What you'll need

- Access to the internet and a web browser

- A Google account

- Familiarity with basic Javascript and Web APIs

2. Quick Survey

Why did you choose this codelab?

How do you plan to use this codelab/tutorial?

How would you rate your familiarity with Looker Studio?

What best describes your background?

You can move to the next page to submit the survey information.

3. Overview of Community Connectors

Looker Studio Community Connectors enable direct connections from Looker Studio to any internet accessible data source. You can connect to commercial platforms, public datasets, or your own on-premise private data. Community Connectors can fetch data through Web APIs, JDBC APIs, flat files (CSV, JSON, XML), and Apps Script Services.

Consider a scenario where you have published a package on npm and you want to track the download count of the package over time by day. In this codelab, you will build a Community Connector that fetches data using the npm package download counts API. The Community Connector can then be used in Looker Studio to build a dashboard to visualize download counts.

4. Community Connector Workflow

In a basic Community Connector, you'll define four functions:

getAuthType()getConfig()getSchema()getData()

Depending on the current step of the workflow, Looker Studio executes these connector functions and uses the response in the subsequent steps. The video below gives an overview of:

- How a Community Connector works

- Different steps in the workflow

- When different functions are called

- When Looker Studio shows different user interfaces

- Expected user actions at different steps

You can resume the codelab after watching the video.

There's no need to memorize this workflow, just have a look to get a sense of what happens in a connector. You can always come back to this diagram.

In the next step, you will start creating your connector in Google Apps Script. You will have to switch back and forth between the Apps Script UI and this codelab.

5. Set up your Apps Script project

Step 1: Visit Google Apps Script.

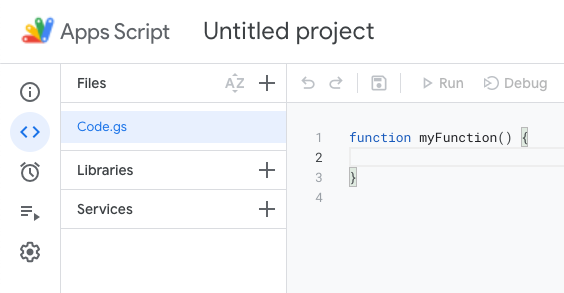

Step 2: Create a new apps script project by clicking "+ New project" in the top left section.

You will see a shell project with a blank myFunction function in the Code.gs file.

Step 3: Delete the myFunction function.

Step 4: Give the project a name:

- Click

Untitled projectin the top-left of the page - Enter a project title.

Start writing your connector code in the Code.gs file.

6. Define getAuthType()

Looker Studio will call the getAuthType() function when it needs to know the authentication method used by the connector. This function should return the authentication method required by the connector to authorize the 3rd-party service.

For the npm download connector you're building, you do not need to authenticate with any 3rd-party service since the API you are using does not require any authentication. Copy the following code and add to your Code.gs file:

Code.gs

var cc = DataStudioApp.createCommunityConnector();

function getAuthType() {

var AuthTypes = cc.AuthType;

return cc

.newAuthTypeResponse()

.setAuthType(AuthTypes.NONE)

.build();

}

Here, you are indicating that your connector does not require any 3rd-party authentication (AuthTypes.NONE). To see all supported authentication methods, view the AuthType() reference.

7. Define getConfig()

Users of your connector will need to configure the connector before they can start using it. The getConfig() function response defines the configuration options users will see. Looker Studio calls the getConfig() function to get the connector's configuration details. Based on the response provided by getConfig(), Looker Studio will render the connector configuration screen and change certain connector behavior.

In the configuration screen, you can provide information or get user input using the following form elements:

| Input element | A single-line text box. |

| Input element | A multi-line textarea box. |

| Input element | A dropdown for single-select options. |

| Input element | A dropdown for multi-select options. |

| Input element | A single checkbox that can be used to capture boolean values. |

| Display element | A static plain-text box that can be used to provide instructions or information to the user. |

Use the INFO element to provide user instructions and a TEXTINPUT element to get the input package name from the user. In the getConfig() response, you will group these form elements under configParams key.

Since the API you are connecting to requires date as a parameter, set dateRangeRequired to true in the getConfig() response. This tells Looker Studio to provide date ranges with all data requests. If your data source does not require date as a parameter, you can omit this.

Add the following getConfig()code to your Code.gs file, below the existing code for getAuthType():

Code.gs

function getConfig(request) {

var config = cc.getConfig();

config.newInfo()

.setId('instructions')

.setText('Enter npm package names to fetch their download count.');

config.newTextInput()

.setId('package')

.setName('Enter a single package name')

.setHelpText('e.g. googleapis or lighthouse')

.setPlaceholder('googleapis');

config.setDateRangeRequired(true);

return config.build();

}

Based on these configParams, when you use the connector in Looker Studio, you can expect to see a configuration screen like the following. But more on that later.

Let's move on to the next function - getSchema().

8. Define getSchema()

Looker Studio calls the getSchema() function to get the schema associated with the user-selected configuration for the connector. Based on the response provided by getSchema(), Looker Studio will show the fields screen to the user listing all the fields in the connector.

For any specific configuration of your connector, the schema is a list of all fields for which the connector can provide data. A connector might return a different schema with different fields based on various configurations. The schema can contain fields that you fetch from your API source, fields that you calculate in Apps Script, and fields that are calculated in Looker Studio using a calculated field formula. Your connector provides the metadata about each field in the schema, including:

- Name of the field

- Data type for the field

- Semantic information

Review the getSchema() and Field reference later to learn more.

Depending on how your connector fetches, the schema may be fixed or dynamically calculated when getSchema() is called. Configuration parameters from getConfig() that are defined by the user will be provided in the request argument for the getSchema() function.

For this codelab, you do not need to access the request argument. You will learn more about the request argument when you write code for the getData() function in the next segment.

For your connector, the schema is fixed and contains the following 3 fields:

| Name of the npm package that the user provides |

| Download count of the npm package |

| Date of the download count |

Below is the getSchema() code for your connector. The getFields() helper function abstracts out the creation of the fields since this functionality is needed by both getSchema() and getData(). Add the following code to your Code.gs file:

Code.gs

function getFields(request) {

var cc = DataStudioApp.createCommunityConnector();

var fields = cc.getFields();

var types = cc.FieldType;

var aggregations = cc.AggregationType;

fields.newDimension()

.setId('packageName')

.setType(types.TEXT);

fields.newMetric()

.setId('downloads')

.setType(types.NUMBER)

.setAggregation(aggregations.SUM);

fields.newDimension()

.setId('day')

.setType(types.YEAR_MONTH_DAY);

return fields;

}

function getSchema(request) {

var fields = getFields(request).build();

return { schema: fields };

}

Based on this schema, you can expect to see the following fields in the Looker Studio fields screen when you use the connector in Looker Studio. But more on that later when you test your connector.

Let's move on to our last function - getData().

9. Define getData() : Part 1

Looker Studio calls the getData() function any time it needs to fetch data. Based on the response provided by getData(), Looker Studio will render and update charts in the dashboard. getData()might be called during these events:

- User adds a chart to the dashboard

- User edits a chart

- User views the dashboard

- User edits a filter or a data control associated

- Looker Studio needs a sample of data

There's no need to copy any code from this page since you will copy the completed

getData()

code in a later step.

Understanding the request object

Looker Studio passes the request object with each getData() call. Review the structure of the request object below. This will help you to write the code for your getData() function.

request object structure

{

configParams: object,

scriptParams: object,

dateRange: {

startDate: string,

endDate: string

},

fields: [

{

name: Field.name

}

]

}

- The

configParamsobject will contain configuration values for the parameters defined ingetConfig()and configured by the user. - The

scriptParamsobject will contain information relevant to connector execution. You do not need to use this for this codelab. dateRangewill contain the requested date range if requested ingetConfig()response.fieldswill contain the list of names of fields for which data is requested.

For your connector, an example request from the getData() function might look like this:

{

configParams: {

package: 'jquery'

},

dateRange: {

startDate: '2017-07-16',

endDate: '2017-07-18'

},

fields: [

{

name: 'day',

},

{

name: 'downloads',

}

]

}

For the getData()call in the above request, only two fields are being requested even though the connector schema has additional fields. The next page will contain the example response for this getData()call and the general getData()response structure.

10. Define getData() : Part 2

In the getData()response, you will need to provide both schema and data for the requested fields. You will divide up the code into three segments:

- Create schema for requested fields.

- Fetch and parse data from API.

- Transform parsed data and filter for requested fields.

There's no need to copy any code from this page since you will copy the completed

getData()

code in the next page.

This is the structure of getData()for your connector.

function getData(request) {

// TODO: Create schema for requested fields.

// TODO: Fetch and parse data from API.

// TODO: Transform parsed data and filter for requested fields.

return {

schema: <filtered schema>,

rows: <transformed and filtered data>

};

}

Create schema for requested fields

// Create schema for requested fields

var requestedFieldIds = request.fields.map(function(field) {

return field.name;

});

var requestedFields = getFields().forIds(requestedFieldIds);

Fetch and parse data from API

The npm API URL will be in this format:

https://api.npmjs.org/downloads/point/{start_date}:{end_date}/{package}

Create the URL for the API using the request.dateRange.startDate, request.dateRange.endDate, and request.configParams.package provided by Looker Studio. Then fetch the data from the API using UrlFetchApp(Apps Script Class: reference). Then parse the fetched response.

// Fetch and parse data from API

var url = [

'https://api.npmjs.org/downloads/range/',

request.dateRange.startDate,

':',

request.dateRange.endDate,

'/',

request.configParams.package

];

var response = UrlFetchApp.fetch(url.join(''));

var parsedResponse = JSON.parse(response).downloads;

Transform parsed data and filter for requested fields

The response from the npm API will be in the following format:

{

downloads: [

{

day: '2014-02-27',

downloads: 1904088

},

..

{

day: '2014-03-04',

downloads: 7904294

}

],

start: '2014-02-25',

end: '2014-03-04',

package: 'somepackage'

}

Transform the response from the npm API and provide the getData() response in the following format. If this format is unclear, have a look at the example response in the following paragraph.

{

schema: [

{

object(Field)

}

],

rows: [

{

values: [string]

}

]

}

In the response, return the schema for only the requested fields using the schema property. You will return the data using the rows property as a list of rows. For each row, the sequence of fields in values must match the sequence of fields in schema. Based on our earlier example of request, this is what the response for getData() will look like:

{

schema: requestedFields.build(),

rows: [

{

values: [ 38949, '20170716']

},

{

values: [ 165314, '20170717']

},

{

values: [ 180124, '20170718']

},

]

}

You have already made the subset of the schema. Use the following function to transform the parsed data and filter it down for requested fields.

function responseToRows(requestedFields, response, packageName) {

// Transform parsed data and filter for requested fields

return response.map(function(dailyDownload) {

var row = [];

requestedFields.asArray().forEach(function (field) {

switch (field.getId()) {

case 'day':

return row.push(dailyDownload.day.replace(/-/g, ''));

case 'downloads':

return row.push(dailyDownload.downloads);

case 'packageName':

return row.push(packageName);

default:

return row.push('');

}

});

return { values: row };

});

}

11. Define getData() : Part 3

The combined getData() code will look like the one below. Add the following code to your Code.gs file:

Code.gs

function responseToRows(requestedFields, response, packageName) {

// Transform parsed data and filter for requested fields

return response.map(function(dailyDownload) {

var row = [];

requestedFields.asArray().forEach(function (field) {

switch (field.getId()) {

case 'day':

return row.push(dailyDownload.day.replace(/-/g, ''));

case 'downloads':

return row.push(dailyDownload.downloads);

case 'packageName':

return row.push(packageName);

default:

return row.push('');

}

});

return { values: row };

});

}

function getData(request) {

var requestedFieldIds = request.fields.map(function(field) {

return field.name;

});

var requestedFields = getFields().forIds(requestedFieldIds);

// Fetch and parse data from API

var url = [

'https://api.npmjs.org/downloads/range/',

request.dateRange.startDate,

':',

request.dateRange.endDate,

'/',

request.configParams.package

];

var response = UrlFetchApp.fetch(url.join(''));

var parsedResponse = JSON.parse(response).downloads;

var rows = responseToRows(requestedFields, parsedResponse, request.configParams.package);

return {

schema: requestedFields.build(),

rows: rows

};

}

You are done with the Code.gs file! Next, update the manifest.

12. Update manifest

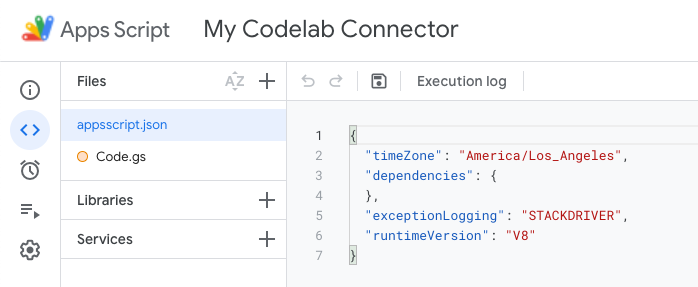

In the Apps Script editor, select Project Settings > Show "appsscript.json" manifest file in editor.

This will create a new appsscript.json manifest file.

Replace your appscript.json file with the following:

appsscript.json

{

"timeZone": "America/Los_Angeles",

"exceptionLogging": "STACKDRIVER",

"runtimeVersion": "V8",

"dataStudio": {

"name": "npm Downloads - From Codelab",

"logoUrl": "https://raw.githubusercontent.com/npm/logos/master/npm%20logo/npm-logo-red.png",

"company": "Codelab user",

"companyUrl": "https://developers.google.com/looker-studio/",

"addonUrl": "https://github.com/googledatastudio/example-connectors/tree/master/npm-downloads",

"supportUrl": "https://github.com/googledatastudio/community-connectors/issues",

"description": "Get npm package download counts.",

"sources": ["npm"]

}

}

Save the Apps Script project.

Congratulations! You have built your first community connector and it is ready for a test drive!

13. Test your connector in Looker Studio

Use the deployment

Step 1: In the Apps Script development environment, Click on Deploy > Test deployments to open the Test deployments dialog.

The default deployment, Head Deployment, will be listed.

Step 2: Click Copy to copy the Head Deployment ID.

Step 3: To load your connector in Looker Studio, replace the <HEAD_DEPLOYMENT_ID> placeholder in the following link with your connector's Head Deployment ID and follow the link in your browser:

https://lookerstudio.google.com/datasources/create?connectorId=<HEAD_DEPLOYMENT_ID>

Authorize the connector

First time Looker Studio users: If you have not used Looker Studio before, you will be asked to authorize Looker Studio and agree to the terms and conditions. Complete the authorization process. When you first use Looker Studio, you may also see a dialog to update your marketing preferences. Sign up for Product announcements if you want to know about the latest features, updates, and product announcements by email.

Once loaded, you will see a prompt to authorize your connector.

Click Authorize and provide the required authorization to the connector.

Configure the connector

Once the authorization is complete, it will show the configuration screen. Type in "lighthouse" in the text input area and click Connect in the top right.

Confirm the schema

You will see the fields screen. Click Create Report in top right.

Create your dashboard

You will be in the Looker Studio dashboard environment. Click Add to Report.

In Looker Studio, every time a user accesses a connector and adds a new configuration, a new data source is created in the user's Looker Studio account. You can think of a data source as an instantiation of the connector based on a specific configuration. Based on the connector and the configuration the user had selected, a data source will return a data table with a specific set of fields. Users can create multiple data sources from the same connector. A data source can be used in multiple reports, and the same report can use multiple data sources.

Now add a Time Series Chart! In the menu, click Insert > Time Series. Then place the time series in the canvas. You should see a time series chart of the npm download count for the selected package. Add a date filter control and view the dashboard as shown below.

That's it! You just created your first community connector! This brings you to the end of this codelab. Now, let's see what next steps you can take.

14. Next steps

Improve the connector your built

Make improvements to the connector you just built:

- In Looker Studio, if you do not provide a package name in the configuration screen for your connector, you will see an error message when you draw the time series chart. Try adding input validation or a default option to your connector config.

- Try adding support for querying multiple package names at the same time in your connector config. Hint: The npm package download counts API supports input of multiple package names separated by comma.

- You can find solutions to both of these in our npm connector code.

Do more with Community Connectors

- View the reference for the connector API and the manifest.

- Explore example connector code in our Open Source Repository to understand best practices.

- Complete the clasp Codelab so that you can develop Community Connectors in your local environment.

- Once you have built a complete Community Connector, consider the publishing options available.

- Build a Community Visualization for Looker studio.

Additional resources

Below are various resources you can access to help you dig into the material covered in this codelab more.

Resource Type | User Features | Developer Features | |

Documentation | |||

News & Updates | Sign up in Looker Studio > User Settings | ||

Ask Questions | |||

Videos | |||

Examples | |||