1. Introduction

Last Updated: 2020-05-16

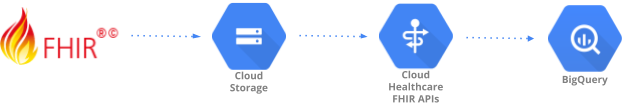

This codelab demonstrates a pattern for ingesting FHIR R4 formatted healthcare data (Regular Resources) into BigQuery using the Cloud Healthcare FHIR APIs. Realistic healthcare test data has been generated and made available for you in the Google Cloud Storage bucket gs://hcls_testing_data_fhir_10_patients/.

In this codelab you will learn:

- How to import FHIR R4 resources from GCS into Cloud Healthcare FHIR Store.

- How to export FHIR data from FHIR Store to a Dataset in BigQuery.

What do you need to run this demo?

- You need access to a GCP Project.

- You must be assigned the Owner role on the GCP Project.

- FHIR R4 resources in NDJSON format (content-structure=RESOURCE)

If you don't have a GCP Project, follow these steps to create a new GCP Project.

FHIR R4 resources in NDJSON format have been pre-loaded into a GCS bucket at the following location:

- gs://hcls_testing_data_fhir_10_patients/fhir_r4_ndjson/ - Regular Resources

The resources above use the newline-delimited JSON (NDJSON) file format with the following content structure:

- Regular Resources in NDJSON format: each line in the file contains one core FHIR resource in JSON format (such as Patient or Observation). Each NDJSON file contains FHIR resources of a single resource type. For example, Patient.ndjson contains one or more FHIR resources with resourceType = 'Patient', and Observation.ndjson contains one or more FHIR resources with resourceType = 'Observation'.
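To make the file layout concrete, here is a minimal sketch that builds a tiny NDJSON file and checks the two properties described above: one resource per line, and a single resource type per file. The sample content and the temporary path are illustrative assumptions, not data taken from the codelab bucket.

```shell
# Hypothetical two-resource sample; real Patient resources carry many more fields.
tmp_dir=$(mktemp -d)
cat > "$tmp_dir/Patient.ndjson" <<'EOF'
{"resourceType":"Patient","id":"example-1","gender":"female"}
{"resourceType":"Patient","id":"example-2","gender":"male"}
EOF

# Each line holds one resource, so the line count equals the resource count.
resource_count=$(wc -l < "$tmp_dir/Patient.ndjson" | tr -d ' ')

# Count lines whose resourceType is not Patient; a well-formed Patient.ndjson has none.
# grep exits non-zero when it selects no lines, hence the "|| true".
mismatch_count=$(grep -vc '"resourceType":"Patient"' "$tmp_dir/Patient.ndjson" || true)

echo "resources=$resource_count mismatches=$mismatch_count"
```

The grep pattern assumes compact JSON without spaces around the colon, which is how the sample is written; files formatted differently would need a JSON-aware tool.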

If you need a new dataset, you can always generate it using Synthea™. Then, upload it to GCS instead of using the bucket provided in this codelab.

2. Project Setup

Follow these steps to enable Healthcare API and grant required permissions:

Initialize shell variables for your environment

To find the PROJECT_NUMBER and PROJECT_ID, refer to Identifying projects.

export PROJECT_ID=<PROJECT_ID>
export PROJECT_NUMBER=<PROJECT_NUMBER>
export SRC_BUCKET_NAME=hcls_testing_data_fhir_10_patients
export BUCKET_NAME=<BUCKET_NAME>
export DATASET_ID=<DATASET_ID>
export FHIR_STORE=<FHIR_STORE>
export BQ_DATASET=<BQ_DATASET>
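Every later command depends on these variables, so it can help to confirm they are all set before continuing. The following is a minimal sketch; `check_vars` is a hypothetical helper introduced here for illustration and is not part of the codelab or of any Google Cloud tool.

```shell
# check_vars (hypothetical helper): succeeds only if every named variable is
# set and non-empty; otherwise prints the missing names to stderr.
check_vars() {
  missing=""
  for name in "$@"; do
    # Look up the variable whose name is stored in $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "Missing variables:$missing" >&2
    return 1
  fi
}

check_vars PROJECT_ID PROJECT_NUMBER SRC_BUCKET_NAME BUCKET_NAME \
  DATASET_ID FHIR_STORE BQ_DATASET \
  || echo "Set the variables listed above before continuing."
```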

Enable Healthcare API

The following steps enable the Healthcare API in your GCP Project and add the Healthcare API service account to the project.

- Go to the GCP Console API Library.

- From the projects list, select your project.

- In the API Library, select the API you want to enable. If you need help finding the API, use the search field and the filters.

- On the API page, click ENABLE.

Get access to the synthetic dataset

- From the email address you are using to login to Cloud Console, send an email to hcls-solutions-external+subscribe@google.com requesting to join.

- You will receive an email with instructions on how to confirm the action.

- Use the option to respond to the email to join the group.

- DO NOT click the button. It doesn't work.

- Once you receive the confirmation email, you can proceed to the next step in the codelab.

Create a Google Cloud Storage bucket in your GCP Project

gsutil mb gs://$BUCKET_NAME

Copy synthetic data to your GCP Project

gsutil -m cp gs://$SRC_BUCKET_NAME/fhir_r4_ndjson/**.ndjson \
  gs://$BUCKET_NAME/fhir_r4_ndjson/

Grant Permissions

Before importing FHIR resources from Cloud Storage and exporting to BigQuery, you must grant additional permissions to the Cloud Healthcare Service Agent service account. For more information, see FHIR store Cloud Storage and FHIR store BigQuery permissions.

Grant Storage Object Viewer Permission

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member=serviceAccount:service-$PROJECT_NUMBER@gcp-sa-healthcare.iam.gserviceaccount.com \
  --role=roles/storage.objectViewer

Grant BigQuery Admin Permissions

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member=serviceAccount:service-$PROJECT_NUMBER@gcp-sa-healthcare.iam.gserviceaccount.com \
  --role=roles/bigquery.admin
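Both commands above pass the same `--member` value, built from the project number. As a small sketch, the value can be assembled once and reused. The names `HEALTHCARE_SA` and `MEMBER` are hypothetical, and the fallback project number is a placeholder used only so the snippet runs on its own.

```shell
# Placeholder fallback so the snippet runs standalone; use your real project number.
PROJECT_NUMBER="${PROJECT_NUMBER:-123456789012}"

# Cloud Healthcare Service Agent address, following the pattern used in the commands above.
HEALTHCARE_SA="service-${PROJECT_NUMBER}@gcp-sa-healthcare.iam.gserviceaccount.com"

# Value to pass as --member in each add-iam-policy-binding call.
MEMBER="serviceAccount:${HEALTHCARE_SA}"

echo "$MEMBER"
```

With this in place, each binding command could use `--member="$MEMBER"`, which avoids retyping the address and keeps the two grants consistent.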

3. Environment Setup

Follow these steps to ingest data from NDJSON files into a healthcare dataset in BigQuery using the Cloud Healthcare FHIR APIs:

Create Healthcare Dataset and FHIR Store

Create Healthcare dataset using Cloud Healthcare APIs

gcloud beta healthcare datasets create $DATASET_ID --location=us-central1

Create FHIR Store in dataset using Cloud Healthcare APIs

gcloud beta healthcare fhir-stores create $FHIR_STORE \
  --dataset=$DATASET_ID --location=us-central1 --version=r4

4. Import data into FHIR Store

Import test data from Google Cloud Storage to FHIR Store.

We will use preloaded files from GCS Bucket. These files contain FHIR R4 regular resources in the NDJSON format. As a response, you will get OPERATION_NUMBER, which can be used in the validation step.

Import Regular Resources from the GCS Bucket in your GCP Project

gcloud beta healthcare fhir-stores import gcs $FHIR_STORE \
  --dataset=$DATASET_ID --async \
  --gcs-uri=gs://$BUCKET_NAME/fhir_r4_ndjson/**.ndjson \
  --location=us-central1 --content-structure=RESOURCE

Validate

Validate that the operation finished successfully. The operation might take a few minutes to finish, so you might need to repeat this command a few times with some delay in between.

gcloud beta healthcare operations describe OPERATION_NUMBER \
  --dataset=$DATASET_ID --location=us-central1
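Instead of rerunning the describe command by hand, the retry can be scripted. The sketch below defines `wait_until_done`, a hypothetical helper that reruns any command until its output contains `done: true`. It assumes the describe command prints that line once the operation has finished, so check the output of a manual run before relying on it.

```shell
# wait_until_done (hypothetical helper)
# Usage: wait_until_done MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Reruns COMMAND until its output contains "done: true" (assumed completion
# marker) or the attempts run out. Returns 0 on completion, 1 on timeout.
wait_until_done() {
  max_attempts=$1
  delay_seconds=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    if "$@" 2>/dev/null | grep -q 'done: true'; then
      return 0
    fi
    sleep "$delay_seconds"
    attempt=$((attempt + 1))
  done
  return 1
}

# Example call (left commented out because it needs gcloud and a real operation):
# wait_until_done 20 15 gcloud beta healthcare operations describe OPERATION_NUMBER \
#   --dataset=$DATASET_ID --location=us-central1
```

The helper only detects that the operation finished. It does not tell success from failure, so the final describe output still needs to be inspected for errors.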

5. Export data from FHIR Store to BigQuery

Create a BigQuery Dataset

bq mk --location=us --dataset $PROJECT_ID:$BQ_DATASET

Export healthcare data from FHIR Store to BigQuery Dataset

gcloud beta healthcare fhir-stores export bq $FHIR_STORE \
  --dataset=$DATASET_ID --location=us-central1 --async \
  --bq-dataset=bq://$PROJECT_ID.$BQ_DATASET \
  --schema-type=analytics

As a response, you will get OPERATION_NUMBER, which can be used in the validation step.

Validate

Validate that the operation finished successfully

gcloud beta healthcare operations describe OPERATION_NUMBER \
  --dataset=$DATASET_ID --location=us-central1

Validate that the BigQuery dataset has all 16 tables

bq ls $PROJECT_ID:$BQ_DATASET

6. Clean up

To avoid incurring charges to your Google Cloud Platform account for the resources used in this tutorial, you can clean up the resources that you created on GCP so they won't take up your quota, and you won't be billed for them in the future. The following sections describe how to delete or turn off these resources.

Delete the project

The easiest way to eliminate billing is to delete the project that you created for the tutorial.

To delete the project:

- In the GCP Console, go to the Projects page.

- In the project list, select the project you want to delete and click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.

If you need to keep the project, you can delete the Cloud healthcare dataset and BigQuery dataset using the following instructions.

Delete the Cloud Healthcare API dataset

Follow the steps to delete Healthcare API dataset using both GCP Console and gcloud CLI.

Quick CLI command:

gcloud beta healthcare datasets delete $DATASET_ID --location=us-central1

Delete the BigQuery dataset

Follow the steps to delete BigQuery dataset using different interfaces.

Quick CLI command:

bq rm -r -f $PROJECT_ID:$BQ_DATASET

7. Congratulations

Congratulations, you've successfully completed the code lab to ingest healthcare data in BigQuery using Cloud Healthcare APIs.

You imported FHIR R4 compliant synthetic data from Google Cloud Storage into the Cloud Healthcare FHIR APIs.

You exported data from the Cloud Healthcare FHIR APIs to BigQuery.

You now know the key steps required to start your Healthcare Data Analytics journey with BigQuery on Google Cloud Platform.