1. Overview/Introduction

While multi-tier applications consisting of web, application server and database tiers are foundational to web development and are the starting point for many websites, success often brings challenges around scalability, integration and agility. For example, how can data be handled in real time, and how can it be distributed to multiple key business systems? These issues, coupled with the demands of internet-scale applications, drove the need for distributed messaging systems and gave rise to an architectural pattern of using data pipelines to build resilient, real-time systems. As a result, knowing how to publish real-time data to a distributed messaging system and how to build a data pipeline are crucial skills for developers and architects alike.

What you will build

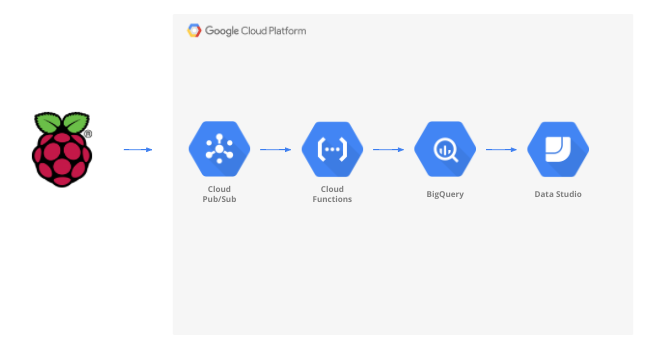

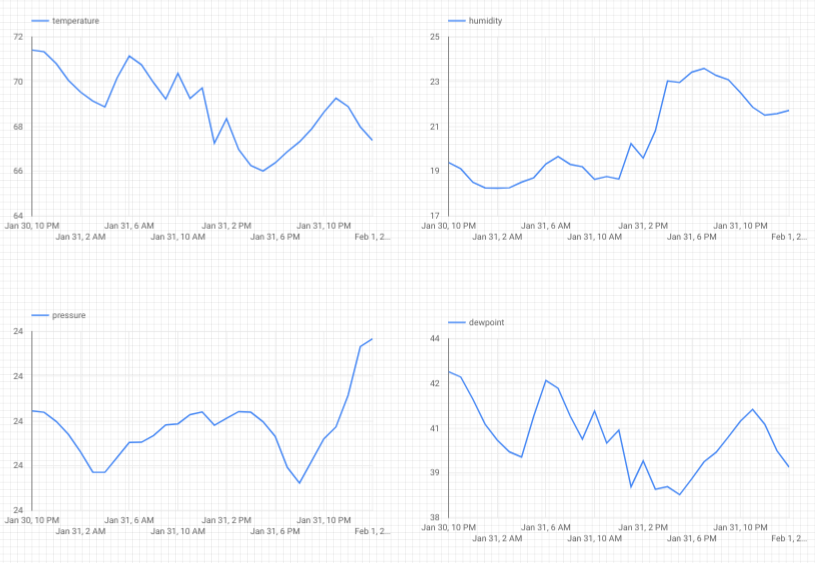

In this codelab, you are going to build a weather data pipeline. It starts with an Internet of Things (IoT) device, uses a message queue to receive and deliver data, uses a serverless function to move the data into a data warehouse and ends with a dashboard that displays the information. A Raspberry Pi with a weather sensor serves as the IoT device, and several components of Google Cloud Platform form the data pipeline. Building the Raspberry Pi, while beneficial, is optional: the streaming weather data can instead be simulated from a public sample dataset.

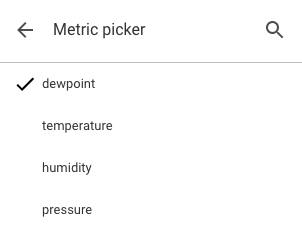

After completing the steps in this codelab, you will have a streaming data pipeline feeding a dashboard that displays temperature, humidity, dewpoint and air pressure.

What you'll learn

- How to use Google Pub/Sub

- How to deploy a Google Cloud Function

- How to leverage Google BigQuery

- How to create a dashboard using Google Data Studio

- In addition, if you build out the IoT sensor, you'll also learn how to utilize the Google Cloud SDK and how to secure remote access calls to the Google Cloud Platform

What you'll need

- A Google Cloud Platform account. New users of Google Cloud Platform are eligible for a $300 free trial.

If you want to build the IoT sensor portion of this codelab instead of using simulated data, you'll also need the following (which can be ordered as a complete kit or as individual parts here):

- Raspberry Pi Zero W with power supply, SD memory card and case

- USB card reader

- USB hub (to allow for connecting a keyboard and mouse into the sole USB port on the Raspberry Pi)

- Female-to-female breadboard wires

- GPIO Hammer Headers

- BME280 sensor

- Soldering iron with solder

In addition, having access to a computer monitor or TV with HDMI input, HDMI cable, keyboard and a mouse is assumed.

2. Getting set up

Self-paced environment setup

If you don't already have a Google account (Gmail or G Suite), you must create one. Regardless of whether you already have a Google account or not, make sure to take advantage of the $300 free trial!

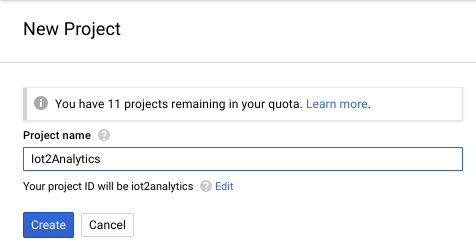

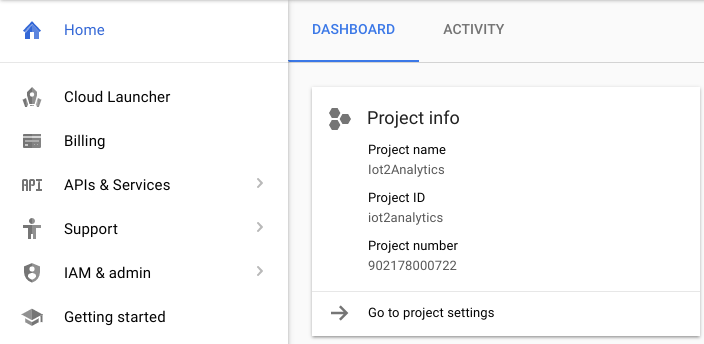

Sign in to the Google Cloud Platform console (console.cloud.google.com). You can use the default project ("My First Project") for this lab or create a new project. If you'd like to create a new project, you can use the Manage resources page. The project ID must be unique across all Google Cloud projects (the one shown below has already been taken and won't work for you). Take note of your project ID, as it will be needed later.
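The console rejects project IDs that break Google Cloud's naming rules. The Python 3 sketch below is a rough pre-check of the basic format: 6 to 30 characters of lowercase letters, digits and hyphens, starting with a letter and not ending with a hyphen. It does not check availability or reserved words, which only the console can confirm. The candidate IDs are made up.

```python
import re

# Basic format rules for project IDs: 6 to 30 characters made of lowercase
# letters, digits and hyphens, starting with a letter and not ending with a hyphen.
PROJECT_ID_PATTERN = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")


def is_valid_project_id(project_id: str) -> bool:
    """Check the format only; uniqueness is confirmed when the project is created."""
    return PROJECT_ID_PATTERN.fullmatch(project_id) is not None


for candidate in ["iot-weather-pipeline", "My-First-Project", "abc", "weather-"]:
    print(f"{candidate}: {is_valid_project_id(candidate)}")
```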

Running through this codelab shouldn't cost more than a few dollars, but it could be more if you decide to use more resources or if you leave them running. Make sure to go through the Cleanup section at the end of the codelab.

3. Create a BigQuery table

BigQuery is a serverless, highly scalable, low-cost enterprise data warehouse. It is an ideal place to store data streamed from IoT devices, and an analytics dashboard can query the information directly.

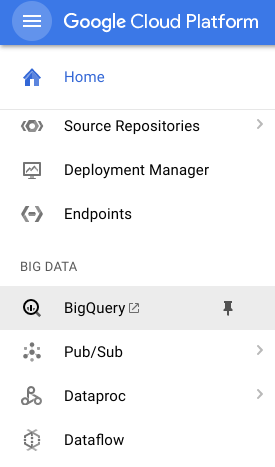

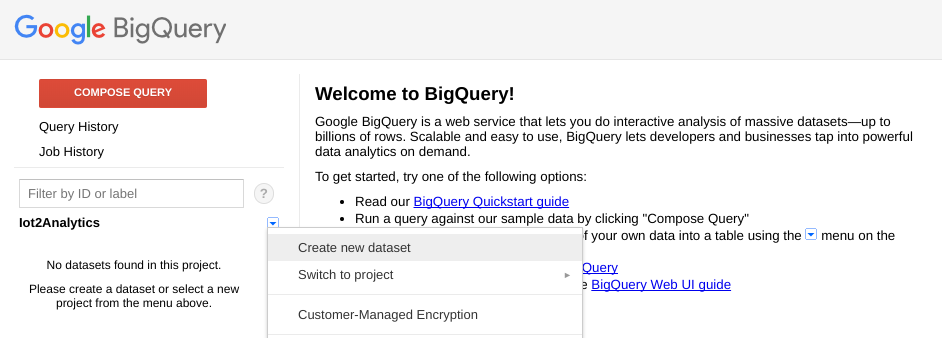

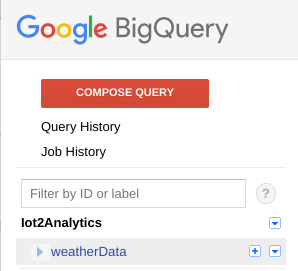

Let's create a table that will hold all of the IoT weather data. Select BigQuery from the Cloud console. This will open BigQuery in a new window (don't close the original window as you'll need to access it again).

Click on the down arrow icon next to your project name and then select "Create new dataset"

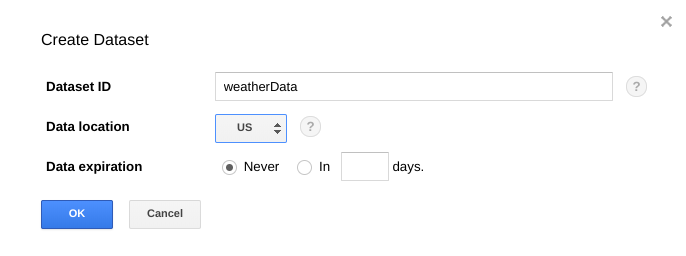

Enter "weatherData" for the Dataset, select a location where it will be stored and click "OK"

Click the "+" sign next to your Dataset to create a new table

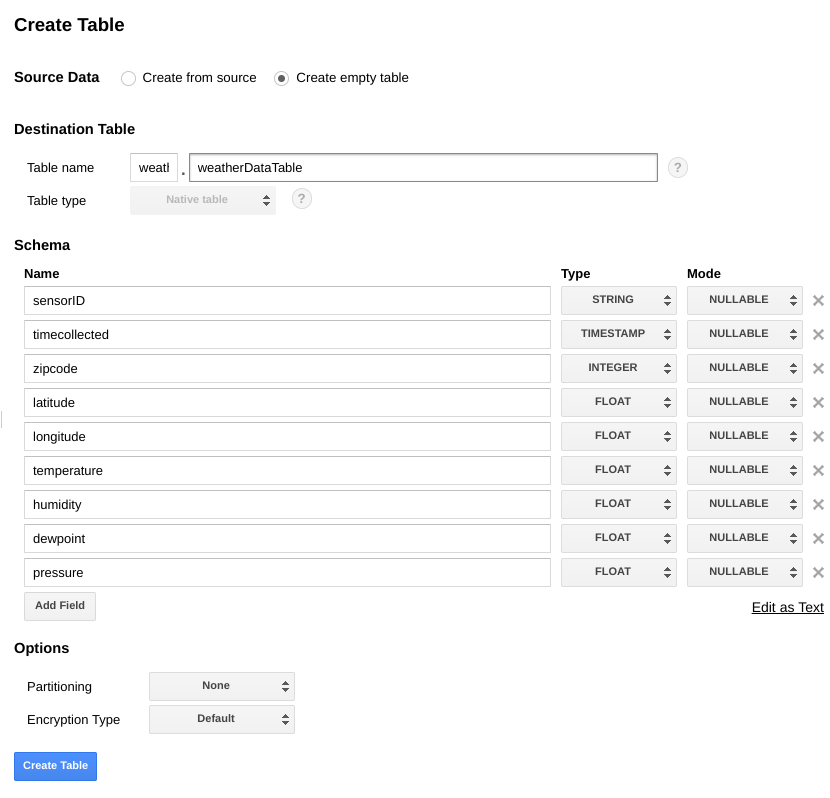

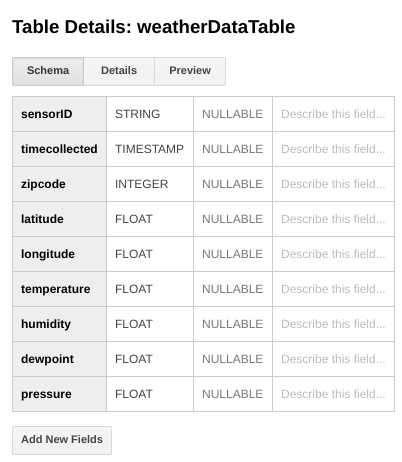

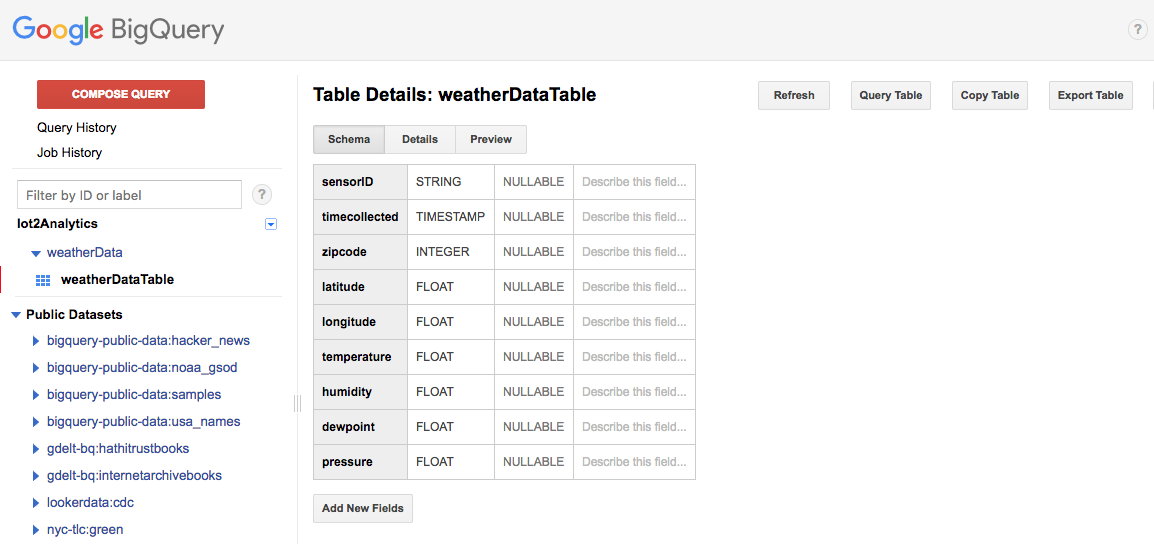

For Source Data, select Create empty table. For Destination table name, enter weatherDataTable. Under Schema, click the Add Field button until there are a total of 9 fields. Fill in the fields as shown below making sure to also select the appropriate Type for each field. When everything is complete, click on the Create Table button.

You should see a result like this...

You now have a data warehouse set up to receive your weather data.
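The nine fields can also be written down as data. In the Python 3 sketch below, the field names come from the placeholder record in the Cloud Function later in this codelab. The types are an assumption inferred from the Data Studio step, where everything is numeric except sensorID and timecollected, so check them against the schema in the screenshot. Saved to a file, the printed JSON list uses the schema format that the bq command-line tool accepts.

```python
import json

# Assumed schema for weatherDataTable: names from the Cloud Function's placeholder
# record, types inferred (not confirmed) from the Data Studio configuration step.
WEATHER_SCHEMA = [
    {"name": "sensorID", "type": "STRING"},
    {"name": "timecollected", "type": "TIMESTAMP"},
    {"name": "zipcode", "type": "INTEGER"},
    {"name": "latitude", "type": "FLOAT"},
    {"name": "longitude", "type": "FLOAT"},
    {"name": "temperature", "type": "FLOAT"},
    {"name": "humidity", "type": "FLOAT"},
    {"name": "dewpoint", "type": "FLOAT"},
    {"name": "pressure", "type": "FLOAT"},
]


def missing_fields(row: dict) -> list:
    """Return schema fields absent from a row, in schema order."""
    return [field["name"] for field in WEATHER_SCHEMA if field["name"] not in row]


def unknown_fields(row: dict) -> list:
    """Return row keys the table does not define (streaming inserts reject these by default)."""
    known = {field["name"] for field in WEATHER_SCHEMA}
    return sorted(key for key in row if key not in known)


print(json.dumps(WEATHER_SCHEMA, indent=2))
```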

4. Create a Pub/Sub topic

Cloud Pub/Sub is a simple, reliable, scalable foundation for stream analytics and event-driven computing systems. As a result, it is perfect for handling incoming IoT messages and then allowing downstream systems to process them.

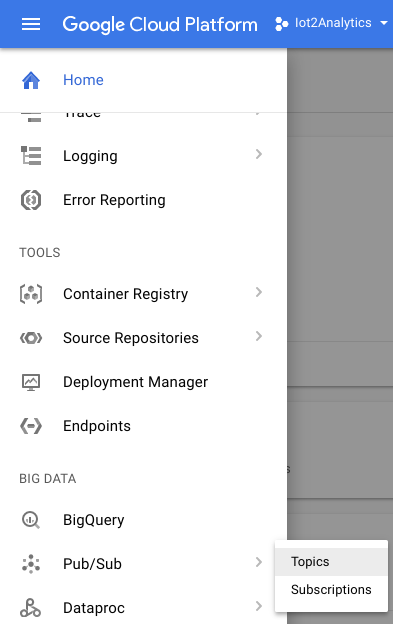

If you are still in the window for BigQuery, switch back to the Cloud Console. If you closed the Cloud Console, go to https://console.cloud.google.com

From the Cloud Console, select Pub/Sub and then Topics.

If you see an Enable API prompt, click the Enable API button.

Click on the Create a topic button

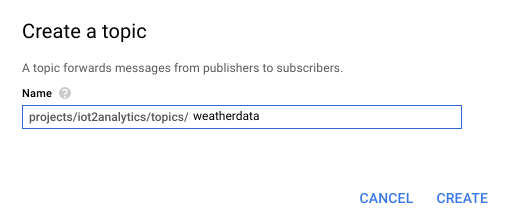

Enter "weatherdata" as the topic name and then click Create

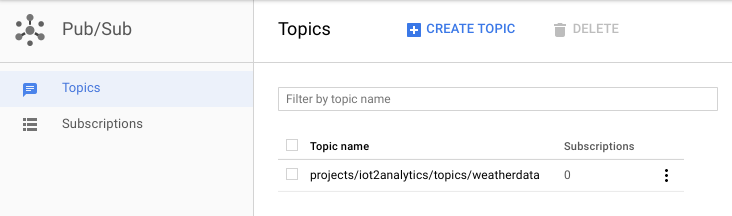

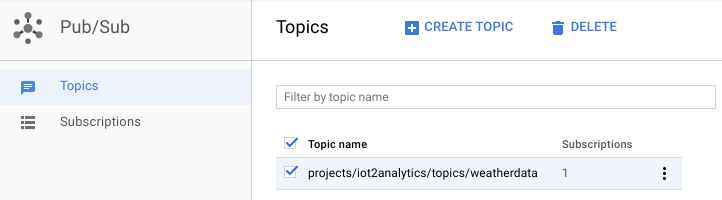

You should see the newly created topic

You now have a Pub/Sub topic to both publish IoT messages to and to allow other processes to access those messages.
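Any client with publish rights can now send messages to the topic. The Python 3 sketch below shows the two pieces every publisher needs: the fully qualified topic name and a payload encoded as bytes, here UTF-8 JSON. It assumes the google-cloud-pubsub package, which is installed on the Raspberry Pi later in this codelab, and credentials with the Pub/Sub Publisher role. The import sits inside publish_reading so that the pure helpers work without the package.

```python
import json


def topic_path(project_id: str, topic_name: str) -> str:
    """Fully qualified topic name, e.g. projects/my-project/topics/weatherdata."""
    return f"projects/{project_id}/topics/{topic_name}"


def encode_reading(reading: dict) -> bytes:
    """Pub/Sub payloads are raw bytes; this sketch sends UTF-8 encoded JSON."""
    return json.dumps(reading).encode("utf-8")


def publish_reading(project_id: str, topic_name: str, reading: dict) -> str:
    """Publish one reading and return the server-assigned message ID.

    Requires google-cloud-pubsub and credentials allowed to publish to the topic.
    """
    from google.cloud import pubsub_v1  # lazy import: only needed when actually publishing

    publisher = pubsub_v1.PublisherClient()
    future = publisher.publish(topic_path(project_id, topic_name), data=encode_reading(reading))
    return future.result()  # blocks until Pub/Sub acknowledges the message
```

For example, `publish_reading("my-project", "weatherdata", {"sensorID": "s-test", "temperature": 21.5})` would return the message ID. The `my-project` value is a placeholder for your own project ID.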

Secure publishing to the topic

If you plan to publish messages to the Pub/Sub topic from resources outside of Google Cloud (e.g. an IoT sensor), you will need to control access more tightly. Do this by using a service account and authenticating the connection with a private key for that account.

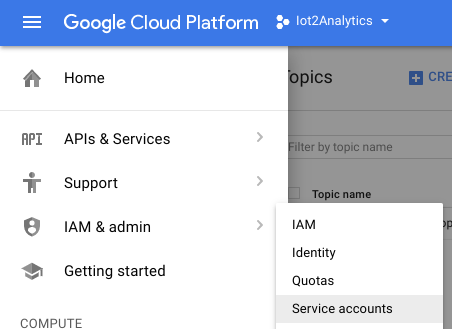

From the Cloud Console, select IAM & Admin and then Service accounts

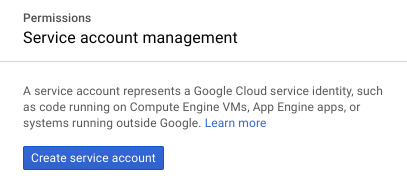

Click on the Create service account button

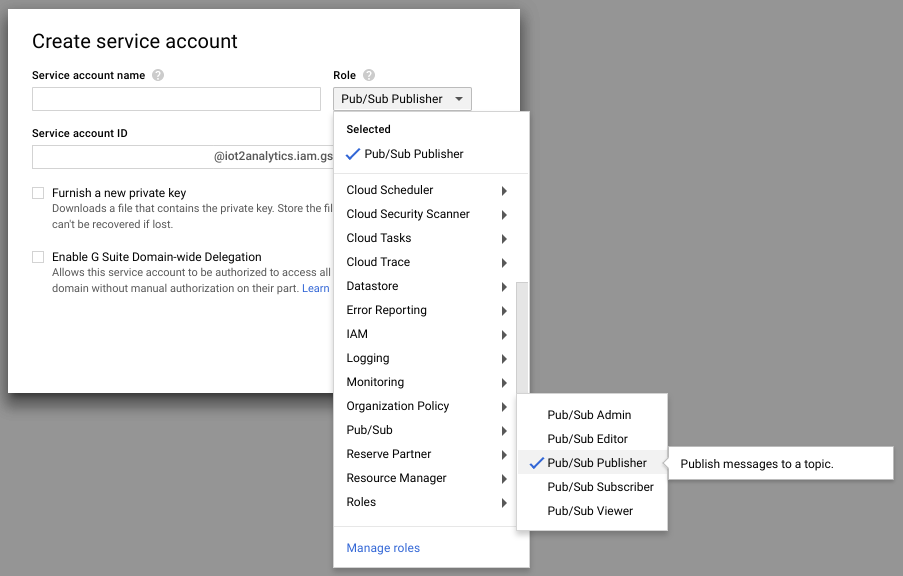

In the Role dropdown, select the Pub/Sub Publisher role

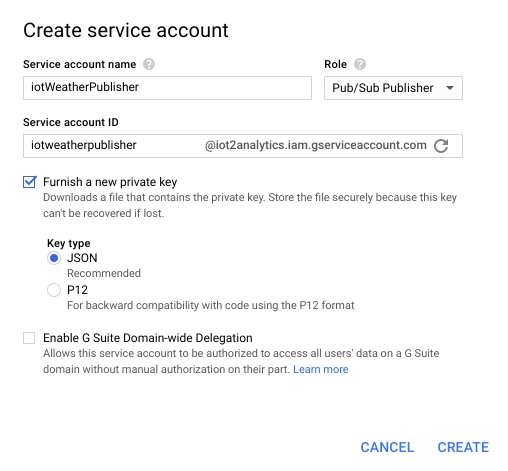

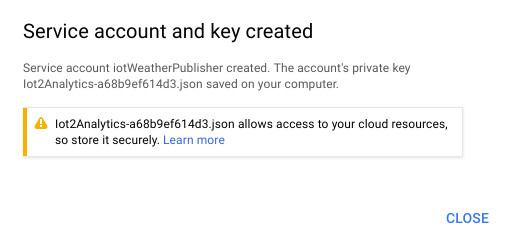

Type in a service account name (iotWeatherPublisher), check the Furnish a new private key checkbox, make sure that Key type is set to JSON and click on "Create"

The security key will download automatically. The key can only be downloaded once, so it is important not to lose it. Click Close.

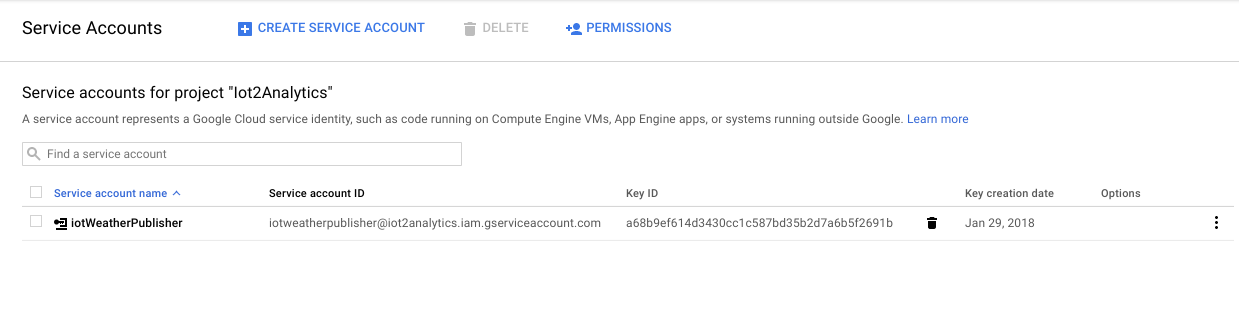

You should see that a service account has been created and that there is a Key ID associated with it.

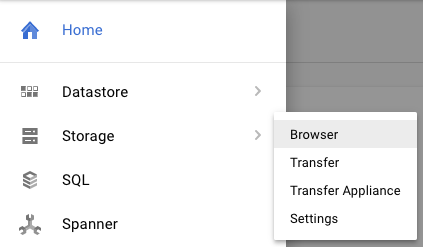

In order to easily access the key later, we will store it in Google Cloud Storage. From the Cloud Console, select Storage and then Browser.

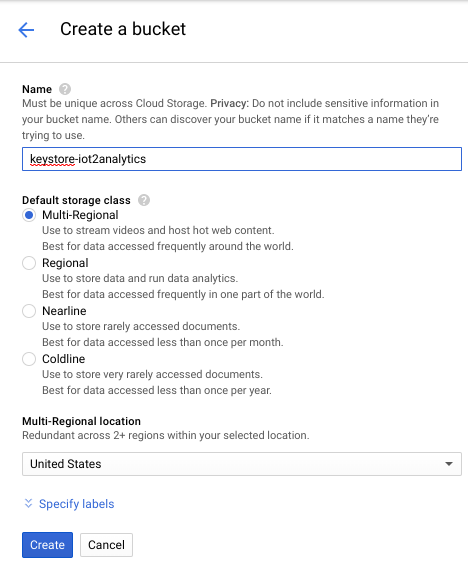

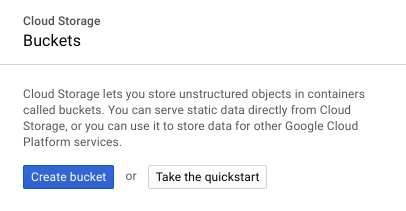

Click the Create Bucket button

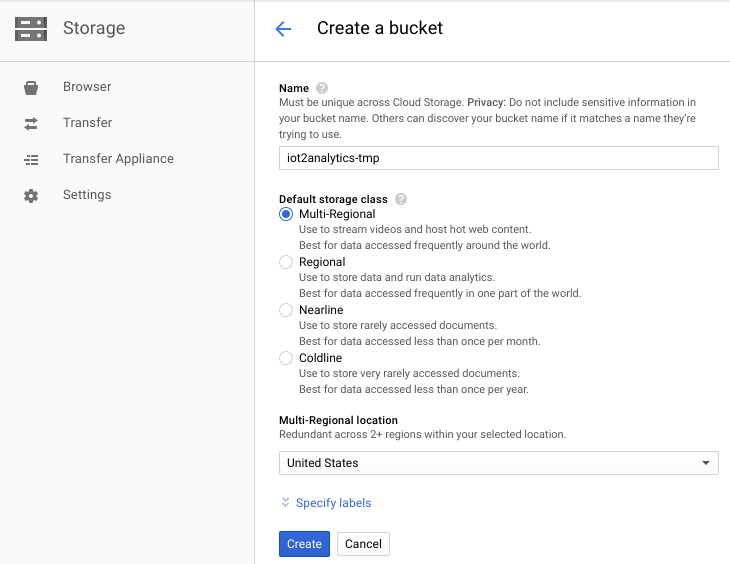

Choose a name for the storage bucket (it must be a name that is globally unique across all of Google Cloud) and click on the Create button

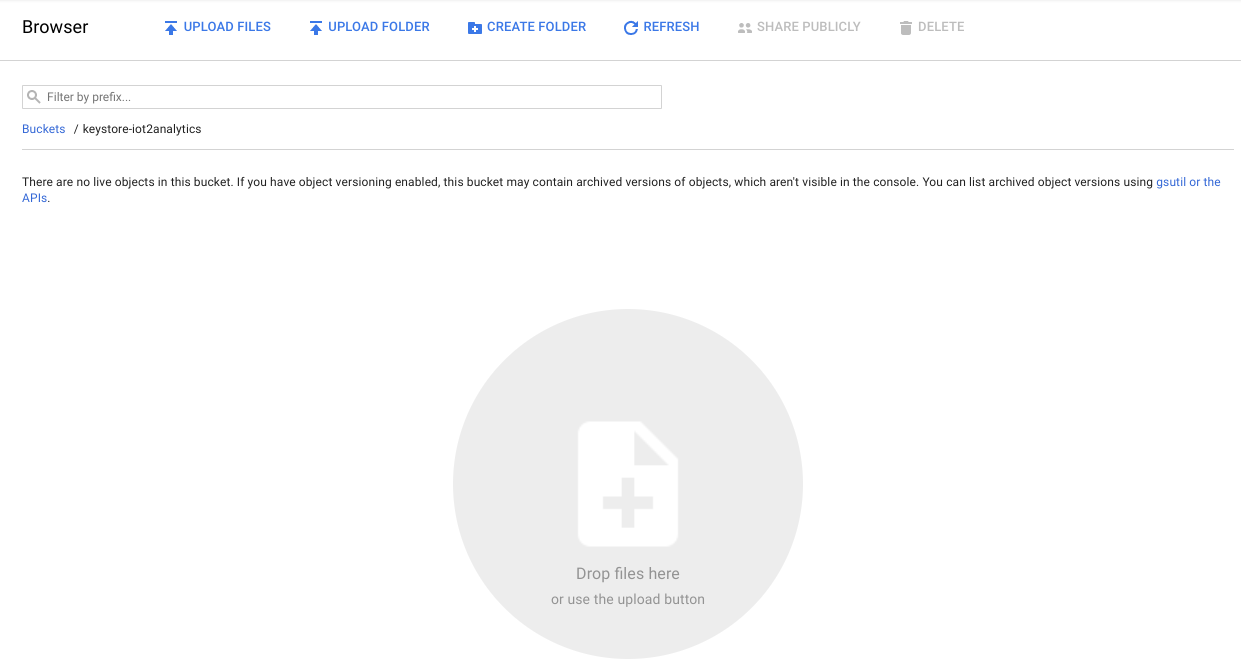

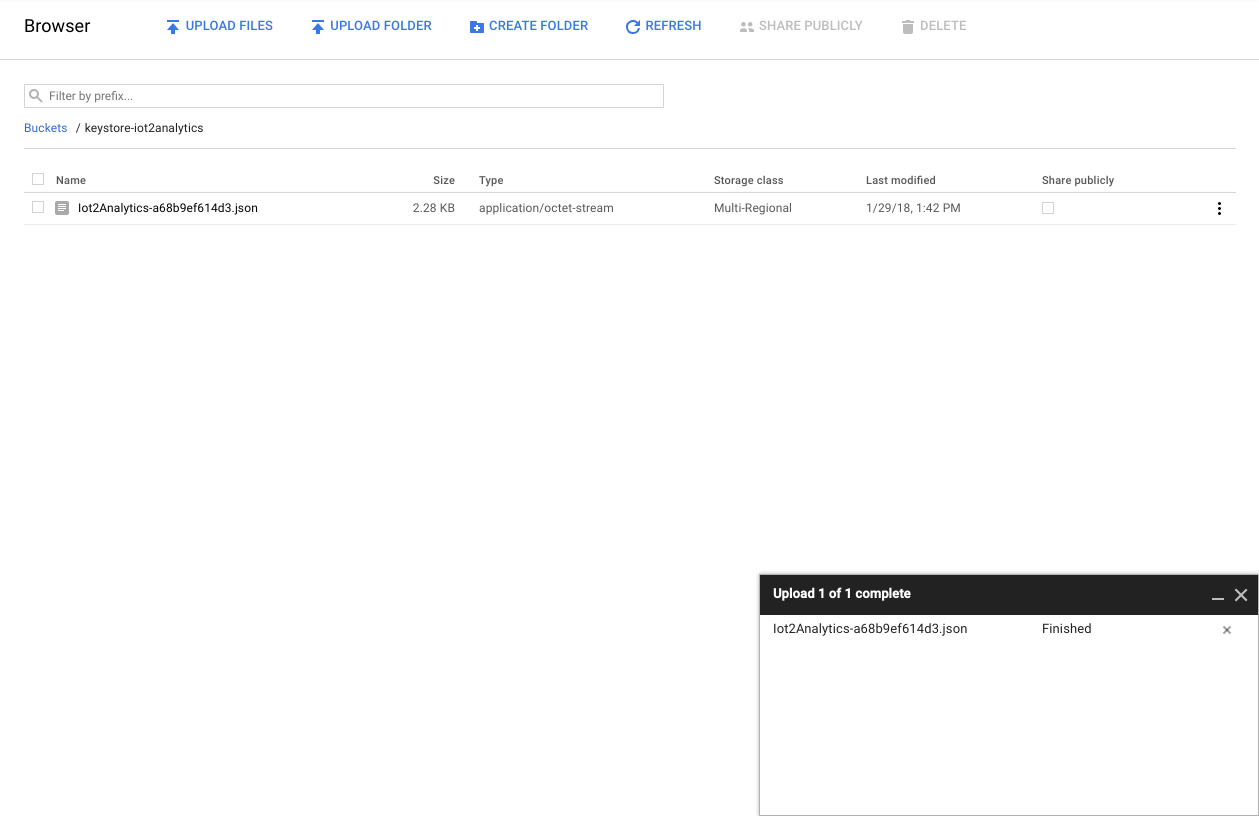

Locate the security key that was automatically downloaded and either drag/drop or upload it into the storage bucket

After the key upload is complete, it should appear in the Cloud Storage browser

Make note of the storage bucket name and the security key file name for later.

5. Create a Cloud Function

Cloud computing has made possible fully serverless models of computing, in which logic is spun up on demand in response to events originating from anywhere. For this lab, a Cloud Function will run each time a message is published to the weatherdata topic, read the message and then store it in BigQuery.

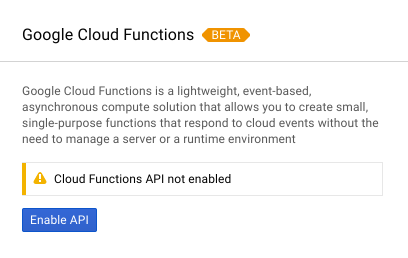

From the Cloud Console, select Cloud Functions

If you see an Enable API prompt, click on the Enable API button

Click on the Create function button

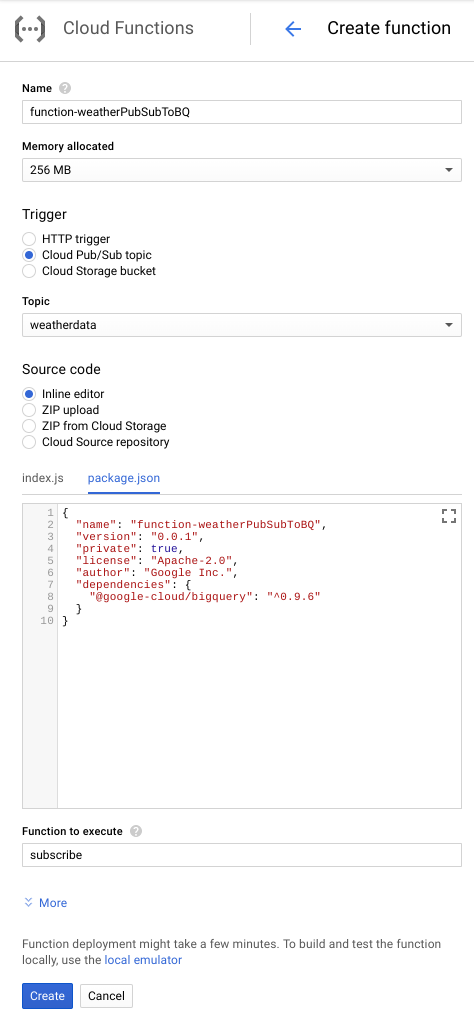

In the Name field, type function-weatherPubSubToBQ. For Trigger select Cloud Pub/Sub topic and in the Topic dropdown select weatherdata. For source code, select inline editor. In the index.js tab, paste the following code over what is there to start with. Make sure to change the constants for projectId, datasetId and tableId to fit your environment.

/**

* Background Cloud Function to be triggered by PubSub.

*

* @param {object} event The Cloud Functions event.

* @param {function} callback The callback function.

*/

exports.subscribe = function (event, callback) {

const BigQuery = require('@google-cloud/bigquery');

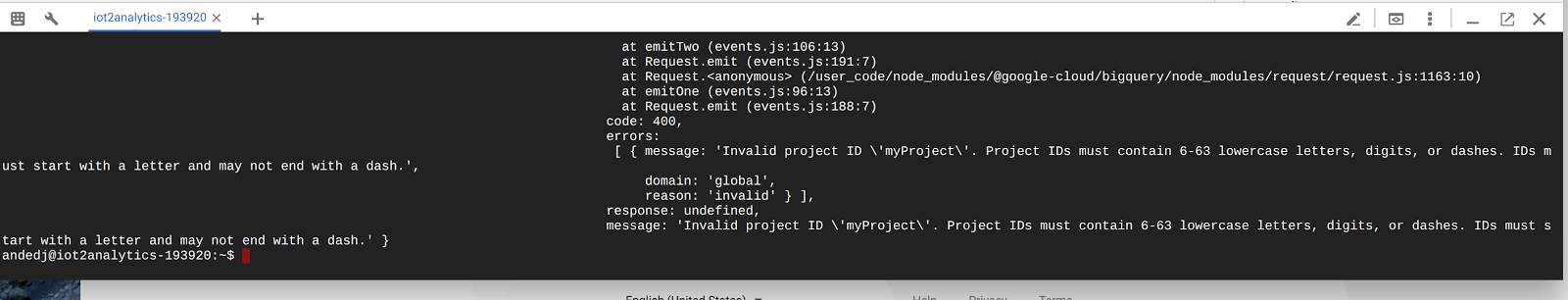

const projectId = "myProject"; //Enter your project ID here

const datasetId = "myDataset"; //Enter your BigQuery dataset name here

const tableId = "myTable"; //Enter your BigQuery table name here -- make sure it is setup correctly

const PubSubMessage = event.data;

// Incoming data is in JSON format

const incomingData = PubSubMessage.data ? Buffer.from(PubSubMessage.data, 'base64').toString() : "{'sensorID':'na','timecollected':'1/1/1970 00:00:00','zipcode':'00000','latitude':'0.0','longitude':'0.0','temperature':'-273','humidity':'-1','dewpoint':'-273','pressure':'0'}";

const jsonData = JSON.parse(incomingData);

var rows = [jsonData];

console.log(`Uploading data: ${JSON.stringify(rows)}`);

// Instantiates a client

const bigquery = BigQuery({

projectId: projectId

});

// Inserts data into a table

bigquery

.dataset(datasetId)

.table(tableId)

.insert(rows)

.then((foundErrors) => {

rows.forEach((row) => console.log('Inserted: ', row));

if (foundErrors && foundErrors.insertErrors != undefined) {

foundErrors.forEach((err) => {

console.log('Error: ', err);

})

}

})

.catch((err) => {

console.error('ERROR:', err);

});

// [END bigquery_insert_stream]

callback();

};

In the package.json tab, paste the following code over the placeholder code that is there

```json
{
  "name": "function-weatherPubSubToBQ",
  "version": "0.0.1",
  "private": true,
  "license": "Apache-2.0",
  "author": "Google Inc.",
  "dependencies": {
    "@google-cloud/bigquery": "^0.9.6"
  }
}
```

If the Function to execute is set to "HelloWorld", change it to "subscribe". Click the Create button

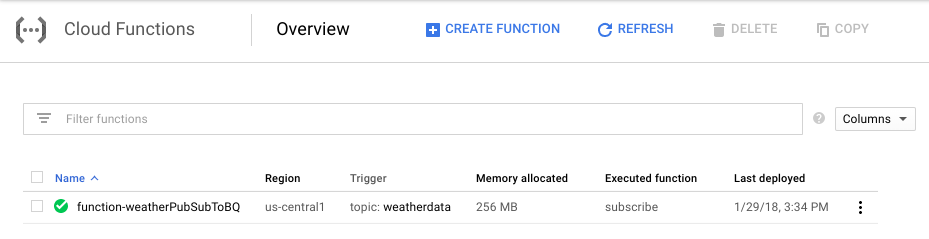

It takes about 2 minutes for your function to show that it has deployed.

Congratulations! You just connected Pub/Sub to BigQuery via Functions.
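You can reproduce the function's decoding step locally to see what it does with each message without deploying anything. The Python 3 sketch below is an illustrative port of the JavaScript logic, not code that runs in Cloud Functions. The sample reading values are made up.

```python
import base64
import json

# Placeholder record the Cloud Function uses when a message has no payload
DEFAULT_RECORD = {
    "sensorID": "na", "timecollected": "1/1/1970 00:00:00", "zipcode": "00000",
    "latitude": "0.0", "longitude": "0.0", "temperature": "-273",
    "humidity": "-1", "dewpoint": "-273", "pressure": "0",
}


def decode_pubsub_message(message: dict) -> dict:
    """Mirror the function: base64-decode message['data'] and parse it as JSON."""
    data = message.get("data")
    if not data:
        return dict(DEFAULT_RECORD)
    return json.loads(base64.b64decode(data).decode("utf-8"))


# Build a message the way Pub/Sub hands it to a background function
sample = {"sensorID": "s-Googleplex", "temperature": 21.4, "humidity": 48.0}
message = {"data": base64.b64encode(json.dumps(sample).encode("utf-8")).decode("ascii")}
print(decode_pubsub_message(message))
```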

6. Setup the IoT hardware (optional)

Assemble the Raspberry Pi and sensor

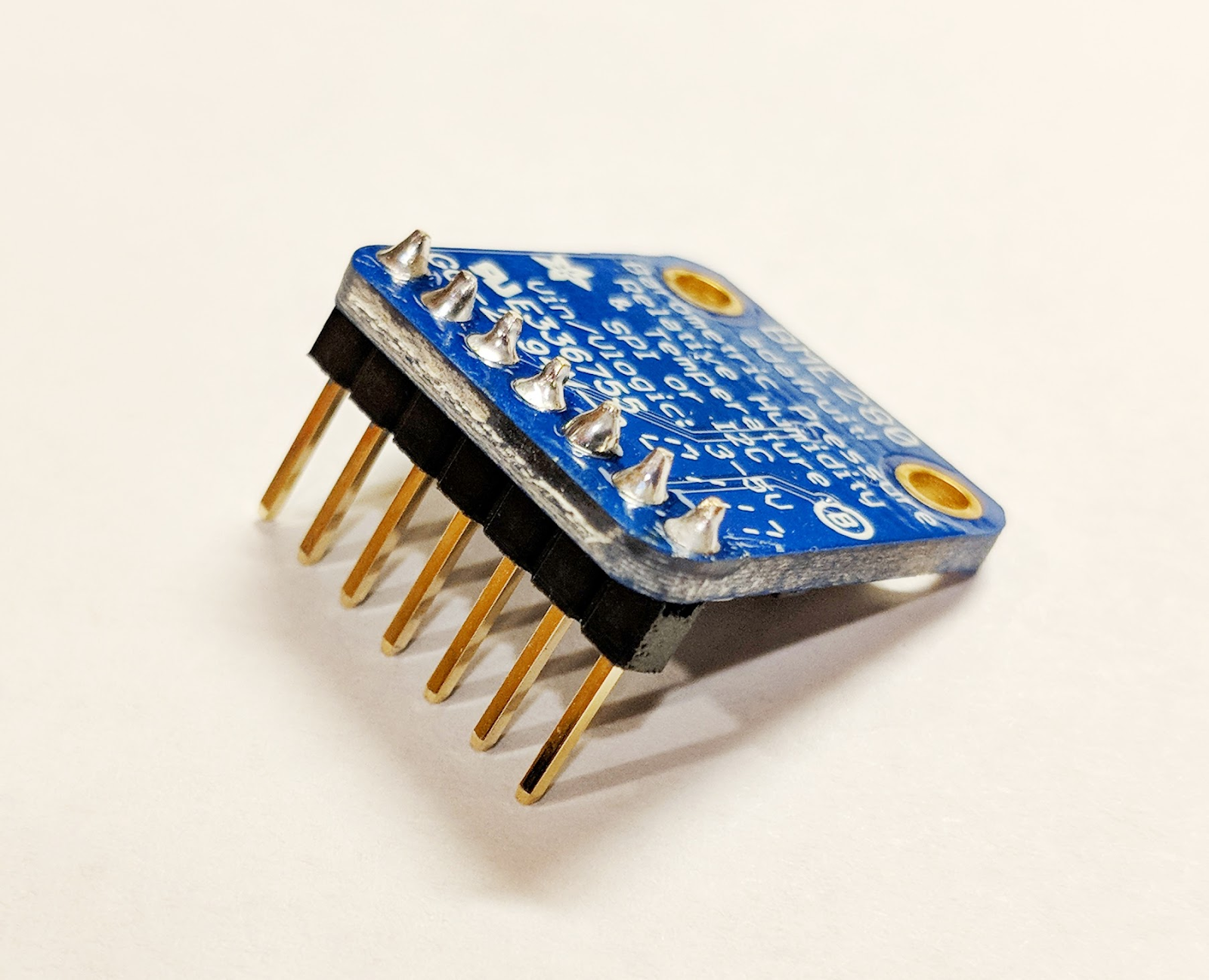

If there are more than 7 pins, trim the header down to only 7 pins. Solder the header pins to the sensor board.

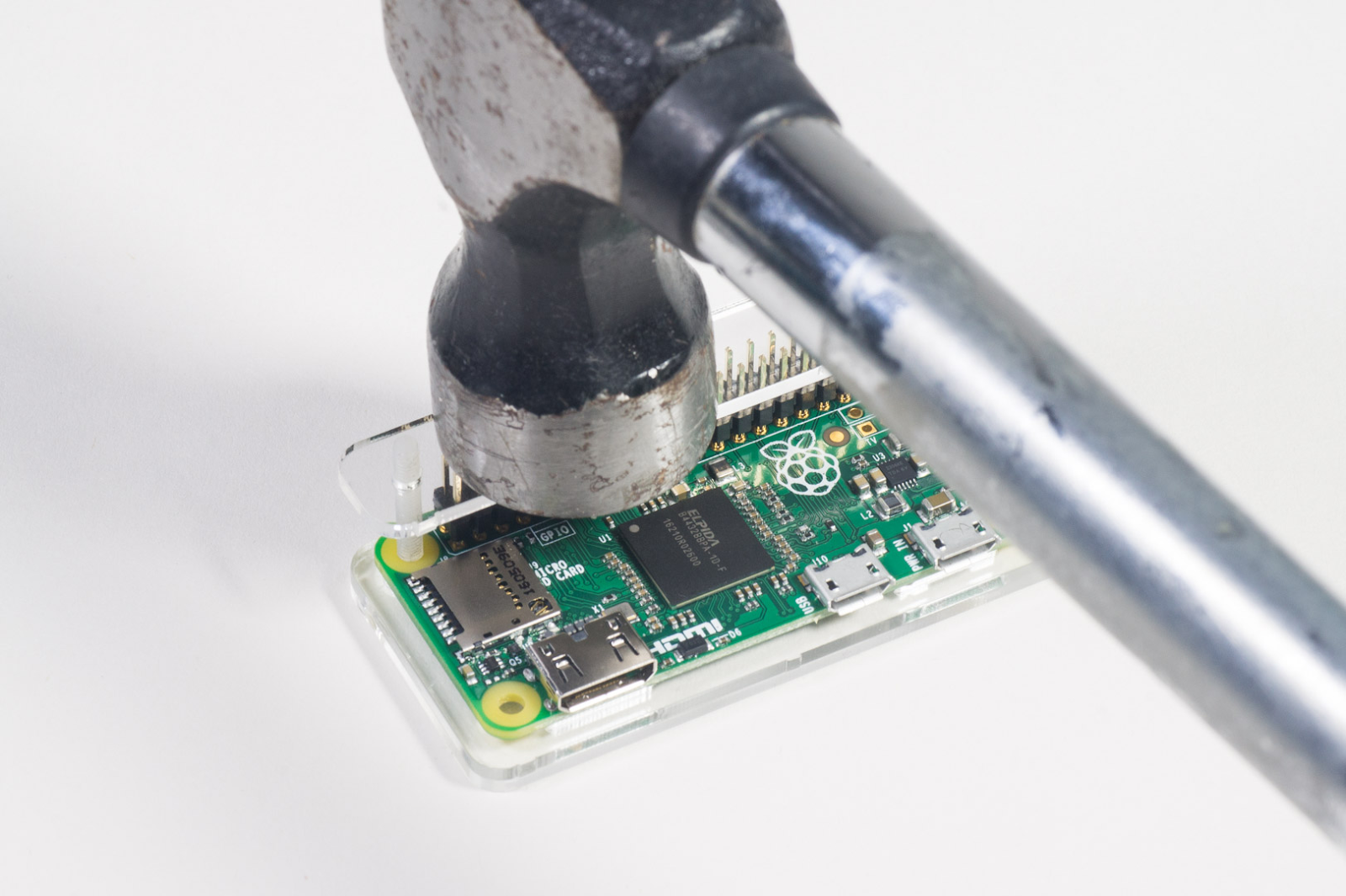

Carefully install the hammer header pins into the Raspberry Pi.

Format the SD card and install the NOOBS (New Out Of Box Software) installer by following the steps here. Insert the SD card into the Raspberry Pi and place the Raspberry Pi into its case.

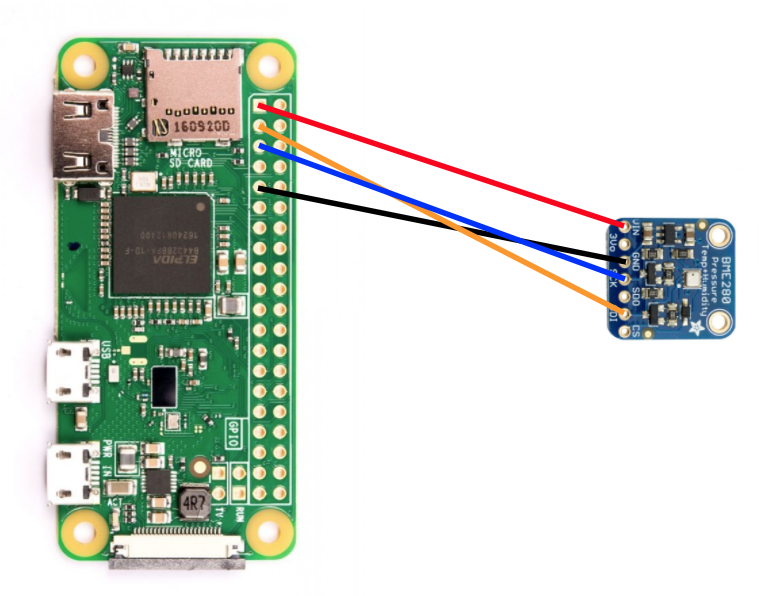

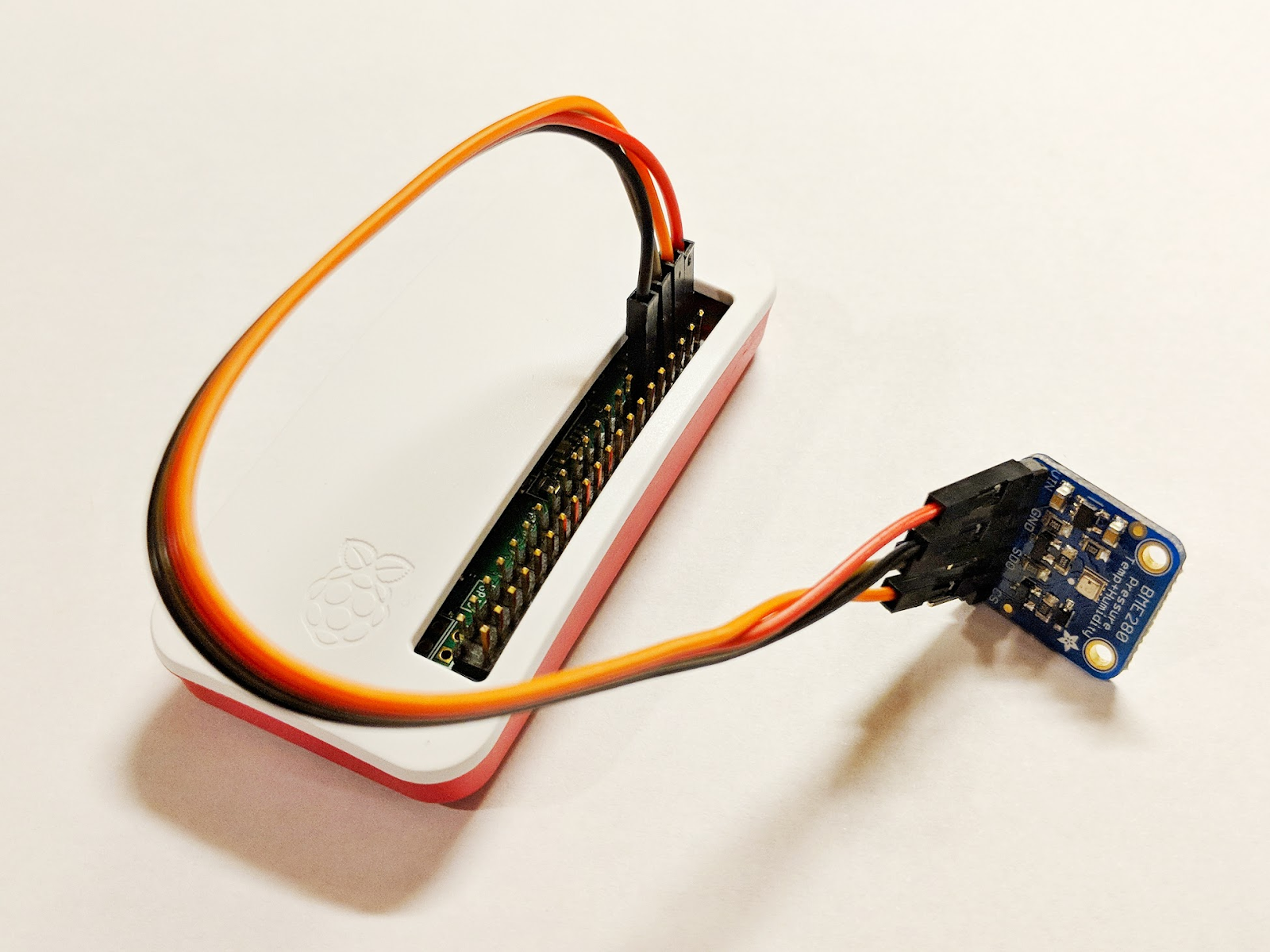

Use the breadboard wires to connect the sensor to the Raspberry Pi according to the diagram below.

| Raspberry Pi pin | Sensor connection |
|---|---|
| Pin 1 (3.3V) | VIN |
| Pin 3 (GPIO2) | SDI |
| Pin 5 (GPIO3) | SCK |
| Pin 9 (Ground) | GND |

Connect the monitor (using the mini-HDMI connector), the keyboard and mouse (through the USB hub) and, finally, the power adapter.

Configure the Raspberry Pi and sensor

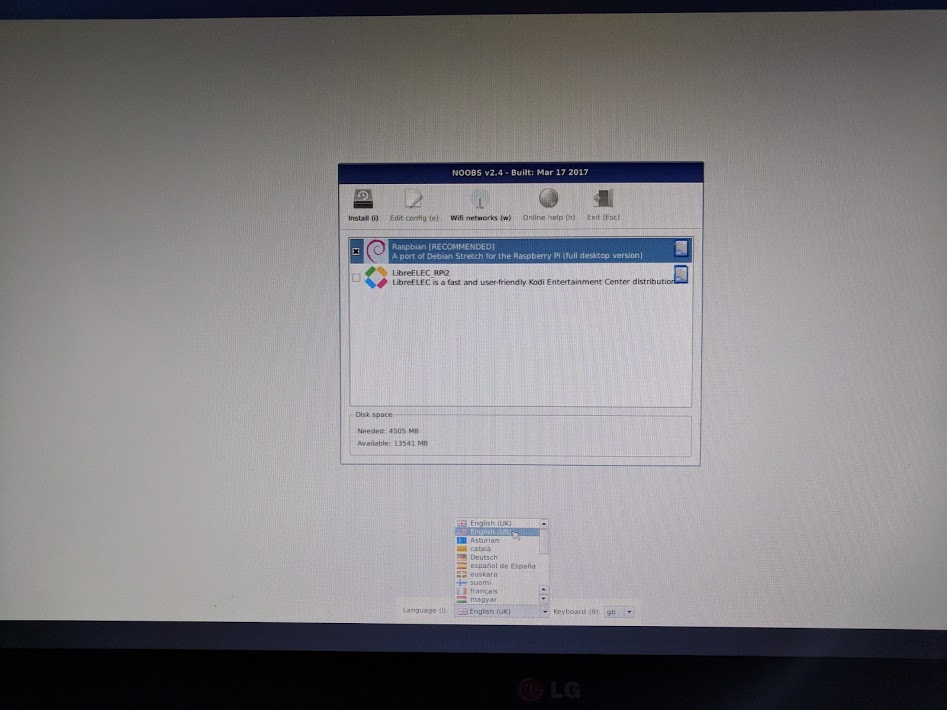

After the Raspberry Pi finishes booting up, select Raspbian for the desired operating system, make certain your desired language is correct and then click on Install (hard drive icon on the upper left portion of the window).

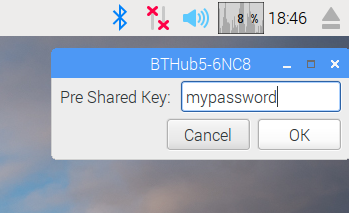

Click on the Wi-Fi icon (top right of the screen) and select a network. If it is a secured network, enter the password (pre-shared key).

Click on the raspberry icon (top left of the screen), select Preferences and then Raspberry Pi Configuration. From the Interfaces tab, enable I2C. From the Localisation tab, set the Locale and the Timezone. After setting the Timezone, allow the Raspberry Pi to reboot.

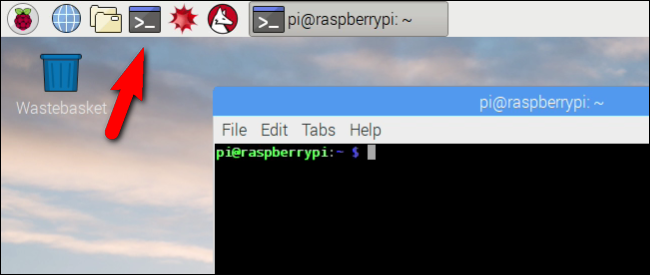

After the reboot has completed, click on the Terminal icon to open a terminal window.

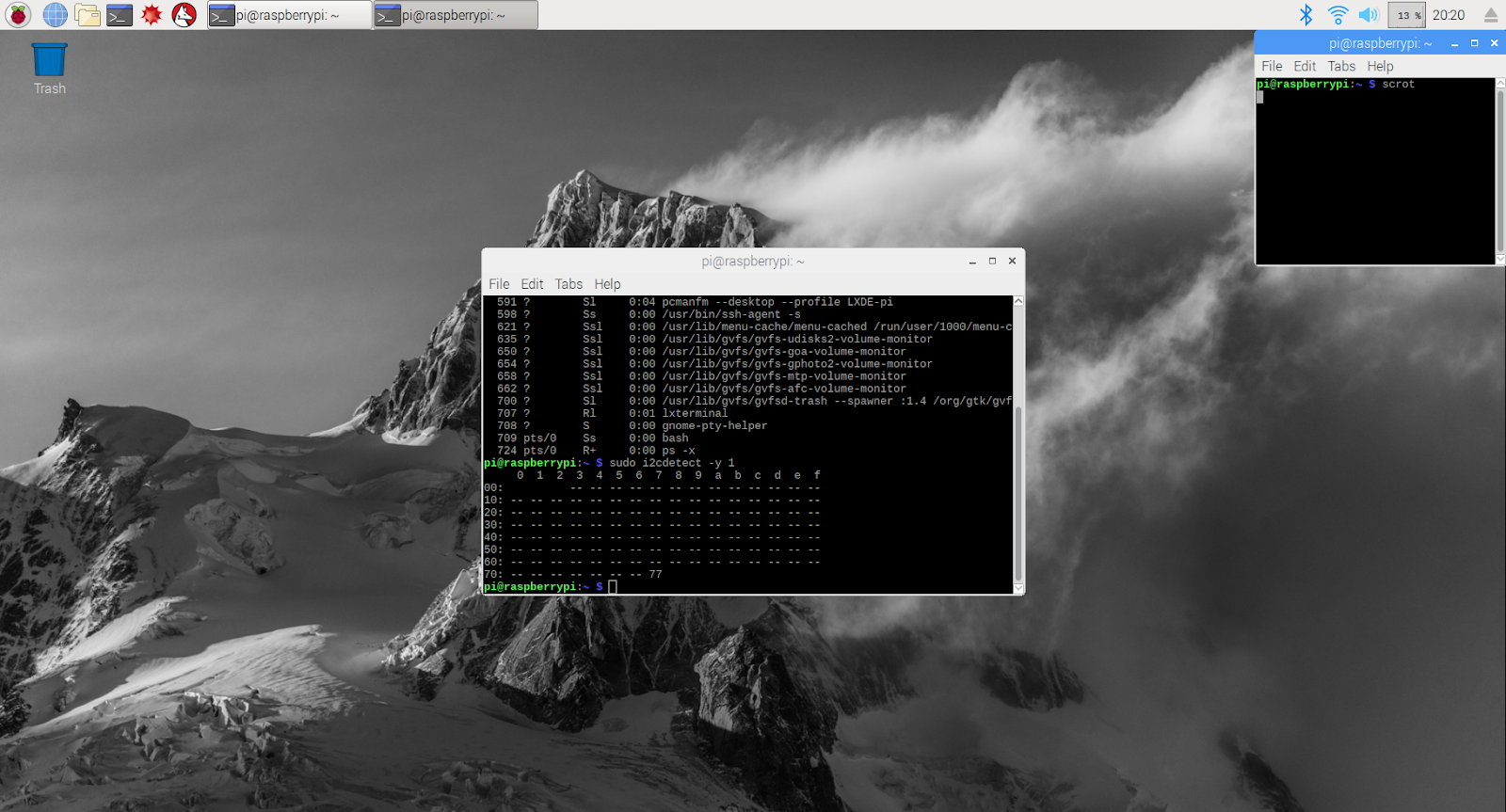

Type in the following command to make certain that the sensor is correctly connected.

```shell
sudo i2cdetect -y 1
```

The result should look like this. Make sure it reads 77, which is the I2C address (0x77) of the BME280 sensor.
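If the grid is hard to read, you can script the check. The Python 3 sketch below parses i2cdetect output and collects any addresses that responded. The sample output is illustrative rather than captured from a real device. Some BME280 boards are wired to use the alternate address 0x76 instead.

```python
import re


def detected_i2c_addresses(i2cdetect_output: str) -> set:
    """Collect addresses reported by `i2cdetect -y 1` (cells that show a hex address)."""
    addresses = set()
    for line in i2cdetect_output.splitlines():
        if ":" not in line:
            continue  # skip the column-header row
        _, cells = line.split(":", 1)
        for token in cells.split():
            # "--" means no response; "UU" (in use by a kernel driver) is not counted here
            if re.fullmatch(r"[0-9a-f]{2}", token):
                addresses.add(int(token, 16))
    return addresses


# Illustrative output with a single device at 0x77
SAMPLE_OUTPUT = """\
     0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
00:          -- -- -- -- -- -- -- -- -- -- -- -- --
10: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
20: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
30: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
40: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
50: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
60: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
70: -- -- -- -- -- -- -- 77
"""

print(sorted(hex(a) for a in detected_i2c_addresses(SAMPLE_OUTPUT)))  # ['0x77']
```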

Install the Google Cloud SDK

In order to leverage the tools on the Google Cloud platform, the Google Cloud SDK will need to be installed on the Raspberry Pi. The SDK includes the tools needed to manage and leverage the Google Cloud Platform and is available for several programming languages.

Open a terminal window on the Raspberry Pi if one isn't already open and set an environment variable that will match the SDK version to the operating system on the Raspberry Pi.

```shell
export CLOUD_SDK_REPO="cloud-sdk-$(lsb_release -c -s)"
```

Now add the location of where the Google Cloud SDK packages are stored so that the installation tools will know where to look when asked to install the SDK.

```shell
echo "deb http://packages.cloud.google.com/apt $CLOUD_SDK_REPO main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list
```

Add the public key from Google's package repository so that the Raspberry Pi will verify the security and trust the content during installation

```shell
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
```

Make sure that all the software on the Raspberry Pi is up to date and install the core Google Cloud SDK

```shell
sudo apt-get update && sudo apt-get install google-cloud-sdk
```

When prompted "Do you want to continue?", press Enter.

Install the tendo package using the Python package manager. The weather script uses this package to check whether another copy of the script is already running.

```shell
pip install tendo
```

Make sure the Google Cloud PubSub and OAuth2 packages for Python are installed and up to date using the Python package manager

```shell
sudo pip install --upgrade google-cloud-pubsub
sudo pip install --upgrade oauth2client
```
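tendo takes care of the single-instance check for the weather script. The Python 3 sketch below shows the general idea by taking an exclusive, non-blocking lock on a file with the standard library's fcntl.flock (Linux and macOS only). It illustrates the technique and is not tendo's own implementation. The lock file name is hypothetical.

```python
import fcntl
import os
import tempfile


def acquire_single_instance_lock(lock_path):
    """Return an open, locked file if no other holder has the lock, else None."""
    lock_file = open(lock_path, "w")
    try:
        # Exclusive, non-blocking lock: raises immediately if another holder exists
        fcntl.flock(lock_file, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        lock_file.close()
        return None
    # The lock is released when this file is closed or the process exits,
    # so keep a reference to it for as long as the script runs.
    return lock_file


# Hypothetical lock file location for the weather script
LOCK_PATH = os.path.join(tempfile.gettempdir(), "checkWeather.lock")
```

A script would call `acquire_single_instance_lock(LOCK_PATH)` at startup and exit if it returns `None`.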

Initialize the Google Cloud SDK

The SDK allows remote, authenticated access to the Google Cloud. For this codelab, it will be used to access the storage bucket so that the security key can be downloaded easily to the Raspberry Pi.

From the command line on the Raspberry Pi, enter

```shell
gcloud init --console-only
```

When prompted "Would you like to log in (Y/n)?", press Enter.

When you see "Go to the following link in your browser:" followed by a long URL that starts with https://accounts.google.com/o/oauth?..., hover over the URL with the mouse, right click and select "Copy URL". Then open the web browser (blue globe icon in the top left corner of the screen), right click over the address bar and click "Paste".

Once you see the Sign In screen, enter your email address that is associated with your Google Cloud account and hit Enter. Then enter your password and click on the Next button.

You will be prompted that the Google Cloud SDK wants to access your Google Account. Click on the Allow button.

You will be presented with the verification code. Using the mouse, highlight it, then right click and choose Copy. Return to the terminal window, make sure the cursor is to the right of "Enter verification code:", right click with the mouse and then choose Paste. Press Enter.

If you are asked to "Pick cloud project to use:", enter the number corresponding to the project name that you've been using for this codelab and then press Enter.

If you are prompted to enable the Compute Engine API, press Enter to enable it. Following that, you will be asked to configure the Google Compute Engine settings. Press Enter. You'll be presented with a list of potential regions/zones: choose one close to you, enter the corresponding number and press Enter.

In a moment, you will see some additional information displayed. The Google Cloud SDK is now configured. You can close the web browser window as you won't need it going forward.

Install the sensor software and weather script

From the command line on the Raspberry Pi, clone the needed packages for reading information from the input/output pins.

```shell
git clone https://github.com/adafruit/Adafruit_Python_GPIO
```

Install the downloaded packages

```shell
cd Adafruit_Python_GPIO
sudo python setup.py install
cd ..
```

Clone the project code that enables the weather sensor

```shell
git clone https://github.com/googlecodelabs/iot-data-pipeline
```

Move the sensor driver into the top-level iot-data-pipeline directory, alongside the rest of the downloaded software.

```shell
cd iot-data-pipeline/third_party/Adafruit_BME280
mv Adafruit_BME280.py ../..
cd ../..
```

Edit the weather script:

```shell
nano checkWeather.py
```

Change the project to your project ID and the topic to the name of your Pub/Sub topic (these were noted in the Getting set up and Create a Pub/Sub topic sections of this codelab).

Change the sensorID, sensorZipCode, sensorLat and sensorLong values to whatever values you'd like. Latitude and longitude values for a specific location or address can be found here.

The values to change are in this block of the script:

```python
# constants - change to fit your project and location
SEND_INTERVAL = 10  # seconds
sensor = BME280(t_mode=BME280_OSAMPLE_8, p_mode=BME280_OSAMPLE_8, h_mode=BME280_OSAMPLE_8)
credentials = GoogleCredentials.get_application_default()
project = "myProject"  # change this to your Google Cloud project ID
topic = "myTopic"  # change this to your Google Cloud Pub/Sub topic name
sensorID = "s-Googleplex"
sensorZipCode = "94043"
sensorLat = "37.421655"
sensorLong = "-122.085637"
```

When you've finished making the changes, press Ctrl-X to exit the nano editor, press Y to save the modified file and then press Enter to keep the file name.
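The BME280 measures temperature, humidity and pressure but not dew point, so the dew point has to be derived. The Python 3 sketch below assembles one reading with the same field names as the BigQuery table and computes the dew point with the Magnus approximation. checkWeather.py may calculate it differently. The units (°C, %, hPa) and the timestamp format are assumptions.

```python
import json
import math
from datetime import datetime, timezone

# Commonly used Magnus-formula coefficients
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # °C


def dew_point_c(temp_c: float, relative_humidity: float) -> float:
    """Approximate dew point in °C from temperature (°C) and relative humidity (%)."""
    gamma = math.log(relative_humidity / 100.0) + MAGNUS_B * temp_c / (MAGNUS_C + temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def build_reading(sensor_id, zip_code, lat, lon, temp_c, humidity, pressure_hpa, collected_at):
    """Assemble one record using the field names of the BigQuery table."""
    return {
        "sensorID": sensor_id,
        # BigQuery treats a TIMESTAMP string without an offset as UTC
        "timecollected": collected_at.strftime("%Y-%m-%d %H:%M:%S"),
        "zipcode": zip_code,
        "latitude": lat,
        "longitude": lon,
        "temperature": round(temp_c, 2),
        "humidity": round(humidity, 2),
        "dewpoint": round(dew_point_c(temp_c, humidity), 2),
        "pressure": round(pressure_hpa, 2),
    }


reading = build_reading("s-Googleplex", "94043", "37.421655", "-122.085637",
                        20.0, 50.0, 1013.25, datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc))
print(json.dumps(reading))
```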

Install the security key

Copy the security key (from the "Secure publishing to the topic" section) to the Raspberry Pi.

If you used SFTP or SCP to copy the security key from your local machine to your Raspberry Pi, place it in the /home/pi/iot-data-pipeline directory. You can then skip the next step and jump down to exporting the path.

If you placed the security key into a storage bucket, you'll need the name of the storage bucket and the name of the file. Use the gsutil command to copy the security key. gsutil is the command-line tool for Google Cloud Storage, which is why the path to the file starts with gs://. Make sure to change the command below to use your bucket name and file name.

```shell
gsutil cp gs://nameOfYourBucket/yourSecurityKeyFilename.json .
```

You should see a message that the file is copying and then that the operation has been completed.

From the command line on the Raspberry Pi, export a path to the security key (change the filename to match what you have)

```shell
export GOOGLE_APPLICATION_CREDENTIALS=/home/pi/iot-data-pipeline/yourSecurityKeyFilename.json
```
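The export command only affects the current shell session. GOOGLE_APPLICATION_CREDENTIALS therefore has to be set again in every new terminal, or added to ~/.bashrc. The Python 3 sketch below is a hypothetical pre-flight check that the variable is set and points at a JSON service account key. It reads only the standard fields of a downloaded key file.

```python
import json
import os

# Standard fields found in a JSON service account key file
REQUIRED_KEY_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_credentials(env=None) -> str:
    """Return the service account email if GOOGLE_APPLICATION_CREDENTIALS points at a usable key."""
    env = os.environ if env is None else env
    path = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        raise RuntimeError("GOOGLE_APPLICATION_CREDENTIALS is not set in this shell")
    if not os.path.isfile(path):
        raise RuntimeError(f"No key file found at {path}")
    with open(path, encoding="utf-8") as key_file:
        key = json.load(key_file)
    missing = [field for field in REQUIRED_KEY_FIELDS if field not in key]
    # Short-circuits on missing fields, so key["type"] is only read when it exists
    if missing or key["type"] != "service_account":
        raise RuntimeError(f"{path} does not look like a service account key")
    return key["client_email"]
```

Run it from the same terminal in which you exported the variable, e.g. `print(check_credentials())`.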

You now have a completed IoT weather sensor that is ready to transmit data to Google Cloud.

7. Start the data pipeline

Depending on your project, you might need to enable the Compute Engine API first.

Data streaming from a Raspberry Pi

If you constructed a Raspberry Pi IoT weather sensor, start the script that will read the weather data and push it to Google Cloud Pub/Sub. If you aren't in the /home/pi/iot-data-pipeline directory, move there first

```shell
cd /home/pi/iot-data-pipeline
```

Start the weather script

```shell
python checkWeather.py
```

You should see the terminal window echo the weather data results at the interval set by SEND_INTERVAL in the script. With data flowing, you can skip to the next section (Check that data is flowing).

Simulated data streaming

If you didn't build the IoT weather sensor, you can simulate data streaming by feeding a public dataset stored in Google Cloud Storage into the existing Pub/Sub topic. You will use Google Cloud Dataflow with a Google-provided template that reads from Cloud Storage and publishes to Pub/Sub.

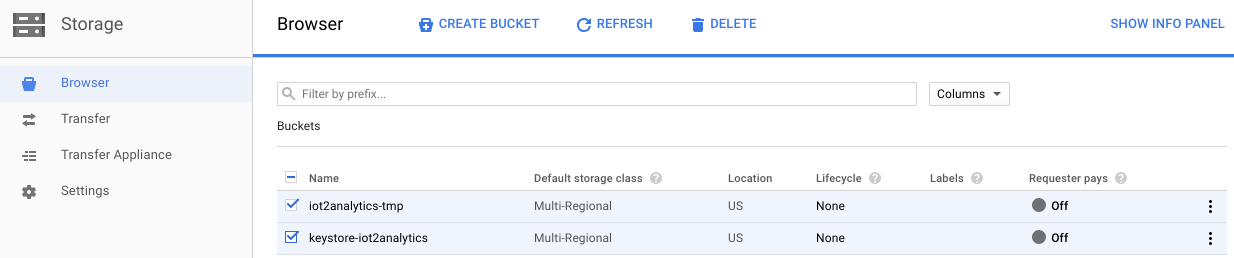

As part of the process, Dataflow will need a temporary storage location, so let's create a storage bucket for this purpose.

From the Cloud Console, select Storage and then Browser.

Click the Create Bucket button

Choose a name for the storage bucket (remember, it must be a name that is globally unique across all of Google Cloud) and click on the Create button. Remember the name of this storage bucket as it will be needed shortly.

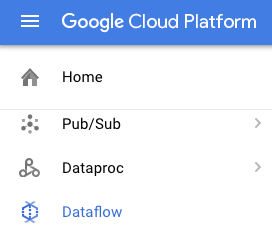

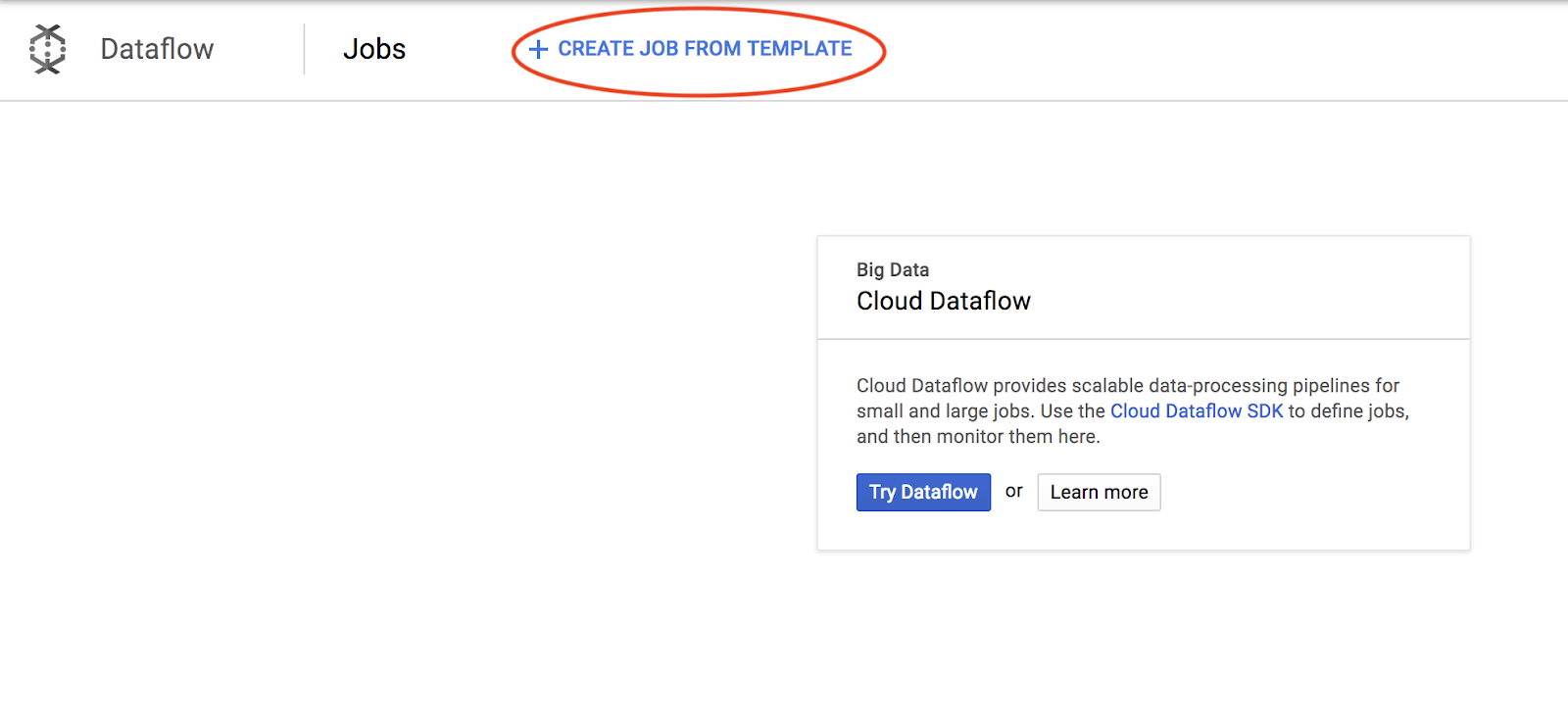

From the Cloud Console, select Dataflow.

Click on Create Job from Template (upper portion of the screen)

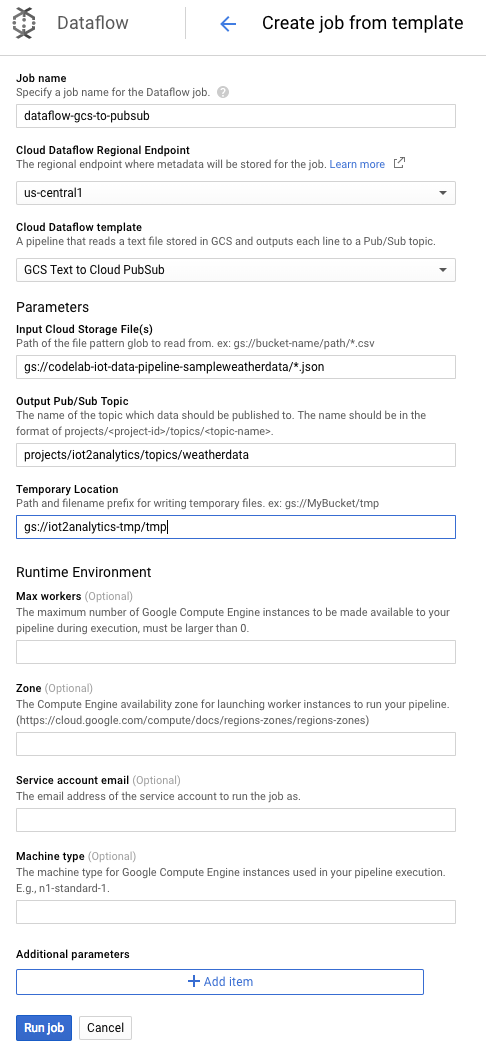

Fill in the job details as shown below paying attention to the following:

- Enter a job name of dataflow-gcs-to-pubsub

- Your region should auto-select according to where your project is hosted and should not need to be changed.

- Select a Cloud Dataflow template of GCS Text to Cloud Pub/Sub

- For the Input Cloud Storage File(s), enter gs://codelab-iot-data-pipeline-sampleweatherdata/*.json (this is a public dataset)

- For the Output Pub/Sub Topic, the exact path depends on your project ID and will look something like "projects/yourProjectID/topics/weatherdata"

- Set the Temporary Location to the name of the Google Cloud Storage bucket you just created along with a filename prefix of "tmp". It should look like "gs://myStorageBucketName/tmp".

When you have all the information filled in (see below), click the Run job button

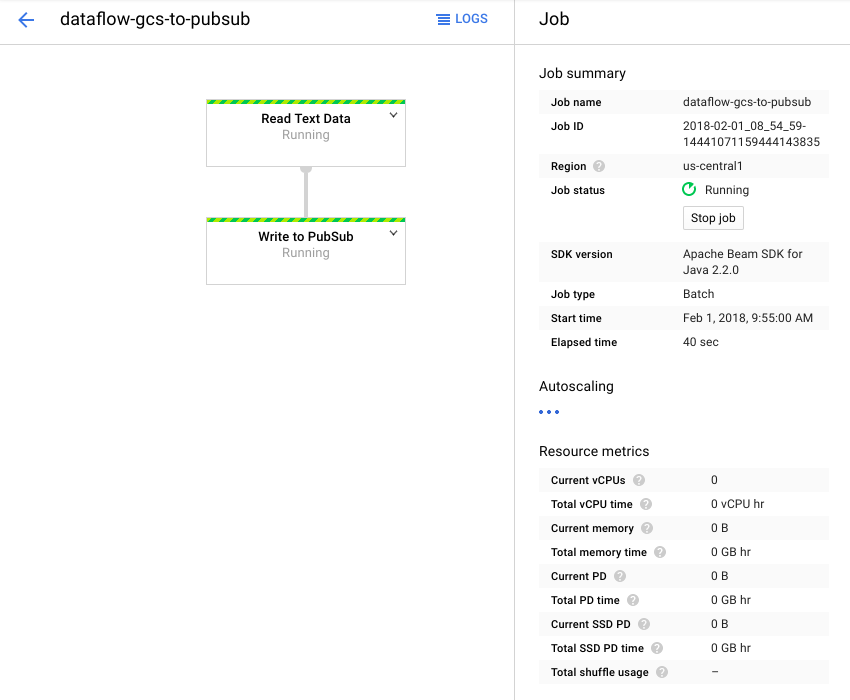

The Dataflow job should start to run.

It should take approximately a minute for the Dataflow job to complete.
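The console form maps onto a handful of template parameters. The Python 3 sketch below collects the values used in this codelab and checks their shape. As we understand it, inputFilePattern and outputTopic are the parameter names that Google's documentation lists for this template. The bucket and project names are placeholders. The template publishes each line of the matching input files as a separate Pub/Sub message, so each line of the sample files must be one complete JSON record for the Cloud Function to parse it.

```python
import re

# Public sample data used by this codelab
SAMPLE_INPUT = "gs://codelab-iot-data-pipeline-sampleweatherdata/*.json"


def template_parameters(project_id: str, topic_name: str, temp_bucket: str):
    """Return (parameters, temporary location) as entered in the Dataflow form."""
    params = {
        "inputFilePattern": SAMPLE_INPUT,
        "outputTopic": f"projects/{project_id}/topics/{topic_name}",
    }
    return params, f"gs://{temp_bucket}/tmp"


def check_parameters(params: dict, temp_location: str) -> list:
    """Return a list of problems; an empty list means the values look well formed."""
    problems = []
    if not params["inputFilePattern"].startswith("gs://"):
        problems.append("input pattern must be a gs:// path")
    if not re.fullmatch(r"projects/[^/]+/topics/[^/]+", params["outputTopic"]):
        problems.append("output topic must look like projects/<project>/topics/<topic>")
    if not re.fullmatch(r"gs://[^/]+/.+", temp_location):
        problems.append("temporary location needs a bucket and a prefix, e.g. gs://bucket/tmp")
    return problems


params, temp_location = template_parameters("my-project", "weatherdata", "my-dataflow-temp")
print(params, temp_location, check_parameters(params, temp_location))
```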

8. Check that data is flowing

Cloud Function logs

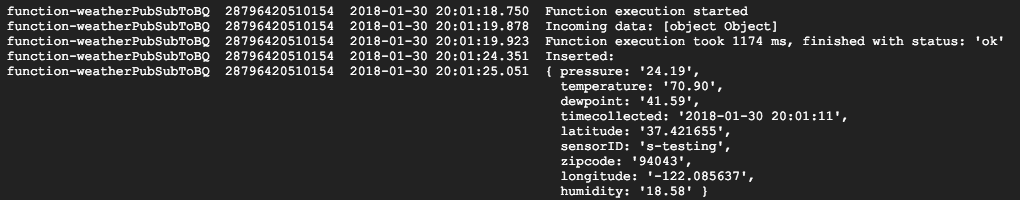

Ensure that the Cloud Function is being triggered by Pub/Sub

```shell
gcloud beta functions logs read function-weatherPubSubToBQ
```

The logs should show that the function is executing, data is being received and that it is being inserted into BigQuery

BigQuery data

Check to make sure that data is flowing into the BigQuery table. From the Cloud Console, go to BigQuery (bigquery.cloud.google.com).

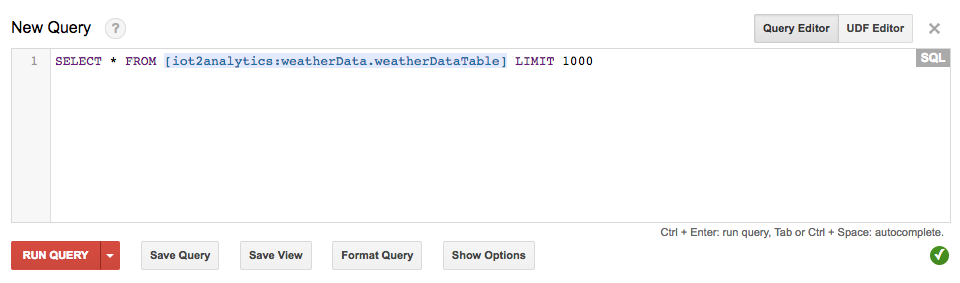

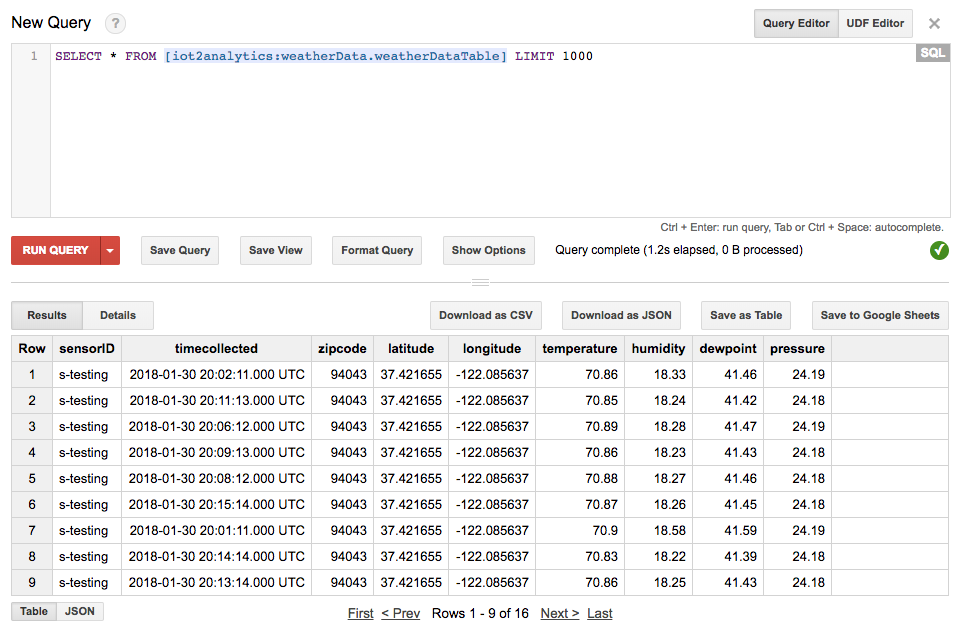

Under the project name (on the left hand side of the window), click on the Dataset (weatherData), then on the table (weatherDataTable) and then click on the Query Table button

Add an asterisk to the SQL statement so it reads SELECT * FROM... as shown below and then click the RUN QUERY button

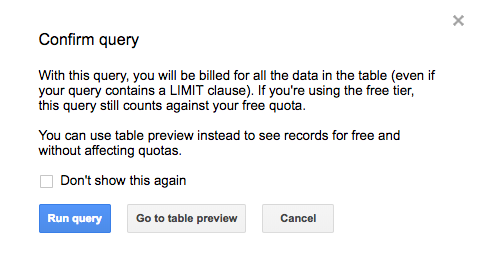

If prompted, click on the Run query button

If you see results, then data is flowing properly.

With data flowing, you are now ready to build out an analytics dashboard.
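You can also run the same check from code. The Python 3 sketch below builds a standard SQL query for the most recent readings. Standard SQL is the default for the Python client library and uses backtick-quoted project.dataset.table names. The sketch runs the query with the google-cloud-bigquery package, which is assumed to be installed and authenticated. The project ID is a placeholder.

```python
def latest_readings_sql(project_id, dataset="weatherData", table="weatherDataTable", limit=10):
    """Standard SQL for the most recent readings (dataset and table names from this codelab)."""
    if not isinstance(limit, int) or limit <= 0:
        raise ValueError("limit must be a positive integer")
    return (
        "SELECT sensorID, timecollected, temperature, humidity, dewpoint, pressure\n"
        f"FROM `{project_id}.{dataset}.{table}`\n"
        "ORDER BY timecollected DESC\n"
        f"LIMIT {limit}"
    )


def run_query(project_id, sql):
    """Run the query and return rows as dicts (requires google-cloud-bigquery)."""
    from google.cloud import bigquery  # lazy import so the SQL builder works without it

    client = bigquery.Client(project=project_id)
    return [dict(row.items()) for row in client.query(sql).result()]


print(latest_readings_sql("my-project"))
```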

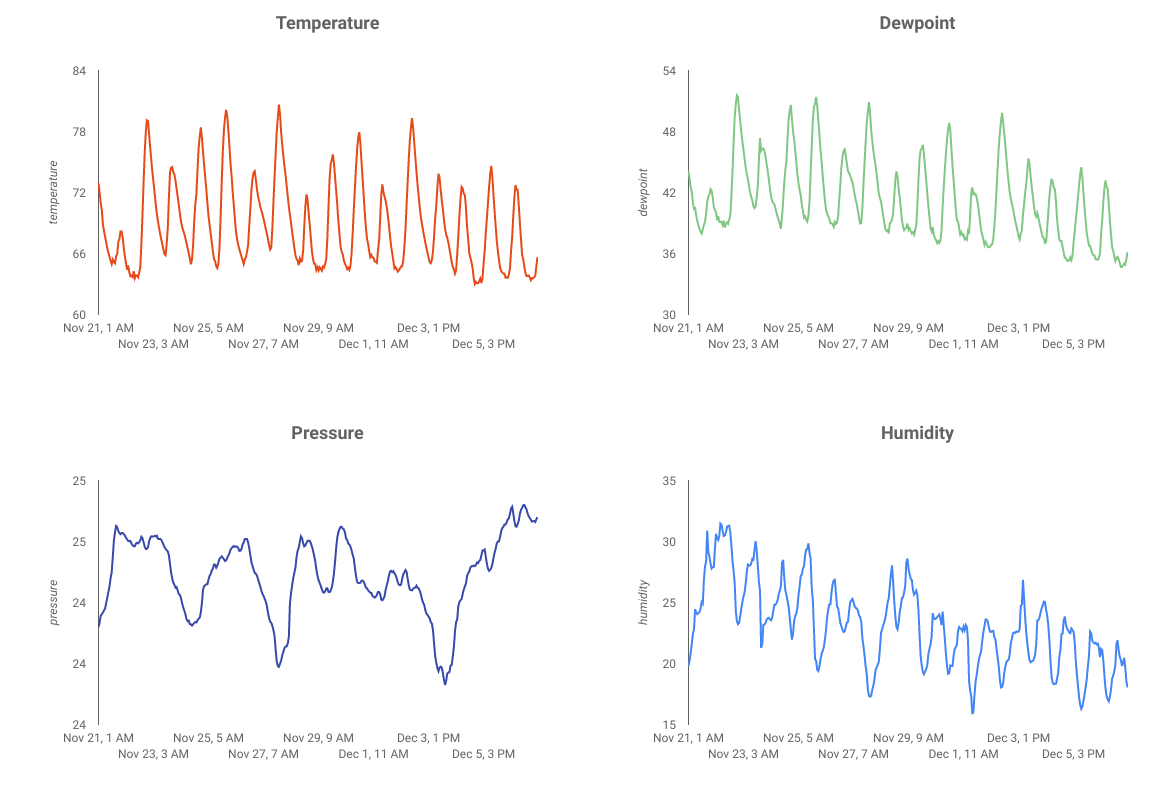

9. Create a Data Studio dashboard

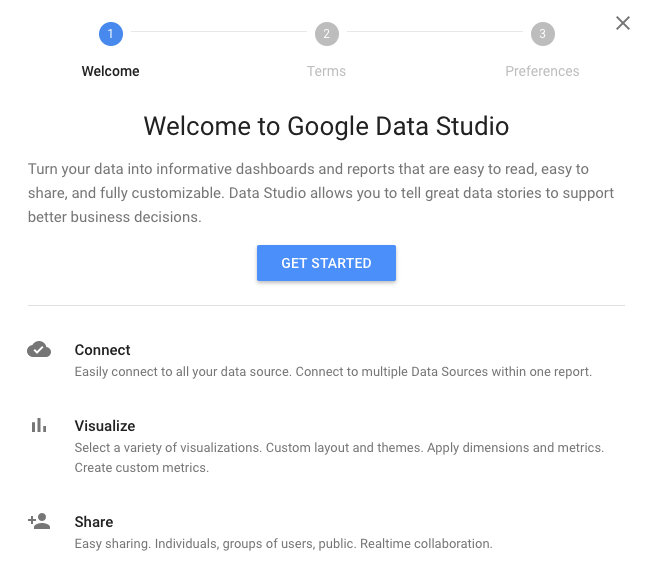

Google Data Studio turns your data into informative dashboards and reports that are easy to read, easy to share, and fully customizable.

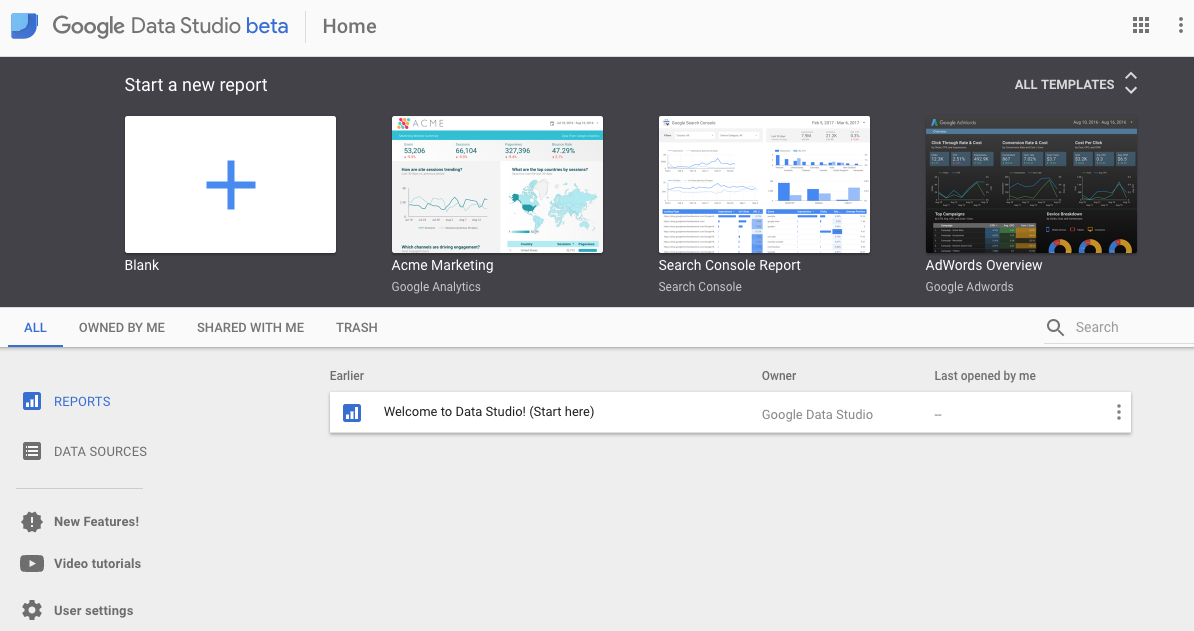

From your web browser, go to https://datastudio.google.com

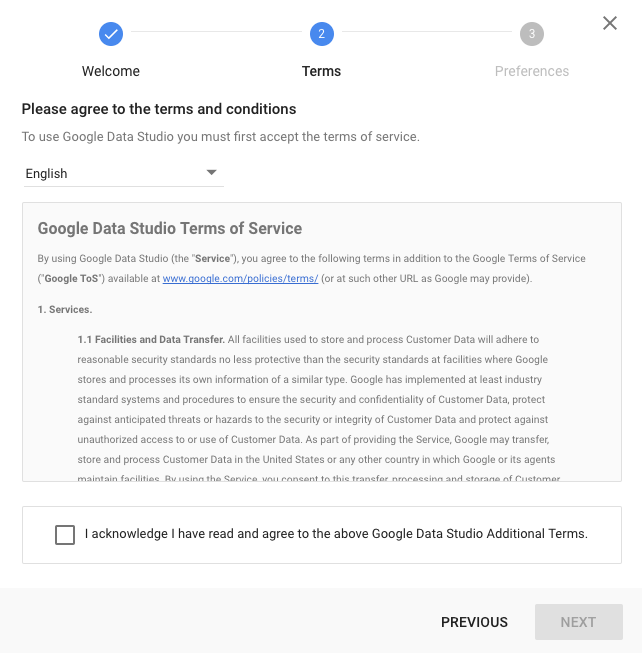

Under "Start a new report", click on Blank and then click on the Get Started button

Click the checkbox to accept the terms, click the Next button, select which emails you are interested in receiving and click on the Done button. Once again, under "Start a new report", click on Blank

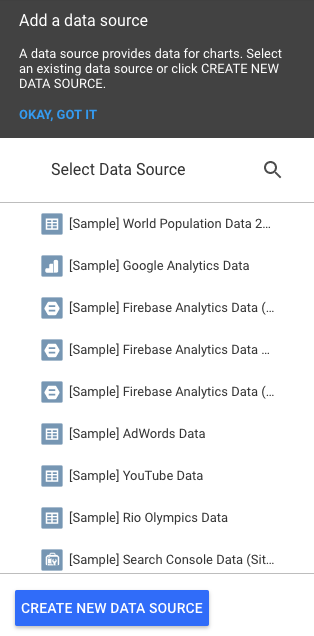

Click on the Create New Data Source button

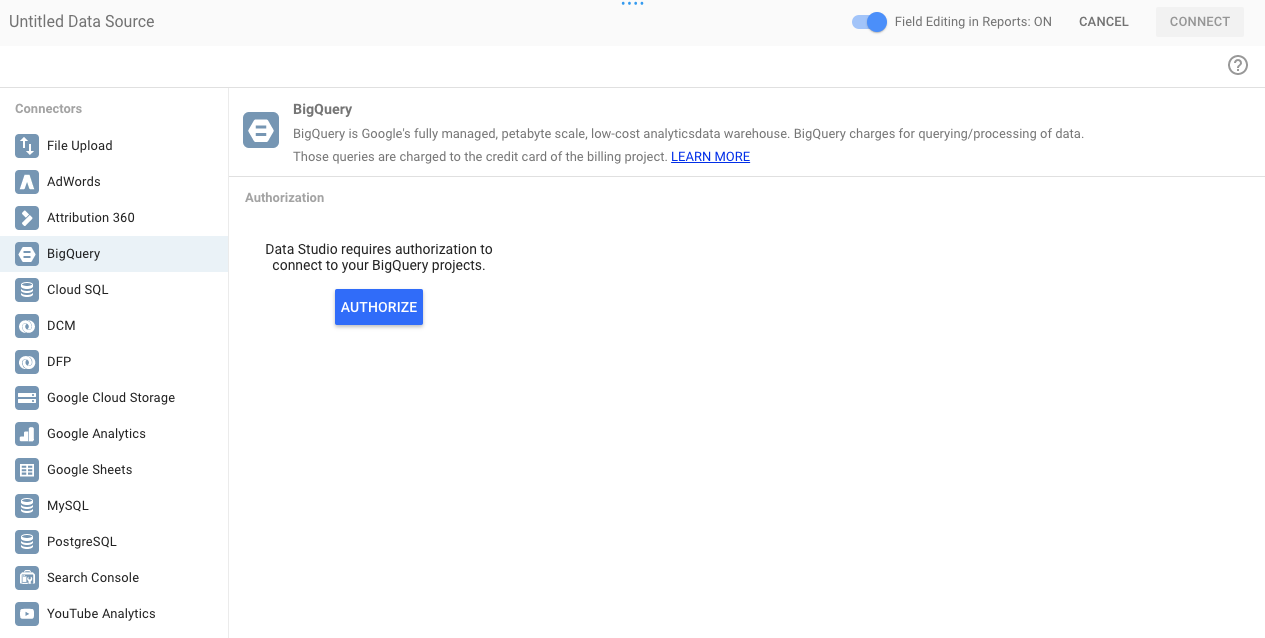

Click on BigQuery, then on the Authorize button and then choose the Google account you wish to use with Data Studio (it should be the same one that you have been using for the codelab).

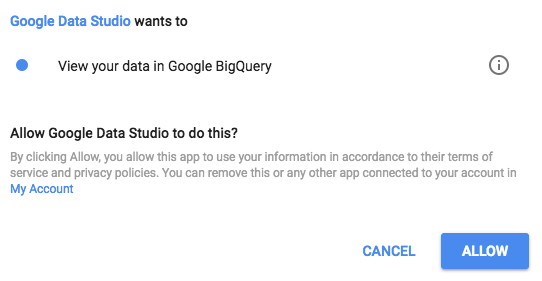

Click on the Allow button

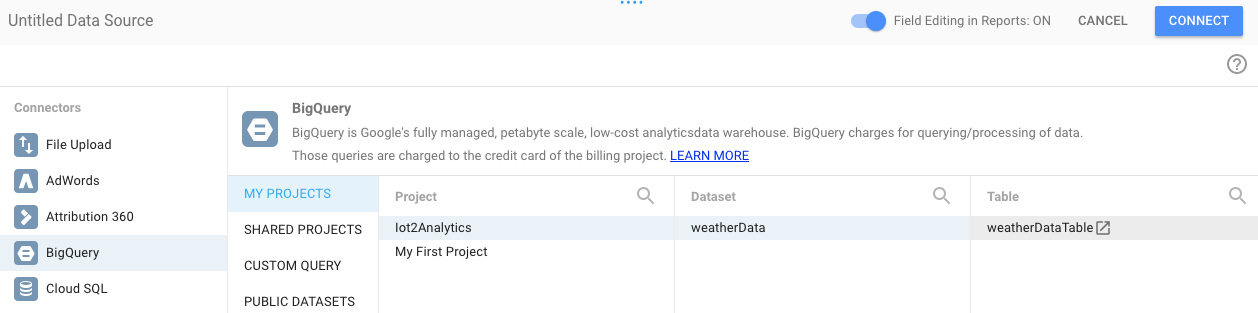

Select your project name, dataset and table. Then click the Connect button.

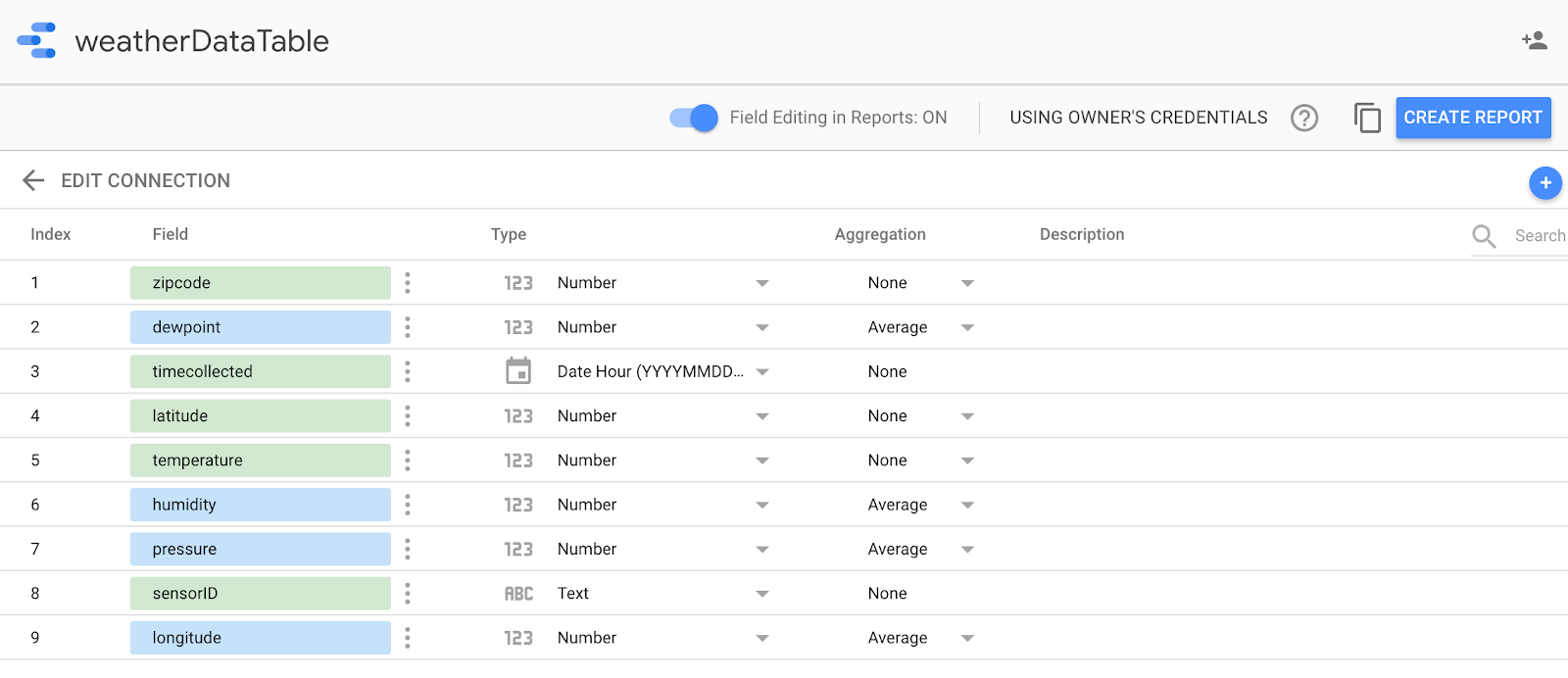

Change the type fields as shown below (everything should be a number except for timecollected and sensorID). Note that timecollected is set to Date Hour (and not just Date). Change the Aggregation fields as shown below (dewpoint, temperature, humidity and pressure should be averages and everything else should be set to "None"). Click on the Create Report button.

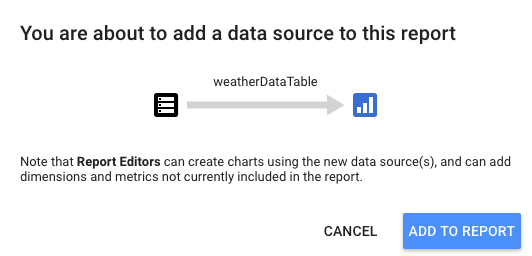

Confirm by clicking the Add to report button

If asked to select your Google account, do so and then click the Allow button to let Data Studio store its reports in Google Drive.

You are presented with a blank canvas on which to create your dashboard. From the top row of icons, choose Time Series.

Draw a rectangle in the top left corner of the blank sheet. It should occupy about ¼ of the total blank sheet.

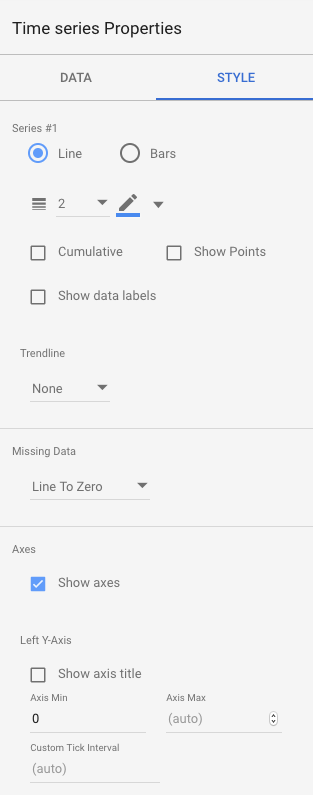

On the right hand side of the window, select the Style tab. Change Missing Data from "Line To Zero" to "Line Breaks". In the Left Y-Axis section, delete the 0 from Axis Min to change it to (Auto).

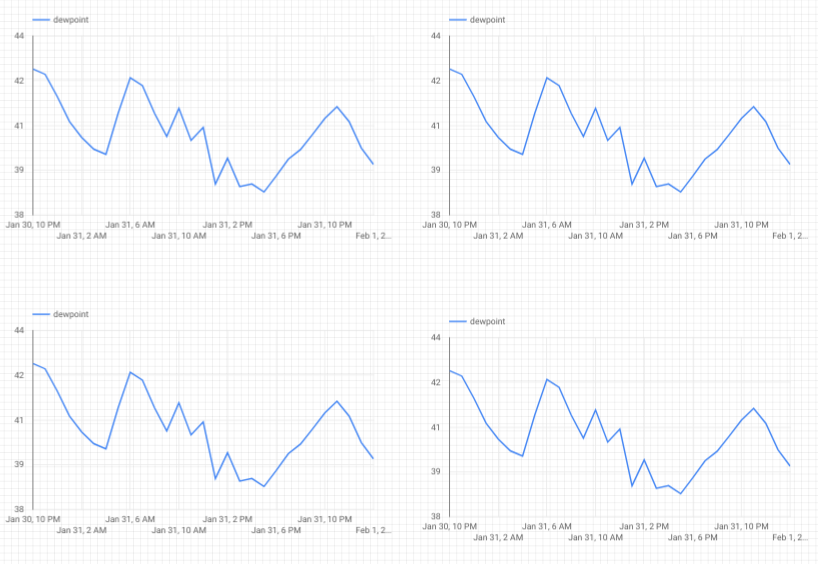

Click the graph on the sheet and copy/paste (Ctrl-C/Ctrl-V) it 3 times. Align the graphs so that each has ¼ of the layout.

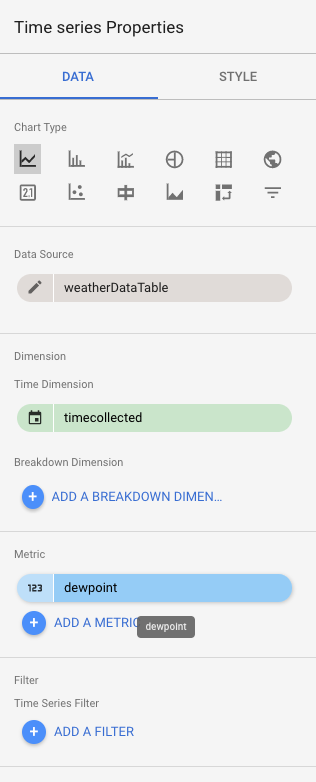

Click on each graph. In the Data section of Time Series Properties, click on the existing metric (dewpoint) and choose a different metric. Repeat until all four weather readings (dewpoint, temperature, humidity and pressure) have their own graph.

You now have a basic dashboard!
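Setting timecollected to Date Hour with Average aggregation means each point on a chart is the mean of all readings in that clock hour. The Python 3 sketch below reproduces that grouping on a few made-up readings. The timestamp format is an assumption. Hours with no readings produce no point at all. That is why "Line Breaks" is a more faithful setting for missing data than "Line To Zero".

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def hourly_averages(readings, metric):
    """Average one metric per clock hour, like a Date Hour dimension with Average aggregation."""
    buckets = defaultdict(list)
    for reading in readings:
        ts = datetime.strptime(reading["timecollected"], "%Y-%m-%d %H:%M:%S")
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(float(reading[metric]))
    return {hour: mean(values) for hour, values in sorted(buckets.items())}


sample = [
    {"timecollected": "2024-01-01 12:05:00", "temperature": 20.0},
    {"timecollected": "2024-01-01 12:45:00", "temperature": 22.0},
    {"timecollected": "2024-01-01 13:10:00", "temperature": 19.0},
]
print(hourly_averages(sample, "temperature"))
```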

10. Congratulations!

You've created an entire data pipeline! In doing so, you've learned how to use Google Pub/Sub, how to deploy a serverless Function, how to leverage BigQuery and how to create an analytics dashboard using Data Studio. In addition, you've seen how the Google Cloud SDK can be used securely to bring data into the Google Cloud Platform. Finally, you now have some hands-on experience with an important architectural pattern that can handle high volumes while maintaining availability.

Clean-up

Once you are done experimenting with the weather data and the analytics pipeline, you can remove the running resources.

If you built the IoT sensor, shut it down. Hit Ctrl-C in the terminal window to stop the script and then type the following to power down the Raspberry Pi

```shell
sudo shutdown -h now
```

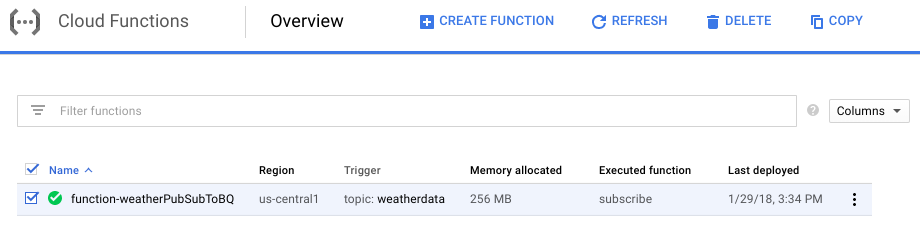

Go to Cloud Functions, click on the checkbox next to function-weatherPubSubToBQ and then click on Delete

Go to Pub/Sub, click on Topics, click on the checkbox next to the weatherdata topic and then click on Delete

Go to Storage, click on the checkboxes next to the storage buckets and then click on Delete

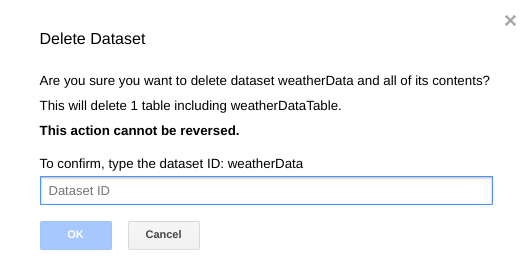

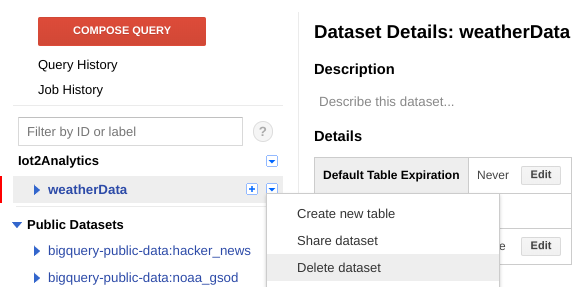

Go to bigquery.cloud.google.com, click the down arrow next to your project name, click the down arrow to the right of the weatherData dataset and then click on Delete dataset.

When prompted, type in the dataset ID (weatherData) in order to finish deleting the data.
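You can also script the same clean-up with the gcloud, gsutil and bq command-line tools. The Python 3 sketch below only prints the commands unless dry_run is set to False. The bucket names and project ID are placeholders. Depending on your gcloud configuration, deleting the function may also need a --region flag.

```python
import shlex
import subprocess


def cleanup_commands(bucket_names, project_id):
    """Command-line equivalents of the console clean-up steps (resource names from this codelab)."""
    commands = [
        ["gcloud", "functions", "delete", "function-weatherPubSubToBQ", "--quiet"],
        ["gcloud", "pubsub", "topics", "delete", "weatherdata"],
    ]
    for bucket in bucket_names:
        # -r deletes every object in the bucket and then the bucket itself
        commands.append(["gsutil", "rm", "-r", f"gs://{bucket}"])
    # -r removes the tables inside the dataset, -f skips the confirmation prompt, -d targets a dataset
    commands.append(["bq", "rm", "-r", "-f", "-d", f"{project_id}:weatherData"])
    return commands


def run_cleanup(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for command in commands:
        print(shlex.join(command))
        if not dry_run:
            subprocess.run(command, check=True)


run_cleanup(cleanup_commands(["my-key-bucket", "my-dataflow-temp"], "my-project"))
```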