1. Introduction

Looker (Google Cloud core) provides simplified and streamlined provisioning, configuration, and management of a Looker instance from the Google Cloud console. Some instance administration tasks may also be performed from the console.

There are three available network configurations for Looker (Google Cloud core) instances:

- Public: Network connection uses an external, internet-accessible IP address.

- Private: Network connection uses an internal, Google-hosted Virtual Private Cloud (VPC) IP address.

- Public and private: Network connection uses both a public IP address and a private IP address. Incoming traffic is routed through the public IP, and outgoing traffic is routed through the private IP.

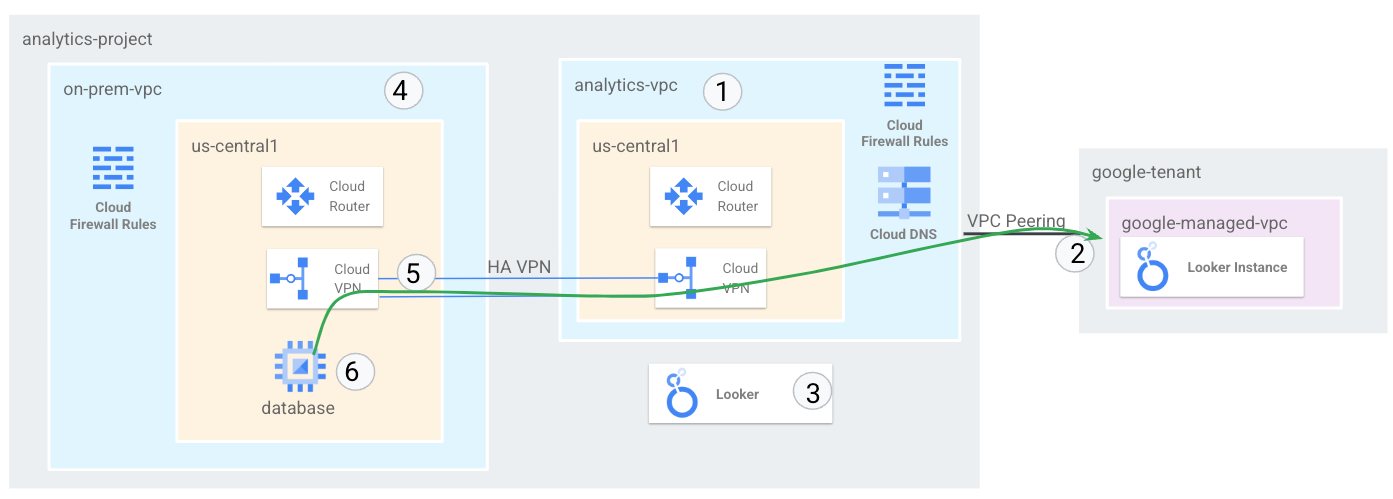

In this tutorial, you will deploy a comprehensive end-to-end private network to support Looker connectivity to an on-prem-vpc over HA VPN. The design can be replicated to meet your requirements for multicloud and on-premises connectivity.

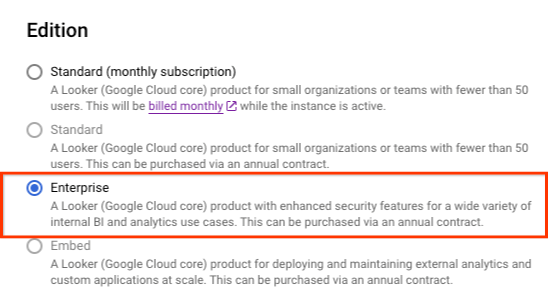

Looker (Google Cloud core) supports private IP for instances that meet the following criteria:

- Instance editions must be Enterprise or Embed.

What you'll build

In this tutorial, you're going to build a comprehensive private Looker network deployment in a standalone VPC that has hybrid connectivity to multicloud and on-premises environments.

You will set up a VPC network called on-prem-vpc to represent an on-premises environment. In your own deployment, the on-prem-vpc would not exist; instead, you would use hybrid networking to connect to your on-premises data center or cloud provider.

Below are the major steps of the tutorial:

- Create a standalone VPC in us-central1

- Allocate an IP subnet to Private Service Access

- Deploy Looker instance in the standalone VPC

- Create the on-prem-vpc and hybrid networking

- Advertise and validate Looker IP range over BGP

- Integrate and validate Looker and PostgreSQL data communication

Figure 1

What you'll learn

- How to create a VPC and associated hybrid networking

- How to deploy Looker in a standalone VPC

- How to create an on-prem-vpc and associated hybrid networking

- How to connect the on-prem-vpc with the analytics-vpc over HA VPN

- How to advertise Looker subnets over hybrid networking

- How to monitor hybrid networking infrastructure

- How to integrate a PostgreSQL database with Looker Cloud Core

What you'll need

- Google Cloud Project

- IAM permissions

2. Before you begin

Update the project to support the tutorial

This tutorial uses shell variables (for example, $projectid) to simplify gcloud configuration in Cloud Shell.

Inside Cloud Shell, perform the following:

```
gcloud config list project
gcloud config set project [YOUR-PROJECT-NAME]
projectid=YOUR-PROJECT-NAME
echo $projectid
```

3. VPC Setup

Create the analytics-vpc

Inside Cloud Shell, perform the following:

gcloud compute networks create analytics-vpc --project=$projectid --subnet-mode=custom

Create the on-prem-vpc

Inside Cloud Shell, perform the following:

gcloud compute networks create on-prem-vpc --project=$projectid --subnet-mode=custom

Create the Postgresql database subnet

Inside Cloud Shell, perform the following:

gcloud compute networks subnets create database-subnet-us-central1 --project=$projectid --range=172.16.10.0/27 --network=on-prem-vpc --region=us-central1

Cloud Router and NAT configuration

Cloud NAT is used in the tutorial for software package installation because the database VM instance does not have an external IP address.

Inside Cloud Shell, create the Cloud Router.

gcloud compute routers create on-prem-cr-us-central1-nat --network on-prem-vpc --region us-central1

Inside Cloud Shell, create the NAT gateway.

gcloud compute routers nats create on-prem-nat-us-central1 --router=on-prem-cr-us-central1-nat --auto-allocate-nat-external-ips --nat-all-subnet-ip-ranges --region us-central1

Create the database test instance

Create a postgres-database instance that will be used to test and validate connectivity to Looker.

Inside Cloud Shell, create the instance.

```
gcloud compute instances create postgres-database \
  --project=$projectid \
  --zone=us-central1-a \
  --machine-type=e2-medium \
  --subnet=database-subnet-us-central1 \
  --no-address \
  --image=projects/ubuntu-os-cloud/global/images/ubuntu-2304-lunar-amd64-v20230621 \
  --metadata startup-script="#! /bin/bash
sudo apt-get update
sudo apt-get -y install postgresql postgresql-client postgresql-contrib"
```

Create Firewall Rules

To allow IAP to connect to your VM instances, create a firewall rule that:

- Applies to all VM instances that you want to be accessible by using IAP.

- Allows ingress traffic from the IP range 35.235.240.0/20. This range contains all IP addresses that IAP uses for TCP forwarding.

From Cloud Shell, create the firewall rule:

```
gcloud compute firewall-rules create on-prem-ssh \
  --network on-prem-vpc --allow tcp:22 --source-ranges=35.235.240.0/20
```

4. Private Service Access

Private services access is a private connection between your VPC network and a network owned by Google or a third party. Google or the third party (the entities providing the services) are known as service producers. Looker Cloud Core is a service producer.

The private connection enables VM instances in your VPC network and the services that you access to communicate exclusively by using internal IP addresses.

At a high level, to use private services access, you must allocate an IP address range (CIDR block) in your VPC network and then create a private connection to a service producer.

Allocate IP address range for services

Before you create a private connection, you must allocate an IPv4 address range to be used by the service producer's VPC network. This ensures that there's no IP address collision between your VPC network and the service producer's network.

When you allocate a range in your VPC network, that range is ineligible for subnets (primary and secondary ranges) and destinations of custom static routes.

Using IPv6 address ranges with private services access is not supported.

Enable the Service Networking API for your project in the Google Cloud console. When enabling the API, you may need to refresh the console page to confirm that the API has been enabled.
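If you prefer the command line, the same API can be enabled from Cloud Shell with `gcloud services enable`. This is a minimal sketch that assumes the $projectid variable from the Before you begin section is set:

```shell
# Enable the Service Networking API, which private services access depends on.
gcloud services enable servicenetworking.googleapis.com --project="$projectid"

# Optional check: the API should now appear among the enabled services.
gcloud services list --enabled --project="$projectid" | grep servicenetworking
```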

Create an IP allocation

To specify an address range and a prefix length (subnet mask), use the --addresses and --prefix-length flags. For example, to allocate the CIDR block 192.168.0.0/22, specify 192.168.0.0 for the address and 22 for the prefix length.
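As a quick sanity check on how much space a prefix length reserves, the sketch below computes the first address, last address, and size of an IPv4 CIDR block using only bash arithmetic. The function name cidr_range is hypothetical and is not part of gcloud:

```shell
# Print the first address, last address, and size of an IPv4 CIDR block.
# Pure bash arithmetic; no external tools required.
cidr_range() {
  local ip="${1%/*}" prefix="${1#*/}"
  local IFS=.
  # Split the dotted quad into four octets ($1..$4).
  # shellcheck disable=SC2086
  set -- $ip
  local base=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  local size=$(( 1 << (32 - prefix) ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local first=$(( base & mask ))
  local last=$(( first + size - 1 ))
  printf '%d.%d.%d.%d - %d.%d.%d.%d (%d addresses)\n' \
    $(( first >> 24 & 255 )) $(( first >> 16 & 255 )) \
    $(( first >> 8 & 255 )) $(( first & 255 )) \
    $(( last >> 24 & 255 )) $(( last >> 16 & 255 )) \
    $(( last >> 8 & 255 )) $(( last & 255 )) \
    "$size"
}
```

For example, `cidr_range 192.168.0.0/22` prints `192.168.0.0 - 192.168.3.255 (1024 addresses)`, the block reserved for Looker in the next step.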

Inside Cloud Shell, create the IP allocation for Looker.

```
gcloud compute addresses create psa-range-looker \
  --global \
  --purpose=VPC_PEERING \
  --addresses=192.168.0.0 \
  --prefix-length=22 \
  --description="psa range for looker" \
  --network=analytics-vpc
```

Inside Cloud Shell, validate the IP allocation.

gcloud compute addresses list --global --filter="purpose=VPC_PEERING"

Example:

```
user@cloudshell$ gcloud compute addresses list --global --filter="purpose=VPC_PEERING"
NAME: psa-range-looker
ADDRESS/RANGE: 192.168.0.0/22
TYPE: INTERNAL
PURPOSE: VPC_PEERING
NETWORK: analytics-vpc
REGION:
SUBNET:
STATUS: RESERVED
```

Create a Private Connection

After you create an allocated range, you can create a private connection to a service producer, Looker Cloud Core. The private connection establishes a VPC Network Peering connection between your VPC network and the service producer's network once the Looker instance is established.

Private connections are a one-to-one relationship between your VPC network and a service producer. If a single service producer offers multiple services, you only need one private connection for all of the producer's services.

If you connect to multiple service producers, use a unique allocation for each service producer. This practice helps you manage your network settings, such as routes and firewall rules, for each service producer.

Inside Cloud Shell, create a private connection and make note of the operation name.

```
gcloud services vpc-peerings connect \
  --service=servicenetworking.googleapis.com \
  --ranges=psa-range-looker \
  --network=analytics-vpc
```

Example:

```
user@cloudshell$ gcloud services vpc-peerings connect \
  --service=servicenetworking.googleapis.com \
  --ranges=psa-range-looker \
  --network=analytics-vpc
Operation "operations/pssn.p24-1049481044803-f16d61ba-7db0-4516-b525-cd0be063d4ea" finished successfully.
```

Inside Cloud Shell, check whether the operation was successful. Replace OPERATION_NAME with the name generated in the previous step.

```
gcloud services vpc-peerings operations describe \
  --name=OPERATION_NAME
```

Example:

```
user@cloudshell$ gcloud services vpc-peerings operations describe \
  --name=operations/pssn.p24-1049481044803-f16d61ba-7db0-4516-b525-cd0be063d4ea
Operation "operations/pssn.p24-1049481044803-f16d61ba-7db0-4516-b525-cd0be063d4ea" finished successfully.
```

5. Create a Looker (Google Cloud core) instance

Before you begin

Enable the Looker API for your project in the Google Cloud console. When enabling the API, you may need to refresh the console page to confirm that the API has been enabled.

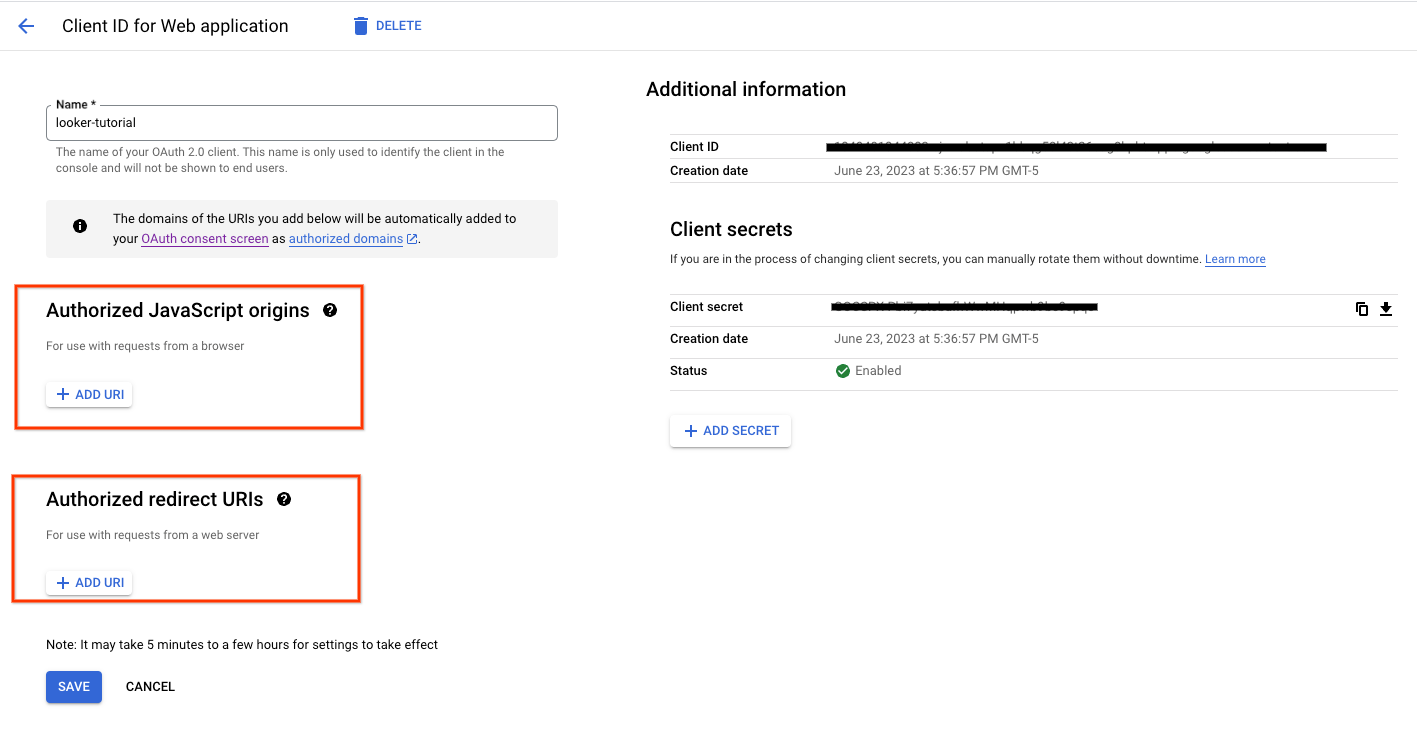
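The Looker API can likewise be enabled from Cloud Shell. A minimal sketch, assuming $projectid is set and that the API's service name is looker.googleapis.com:

```shell
# Enable the Looker (Google Cloud core) API for the project.
gcloud services enable looker.googleapis.com --project="$projectid"
```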

Set up an OAuth client to authenticate and access the instance.

In the following section, you will need to use the OAuth Client ID and Secret to create the Looker instance.

Authorized JavaScript origins and redirect URIs are not required.

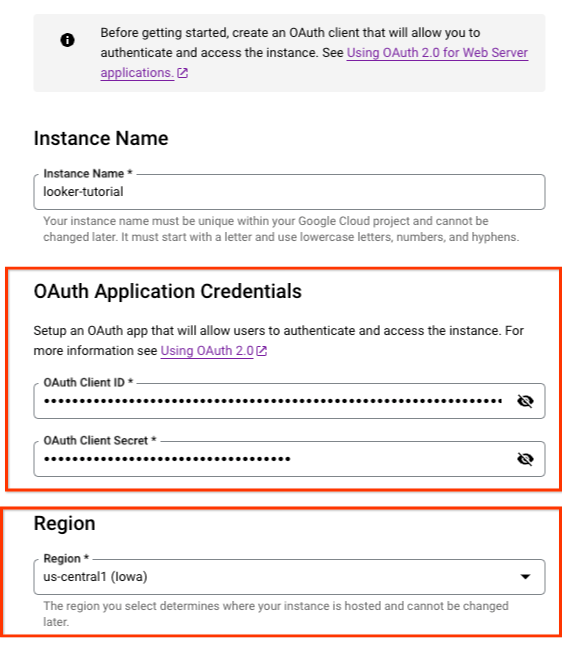

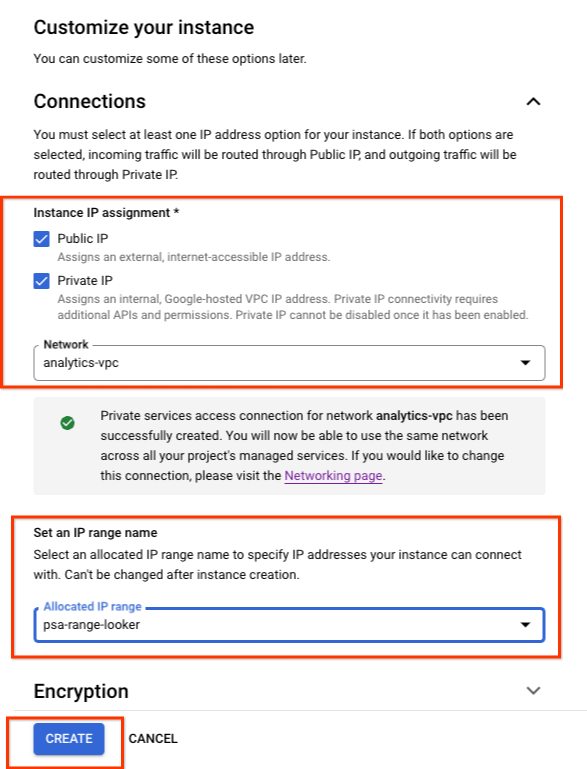

Inside Cloud Console, create an instance based on the screenshots provided.

Navigate to LOOKER → CREATE AN INSTANCE

Populate the previously created OAuth Client ID and Secret.

Select CREATE

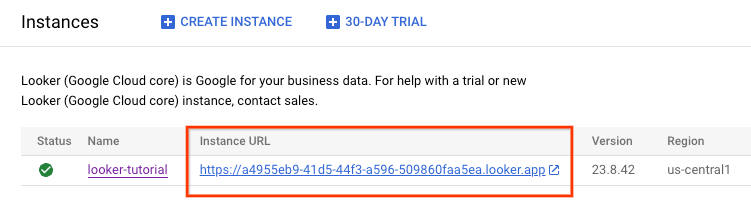

As the instance is being created, you will be redirected to the Instances page within the console. You may need to refresh the page to view the status of your new instance. You can also see your instance creation activity by clicking on the notifications icon in the Google Cloud console menu. While your instance is being created, the notifications icon in the Google Cloud console menu will be encircled by a loading icon.

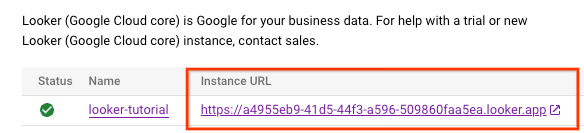

After the Looker instance is created, an Instance URL is generated. Make note of the URL.

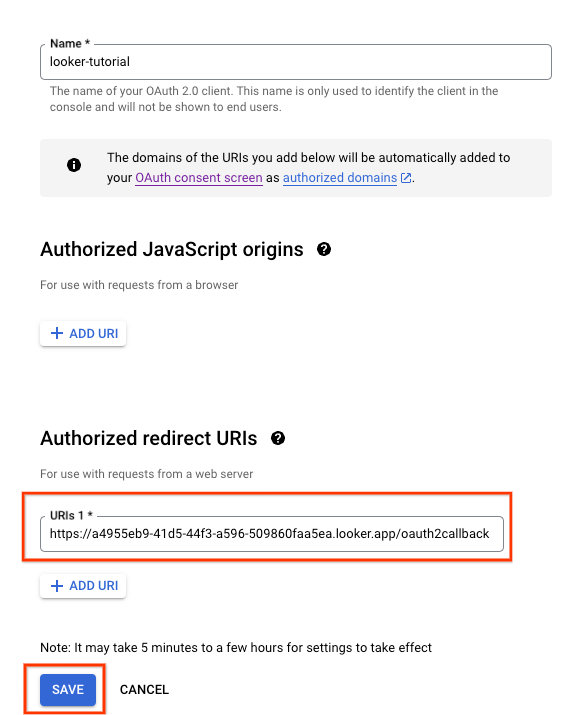

6. Update the OAuth 2.0 Client ID

In the following section, you will need to update the previously created OAuth Client ID Authorized redirect URI by appending /oauth2callback to the Instance URL.

Once completed, you can then use the Instance URL to log into Looker UI.

Inside Cloud Console, navigate to APIs & SERVICES → CREDENTIALS

Select your OAuth 2.0 Client ID and add your Instance URL, with /oauth2callback appended, as an Authorized redirect URI, as in the example below:

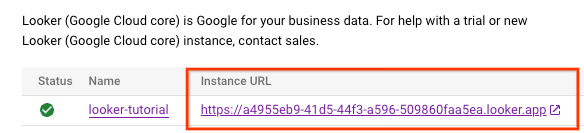
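Appending the callback path is easy to get subtly wrong, for example a doubled slash when the copied URL ends in /. The helper below is a minimal sketch; oauth_redirect_uri is a hypothetical name and https://example.looker.app is a placeholder, not your real Instance URL:

```shell
# Build the Authorized redirect URI for the OAuth client by appending
# /oauth2callback to the Looker Instance URL.
oauth_redirect_uri() {
  local url="${1%/}"   # strip one trailing slash, if present
  printf '%s/oauth2callback\n' "$url"
}

# Usage with a placeholder Instance URL:
#   oauth_redirect_uri "https://example.looker.app/"
#   prints https://example.looker.app/oauth2callback
```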

7. Validate Looker Access

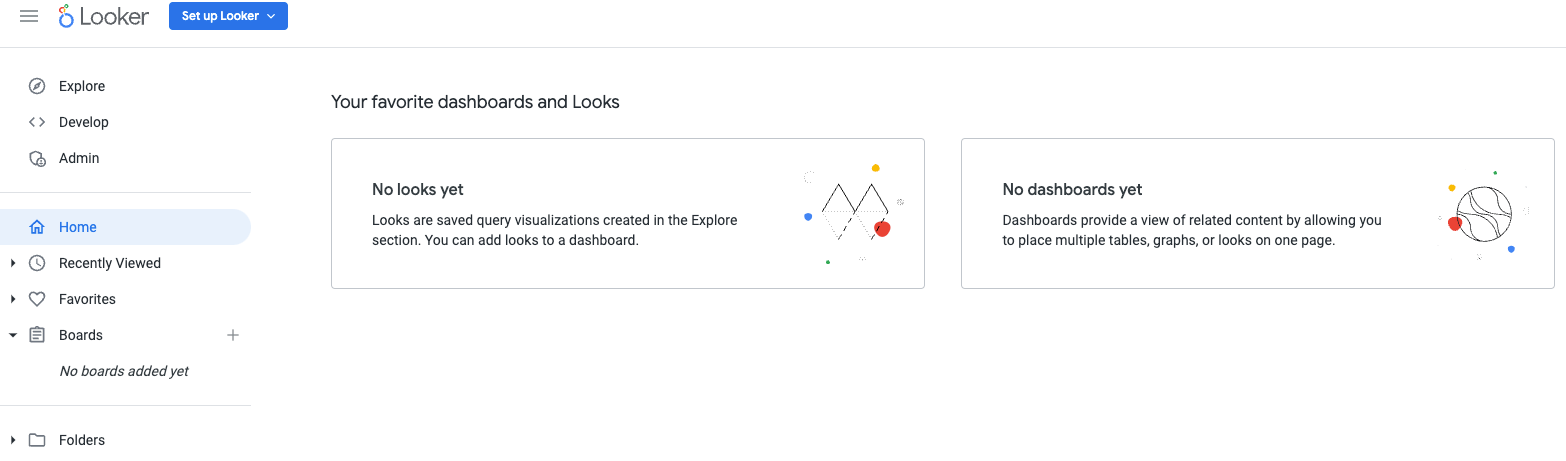

In Cloud Console, navigate to Looker and select your instance URL to open the Looker UI.

Once launched, you will be presented with the landing page shown in the screenshot, confirming your access to Looker Cloud Core.

8. Hybrid connectivity

In the following section, you will create a Cloud Router that enables you to dynamically exchange routes between your Virtual Private Cloud (VPC) and peer network by using Border Gateway Protocol (BGP).

Cloud Router can set up a BGP session over a Cloud VPN tunnel to connect your networks. It automatically learns new subnet IP address ranges and announces them to your peer network.

In the tutorial you will deploy HA VPN between the analytics-vpc and on-prem-vpc to illustrate private connectivity to Looker.

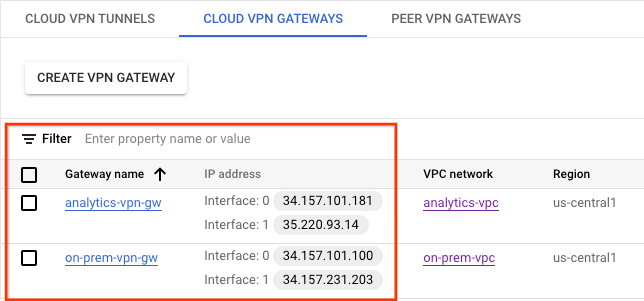

Create the HA VPN GW for the analytics-vpc

When each gateway is created, two external IPv4 addresses are automatically allocated, one for each gateway interface. Note down these IP addresses to use later on in the configuration steps.

Inside Cloud Shell, create the HA VPN GW

```
gcloud compute vpn-gateways create analytics-vpn-gw \
  --network=analytics-vpc \
  --region=us-central1
```

Create the HA VPN GW for the on-prem-vpc

When each gateway is created, two external IPv4 addresses are automatically allocated, one for each gateway interface. Note down these IP addresses to use later on in the configuration steps.

Inside Cloud Shell, create the HA VPN GW.

```
gcloud compute vpn-gateways create on-prem-vpn-gw \
  --network=on-prem-vpc \
  --region=us-central1
```

Validate HA VPN GW creation

Using the console, navigate to HYBRID CONNECTIVITY → VPN → CLOUD VPN GATEWAYS.
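The external IPv4 addresses allocated to each gateway interface can also be read from Cloud Shell. A sketch that uses a gcloud yaml() format projection to print only the interfaces of both gateways:

```shell
# Show the interface IDs and external IPv4 addresses of each HA VPN gateway.
for gw in analytics-vpn-gw on-prem-vpn-gw; do
  echo "== $gw"
  gcloud compute vpn-gateways describe "$gw" \
    --region=us-central1 \
    --format="yaml(vpnInterfaces)"
done
```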

Create the Cloud Router for the analytics-vpc

Inside Cloud Shell, create the Cloud Router located in us-central1

```
gcloud compute routers create analytics-cr-us-central1 \
  --region=us-central1 \
  --network=analytics-vpc \
  --asn=65001
```

Create the Cloud Router for the on-prem-vpc

Inside Cloud Shell, create the Cloud Router located in us-central1

```
gcloud compute routers create on-prem-cr-us-central1 \
  --region=us-central1 \
  --network=on-prem-vpc \
  --asn=65002
```

Create the VPN tunnels for analytics-vpc

You will create two VPN tunnels on each HA VPN gateway.
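The commands below reuse shared secrets that are printed in this tutorial. For anything beyond a lab, you may prefer to generate your own random secrets; each one must be identical on both ends of a tunnel pair (analytics-vpc-tunnel0 with on-prem-tunnel0, and analytics-vpc-tunnel1 with on-prem-tunnel1). A minimal sketch, where gen_vpn_secret is a hypothetical helper:

```shell
# Generate a random 32-character shared secret (24 random bytes, base64-encoded).
gen_vpn_secret() {
  head -c 24 /dev/urandom | base64
}

# Usage: create one secret per tunnel pair and pass it, quoted, to both ends,
# for example --shared-secret "$secret0" on analytics-vpc-tunnel0 and on-prem-tunnel0.
#   secret0=$(gen_vpn_secret)
#   secret1=$(gen_vpn_secret)
```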

Create VPN tunnel0

Inside Cloud Shell, create tunnel0:

```
gcloud compute vpn-tunnels create analytics-vpc-tunnel0 \
  --peer-gcp-gateway on-prem-vpn-gw \
  --region us-central1 \
  --ike-version 2 \
  --shared-secret [ZzTLxKL8fmRykwNDfCvEFIjmlYLhMucH] \
  --router analytics-cr-us-central1 \
  --vpn-gateway analytics-vpn-gw \
  --interface 0
```

Create VPN tunnel1

Inside Cloud Shell, create tunnel1:

```
gcloud compute vpn-tunnels create analytics-vpc-tunnel1 \
  --peer-gcp-gateway on-prem-vpn-gw \
  --region us-central1 \
  --ike-version 2 \
  --shared-secret [bcyPaboPl8fSkXRmvONGJzWTrc6tRqY5] \
  --router analytics-cr-us-central1 \
  --vpn-gateway analytics-vpn-gw \
  --interface 1
```

Create the VPN tunnels for on-prem-vpc

You will create two VPN tunnels on each HA VPN gateway.

Create VPN tunnel0

Inside Cloud Shell, create tunnel0:

```
gcloud compute vpn-tunnels create on-prem-tunnel0 \
  --peer-gcp-gateway analytics-vpn-gw \
  --region us-central1 \
  --ike-version 2 \
  --shared-secret [ZzTLxKL8fmRykwNDfCvEFIjmlYLhMucH] \
  --router on-prem-cr-us-central1 \
  --vpn-gateway on-prem-vpn-gw \
  --interface 0
```

Create VPN tunnel1

Inside Cloud Shell, create tunnel1:

```
gcloud compute vpn-tunnels create on-prem-tunnel1 \
  --peer-gcp-gateway analytics-vpn-gw \
  --region us-central1 \
  --ike-version 2 \
  --shared-secret [bcyPaboPl8fSkXRmvONGJzWTrc6tRqY5] \
  --router on-prem-cr-us-central1 \
  --vpn-gateway on-prem-vpn-gw \
  --interface 1
```

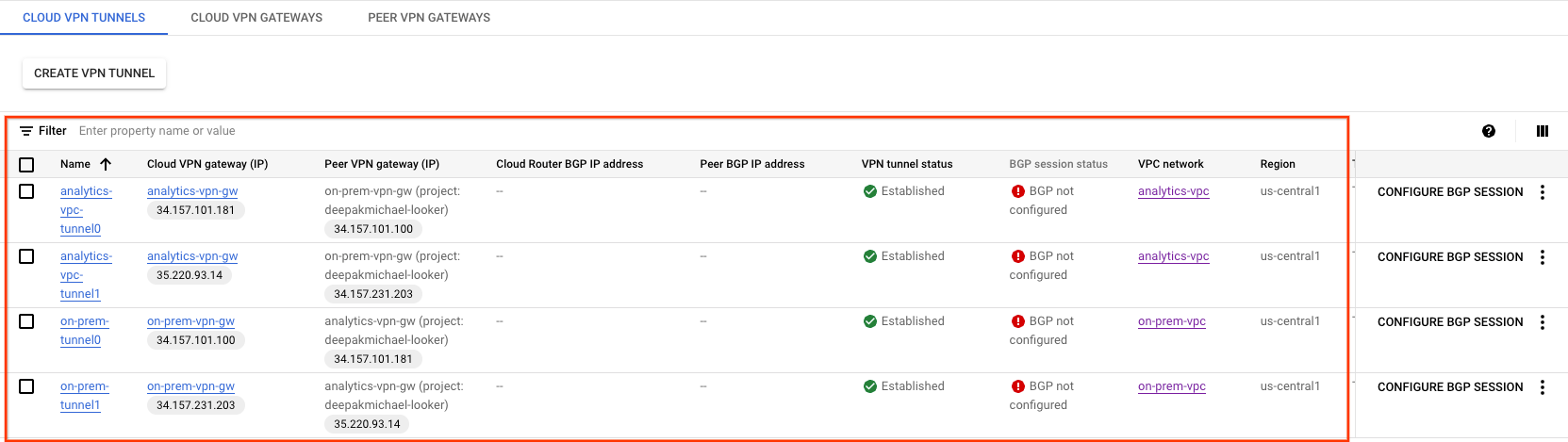

Validate VPN tunnel creation

Using the console, navigate to HYBRID CONNECTIVITY → VPN → CLOUD VPN TUNNELS.

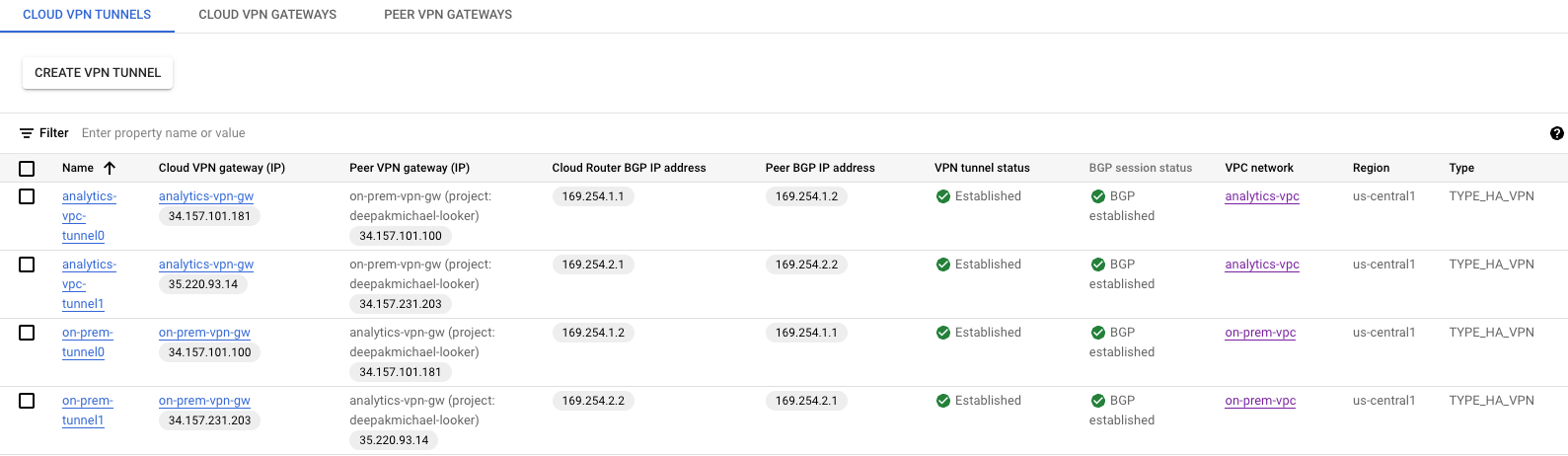
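Tunnel status can also be checked from Cloud Shell. A sketch that prints the name, status, and detailed status of all four tunnels; a status of ESTABLISHED is expected once IKE negotiation with the peer gateway has completed:

```shell
# Print name, status, and detailed status for each tunnel in us-central1.
for t in analytics-vpc-tunnel0 analytics-vpc-tunnel1 on-prem-tunnel0 on-prem-tunnel1; do
  gcloud compute vpn-tunnels describe "$t" \
    --region=us-central1 \
    --format="value(name,status,detailedStatus)"
done
```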

9. Establish BGP neighbors

Create BGP sessions

In this section, you configure Cloud Router interfaces and BGP peers.

Create a BGP interface and peering for analytics-vpc

Inside Cloud Shell, create the BGP interface:

```
gcloud compute routers add-interface analytics-cr-us-central1 \
  --interface-name if-tunnel0-to-onprem \
  --ip-address 169.254.1.1 \
  --mask-length 30 \
  --vpn-tunnel analytics-vpc-tunnel0 \
  --region us-central1
```

Inside Cloud Shell, create the BGP peer:

```
gcloud compute routers add-bgp-peer analytics-cr-us-central1 \
  --peer-name bgp-on-premises-tunnel0 \
  --interface if-tunnel0-to-onprem \
  --peer-ip-address 169.254.1.2 \
  --peer-asn 65002 \
  --region us-central1
```

Inside Cloud Shell, create the BGP interface:

```
gcloud compute routers add-interface analytics-cr-us-central1 \
  --interface-name if-tunnel1-to-onprem \
  --ip-address 169.254.2.1 \
  --mask-length 30 \
  --vpn-tunnel analytics-vpc-tunnel1 \
  --region us-central1
```

Inside Cloud Shell, create the BGP peer:

```
gcloud compute routers add-bgp-peer analytics-cr-us-central1 \
  --peer-name bgp-on-premises-tunnel1 \
  --interface if-tunnel1-to-onprem \
  --peer-ip-address 169.254.2.2 \
  --peer-asn 65002 \
  --region us-central1
```

Create a BGP interface and peering for on-prem-vpc

Inside Cloud Shell, create the BGP interface:

```
gcloud compute routers add-interface on-prem-cr-us-central1 \
  --interface-name if-tunnel0-to-analytics-vpc \
  --ip-address 169.254.1.2 \
  --mask-length 30 \
  --vpn-tunnel on-prem-tunnel0 \
  --region us-central1
```

Inside Cloud Shell, create the BGP peer:

```
gcloud compute routers add-bgp-peer on-prem-cr-us-central1 \
  --peer-name bgp-analytics-vpc-tunnel0 \
  --interface if-tunnel0-to-analytics-vpc \
  --peer-ip-address 169.254.1.1 \
  --peer-asn 65001 \
  --region us-central1
```

Inside Cloud Shell, create the BGP interface:

```
gcloud compute routers add-interface on-prem-cr-us-central1 \
  --interface-name if-tunnel1-to-analytics-vpc \
  --ip-address 169.254.2.2 \
  --mask-length 30 \
  --vpn-tunnel on-prem-tunnel1 \
  --region us-central1
```

Inside Cloud Shell, create the BGP peer:

```
gcloud compute routers add-bgp-peer on-prem-cr-us-central1 \
  --peer-name bgp-analytics-vpc-tunnel1 \
  --interface if-tunnel1-to-analytics-vpc \
  --peer-ip-address 169.254.2.1 \
  --peer-asn 65001 \
  --region us-central1
```

Navigate to HYBRID CONNECTIVITY → VPN to view the VPN tunnel details.

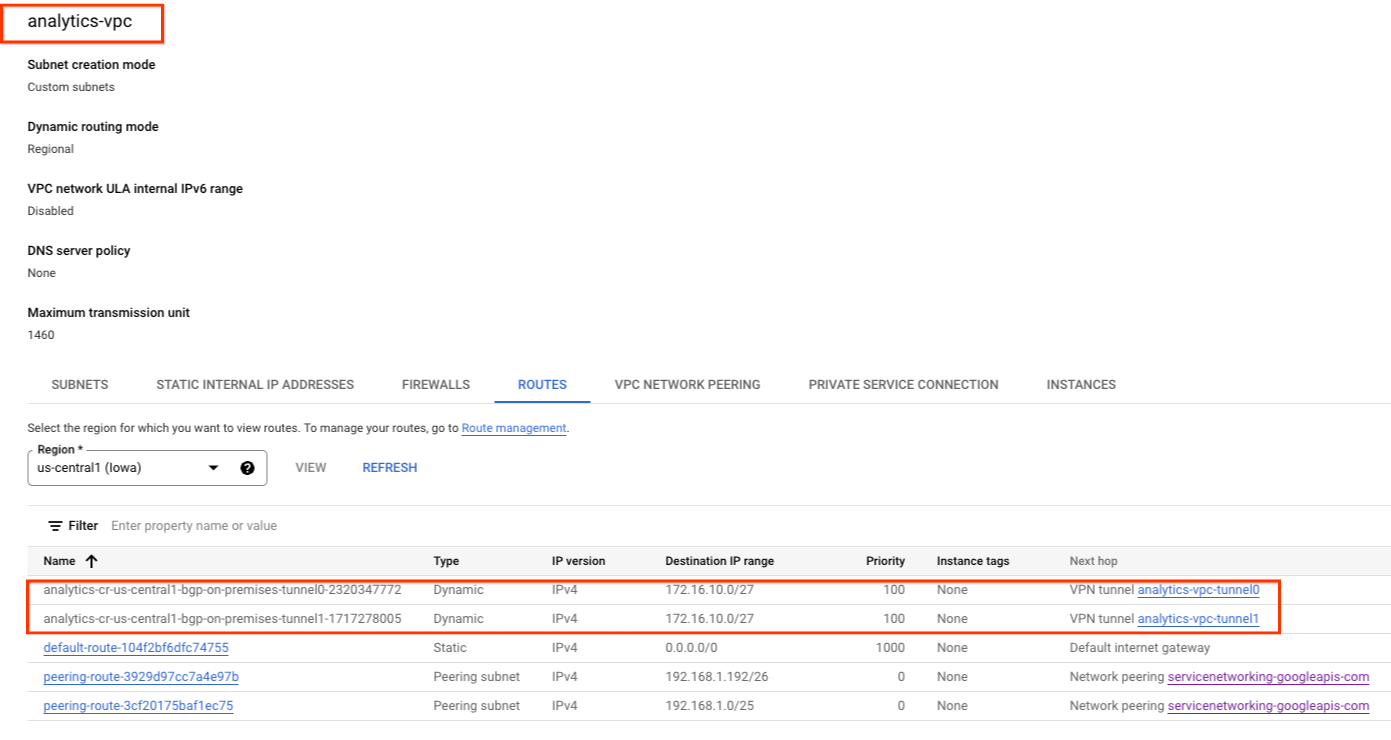
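The same information is available from Cloud Shell through the Cloud Router status. A sketch that prints the BGP peer status and the best routes the analytics-vpc router has learned; once the sessions are up, both peers should report status UP and the routes should include the on-prem-vpc database subnet 172.16.10.0/27:

```shell
# BGP session state and learned routes for the analytics-vpc Cloud Router.
gcloud compute routers get-status analytics-cr-us-central1 \
  --region=us-central1 \
  --format="yaml(result.bgpPeerStatus,result.bestRoutes)"
```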

Validate analytics-vpc learned routes over HA VPN

Because the HA VPN tunnels and BGP sessions are established, the analytics-vpc learns routes from the on-prem-vpc. Using the console, navigate to VPC network → VPC networks → analytics-vpc → ROUTES → REGION → us-central1 → VIEW

Observe that the analytics-vpc has learned the route for the on-prem-vpc database-subnet-us-central1 (172.16.10.0/27).

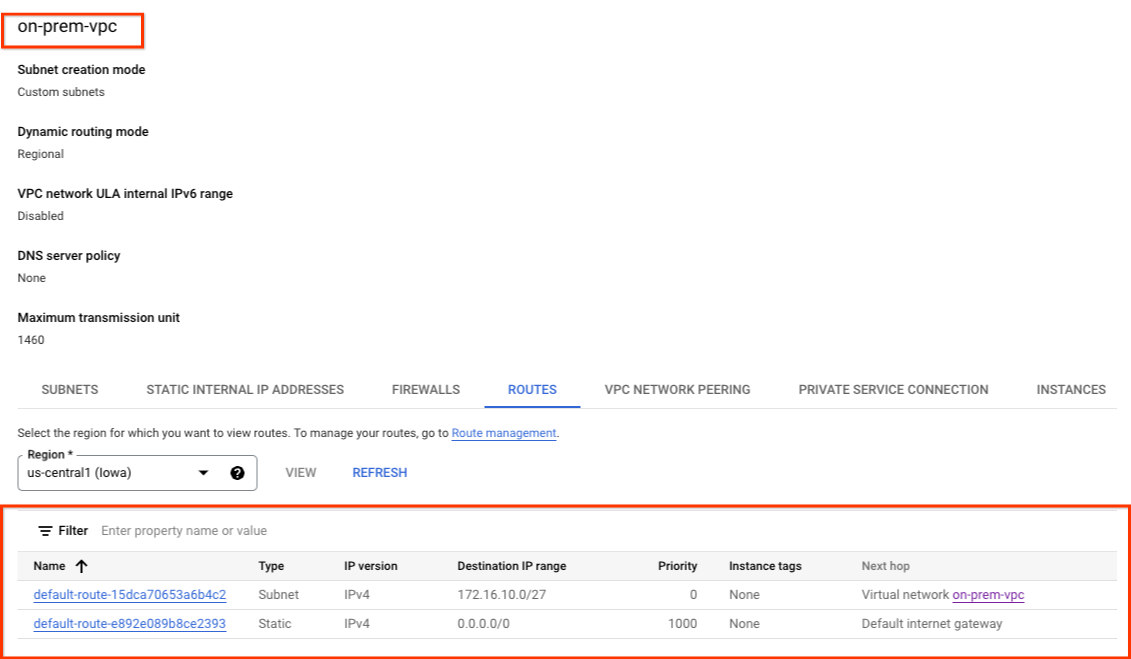

Validate that on-prem-vpc has not learned routes over HA VPN

Because the analytics-vpc does not have any subnets, its Cloud Router does not advertise any subnets to the on-prem-vpc. Using the console, navigate to VPC network → VPC networks → on-prem-vpc → ROUTES → REGION → us-central1 → VIEW

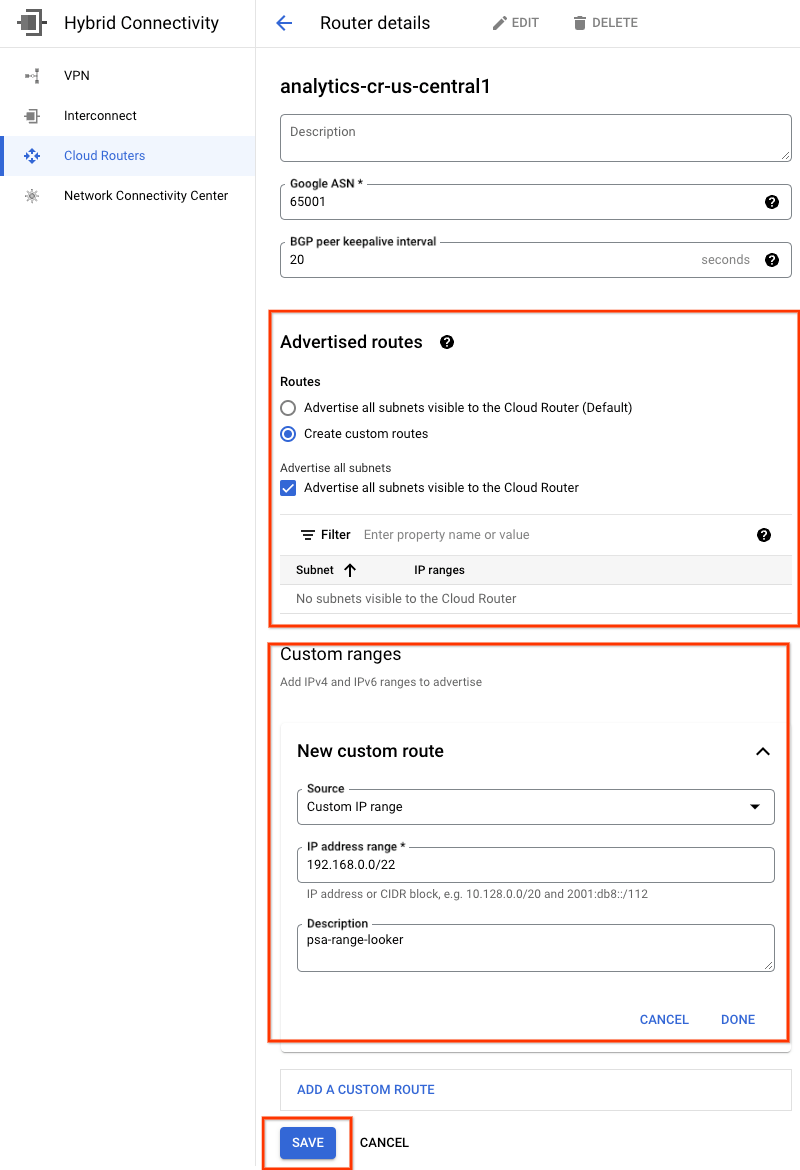

10. Advertise the Looker subnet to on-prem

The Looker Private Service Access (PSA) subnet is not automatically advertised by the analytics-cr-us-central1 cloud router because the subnet is assigned to PSA, not the VPC.

You will need to create a custom route advertisement on analytics-cr-us-central1 for the PSA subnet 192.168.0.0/22 (psa-range-looker). This route is advertised to the on-premises environment and used by workloads to access Looker.

From the console navigate to HYBRID CONNECTIVITY → CLOUD ROUTERS → analytics-cr-us-central1, then select EDIT.

In the section Advertised routes, select the option Create custom routes, update the fields based on the example below, select DONE, and then click SAVE.

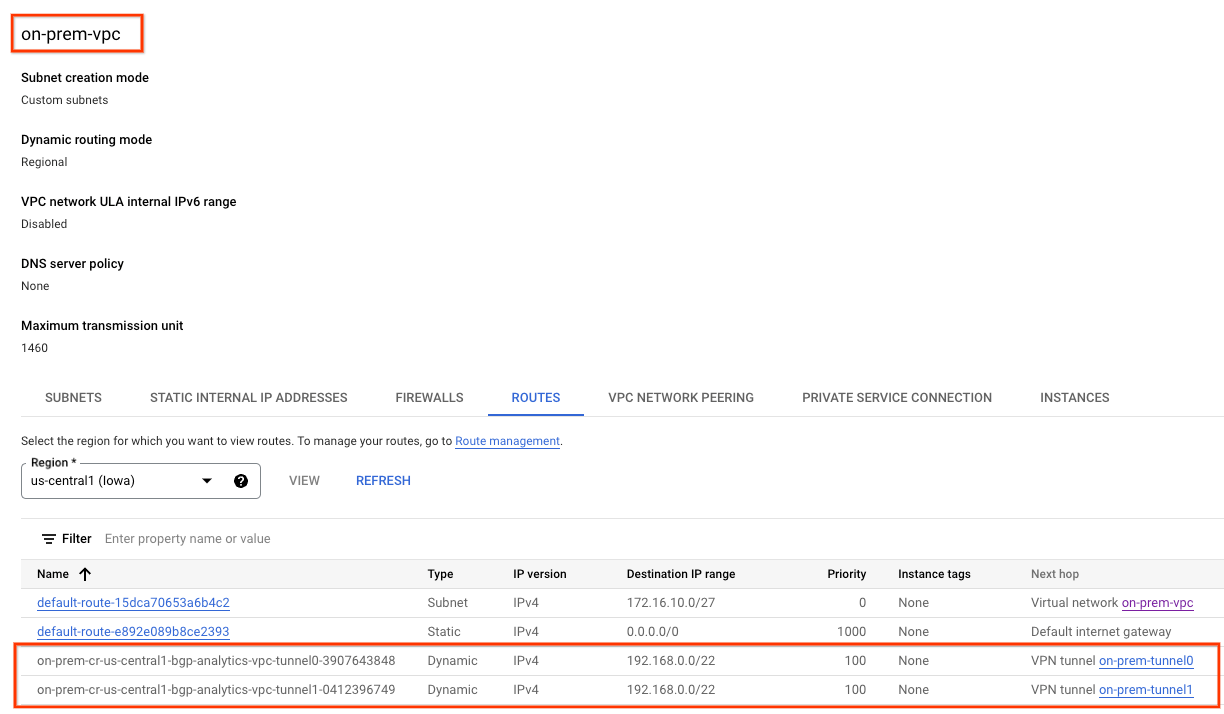
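The same custom advertisement can also be configured from Cloud Shell. This sketch assumes you want to keep advertising the router's own subnets (the all_subnets group) in addition to the Looker PSA range; adjust it if your console configuration differs:

```shell
# Switch the router to custom advertisements: its subnets plus the Looker PSA range.
gcloud compute routers update analytics-cr-us-central1 \
  --region=us-central1 \
  --advertisement-mode=custom \
  --set-advertisement-groups=all_subnets \
  --set-advertisement-ranges=192.168.0.0/22
```

In custom mode, Cloud Router advertises only the groups and ranges you list, so omitting all_subnets would stop subnet advertisements.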

11. Validate that the on-prem-vpc has learned the Looker subnet

The on-prem-vpc will now be able to access the Looker PSA subnet since it has been advertised from the analytics-cr-us-central1 as a custom route advertisement.

Using the console, navigate to VPC NETWORK → VPC NETWORKS → on-prem-vpc → ROUTES → REGION → us-central1 → VIEW

Observe the Looker routes advertised from the analytics-vpc:

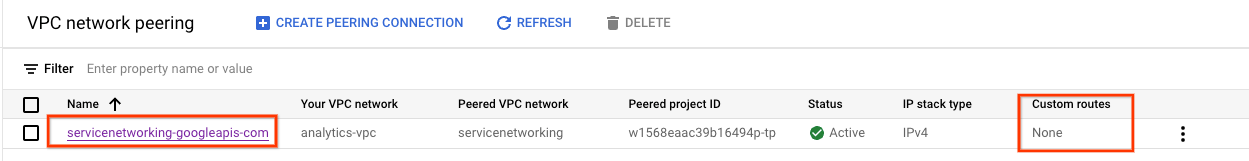
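From Cloud Shell, the on-prem-vpc Cloud Router status should now include 192.168.0.0/22 among its best routes. A sketch:

```shell
# Learned routes on the on-prem-vpc Cloud Router; look for destRange 192.168.0.0/22.
gcloud compute routers get-status on-prem-cr-us-central1 \
  --region=us-central1 \
  --format="yaml(result.bestRoutes)"
```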

12. Validate current VPC Peering

The connection between Looker Cloud Core and the analytics-vpc uses VPC Network Peering, which allows custom routes learned through BGP to be exchanged. In this tutorial, the analytics-vpc needs to publish the routes it learns from the on-prem-vpc to Looker. To enable this, the VPC peering must be updated to export custom routes.

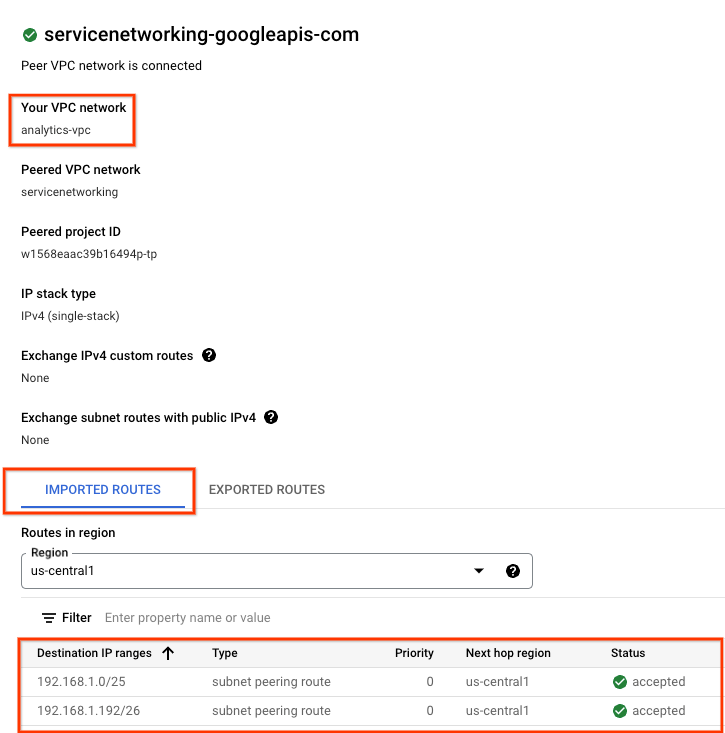

Validate current imported and exported routes.

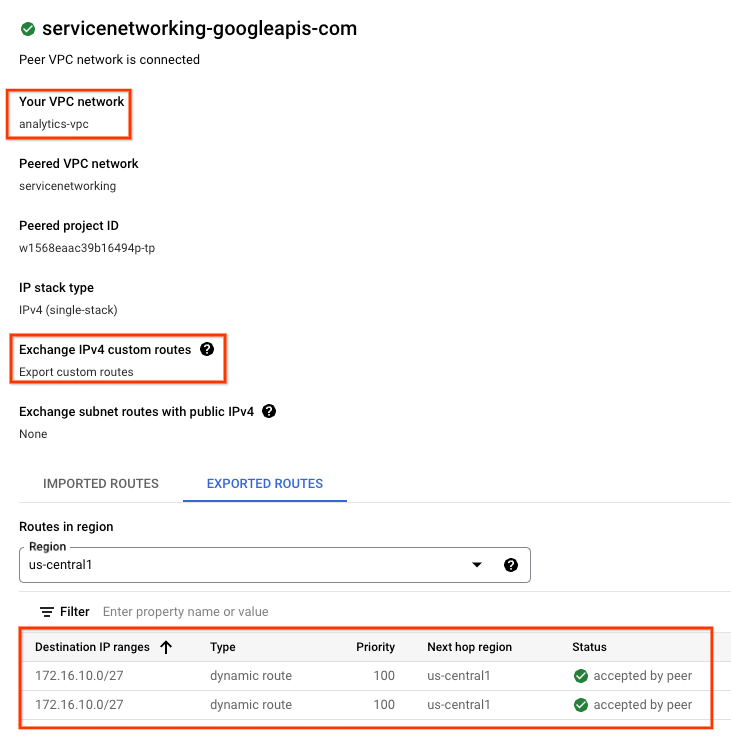

Navigate to VPC NETWORK → VPC NETWORK PEERING → servicenetworking-googleapis-com

The screenshot below shows the analytics-vpc importing the psa-range-looker from the Google-managed peered VPC network, servicenetworking.

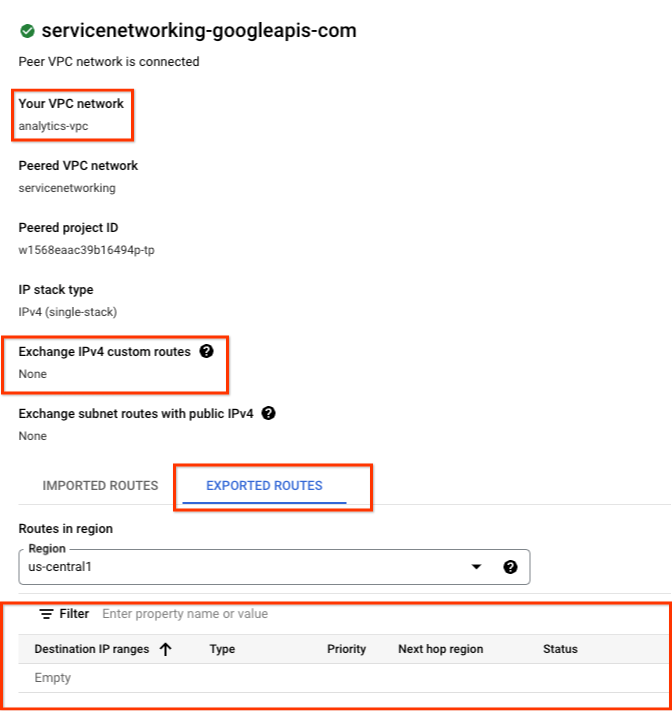

Selecting EXPORTED ROUTES reveals that no routes are exported to the peered VPC network because 1) no subnets are configured in the analytics-vpc and 2) Export custom routes is not selected.

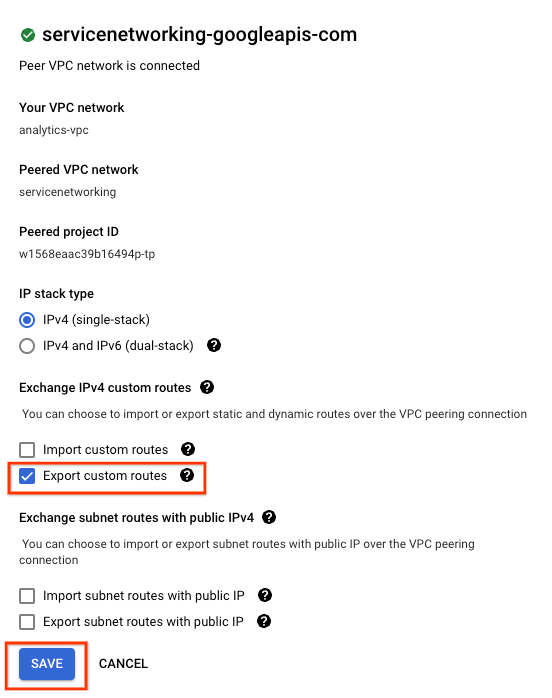
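Imported and exported peering routes can also be listed from Cloud Shell. A sketch, assuming the peering name servicenetworking-googleapis-com shown in the console:

```shell
# Routes imported by analytics-vpc from the service producer network (expect 192.168.0.0/22).
gcloud compute networks peerings list-routes servicenetworking-googleapis-com \
  --network=analytics-vpc --region=us-central1 --direction=INCOMING

# Routes exported by analytics-vpc to the service producer network.
gcloud compute networks peerings list-routes servicenetworking-googleapis-com \
  --network=analytics-vpc --region=us-central1 --direction=OUTGOING
```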

13. Update VPC Peering

Navigate to VPC NETWORK → VPC NETWORK PEERING → servicenetworking-googleapis-com → EDIT

Select EXPORT CUSTOM ROUTES → SAVE
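Equivalently, a sketch of the same change from Cloud Shell, assuming the peering name servicenetworking-googleapis-com:

```shell
# Export custom (BGP-learned) routes from analytics-vpc to the Looker producer network.
gcloud compute networks peerings update servicenetworking-googleapis-com \
  --network=analytics-vpc \
  --export-custom-routes
```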

14. Validate updated VPC Peering

Validate exported routes.

Navigate to VPC NETWORK → VPC NETWORK PEERING → servicenetworking-googleapis-com

Selecting EXPORTED ROUTES shows that the on-prem-vpc routes (database subnet 172.16.10.0/27) are now exported by the analytics-vpc to the peered VPC network hosting Looker.

15. Looker postgres-database creation

In the following section, you will use SSH from Cloud Shell to connect to the postgres-database VM.

Inside Cloud Shell, SSH to the postgres-database instance.

gcloud compute ssh --zone "us-central1-a" "postgres-database" --project "$projectid"

Inside the OS, identify and note the IP address (ens4) of the postgres-database instance.

ip a

Example:

```
user@postgres-database:~$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1460 qdisc mq state UP group default qlen 1000
    link/ether 42:01:ac:10:0a:02 brd ff:ff:ff:ff:ff:ff
    altname enp0s4
    inet 172.16.10.2/32 metric 100 scope global dynamic ens4
       valid_lft 84592sec preferred_lft 84592sec
    inet6 fe80::4001:acff:fe10:a02/64 scope link
       valid_lft forever preferred_lft forever
```

Inside the OS, log in to PostgreSQL.

sudo -u postgres psql postgres

Inside psql, change the password of the postgres user.

\password postgres

When prompted, set the password to postgres (enter the same password twice).

postgres

Example:

```
user@postgres-database:~$ sudo -u postgres psql postgres
psql (13.11 (Debian 13.11-0+deb11u1))
Type "help" for help.

postgres=# \password postgres
Enter new password for user "postgres":
Enter it again:
```

Inside the OS, exit psql.

\q

Example:

```
postgres=# \q
user@postgres-database:~$
```

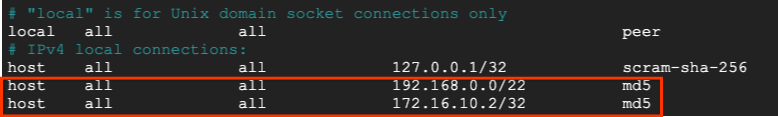

In the following section, you will add your postgres-database instance IP address and the Looker Private Service Access subnet (192.168.0.0/22) to the pg_hba.conf file under IPv4 local connections, per the screenshot below:

sudo nano /etc/postgresql/15/main/pg_hba.conf

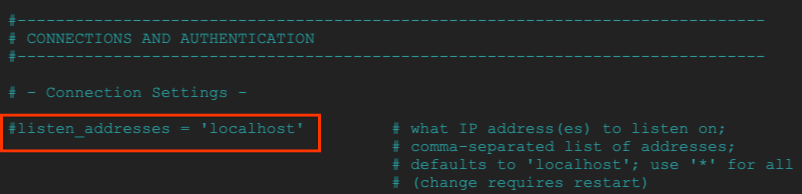

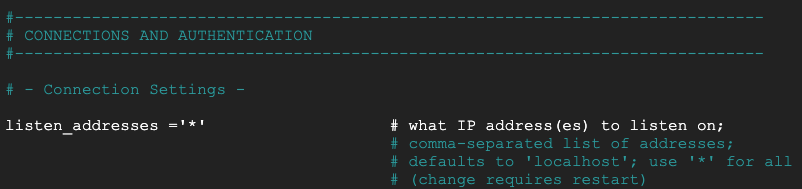
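Because the screenshot contents are not reproduced in text, the helper below is a hedged sketch of what the two entries may look like. It assumes scram-sha-256 password authentication (the PostgreSQL 15 default for newly set passwords) and uses all for the database and user columns; hba_entries is a hypothetical name:

```shell
# Print pg_hba.conf "host" lines for the database VM itself and the Looker PSA range.
# Arguments: DB_IP  PSA_RANGE  [AUTH_METHOD]
hba_entries() {
  local db_ip="$1" psa_range="$2" method="${3:-scram-sha-256}"
  # printf reuses its format string for the second address/method pair.
  printf 'host    all    all    %-18s %s\n' \
    "${db_ip}/32" "$method" \
    "$psa_range" "$method"
}

# Usage on the VM (appends to the file; review the result in nano afterwards):
#   hba_entries 172.16.10.2 192.168.0.0/22 | sudo tee -a /etc/postgresql/15/main/pg_hba.conf
```

Restricting the database and user columns to postgres_looker (created in the next section) would be tighter than all.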

In the following section, edit postgresql.conf: uncomment the listen_addresses setting and set it to '*' so that PostgreSQL listens on all IP addresses.

sudo nano /etc/postgresql/15/main/postgresql.conf

Before:

#listen_addresses = 'localhost'

After:

listen_addresses = '*'
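If you prefer not to edit the file interactively, a sed substitution can make the same change. A minimal sketch; enable_listen_all is a hypothetical helper, and it assumes the file contains a listen_addresses line, commented out (as in the stock `#listen_addresses = 'localhost'`) or not:

```shell
# Replace the listen_addresses line (commented out or not) so PostgreSQL
# listens on all interfaces. Requires GNU sed for -i and -E.
enable_listen_all() {
  local conf="$1"
  sed -i -E "s/^#?[[:space:]]*listen_addresses[[:space:]]*=.*/listen_addresses = '*'/" "$conf"
}

# On the VM the file is owned by postgres, so run the sed command itself with sudo:
#   sudo sed -i -E "s/^#?[[:space:]]*listen_addresses[[:space:]]*=.*/listen_addresses = '*'/" \
#     /etc/postgresql/15/main/postgresql.conf
```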

Inside the OS, restart the postgresql service.

sudo service postgresql restart

Inside the OS, validate the postgresql status as active.

sudo service postgresql status

Example:

```
user@postgres-database$ sudo service postgresql status
● postgresql.service - PostgreSQL RDBMS
     Loaded: loaded (/lib/systemd/system/postgresql.service; enabled; preset: enabled)
     Active: active (exited) since Sat 2023-07-01 23:40:59 UTC; 7s ago
    Process: 4073 ExecStart=/bin/true (code=exited, status=0/SUCCESS)
   Main PID: 4073 (code=exited, status=0/SUCCESS)
        CPU: 2ms

Jul 01 23:40:59 postgres-database systemd[1]: Starting postgresql.service - PostgreSQL RDBMS...
Jul 01 23:40:59 postgres-database systemd[1]: Finished postgresql.service - PostgreSQL RDBMS.
```

16. Create the postgres database

In the following section, you will create a postgres database named postgres_looker and a schema named looker_schema, used to validate Looker to on-premises connectivity.

Inside the OS, log into postgres.

sudo -u postgres psql postgres

Inside the OS, create the database.

create database postgres_looker;

Inside the OS, list the databases.

\l

Inside the OS, create the user postgres_looker with the password postgreslooker.

create user postgres_looker with password 'postgreslooker';

Inside the OS, connect to the database.

\c postgres_looker;

Inside the OS, create the schema looker_schema and the table looker_schema.test, then exit psql.

create schema looker_schema;

create table looker_schema.test(firstname CHAR(15), lastname CHAR(20));

exit

Example:

```
user@postgres-database$ sudo -u postgres psql postgres
psql (15.3 (Ubuntu 15.3-0ubuntu0.23.04.1))
Type "help" for help.

postgres=# create database postgres_looker;
CREATE DATABASE
postgres=# \l
                                                       List of databases
      Name       |  Owner   | Encoding | Collate |  Ctype  | ICU Locale | Locale Provider |   Access privileges
-----------------+----------+----------+---------+---------+------------+-----------------+-----------------------
 postgres        | postgres | UTF8     | C.UTF-8 | C.UTF-8 |            | libc            |
 postgres_looker | postgres | UTF8     | C.UTF-8 | C.UTF-8 |            | libc            |
 template0       | postgres | UTF8     | C.UTF-8 | C.UTF-8 |            | libc            | =c/postgres          +
                 |          |          |         |         |            |                 | postgres=CTc/postgres
 template1       | postgres | UTF8     | C.UTF-8 | C.UTF-8 |            | libc            | =c/postgres          +
                 |          |          |         |         |            |                 | postgres=CTc/postgres
(4 rows)

postgres=# create user postgres_looker with password 'postgreslooker';
CREATE ROLE
postgres=# \c postgres_looker;
You are now connected to database "postgres_looker" as user "postgres".
postgres_looker=# create schema looker_schema;
CREATE SCHEMA
postgres_looker=# create table looker_schema.test(firstname CHAR(15), lastname CHAR(20));
CREATE TABLE
postgres_looker=# exit
```

If you are still at the psql prompt, quit it with \q, then exit the OS to return to Cloud Shell.

\q

exit

17. Create a Firewall in the on-prem-vpc

In the following section, create an Ingress firewall with logging that allows the Looker subnet communication to the postgres-database instance.

From Cloud Shell, create the on-prem-vpc firewall.

gcloud compute --project=$projectid firewall-rules create looker-access-to-postgres --direction=INGRESS --priority=1000 --network=on-prem-vpc --action=ALLOW --rules=all --source-ranges=192.168.0.0/22 --enable-logging

18. Create Private DNS in the analytics-vpc

Although Looker is deployed in a Google-managed VPC, it can resolve records in the analytics-vpc private DNS zone through DNS peering with Service Networking.

In the following section, you will create a private DNS zone in the analytics-vpc containing an A record that maps the postgres-database instance's fully qualified domain name (postgres.analytics.com) to its IP address.

From Cloud Shell, create the private zone analytics.com.

gcloud dns --project=$projectid managed-zones create gcp-private-zone --description="" --dns-name="analytics.com." --visibility="private" --networks="https://www.googleapis.com/compute/v1/projects/$projectid/global/networks/analytics-vpc"

From Cloud Shell, identify the IP Address of the postgres-database instance.

gcloud compute instances describe postgres-database --zone=us-central1-a | grep networkIP:

Example:

user@cloudshell$ gcloud compute instances describe postgres-database --zone=us-central1-a | grep networkIP:

networkIP: 172.16.10.2

From Cloud Shell, create the A record, replacing your-postgres-database-ip with the IP address identified above.

gcloud dns --project=$projectid record-sets create postgres.analytics.com. --zone="gcp-private-zone" --type="A" --ttl="300" --rrdatas="your-postgres-database-ip"

Example:

```
user@cloudshell$ gcloud dns --project=$projectid record-sets create postgres.analytics.com. --zone="gcp-private-zone" --type="A" --ttl="300" --rrdatas="172.16.10.2"
NAME: postgres.analytics.com.
TYPE: A
TTL: 300
DATA: 172.16.10.2
```
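Alternatively, the lookup and record creation can be chained so the address never has to be copied by hand. A sketch that uses a gcloud format projection instead of grep; dbip is a hypothetical variable name:

```shell
# Read the primary internal IP of the database VM's first network interface.
dbip=$(gcloud compute instances describe postgres-database \
  --zone=us-central1-a \
  --format="value(networkInterfaces[0].networkIP)")
echo "$dbip"

# Create the A record for postgres.analytics.com. pointing at that address.
gcloud dns --project=$projectid record-sets create postgres.analytics.com. \
  --zone="gcp-private-zone" --type="A" --ttl="300" --rrdatas="$dbip"
```

Run either this or the single-record command, not both; a second create for the same name and type is expected to fail because the record set already exists.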

From Cloud Shell, peer the DNS suffix analytics.com. with Service Networking, which allows Looker to resolve records in the analytics-vpc private zone.

gcloud services peered-dns-domains create looker-com --network=analytics-vpc --service=servicenetworking.googleapis.com --dns-suffix=analytics.com.
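To confirm that the DNS peering exists, a sketch that lists the peered DNS domains on analytics-vpc; looker-com with the suffix analytics.com. should appear:

```shell
# List DNS suffixes peered from analytics-vpc to the service producer network.
gcloud services peered-dns-domains list \
  --network=analytics-vpc \
  --service=servicenetworking.googleapis.com
```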

19. Integrate Looker with the Postgres postgres-database

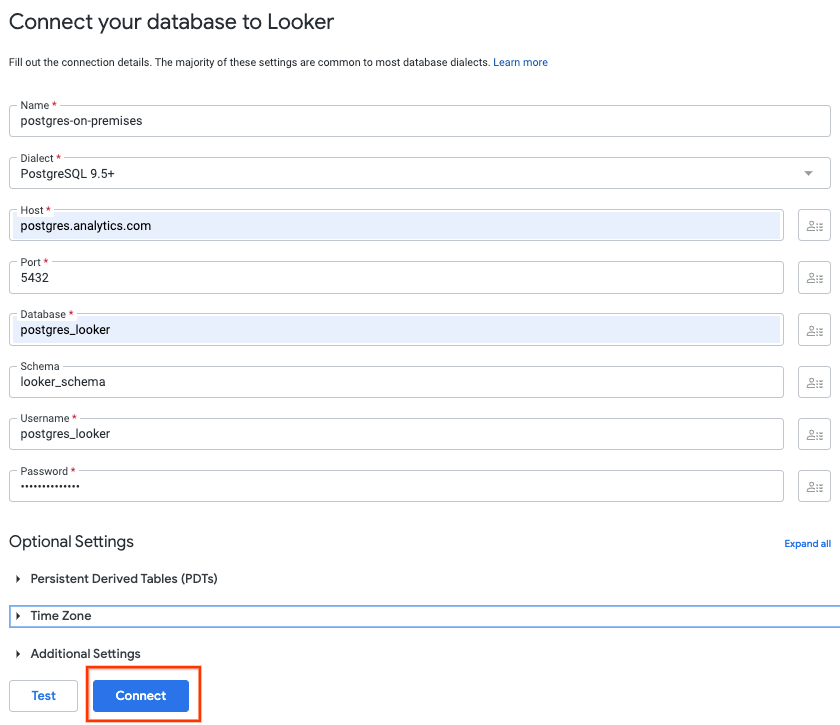

In the following section you will use Cloud Console to create a Database connection to the on-premises postgres-database instance.

In Cloud Console, navigate to Looker and select your instance url that will open the Looker UI.

Once launched you will be presented with the landing page per the screenshot below.

Navigate to ADMIN → DATABASE → CONNECTIONS → Select ADD CONNECTION

Fill out the connection details per the screenshot below, select CONNECT

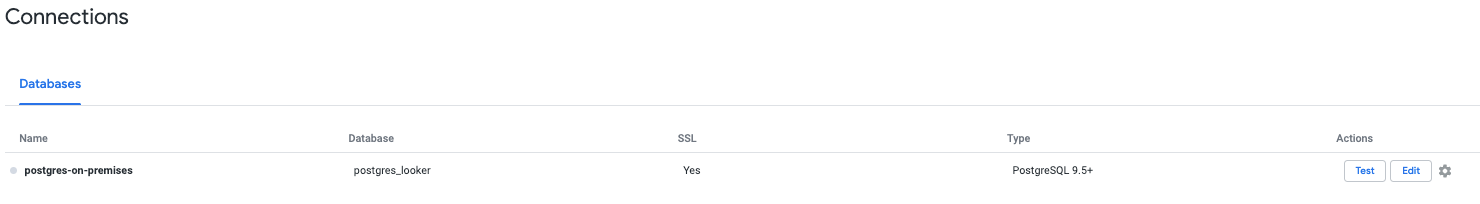

The connection is now successful

20. Validate Looker connectivity

In the following section, you will learn how to validate Looker connectivity to the postgres-database in the on-prem-vpc using the Looker 'Test' action and tcpdump.

From Cloud Shell, log into the postgres-database if the session has timed out.

Inside Cloud Shell, perform the following:

```
gcloud config list project
gcloud config set project [YOUR-PROJECT-NAME]
projectid=YOUR-PROJECT-NAME
echo $projectid
gcloud compute ssh --zone "us-central1-a" "postgres-database" --project "$projectid"
```

From the OS, create a TCPDUMP filter with the psa-range-looker subnet 192.168.0.0/22

sudo tcpdump -i any net 192.168.0.0/22 -nn

Navigate to ADMIN → DATABASE → CONNECTIONS, select your connection, and then select Test.

Once Test is selected, Looker should successfully connect to the postgres-database.

Navigate back to the OS terminal and validate that tcpdump shows traffic from the psa-range-looker range to the on-premises postgres-database instance.

Note: Looker can connect from any IP address in the PSA range (192.168.0.0/22), so the source address in your capture will likely differ from the example below.

```
user@postgres-database$ sudo tcpdump -i any net 192.168.0.0/22 -nn
tcpdump: data link type LINUX_SLL2
tcpdump: verbose output suppressed, use -v[v]... for full protocol decode
listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes
00:16:55.121631 ens4 In IP 192.168.1.24.46892 > 172.16.10.2.5432: Flags [S], seq 2221858189, win 42600, options [mss 1366,sackOK,TS val 4045928414 ecr 0,nop,wscale 7], length 0
00:16:55.121683 ens4 Out IP 172.16.10.2.5432 > 192.168.1.24.46892: Flags [S.], seq 1464964586, ack 2221858190, win 64768, options [mss 1420,sackOK,TS val 368503074 ecr 4045928414,nop,wscale 7], length 0
```
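In the capture, Flags [S] is the TCP SYN sent from a Looker address in 192.168.0.0/22 to PostgreSQL port 5432, and Flags [S.] is the SYN-ACK returned by the database, which shows the handshake crossing the HA VPN in both directions. If other traffic makes the output noisy, a narrower filter limited to the PostgreSQL port can help; a sketch, assuming the default port 5432:

```shell
# Capture only PostgreSQL traffic to or from the Looker PSA range.
sudo tcpdump -i any -nn 'net 192.168.0.0/22 and tcp port 5432'
```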

21. Security Recommendations

There are a few security recommendations and best practices related to securing Looker and the Postgres database. These include:

- Setting up least-privilege database account permissions for Looker that still allow it to perform the needed functions.

- Data in transit between clients and the Looker UI is encrypted using TLS 1.2+. Traffic between Looker and the database can also be encrypted with TLS when SSL is enabled on the database connection and supported by the database.

- Data at rest is encrypted by default. Customers can also use customer-managed encryption keys (CMEK) for Looker instances (https://cloud.google.com/looker/docs/looker-core-cmek) and for Cloud SQL for PostgreSQL (https://cloud.google.com/sql/docs/postgres/configure-cmek).

- Looker access control: Looker administrators can control what a principal or group of users can see and do in Looker by granting content access, data access, and feature access. These options allow Looker admins to define roles, each combining a model set and a permission set, to create fine-grained access control to data.

- Looker supports both Audit Logs and Data Access logs, which capture who did what, where, and when. Audit Logs are enabled by default, while Data Access logs need to be explicitly enabled.

- VPC Service Controls (VPC-SC) currently supports Enterprise and Embed instances that are configured with private IP only.
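As an illustration of the least-privilege recommendation, the sketch below grants the tutorial's postgres_looker user read-only access to looker_schema. It is an assumption-laden example rather than a complete Looker permission model: features such as persistent derived tables typically need write access to a separate scratch schema, which is not covered here.

```shell
# Run on the postgres-database VM as the postgres superuser.
sudo -u postgres psql -d postgres_looker <<'SQL'
GRANT CONNECT ON DATABASE postgres_looker TO postgres_looker;
GRANT USAGE ON SCHEMA looker_schema TO postgres_looker;
GRANT SELECT ON ALL TABLES IN SCHEMA looker_schema TO postgres_looker;
-- Also grant SELECT on tables created later in looker_schema by the role running this.
ALTER DEFAULT PRIVILEGES IN SCHEMA looker_schema GRANT SELECT ON TABLES TO postgres_looker;
SQL
```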

22. Clean up

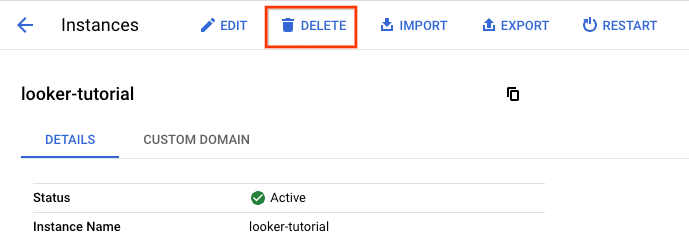

To delete the Looker Cloud Core instance, navigate to:

LOOKER → looker-tutorial → DELETE

From Cloud Shell, delete tutorial components.

```
gcloud compute vpn-tunnels delete analytics-vpc-tunnel0 analytics-vpc-tunnel1 on-prem-tunnel0 on-prem-tunnel1 --region=us-central1 --quiet
gcloud compute vpn-gateways delete analytics-vpn-gw on-prem-vpn-gw --region=us-central1 --quiet
gcloud compute routers delete analytics-cr-us-central1 on-prem-cr-us-central1 on-prem-cr-us-central1-nat --region=us-central1 --quiet
gcloud compute instances delete postgres-database --zone=us-central1-a --quiet
gcloud compute networks subnets delete database-subnet-us-central1 --region=us-central1 --quiet
gcloud compute firewall-rules delete looker-access-to-postgres on-prem-ssh --quiet
gcloud services peered-dns-domains delete looker-com --network=analytics-vpc --quiet
gcloud dns record-sets delete postgres.analytics.com. --type=A --zone=gcp-private-zone
gcloud dns managed-zones delete gcp-private-zone
gcloud compute networks delete on-prem-vpc --quiet
gcloud compute addresses delete psa-range-looker --global --quiet
gcloud compute networks delete analytics-vpc --quiet
```

23. Congratulations

Congratulations, you've successfully configured and validated Looker connectivity over hybrid networking, enabling data communication across on-premises and multicloud environments.

You were also able to successfully test Looker Cloud Core connectivity to the postgres-database using Looker's connection 'Test' tool and tcpdump in the postgres-database instance.

Cosmopup thinks tutorials are awesome!!

Further reading & Videos

- Introducing the next evolution of Looker

- Migrating to GCP? First Things First: VPCs

- Dynamic routing with Cloud Router