1. Introduction

Overview

In this lab, you mitigate common threats to an AI development environment's infrastructure. You implement security controls designed to protect the core components of this environment.

Context

You are the security champion in your development team, and your goal is to build an environment that balances minimal friction of use with protection against common threats.

The following table lists the threats you are most concerned with mitigating. Each threat is addressed by a specific task within this lab:

| Threat | Mitigation | Task Covered |
| --- | --- | --- |
| Unauthorized ingress to the network through exploited open ports. | Create a private VPC and limit Vertex AI access to a single user proxied through Google Cloud instead of a public IP address. | Configure a Secure Network Foundation |
| Privilege escalation from a compromised compute instance using over-privileged credentials. | Create and assign a least-privilege service account to the Vertex AI instance. | Deploy a Secure Vertex AI Workbench Instance |
| Instance takeover of the compute resource, leading to system tampering. | Harden the instance by disabling root access and enabling Secure Boot. | Deploy a Secure Vertex AI Workbench Instance |
| Accidental public exposure of training data and models due to storage misconfiguration. | Enforce public access prevention on the bucket and use uniform bucket-level access controls. | Deploy a Secure Cloud Storage Bucket |
| Malicious or accidental deletion or tampering of datasets and model artifacts. | Enable object versioning for recovery and enable data access logs for an immutable audit trail of all activity. | Deploy a Secure Cloud Storage Bucket |

Quick reference

Throughout this lab, you'll be working with these named resources:

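| Component | Name |
| --- | --- |
| VPC Name | genai-secure-vpc |
| Subnet Name | genai-subnet-us-central1 |
| Cloud Router | genai-router-us-central1 |
| Cloud NAT | genai-nat-us-central1 |
| Service Account | vertex-workbench-sa |
| Vertex AI Instance | secure-genai-instance |
| Storage Bucket | secure-genai-artifacts-[PROJECT_ID] |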

What you'll learn

In this lab, you learn how to:

- Provision a secure VPC with private networking to mitigate unsolicited traffic.

- Harden a Vertex AI Workbench instance against bootkits and privilege escalation.

- Secure a Cloud Storage bucket to mitigate unmonitored data transfer and accidental public exposure.

2. Project setup

Google Account

If you don't already have a personal Google Account, you must create a Google Account.

Use a personal account instead of a work or school account.

Sign in to the Google Cloud Console

Sign in to the Google Cloud Console using a personal Google Account.

Enable Billing

Redeem $5 Google Cloud credits (optional)

To run this workshop, you need a Billing Account with some credit. If you are planning to use your own billing, you can skip this step.

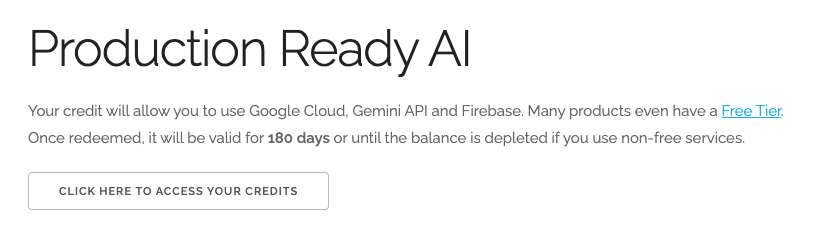

- Click this link and sign in with a personal google account. You will see something like this:

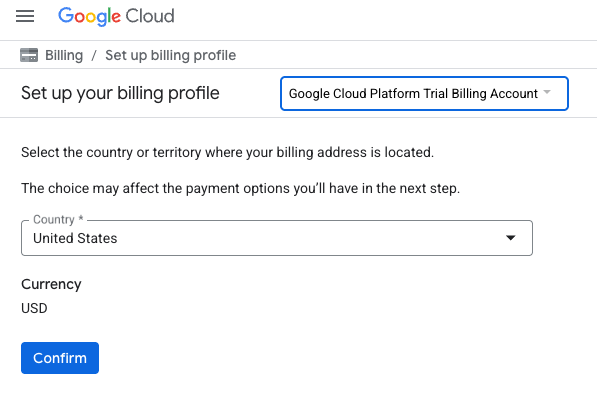

- Click the CLICK HERE TO ACCESS YOUR CREDITS button. This will bring you to a page to set up your billing profile

- Click Confirm

You are now connected to a Google Cloud Platform Trial Billing Account.

Set up a personal billing account

If you set up billing using Google Cloud credits, you can skip this step.

To set up a personal billing account, go here to enable billing in the Cloud Console.

Some Notes:

- Completing this lab should cost less than $1 USD in Cloud resources.

- You can follow the steps at the end of this lab to delete resources to avoid further charges.

- New users are eligible for the $300 USD Free Trial.

Create a project (optional)

If you do not have a current project you'd like to use for this lab, create a new project here.

3. Enable the APIs

Configure Cloud Shell

Once your project is created successfully, do the following steps to set up Cloud Shell.

Launch Cloud Shell

Navigate to shell.cloud.google.com and if you see a popup asking you to authorize, click on Authorize.

Set Project ID

Execute the following command in the Cloud Shell terminal to set the correct Project ID. Replace <your-project-id> with your actual Project ID copied from the project creation step above.

gcloud config set project <your-project-id>

You should now see that the correct project is selected within the Cloud Shell terminal.

Enable Vertex AI Workbench and Cloud Storage

To use the services in this lab, you need to enable the Compute Engine, Vertex AI Workbench (Notebooks), Vertex AI, IAM, and Cloud Storage APIs in your Google Cloud project.

- In the terminal, enable the APIs:

gcloud services enable compute.googleapis.com notebooks.googleapis.com aiplatform.googleapis.com iam.googleapis.com storage.googleapis.com

Alternatively, you can enable these APIs by navigating to their respective pages in the console and clicking Enable.

4. Configure a secure network foundation

In this task, you create an isolated network environment for all your services. By using a private network and controlling data flows, you build a multi-layered defense to significantly reduce the attack surface of your infrastructure compared to publicly reachable resources. This secure network foundation is crucial for protecting your AI applications from unauthorized access.

Create the VPC and subnet

In this step, you set up a Virtual Private Cloud (VPC) and a subnet. This creates an isolated network environment, which is the first line of defense against unauthorized network ingress.

- In the Google Cloud Console, navigate to VPC Network > VPC networks. Use the search bar at the top of the Google Cloud Console to search for "VPC networks" and select it.

- Click Create VPC network.

- For Name, enter genai-secure-vpc.

- For Subnet creation mode, select Custom.

- Under New subnet, specify the following properties to create your subnet:

| Property | Value (type or select) |
| --- | --- |
| Name | genai-subnet-us-central1 |
| Region | us-central1 |
| IP address range | 10.0.1.0/24 |
| Private Google Access | On |

- Click Create.
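For reference, the same VPC and subnet can be sketched with the gcloud CLI in Cloud Shell. This is a minimal sketch and not part of the lab's console flow. The `run` helper and the `DRY_RUN` variable are illustrative additions: by default the script only prints the commands so you can review them, and it runs them only when `DRY_RUN=0` is set.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

VPC_NAME="genai-secure-vpc"
SUBNET_NAME="genai-subnet-us-central1"
REGION="us-central1"
SUBNET_RANGE="10.0.1.0/24"

# Custom subnet mode: no subnets are created automatically.
run gcloud compute networks create "$VPC_NAME" --subnet-mode=custom &&
# Private Google Access lets instances without external IPs reach Google APIs.
run gcloud compute networks subnets create "$SUBNET_NAME" \
  --network="$VPC_NAME" \
  --region="$REGION" \
  --range="$SUBNET_RANGE" \
  --enable-private-ip-google-access
```

If you already created the network in the console, running this with `DRY_RUN=0` would fail because the names are taken. Use one path or the other.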

Create the Cloud NAT gateway

The Cloud NAT gateway allows your private instances to initiate outbound connections (e.g., for software updates) without having a public IP address, meaning the public internet cannot initiate connections to them.

- First, create a Cloud Router. Use the search bar at the top of the Google Cloud Console to search for "Cloud Router" and select it.

- Click Create router.

- Configure the Cloud Router with the following:

| Property | Value (type or select) |
| --- | --- |
| Name | genai-router-us-central1 |
| Network | genai-secure-vpc (the VPC network you just created) |
| Region | us-central1 |

- Click Create.

- Next, navigate to Network Services > Cloud NAT. Use the search bar at the top of the Google Cloud Console to search for "Cloud NAT" and select it.

- Click Get started.

- Configure the Cloud NAT gateway with the following:

| Property | Value (type or select) |
| --- | --- |
| Gateway name | genai-nat-us-central1 |
| VPC network | genai-secure-vpc (the VPC network you created) |
| Region | us-central1 |
| Cloud Router | genai-router-us-central1 (the router you just set up) |

- Click Create.
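The router and NAT gateway steps above could also be expressed with the gcloud CLI, roughly as follows. This is a sketch under the assumption that the gateway should cover all subnet ranges and use automatically allocated NAT IP addresses. The `run` helper and `DRY_RUN` variable are illustrative and only print the commands unless `DRY_RUN=0` is set.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

VPC_NAME="genai-secure-vpc"
REGION="us-central1"
ROUTER_NAME="genai-router-us-central1"
NAT_NAME="genai-nat-us-central1"

# The Cloud Router hosts the NAT configuration for this region.
run gcloud compute routers create "$ROUTER_NAME" \
  --network="$VPC_NAME" \
  --region="$REGION" &&
# Outbound-only NAT for every subnet range in the region (assumed settings).
run gcloud compute routers nats create "$NAT_NAME" \
  --router="$ROUTER_NAME" \
  --region="$REGION" \
  --auto-allocate-nat-external-ips \
  --nat-all-subnet-ip-ranges
```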

5. Deploy a secure Vertex AI Workbench instance

Now that you have a secure network foundation, you deploy a hardened Vertex AI Workbench instance inside your secure VPC. This Workbench instance serves as your development environment, providing a secure and isolated space for your AI development work. The previous network configuration ensures that this instance is not exposed directly to the public internet, building upon the multi-layered defense.

Create a least-privilege service account

Creating a dedicated service account with the fewest permissions necessary supports the principle of least privilege. If your instance is ever compromised, this practice limits the "blast radius" by ensuring the instance can only access the resources and perform the actions explicitly required for its function.

- From the Google Cloud Console, navigate to IAM & Admin > Service Accounts. Use the search bar at the top of the Google Cloud Console to search for "Service Accounts" and select it.

- Click Create service account.

- For Service account name, enter vertex-workbench-sa.

- Click Create and continue.

- Grant the following roles:

- Vertex AI User

- Storage Object Creator

- Click Done.
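As a rough CLI equivalent of the steps above, the service account and its two role bindings might look like this. The role IDs `roles/aiplatform.user` and `roles/storage.objectCreator` correspond to Vertex AI User and Storage Object Creator. The `your-project-id` placeholder, the display name, and the `run` dry-run helper are assumptions for illustration.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

PROJECT_ID="${PROJECT_ID:-your-project-id}"   # placeholder: set to your project
SA_NAME="vertex-workbench-sa"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

run gcloud iam service-accounts create "$SA_NAME" \
  --project="$PROJECT_ID" \
  --display-name="Vertex AI Workbench least-privilege account" &&
# Grant only the two roles the lab calls for.
for role in roles/aiplatform.user roles/storage.objectCreator; do
  run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
    --member="serviceAccount:${SA_EMAIL}" \
    --role="$role" \
    --condition=None
done
```

These bindings are project-wide. In a real project you might scope the storage role to a single bucket instead.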

Create the workbench instance

In this step, you deploy your Vertex AI Workbench instance. This instance is configured to run within your previously created private VPC, further isolating it from the public internet. You also apply additional security hardening measures directly to the instance.

- From the Google Cloud Console navigation menu (hamburger menu), navigate to Vertex AI > Workbench. Use the search bar at the top of the Google Cloud Console to search for "Workbench" and select the result with "Vertex AI" as the subtitle.

- Click Create New and configure:

| Property | Value (type or select) |
| --- | --- |
| Name | secure-genai-instance |
| Region | us-central1 |

- Click Advanced options.

- Click Machine type and select the checkboxes for the following:

- Secure Boot

- Virtual Trusted Platform Module (vTPM)

- Integrity Monitoring.

- Click Networking and configure the following:

| Property | Value (type or select) |
| --- | --- |
| Network | genai-secure-vpc |
| Subnetwork | genai-subnet-us-central1 (10.0.1.0/24) |
| Assign external IP address | Deselect, since you only access this instance through a proxy in Google Cloud. |

- Click IAM and security and configure the following:

| Property | Value (type or select) |
| --- | --- |
| User email | Select Single user, then select the email address you are currently signed in to Google Cloud with. |
| Use default Compute Engine service account | Deselect the checkbox. |
| Service account email | Enter the email created for the least-privilege service account (replace [PROJECT_ID] with your actual ID): vertex-workbench-sa@[PROJECT_ID].iam.gserviceaccount.com |
| Root access to the instance | Deselect the checkbox. |

- Click Create.
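The console configuration above might translate to a single gcloud command along these lines. Treat this as an unverified sketch: the zone `us-central1-a`, the owner email, and the `notebook-disable-root` metadata key (used here to disable root access) are assumptions, so check them against `gcloud workbench instances create --help` before relying on them. The `run` helper only prints the command unless `DRY_RUN=0` is set.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

PROJECT_ID="${PROJECT_ID:-your-project-id}"      # placeholder: set to your project
ZONE="${ZONE:-us-central1-a}"                    # assumed zone within us-central1
OWNER_EMAIL="${OWNER_EMAIL:-you@example.com}"    # hypothetical: your sign-in email
SA_EMAIL="vertex-workbench-sa@${PROJECT_ID}.iam.gserviceaccount.com"

run gcloud workbench instances create secure-genai-instance \
  --project="$PROJECT_ID" \
  --location="$ZONE" \
  --network=genai-secure-vpc \
  --subnet=genai-subnet-us-central1 \
  --subnet-region=us-central1 \
  --disable-public-ip \
  --shielded-secure-boot=true \
  --shielded-vtpm=true \
  --shielded-integrity-monitoring=true \
  --service-account-email="$SA_EMAIL" \
  --instance-owners="$OWNER_EMAIL" \
  --metadata=notebook-disable-root=true
```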

Access your Vertex AI instance

Now that your Vertex AI Workbench instance is provisioned, you can access it securely. You connect to it through Google Cloud's proxy, ensuring that the instance remains private and not exposed to the public internet, thus limiting the risk of unsolicited and potentially malicious traffic.

- Navigate to Vertex AI > Workbench. You may already be on this page if your instance just finished provisioning. If not, you can use the Google Cloud Console navigation menu (hamburger menu) or search bar to get there.

- Find your instance named secure-genai-instance in the list.

- To the right of your instance, click the Open JupyterLab link.

This opens a new tab in your browser, giving you access to your instance. You have access to it through Google Cloud, but the instance is not exposed to the public internet, limiting the risk of unsolicited and potentially malicious traffic.
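To spot-check the hardening from Cloud Shell, you could inspect the underlying virtual machine. The sketch below assumes the Workbench instance is backed by a Compute Engine VM of the same name in your project, and that it was placed in zone `us-central1-a`. Adjust the zone if the console chose a different one. The `run` helper only prints the command unless `DRY_RUN=0` is set.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

INSTANCE="secure-genai-instance"
ZONE="${ZONE:-us-central1-a}"   # assumed zone; adjust to match your instance

# Show external access configs, Shielded VM settings, and the attached service account.
run gcloud compute instances describe "$INSTANCE" \
  --zone="$ZONE" \
  --format="yaml(networkInterfaces[].accessConfigs,shieldedInstanceConfig,serviceAccounts[].email)"
```

If the instance has no external IP address, the output should contain no `accessConfigs` entries, and the service account email should be the least-privilege account rather than the default Compute Engine account.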

6. Deploy a secure Cloud Storage bucket

Now, you create a secure Cloud Storage bucket for your datasets. This is where your AI training data, models, and artifacts are stored. By applying strong security configurations to this bucket, you prevent accidental public exposure of sensitive data and protect against malicious or accidental deletion and tampering. This ensures the integrity and confidentiality of your valuable AI assets.

Create and configure the bucket

In this step, you create your Cloud Storage bucket and apply initial security settings. These settings enforce public access prevention and enable uniform bucket-level access controls, which are crucial for maintaining control over who can access your data.

- From the Google Cloud Console, navigate to Cloud Storage > Buckets. Use the search bar at the top of the Google Cloud Console to search for "Buckets" and select it.

- Click Create.

- In Get Started, set the Name to secure-genai-artifacts-[PROJECT_ID], replacing [PROJECT_ID] with your actual Google Cloud project ID.

- Continue to Choose where to store your data and configure the following:

| Property | Value (type or select) |
| --- | --- |
| Location type | Region |
| Region | us-central1 |

- Continue to Choose how to store your data and keep the default settings.

- Continue to Choose how to control access to objects and keep the default settings:

| Property | Value (type or select) | Reason |
| --- | --- | --- |
| Prevent public access | Keep Enforce public access prevention selected. | Public access prevention overrides any changes to IAM that might inadvertently make an object exposed to the internet. |
| Access control | Keep Uniform selected. | Although ACLs seem like the better option for fine-grained control, hence least privilege, they introduce complexity and unpredictability when combined with other IAM features. Consider the tradeoffs and advantages of uniform bucket-level access for preventing unintended data exposure. |

- Continue to Choose how to protect object data and configure the following:

| Property | Value (type or select) | Reason |
| --- | --- | --- |
| Soft delete policy (For data recovery) | Keep Soft delete policy (For data recovery) selected. | In case of either accidental or malicious deletions in the bucket, soft delete allows you to restore contents within the retention window. |
| Object versioning (For version control) | Select Object versioning (For version control). | Object versioning allows for recovery from accidental or malicious overwrites, such as if an attacker were to replace a benign file with one containing exploit code. |
| Max. number of versions per object | 3 | While this number is arbitrary and can increase cost, it has to be greater than 1 for you to roll back to a previous version. If an attacker could attempt multiple overwrites, a more robust versioning and backup strategy may be necessary. |
| Expire noncurrent versions after | 7 days | 7 days is the recommended amount of time to keep older versions, but you can increase this number for longer-term storage. |

- Click Create.

- If a popup appears that reads "Public access will be prevented", keep the default box ("Enforce public access prevention on this bucket") selected and click Confirm.
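A CLI sketch of the core bucket settings is shown below: regional location, uniform bucket-level access, public access prevention, and object versioning. It does not reproduce the version-count or noncurrent-version expiry settings from the console, and it leaves soft delete at its default. The `your-project-id` placeholder and the `run` dry-run helper are illustrative assumptions.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

PROJECT_ID="${PROJECT_ID:-your-project-id}"   # placeholder: set to your project
BUCKET="gs://secure-genai-artifacts-${PROJECT_ID}"

# Create the bucket with public access prevention and uniform access enforced.
run gcloud storage buckets create "$BUCKET" \
  --project="$PROJECT_ID" \
  --location=us-central1 \
  --uniform-bucket-level-access \
  --public-access-prevention &&
# Keep noncurrent versions so overwrites and deletions can be rolled back.
run gcloud storage buckets update "$BUCKET" --versioning
```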

Enable data protection and logging

To make activity on your data auditable, you configure data access logs for your bucket. Object versioning, which you enabled when creating the bucket, allows for recovery from accidental deletions or overwrites, while data access logs provide a comprehensive audit trail of all activity on your bucket, essential for security monitoring and compliance.

- From the Google Cloud Console, navigate to IAM & Admin > Audit Logs. Use the search bar at the top of the Google Cloud Console to search for "Audit Logs" and select it.

- Use the filter search to find and select Google Cloud Storage.

- In the panel that appears, check the boxes for both Data Read and Data Write.

- Click Save.
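Once data access logging is enabled and some reads or writes have happened, you could confirm that entries are arriving with a query like the one below. This is a sketch: the one-hour freshness window and the output columns are arbitrary choices, and the `run` helper only prints the command unless `DRY_RUN=0` is set.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

PROJECT_ID="${PROJECT_ID:-your-project-id}"   # placeholder: set to your project
BUCKET_NAME="secure-genai-artifacts-${PROJECT_ID}"

# Match Data Access audit log entries for this bucket only.
FILTER="logName=\"projects/${PROJECT_ID}/logs/cloudaudit.googleapis.com%2Fdata_access\""
FILTER="${FILTER} AND resource.type=\"gcs_bucket\""
FILTER="${FILTER} AND resource.labels.bucket_name=\"${BUCKET_NAME}\""

run gcloud logging read "$FILTER" \
  --project="$PROJECT_ID" \
  --limit=5 \
  --freshness=1h \
  --format="table(timestamp,protoPayload.methodName,protoPayload.authenticationInfo.principalEmail)"
```

An empty result does not necessarily mean logging is off. It may simply mean nothing has accessed the bucket within the chosen window.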

7. From lab to reality

You've just completed a series of steps in a temporary lab environment, but the principles and configurations you've applied are the blueprint for securing real-world AI projects on Google Cloud. Here's how you can translate what you've learned into your own work, moving from a simple lab to a production-ready setup.

Think of the resources you just built—the private VPC, the hardened Workbench instance, and the secure bucket—as a secure starter template for any new AI project. Your goal is to make this secure foundation the default, easy path for yourself and your team.

The secure network: Your private workspace

How you'd use this in your setup

Every time you start a new AI project (e.g., "customer-churn-prediction," "image-classification-model"), you would replicate this network setup. You would create a dedicated VPC (churn-pred-vpc) or use a shared, pre-approved network. This becomes your project's isolated "sandbox." Your development environment, like the Vertex AI Workbench you configured, lives inside this protected space. You'd connect to it via the secure proxy (Open JupyterLab), never exposing it to the public internet.

Connecting to production

In a production environment, you would take this concept even further by considering:

- Infrastructure as code (IaC): Instead of using the Cloud Console, you would define this network using tools like Terraform or Cloud Deployment Manager. This allows you to deploy a secure network foundation for a new project in minutes, ensuring it's repeatable and auditable.

- Shared VPC: In larger organizations, a central networking team often manages a Shared VPC. As a developer, you would be granted permission to launch your instances and services into specific subnets within that centrally managed secure network. The principle is the same—you're still operating in a private space, but it's part of a larger, shared infrastructure.

- VPC Service Controls: For maximum security, you would wrap this entire environment in a VPC Service Controls perimeter. This is a powerful feature that prevents data exfiltration by ensuring that services like Cloud Storage can only be accessed by authorized resources within your private network perimeter.

The hardened compute: Your secure development & training hub

How you'd use this in your setup

The secure-genai-instance is your day-to-day AI development machine. You'd use the JupyterLab interface to:

- Write and test your model code in notebooks.

- Install Python libraries (pip install ...).

- Experiment with small-to-medium datasets.

The security you configured (no public IP, least-privilege service account, no root access, Secure Boot) runs transparently in the background. You can focus on your AI work, knowing the instance is hardened against common attacks.

Connecting to production

You don't typically run large-scale training on a single Workbench instance in production. Instead, you can productionize your work in the following ways:

- From notebook to pipeline: You would take the code from your notebook and formalize it into a script. This script would then be run as a Vertex AI Custom Training job or as a step in a Vertex AI Pipeline.

- Containerization: You'd package your training code and its dependencies into a Docker container and store it in Artifact Registry. This ensures your code runs in a consistent, predictable environment every time.

- The service account is key: The vertex-workbench-sa service account you created is crucial. In production, your automated Vertex AI Training jobs would run using this same (or a similar) least-privilege service account, ensuring the automated job only has the permissions it absolutely needs.
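To make the last point concrete, a containerized training job could be submitted under the least-privilege service account roughly as follows. Everything about the job itself is hypothetical: the display name, the machine type, and the Artifact Registry image path are placeholders, and running a job as a custom service account may require additional IAM permissions not covered in this lab. The `run` helper only prints the command unless `DRY_RUN=0` is set.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

PROJECT_ID="${PROJECT_ID:-your-project-id}"   # placeholder: set to your project
SA_EMAIL="vertex-workbench-sa@${PROJECT_ID}.iam.gserviceaccount.com"
# Hypothetical training image stored in Artifact Registry.
IMAGE_URI="us-central1-docker.pkg.dev/${PROJECT_ID}/example-repo/trainer:latest"

# Submit a single-replica custom training job that runs as the least-privilege account.
run gcloud ai custom-jobs create \
  --project="$PROJECT_ID" \
  --region=us-central1 \
  --display-name=example-training-job \
  --service-account="$SA_EMAIL" \
  --worker-pool-spec="machine-type=n1-standard-4,replica-count=1,container-image-uri=${IMAGE_URI}"
```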

The secure storage: Your central artifact repository

How you'd use this in your setup

The secure-genai-artifacts bucket is the single source of truth for your project's data. It's not just for the initial dataset. You would use it to store:

- Raw and preprocessed training data.

- Model checkpoints during long training runs.

- The final, trained model artifacts (.pkl, .pb, or .h5 files).

- Evaluation results and logs.

The security settings you applied (Public Access Prevention, Uniform Bucket-Level Access, Versioning, and Audit Logging) mean you can confidently use this bucket as a central store, protected from leaks and accidental deletions.

Connecting to production

To manage and govern your data in a production environment, you should also consider:

- Lifecycle policies: To manage costs, you'd set up lifecycle policies to automatically move older model versions or datasets to cheaper storage classes (like Nearline or Coldline) or delete them after a certain period.

- Cross-project permissions: In a production pipeline, a data engineering team might populate this bucket from a different Google Cloud project. The Uniform Bucket-Level Access you enabled makes managing these cross-project IAM permissions simple and secure, without complex (and thus easy to misconfigure) ACLs.
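As an illustration of the lifecycle policies mentioned above, the sketch below writes a lifecycle configuration and applies it to the bucket. The 30-day move to Nearline and the 365-day deletion are hypothetical thresholds, not recommendations from this lab. Applying a lifecycle file replaces the bucket's existing lifecycle configuration, so any rules already present would need to be merged into the file first. The `run` helper only prints the final command unless `DRY_RUN=0` is set.

```shell
# Dry-run helper (illustrative): prints each command unless DRY_RUN=0 is set.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

PROJECT_ID="${PROJECT_ID:-your-project-id}"   # placeholder: set to your project
BUCKET="gs://secure-genai-artifacts-${PROJECT_ID}"
LIFECYCLE_FILE="${TMPDIR:-/tmp}/genai-lifecycle.json"

# Hypothetical thresholds: Standard objects move to Nearline after 30 days,
# and objects are deleted after 365 days.
cat > "$LIFECYCLE_FILE" <<'EOF'
{
  "rule": [
    {
      "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
      "condition": {"age": 30, "matchesStorageClass": ["STANDARD"]}
    },
    {
      "action": {"type": "Delete"},
      "condition": {"age": 365}
    }
  ]
}
EOF

run gcloud storage buckets update "$BUCKET" --lifecycle-file="$LIFECYCLE_FILE"
```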

The big picture: Making security the default

By adopting this model, you shift your security posture from being an afterthought to being the foundation. You are no longer just a developer; you are a security champion for your team. When a new team member joins, you don't give them an insecure, public-facing machine. You give them access to a secure, pre-configured environment where the safe way of working is also the easiest way. This approach directly reduces business risk by preventing common causes of data breaches and system compromises, allowing your team to innovate and build powerful AI applications with confidence.

8. Congratulations!

Congratulations! You have successfully built a multi-layered, secure infrastructure for your AI development environment. You created a secure network perimeter with a "default deny" firewall posture, deployed a hardened compute instance, secured a data bucket, and enabled data access logs so that activity on your data leaves an audit trail.

Recap

In this lab, you accomplished the following:

- Provisioned a secure VPC with private networking to mitigate unsolicited traffic.

- Hardened a Vertex AI Workbench instance against bootkits and privilege escalation.

- Secured a Cloud Storage bucket to mitigate unmonitored data transfer and accidental public exposure.

- Implemented least-privilege service accounts to limit potential "blast radius."

- Enabled object versioning and data access logs for data protection and an immutable audit trail.