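1. Overview

This lab walks through deploying a LIT application server on Google Cloud Platform (GCP) and using it to interact with Vertex AI Gemini foundation models and self-hosted third-party large language models (LLMs). It also includes guidance on using the LIT UI for prompt debugging and model interpretation.

In this lab, you will learn how to:

- Configure a LIT server on GCP.

- Connect the LIT server to Vertex AI Gemini models or other self-hosted LLMs.

- Use the LIT UI to analyze, debug, and interpret prompts for better model performance and insights.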

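What is LIT?

LIT is a visual, interactive model-understanding tool that supports text, image, and tabular data. It can run as a standalone server or inside notebook environments such as Google Colab, Jupyter, and Google Cloud Vertex AI. LIT is available from PyPI and GitHub.

Originally built to understand classification and regression models, LIT has recently added tools for debugging LLM prompts, so you can explore how user, model, and system content influence generation behavior.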

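What are Vertex AI and Model Garden?

Vertex AI is a machine learning (ML) platform that lets you train and deploy ML models and AI applications, and customize LLMs for use in your AI-powered applications. Vertex AI combines data engineering, data science, and ML engineering workflows, so your teams can collaborate with a common toolset and scale applications using the benefits of Google Cloud.

Vertex Model Garden is an ML model library that helps you discover, test, customize, and deploy Google proprietary models and assets as well as select third-party models and assets.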

What you will do

You will use Google's Cloud Shell and Cloud Run to deploy a Docker container from LIT's prebuilt image.

Cloud Run is a managed compute platform that lets you run containers directly on Google's scalable infrastructure, including on GPUs.

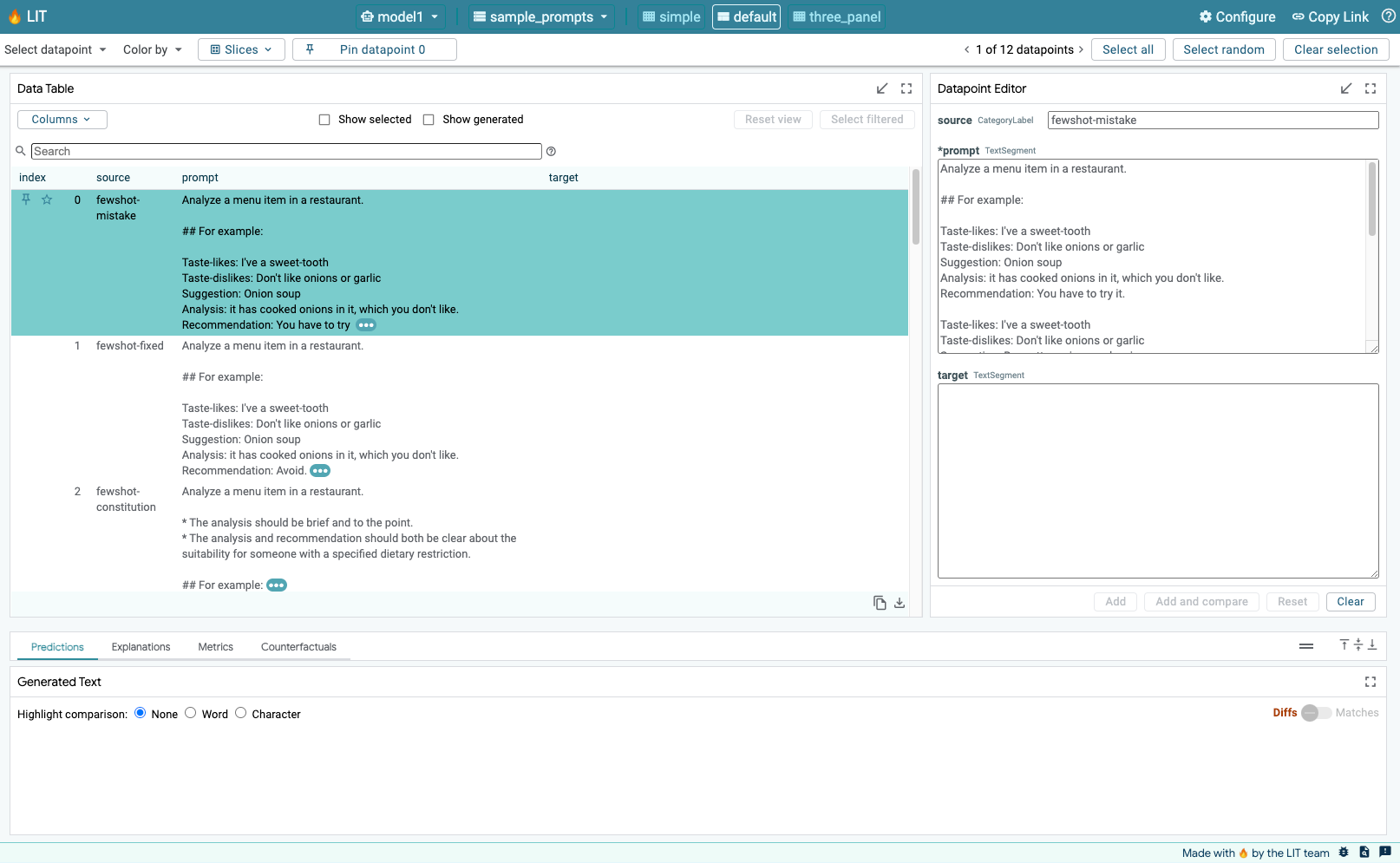

Dataset

By default, the demo uses the LIT prompt debugging sample dataset, but you can also load your own dataset through the UI.

Before you begin

This reference guide requires a Google Cloud project. You can create a new one or select a project you have already created.

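2. Launch the Google Cloud Console and Cloud Shell

In this step, you launch the Google Cloud Console and use Google Cloud Shell.

2-a: Launch the Google Cloud Console

Open a browser and go to the Google Cloud Console.

The Google Cloud Console is a powerful, secure web admin interface that lets you manage your Google Cloud resources quickly. It is a DevOps tool you can use on the go.

2-b: Launch Google Cloud Shell

Cloud Shell is an online development and operations environment that you can access from anywhere with your browser. You can manage your resources from its online terminal, which is preloaded with utilities such as the gcloud command-line tool and kubectl. You can also develop, build, debug, and deploy cloud-based apps using the online Cloud Shell Editor. Cloud Shell provides a developer-ready online environment with a preinstalled set of familiar tools and 5 GB of persistent storage space. You will use the command prompt in the next steps.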

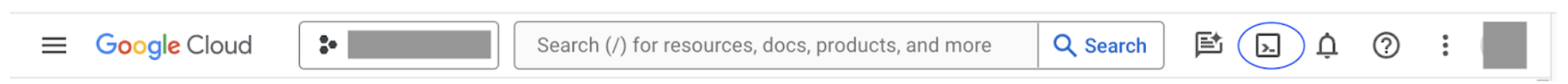

Launch Google Cloud Shell using the icon at the top right of the menu bar, circled in blue in the following screenshot.

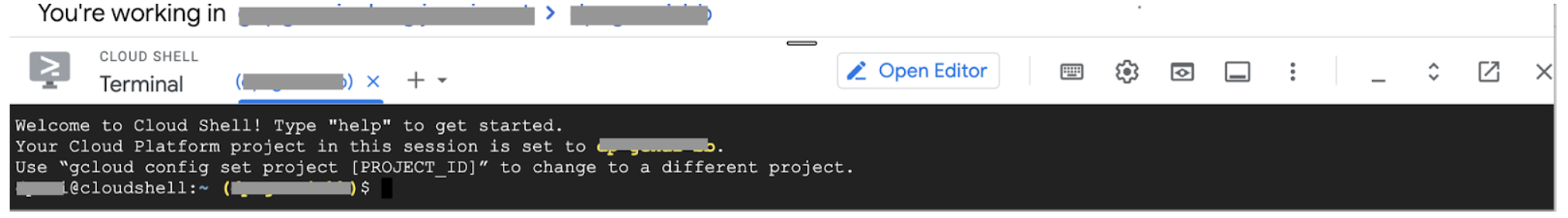

A terminal with a Bash shell appears at the bottom of the page.

2-c: Set the Google Cloud project

Use the gcloud command to set the project ID and project region.

# Set your GCP Project ID.

gcloud config set project your-project-id

# Set your GCP Project Region.

gcloud config set run/region your-project-region

3. Deploy the LIT App server Docker image with Cloud Run

3-a: Deploy the LIT App to Cloud Run

First, set the latest version of LIT-App as the version to deploy.

# Set latest version as your LIT_SERVICE_TAG.

export LIT_SERVICE_TAG=latest

# List all the public LIT GCP App server docker images.

gcloud container images list-tags us-east4-docker.pkg.dev/lit-demos/lit-app/gcp-lit-app

After setting the version tag, give the service a name.

# Set your lit service name. While 'lit-app-service' is provided as a placeholder, you can customize the service name based on your preferences.

export LIT_SERVICE_NAME=lit-app-service

Then run the following command to deploy the container to Cloud Run.

# Use below cmd to deploy the LIT App to Cloud Run.

gcloud run deploy $LIT_SERVICE_NAME \
--image us-east4-docker.pkg.dev/lit-demos/lit-app/gcp-lit-app:$LIT_SERVICE_TAG \
--port 5432 \
--cpu 8 \
--memory 32Gi \
--no-cpu-throttling \
--no-allow-unauthenticated

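LIT also lets you add datasets when the server starts. To do so, set the DATASETS variable to the data you want to load, using the name:path format (for example, data_foo:/bar/data_2024.jsonl). The dataset must be in .jsonl format, where each record contains a prompt field and optional target and source fields. To load multiple datasets, separate them with commas. If DATASETS is not set, the LIT prompt debugging sample dataset is loaded.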

# Set the dataset.

export DATASETS=[DATASETS]
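As a minimal sketch of the format described above, the following writes a two-record .jsonl file and builds a DATASETS value in name:path form. The file location, the dataset name my_prompts, and the record contents are hypothetical. The sketch also does not make the file reachable from the Cloud Run container, which you would still need to arrange.

```shell
# Hypothetical file and dataset names, for illustration only.
DATASET_FILE="${TMPDIR:-/tmp}/my_prompts.jsonl"

# One JSON object per line: "prompt" is required, "target" and "source" are optional.
cat > "$DATASET_FILE" <<'EOF'
{"prompt": "Summarize: LIT is a model understanding tool.", "target": "LIT helps you understand models.", "source": "handwritten"}
{"prompt": "Translate to French: good morning"}
EOF

# name:path format; separate several datasets with commas.
export DATASETS="my_prompts:$DATASET_FILE"
echo "$DATASETS"
```

The second record omits target and source to show that only prompt is required.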

Set MAX_EXAMPLES to cap the number of examples loaded from each evaluation set.

# Set the max examples.

export MAX_EXAMPLES=[MAX_EXAMPLES]

Then add the following flags to the deploy command:

--set-env-vars "DATASETS=$DATASETS" \
--set-env-vars "MAX_EXAMPLES=$MAX_EXAMPLES" \

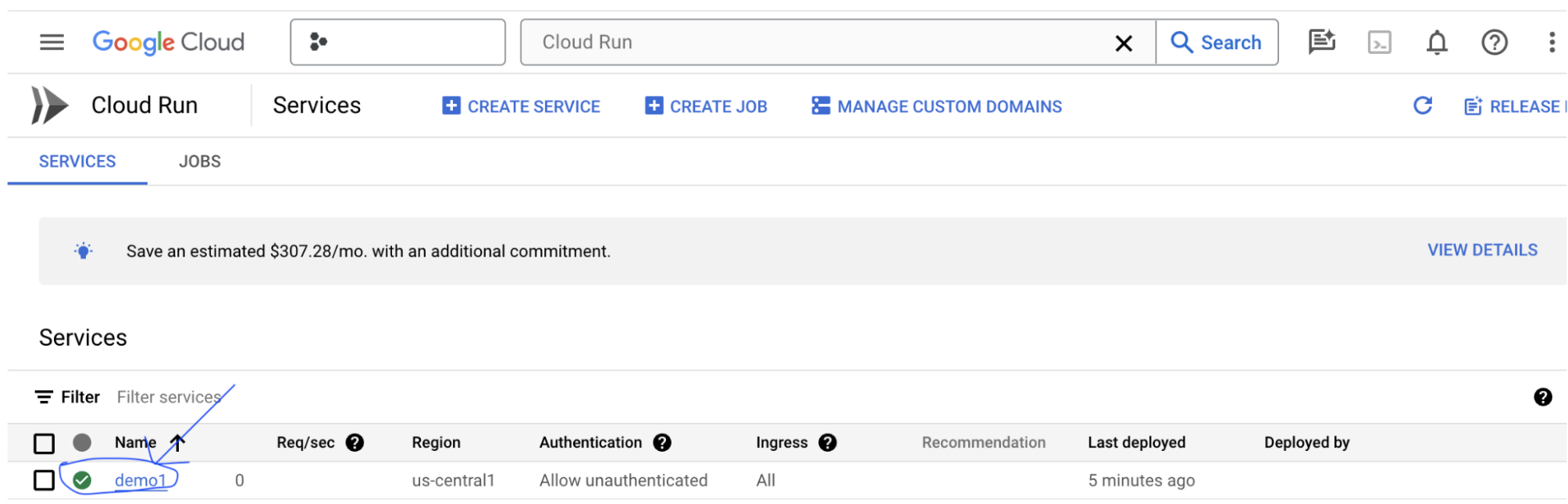

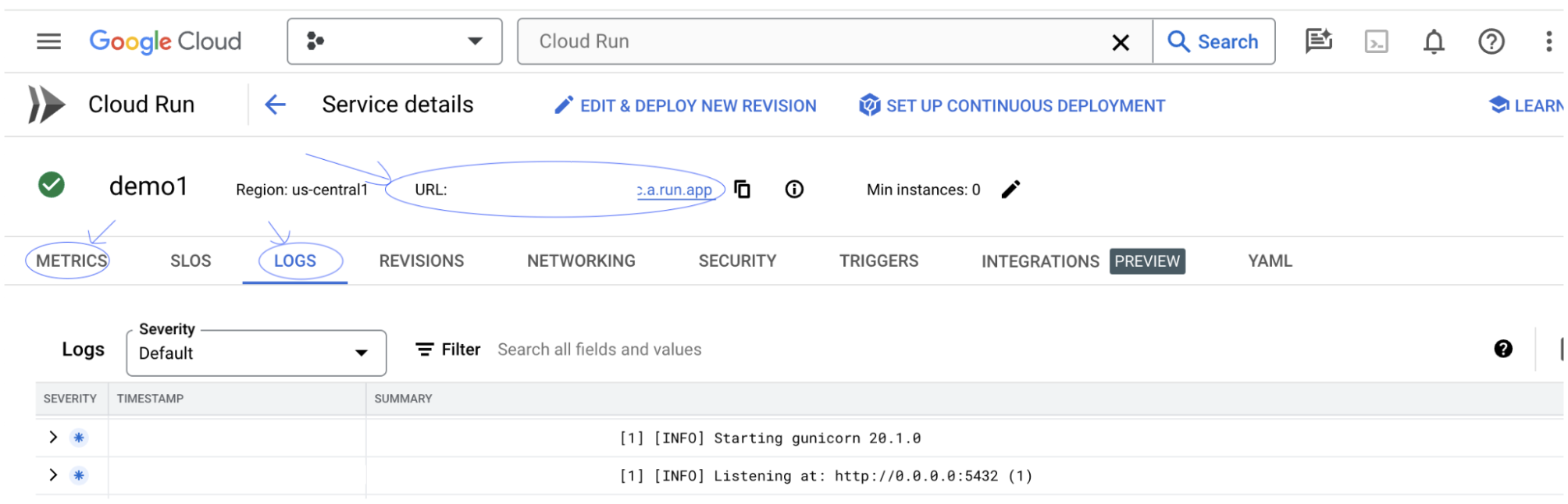

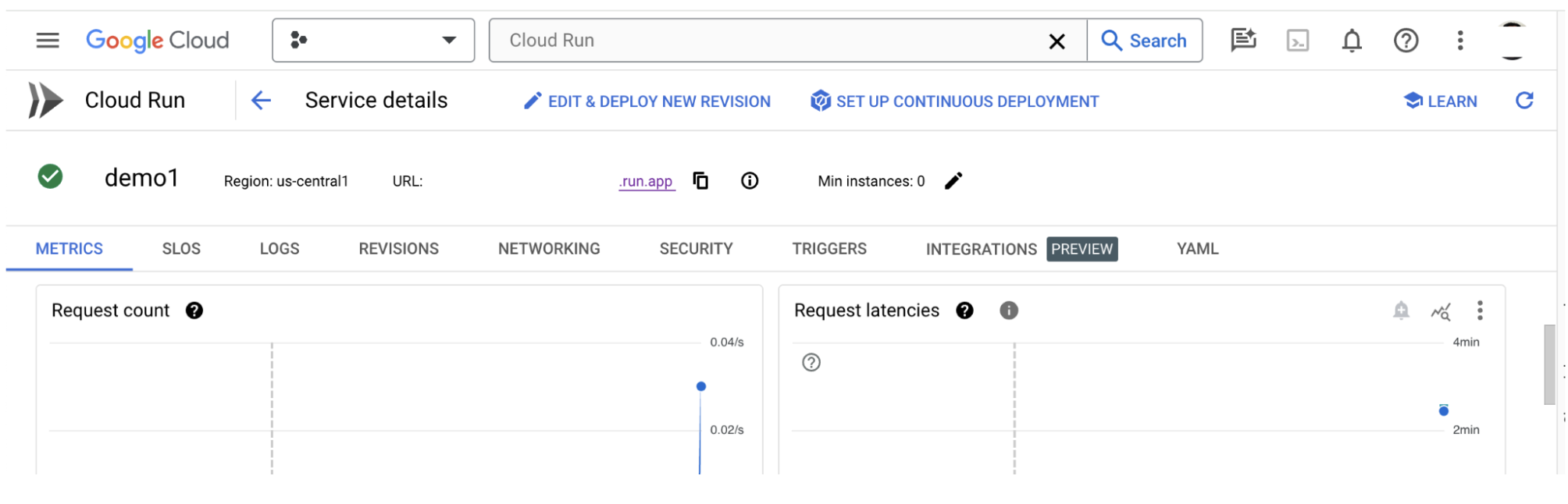

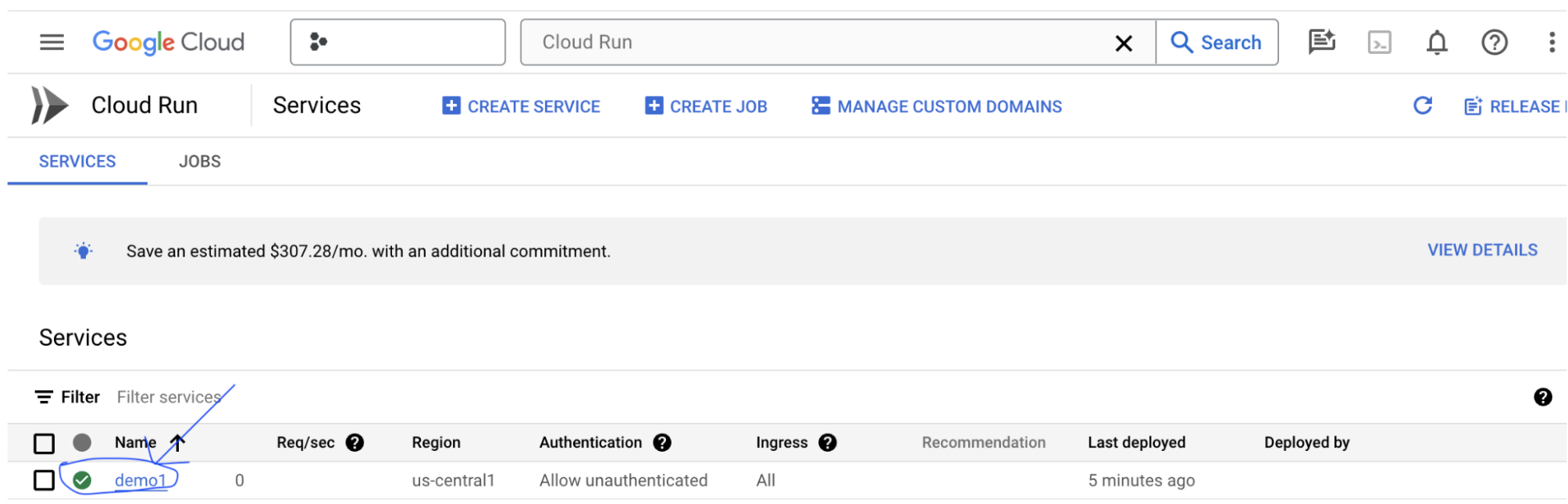

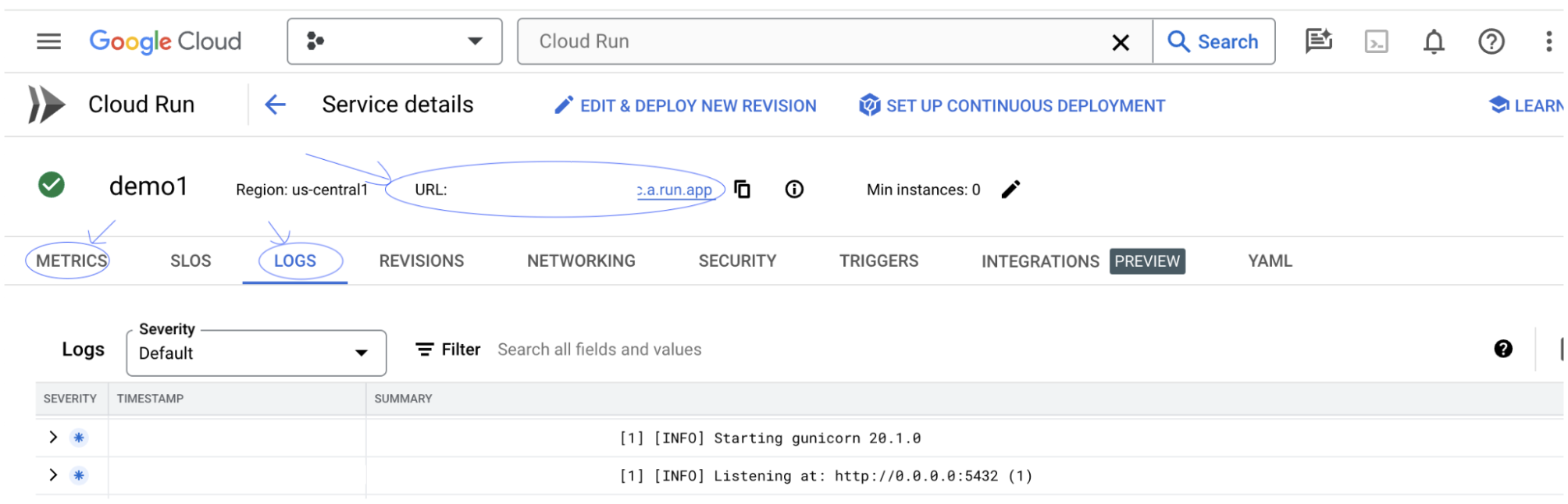

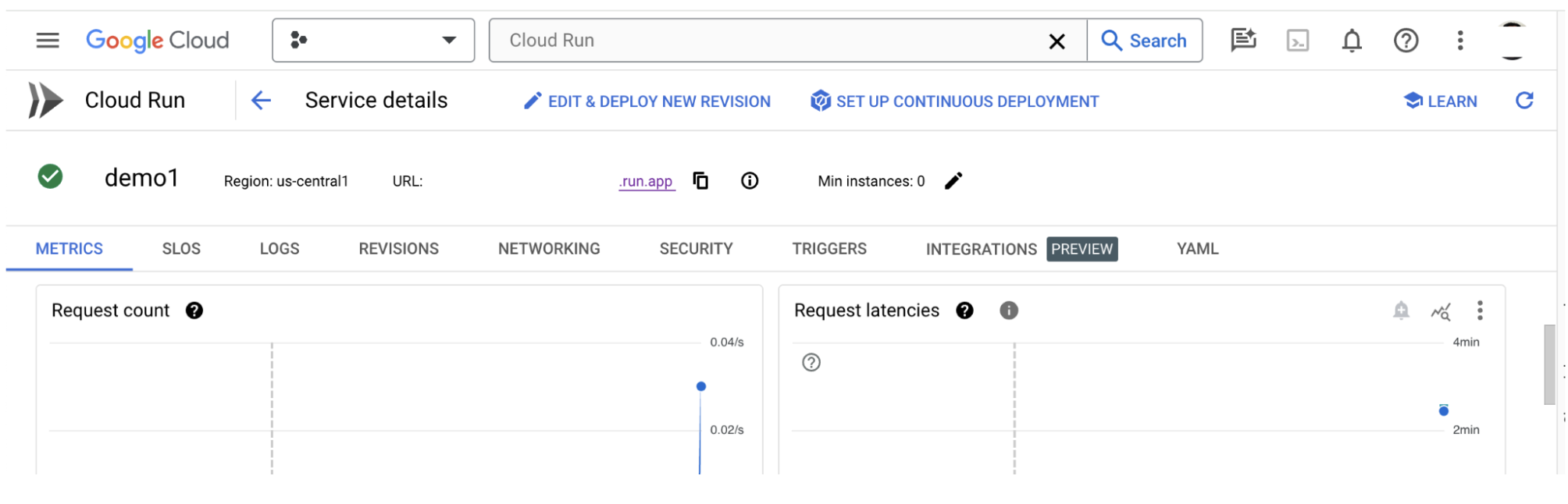

3-b: View the LIT App service

After you create the LIT App server, the service appears in the [Cloud Run] section of the Cloud Console.

Select the LIT App service you just created. Make sure the service name matches LIT_SERVICE_NAME.

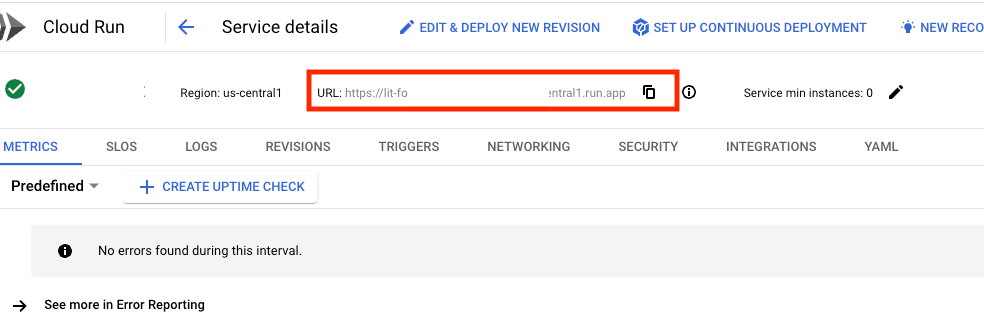

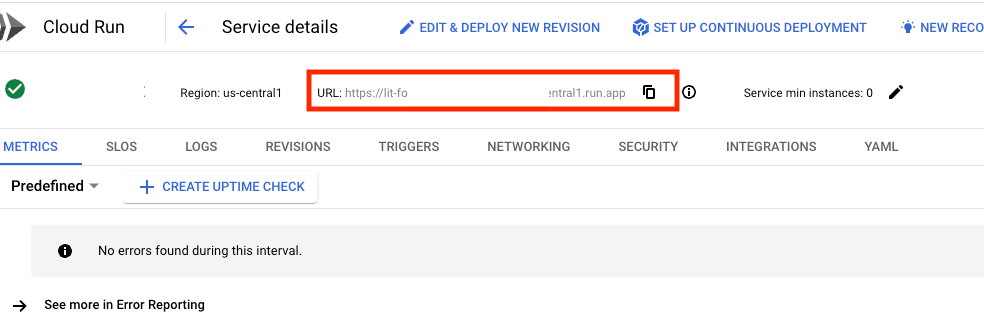

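To find the service URL, click the service you just deployed.

You should then be able to view the LIT UI. If you run into an error, see the Troubleshooting section.

In the [LOGS] section, you can monitor activity, view error messages, and track the progress of the deployment.

In the [METRICS] section, you can view the service metrics.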

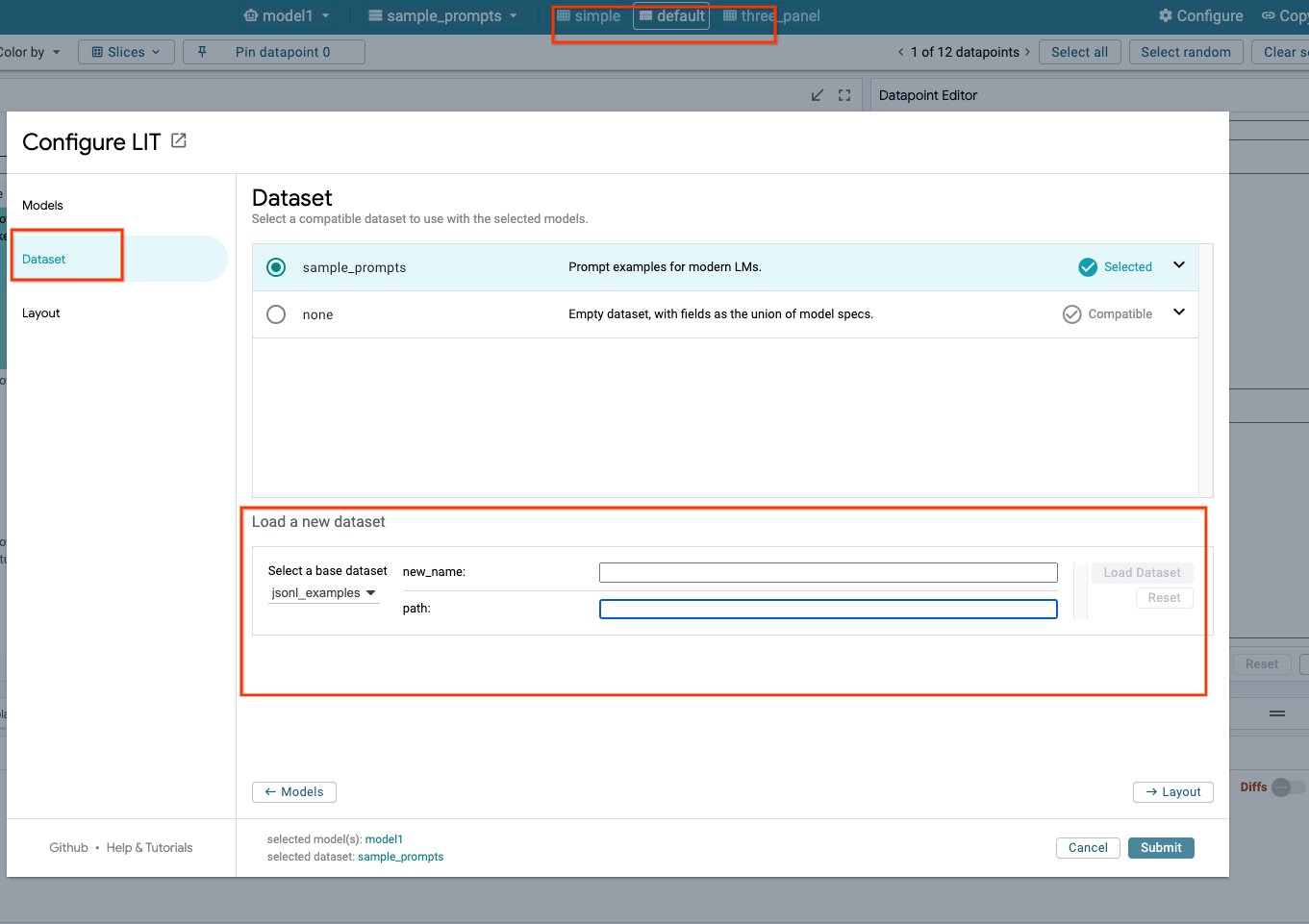

3-c: Load datasets

In the LIT UI, click the Configure option and select Dataset. Load the dataset by giving it a name and providing the dataset URL. The dataset must be in .jsonl format, where each record contains a prompt field and optional target and source fields.

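4. Prepare Gemini models in Vertex AI Model Garden

Google's Gemini foundation models are available from the Vertex AI API. LIT provides the VertexAIModelGarden model wrapper to use these models for generation. Specify the version you want (for example, "gemini-1.5-pro-001") through the model name parameter. A key advantage of these models is that they require no additional deployment effort: by default, you have immediate access to models such as Gemini 1.0 Pro and Gemini 1.5 Pro on GCP, with no extra configuration steps.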

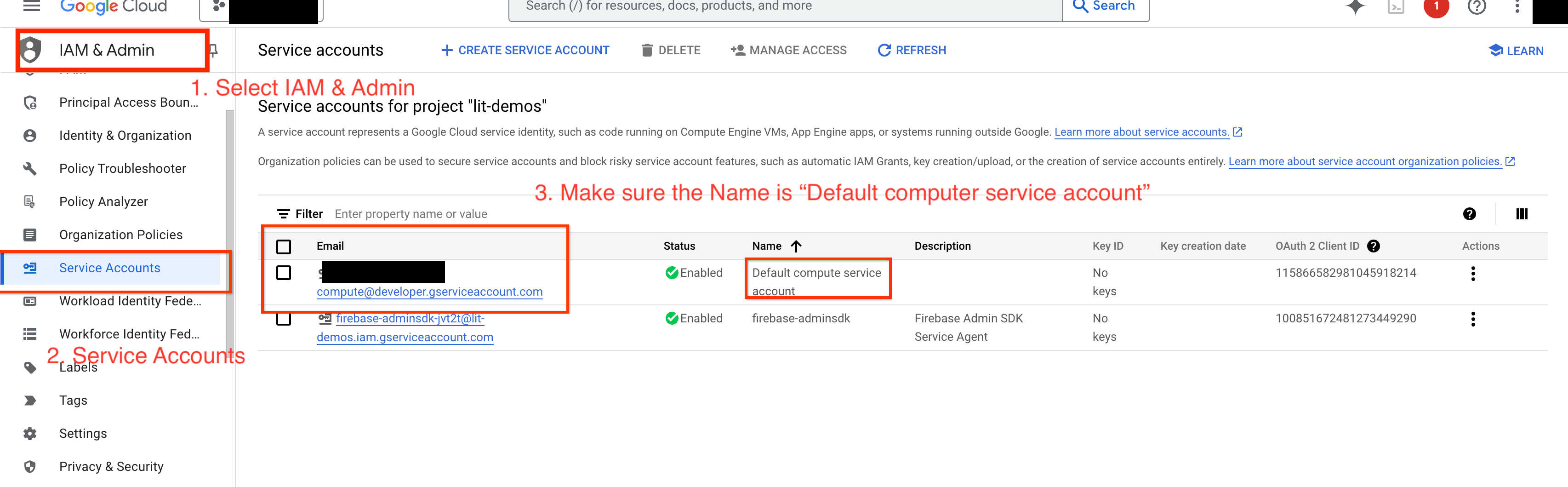

4-a: Grant Vertex AI permissions

To query Gemini on GCP, you need to grant Vertex AI permissions to the service account. Make sure the service account name is Default compute service account, then copy that account's service account email.

Add the service account email as a principal with the Vertex AI User role in your IAM allowlist.
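The same grant can be sketched from Cloud Shell instead of the console. This assumes the default compute service account follows the usual PROJECT_NUMBER-compute@developer.gserviceaccount.com pattern and that Vertex AI User corresponds to roles/aiplatform.user. The function names and the project number below are made up for illustration, so confirm the email against the one you copied from the console before running the grant.

```shell
# Build the default compute service account email from a project number.
# Assumption: the account follows the usual PROJECT_NUMBER-compute@... pattern.
default_compute_sa() {
  printf '%s-compute@developer.gserviceaccount.com\n' "$1"
}

# Grant the Vertex AI User role to that account.
# Arguments: project ID, project number. Defined here but not called.
grant_vertex_ai_user() {
  gcloud projects add-iam-policy-binding "$1" \
    --member "serviceAccount:$(default_compute_sa "$2")" \
    --role "roles/aiplatform.user"
}

# Print the email for a made-up project number.
default_compute_sa 123456789012
```

To apply the binding, call grant_vertex_ai_user with your own project ID and project number.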

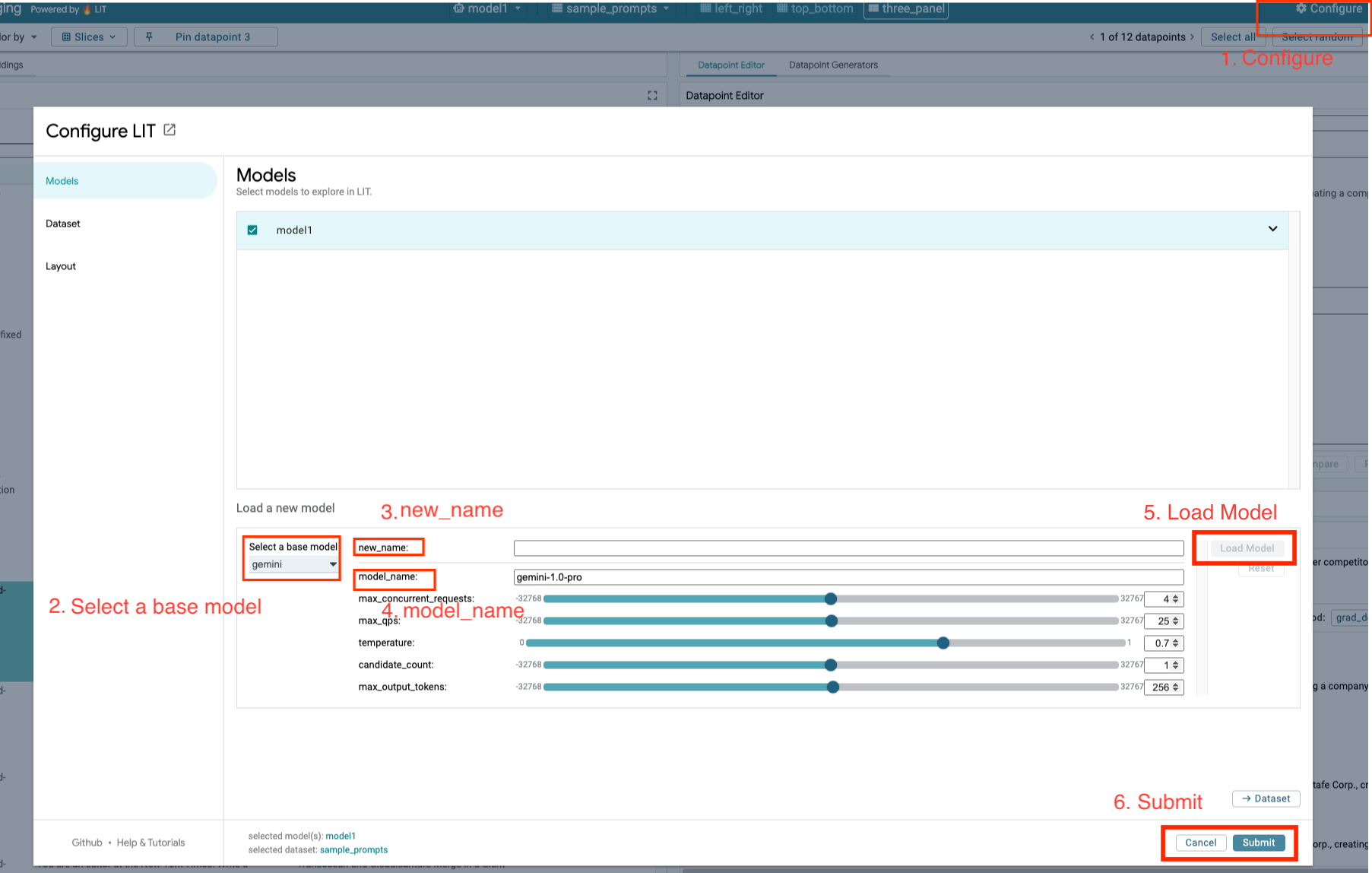

4-b: Load Gemini models

Follow the steps below to load Gemini models and adjust their parameters.

- In the LIT UI, click the Configure option.

- Under [Select a base model], select the [gemini] option.

- Name the model in new_name.

- Enter your selected Gemini model as model_name.

- Click [Load Model].

- Click [Submit].

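5. Deploy a self-hosted LLM model server on GCP

Self-hosting LLMs with LIT's model server Docker image lets you use LIT's salience and tokenize functions to gain deeper insight into model behavior. The model server image works with KerasNLP or Hugging Face Transformers models, using either library-provided weights or self-hosted weights, for example on Google Cloud Storage.

5-a: Configure models

Each container loads one model, configured using environment variables.

Specify the model to load by setting MODEL_CONFIG. The format is name:path, for example model_foo:model_foo_path. The path can be a URL, a local file path, or the name of a preset for the configured deep learning framework (see the table below for details). The server has been tested with Gemma, GPT2, Llama, and Mistral on all supported DL_FRAMEWORK values. Other models should work, but may need adjustments.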

# Set models you want to load. While 'gemma2b' is given as a placeholder, you can load your preferred model by following the instructions above.

export MODEL_CONFIG=gemma2b:gemma_2b_en
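Because the path part may itself contain colons (a URL, for instance), a quick local check of the name:path value can catch typos before you deploy. The sketch below splits at the first colon using shell parameter expansion. Whether the model server parses the value in exactly this way is an assumption, and MODEL_NAME and MODEL_PATH are hypothetical helper variables, not settings the server reads.

```shell
MODEL_CONFIG="gemma2b:gemma_2b_en"

# Split at the first colon so that paths containing colons stay intact.
MODEL_NAME="${MODEL_CONFIG%%:*}"   # text before the first colon
MODEL_PATH="${MODEL_CONFIG#*:}"    # text after the first colon

# Rough shape check: something, a colon, then something.
case "$MODEL_CONFIG" in
  ?*:?*) echo "name=$MODEL_NAME path=$MODEL_PATH" ;;
  *)     echo "MODEL_CONFIG must look like name:path" >&2 ;;
esac
```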

The LIT model server also lets you configure various environment variables with the command below. See the table for details. Each variable must be set individually.

# Customize the variable value as needed.

export [VARIABLE]=[VALUE]

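Variable | Value | Description |
DL_FRAMEWORK | | The modeling library used to load the model weights onto the specified runtime. A default is used when unset. |
DL_RUNTIME | | The deep learning backend framework that the model runs on. All models loaded by this server use the same backend, so incompatibilities result in errors. A default is used when unset. |
PRECISION | | The floating-point precision for the LLM models. A default is used when unset. |
BATCH_SIZE | Positive integer | The number of examples to process per batch. A default is used when unset. |
SEQUENCE_LENGTH | Positive integer | The maximum sequence length of the input prompt plus the generated text. A default is used when unset. |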

5-b: Deploy the Model Server to Cloud Run

First, set the latest version of the Model Server as the version to deploy.

# Set latest as MODEL_VERSION_TAG.

export MODEL_VERSION_TAG=latest

# List all the public LIT GCP model server docker images.

gcloud container images list-tags us-east4-docker.pkg.dev/lit-demos/lit-app/gcp-model-server

After setting the version tag, give the model server a name.

# Set your Service name.

export MODEL_SERVICE_NAME='gemma2b-model-server'

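Then run the following command to deploy the container to Cloud Run. If you do not set the environment variables, default values apply. Because most LLMs require expensive compute resources, using a GPU is strongly recommended. If you prefer to run on CPU only (which works fine for small models such as GPT2), you can remove the related arguments --gpu 1 --gpu-type nvidia-l4 --max-instances 7.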

# Deploy the model service container.

gcloud beta run deploy $MODEL_SERVICE_NAME \
--image us-east4-docker.pkg.dev/lit-demos/lit-app/gcp-model-server:$MODEL_VERSION_TAG \
--port 5432 \
--cpu 8 \
--memory 32Gi \
--no-cpu-throttling \
--gpu 1 \
--gpu-type nvidia-l4 \
--max-instances 7 \
--set-env-vars "MODEL_CONFIG=$MODEL_CONFIG" \
--no-allow-unauthenticated

You can also customize the environment variables by adding the following flags to the deploy command. Include only the environment variables your use case needs.

--set-env-vars "DL_FRAMEWORK=$DL_FRAMEWORK" \
--set-env-vars "DL_RUNTIME=$DL_RUNTIME" \
--set-env-vars "PRECISION=$PRECISION" \
--set-env-vars "BATCH_SIZE=$BATCH_SIZE" \
--set-env-vars "SEQUENCE_LENGTH=$SEQUENCE_LENGTH" \
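One way to include only the variables you actually set is to generate the flags rather than edit the command by hand. The helper below is a hypothetical sketch, not part of LIT or gcloud: it prints one --set-env-vars flag for each named variable that is non-empty. The values 4 and 256 are placeholders, and the approach assumes values contain no whitespace.

```shell
# Print one --set-env-vars flag for each named variable that is set and non-empty.
build_env_flags() {
  flags=""
  for name in "$@"; do
    eval "value=\${$name:-}"        # indirect lookup that works in POSIX sh
    if [ -n "$value" ]; then
      flags="$flags --set-env-vars $name=$value"
    fi
  done
  printf '%s\n' "${flags# }"
}

# Placeholder values, for illustration only.
BATCH_SIZE=4
SEQUENCE_LENGTH=256
# Left unset so that the server defaults apply.
unset DL_FRAMEWORK DL_RUNTIME PRECISION

build_env_flags DL_FRAMEWORK DL_RUNTIME PRECISION BATCH_SIZE SEQUENCE_LENGTH
```

To use it, place an unquoted $(build_env_flags DL_FRAMEWORK DL_RUNTIME PRECISION BATCH_SIZE SEQUENCE_LENGTH) before --no-allow-unauthenticated in the deploy command, so the shell splits the output into separate arguments.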

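Additional environment variables may be needed to access certain models. See the instructions from Kaggle Hub (used for KerasNLP models) and Hugging Face Hub as appropriate.

5-c: Access the Model Server

After you create the model server, the started service appears in the [Cloud Run] section of your GCP project.

Select the model server you just created. Make sure the service name matches MODEL_SERVICE_NAME.

To find the service URL, click the model service you just deployed.

In the [LOGS] section, you can monitor activity, view error messages, and track the progress of the deployment.

In the [METRICS] section, you can view the service metrics.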

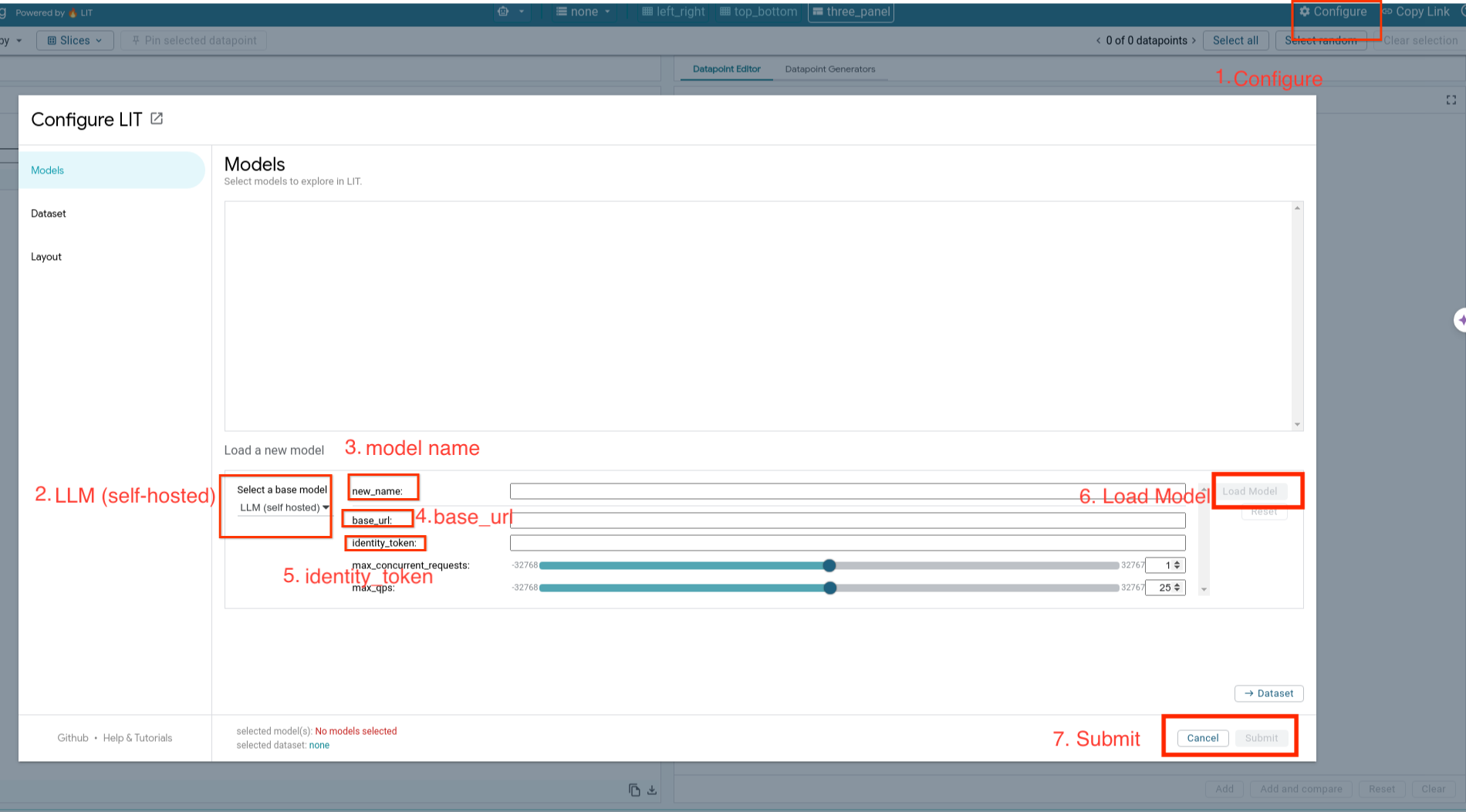

5-d: Load self-hosted models

If you proxy your LIT server from Step 3 (see the Troubleshooting section), obtain your GCP identity token by running the following command.

# Find your GCP identity token.

gcloud auth print-identity-token

Follow the steps below to load self-hosted models and adjust their parameters.

- In the LIT UI, click the Configure option.

- Under [Select a base model], select the [LLM (self hosted)] option.

- Name the model in new_name.

- Enter your model server URL as base_url.

- If you proxy the LIT App server, enter the identity token you obtained in identity_token (see Step 3 and Step 7). Otherwise, leave it empty.

- Click [Load Model].

- Click [Submit].

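6. Interact with LIT on GCP

LIT offers a rich set of features to help you debug and understand model behavior. You can do something as simple as querying the model by typing text in a box and viewing its predictions, or inspect the model in depth with LIT's suite of powerful features.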

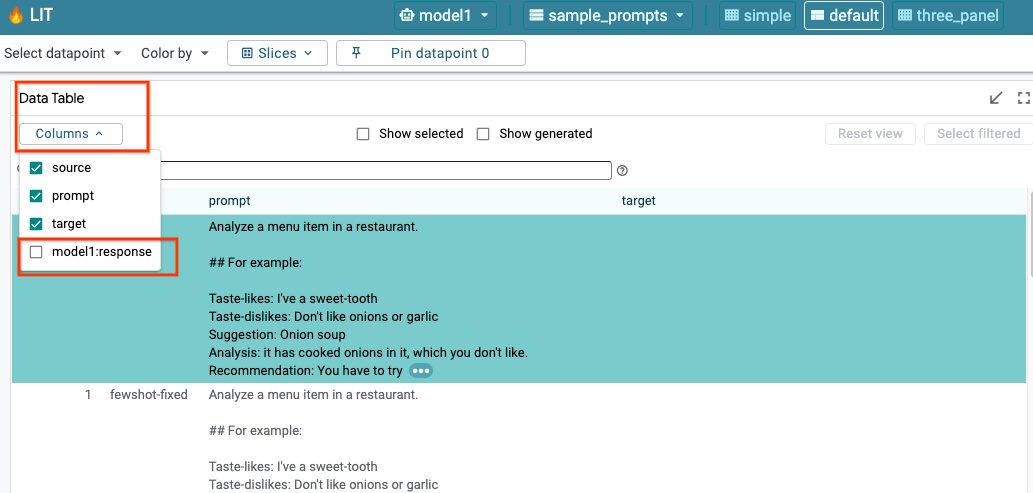

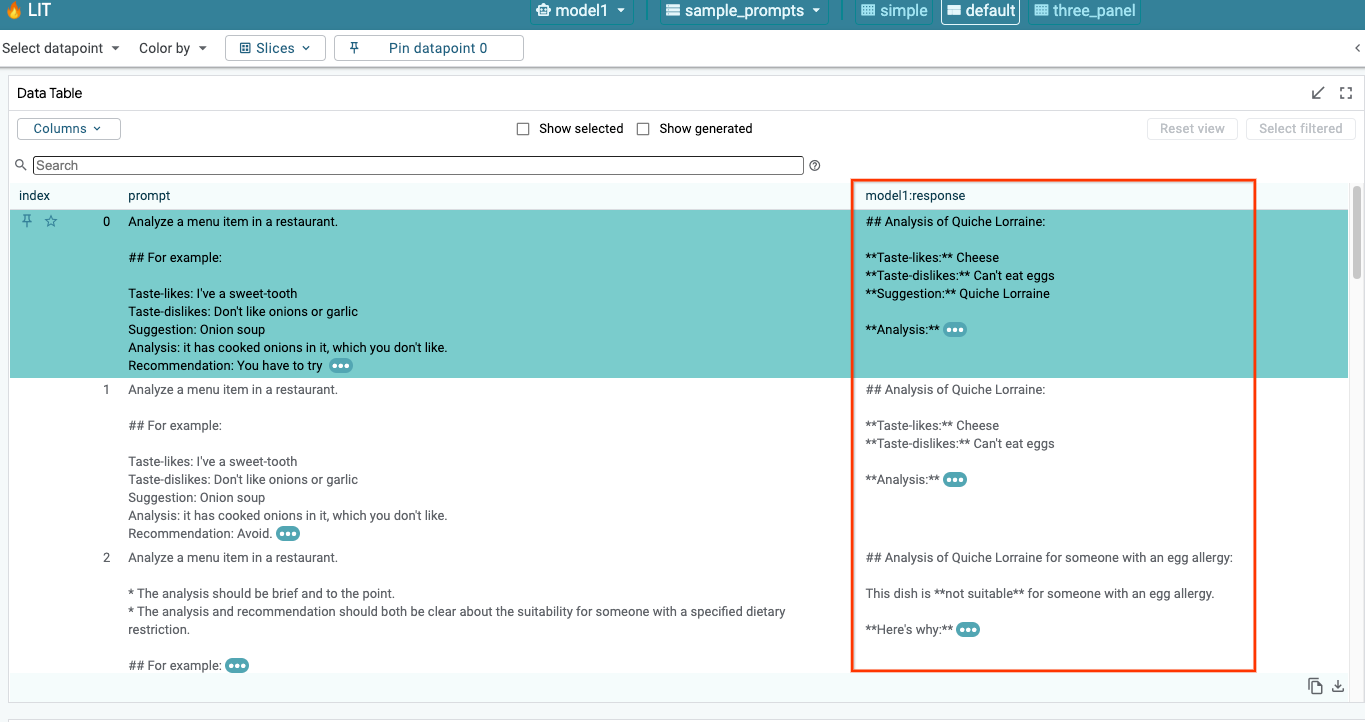

6-a: Query the model through LIT

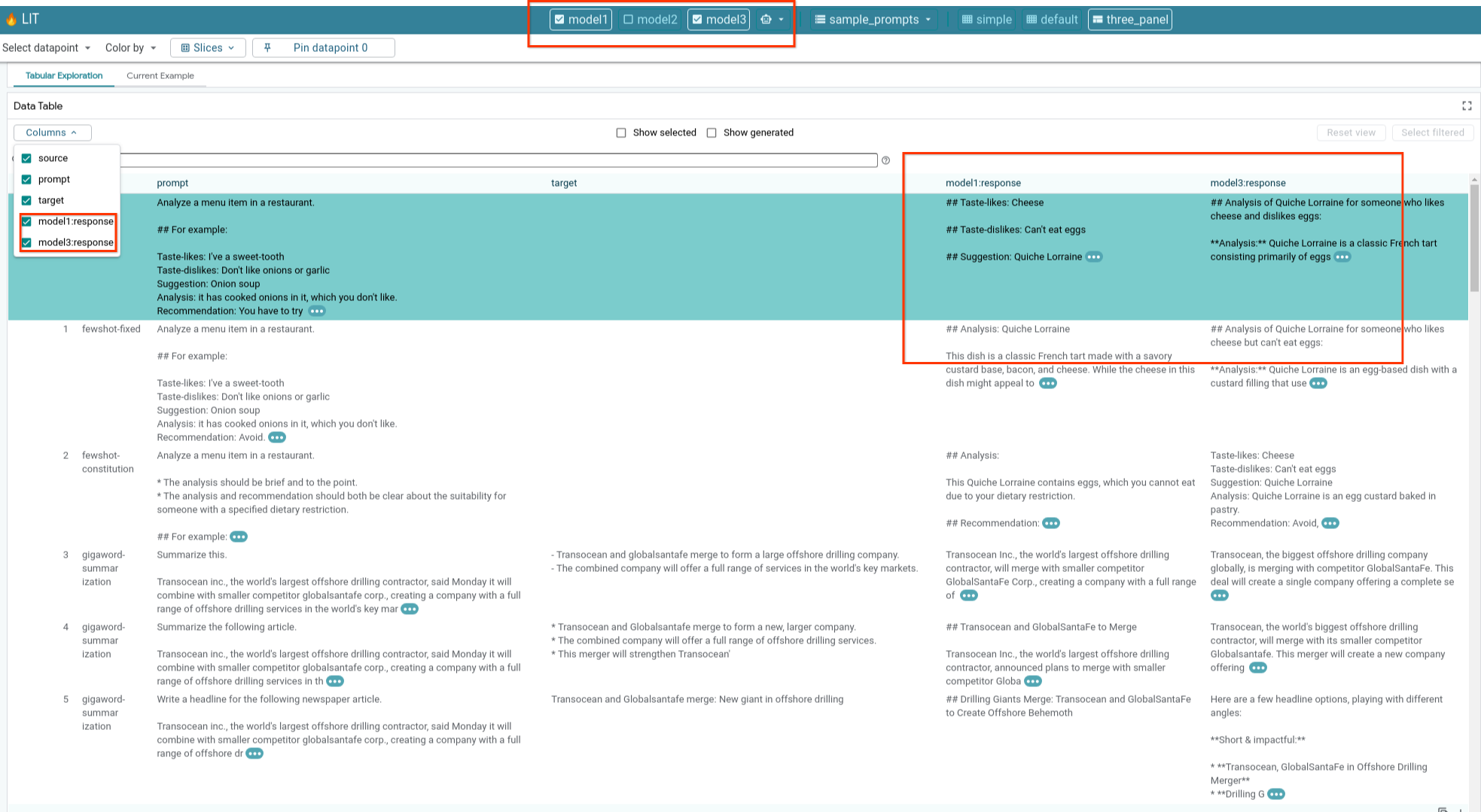

LIT automatically queries the dataset once the models and datasets are loaded. You can view each model's response by selecting the response in the columns.

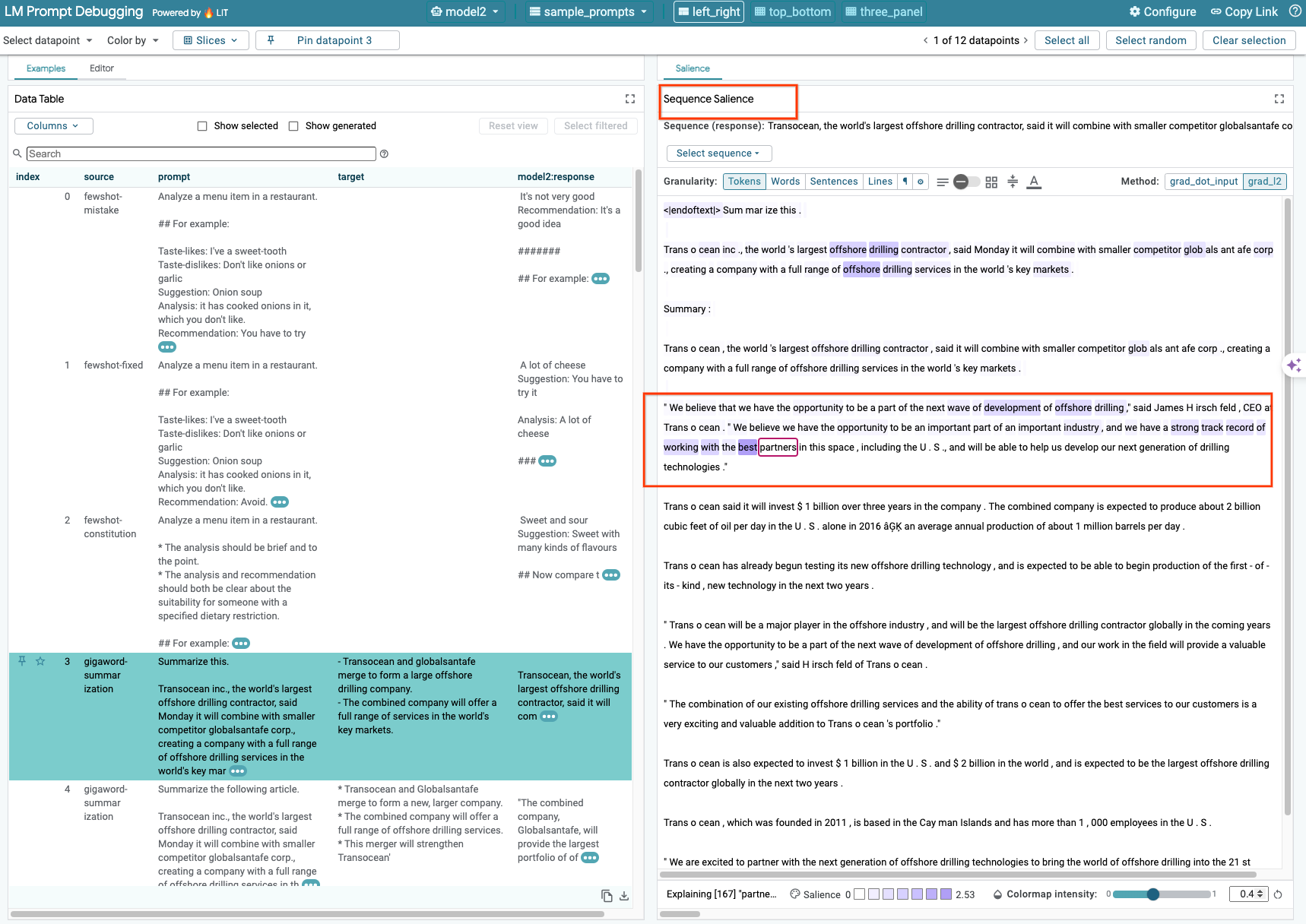

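6-b: Use the Sequence Salience technique

Currently, the Sequence Salience technique in LIT supports only self-hosted models.

Sequence Salience is a visual tool that helps you debug LLM prompts by highlighting which parts of a prompt matter most for a given output. For more on Sequence Salience, see the full tutorial on how to use this feature.

To access the salience results, click any input or output in the prompt or response. The salience results are then displayed.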

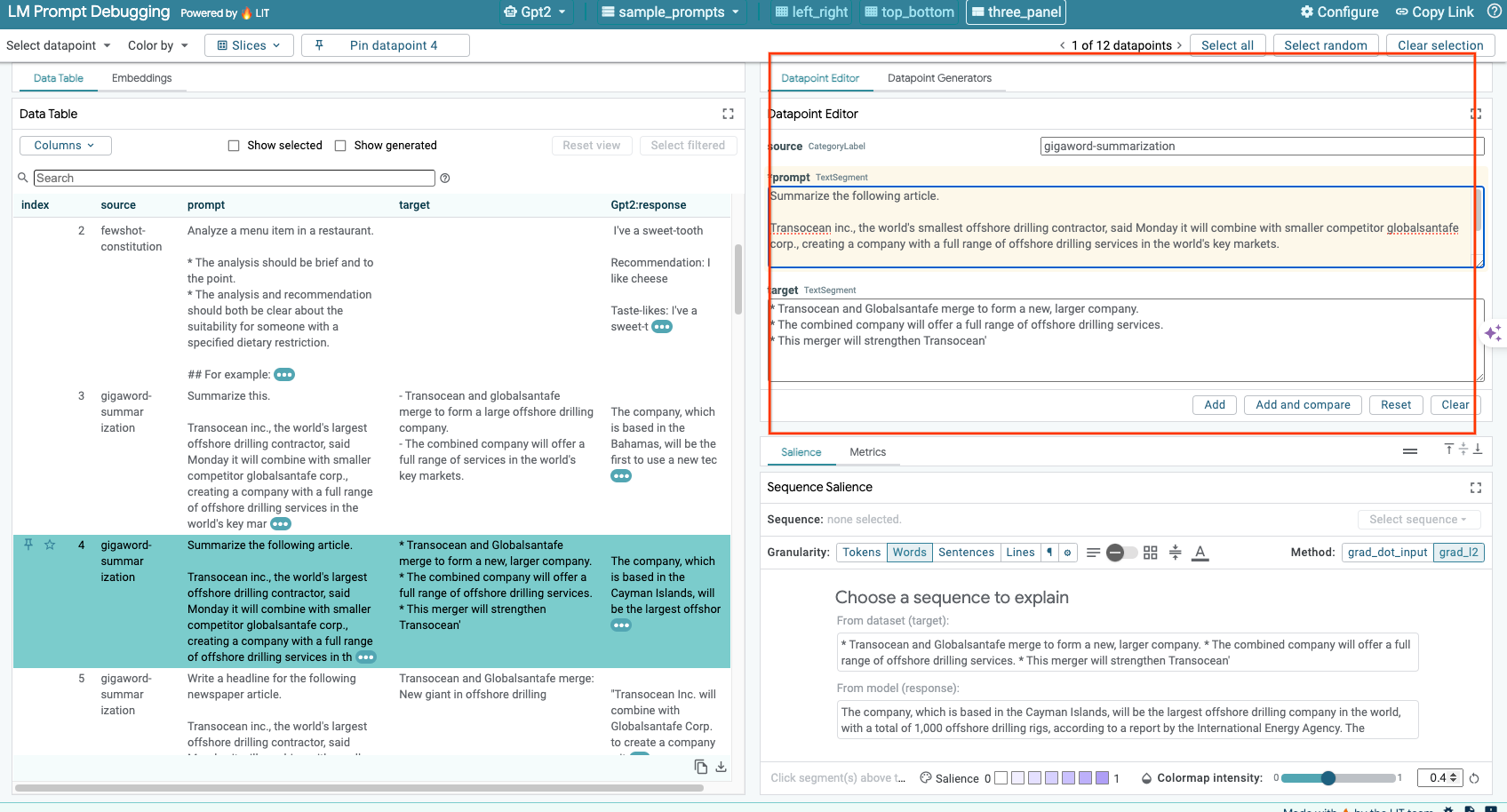

6-c: Manually edit the prompt and target

LIT lets you manually edit the prompt and target of any existing datapoint. Clicking Add adds the new input to the dataset.

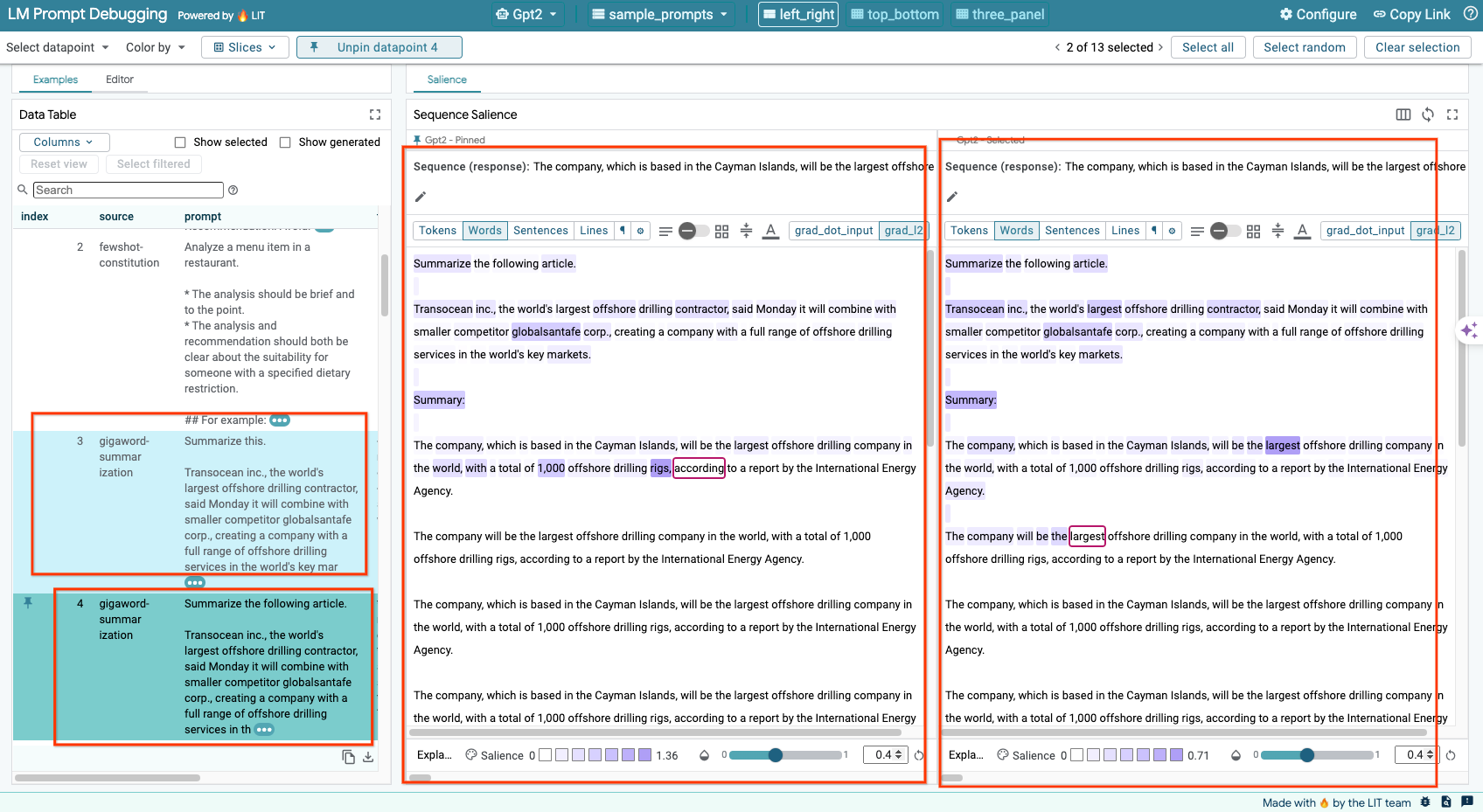

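6-d: Compare prompts side by side

LIT lets you compare prompts side by side on original and edited examples. You can manually edit an example and view the prediction results and Sequence Salience analysis for both the original and the edited versions at the same time. When you modify the prompt for a datapoint, LIT queries the model to generate the corresponding response.

6-e: Compare multiple models side by side

LIT lets you compare models on individual text generation and scoring examples, as well as on aggregated examples for specific metrics. By querying the different loaded models, you can easily compare the differences in their responses.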

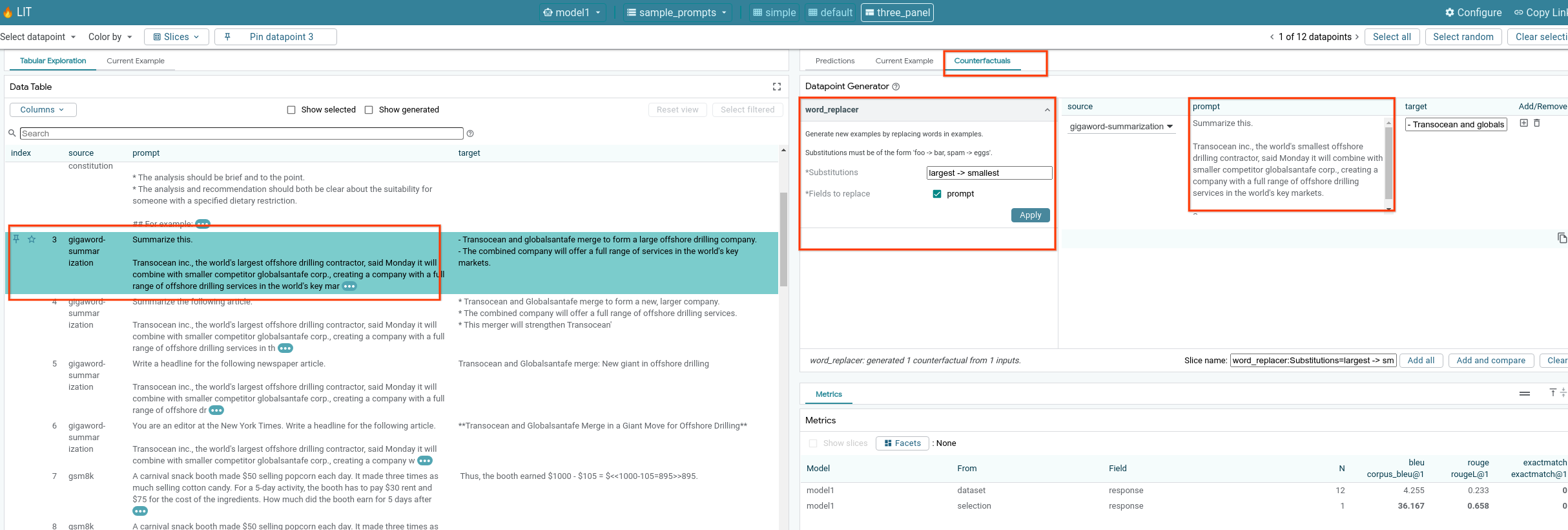

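6-f: Automatic counterfactual generators

You can use automatic counterfactual generators to create alternative inputs and see right away how your model behaves on them.

6-g: Evaluate model performance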

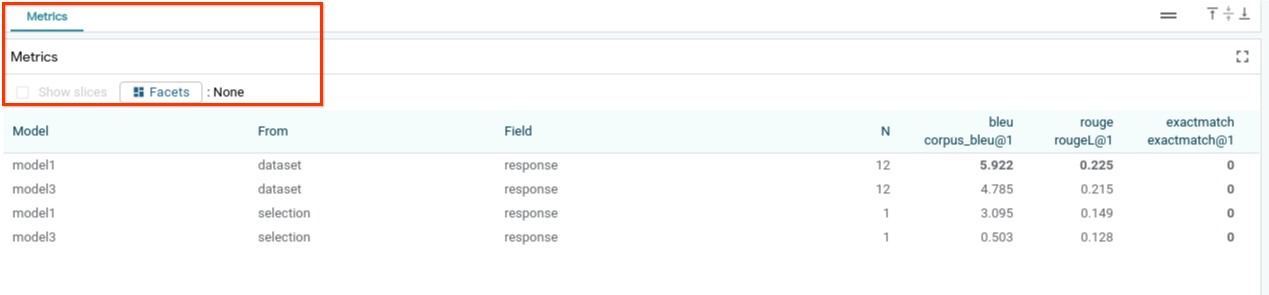

You can evaluate model performance using metrics (currently BLEU and ROUGE scores for text generation are supported) across the entire dataset, or on any subset of filtered or selected examples.

7. Troubleshooting

7-a: Potential access issues and solutions

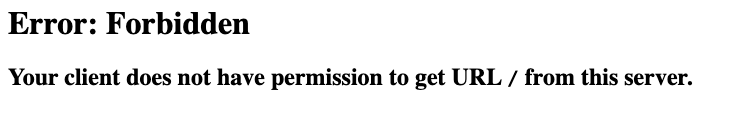

Because --no-allow-unauthenticated is applied when deploying to Cloud Run, you may encounter a Forbidden error.

There are two ways to access the LIT App service.

1. Proxy to a local service

You can proxy the service to localhost using the command below.

# Proxy the service to local host.

gcloud run services proxy $LIT_SERVICE_NAME

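You should then be able to access the LIT server by clicking the proxied service link.

2. Authenticate users directly

You can follow this link to authenticate your users so that they can access the LIT App service directly. This approach can also give a group of users access to the service. For development that involves collaboration among multiple people, this is the more effective option.

7-b: Check that the Model Server started successfully

To confirm that the model server started successfully, query it directly by sending a request. The model server provides three endpoints: predict, tokenize, and salience. Make sure you provide both the prompt field and the target field in your request.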

# Query the model server predict endpoint.

curl -X POST http://YOUR_MODEL_SERVER_URL/predict -H "Content-Type: application/json" -d '{"inputs":[{"prompt":"[YOUR PROMPT]", "target":"[YOUR TARGET]"}]}'

# Query the model server tokenize endpoint.

curl -X POST http://YOUR_MODEL_SERVER_URL/tokenize -H "Content-Type: application/json" -d '{"inputs":[{"prompt":"[YOUR PROMPT]", "target":"[YOUR TARGET]"}]}'

# Query the model server salience endpoint.

curl -X POST http://YOUR_MODEL_SERVER_URL/salience -H "Content-Type: application/json" -d '{"inputs":[{"prompt":"[YOUR PROMPT]", "target":"[YOUR TARGET]"}]}'
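Since the model server is deployed with --no-allow-unauthenticated, a direct request typically needs an identity token as well. The sketch below wraps the three calls in one helper that adds an Authorization header built from gcloud auth print-identity-token. Both function names are hypothetical, and the payload builder does no JSON escaping, so it only suits prompts and targets without quotes or backslashes.

```shell
# Build the request body. No JSON escaping is done here, so keep quotes and
# backslashes out of the prompt and target.
build_payload() {
  printf '{"inputs":[{"prompt":"%s","target":"%s"}]}\n' "$1" "$2"
}

# Send an authenticated POST to one endpoint (predict, tokenize, or salience).
# Arguments: service URL, endpoint, prompt, target. Defined here but not called.
query_model_server() {
  curl -X POST "$1/$2" \
    -H "Authorization: Bearer $(gcloud auth print-identity-token)" \
    -H "Content-Type: application/json" \
    -d "$(build_payload "$3" "$4")"
}

# Print a sample request body.
build_payload "Hello" "world"
```

Call query_model_server with your service URL (including its scheme), an endpoint name, a prompt, and a target to send the request.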

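If you run into an access issue, see section 7-a above.

8. Congratulations

That completes this codelab. Time to chill!

Clean up

To clean up the lab, delete all Google Cloud services you created for it. Use Google Cloud Shell to run the following commands.

If your Google Cloud connection was lost because of inactivity, reset the variables by following the earlier steps.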

# Delete the LIT App Service.

gcloud run services delete $LIT_SERVICE_NAME

If you started a model server, you also need to delete it.

# Delete the Model Service.

gcloud run services delete $MODEL_SERVICE_NAME

Further reading

Continue learning about LIT's features with the materials below.

- Gemma: Link

- LIT open source code base: Git repo

- LIT paper: ArXiv

- LIT prompt debugging paper: ArXiv

- LIT feature video demo: YouTube

- LIT prompt debugging demo: YouTube

- Responsible GenAI Toolkit: Link

Contact

For any questions or issues with this codelab, reach out on GitHub.

License

This work is licensed under a Creative Commons Attribution 4.0 Generic License.