1. Introduction

So you've taken your first steps with TensorFlow.js, tried our pre-made models, or maybe even made your own, but then you saw some cutting-edge research come out in Python and you are curious to see whether it will run in the web browser, so that cool idea you had can become a reality for millions of people in a scalable way. Sound familiar? If so, this is the CodeLab for you!

The TensorFlow.js team has made a convenient command line converter that turns models in the SavedModel format into the TensorFlow.js format, so you can enjoy using such models with the reach and scale of the web.

What you'll learn

In this codelab you will learn how to use the TensorFlow.js command line converter to port a Python-generated SavedModel to the model.json format required for execution on the client side in a web browser.

Specifically:

- How to create a simple Python ML model and save it in the format required by the TensorFlow.js converter.

- How to install and use the TensorFlow.js converter on the SavedModel you exported from Python.

- How to take the files produced by the conversion and use them in your JS web application.

- What to do when something goes wrong (not all models will convert) and what options you have.

Imagine being able to take some newly released research and make that model available to millions of JS developers globally. Or maybe you will use it in your own creation, which anyone in the world can then experience in their web browser, as no complex dependencies or environment setup are required. Ready to get hacking? Let's go!

Share what you convert with us!

You can use what we learn today to try converting some of your favourite models from Python. If you manage to do so, and make a working demo website of the model in action, tag us on social media using the #MadeWithTFJS hashtag for a chance for your project to be featured on our TensorFlow blog or even at future show and tell events. We would love to see more amazing research ported to the web, enabling more people to use such models in innovative or creative ways just like this great example.

2. What is TensorFlow.js?

TensorFlow.js is an open source machine learning library that can run anywhere JavaScript can. It's based upon the original TensorFlow library written in Python and aims to re-create this developer experience and set of APIs for the JavaScript ecosystem.

Where can it be used?

Given the portability of JavaScript, you can now write in one language and perform machine learning across all of the following platforms with ease:

- Client side in the web browser using vanilla JavaScript

- Server side and even IoT devices like Raspberry Pi using Node.js

- Desktop apps using Electron

- Native mobile apps using React Native

TensorFlow.js also supports multiple backends within each of these environments to ensure compatibility and keep things running fast. A "backend" in this context is the hardware-based environment that execution happens within, such as the CPU or WebGL; it does not mean a server-side environment (the backend could be WebGL on the client, for example). Currently TensorFlow.js supports:

- WebGL execution on the device's graphics card (GPU) - this is the fastest way to execute larger models (over 3MB in size) with GPU acceleration.

- WebAssembly (WASM) execution on CPU - to improve CPU performance across devices, including older-generation mobile phones. This is better suited to smaller models (less than 3MB in size), which can actually execute faster on CPU with WASM than with WebGL due to the overhead of uploading content to a graphics processor.

- CPU execution - the fallback should none of the other environments be available. This is the slowest of the three but is always there for you.

Note: You can choose to force one of these backends if you know what device you will be executing on, or you can simply let TensorFlow.js decide for you if you do not specify this.

Client side super powers

Running TensorFlow.js in the web browser on the client machine can lead to several benefits that are worth considering.

Privacy

You can both train and classify data on the client machine without ever sending data to a 3rd party web server. This may be required to comply with local laws such as GDPR, or when processing any data that the user wants to keep on their machine rather than send to a 3rd party.

Speed

Because you do not have to send data to a remote server, inference (the act of classifying the data) can be faster. Even better, you have direct access to the device's sensors, such as the camera, microphone, GPS, accelerometer and more, should the user grant you access.

Reach and scale

Anyone in the world can click a link you send them, open the web page in their browser, and use what you have made. There is no need for a complex server-side Linux setup with CUDA drivers and much more just to use the machine learning system.

Cost

No servers means the only thing you need to pay for is a CDN to host your HTML, CSS, JS, and model files. A CDN is much cheaper than keeping a server (potentially with a graphics card attached) running 24/7.

Server side features

Leveraging the Node.js implementation of TensorFlow.js enables the following features.

Full CUDA support

On the server side, for graphics card acceleration, you must install the NVIDIA CUDA drivers to enable TensorFlow to work with the graphics card (unlike the browser, which uses WebGL with no install needed). However, with full CUDA support you can fully leverage the graphics card's lower-level abilities, leading to faster training and inference times. Performance is on par with the Python TensorFlow implementation, as they both share the same C++ backend.

Model Size

For cutting-edge models from research, you may be working with very large models, maybe gigabytes in size. These models cannot currently be run in the web browser due to the per-tab memory limits of browsers. To run these larger models, you can use Node.js on your own server with the hardware specifications needed to run such a model efficiently.

IoT

Node.js is supported on popular single board computers like the Raspberry Pi, which in turn means you can execute TensorFlow.js models on such devices too.

Speed

Node.js runs JavaScript on the V8 engine, which uses just-in-time compilation. This means you may often see performance gains when using Node.js, as code is optimized at runtime, especially any preprocessing you may be doing. A great example of this can be seen in this case study, which shows how Hugging Face used Node.js to get a 2x performance boost for their natural language processing model.

Now that you understand the basics of TensorFlow.js, where it can run, and some of its benefits, let's start doing useful things with it!

3. Setting up your system

For this tutorial we will be using Ubuntu, a popular Linux distribution that many people use and that is available as a base image on Google Cloud Compute Engine if you choose to follow along on a cloud-based virtual machine.

At the time of writing, we can select the Ubuntu 18.04.4 LTS image when creating a new vanilla Compute Engine instance, which is what we will be using. You can of course use your own machine, or even a different operating system, but installation instructions and dependencies may differ between systems.

Installing TensorFlow (Python version)

You are probably trying to convert an existing Python-based model that you found or will write. Before you can export a "SavedModel" from Python, you will need the Python version of TensorFlow set up on your instance (unless a "SavedModel" for that model is already available to download).

SSH into the cloud machine you created above and then type the following in the terminal window:

Terminal window:

```shell
sudo apt update
sudo apt-get install python3
```

This will ensure we have Python 3 installed on the machine. TensorFlow 2.3, the version installed at the time of writing, supports Python 3.5 to 3.8.

To verify that the correct version is installed, type the following:

Terminal window:

```shell
python3 --version
```

You should see some output indicating the version number, such as Python 3.6.9. If this prints correctly and the version is 3.5 or higher, we are ready to continue.

Next, install pip (Python's package manager) for Python 3 and then upgrade it. Type:

Terminal window:

```shell
sudo apt install python3-pip
pip3 install --upgrade pip
```

Again, we can verify the pip3 installation via:

Terminal window:

```shell
pip3 --version
```

At the time of writing, this command prints pip 20.2.3 to the terminal.

Before we can install TensorFlow, the Python package "setuptools" must be version 41.0.0 or higher. Run the following command to update it to the latest version:

Terminal window:

```shell
pip3 install -U setuptools
```
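If you would rather confirm the installed setuptools version from Python than read pip's output, here is a minimal sketch using only the standard library. `parse_version` and `meets_minimum` are hypothetical helper names, and the simple parsing only handles plain dotted version strings such as 50.3.2 (trailing components like post1 are ignored).

```python
import re

# Minimum setuptools version TensorFlow needs, per the text above.
MIN_SETUPTOOLS = (41, 0, 0)


def parse_version(version):
    """Turn a dotted version string like '50.3.2' into a tuple of ints.

    Parsing stops at the first component that does not start with a digit,
    so '65.5.0.post1' becomes (65, 5, 0).
    """
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version, minimum=MIN_SETUPTOOLS):
    """Return True if version is at least minimum (missing parts count as 0)."""
    parsed = parse_version(version)
    parsed += (0,) * (len(minimum) - len(parsed))
    return parsed >= minimum


if __name__ == "__main__":
    try:
        import setuptools
    except ImportError:
        print("setuptools is not installed")
    else:
        status = "OK" if meets_minimum(setuptools.__version__) else "too old"
        print("setuptools", setuptools.__version__, status)
```

Padding with zeros means a short string such as "41" is treated as 41.0.0 rather than compared as a shorter (and therefore smaller) tuple.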

Finally, we can now install TensorFlow for Python:

Terminal window:

```shell
pip3 install tensorflow
```

This may take some time to complete so please wait until it has finished executing.

Let's check TensorFlow installed correctly. Create a Python file named test.py in your current directory:

Terminal window:

```shell
nano test.py
```

Once nano opens, write some Python code to print the installed version of TensorFlow:

test.py:

```python
import tensorflow as tf
print(tf.__version__)
```

Press CTRL + O (then Enter to confirm the file name) to write changes to disk, and then CTRL + X to exit the nano editor.

Now we can run this Python file to see the version of TensorFlow printed to screen:

Terminal window:

```shell
python3 test.py
```

At the time of writing, this prints 2.3.1, the version of TensorFlow for Python that was installed.

4. Making a Python model

The next step of this codelab walks through creating a simple Python model and saving the trained result in the "SavedModel" format, ready for the TensorFlow.js command line converter. The same principle applies to any Python model you are trying to convert, but we will keep this code simple so everyone can understand it.

Let's edit the test.py file we created in the previous section and update the code as follows:

test.py:

```python
import tensorflow as tf
print(tf.__version__)

# Import NumPy - package for working with arrays in Python.
import numpy as np

# Import useful keras functions - this is similar to the
# TensorFlow.js Layers API functionality.
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Dense

# Create a new dense layer with 1 unit, and input shape of [1].
layer0 = Dense(units=1, input_shape=[1])
model = Sequential([layer0])

# Compile the model using stochastic gradient descent as optimiser
# and the mean absolute error loss function.
model.compile(optimizer='sgd', loss='mean_absolute_error')

# Provide some training data! Here we are using some fictional data
# for house square footage and house price (which is simply 1000x the
# square footage) which our model must learn for itself.
xs = np.array([800.0, 850.0, 900.0, 950.0, 980.0, 1000.0, 1050.0, 1075.0, 1100.0, 1150.0, 1200.0, 1250.0, 1300.0, 1400.0, 1500.0, 1600.0, 1700.0, 1800.0, 1900.0, 2000.0], dtype=float)
ys = np.array([800000.0, 850000.0, 900000.0, 950000.0, 980000.0, 1000000.0, 1050000.0, 1075000.0, 1100000.0, 1150000.0, 1200000.0, 1250000.0, 1300000.0, 1400000.0, 1500000.0, 1600000.0, 1700000.0, 1800000.0, 1900000.0, 2000000.0], dtype=float)

# Train the model for 500 epochs.
model.fit(xs, ys, epochs=500, verbose=0)

# Test the trained model on a test input value.
# The input has shape [1, 1]: one sample with one feature.
print(model.predict(np.array([[1200.0]])))

# Save the model we just trained to the "SavedModel" format to the
# same directory our test.py file is located.
tf.saved_model.save(model, './')
```

This code trains a very simple linear regression model to estimate the relationship between our xs (inputs) and ys (outputs), and then saves the trained model to disk. Check the inline comments for more details on what each line does.
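To see what the model should converge towards, you can fit the same data in closed form. The sketch below uses ordinary least squares in plain Python; note this is a different technique from the SGD training Keras performs and is only meant as a sanity check, and `fit_line` is a hypothetical helper. Because every price is exactly 1000 times the square footage, the best-fit line has slope 1000 and intercept 0, so a well-trained model should predict roughly 1,200,000 for an input of 1200.

```python
# Same fictional training data as test.py: price is 1000x the square footage.
xs = [800.0, 850.0, 900.0, 950.0, 980.0, 1000.0, 1050.0, 1075.0, 1100.0, 1150.0,
      1200.0, 1250.0, 1300.0, 1400.0, 1500.0, 1600.0, 1700.0, 1800.0, 1900.0, 2000.0]
ys = [x * 1000.0 for x in xs]


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(xs, ys)
print(f"slope={slope:.3f} intercept={intercept:.3f}")
print("prediction for 1200 sqft:", slope * 1200.0 + intercept)
```

The Keras model's single dense unit holds exactly these two numbers (a weight and a bias), so after training its prediction should land near this closed-form answer, though SGD with a fixed number of epochs will rarely match it exactly.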

After running this program (by calling python3 test.py), you should see some new files and folders in the current directory:

- test.py

- saved_model.pb

- assets

- variables

We have now generated the files the TensorFlow.js converter needs to convert this model to run in the browser!
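Before running the converter, a quick check that the export actually produced a SavedModel can save some confusion. This is a minimal sketch using only the standard library; `looks_like_saved_model` is a hypothetical helper, and it only checks for the saved_model.pb file and variables folder listed above rather than validating their contents.

```python
import os


def looks_like_saved_model(directory):
    """Return a list of problems; an empty list means the layout looks right."""
    problems = []
    if not os.path.isfile(os.path.join(directory, "saved_model.pb")):
        problems.append("missing saved_model.pb")
    if not os.path.isdir(os.path.join(directory, "variables")):
        problems.append("missing variables/ folder")
    return problems


if __name__ == "__main__":
    issues = looks_like_saved_model("./")
    print("SavedModel looks OK" if not issues else "Problems: " + ", ".join(issues))
```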

5. Converting SavedModel to TensorFlow.js format

Install TensorFlow.js converter

To install the converter, run the following command:

Terminal window:

```shell
pip3 install tensorflowjs
```

That was easy.

Rather than the interactive wizard version of the converter (tensorflowjs_wizard), we will use the command line converter (tensorflowjs_converter) and explicitly pass it the parameters needed to convert the SavedModel we just created:

Terminal window:

```shell
tensorflowjs_converter \
    --input_format=keras_saved_model \
    ./ \
    ./predict_houses_tfjs
```

What is going on here? First, we call the tensorflowjs_converter command we just installed and specify that we are converting a Keras SavedModel.

In our example code above, you will note that we imported Keras and used its higher-level layers API to create our model. If you had not used Keras in your Python code, you may want to use a different input format:

- keras - to load the Keras format (HDF5 file type).

- tf_saved_model - to load a model that uses TensorFlow core APIs instead of Keras.

- tf_frozen_model - to load a model that contains frozen weights.

- tf_hub - to load a model from TensorFlow Hub.

You can learn more about these other formats here.

The next two parameters specify where the SavedModel is located (the current directory in our example) and which directory the converted output should be written to (a folder called "predict_houses_tfjs" in the current directory).
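If you convert several models, you may prefer to script the command above from Python. The sketch below assumes tensorflowjs_converter is on your PATH after the pip install; `build_converter_command` and `run_converter` are hypothetical helpers that only know the input formats described in this section. Some formats, such as tf_frozen_model, need extra flags that this sketch does not add.

```python
import subprocess

# Input formats described in this section (not an exhaustive list).
KNOWN_FORMATS = {"keras_saved_model", "keras", "tf_saved_model",
                 "tf_frozen_model", "tf_hub"}


def build_converter_command(input_format, input_path, output_path):
    """Return the argument list for a tensorflowjs_converter call."""
    if input_format not in KNOWN_FORMATS:
        raise ValueError(f"unknown input format: {input_format}")
    return ["tensorflowjs_converter",
            f"--input_format={input_format}",
            input_path,
            output_path]


def run_converter(input_format, input_path, output_path):
    """Run the converter; check=True raises CalledProcessError on failure."""
    command = build_converter_command(input_format, input_path, output_path)
    subprocess.run(command, check=True)


if __name__ == "__main__":
    # Print the command rather than running it, so you can inspect it first.
    print(" ".join(build_converter_command(
        "keras_saved_model", "./", "./predict_houses_tfjs")))
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems if a path contains spaces.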

Running the above command creates a new folder in the current directory named predict_houses_tfjs that contains:

- model.json

- group1-shard1of1.bin

These are the files we need to run the model in the web browser. Save these files as we shall use them in the next section.
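The model.json file describes the network and lists the binary weight shard files it expects to find alongside it. As a minimal sketch, assuming the usual layout where a top-level weightsManifest holds groups that each have a list of paths, the following lists those shard names and reports any that are missing from the output folder. `shard_paths` and `missing_shards` are hypothetical helpers.

```python
import json
import os


def shard_paths(model_json_path):
    """Return every weight shard path listed in a TensorFlow.js model.json."""
    with open(model_json_path) as f:
        model = json.load(f)
    return [path
            for group in model.get("weightsManifest", [])
            for path in group.get("paths", [])]


def missing_shards(model_json_path):
    """Return shard paths that do not exist in the same folder as model.json."""
    folder = os.path.dirname(os.path.abspath(model_json_path))
    return [p for p in shard_paths(model_json_path)
            if not os.path.isfile(os.path.join(folder, p))]


if __name__ == "__main__":
    path = "./predict_houses_tfjs/model.json"
    if os.path.isfile(path):
        print("shards:", shard_paths(path))
        print("missing:", missing_shards(path))
```

Keeping model.json and its shard files together matters later, because the shard paths are resolved relative to wherever model.json is hosted.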

6. Using our converted model in the browser

Host the converted files

First we must place the model.json and *.bin files that were generated on a web server so we can access them from our web page. For this demo we will use Glitch.com so it is easy for you to follow along. However, if you come from a web engineering background, you may choose to fire up a simple HTTP server on your current Ubuntu server instance instead. The choice is yours.

Uploading files to Glitch

- Sign in to Glitch.com

- Use this link to Clone our boilerplate TensorFlow.js project. This contains a skeleton html, css, and js files which import the TensorFlow.js library for us ready to use.

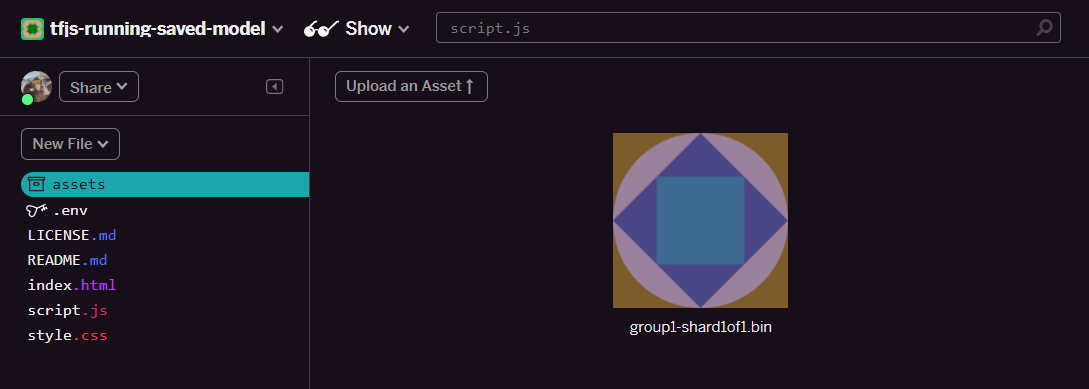

- Click on the "assets" folder in the left hand panel.

- Click "upload an asset" and select

group1-shard1of1.binto be uploaded into this folder. It should now look like this once uploaded:

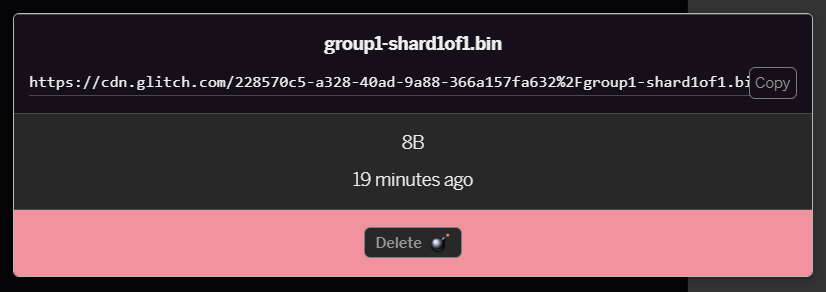

- If you click on the

group1-shard1of1.binfile you just uploaded you will be able to copy the URL to its location. Copy this path now as shown:

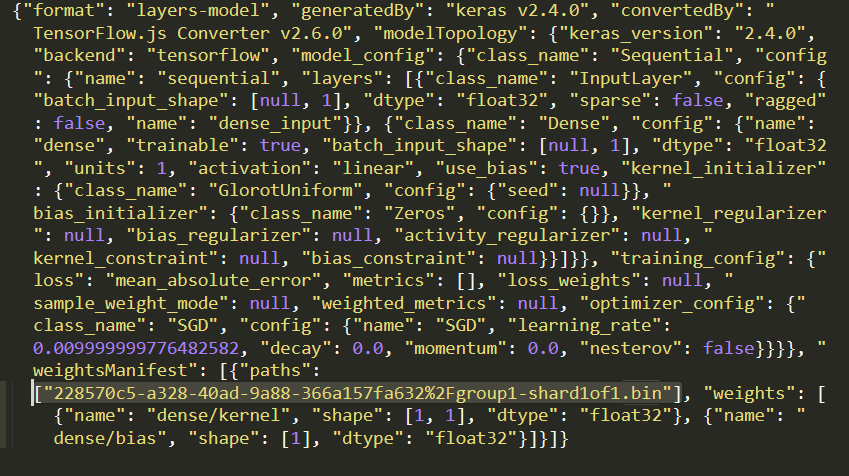

- Now edit

model.jsonusing your favourite text editor on your local machine and search (using CTRL+F) for thegroup1-shard1of1.binfile which will be mentioned somewhere within it.

Replace this filename with the url you copied from step 5, but delete the leading https://cdn.glitch.com/ that glitch generates from the copied path.

After editing it should look something like this (note how the leading server path has been removed so only the resulting uploaded filename is kept):  7. Now save and upload this edited

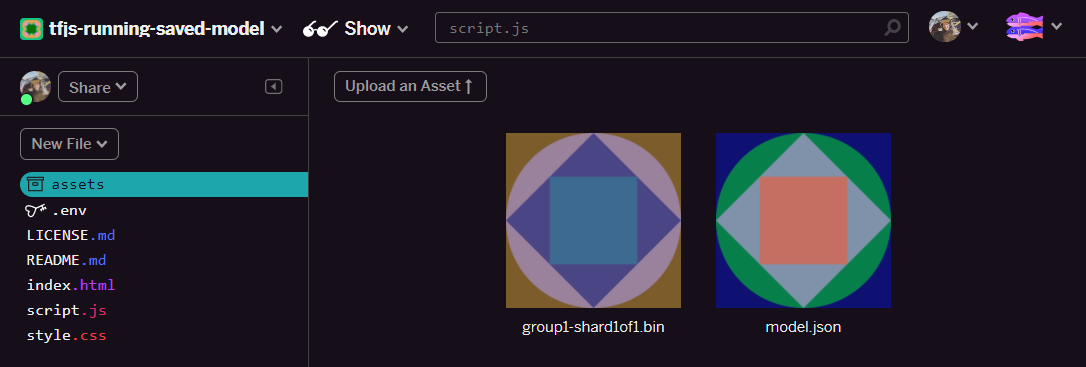

7. Now save and upload this edited model.json file to glitch by clicking on assets, then click the "upload an asset" button (important). If you do not use the physical button, and drag and drop, it will be uploaded as an editable file instead of on the CDN which will not be in the same folder and relative path is assumed when TensorFlow.js attempts to download the binary files for a given model. If you have done it correctly you should see 2 files in the assets folder like this:
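Editing model.json by hand is fine for a single shard, but the same edit can be scripted. This sketch assumes the copied Glitch asset URL starts with https://cdn.glitch.com/ as described in the steps above; `rewrite_shard_paths` is a hypothetical helper that swaps each shard filename in weightsManifest for the copied URL with that prefix removed, leaving the rest of the file unchanged. The example URL in the demo is made up.

```python
import json

GLITCH_PREFIX = "https://cdn.glitch.com/"


def rewrite_shard_paths(model, copied_urls):
    """Replace shard filenames in a parsed model.json with Glitch asset paths.

    model: dict loaded from model.json (modified in place and returned).
    copied_urls: maps original shard filename -> full URL copied from Glitch.
    """
    for group in model.get("weightsManifest", []):
        new_paths = []
        for path in group.get("paths", []):
            url = copied_urls.get(path)
            if url is None:
                new_paths.append(path)  # no URL supplied; keep the original
            elif url.startswith(GLITCH_PREFIX):
                new_paths.append(url[len(GLITCH_PREFIX):])
            else:
                raise ValueError(f"unexpected URL for {path}: {url}")
        group["paths"] = new_paths
    return model


if __name__ == "__main__":
    example = {"weightsManifest": [{"paths": ["group1-shard1of1.bin"], "weights": []}]}
    # Hypothetical copied URL, for illustration only.
    urls = {"group1-shard1of1.bin": GLITCH_PREFIX + "example-id%2Fgroup1-shard1of1.bin"}
    print(json.dumps(rewrite_shard_paths(example, urls)["weightsManifest"]))
```

To apply it to a real file, load model.json with json.load, call the function with the URL you copied, and write the result back with json.dump before uploading.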

Great! We are now ready to use our saved files with some actual code in the browser.

Loading the model

Now that we have hosted our converted files, we can write a simple web page to load these files and use them to make a prediction. Open script.js in the Glitch project and replace its contents with the following, changing the MODEL_URL constant to point to the Glitch.com link for the model.json file you uploaded:

script.js:

```javascript
// Grab a reference to our status text element on the web page.
// Initially we print out the loaded version of TFJS.
const status = document.getElementById('status');
status.innerText = 'Loaded TensorFlow.js - version: ' + tf.version.tfjs;

// Specify location of our model.json file we uploaded to the Glitch.com CDN.
const MODEL_URL = 'YOUR MODEL.JSON URL HERE! CHANGE THIS!';

// Specify a test value we wish to use in our prediction.
// Here we use 950, so we expect the result to be close to 950,000.
const TEST_VALUE = 950.0;

// Create an asynchronous function.
async function run() {
  // Load the model from the CDN.
  const model = await tf.loadLayersModel(MODEL_URL);

  // Print out the architecture of the loaded model.
  // This is useful to see that it matches what we built in Python.
  // summary() logs to the console itself, so no console.log is needed.
  model.summary();

  // Create a 2D tensor of shape [1, 1] (one sample with one feature)
  // to match the model's input shape of [null, 1].
  const input = tf.tensor2d([[TEST_VALUE]]);

  // Actually make the prediction.
  const result = model.predict(input);

  // Grab the result of prediction using dataSync method
  // which ensures we do this synchronously.
  status.innerText = 'Input of ' + TEST_VALUE +
      ' sqft predicted as $' + result.dataSync()[0];
}

// Call our function to start the prediction!
run();
```

Once you have changed the MODEL_URL constant to point to your model.json path, running the above code produces the output shown below.

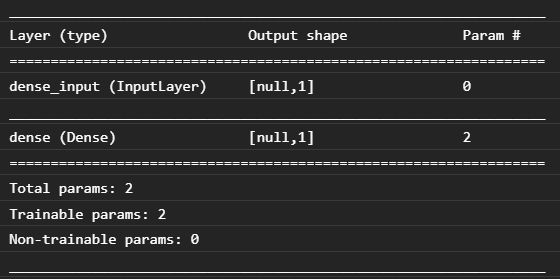

If we inspect the console of the web browser (press F12 to bring up the browser's developer tools), we can also see the printed summary of the loaded model.

Comparing this with our Python code at the start of this codelab, we can confirm that this is the same network we created: a single input feeding one dense layer with one unit.

Congratulations! You just ran a converted Python trained model in the web browser!

7. Models that do not convert

There will be times when more complex models that compile down to less common operations cannot be converted. The browser-based version of TensorFlow.js is a complete rewrite of TensorFlow, so it does not yet support all of the low-level ops that the TensorFlow C++ API has (there are thousands), though more are being added over time as the project grows and the core ops become more stable.

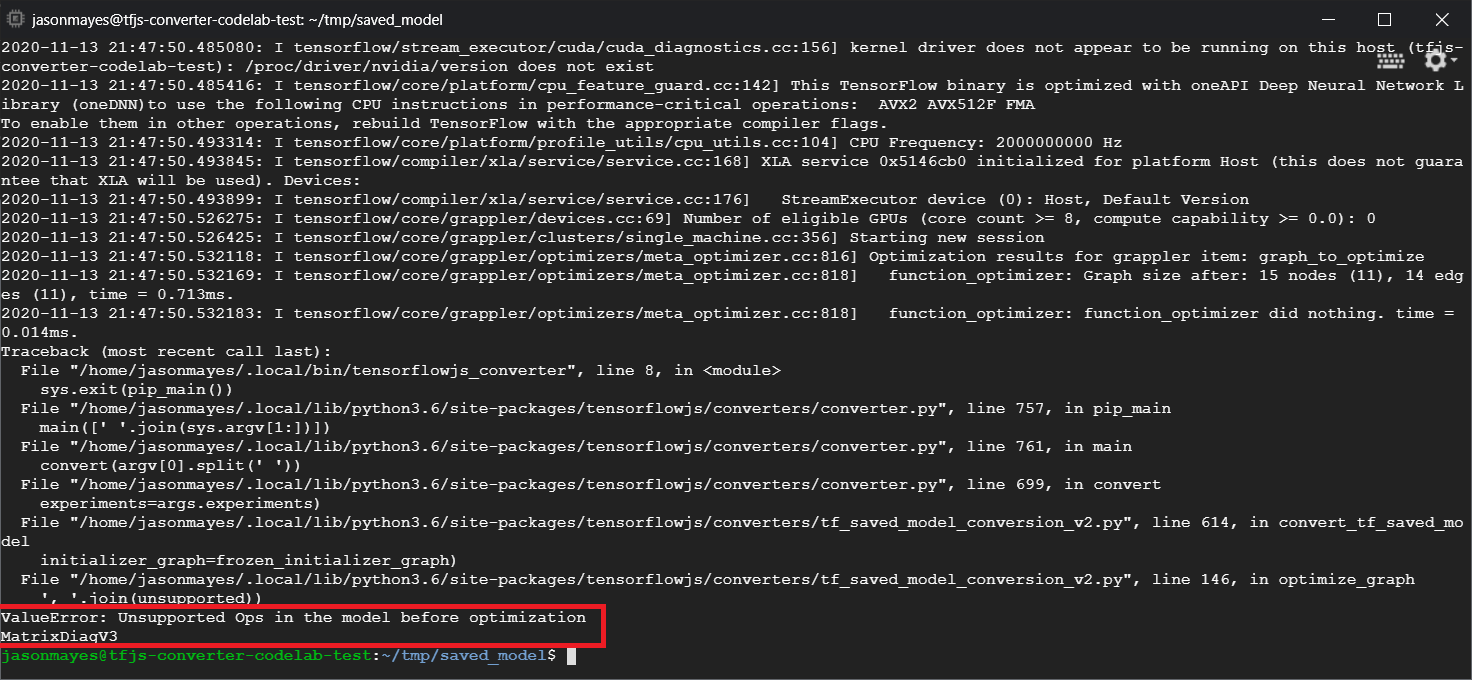

At the time of writing, one such function in TensorFlow Python that generates an unsupported op when exported as a SavedModel is linalg.diag. If we try to convert a SavedModel that uses it (Python TensorFlow itself supports the resulting op), we will see an error similar to the one shown below:

Here we can see highlighted in red that the linalg.diag call compiled down to produce an op named MatrixDiagV3 which is not supported by TensorFlow.js in the web browser at the time of writing this codelab.

What to do?

You have two options.

- Implement this missing op in TensorFlow.js. We are an open source project and welcome contributions for things like new ops; check this guide on writing new ops for TensorFlow.js. If you do manage to do this, you can then use the --skip_op_check flag on our command line converter to ignore this error and continue converting anyway (it will assume the op is available in the new build of TensorFlow.js you created with the missing op supported).

- Determine what part of your Python code produced the unsupported operation in the SavedModel file you exported. In a small codebase this may be easy to locate, but in more complex models it could require quite some investigation, as there is currently no method to identify the high-level Python function call that produced a given op once it is in the SavedModel file format. Once located, however, you can potentially change that code to use a different method that is supported.
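For the second option, a first step is simply listing which ops a SavedModel contains and comparing them against what TensorFlow.js supports. The sketch below is a hypothetical helper pair, not part of the converter: `ops_in_saved_model` parses saved_model.pb with TensorFlow's SavedModel protocol buffer (so it needs TensorFlow installed) and walks both the top-level graph and the tf.function bodies in its function library, and `find_unsupported` counts ops missing from an allow-list. The allow-list in the demo is a tiny made-up example; for real checks, use the list of supported ops published by the TensorFlow.js converter project. Node names sometimes carry the name scope (for example a Keras layer name) that created them, which can narrow the search, but that is a heuristic rather than a reliable mapping back to your Python code.

```python
import os
from collections import Counter


def find_unsupported(op_names, supported_ops):
    """Count how often each op outside supported_ops appears."""
    return Counter(op for op in op_names if op not in supported_ops)


def ops_in_saved_model(saved_model_dir):
    """Yield (node_name, op_type) for every node in a SavedModel, including
    nodes inside tf.function bodies. Requires TensorFlow to be installed."""
    # Imported here so the rest of this sketch runs without TensorFlow.
    from tensorflow.core.protobuf import saved_model_pb2

    saved_model = saved_model_pb2.SavedModel()
    with open(os.path.join(saved_model_dir, "saved_model.pb"), "rb") as f:
        saved_model.ParseFromString(f.read())
    for meta_graph in saved_model.meta_graphs:
        graph_def = meta_graph.graph_def
        for node in graph_def.node:
            yield node.name, node.op
        for function in graph_def.library.function:
            for node in function.node_def:
                yield function.signature.name + "/" + node.name, node.op


if __name__ == "__main__":
    # Tiny hypothetical allow-list and op list, for illustration only.
    supported = {"MatMul", "BiasAdd", "Placeholder", "Identity"}
    ops = ["Placeholder", "MatMul", "BiasAdd", "MatrixDiagV3", "Identity"]
    print(find_unsupported(ops, supported))
```

With TensorFlow installed, you could call find_unsupported((op for _, op in ops_in_saved_model("./")), supported), then search the node names from ops_in_saved_model for any op it reports.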

8. Congratulations

Congratulations, you have taken your first steps in using a Python model via TensorFlow.js in the web browser!

Recap

In this codelab we learnt how to:

- Set up our Linux environment to install Python based TensorFlow

- Export a Python "SavedModel"

- Install the TensorFlow.js command line converter

- Use the TensorFlow.js command line converter to create the required client side files

- Use the generated files in a real web application

- Identify the models that will not convert and what would need to be implemented to allow them to convert in the future.

What's next?

Remember to tag us in anything you create using #MadeWithTFJS for a chance to be featured on social media or even showcased at future TensorFlow events. We would love to see what you convert and use client side in the browser!

More TensorFlow.js codelabs to go deeper

- Write a neural network from scratch in TensorFlow.js

- Make a smart webcam that can detect objects

- Custom image classification using transfer learning in TensorFlow.js