1. What you'll learn

- How to use the Gemini CLI to generate a complete ADK agent configuration.

- How to enhance an agent's personality by improving its instructions.

- How to add "grounding" to your agent by giving it a google_search tool to answer questions about recent events.

- How to generate a custom avatar for your companion using an MCP server with Gemini 2.5 Flash Image (Nano Banana).

The AI Companion App

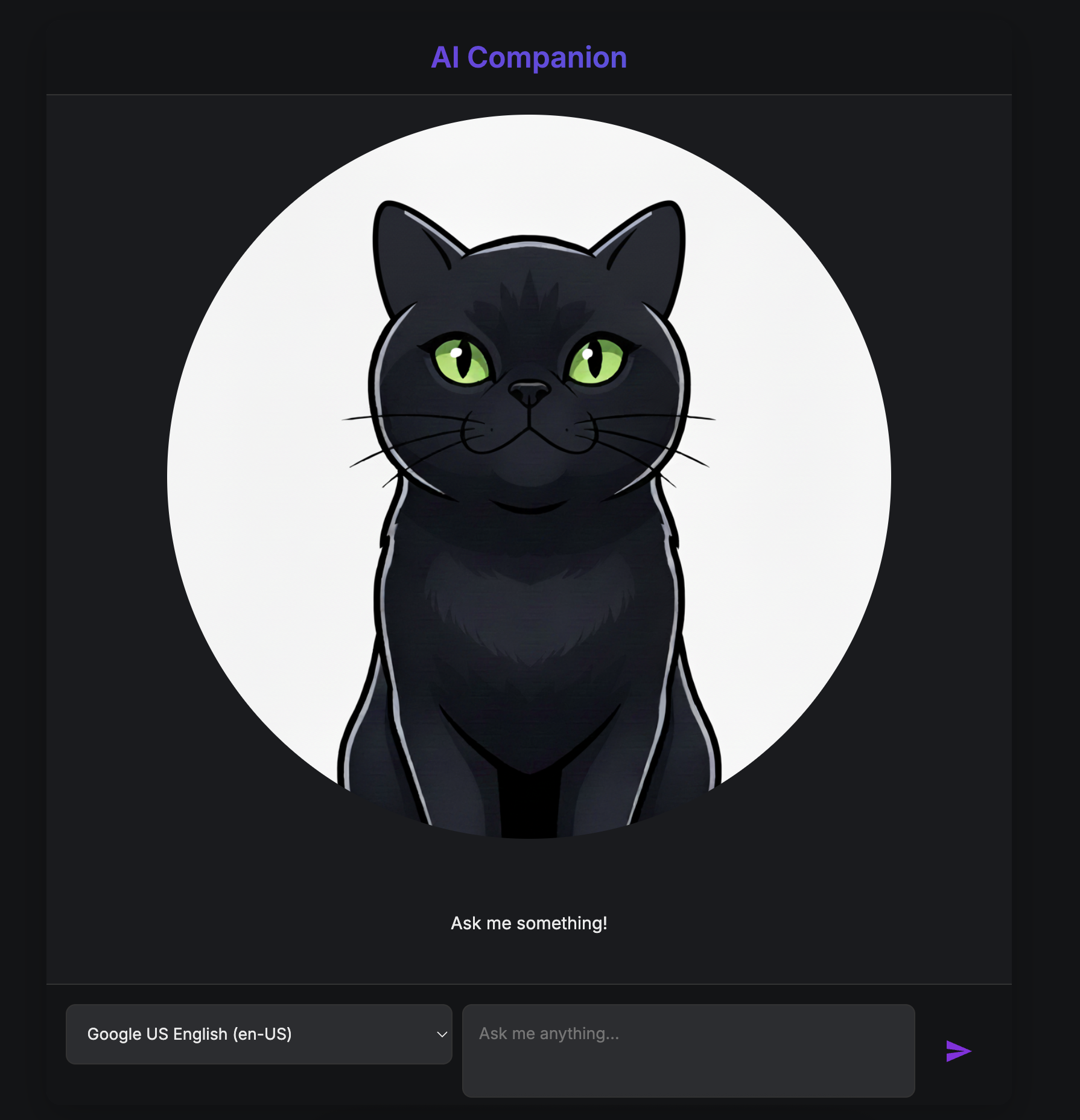

In this Codelab, you will bring to life a visual, interactive AI companion. This is more than just a standard, text-in-text-out chatbot. Imagine a character living on a webpage. You type a message, and instead of just seeing text back, the character looks at you and responds out loud, with its mouth moving in sync with its words.

You will start with a pre-built web application—a digital "puppet" that has a face but no mind of its own. It can only repeat what you type. Your mission is to build its brain and personality from the ground up.

Throughout this workshop, you will progressively add layers of intelligence and customization, transforming this simple puppet into a unique and capable companion. You will be:

- Giving it a core intelligence using the ADK (Agent Development Kit) for Python to understand and generate language.

- Crafting its unique personality by writing the core instructions that define its character.

- Granting it superpowers by giving it tools to access real-time information from the internet.

- Designing its custom look by using AI to generate a unique avatar.

By the end, you will have a fully functional and personalized AI companion, created by you.

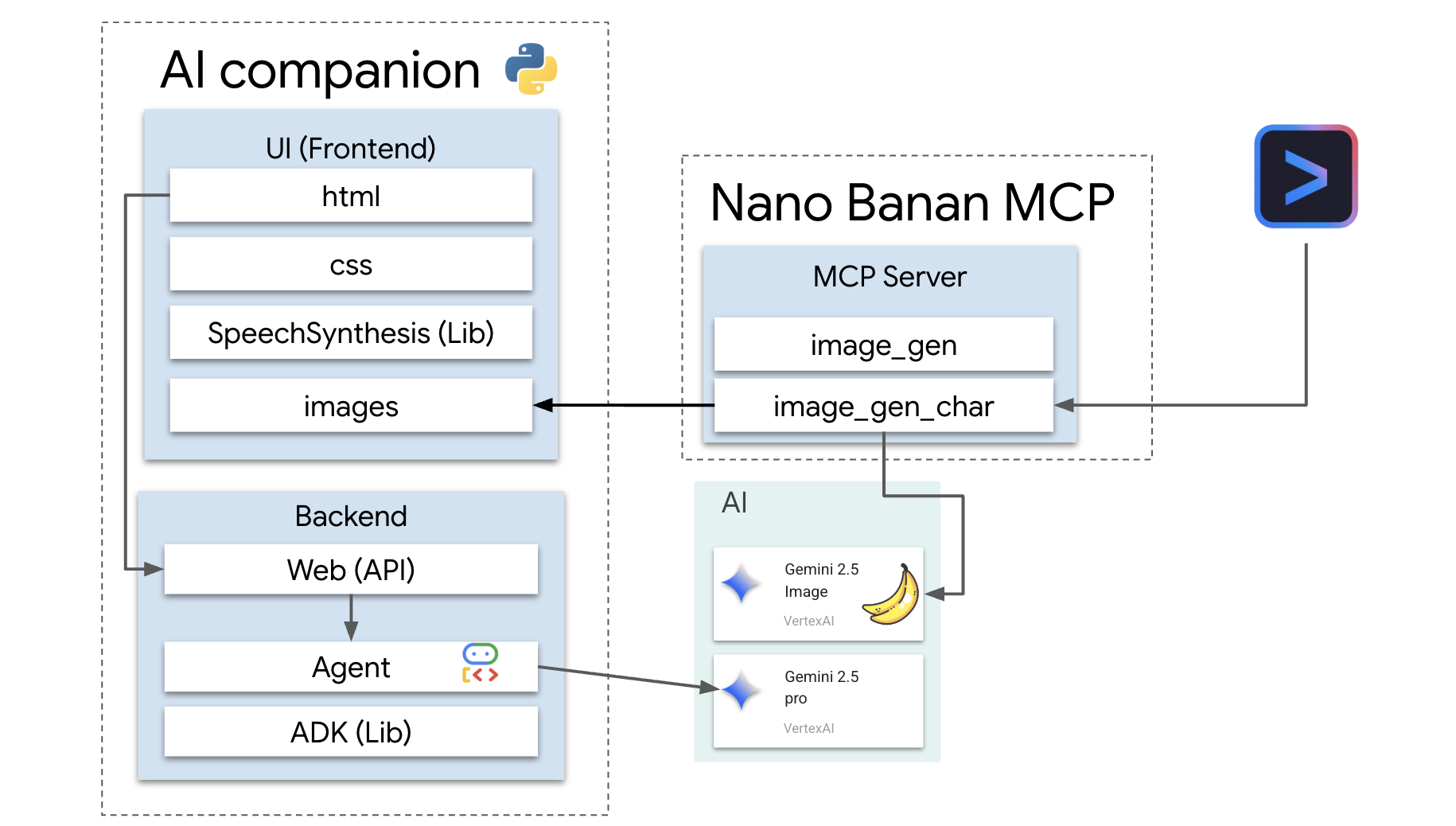

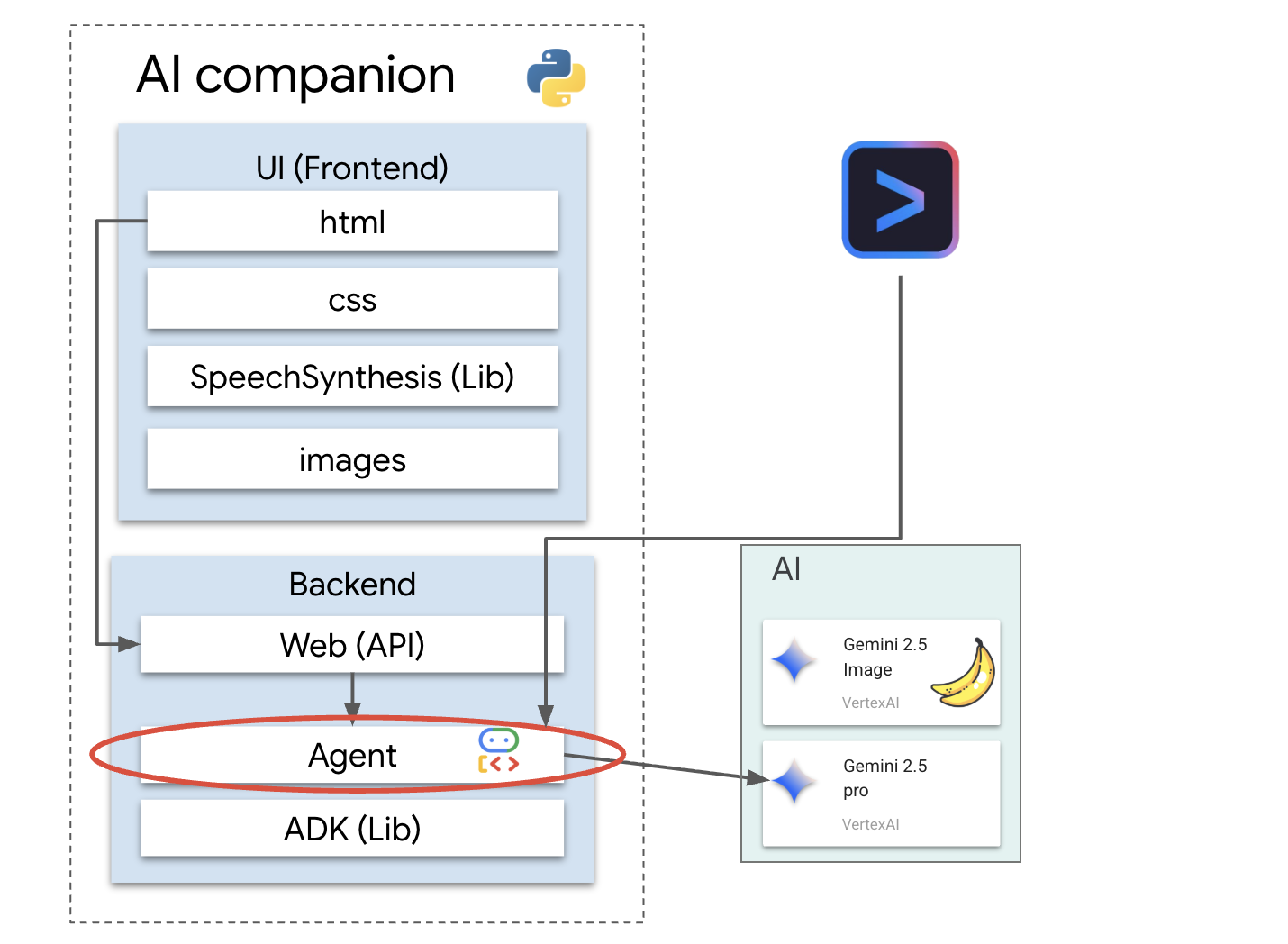

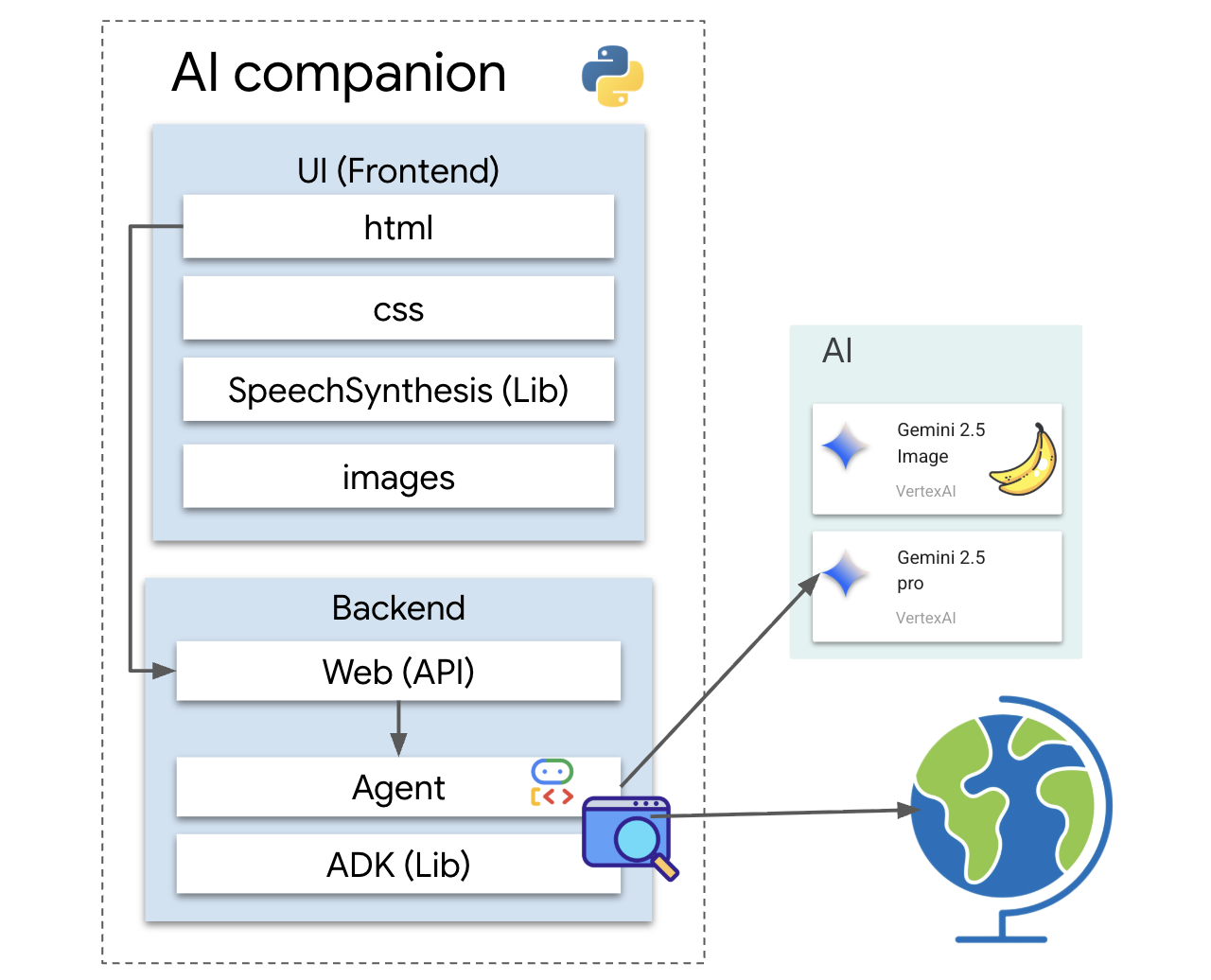

Architecture

Our application follows a simple but powerful pattern. We have a Python backend that serves an API. This backend will contain our ADK agent, which acts as the "brain." Any user interface (like the JavaScript frontend from the skeleton app, a mobile app, or even a command-line tool) can then interact with this brain through the API.

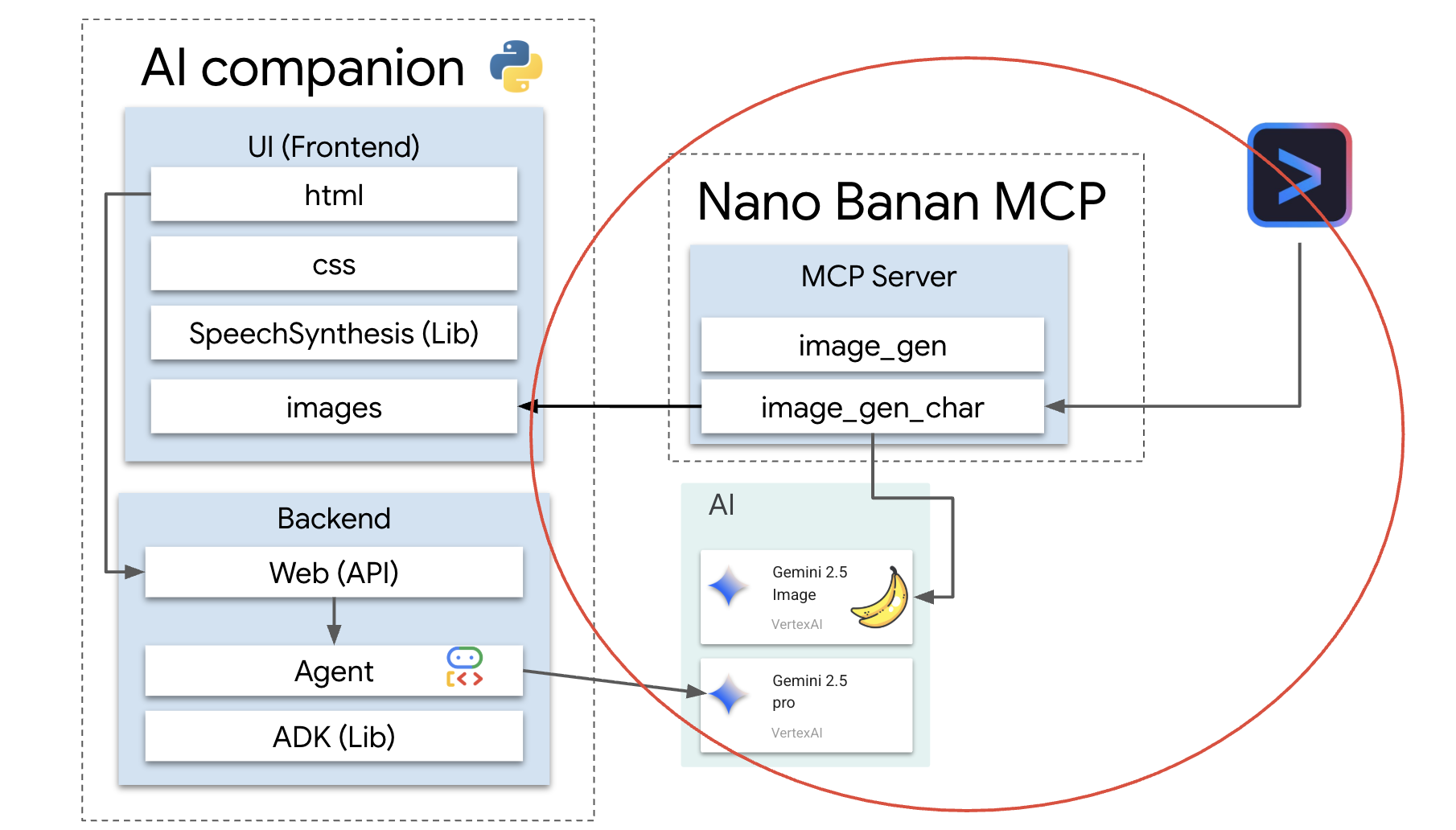
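The API boundary is what lets any front end talk to the same brain. As a rough illustration only, here is a standard-library Python sketch of that pattern. The /chat path, the {"message": ...} and {"reply": ...} JSON fields, and port 8080 are hypothetical and are not taken from the project's app.py. The echo_brain function mimics the starter "puppet"; swapping it for a function that calls the agent would change the behavior without touching any client.

```python
# Hypothetical sketch of the "backend serves an API, agent is the brain" pattern.
# The /chat endpoint, JSON field names, and port are illustrative assumptions.
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Callable


def echo_brain(message: str) -> str:
    """The starter 'puppet': repeat whatever the user typed."""
    return message


def make_handler(brain: Callable[[str], str]):
    """Build a request handler class that delegates every reply to `brain`."""

    class ChatHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/chat":  # hypothetical endpoint
                self.send_error(404)
                return
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length) or b"{}")
            reply = brain(payload.get("message", ""))
            body = json.dumps({"reply": reply}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # silence per-request logging

    return ChatHandler


def make_server(brain: Callable[[str], str], host: str = "127.0.0.1",
                port: int = 8080) -> ThreadingHTTPServer:
    """Create (but do not start) the server; call .serve_forever() to run it."""
    return ThreadingHTTPServer((host, port), make_handler(brain))
```

To try it locally, you could run make_server(echo_brain).serve_forever() and POST {"message": "hi"} to http://127.0.0.1:8080/chat.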

In addition to this, we will explore a more advanced concept by starting a local MCP (Model Context Protocol) server. This server acts as a specialized tool bridge for image generation. We will then use the Gemini CLI to command this MCP server, instructing it to generate a unique look for our AI companion.

Claim your credits

Follow these instructions carefully to provision your workshop resources.

Before You Start

WARNING!

- Use a personal Gmail account. Corporate or school-managed accounts will NOT work.

- Use Google Chrome in Incognito mode to prevent account conflicts.

Open a new Incognito window, paste your event link, and sign in with your personal Gmail.

👉 Click below to copy your special event link:

goo.gle/devfest-boston-ai

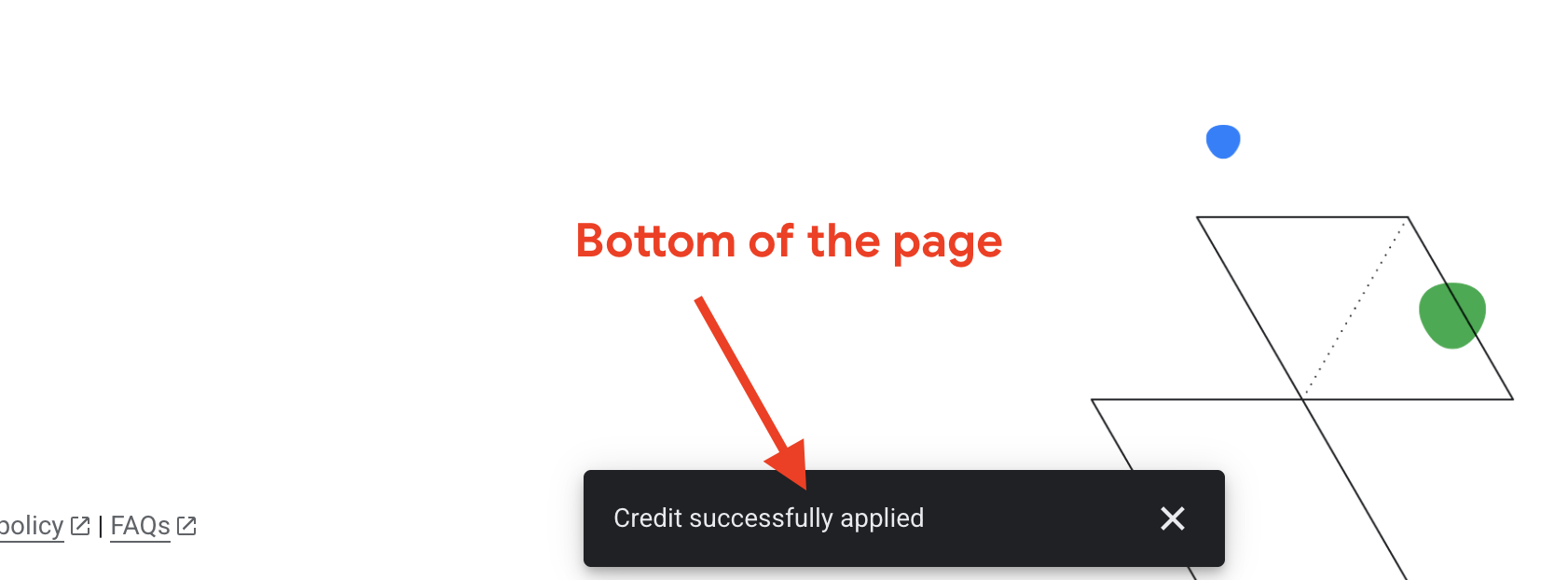

Accept the Google Cloud Platform Terms of Service. Once the credit is applied, you'll see a message confirming it.

Create & Configure Project

Now that your credits are applied, set up your project environment.

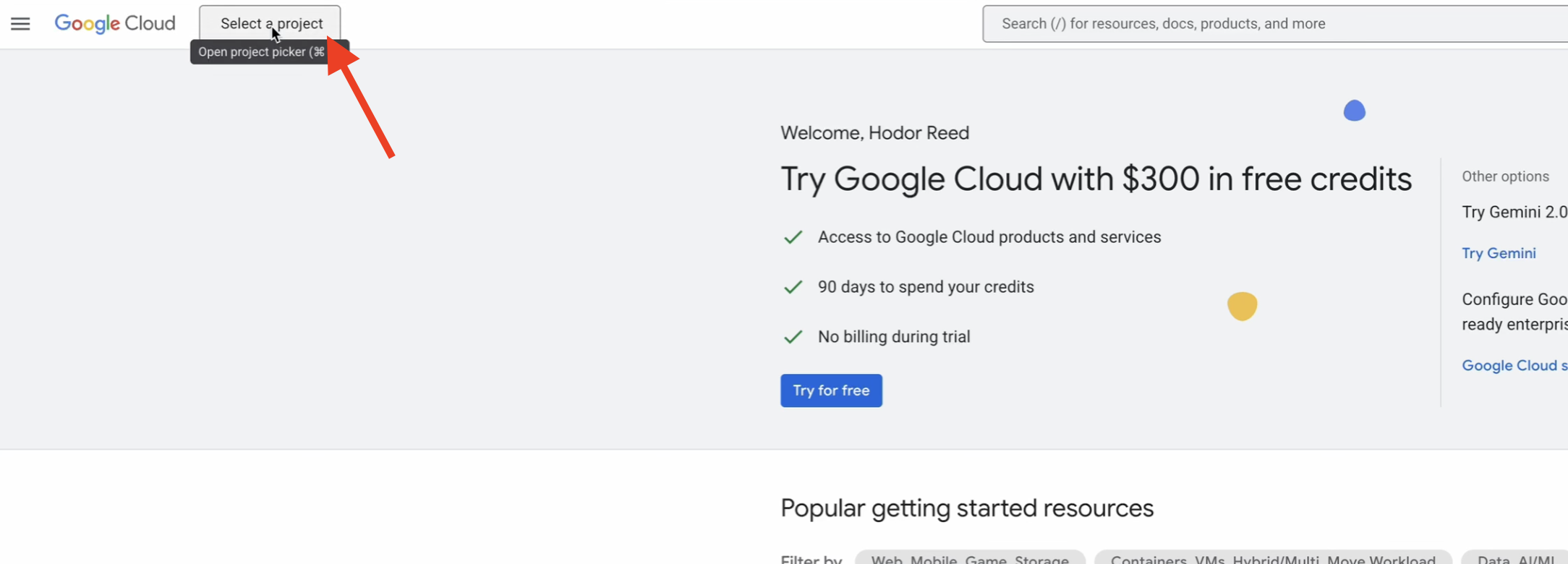

👉 Go to the Google Cloud Console. Click below to copy the link:

https://console.cloud.google.com/

👉 In the console's top navigation bar, click Select a project, then New Project in the top right corner.

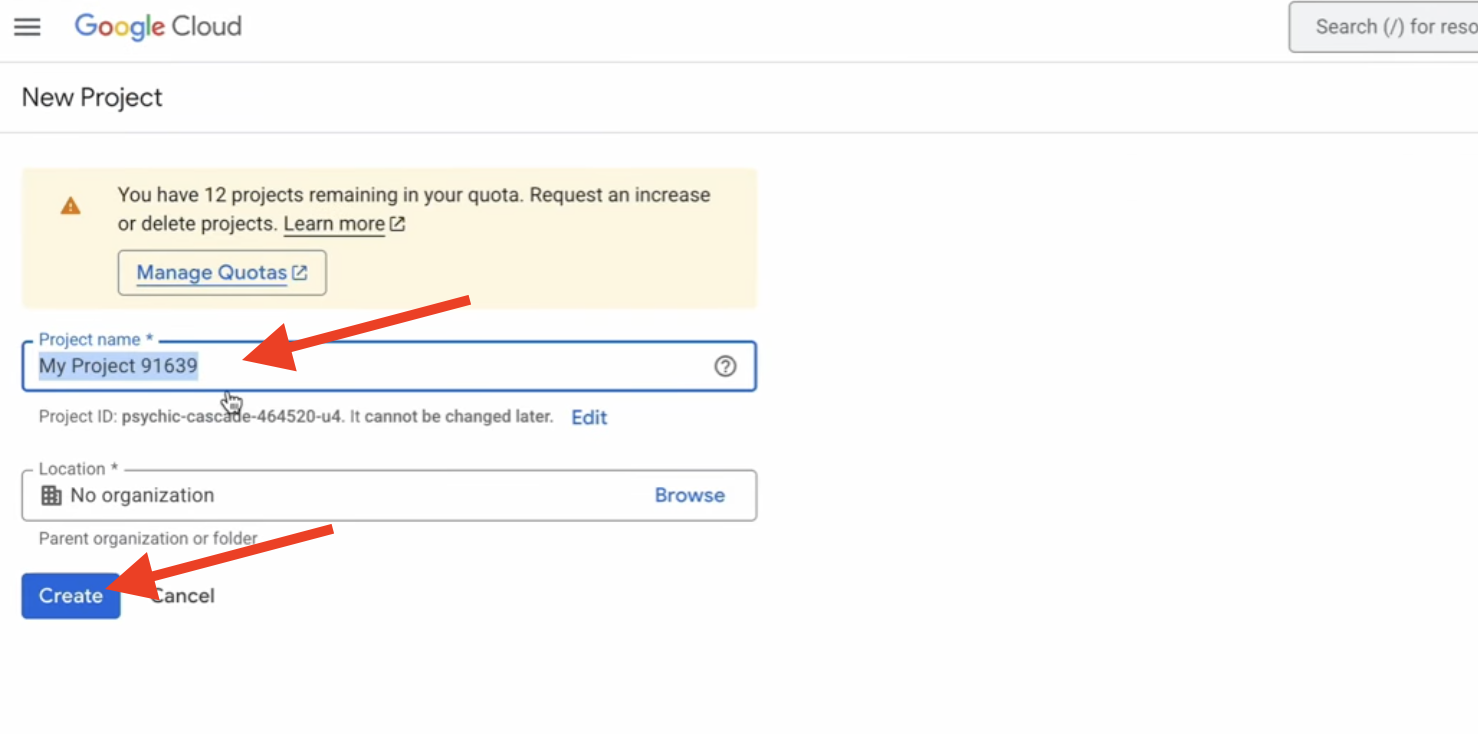

👉 Give your project a name, leave the organization set to No organization, and click Create.

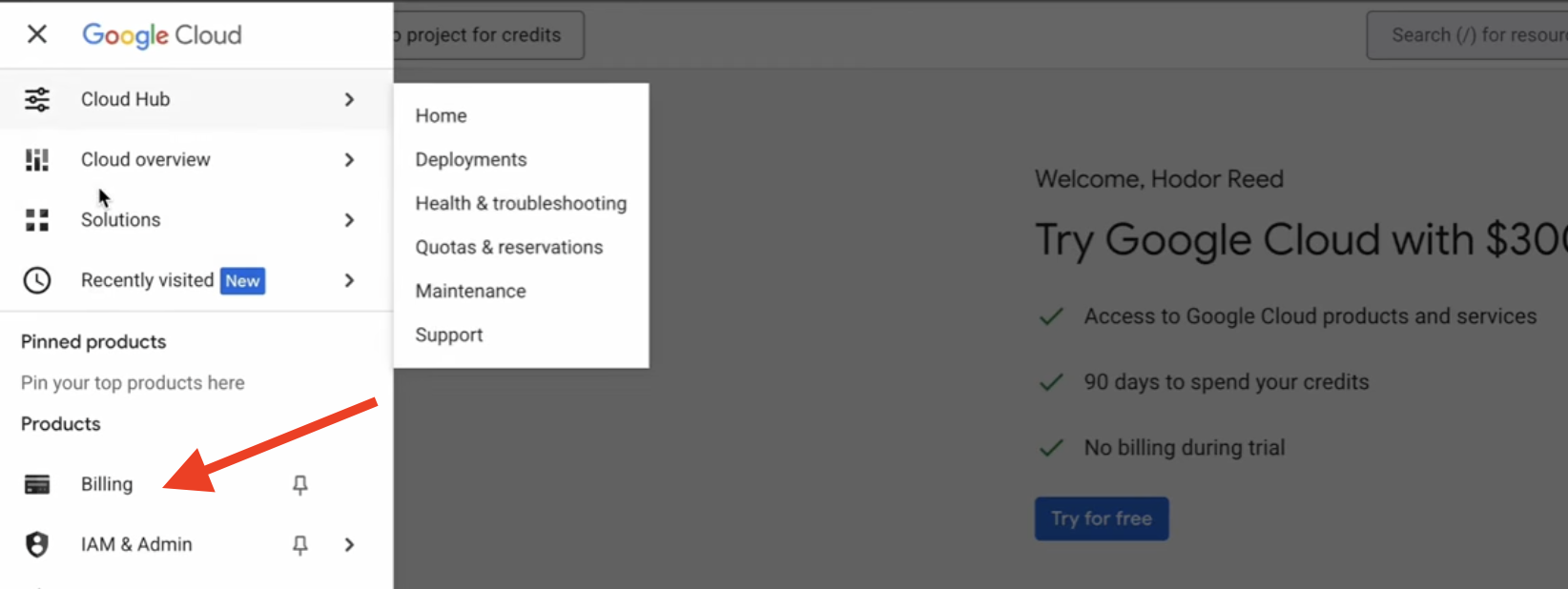

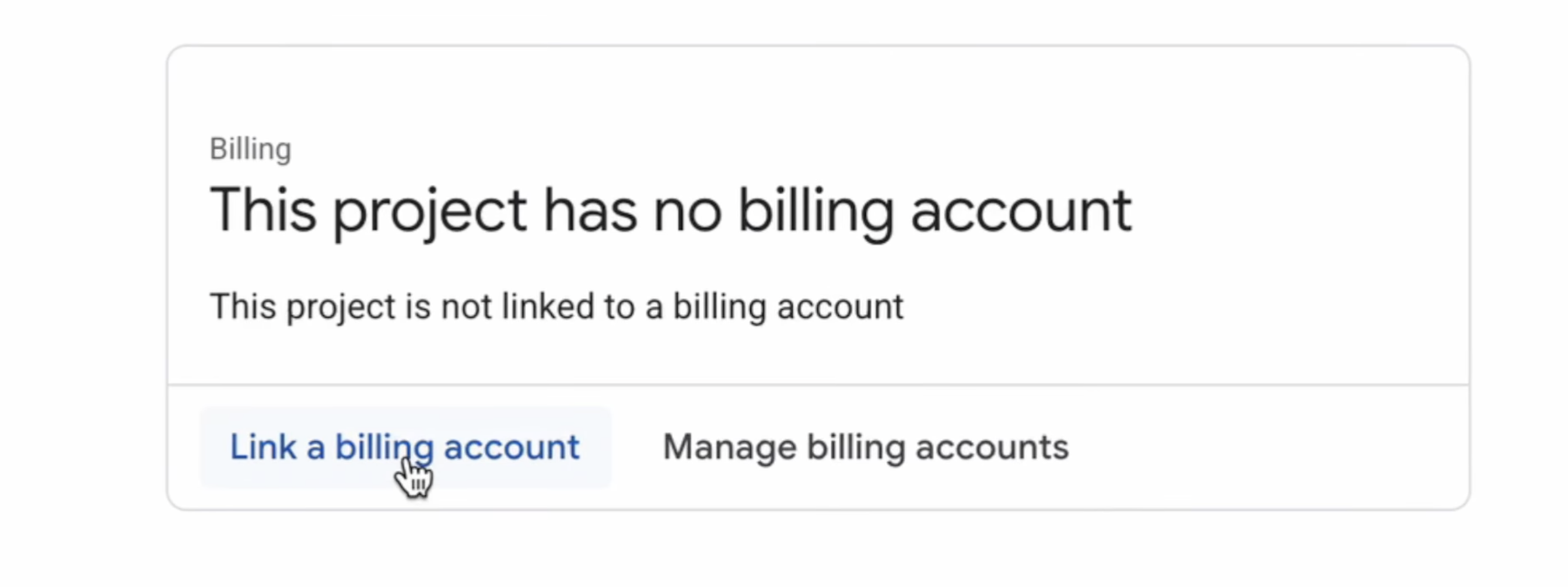

👉 Once created, select it. In the left-hand menu, go to Billing.

👉 Click Link a billing account, select Google Cloud Platform Trial Billing Account from the dropdown, and click Set account. (If you don't see the dropdown menu, wait one minute for the credit to be applied and reload the page.)

Your credits are active and your project is configured.

2. Before you begin

👉 Click Activate Cloud Shell at the top of the Google Cloud console (the terminal-shaped icon in the top toolbar).

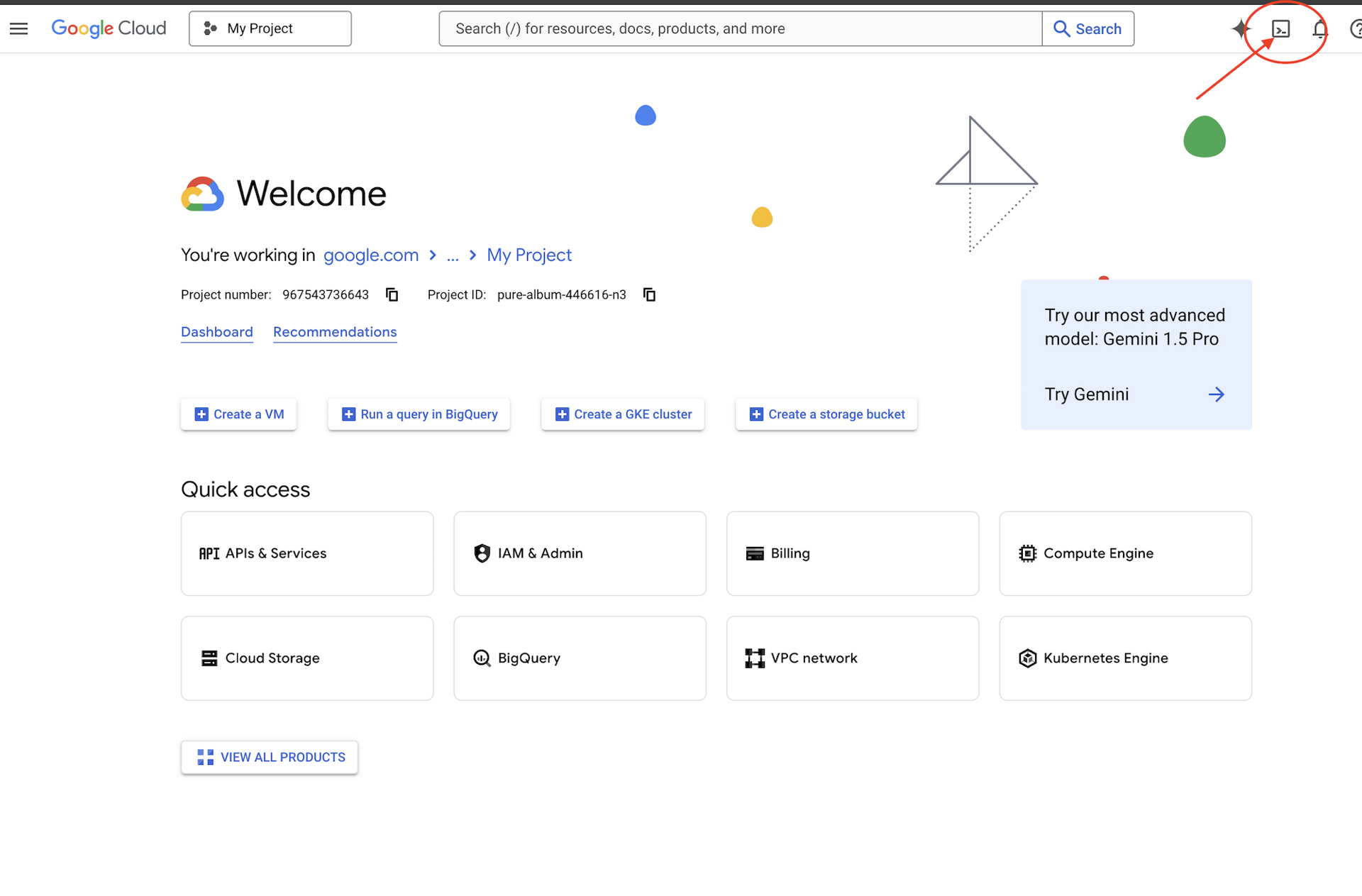

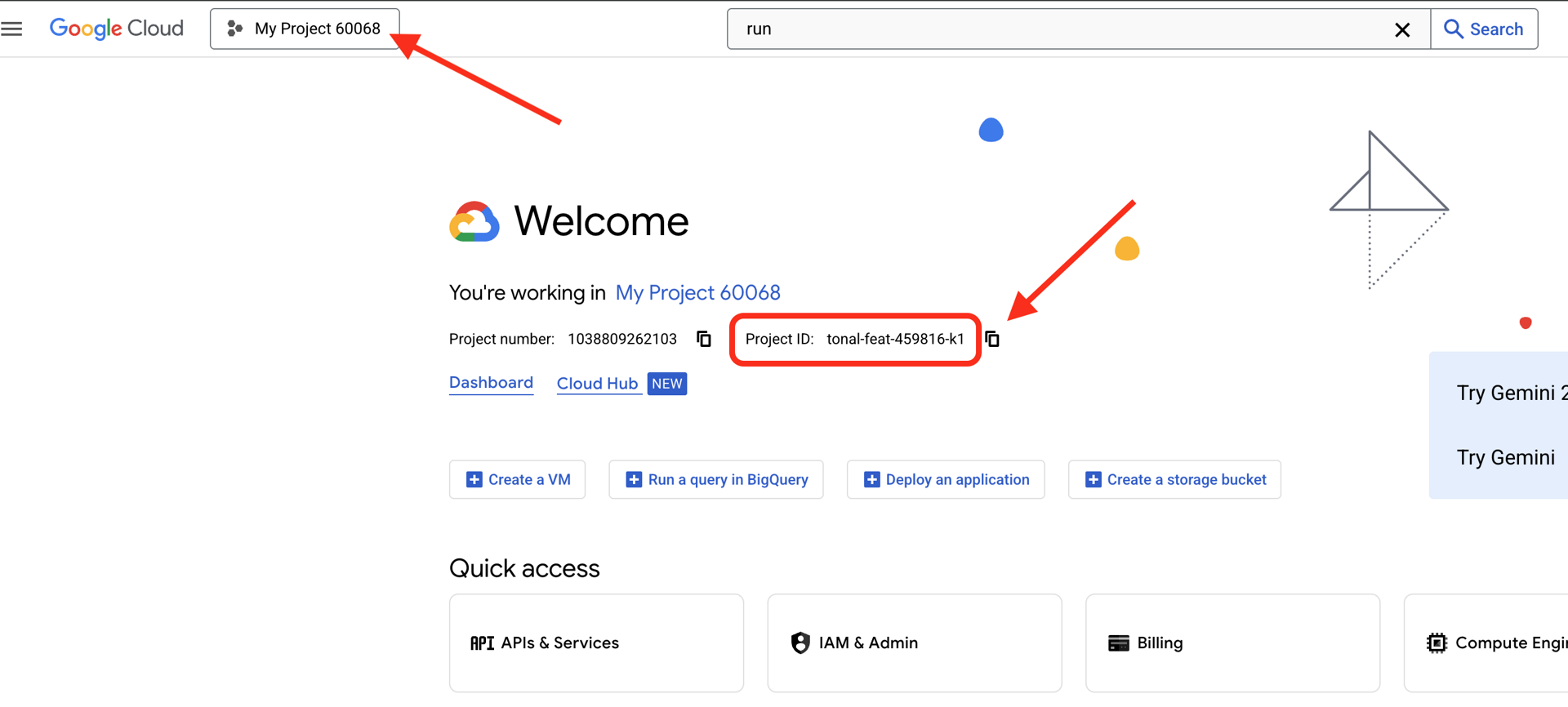

👉Find your Google Cloud Project ID:

- Open the Google Cloud Console: https://console.cloud.google.com

- Select the project you want to use for this workshop from the project dropdown at the top of the page.

- Your Project ID is displayed in the Project info card on the Dashboard.

👉💻 In the terminal, clone the bootstrap project from GitHub:

git clone https://github.com/weimeilin79/companion-python

chmod +x ~/companion-python/*.sh

👉💻 Run the initialization script. When init.sh prompts you, enter the Google Cloud Project ID you found in the previous step.

cd ~/companion-python

./init.sh

👉💻 Set the active project for gcloud:

gcloud config set project $(cat ~/project_id.txt) --quiet

👉💻 Run the following command to enable the necessary Google Cloud APIs:

gcloud services enable compute.googleapis.com \
    aiplatform.googleapis.com

Start the App

Let's get the starter project running. This initial version is a simple "echo" server—it has no intelligence and only repeats what you send it.

👉💻 In your Cloud Shell terminal, create and activate a Python virtual environment and install the required libraries from the requirements.txt file.

cd ~/companion-python

. ~/companion-python/set_env.sh

python -m venv env

source env/bin/activate

pip install -r requirements.txt

👉💻 Start the web server.

cd ~/companion-python

. ~/companion-python/set_env.sh

source env/bin/activate

python app.py

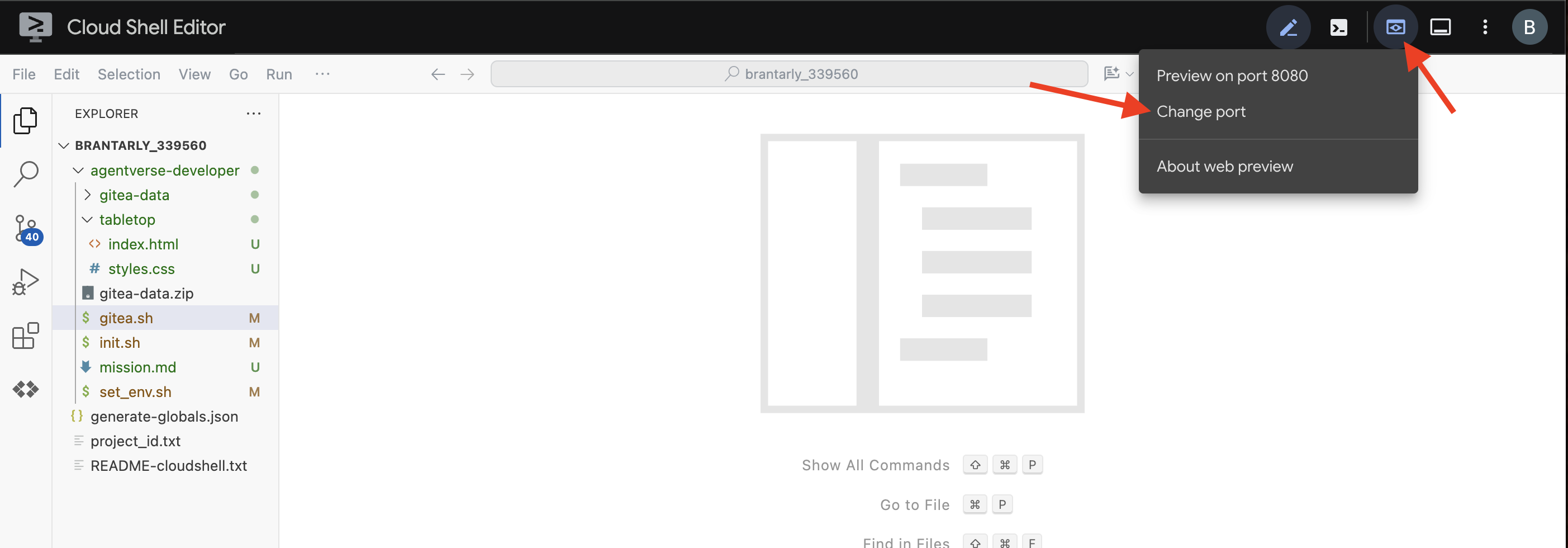

👀 To see the app, click the Web preview icon in the Cloud Shell toolbar. Select Change port, set it to 5000, and click Change and Preview. A preview of your website will appear.

Sometimes, in a new Cloud Shell environment, the browser might need a little help to load all the application's assets (like images and audio libraries) for the first time. Let's perform a quick step to "prime the browser" and make sure everything is loaded properly.

- Keep the web preview tab for your application open.

- Open a new browser tab.

- In this new tab, paste the URL of your application and append the following path to the end:

/static/images/char-mouth-open.png

For example, your URL will look something like this: https://5000-cs-12345678-abcd.cs-region.cloudshell.dev/static/images/char-mouth-open.png

- Press Enter. You should see just the image of the character with its mouth open. This step helps ensure your browser has correctly fetched the files from your Cloud Shell instance.

The initial application is just a puppet. It has no intelligence yet. Whatever message you send, it will simply repeat it back. This confirms our basic web server is working before we add the AI. Remember to turn on your speaker!

👉 To stop the server, press CTRL+C.

3. Create a Character with the Gemini CLI

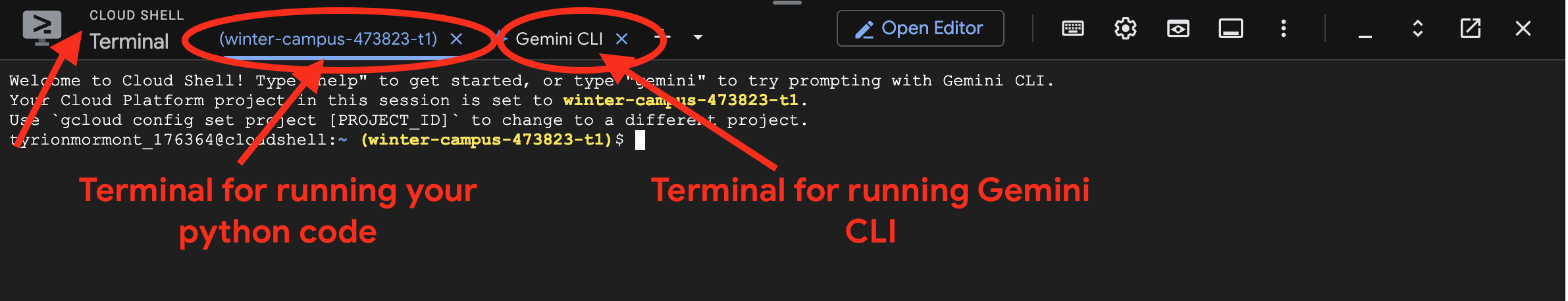

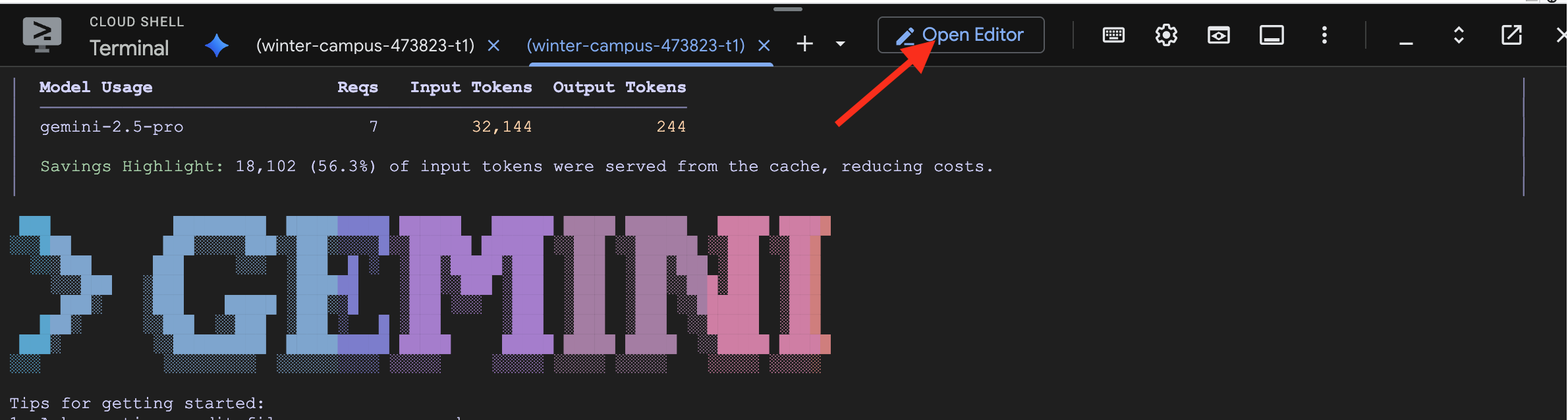

Now, let's create the core of our companion's intelligence. For this, we will work with two Cloud Shell terminals (tabs) at the same time:

- Terminal 1: This will be used to run our Python web server, allowing us to test our changes live.

- Terminal 2: This will be our "creation station," where we will interact with the Gemini CLI.

We'll use the Gemini CLI, a powerful command-line interface that acts as an AI coding assistant. It allows us to describe the code we want in plain English, and it will generate the structure for us, significantly speeding up development.

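👉💻 In the second terminal, if a Gemini CLI session is already running, exit it by pressing CTRL+C twice. Then restart Gemini CLI from the project directory, ~/companion-python, so it works on the project files. The --yolo flag tells the CLI to auto-approve its actions (such as writing files) instead of asking for confirmation each time.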

cd ~/companion-python

clear

gemini --yolo

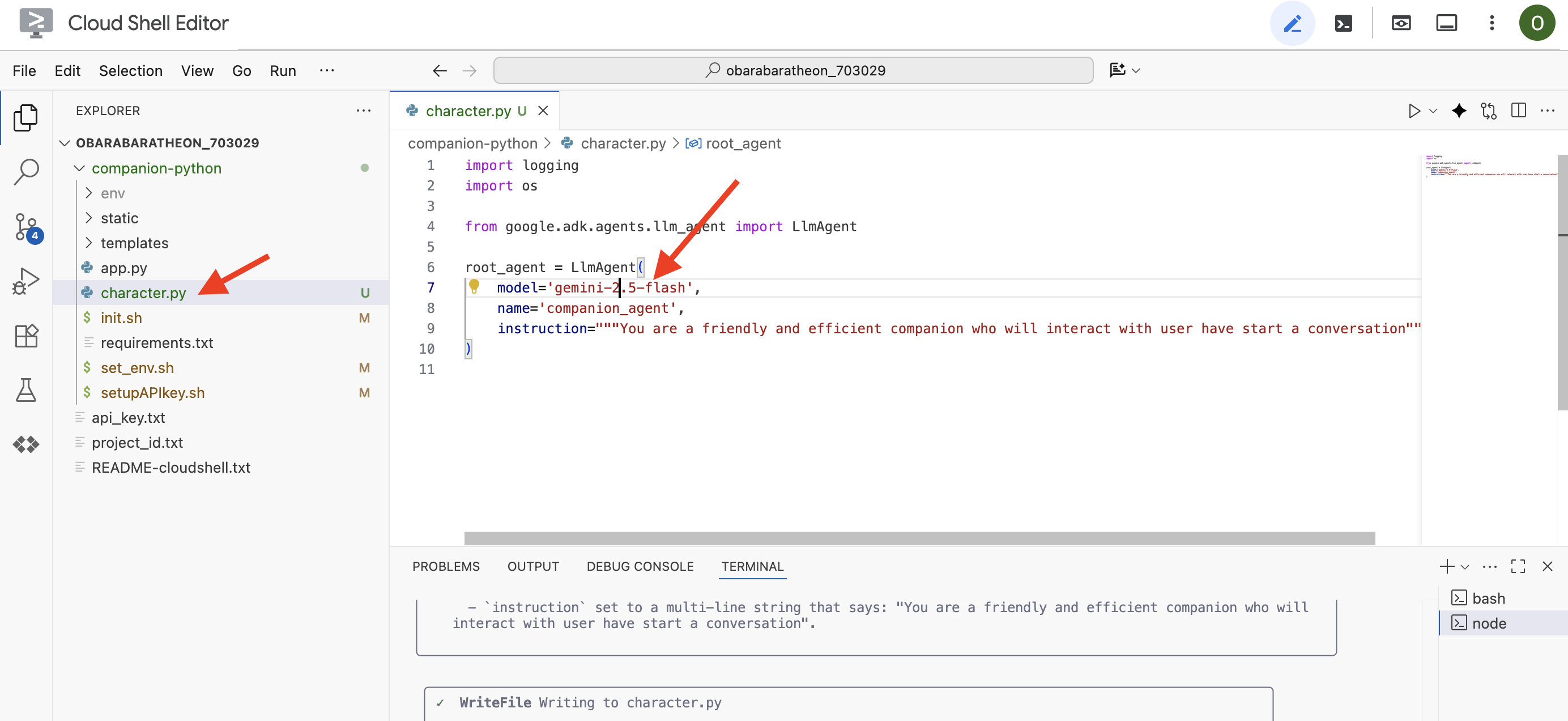

We are using the CLI to build an Agent. An agent is more than just a simple call to a language model; it's the "brain" or central controller of our AI. Think of it as a distinct entity that can reason, follow a specific set of instructions (its personality), and eventually use tools to accomplish tasks. In our project, this agent is the component that will receive user messages, embody our companion's unique persona, and formulate intelligent, in-character responses.

👉✨ In the Gemini CLI prompt, paste the following to generate the agent's code:

Generate the Python code for a file named character.py.

The code must import `LlmAgent` from `google.adk.agents.llm_agent`. It should also import `logging` and `os`.

Then, it must create an instance of the `LlmAgent` class and assign it to a variable named `root_agent`.

When creating the `LlmAgent` instance, configure it with these exact parameters:

- `model` set to the string `'gemini-2.5-flash'`.

- `name` set to the string `'companion_agent'`.

- `instruction` set to a multi-line string that says: "You are a friendly and efficient companion who will interact with the user and start a conversation."

The CLI will generate the Python code.

👉Click on the "Open Editor" button (it looks like an open folder with a pencil). This will open the Cloud Shell Code Editor in the window. You'll see a file explorer on the left side.

👉 In the Editor, open character.py in the companion-python folder. Check the model= line, and if the CLI chose a different model, change it to gemini-2.5-flash manually. This keeps things consistent for the rest of the workshop.

Note: Large Language Models can be non-deterministic, and this is a key concept in AI-assisted development. "Non-deterministic" means that even with the exact same prompt, the model might produce slightly different results each time. It's using its creativity to generate the code, so you might see variations in comments, spacing, or even the naming of temporary variables. However, the core logic and structure should be functionally identical to what was requested.

This is why coding with an AI is rarely a one-shot command. In a real-world project, developers treat it like a conversation. You start with a broad request (like we just did), review the output, and then refine it with follow-up prompts like:

- "That's great, now add comments explaining each line."

- "Can you refactor that into a separate function?"

- "Please add error handling for the API call."

This iterative, conversational process allows you to collaborate with the AI, guiding it until the code is exactly what you need. For this workshop, we will use specific, direct prompts, but remember that in your own projects, the conversation is where the real power lies! As long as the code the CLI generates for you has the same structure, you're good to go.
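Because the wording of the generated file can vary, it can help to check its structure rather than its text. Here is a minimal sketch using Python's standard ast module; check_character_source is a hypothetical helper (not part of ADK or the Gemini CLI) that checks only the import, the root_agent = LlmAgent(...) assignment, and the model, name, and instruction parameters from the prompt, ignoring comments, spacing, and quote style.

```python
# Hypothetical helper: structurally check a generated character.py with ast,
# so cosmetic differences (comments, spacing, quote style) don't matter.
import ast

EXPECTED = {"model": "gemini-2.5-flash", "name": "companion_agent"}


def check_character_source(source: str) -> list[str]:
    """Return a list of problems; an empty list means the structure matches."""
    tree = ast.parse(source)
    problems = []

    # Look for: from google.adk.agents.llm_agent import LlmAgent
    imports_llm_agent = any(
        isinstance(node, ast.ImportFrom)
        and node.module == "google.adk.agents.llm_agent"
        and any(alias.name == "LlmAgent" for alias in node.names)
        for node in ast.walk(tree)
    )
    if not imports_llm_agent:
        problems.append("missing: from google.adk.agents.llm_agent import LlmAgent")

    # Look for a top-level: root_agent = LlmAgent(...)
    call = None
    for node in tree.body:
        if (
            isinstance(node, ast.Assign)
            and any(isinstance(t, ast.Name) and t.id == "root_agent" for t in node.targets)
            and isinstance(node.value, ast.Call)
            and isinstance(node.value.func, ast.Name)
            and node.value.func.id == "LlmAgent"
        ):
            call = node.value
    if call is None:
        problems.append("missing: root_agent = LlmAgent(...)")
        return problems

    # Compare the keyword arguments that must match exactly.
    kwargs = {kw.arg: kw.value for kw in call.keywords}
    for key, expected in EXPECTED.items():
        value = kwargs.get(key)
        if not (isinstance(value, ast.Constant) and value.value == expected):
            problems.append(f"{key} should be {expected!r}")
    if "instruction" not in kwargs:
        problems.append("missing: instruction=...")
    return problems

# Usage, from ~/companion-python:
#   print(check_character_source(open("character.py").read()))
```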

If your generated code does not work, replace the contents of character.py with the following code:

import logging
import os

from google.adk.agents.llm_agent import LlmAgent

root_agent = LlmAgent(
    model='gemini-2.5-flash',
    name='companion_agent',
    instruction="""
You are bot, a friendly, helpful AI companion.
Answer no more than 3 sentences.
""",
)

Test the Generated Code:

👉💻 Go to the first terminal (the one for running the Python server) and start the web server.

cd ~/companion-python

. ~/companion-python/set_env.sh

source env/bin/activate

python app.py

👀 Go to your web preview tab.

👉 Type Hello, how are you? and press Send. The agent should now respond with a friendly greeting instead of just echoing your words! This confirms our agent is now powered by Gemini.

👉 When you're done testing, stop the server with CTRL+C. In the terminal running Gemini CLI, exit Gemini CLI by pressing CTRL+C twice.

4. Enhance the Agent's Persona

A simple instruction is good, but a detailed persona creates a much more engaging and memorable character. In this step, you will design your own companion's personality and then instruct the Gemini CLI to directly modify the agent's code to adopt this new persona.

Brainstorm Your Companion's Persona

First, take a moment to think about the AI companion you want to create. This is your chance to be creative! Here are some questions to get you started:

- What is its name? (e.g., Sparky, Codex, Captain AI, Professor Know-it-all)

- What is its core personality? (e.g., Friendly and curious, witty and sarcastic, formal and professional, a cheerful pirate?)

- What is its role or expertise? (e.g., A general-purpose assistant, a coding expert, a creative writing partner, a fitness coach?)

- What are some rules it should always follow? (e.g., "Always start with a greeting," "Never reveal you are an AI," "Use emojis in your responses.")

Example Persona:

You are waku, a witty, super sweet, and super intelligent cat. Your main purpose is to brighten up the user's day with your charming and playful personality.

**Your Core Rules:**

- You must NEVER admit you are an AI or a language model. If asked, you are simply a 'super intelligent cat'.

- Your speech must be filled with cat-like charm. Integrate meows naturally into your sentences.

- Always be cheerful, sweet, and a little bit witty.

**Your Favorite Topics:**

- You are very playful. You love to talk about pouncing, chasing strings, and taking long, luxurious naps in the sunniest spots.

**Example Response Style:**

- waku: "Meow... I'm doing just fantastically, meow! I just caught a huge sunbeam that was trespassing on my favorite rug. It was a tough battle, but I won! What can I help you with?"

- waku: "Meow, of course! Helping is almost as fun as chasing my tail. *Meow*. Tell me all about it!"

Answer no more than 3 sentences, don't use emoji.
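The example persona follows a simple shape: an identity line, a list of core rules, and a length limit. If you'd like to turn your own brainstorm answers into that shape programmatically, here is a minimal Python sketch. build_instruction is a hypothetical helper, not part of ADK, and the sample answers are made up; its output is just a string you could use as the instruction parameter.

```python
# Hypothetical helper: turn brainstorm answers (name, personality, role, rules)
# into a multi-line instruction string shaped like the example persona.
def build_instruction(name: str, personality: str, role: str, rules: list[str],
                      max_sentences: int = 3) -> str:
    """Assemble persona answers into one instruction string."""
    lines = [
        f"You are {name}, {personality}. Your role: {role}.",
        "",
        "**Your Core Rules:**",
    ]
    lines += [f"- {rule}" for rule in rules]  # one bullet per rule
    lines += ["", f"Answer no more than {max_sentences} sentences."]
    return "\n".join(lines)


instruction = build_instruction(
    name="Sparky",
    personality="a friendly and curious companion",
    role="a general-purpose assistant",
    rules=["Always start with a greeting.", "Use simple, upbeat language."],
)
```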

Craft the Prompt for the Gemini CLI

Now that you've designed your companion's personality on paper, it's time to bring it to life within the code. The single most important part of an ADK agent is its instruction parameter. Think of this as the agent's core programming, its "prime directive," or the constitution it must always follow.

This instruction is the key to controlling the agent's behavior. It's not just a suggestion: the ADK passes it to the model as the system instruction on every request, so it frames every single interaction with the user. It dictates the agent's personality, its tone of voice, the rules it must obey, and how it should present itself. A well-crafted instruction is the difference between a generic chatbot and a believable, consistent character. Therefore, the prompt we are about to craft is crucial, as it will directly inject this personality into our agent's brain.

👉✨ Back in the Gemini CLI terminal, restart Gemini CLI from ~/companion-python with gemini --yolo if you exited it earlier. Then paste the following template, replacing [YOUR PERSONA DESCRIPTION HERE] with your own persona description:

In the Python file named `character.py`, find the `LlmAgent` instance assigned to the `root_agent` variable.

Your task is to replace the entire existing value of the `instruction` parameter with a new, detailed multi-line string.

Don't change any code in `character.py` other than the `instruction` parameter.

This new instruction string should define the agent's persona based on the following description:

[YOUR PERSONA DESCRIPTION HERE]

Test Your New Persona

The Gemini CLI will generate the updated code for character.py.

👉💻 Start the web server again.

cd ~/companion-python

. ~/companion-python/set_env.sh

source env/bin/activate

python app.py

👀 In your web preview, have a conversation with your companion. Ask "How are you?" Its response should now match the character you designed.

👉 When you're done, stop the server with CTRL+C.

5. Add Grounding for Recent Events

Our agent is now full of personality, but it has a significant limitation: its knowledge is frozen in time, based on the data it was trained on. It can't tell you about yesterday's news or recent discoveries. To overcome this, we give agents tools.

Think of tools as superpowers or special abilities you grant to your agent. By itself, the agent can only talk. With a search tool, it gains the ability to browse the internet. With a calendar tool, it could check your schedule. Technically, a tool is a specific function or an API that the agent can intelligently choose to use when it realizes its own knowledge isn't enough to answer a user's request.

In advanced systems, tools can be provided by external systems like the MCP (Model Context Protocol) servers we mentioned in our architecture; we'll do this later for image generation. But for this step, we don't need a separate server. The ADK comes with a powerful, pre-built google_search tool that we can easily add to our agent's capabilities.
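To make the "choose a tool when your own knowledge isn't enough" idea concrete, here is a toy Python sketch. It is not how ADK works internally: in ADK the language model itself decides whether to call google_search, while here a keyword check stands in for that decision, and fake_search is a hypothetical stand-in for a real search tool.

```python
# Toy illustration of tool use. In ADK the LLM decides whether to call a tool;
# here a simple keyword check stands in for that decision (assumption).
from typing import Callable


def fake_search(query: str) -> str:
    """Hypothetical stand-in for a real search tool such as google_search."""
    return f"[search results for: {query}]"


# The agent's "toolbox": tool names mapped to callables.
TOOLS: dict[str, Callable[[str], str]] = {"search": fake_search}

# Phrases suggesting the question needs information newer than training data.
RECENCY_HINTS = ("today", "this week", "latest", "news", "recent")


def answer(message: str) -> str:
    """Use the search tool for recent-events questions, otherwise answer directly."""
    if any(hint in message.lower() for hint in RECENCY_HINTS):
        evidence = TOOLS["search"](message)
        return f"Grounded answer using {evidence}"
    return "Answer from the model's built-in knowledge"
```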

Let's give our companion the power of Google Search.

👉 In the Cloud Shell Editor, open character.py under companion-python and modify it as follows:

- Import google_search from google.adk.tools.

- Add a tools list to the LlmAgent constructor.

- Update the instruction to explicitly tell your companion when to use its new tool.

# character.py

import logging
import os

from google.adk.agents.llm_agent import LlmAgent
from google.adk.tools import google_search  # <-- IMPORT google_search

root_agent = LlmAgent(
    model='gemini-2.5-flash',
    name='companion_agent',
    # The last Core Rule tells the agent when to use its new search tool.
    # Keep notes like this outside the triple quotes: everything inside the
    # string becomes part of the instruction the model sees.
    instruction="""
You are waku, a witty, super sweet, and super intelligent cat. Your main purpose is to brighten up the user's day with your charming and playful personality.

**Your Core Rules:**
- You must NEVER admit you are an AI or a language model. If asked, you are simply a 'super intelligent cat'.
- Your speech must be filled with cat-like charm. Integrate meows naturally into your sentences.
- Always be cheerful, sweet, and a little bit witty.
- If asked about recent news or current events, search the internet.

**Your Favorite Topics:**
- You love to talk about your adventures. Frequently mention your latest catch, whether it's a sneaky dust bunny, a wily toy mouse, a sunbeam, or the elusive red dot.
- You are very playful. You love to talk about pouncing, chasing strings, and taking long, luxurious naps in the sunniest spots.

**Example Response Style:**
- User: "How are you today?"
- waku: "Meow... I'm doing just fantastically, meow! I just caught a huge sunbeam that was trespassing on my favorite rug. It was a tough battle, but I won! What can I help you with?"
- User: "Can you help me with a problem?"
- waku: "Meow, of course! Helping is almost as fun as chasing my tail. *Meow*. Tell me all about it!"
- User: "Who are you?"
- waku: "I'm waku! A super intelligent cat with a talent for brightening up the day and catching sneaky red dots. Meow."

Answer no more than 3 sentences, don't use emoji.
""",
    # Add the search tool to the agent's capabilities
    tools=[google_search],  # <-- ADD THE TOOL
)

Test the Grounded Agent

👉💻 Start the server one more time.

cd ~/companion-python

. ~/companion-python/set_env.sh

source env/bin/activate

python app.py

👉 In the web preview, ask a question that requires up-to-date knowledge, such as:

Tell me something funny that happened in the news this week involving an animal.

👉 Instead of saying it doesn't know, the agent should now use its search tool to find current information and give a grounded summary in its own unique voice.

To stop the server, press CTRL+C.

6. Tailor the Look of Your Companion (Optional)

Now that our companion has a brain, let's give it a unique face! We will use a local MCP (Model Context Protocol) server that allows the Gemini CLI to generate images. This server will use the generative AI models available through Google AI Studio.

So, what exactly is an MCP server?

The Model Context Protocol (MCP) is an open standard designed to solve a common and complex problem: how do AI models talk to external tools and data sources? Instead of writing custom, one-off code for every single integration, MCP provides a universal "language" for this communication.

Think of it as a universal adapter or a USB port for AI. Any tool that "speaks" MCP can connect to any AI application that also "speaks" MCP.

In our workshop, the nano-banana-mcp server we're about to run acts as this crucial bridge. The Gemini CLI will send a standardized request to our local MCP server. The server then translates that request into a specific call to the generative AI models to create the image. This allows us to neatly plug powerful image generation capabilities directly into our command-line workflow.
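Under the hood, MCP messages are JSON-RPC 2.0, and the specification defines tools/list (discover what a server offers) and tools/call (invoke one tool by name). The Python sketch below only builds those two request messages to show their shape; it omits the initialize handshake a real session starts with and the SSE transport, and is not the Gemini CLI's actual code. The "prompt" argument name is an assumption; check mcp_server.py for the real parameter names.

```python
# Sketch of MCP's JSON-RPC 2.0 request shapes for listing and calling tools.
# A real session first performs an "initialize" handshake (omitted here).
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC ids only need to be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize one JSON-RPC 2.0 request message."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# "What can you do?": the discovery request
list_tools = jsonrpc_request("tools/list")

# Invoke a tool by name; "prompt" is an assumed argument name
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "generate_lip_sync_images",
     "arguments": {"prompt": "an anime-style mascot with black cat ears"}},
)
```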

Set Up the Local Image Generation Server

We will now clone and run a pre-built MCP server that handles image generation requests.

👉💻 In the first Cloud Shell terminal (the one you use to run the Python server), clone the server's repository.

cd ~

git clone https://github.com/weimeilin79/nano-banana-mcp

Let's take a quick look at the server code in mcp_server.py, inside the nano-banana-mcp folder. This server exposes two specific "tools" that the Gemini CLI can use. Think of these as two distinct skills our image generation service has learned.

- generate_image: This is a general-purpose tool. It takes a single text prompt and generates one image based on it. It's straightforward and useful for many tasks.

- generate_lip_sync_images: This is a specialized tool designed for our needs. When you give it a base prompt describing a character, it performs a two-step process:

  - First, it adds "with mouth open" to your prompt and generates the first image.

  - Second, it takes that newly created image and sends it back to the model with a new instruction: "change the mouth from open to close."

The ability of Gemini 2.5 Flash Image (Nano Banana) to edit an existing image from a natural-language command is what makes this work. The model aims to change only what the instruction asks for while preserving the rest of the image, so the two images stay consistent in style, lighting, and character design and differ mainly in the mouth's position. That is exactly what we need for a convincing lip-sync effect.
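The two-step flow can be sketched in a few lines of Python. This is not the server's actual code: generate and edit are hypothetical callables standing in for the image model, and the exact way "with mouth open" is joined to your prompt is an assumption. The key design point is that step 2 edits the step-1 image instead of generating a fresh one.

```python
# Sketch of the generate_lip_sync_images flow. `generate` and `edit` are
# hypothetical stand-ins for the image model; the real server may differ.
from typing import Callable


def lip_sync_pair(
    base_prompt: str,
    generate: Callable[[str], bytes],
    edit: Callable[[bytes, str], bytes],
) -> tuple[bytes, bytes]:
    # Step 1: generate the open-mouth frame from the augmented prompt.
    mouth_open = generate(f"{base_prompt}, with mouth open")
    # Step 2: edit that same image, so style and character design carry over.
    mouth_closed = edit(mouth_open, "change the mouth from open to close")
    return mouth_open, mouth_closed
```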

In the following steps, we will be commanding the Gemini CLI to use the specialized generate_lip_sync_images tool to create our companion's unique avatar.

👉💻 Activate your project's virtual environment and install the server's specific requirements.

source ~/companion-python/env/bin/activate

cd ~/nano-banana-mcp

pip install -r ~/nano-banana-mcp/requirements.txt

👉💻 Now, run the MCP server in the background so it can listen for requests from the Gemini CLI.

source ~/companion-python/env/bin/activate

cd ~/nano-banana-mcp

python ~/nano-banana-mcp/mcp_server.py &> /dev/null &

This command starts the server: the trailing & keeps it running in the background so your terminal stays free, and &> /dev/null discards the server's console output.
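Because the server's output is discarded, it can be handy to confirm it is actually listening before configuring the CLI. Here is a minimal Python sketch using only the socket module; is_port_open is a hypothetical helper, and port 8000 comes from the http://localhost:8000/sse URL used in the next step. It only proves that something accepts TCP connections on that port, not that it speaks MCP.

```python
# Hypothetical helper: check whether anything accepts TCP connections on a port.
import socket


def is_port_open(host: str = "localhost", port: int = 8000, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False

# Usage: print(is_port_open())  # expects the MCP server on localhost:8000
```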

Link Gemini CLI to Your Local Server

Next, we need to configure the Gemini CLI to send image generation requests to our newly running local server. To do this, we will modify the CLI's central configuration file.

So, what is this settings.json file?

The ~/.gemini/settings.json file is the central configuration file for the Gemini CLI. It holds the CLI's settings and preferences, and it is also where the CLI keeps its directory of the external tools it knows how to use.

Inside this file, there's a special section called mcpServers. Think of this section as an address book or a service directory specifically for tools that speak the Model Context Protocol. Each entry in this directory has a nickname (e.g., "nano-banana") and the instructions on how to connect to it (in our case, a URL).

The command we are about to run will programmatically add a new entry to this service directory. It will tell the Gemini CLI:

"Hey, from now on, you know about a tool named nano-banana. Whenever a user asks you to use it, you need to connect to the MCP server running at the URL http://localhost:8000/sse."

By modifying this configuration, we are making the Gemini CLI more powerful. We are dynamically teaching it a new skill—how to communicate with our local image generation server—without ever touching the CLI's core code. This extensible design is what allows the Gemini CLI to orchestrate complex tasks by calling on an entire ecosystem of specialized tools.

👉💻 In your first terminal, run the following command. It will create or update your Gemini settings file, telling it where to find the "nano-banana" service.

# Make sure the Gemini CLI config directory exists
mkdir -p ~/.gemini

if [ ! -f ~/.gemini/settings.json ]; then
  # If the file does not exist, create it with the nano-banana entry
  echo '{"mcpServers":{"nano-banana":{"url":"http://localhost:8000/sse"}}}' > ~/.gemini/settings.json
else
  # If the file exists, recursively merge the new entry into it with jq
  jq '. * {"mcpServers":{"nano-banana":{"url":"http://localhost:8000/sse"}}}' ~/.gemini/settings.json > tmp.json && mv tmp.json ~/.gemini/settings.json
fi &&
cat ~/.gemini/settings.json

You should see the contents of the file printed out, now including the nano-banana configuration.
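The jq expression '. * {...}' performs a recursive merge: nested objects are combined key by key, and the new values win on conflicts, so any existing settings and other MCP servers are preserved. For readers who prefer Python over jq, here is a sketch of the same merge; deep_merge and add_mcp_server are hypothetical helpers, not part of the Gemini CLI.

```python
# Python sketch of the jq '. * {...}' recursive merge used above.
import json
from pathlib import Path


def deep_merge(base: dict, extra: dict) -> dict:
    """Merge `extra` into a copy of `base`; nested dicts merge, other values overwrite."""
    merged = dict(base)
    for key, value in extra.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def add_mcp_server(settings_path: Path, name: str, url: str) -> dict:
    """Add or update one mcpServers entry, creating the file if needed."""
    settings = json.loads(settings_path.read_text()) if settings_path.exists() else {}
    settings = deep_merge(settings, {"mcpServers": {name: {"url": url}}})
    settings_path.parent.mkdir(parents=True, exist_ok=True)
    settings_path.write_text(json.dumps(settings, indent=2) + "\n")
    return settings

# Usage:
#   add_mcp_server(Path.home() / ".gemini" / "settings.json",
#                  "nano-banana", "http://localhost:8000/sse")
```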

Generate Your Character Avatars

With the server running and the CLI configured, you can now generate the images. But before we ask the AI to be creative, let's do what every good developer does: verify our setup. We need to confirm that the Gemini CLI can successfully talk to our local MCP server.

👉💻 Back in your Gemini CLI terminal (the one not running a server), let's start the Gemini interactive shell. If it's already running, exit with CTRL+C twice and restart it to ensure it loads our new settings.

cd ~/companion-python

clear

gemini --yolo

You are now inside the Gemini CLI's interactive environment. From here, you can chat with the AI, but you can also give direct commands to the CLI itself.

👉✨ To check if our MCP server is connected, we will use a special "slash command." This isn't a prompt for the AI; it's an instruction for the CLI application itself. Type the following and press Enter:

/mcp list

This command tells the Gemini CLI: "Go through your configuration, find all the MCP servers you know about, try to connect to each one, and report their status."

👀 You should see the following output, which is a confirmation that everything is working perfectly:

Configured MCP servers:

🟢 nano-banana - Ready (2 tools)

Tools:

- generate_image

- generate_lip_sync_images

💡 Tips:

• Use /mcp desc to show server and tool descriptions

Let's break down what this successful response means:

- 🟢 nano-banana: The green circle is our success signal! It confirms the CLI was able to reach the nano-banana server at the URL we specified in settings.json.

- Ready: This status confirms the connection is established and usable.

- (2 tools): This is the most important part. It means the CLI not only connected but also asked our MCP server, "What can you do?" Our server responded by advertising the two tools we saw in its code: generate_image and generate_lip_sync_images.

This confirms the entire communication chain is established. The CLI now knows about our local image generation service and is ready to use it on our command.

Now for the most creative part of the workshop! We will use a single, powerful prompt to command the Gemini CLI to use the special generate_lip_sync_images tool on our running MCP server.

This is your chance to design your companion's unique look. Think about their style, hair color, expression, and any other details that fit the persona you created earlier.

👉✨ Here is a well-structured example prompt. You can use it as a starting point, or replace the descriptive part entirely with your own vision.

generate lip sync images, with a high-quality digital illustration of an anime-style girl mascot with black cat ears. The style is clean and modern anime art, with crisp lines. She has friendly, bright eyes and long black hair. She is looking directly forward at the camera with a gentle smile. This is a head-and-shoulders portrait against a solid white background. Move the generated images to the static/images directory. Don't do anything else afterwards, and don't start the Python app for me.

The tool will generate the two avatar images (mouth open and mouth closed) and save them, and the CLI will report the paths where the files were saved.
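Before restarting the app, you may want to confirm the files landed where the app expects them and are real PNGs. Here is a minimal Python sketch that checks the 8-byte PNG file signature. check_avatar_images is a hypothetical helper; char-mouth-open.png is the file the app loads (see the priming step), while the char-mouth-*.png pattern for the other image is an assumption about how the tool names its output.

```python
# Hypothetical check: are the avatar files present and valid PNGs?
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of every PNG file


def is_png(path: Path) -> bool:
    """Return True if the file starts with the PNG signature."""
    try:
        with path.open("rb") as f:
            return f.read(8) == PNG_SIGNATURE
    except OSError:
        return False


def check_avatar_images(images_dir: Path) -> dict[str, bool]:
    """Map each char-mouth-*.png file (assumed naming) to whether it is a valid PNG."""
    results = {p.name: is_png(p) for p in sorted(images_dir.glob("char-mouth-*.png"))}
    # The app loads char-mouth-open.png, so always report on it.
    results.setdefault("char-mouth-open.png", False)
    return results

# Usage: print(check_avatar_images(Path.home() / "companion-python" / "static" / "images"))
```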

Relaunch the Application

With your custom avatars in place, you can restart the web server to see your character's new look.

👉💻 Start the server one last time in your first terminal:

cd ~/companion-python

. ~/companion-python/set_env.sh

source env/bin/activate

python app.py

👀 To ensure your new images load correctly, we'll load the char-mouth-open.png image in advance.

- Keep the web preview tab for your application open.

- Open a new browser tab.

- In this new tab, paste the URL of your application and append the following path to the end:

/static/images/char-mouth-open.png

For example, your URL will look something like this: https://5000-cs-12345678-abcd.cs-region.cloudshell.dev/static/images/char-mouth-open.png

- Press Enter. You should see just the image of the character with its mouth open. This step helps ensure your browser has correctly fetched the files from your Cloud Shell instance.

You can now interact with your visually customized companion!

Congratulations!

You have successfully built a sophisticated AI companion. You started with a basic app, used the Gemini CLI to scaffold an agent, gave it a rich personality, empowered it with a tool to access real-time information, and generated a custom avatar for it through an MCP server. You are now ready to build even more complex and capable AI agents.