1. Introduction

Last Updated: 2022-03-28

Machine Learning

Machine learning is a field that evolves at breakneck speed. New research is released every day, enabling use cases that weren't possible before.

These releases usually result in a model. A model can be understood as a very long math equation that, given an input (e.g., an image), outputs a result (e.g., a classification).

- What if you want to use one of these new models with your own data?

- How can you benefit from these State of the Art models for your use case or in your app?

This codelab will guide you through the process of customizing machine learning models to your own data the easy way.

Building and training Machine Learning models has many challenges:

- Takes a lot of time.

- Uses large amounts of data.

- Requires expertise in fields like Maths and Statistics.

- Resource intensive: some models may take days to train.

Building new model architectures takes a lot of time, and may require many experiments and years of experience. But what if you could leverage all that knowledge and use it on your own data by just customizing state of the art research for your own problem? That's possible using a technique called Transfer Learning!

In this codelab you'll learn how to do Transfer Learning, why it works, and when to use it.

What you'll learn

- What Transfer Learning is and when to use it.

- How to use Transfer Learning.

- How to fine tune models.

- How to use TensorFlow Lite Model Maker.

- How to use TensorFlow Hub.

What you'll need

- All the code is executed using Google Colaboratory so you won't need to install anything on your machine. You only need access to the internet and a Google Account to sign into Colab.

- A basic knowledge of TensorFlow and the Keras API.

- Knowledge of Python.

If you don't have basic knowledge of TensorFlow or Machine Learning, you can still learn about Transfer Learning. Read the next step "What is Transfer Learning" for the theory behind the technique and then follow on to "Transfer Learning with Model Maker". If you want to go deeper and see the process in more detail, you will have that in the sections about Transfer Learning with TensorFlow Hub.

2. What is Transfer Learning?

A pretrained model is a saved network that was previously trained on a large dataset, typically on a large-scale image-classification task. You can either use the pretrained model as is or use transfer learning to customize it for a given task.

The intuition behind transfer learning for image classification is that if a model is trained on a large and general enough dataset, this model will effectively serve as a generic model of the visual world. You can then take advantage of these learned feature maps without having to start from scratch by training a large model on a large dataset.

There are two ways to customize a Machine Learning model:

- Feature Extraction: Use the representations learned by a previous network to extract significant features from new samples. You simply add a new classifier, which is trained from scratch, on top of the pretrained model so that you can repurpose the feature maps learned previously for the dataset. You do not need to (re)train the entire model. The base convolutional network already contains features that are generically useful for classifying pictures. However, the final classification part of the pretrained model is specific to the original classification task, and consequently specific to the set of classes on which the model was trained.

- Fine-Tuning: Unfreeze a few of the top layers of a frozen model base and jointly train both the newly-added classifier layers and the last layers of the base model. This lets us "fine tune" the higher-order feature representations in the base model in order to make them more relevant for the specific task.

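Feature extraction is faster to train because only the new classifier is updated, but fine-tuning can often achieve better results because the base model's higher-level features also adapt to your data.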
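To make the difference concrete, here is a minimal Keras sketch. It is not taken from the codelab notebooks: the tiny base network only stands in for a real pretrained model, and the layer names, sizes, and learning rates are assumptions chosen for illustration.

```python
import tensorflow as tf

# Stand-in for a pretrained network (hypothetical sizes). A real base model
# would come with weights already learned on a large dataset.
base = tf.keras.Sequential(
    [
        tf.keras.Input(shape=(32,)),
        tf.keras.layers.Dense(16, activation="relu", name="lower_features"),
        tf.keras.layers.Dense(8, activation="relu", name="higher_features"),
    ],
    name="base",
)

# Feature extraction: freeze the whole base and train only a new classifier.
base.trainable = False
inputs = tf.keras.Input(shape=(32,))
features = base(inputs, training=False)
outputs = tf.keras.layers.Dense(3, activation="softmax", name="new_classifier")(features)
model = tf.keras.Model(inputs, outputs)
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
    loss="sparse_categorical_crossentropy",
)
# Only the classifier's kernel and bias are trainable at this point.
feature_extraction_trainable = len(model.trainable_weights)

# Fine-tuning: unfreeze the base, then re-freeze everything except its top layer.
base.trainable = True
for layer in base.layers[:-1]:
    layer.trainable = False
# Recompile so the change takes effect; a lower learning rate (an assumption
# here) helps avoid overwriting what the base has already learned.
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=1e-5),
    loss="sparse_categorical_crossentropy",
)
# Now the top base layer and the classifier are both trainable.
fine_tuning_trainable = len(model.trainable_weights)
```

In a typical workflow you would call model.fit after each compile step: first to train the new classifier, then again to fine-tune the unfrozen layers.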

You'll try both (Feature Extraction and Fine-Tuning) using two different ways of doing Transfer Learning:

- TensorFlow Lite Model Maker library automatically does most of the data pipeline and model creation, making the process much easier. The resulting model is also easily exportable to be used on mobile and in the browser.

- TensorFlow Hub models leverage the vast repository of machine learning models available on TensorFlow Hub. Researchers and the community contribute these models, making state-of-the-art models available much faster and in greater variety.

3. Transfer Learning with Model Maker

Now that you know the idea behind Transfer Learning, let's start using the TensorFlow Lite Model Maker library, a tool to help do it the easy way.

The TensorFlow Lite Model Maker library is an open source library that simplifies the process of Transfer Learning and makes it much more approachable to non-ML developers, such as mobile and web developers.

The Colab notebook guides you through the following steps:

- Load the data.

- Split data.

- Create and train the model.

- Evaluate the model.

- Export the model.

After this step, you can start doing Transfer Learning with your own data following the same exact process.
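The five steps above can be sketched roughly as follows for an image classifier. This is a sketch under assumptions rather than the notebook's exact code: it assumes the tflite-model-maker package is installed, that image_dir contains one subfolder per class, and that an 80/10/10 split is acceptable. The function name train_image_classifier is hypothetical.

```python
def train_image_classifier(image_dir, export_dir="."):
    """Hypothetical helper that walks through the five Model Maker steps."""
    # Imported inside the function because the package is an optional,
    # heavyweight dependency (assumed to be installed as tflite-model-maker).
    from tflite_model_maker import image_classifier
    from tflite_model_maker.image_classifier import DataLoader

    # 1. Load the data: each subfolder of image_dir is treated as one class.
    data = DataLoader.from_folder(image_dir)

    # 2. Split the data into training, validation, and test sets (80/10/10).
    train_data, rest_data = data.split(0.8)
    validation_data, test_data = rest_data.split(0.5)

    # 3. Create and train the model on top of the library's default base model.
    model = image_classifier.create(train_data, validation_data=validation_data)

    # 4. Evaluate the model on images it has not seen during training.
    loss, accuracy = model.evaluate(test_data)

    # 5. Export the model as a TensorFlow Lite file into export_dir.
    model.export(export_dir=export_dir)
    return accuracy
```

Calling train_image_classifier("path/to/your/images") with your own folder of labeled images would then repeat the same process on your data.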

Colaboratory

Next, let's go to Google Colab to train the custom model.

It takes about 15 minutes to go over the explanation and understand the basics of the notebook.

Pros:

- Easy way of customizing models.

- No need to understand TensorFlow or the Keras API.

- Open source tool that can be changed if the user needs something specific not implemented yet.

- Exports model directly for mobile or browser execution.

Cons:

- Fewer configuration options than building the full pipeline and model yourself, as you will with TensorFlow Hub later in this codelab.

- Although you can choose the base model, not every model can be used as a base.

- Not suitable for large amounts of data where the data pipeline is more complex.

4. Find a model on TensorFlow Hub

By the end of this section, you'll be able to:

- Find Machine Learning models on TensorFlow Hub.

- Understand collections.

- Understand different types of models.

To do Transfer Learning, you need to start with two things:

- Data, for example images of the subjects you want to recognize.

- A base model that you can customize to your data.

The data part is usually business dependent, but the easiest path is to take lots of pictures of what you want to recognize. But what about the base model? Where can you find one? That is where TensorFlow Hub can help.

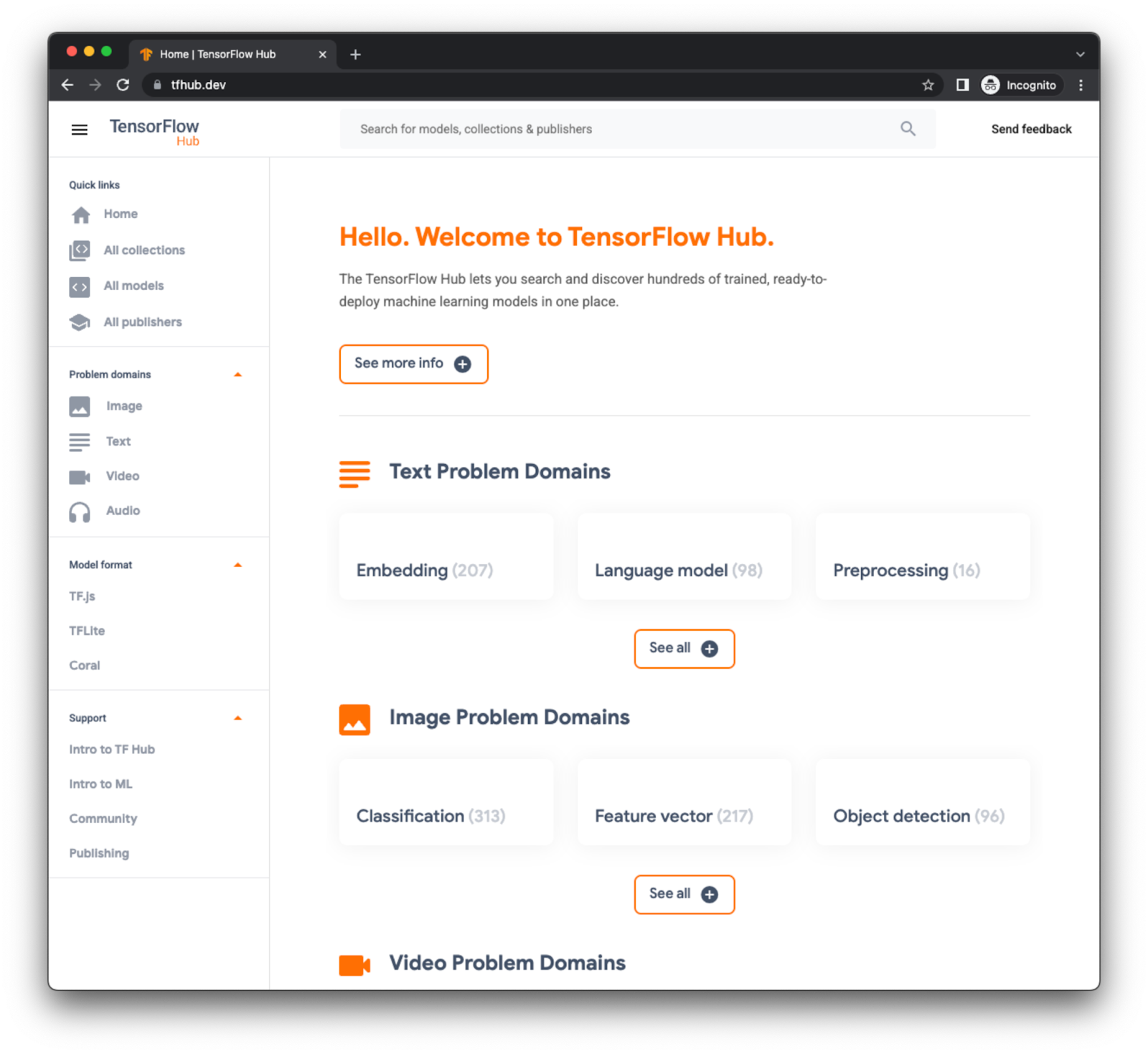

TensorFlow Hub is the model repository for your TensorFlow model needs.

You can search and read the documentation of thousands of models, readily available for you to use, and many of them are ready for Transfer Learning and Fine-tuning.

Searching a model

Let's first do a simple search on TensorFlow Hub for a model that you can use in your code later.

Step 1: In your browser, open the site tfhub.dev.

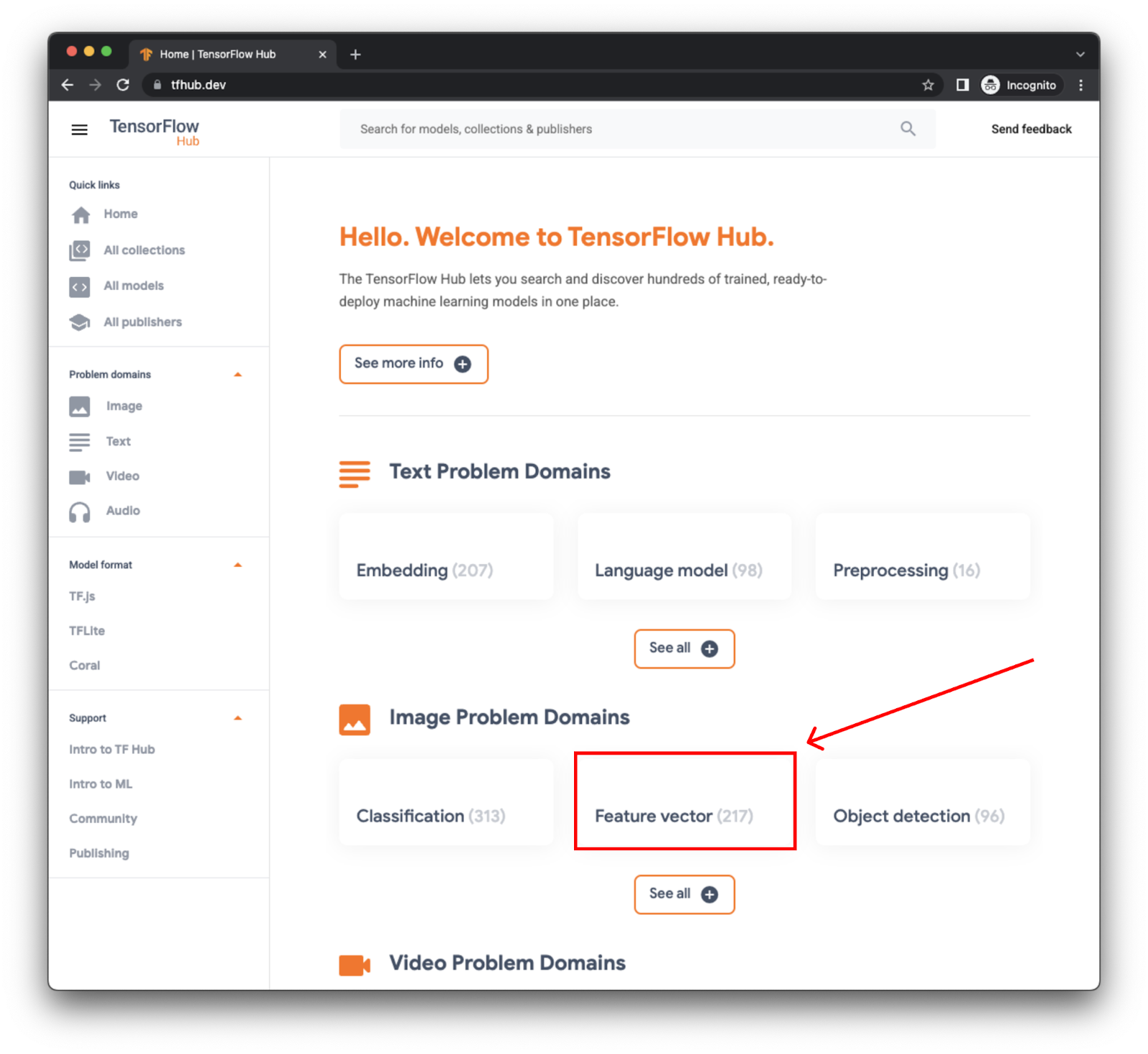

For Transfer Learning on the image domain, we need Feature Vectors. Feature Vectors are like classification models, but without the classification head.

Feature Vectors convert images to a numerical representation in an N-dimensional space (where N is the number of dimensions of the output layer of the model).
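To get a feel for what such a representation allows, here is a small pure-Python sketch. The 4-dimensional vectors are made-up values, not outputs of a real model (real feature vectors typically have hundreds or thousands of dimensions), and cosine similarity is just one common way to compare them.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical feature vectors for three images (illustrative values only).
daisy_1 = [0.9, 0.1, 0.0, 0.3]
daisy_2 = [0.8, 0.2, 0.1, 0.3]
tulip = [0.1, 0.9, 0.7, 0.0]

same_class = cosine_similarity(daisy_1, daisy_2)
different_class = cosine_similarity(daisy_1, tulip)
print(same_class > different_class)
```

The idea is that images with similar content tend to land close together in this space, which is what makes a small classifier on top of the feature vector enough to separate your classes.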

On TFHub you can search specifically for Feature Vectors by clicking on a specific card.

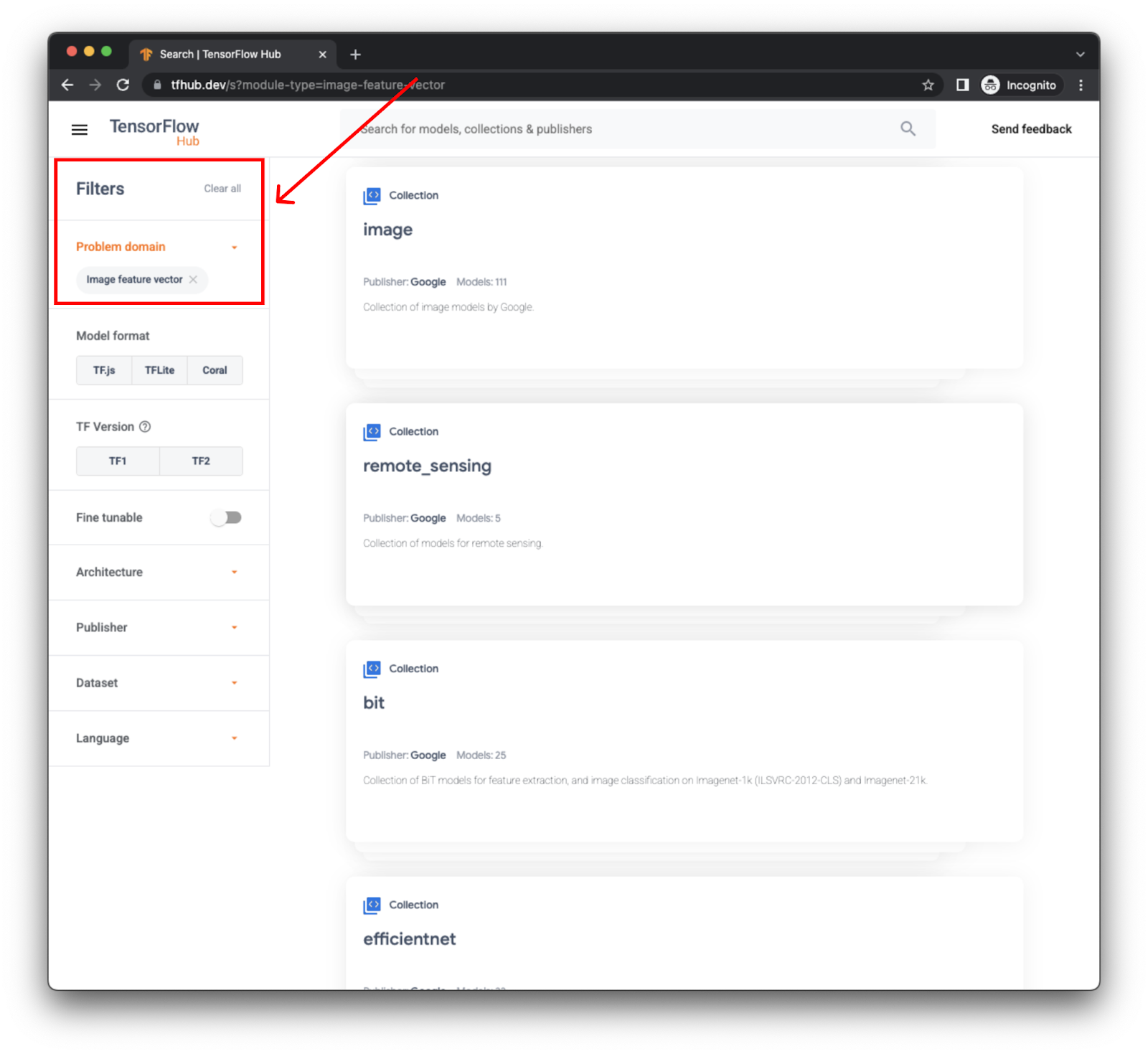

You can also search for a model's name with the filter on the left to only show Image feature vectors.

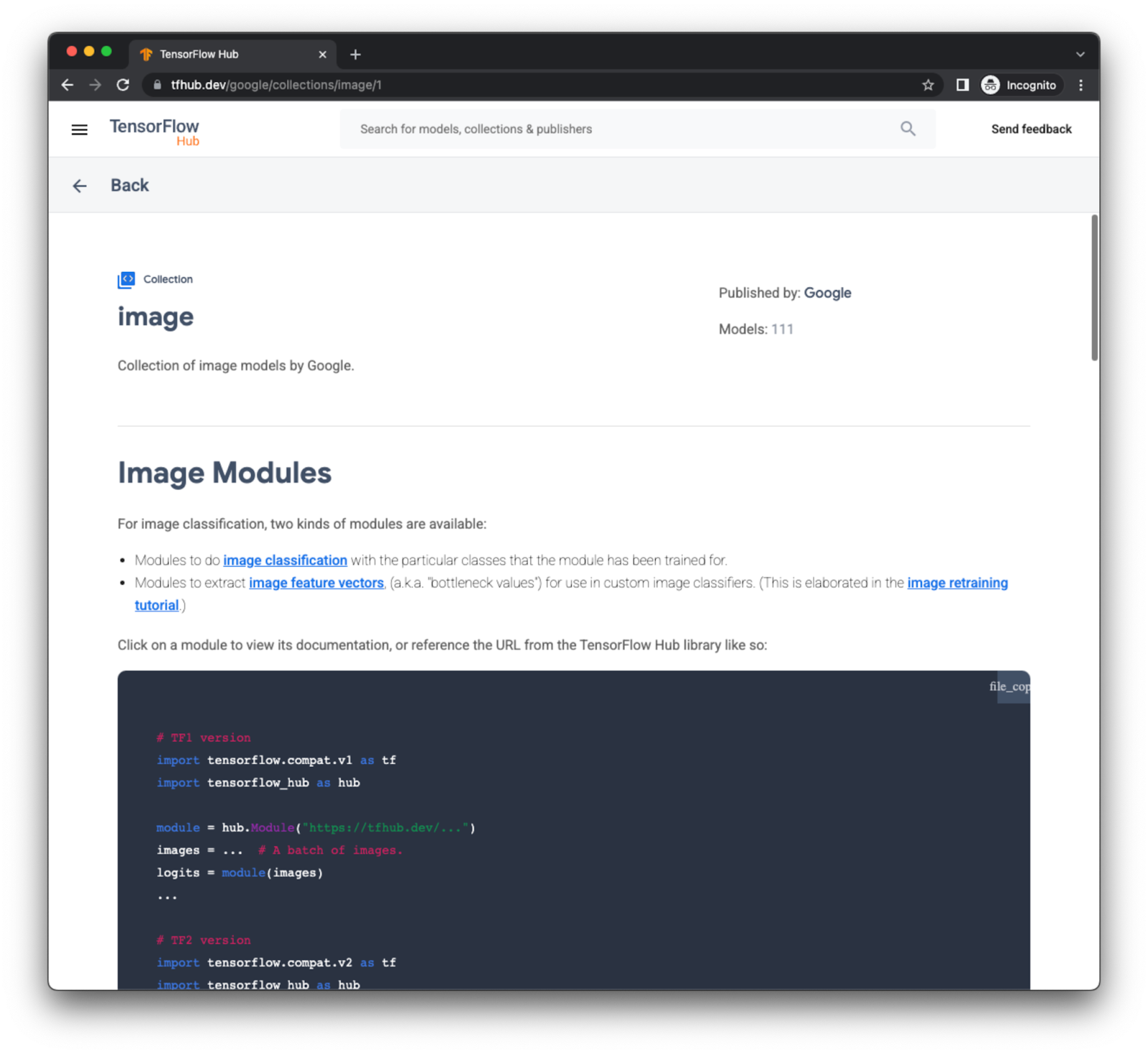

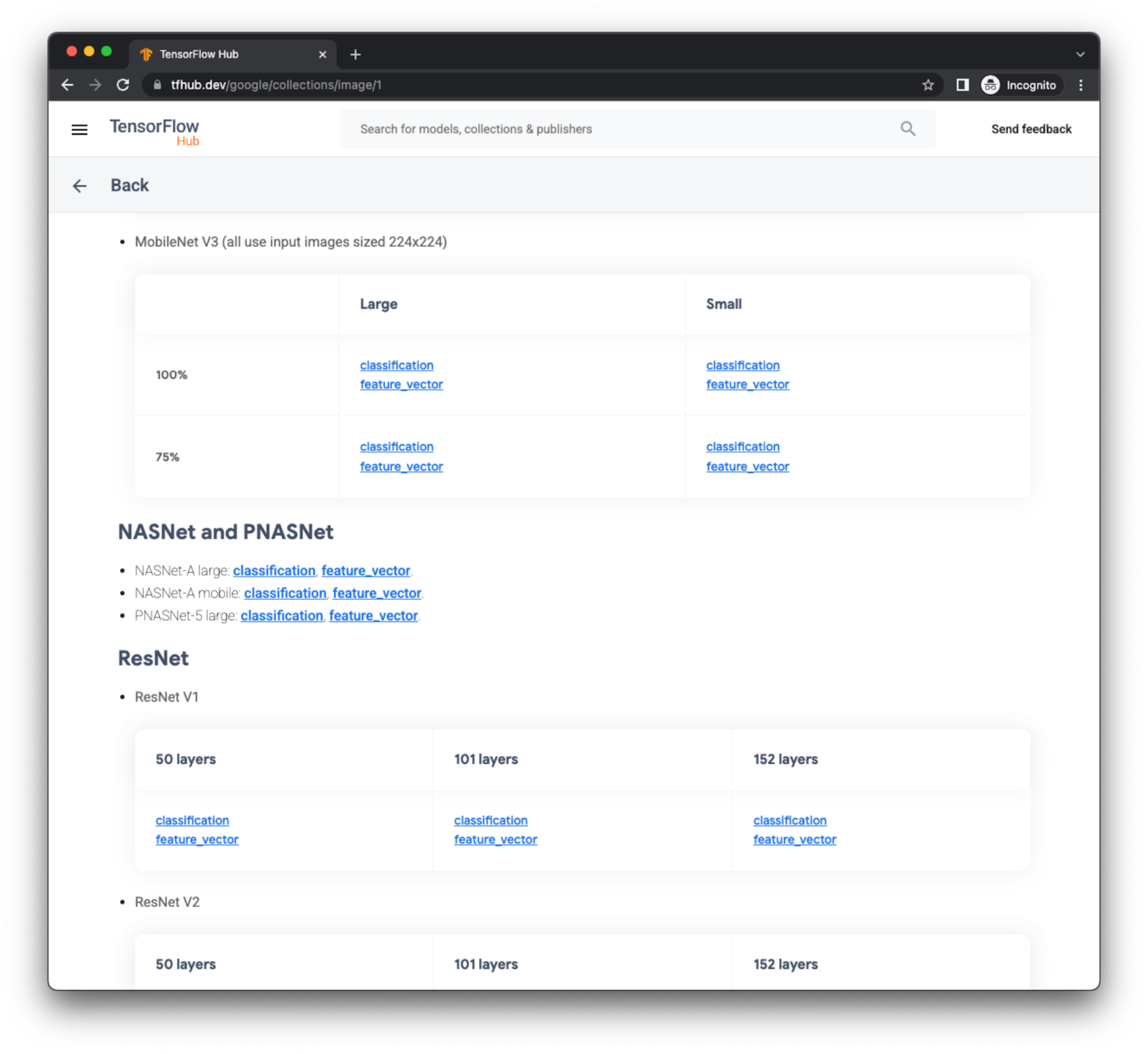

The cards with blue icons are collections of models. If you click on an image collection you'll have access to many similar models to choose from. Let's choose the image collection.

Scroll down and select MobileNet V3. Any of the feature vectors will do.

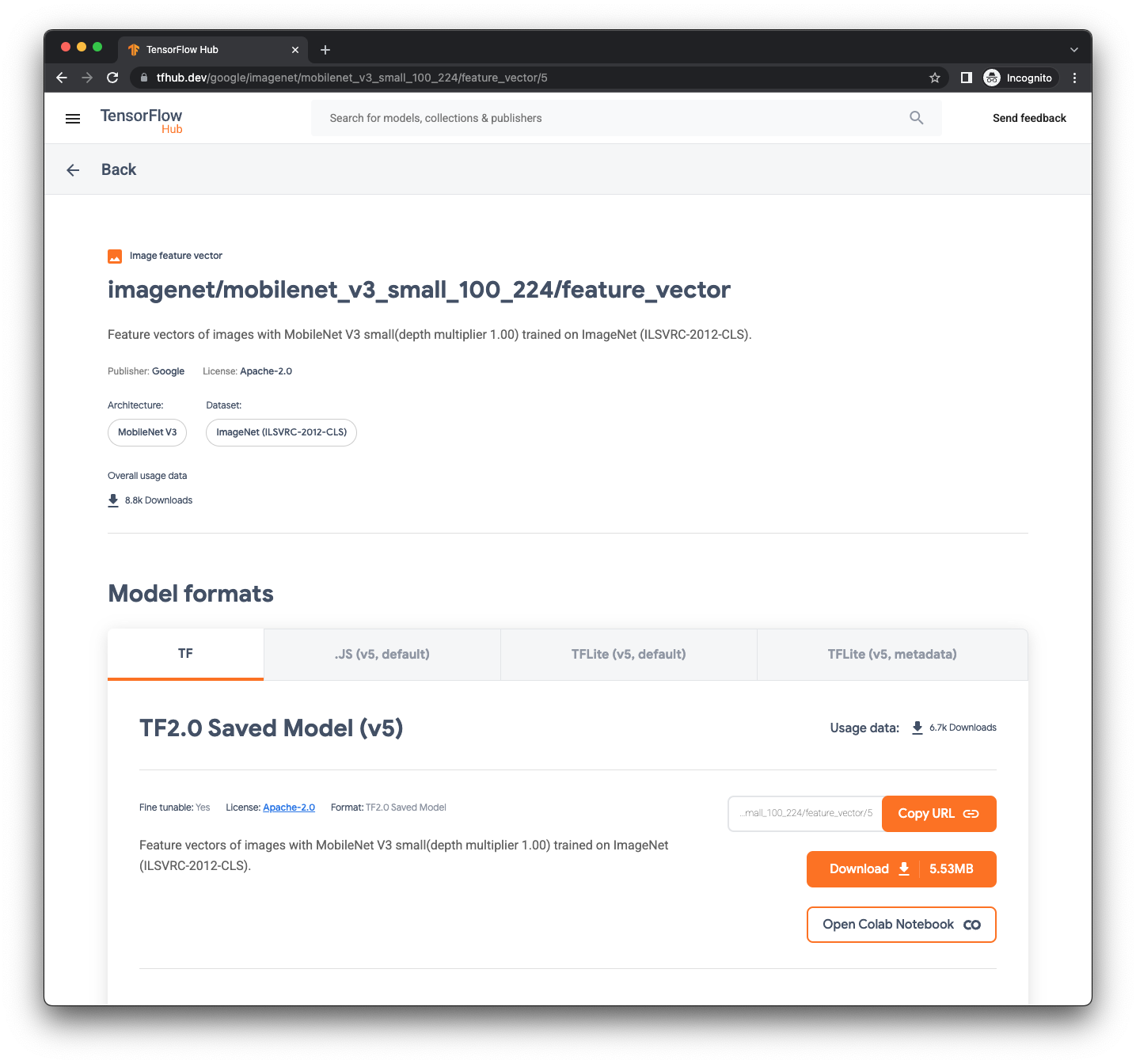

On the model's detail page you can read all the documentation for the model, see code snippets to try the model, or even try it directly on a Colab Notebook.

For now, all you need is the URL at the top. That's the model handle and the way you can easily access a model from the TensorFlow Hub library.

5. Transfer Learning with TensorFlow Hub

Now that you have chosen a model to use, let's customize it by loading it with the KerasLayer class from the TensorFlow Hub library.

KerasLayer wraps the model so that it can be used as a layer in your own model, which lets you build your model around it.
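A minimal sketch of that idea might look like the following. Several things here are assumptions: the handle is one MobileNet V3 feature vector used as an example, the 224x224 input size matches that particular model, the function name build_classifier is hypothetical, and the code assumes a TensorFlow version where hub.KerasLayer can be placed directly in a tf.keras model (newer releases that ship Keras 3 may need extra setup).

```python
# Example model handle (an assumption; copy the URL from the page of the
# model you picked on tfhub.dev).
MODEL_HANDLE = "https://tfhub.dev/google/imagenet/mobilenet_v3_small_100_224/feature_vector/5"

def build_classifier(num_classes, handle=MODEL_HANDLE, fine_tune=False):
    """Hypothetical helper: a feature vector from TF Hub plus a new classifier."""
    # Imported inside the function so that defining it does not require
    # TensorFlow Hub; calling it does, and it downloads the model.
    import tensorflow as tf
    import tensorflow_hub as hub

    # trainable=False keeps the base frozen (feature extraction);
    # trainable=True lets its weights be updated as well (fine-tuning).
    feature_extractor = hub.KerasLayer(
        handle, input_shape=(224, 224, 3), trainable=fine_tune
    )
    return tf.keras.Sequential(
        [
            feature_extractor,
            tf.keras.layers.Dense(num_classes, activation="softmax"),
        ]
    )
```

Trying a different base model is then a matter of passing another handle, as long as its expected input size matches.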

Previously, when you learned how to use Model Maker, all the internals were hidden from you to make it easier to understand. Now, you'll see what Model Maker is doing behind the scenes.

Colaboratory

Next, let's go to Google Colab to train the custom model.

It takes about 20 minutes to go over the explanation and understand the basics of the notebook.

Pros:

- Thousands of available models contributed by researchers and the community, trained on a variety of datasets.

- Models for all tasks, like vision, text and audio.

- Easy to experiment with different similar models. Changing the base model may require changing just one string.

Cons:

- Still needs some TensorFlow/Keras expertise to use the models.

If you want to go even deeper, you can also do Transfer Learning using Keras Applications. This is a very similar process to using TensorFlow Hub, but it uses only core TensorFlow APIs.
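As a rough sketch of that alternative, the example below builds the same kind of model with tf.keras.applications instead of TensorFlow Hub. The choice of MobileNetV3Small, the 224x224 input size, and the function name build_from_keras_application are assumptions made for illustration.

```python
import tensorflow as tf

def build_from_keras_application(num_classes, weights="imagenet"):
    """Hypothetical helper: a frozen Keras Applications base plus a new classifier."""
    # include_top=False drops the original classification head, and
    # pooling="avg" makes the base output one feature vector per image.
    base = tf.keras.applications.MobileNetV3Small(
        input_shape=(224, 224, 3),
        include_top=False,
        weights=weights,  # "imagenet" downloads pretrained weights
        pooling="avg",
    )
    base.trainable = False  # feature extraction: keep the base frozen

    inputs = tf.keras.Input(shape=(224, 224, 3))
    features = base(inputs, training=False)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(features)
    return tf.keras.Model(inputs, outputs)
```

Passing weights=None builds the same architecture with random weights, which is only useful for checking shapes; for Transfer Learning you want the pretrained weights.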

6. Congratulations

Congratulations, you've learned what Transfer Learning is and how to apply it to your own data!

In this codelab you learned how to customize Machine Learning models to your own data using a technique called Transfer Learning.

You tried two forms of Transfer Learning:

- Using a tool like TensorFlow Lite Model Maker.

- Using a Feature Vector from TensorFlow Hub.

Both options have their advantages and disadvantages, and many possible configurations for your particular needs.

You also learned that you can go a little further and fine-tune the models by adjusting their weights to better fit your data.

Both options support fine-tuning.

Transfer Learning and fine tuning are not only for image related models. Since the idea is to use a learned representation of a domain to tune to your dataset, it can also be used for Text and Audio domains.

Next Steps

- Try it with your own data.

- Share with us what you build and tag TensorFlow on social media with your projects.

Learn More

- For more information about fine tuning for State of the Art models like BERT, see Fine-tuning a BERT model.

- For more information about Transfer Learning for audio models, see Transfer learning with YAMNet for environmental sound classification.