1. Overview

In this lab, you will learn to solve the complex problem of multimodal video transcription, using a single Gemini prompt!

You will analyze videos, looking to answer the following questions all at once:

- 1️⃣ What was said and when?

- 2️⃣ Who are the speakers?

- 3️⃣ Who said what?

Here is an example of what you'll achieve:

What you'll learn

- A methodology for addressing new or complex multimodal problems

- A prompt technique for decoupling data and preserving attention: tabular extraction

- Strategies for making the most of Gemini's 1M-token context in a single request

- Practical examples of multimodal video transcriptions

- Tips & optimizations

What you'll need

- Familiarity with running Python in a notebook (in Colab or any other Jupyter environment)

- A Google Cloud project (Vertex AI) or a Gemini API key (Google AI Studio)

- 20 to 90 minutes (depending on whether you do a quick run or read and test everything)

ℹ️ The total cost to run this lab on Google Cloud is less than 5 USD.

Let's get started...

2. Before you begin

To use the Gemini API, you have two main options:

- Via Vertex AI with a Google Cloud project

- Via Google AI Studio with a Gemini API key

🛠️ Option 1 - Gemini API via Vertex AI

Requirements:

- A Google Cloud project

- The Vertex AI API must be enabled for this project

🛠️ Option 2 - Gemini API via Google AI Studio

Requirement:

- A Gemini API key

Learn more about getting a Gemini API key from Google AI Studio.
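As a rough illustration of how the two options differ in code, here is a minimal sketch that selects the client settings from environment variables. It assumes the Google Gen AI SDK for Python (`google-genai`) and its `genai.Client` constructor. The helper name `gemini_client_kwargs` and the `"global"` default location are assumptions made for this example, not something the notebook prescribes.

```python
import os
from typing import Mapping


def gemini_client_kwargs(env: Mapping[str, str] = os.environ) -> dict:
    """Build keyword arguments for `genai.Client` (hypothetical helper)."""
    use_vertex_ai = env.get("GOOGLE_GENAI_USE_VERTEXAI", "").lower() in ("1", "true")
    if use_vertex_ai:
        # Option 1: Vertex AI, tied to a Google Cloud project
        return {
            "vertexai": True,
            "project": env["GOOGLE_CLOUD_PROJECT"],
            # Assumed default; pick the location that suits your project
            "location": env.get("GOOGLE_CLOUD_LOCATION", "global"),
        }
    # Option 2: Google AI Studio, authenticated with a Gemini API key
    return {"api_key": env["GOOGLE_API_KEY"]}


# Usage (requires `pip install google-genai`):
# from google import genai
# client = genai.Client(**gemini_client_kwargs())
```

Keeping the choice in environment variables means the rest of your code can stay identical whichever option you pick.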

3. Run the notebook

Choose your preferred tool to open the notebook:

🧰 Tool A - Open the notebook in Colab

🧰 Tool B - Open the notebook in Colab Enterprise or Vertex AI Workbench

💡 This might be preferred if you already have a Google Cloud project configured with a Colab Enterprise or Vertex AI Workbench instance.

🧰 Tool C - Get the notebook from GitHub and run it in your own environment

⚠️ You will need to get the notebook from GitHub (or clone the repository) and run it in your own Jupyter environment.

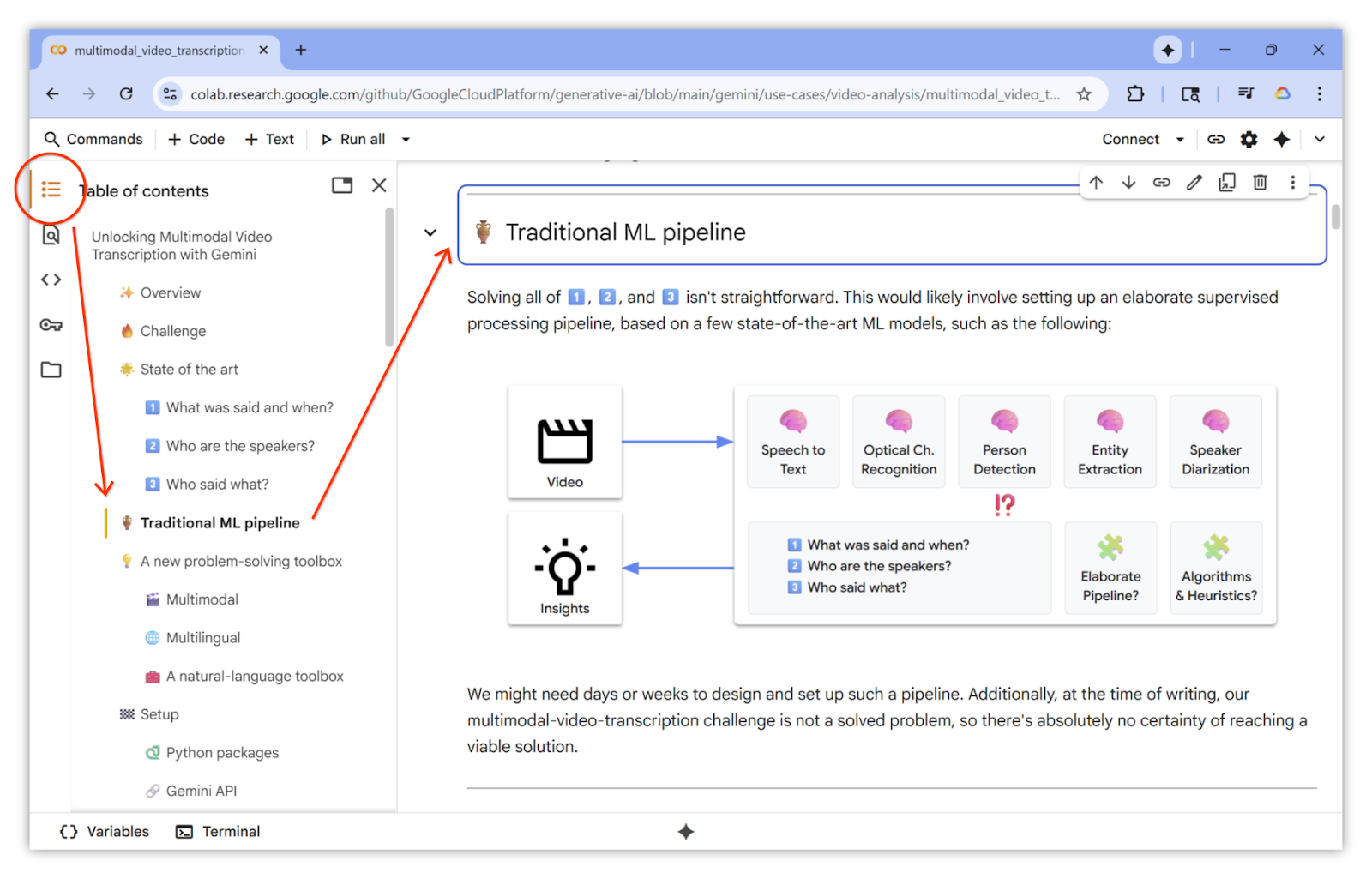

🗺️ Notebook table of contents

For easier navigation, make sure to expand and use the table of contents. Example:

🏁 Run the notebook

You are ready. You can now follow and run the notebook. Have fun!

4. Congratulations!

Congratulations on completing the codelab!

You addressed this complex problem using the following techniques:

- Prototyping with open prompts to develop intuition about Gemini's natural strengths

- Taking into account how LLMs work under the hood

- Crafting increasingly specific prompts using a tabular extraction strategy

- Generating structured outputs to move towards production-ready code

- Adding data visualization for easier interpretation of responses and smoother iterations

- Adapting default parameters to optimize the results

- Conducting more tests, iterating, and even enriching the extracted data

These principles should apply to many other data extraction domains and allow you to solve your own complex problems.
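To make the tabular extraction idea concrete, here is a minimal sketch under stated assumptions. The prompt wording, the JSON field names (`transcripts`, `speakers`, `voice`, `start`, `text`, `name`), and the helper `who_said_what` are hypothetical and may differ from what the notebook uses. The point is that the model fills two decoupled tables linked by a voice ID, and plain code joins them afterwards to answer "who said what".

```python
import json

# Hypothetical prompt: each task fills its own table, linked by a voice ID
PROMPT = """\
Task 1 - Transcripts: list every spoken segment with its start time (MM:SS),
its text, and a voice ID (1, 2, 3...).
Task 2 - Speakers: for each voice ID, give the speaker's name ("?" if unknown).
Return JSON with two tables: "transcripts" and "speakers".
"""


def who_said_what(response_json: str) -> list[tuple[str, str, str]]:
    """Join the two extracted tables into (start, speaker name, text) rows."""
    data = json.loads(response_json)
    # Map each voice ID to the identified speaker name
    names = {row["voice"]: row["name"] for row in data["speakers"]}
    return [
        (row["start"], names.get(row["voice"], "?"), row["text"])
        for row in data["transcripts"]
    ]


# Hand-written sample in the assumed format (not real model output)
sample = json.dumps({
    "transcripts": [
        {"start": "00:01", "text": "Welcome to the show.", "voice": 1},
        {"start": "00:04", "text": "Thanks for having me.", "voice": 2},
    ],
    "speakers": [{"voice": 1, "name": "Alice"}],
})
for start, name, text in who_said_what(sample):
    print(f"{start} {name}: {text}")
```

Because the voice ID appears in both tables, the model does not have to repeat speaker names on every transcript row, and a voice with no identified speaker simply falls back to "?".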

Learn more

- Run other Gemini notebooks from the Google Cloud Generative AI repository

- Explore additional use cases in the Vertex AI Prompt Gallery

- Stay updated by following the Vertex AI Release Notes