1. Introduction

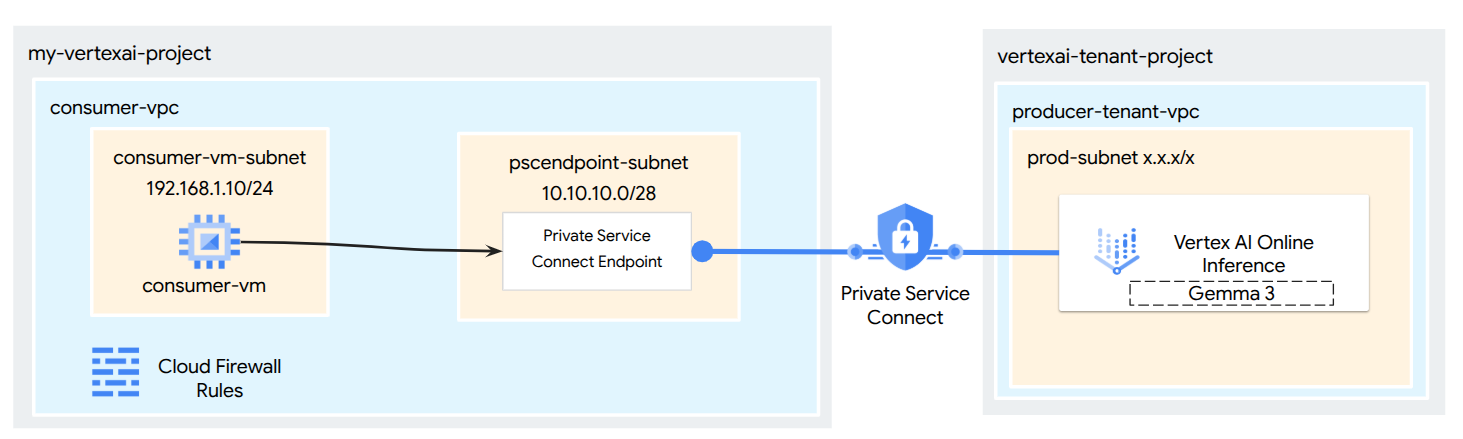

Leverage Private Service Connect to establish secure, private access for models deployed from the Vertex AI Model Garden. Instead of exposing a public endpoint, this method allows you to deploy your model to a private Vertex AI endpoint accessible only within your Virtual Private Cloud (VPC).

Private Service Connect creates an endpoint with an internal IP address inside your VPC, which connects directly to the Google-managed Vertex AI service hosting your model. This enables applications in your VPC and on-premises environments (via Cloud VPN or Interconnect) to send inference requests using private IPs. All network traffic remains on Google's network, which enhances security, reduces latency, and completely isolates your model's serving endpoint from the public internet.

What you'll build

In this tutorial, you will deploy Gemma 3 from Model Garden to Vertex AI Online Inference as a private endpoint accessible through Private Service Connect. Your end-to-end setup will include:

- Model Garden Model: You will select Gemma 3 from the Vertex AI Model Garden and deploy it to a Private Service Connect endpoint.

- Private Service Connect: You will configure a consumer endpoint in your Virtual Private Cloud (VPC) consisting of an internal IP address within your own network.

- Secure Connection to Vertex AI: The PSC endpoint will target the Service Attachment automatically generated by Vertex AI for your private model deployment. This establishes a private connection, ensuring traffic between your VPC and the model serving endpoint does not traverse the public internet.

- Client Configuration within your VPC: You will set up a client (e.g., Compute Engine VM) within your VPC to send inference requests to the deployed model using the internal IP address of the PSC endpoint.

By the end, you'll have a functional example of a Model Garden model being served privately, only accessible from within your designated VPC network.

What you'll learn

In this tutorial, you will learn how to deploy a model from Vertex AI Model Garden and make it securely accessible from your Virtual Private Cloud (VPC) using Private Service Connect (PSC). This method allows your applications within your VPC (the consumer) to privately connect to the Vertex AI model endpoint (the producer service) without traversing the public internet.

Specifically, you will learn:

- Understanding PSC for Vertex AI: How PSC enables private and secure consumer-to-producer connections. Your VPC can access the deployed Model Garden model using internal IP addresses.

- Deploying a Model with Private Access: How to configure a Vertex AI Endpoint for your Model Garden model to use PSC, making it a private endpoint.

- The Role of the Service Attachment: When you deploy a model to a private Vertex AI Endpoint, Google Cloud automatically creates a Service Attachment in a Google-managed tenant project. This Service Attachment exposes the model serving service to consumer networks.

- Creating a PSC Endpoint in Your VPC:

  - How to obtain the unique Service Attachment URI from your deployed Vertex AI Endpoint details.

  - How to reserve an internal IP address within your chosen subnet in your VPC.

  - How to create a Forwarding Rule in your VPC that acts as the PSC Endpoint, targeting the Vertex AI Service Attachment. This endpoint makes the model accessible via the reserved internal IP.

- Establishing Private Connectivity: How the PSC Endpoint in your VPC connects to the Service Attachment, bridging your network with the Vertex AI service securely.

- Sending Inference Requests Privately: How to send prediction requests from resources (like Compute Engine VMs) within your VPC to the internal IP address of the PSC Endpoint.

- Validation: Steps to test and confirm that you can successfully send inference requests from your VPC to the deployed Model Garden model through the private connection.

By completing this tutorial, you'll be able to host models from Model Garden that are only reachable from your private network infrastructure.

What you'll need

Google Cloud Project

IAM Permissions

- AI Platform Admin (roles/ml.admin)

- Compute Network Admin (roles/compute.networkAdmin)

- Compute Instance Admin (roles/compute.instanceAdmin)

- Compute Security Admin (roles/compute.securityAdmin)

- DNS Administrator (roles/dns.admin)

- IAP-secured Tunnel User (roles/iap.tunnelResourceAccessor)

- Logging Admin (roles/logging.admin)

- Notebooks Admin (roles/notebooks.admin)

- Project IAM Admin (roles/resourcemanager.projectIamAdmin)

- Service Account Admin (roles/iam.serviceAccountAdmin)

- Service Usage Admin (roles/serviceusage.serviceUsageAdmin)

2. Before you begin

Update the project to support the tutorial

This tutorial uses $variables to simplify gcloud configuration in Cloud Shell.

Inside Cloud Shell, perform the following:

gcloud config list project

gcloud config set project [YOUR-PROJECT-ID]

projectid=[YOUR-PROJECT-ID]

echo $projectid
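Because every later command depends on $projectid, it can help to confirm the variable holds a real project ID rather than the [YOUR-PROJECT-ID] placeholder. The following is a minimal sketch, not part of the original tutorial: the helper name valid_project_id is hypothetical, and the pattern assumes the usual project ID format (6 to 30 characters, lowercase letters, digits, and hyphens, starting with a letter and not ending with a hyphen).

```shell
# Hypothetical helper (not from the tutorial): returns 0 when the argument
# looks like a project ID, assuming the format is 6-30 characters of lowercase
# letters, digits, and hyphens, starting with a letter and not ending in a hyphen.
valid_project_id() {
  printf '%s\n' "$1" | grep -Eq '^[a-z][a-z0-9-]{4,28}[a-z0-9]$'
}

# Usage, after setting projectid as shown above:
#   valid_project_id "$projectid" || echo "projectid is unset or still a placeholder" >&2
```

The check is purely syntactic. It does not confirm that the project exists or that you have access to it.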

API Enablement

Inside Cloud Shell, perform the following:

gcloud services enable "compute.googleapis.com"

gcloud services enable "aiplatform.googleapis.com"

gcloud services enable "serviceusage.googleapis.com"

3. Deploy Model

Follow the steps below to deploy your model from Model Garden.

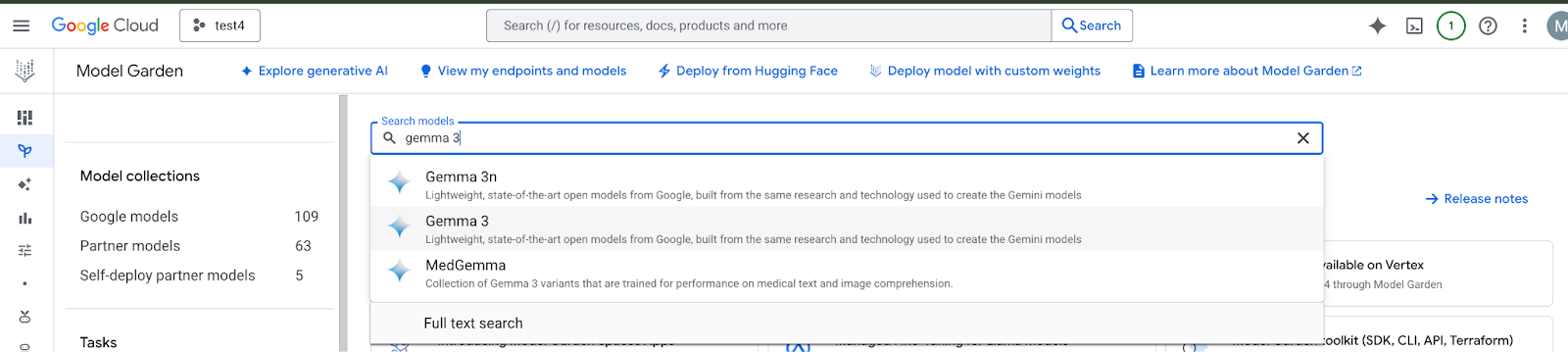

In the Google Cloud console, go to Model Garden, then search for and select Gemma 3.

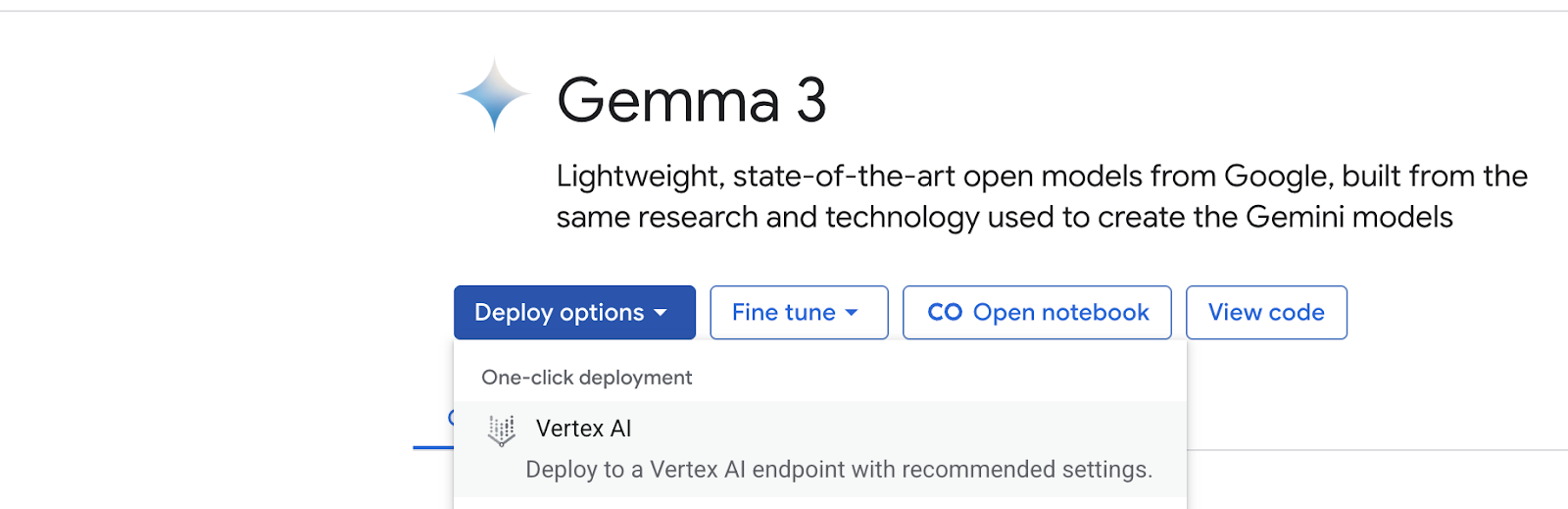

Click Deploy options and select Vertex AI

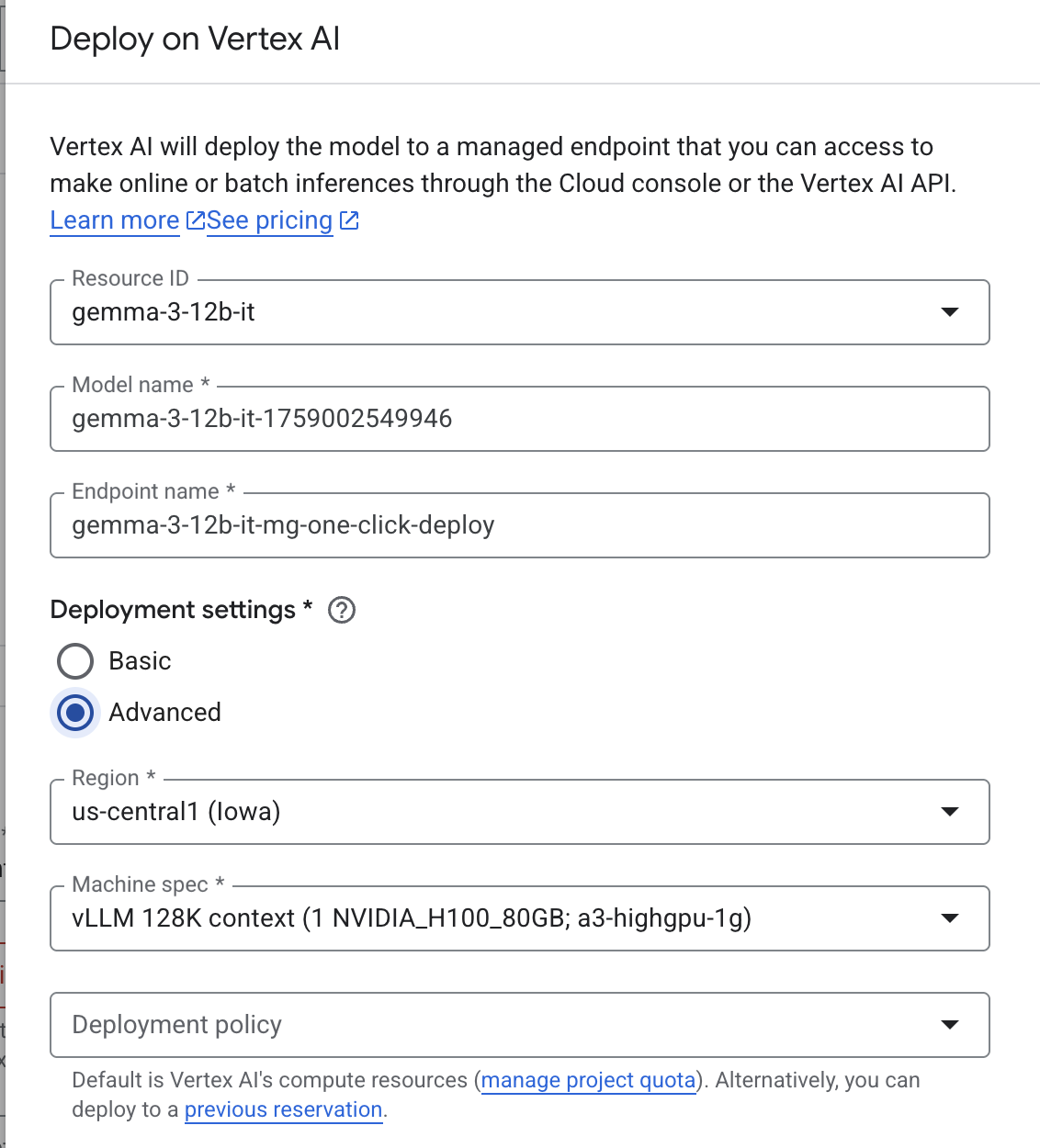

In the Deploy on Vertex AI pane, select Advanced. The pre-populated region and Machine spec are selected based on available capacity. You may change these values, although the codelab is tailored for us-central1.

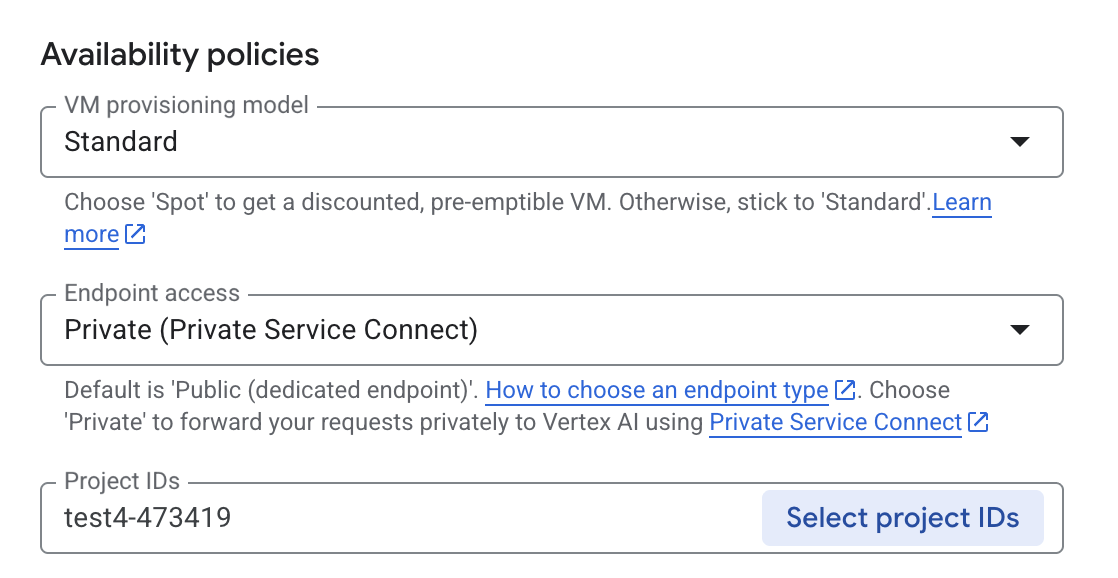

In the Deploy on Vertex AI pane, ensure Endpoint Access is configured as Private Service Connect, then select your project.

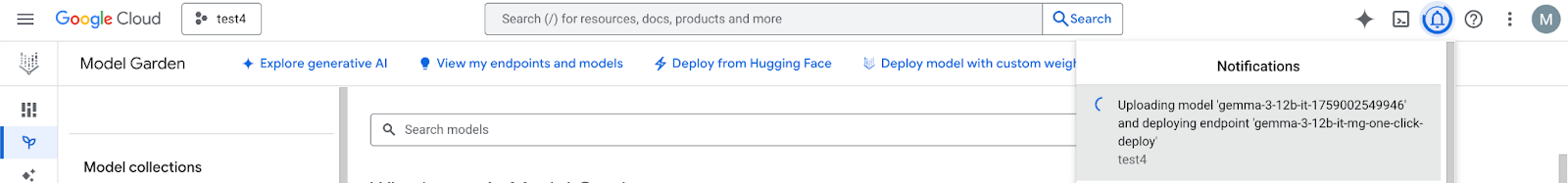

Leave the defaults for all other options, select Deploy at the bottom, and check your notifications for the deployment status.

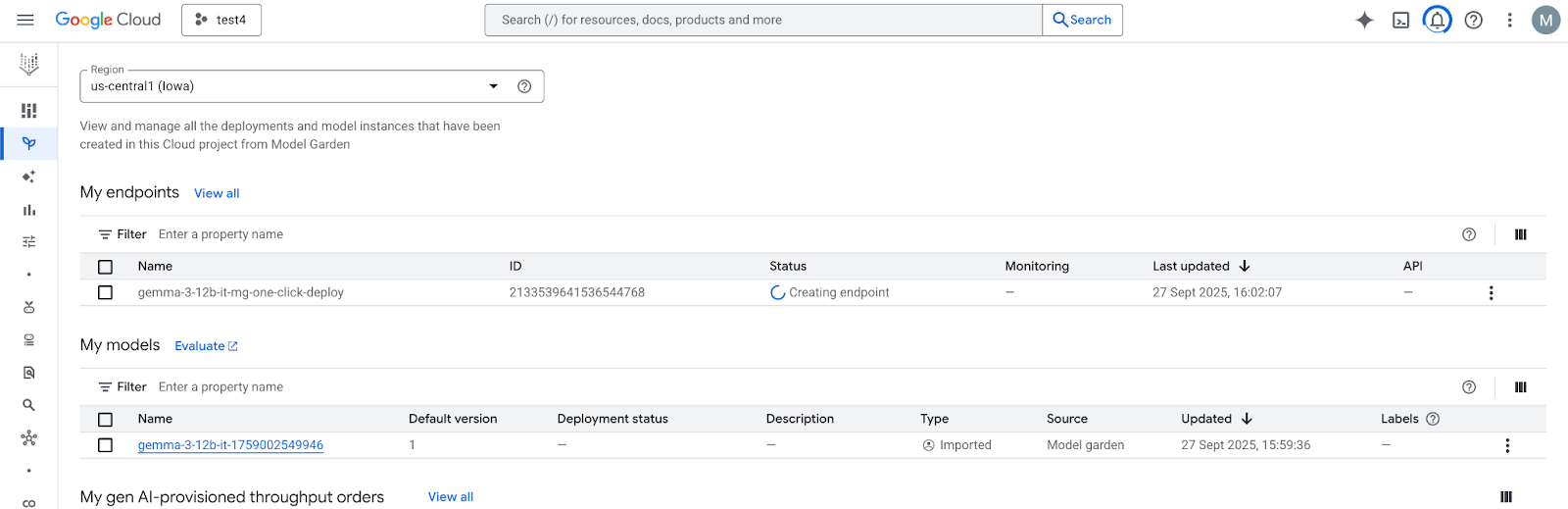

In Model Garden, select the region, us-central1, that provides the Gemma 3 model and endpoint. Model deployment takes approximately 5 minutes.

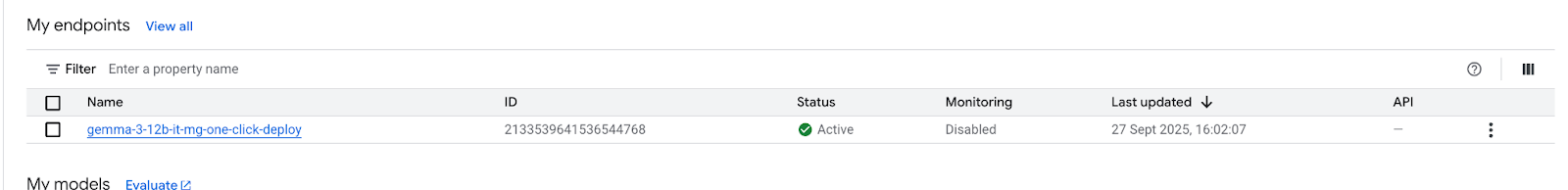

Within about 30 minutes, the endpoint transitions to "Active" once deployment completes.

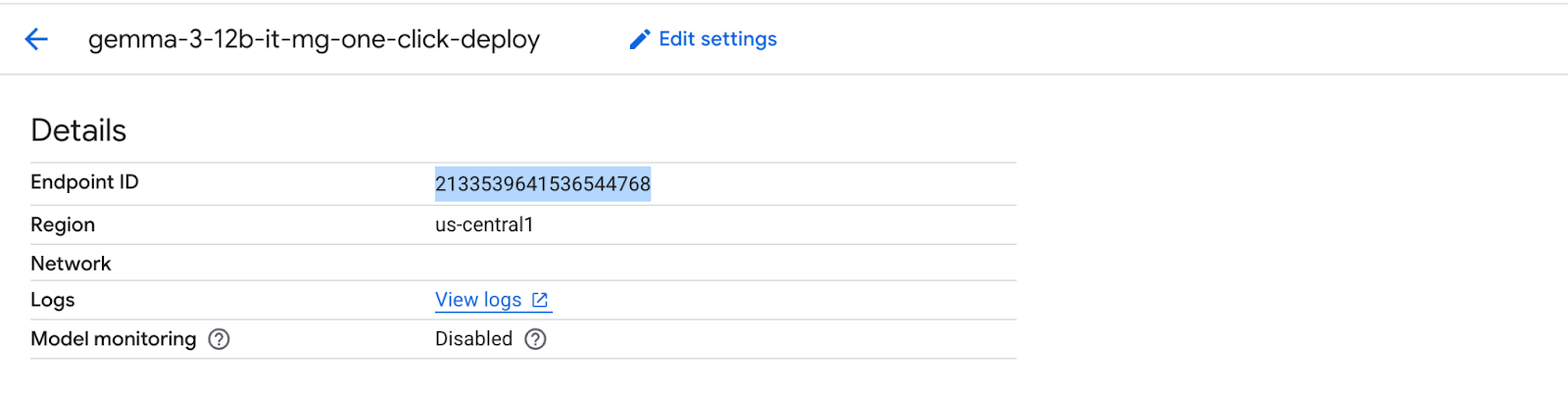

Obtain and note the Endpoint ID by selecting the endpoint.

Open Cloud Shell and perform the following to obtain the Private Service Connect service attachment URI. The consumer uses this URI string when deploying a PSC consumer endpoint.

Inside Cloud Shell, update the Endpoint ID, then issue the following command.

gcloud ai endpoints describe [Endpoint ID] --region=us-central1 | grep -i serviceAttachment:

Below is an example:

user@cloudshell:$ gcloud ai endpoints describe 2124795225560842240 --region=us-central1 | grep -i serviceAttachment:

Using endpoint [https://us-central1-aiplatform.googleapis.com/]

serviceAttachment: projects/o9457b320a852208e-tp/regions/us-central1/serviceAttachments/gkedpm-52065579567eaf39bfe24f25f7981d

Copy the value after serviceAttachment: into a variable called "Service_attachment". You will need it later when creating the PSC connection.

user@cloudshell:$ Service_attachment=projects/o9457b320a852208e-tp/regions/us-central1/serviceAttachments/gkedpm-52065579567eaf39bfe24f25f7981d
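The copy-and-paste step above can also be scripted. The sketch below is one possible approach under stated assumptions: the helper names extract_service_attachment and is_service_attachment_uri are hypothetical, and the parsing assumes the describe output contains a serviceAttachment: line like the example shown earlier.

```shell
# Hypothetical helper: reads `gcloud ai endpoints describe` output on stdin
# and prints the value of the first serviceAttachment: line it finds.
extract_service_attachment() {
  sed -n 's/^.*serviceAttachment:[[:space:]]*//p' | head -n 1
}

# Hypothetical helper: returns 0 when the argument has the shape
# projects/<project>/regions/<region>/serviceAttachments/<name>.
is_service_attachment_uri() {
  printf '%s\n' "$1" | grep -Eq '^projects/[^/]+/regions/[^/]+/serviceAttachments/[^/]+$'
}

# Usage, replacing [Endpoint ID] as in the command above:
#   Service_attachment=$(gcloud ai endpoints describe [Endpoint ID] --region=us-central1 | extract_service_attachment)
#   is_service_attachment_uri "$Service_attachment" || echo "unexpected value: $Service_attachment" >&2
```

Validating the shape before moving on catches an empty or truncated value early, before it is passed to the forwarding rule later in the tutorial.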

4. Consumer Setup

Create the Consumer VPC

Inside Cloud Shell, perform the following:

gcloud compute networks create consumer-vpc --project=$projectid --subnet-mode=custom

Create the consumer VM subnet

Inside Cloud Shell, perform the following:

gcloud compute networks subnets create consumer-vm-subnet --project=$projectid --range=192.168.1.0/24 --network=consumer-vpc --region=us-central1 --enable-private-ip-google-access

Create the PSC Endpoint subnet

gcloud compute networks subnets create pscendpoint-subnet --project=$projectid --range=10.10.10.0/28 --network=consumer-vpc --region=us-central1
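To see how much room each range provides, note that a /N prefix holds 2^(32-N) addresses. The sketch below works through that arithmetic for the two subnets above. The helper names are hypothetical, and the usable count assumes that Google Cloud reserves four addresses in each primary IPv4 subnet range.

```shell
# Hypothetical helper: prints the total number of addresses in a CIDR range.
cidr_size() {
  prefix=${1#*/}                      # keep the part after the slash
  echo $(( 1 << (32 - prefix) ))
}

# Hypothetical helper: prints the usable address count, assuming four
# addresses per primary subnet range are reserved by Google Cloud.
usable_hosts() {
  echo $(( $(cidr_size "$1") - 4 ))
}

# Usage:
#   cidr_size 10.10.10.0/28      # pscendpoint-subnet
#   usable_hosts 192.168.1.0/24  # consumer-vm-subnet
```

Under those assumptions, the /28 used for the PSC endpoint subnet holds 16 addresses, 12 of them usable, which is ample for the single endpoint address reserved later.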

5. Enable IAP

To allow IAP to connect to your VM instances, create a firewall rule that:

- Applies to all VM instances that you want to be accessible by using IAP.

- Allows ingress traffic from the IP range 35.235.240.0/20. This range contains all IP addresses that IAP uses for TCP forwarding.

Inside Cloud Shell, create the IAP firewall rule.

gcloud compute firewall-rules create ssh-iap-consumer \
--network consumer-vpc \
--allow tcp:22 \
--source-ranges=35.235.240.0/20

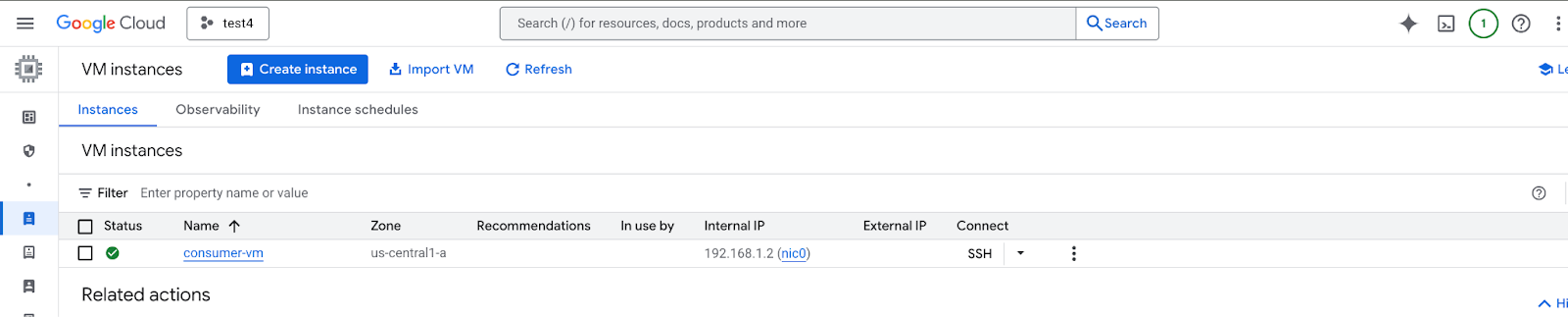

6. Create consumer VM instances

Inside Cloud Shell, create the consumer VM instance, consumer-vm.

gcloud compute instances create consumer-vm \
--project=$projectid \
--machine-type=e2-micro \
--image-family debian-11 \
--no-address \
--shielded-secure-boot \
--image-project debian-cloud \
--zone us-central1-a \
--subnet=consumer-vm-subnet

7. Private Service Connect Endpoints

The consumer creates a consumer endpoint (forwarding rule) with an internal IP address within their VPC. This PSC endpoint targets the producer's service attachment. Clients within the consumer VPC or hybrid network can send traffic to this internal IP address to reach the producer's service.

Reserve an IP address for the consumer endpoint.

Inside Cloud Shell, reserve the IP address.

gcloud compute addresses create psc-address \
--project=$projectid \
--region=us-central1 \
--subnet=pscendpoint-subnet \
--addresses=10.10.10.6

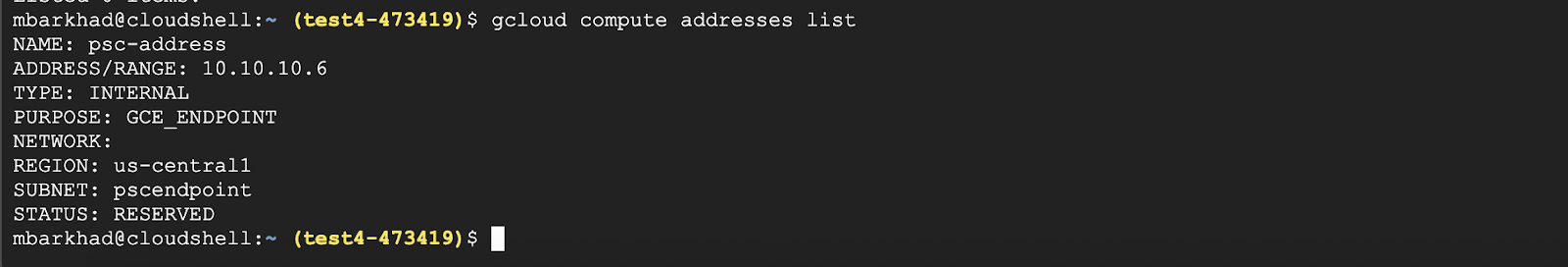

Verify that the IP address is reserved

Inside Cloud Shell, list the reserved IP Address.

gcloud compute addresses list

You should see the 10.10.10.6 IP address reserved.
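A reservation only succeeds when the requested address falls inside the subnet's range, so it can be useful to check that relationship before picking a different address. The following sketch compares an IP against a CIDR range using integer masks. The helper names ip_to_int and ip_in_cidr are hypothetical, and the code assumes plain dotted-quad IPv4 input without leading zeros.

```shell
# Hypothetical helper: converts a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1                            # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: returns 0 when IP ($1) lies inside the CIDR range ($2).
ip_in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")           # network part before the slash
  prefix=${2#*/}                       # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Usage:
#   ip_in_cidr 10.10.10.6 10.10.10.0/28 && echo "address is inside pscendpoint-subnet"
```

The check covers range membership only. It does not account for addresses that are already in use or reserved within the subnet.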

Create the consumer endpoint, passing the service attachment URI that you captured in the Deploy Model section as the target-service-attachment value.

Inside Cloud Shell, create the forwarding rule.

gcloud compute forwarding-rules create psc-consumer-ep \
--network=consumer-vpc \
--address=psc-address \
--region=us-central1 \
--target-service-attachment=$Service_attachment \
--project=$projectid

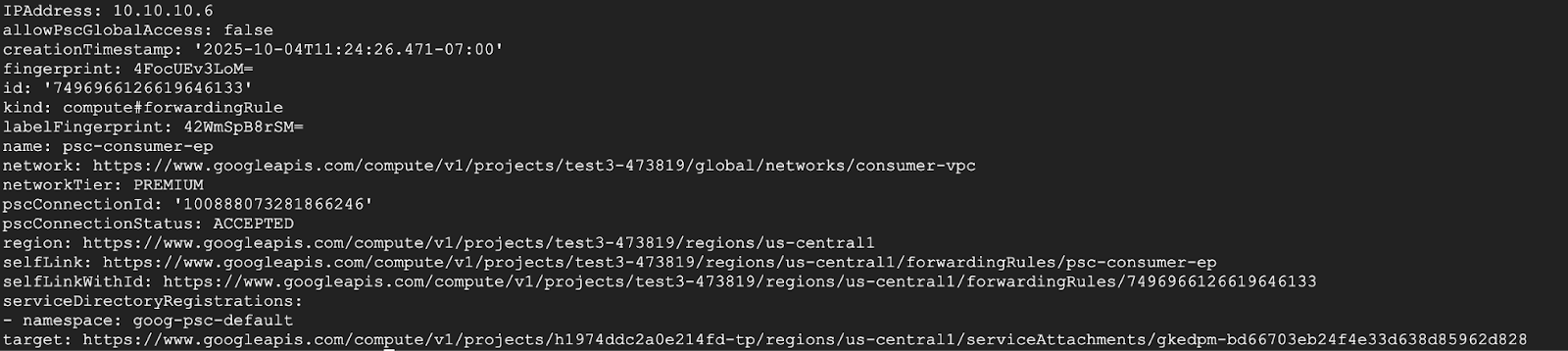

Verify that the service attachment accepts the endpoint

gcloud compute forwarding-rules describe psc-consumer-ep \
--project=$projectid \
--region=us-central1

In the response, verify that an "ACCEPTED" status appears in the pscConnectionStatus field.
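The status check can be automated by reading the describe output and comparing the field value. This is a minimal sketch under stated assumptions: the helper names psc_status and psc_accepted are hypothetical, and the parsing assumes the output contains a line of the form pscConnectionStatus: VALUE.

```shell
# Hypothetical helper: reads forwarding-rule describe output on stdin and
# prints the value of the pscConnectionStatus field.
psc_status() {
  sed -n 's/^[[:space:]]*pscConnectionStatus:[[:space:]]*//p' | head -n 1
}

# Hypothetical helper: returns 0 only when the status read from stdin is ACCEPTED.
psc_accepted() {
  [ "$(psc_status)" = "ACCEPTED" ]
}

# Usage:
#   gcloud compute forwarding-rules describe psc-consumer-ep \
#     --project=$projectid --region=us-central1 | psc_accepted \
#     && echo "PSC connection accepted" || echo "PSC connection not accepted yet" >&2
```

Any value other than ACCEPTED, including a missing field, is treated as not accepted, so the check fails closed.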

8. Test from Consumer VM

In Cloud Shell, perform the following steps so that the consumer VM can access the Vertex AI Model Garden API.

SSH into the consumer VM through IAP:

gcloud compute ssh consumer-vm --project=$projectid --zone=us-central1-a --tunnel-through-iap

Re-authenticate with Application Default Credentials and specify Vertex AI scopes.

gcloud auth application-default login --scopes=https://www.googleapis.com/auth/cloud-platform

Use the table below to generate a curl command, adjusting the values for your environment.

| Attribute | Value |
| --- | --- |
| Protocol | HTTP |
| Location | us-central1 |
| Online Prediction Endpoint | 2133539641536544768 |
| Project ID | test4-473419 |
| Model | gemma-3-12b-it |
| Private Service Connect Endpoint IP | 10.10.10.6 |
| Messages | [{"role": "user","content": "What weighs more 1 pound of feathers or rocks?"}] |

Update and execute the curl command based on your environment's details:

curl -k -v -X POST -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" -H "Content-Type: application/json" http://[PSC-IP]/v1/projects/[Project-ID]/locations/us-central1/endpoints/[Predictions Endpoint]/chat/completions -d '{"model": "google/gemma-3-12b-it", "messages": [{"role": "user","content": "What weighs more 1 pound of feathers or rocks?"}] }'

Example:

curl -k -v -X POST -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" -H "Content-Type: application/json" http://10.10.10.6/v1/projects/test4-473419/locations/us-central1/endpoints/2133539641536544768/chat/completions -d '{"model": "google/gemma-3-12b-it", "messages": [{"role": "user","content": "What weighs more 1 pound of feathers or rocks?"}] }'
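The request URL and body follow directly from the table attributes, so they can be assembled from variables instead of edited by hand. The sketch below illustrates one way to do that. The helper names chat_completions_url and chat_payload are hypothetical, and chat_payload assumes the prompt contains no double quotes or backslashes, since it performs no JSON escaping.

```shell
# Hypothetical helper: builds the request URL.
# $1 = PSC endpoint IP, $2 = project ID, $3 = location, $4 = endpoint ID
chat_completions_url() {
  printf 'http://%s/v1/projects/%s/locations/%s/endpoints/%s/chat/completions\n' "$1" "$2" "$3" "$4"
}

# Hypothetical helper: builds the JSON request body.
# $1 = model name, $2 = user prompt (assumed free of double quotes and backslashes)
chat_payload() {
  printf '{"model": "%s", "messages": [{"role": "user","content": "%s"}] }\n' "$1" "$2"
}

# Usage, with the example values from the table:
#   curl -k -v -X POST \
#     -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
#     -H "Content-Type: application/json" \
#     "$(chat_completions_url 10.10.10.6 test4-473419 us-central1 2133539641536544768)" \
#     -d "$(chat_payload google/gemma-3-12b-it 'What weighs more 1 pound of feathers or rocks?')"
```

With the example values, the two helpers reproduce the URL and body of the example command above.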

FINAL RESULT - SUCCESS!!!

You should see the prediction from Gemma 3 at the bottom of the output. This shows that you reached the API endpoint privately through the PSC endpoint.

Connection #0 to host 10.10.10.6 left intact

{"id":"chatcmpl-9e941821-65b3-44e4-876c-37d81baf62e0","object":"chat.completion","created":1759009221,"model":"google/gemma-3-12b-it","choices":[{"index":0,"message":{"role":"assistant","reasoning_content":null,"content":"This is a classic trick question! They weigh the same. One pound is one pound, regardless of the material. 😊\n\n\n\n","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":106}],"usage":{"prompt_tokens":20,"total_tokens":46,"completion_tokens":26,"prompt_tokens_details":null},"prompt_logprobs":null

9. Clean up

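From Cloud Shell, delete the tutorial components. Before running the commands, set ENDPOINT_ID, DEPLOYED_MODEL_ID, and MODEL_ID to the values from your deployment.

gcloud ai endpoints undeploy-model $ENDPOINT_ID --deployed-model-id=$DEPLOYED_MODEL_ID --project=$projectid --region=us-central1 --quiet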

gcloud ai endpoints delete $ENDPOINT_ID --project=$projectid --region=us-central1 --quiet

gcloud ai models delete $MODEL_ID --project=$projectid --region=us-central1 --quiet

gcloud compute instances delete consumer-vm --zone=us-central1-a --quiet

gcloud compute forwarding-rules delete psc-consumer-ep --region=us-central1 --project=$projectid --quiet

gcloud compute addresses delete psc-address --region=us-central1 --project=$projectid --quiet

gcloud compute networks subnets delete pscendpoint-subnet consumer-vm-subnet --region=us-central1 --quiet

gcloud compute firewall-rules delete ssh-iap-consumer --project=$projectid --quiet

gcloud compute networks delete consumer-vpc --project=$projectid --quiet

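Optionally, if the project was created only for this tutorial, delete the project itself. This removes every resource in the project.

gcloud projects delete $projectid --quiet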

10. Congratulations

Congratulations, you've successfully configured and validated private access to the Gemma 3 API hosted on Vertex AI Prediction using a Private Service Connect Endpoint.

You created the consumer infrastructure, including reserving an internal IP address and configuring a Private Service Connect Endpoint (a forwarding rule) within your VPC. This endpoint securely connects to the Vertex AI service by targeting the service attachment associated with your deployed Gemma 3 model. This setup allows your applications within the VPC or connected networks to interact with the Gemma 3 API privately and securely, using an internal IP address, without requiring traffic to traverse the public internet.