1. Introduction

Google Antigravity (referred to as Antigravity for the rest of this document) is an agentic IDE from Google. The Getting Started with Google Antigravity codelab covers the basics of Antigravity. In this codelab, we will use Antigravity to build Agent Skills, a lightweight, open format for extending AI agent capabilities with specialized knowledge and workflows. You will learn what Agent Skills are, what their benefits are, and how they are constructed. You will then build multiple Agent Skills, ranging from a Git commit formatter and a template generator to tool code scaffolding and more, all usable within Antigravity.

Prerequisites:

- Google Antigravity installed and configured.

- Basic understanding of Google Antigravity. It is recommended to complete the codelab: Getting Started with Google Antigravity.

2. Why Skills

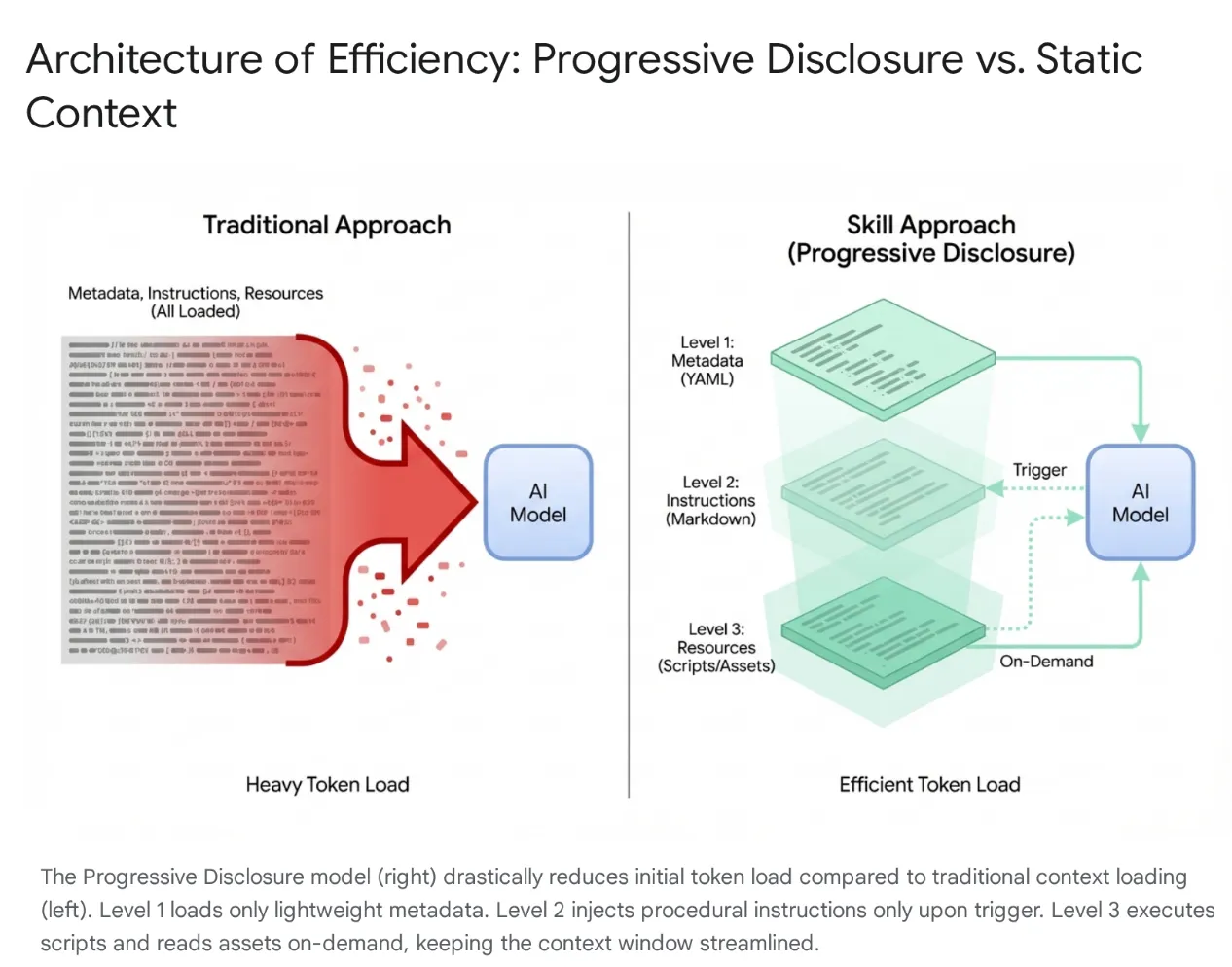

Modern AI agents have evolved from simple listeners to complex reasoners that integrate with local file systems and external tools (via MCP servers). However, indiscriminately loading an agent with entire codebases and hundreds of tools leads to Context Saturation and "Tool Bloat." Even with large context windows, dumping 40–50k tokens of unused tools into active memory causes high latency, financial waste, and "context rot," where the model becomes confused by irrelevant data.

The Solution: Agent Skills

To solve this, Anthropic introduced Agent Skills, shifting the architecture from monolithic context loading to Progressive Disclosure. Instead of forcing the model to "memorize" every specific workflow (like database migrations or security audits) at the start of a session, these capabilities are packaged into modular, discoverable units.

How It Works

The model is initially exposed only to a lightweight "menu" of metadata. It loads the heavy procedural knowledge (instructions and scripts) only when the user's intent specifically matches a skill. This ensures that a developer asking to refactor authentication middleware gets security context without loading unrelated CSS pipelines, keeping the context lean, fast, and cost-effective.
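To make progressive disclosure concrete, here is a minimal Python sketch. It assumes each skill's metadata has already been parsed into a name, a description, and the folder that holds SKILL.md. The `SkillMeta` and `SkillRegistry` classes are hypothetical, and the word-overlap `match` method is only a stand-in for the semantic matching that the model itself performs; this is not Antigravity's implementation.

```python
import re
from dataclasses import dataclass, field
from pathlib import Path
from typing import Dict, List, Optional


@dataclass
class SkillMeta:
    """The lightweight 'menu' entry that is always visible to the model."""
    name: str
    description: str
    path: Path  # folder that contains SKILL.md


def _words(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


@dataclass
class SkillRegistry:
    skills: List[SkillMeta] = field(default_factory=list)
    loaded: Dict[str, str] = field(default_factory=dict)  # name -> full SKILL.md, filled lazily

    def menu(self) -> str:
        # Cheap enough to include in every request: one line per skill.
        return "\n".join(f"- {s.name}: {s.description}" for s in self.skills)

    def match(self, prompt: str) -> Optional[SkillMeta]:
        # Stand-in for the model's semantic matching: choose the skill whose
        # description shares the most words with the prompt (None if no overlap).
        best, best_score = None, 0
        for skill in self.skills:
            score = len(_words(prompt) & _words(skill.description))
            if score > best_score:
                best, best_score = skill, score
        return best

    def activate(self, skill: SkillMeta) -> str:
        # Only now is the heavy procedural knowledge read from disk.
        if skill.name not in self.loaded:
            self.loaded[skill.name] = (skill.path / "SKILL.md").read_text()
        return self.loaded[skill.name]
```

The point of the design is that `menu()` costs one line per skill on every request, while `activate()` pays the full cost of a SKILL.md body only for the skill that was actually selected.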

3. Agent Skills and Antigravity

In the Antigravity ecosystem, if the Agent Manager is the brain and the Editor is the canvas, Skills act as specialized training modules that bridge the gap between the generalist Gemini 3 model and your specific context. They allow the agent to "equip" a defined set of instructions and protocols—such as database migration standards or security checks—only when a relevant task is requested. By dynamically loading these execution protocols, Skills effectively transform the AI from a generic programmer into a specialist that rigorously adheres to an organization's codified best practices and safety standards.

What is a Skill in Antigravity?

In the context of Google Antigravity, a Skill is a directory-based package containing a definition file (SKILL.md) and optional supporting assets (scripts, references, templates).

It is a mechanism for on-demand capability extension.

- On-Demand: Unlike a System Prompt (which is always loaded), a Skill is only loaded into the agent's context when the agent determines it is relevant to the user's current request. This optimizes the context window and prevents the agent from being distracted by irrelevant instructions. In large projects with dozens of tools, this selective loading is crucial for performance and reasoning accuracy.

- Capability Extension: Skills can do more than just instruct; they can execute. By bundling Python or Bash scripts, a Skill can give the agent the ability to perform complex, multi-step actions on the local machine or external networks without the user needing to manually run commands. This transforms the agent from a text generator into a tool user.

Skills vs. the Ecosystem (Tools, Rules, and Workflows)

While Model Context Protocol (MCP) functions as the agent's "hands"—providing heavy-duty, persistent connections to external systems like GitHub or PostgreSQL—Skills act as the "brains" that direct them.

MCP handles the stateful infrastructure, whereas Skills are lightweight, ephemeral task definitions that package the methodology for using those tools. This serverless approach allows agents to execute ad-hoc tasks (like generating changelogs or migrations) without the operational overhead of running persistent processes, loading the context only when the task is active and releasing it immediately after.

Functionally, Skills occupy a unique middle ground between "Rules" (passive, always-on guardrails) and "Workflows" (active, user-triggered macros). Unlike workflows that require specific commands (e.g., /test), Skills are agent-triggered: the model automatically detects the user's intent and dynamically equips the specific expertise required. This architecture allows for powerful composability; for example, a global Rule can enforce the use of a "Safe-Migration" Skill during database changes, or a single Workflow can orchestrate multiple Skills to build a robust deployment pipeline.

4. Creating Skills

Creating a Skill in Antigravity follows a specific directory structure and file format. This standardization ensures that skills are portable and that the agent can reliably parse and execute them. The design is intentionally simple, relying on widely understood formats like Markdown and YAML, lowering the barrier to entry for developers wishing to extend their IDE's capabilities.

Directory Structure

Skills can be defined at two scopes, allowing for both project-specific and user-specific customizations:

- Workspace Scope: Located in <workspace-root>/.agent/skills/. These skills are available only within the specific project. This is ideal for project-specific scripts, such as deployment to a specific environment, database management for that app, or generating boilerplate code for a proprietary framework.

- Global Scope: Located in ~/.gemini/antigravity/skills/. These skills are available across all projects on the user's machine. This is suitable for general utilities like "Format JSON," "Generate UUIDs," "Review Code Style," or integration with personal productivity tools.
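As an illustration of the two scopes, the sketch below enumerates skills from both locations, assuming a skill is any immediate subfolder that contains a SKILL.md. The function name `discover_skills` is hypothetical, and listing workspace skills before global ones is an illustrative choice only; this codelab does not say how Antigravity resolves a skill name that exists in both scopes.

```python
from pathlib import Path
from typing import List, Optional, Tuple


def discover_skills(workspace_root: Path, home: Optional[Path] = None) -> List[Tuple[str, Path]]:
    """Return (scope, skill_dir) pairs for every folder that contains a SKILL.md."""
    home = home or Path.home()
    scopes = [
        ("workspace", workspace_root / ".agent" / "skills"),
        ("global", home / ".gemini" / "antigravity" / "skills"),
    ]
    found = []
    for scope, base in scopes:
        if not base.is_dir():
            continue  # either scope folder may be absent
        for child in sorted(base.iterdir()):
            if (child / "SKILL.md").is_file():  # folders without SKILL.md are ignored
                found.append((scope, child))
    return found
```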

A typical Skill directory looks like this:

my-skill/
├── SKILL.md          # The definition file
├── scripts/          # [Optional] Python, Bash, or Node scripts
│   ├── run.py
│   └── util.sh
├── references/       # [Optional] Documentation or templates
│   └── api-docs.md
└── assets/           # [Optional] Static assets (images, logos)

This structure separates concerns effectively. The logic (scripts) is separated from the instruction (SKILL.md) and the knowledge (references), mirroring standard software engineering practices.

The SKILL.md Definition File

The SKILL.md file is the brain of the Skill. It tells the agent what the skill is, when to use it, and how to execute it.

It consists of two parts:

- YAML Frontmatter

- Markdown Body

YAML Frontmatter

This is the metadata layer. It is the only part of the skill that is indexed by the agent's high-level router. When a user sends a prompt, the agent semantically matches the prompt against the description fields of all available skills.

---

name: database-inspector

description: Use this skill when the user asks to query the database, check table schemas, or inspect user data in the local PostgreSQL instance.

---

Key Fields:

- name: This is optional. It must be unique within the scope and use lowercase letters and hyphens (e.g., postgres-query, pr-reviewer). If it is not provided, it defaults to the directory name.

- description: This is mandatory and the most important field. It functions as the "trigger phrase." It must be descriptive enough for the LLM to recognize semantic relevance. A vague description like "Database tools" is insufficient. A precise description like "Executes read-only SQL queries against the local PostgreSQL database to retrieve user or transaction data. Use this for debugging data states" ensures the skill is picked up correctly.
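The field rules above (optional name that defaults to the folder name, lowercase-and-hyphen naming, mandatory description) can be checked with a small parser. This is a sketch that assumes flat, single-line key: value fields and that digits are also acceptable in names; a real implementation would use a full YAML parser such as PyYAML. `parse_skill_md` is a hypothetical helper, not part of Antigravity.

```python
import re
from pathlib import Path
from typing import Dict, Tuple

# Assumption: lowercase letters and digits, separated by single hyphens.
NAME_RE = re.compile(r"^[a-z0-9]+(-[a-z0-9]+)*$")


def parse_skill_md(skill_dir: Path, text: str) -> Tuple[Dict[str, str], str]:
    """Split SKILL.md into (frontmatter fields, markdown body) and validate the fields."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("SKILL.md must start with a '---' frontmatter line")
    try:
        end = next(i for i in range(1, len(lines)) if lines[i].strip() == "---")
    except StopIteration:
        raise ValueError("frontmatter is not closed with '---'") from None
    meta: Dict[str, str] = {}
    for line in lines[1:end]:
        if line.strip():  # blank lines inside the frontmatter are ignored
            key, _, value = line.partition(":")  # split on the first colon only
            meta[key.strip()] = value.strip()
    if not meta.get("description"):
        raise ValueError("'description' is mandatory")
    meta.setdefault("name", skill_dir.name)  # default to the directory name
    if not NAME_RE.match(meta["name"]):
        raise ValueError(f"invalid name {meta['name']!r}: use lowercase and hyphens")
    body = "\n".join(lines[end + 1:]).strip()
    return meta, body
```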

The Markdown Body

The body contains the instructions. This is "prompt engineering" persisted to a file. When the skill is activated, this content is injected into the agent's context window.

The body should include:

- Goal: A clear statement of what the skill achieves.

- Instructions: Step-by-step logic.

- Examples: Few-shot examples of inputs and outputs to guide the model's performance.

- Constraints: "Do not" rules (e.g., "Do not run DELETE queries").

Example SKILL.md Body:

# Database Inspector

## Goal

To safely query the local database and provide insights on the current data state.

## Instructions

- Analyze the user's natural language request to understand the data need.

- Formulate a valid SQL query.

  - CRITICAL: Only SELECT statements are allowed.

- Use the script scripts/query_runner.py to execute the SQL.

  - Command: python scripts/query_runner.py "SELECT * FROM..."

- Present the results in a Markdown table.

## Constraints

- Never output raw user passwords or API keys.

- If the query returns > 50 rows, summarize the data instead of listing it all.
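The example body points to scripts/query_runner.py, which this codelab does not show. Below is one hypothetical way such a script could enforce the SELECT-only rule, hide sensitive columns, and respect the 50-row limit. To stay self-contained it uses Python's built-in sqlite3 module instead of PostgreSQL, and `DB_PATH`, `SENSITIVE`, and the function names are illustrative assumptions. The string check is coarse; opening the database read-only is the real safety net.

```python
import sqlite3
import sys
from typing import List, Sequence

MAX_ROWS = 50
SENSITIVE = ("password", "api_key", "secret")  # hypothetical column-name filter
DB_PATH = "app.db"  # hypothetical database file


def is_read_only(sql: str) -> bool:
    """Accept a single SELECT statement only (a coarse check, not a SQL parser)."""
    stripped = sql.strip().rstrip(";").strip()
    return stripped.lower().startswith("select") and ";" not in stripped


def to_markdown(columns: Sequence[str], rows: List[tuple]) -> str:
    hidden = {i for i, c in enumerate(columns) if any(s in c.lower() for s in SENSITIVE)}
    lines = ["| " + " | ".join(columns) + " |", "| " + " | ".join("---" for _ in columns) + " |"]
    for row in rows:
        cells = ("[REDACTED]" if i in hidden else str(v) for i, v in enumerate(row))
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)


def run_query(conn: sqlite3.Connection, sql: str) -> str:
    if not is_read_only(sql):
        raise ValueError("Only SELECT statements are allowed.")
    cursor = conn.execute(sql)
    columns = [d[0] for d in cursor.description]
    rows = cursor.fetchmany(MAX_ROWS + 1)  # one extra row tells us the limit was exceeded
    if len(rows) > MAX_ROWS:
        return f"More than {MAX_ROWS} rows returned; summarize the data instead of listing it."
    return to_markdown(columns, rows)


if __name__ == "__main__":
    # Usage: python scripts/query_runner.py "SELECT ..."
    conn = sqlite3.connect(f"file:{DB_PATH}?mode=ro", uri=True)  # open read-only
    try:
        print(run_query(conn, sys.argv[1]))
    finally:
        conn.close()
```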

Script Integration

One of the most powerful features of Skills is the ability to delegate execution to scripts. This allows the agent to perform actions that are difficult or impossible for an LLM to do directly (like binary execution, complex mathematical calculation, or interacting with legacy systems).

Scripts are placed in the scripts/ subdirectory. The SKILL.md references them by relative path.
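Because SKILL.md refers to scripts by a path relative to the skill folder, whatever runs them has to resolve that path against the skill directory and then read the exit code. A minimal sketch using the standard library's subprocess module; `run_skill_script` is a hypothetical helper that assumes Python scripts (a Bash script would be launched with bash instead), and it does not describe how Antigravity itself launches scripts.

```python
import subprocess
import sys
from pathlib import Path
from typing import List, Tuple


def run_skill_script(skill_dir: Path, relative_script: str, args: List[str]) -> Tuple[int, str]:
    """Run a script referenced from SKILL.md and return (exit_code, combined output)."""
    script = (skill_dir / relative_script).resolve()
    # Refuse paths that escape the skill folder, such as "../other.py".
    if skill_dir.resolve() not in script.parents:
        raise ValueError(f"{relative_script} is outside {skill_dir}")
    result = subprocess.run(
        [sys.executable, str(script), *args],  # same interpreter as the caller
        capture_output=True,
        text=True,
        timeout=60,
    )
    return result.returncode, result.stdout + result.stderr
```

Returning the exit code separately from the output mirrors how the skills in this codelab interpret results: 0 means success, anything else means "report the printed errors".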

5. Authoring Skills

The goal of this section is to build Skills that integrate into Antigravity and progressively demonstrate features such as resources, scripts, and more.

You can download the Skills from the GitHub repo here: https://github.com/rominirani/antigravity-skills.

You can place each of these skills in either the global ~/.gemini/antigravity/skills folder or the workspace <workspace-root>/.agent/skills folder.

Level 1: The Basic Router (git-commit-formatter)

Let's consider this as the "Hello World" of Skills.

Developers often write lazy commit messages such as "wip", "fix bug", or "updates". Enforcing "Conventional Commits" manually is tedious and often forgotten. Let's implement a Skill that enforces the Conventional Commits specification. By simply instructing the agent on the rules, we allow it to act as the enforcer.

git-commit-formatter/
└── SKILL.md (Instructions only)

The SKILL.md file is shown below:

---

name: git-commit-formatter

description: Formats git commit messages according to Conventional Commits specification. Use this when the user asks to commit changes or write a commit message.

---

# Git Commit Formatter Skill

When writing a git commit message, you MUST follow the Conventional Commits specification.

## Format

`<type>[optional scope]: <description>`

## Allowed Types

- **feat**: A new feature

- **fix**: A bug fix

- **docs**: Documentation only changes

- **style**: Changes that do not affect the meaning of the code (white-space, formatting, etc.)

- **refactor**: A code change that neither fixes a bug nor adds a feature

- **perf**: A code change that improves performance

- **test**: Adding missing tests or correcting existing tests

- **chore**: Changes to the build process or auxiliary tools and libraries such as documentation generation

## Instructions

1. Analyze the changes to determine the primary `type`.

2. Identify the `scope` if applicable (e.g., specific component or file).

3. Write a concise `description` in an imperative mood (e.g., "add feature" not "added feature").

4. If there are breaking changes, add a footer starting with `BREAKING CHANGE:`.

## Example

`feat(auth): implement login with google`

How to Run This Example:

- Make a small change to any file in your workspace.

- Open the chat and type: Commit these changes.

- The Agent will not just run git commit. It will first activate the git-commit-formatter skill.

- Result: A conventional Git commit message will be proposed.

For example, I had Antigravity add some comments to a sample Python file, and it proposed the Git commit message docs: add detailed comments to demo_primes.py.
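This skill relies on instructions alone, but if you also want a deterministic check on the message the agent proposes (for example in a commit-msg hook), the header format maps to a single regular expression. A minimal sketch limited to the types this skill allows; `parse_commit_message` is hypothetical and not part of the skill, and it does not cover every feature of the full Conventional Commits specification.

```python
import re
from typing import Dict, Optional, Union

ALLOWED_TYPES = ("feat", "fix", "docs", "style", "refactor", "perf", "test", "chore")

# <type>[optional scope]: <description>
HEADER_RE = re.compile(
    r"^(?P<type>" + "|".join(ALLOWED_TYPES) + r")"
    r"(?:\((?P<scope>[^()\s]+)\))?"
    r": (?P<description>\S.*)$"
)


def parse_commit_message(message: str) -> Optional[Dict[str, Union[str, bool, None]]]:
    """Return the parsed header plus a breaking-change flag, or None if it does not conform."""
    lines = message.splitlines()
    match = HEADER_RE.match(lines[0]) if lines else None
    if match is None:
        return None
    parsed: Dict[str, Union[str, bool, None]] = dict(match.groupdict())
    # Breaking changes are signalled by a footer line, as the skill instructs.
    parsed["breaking"] = any(line.startswith("BREAKING CHANGE:") for line in lines[1:])
    return parsed
```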

Level 2: Asset Utilization (license-header-adder)

This is the "Reference" pattern.

Every source file in a corporate project might need a specific 20-line Apache 2.0 license header. Putting this static text directly into the prompt (or SKILL.md) is wasteful: it consumes tokens every time the skill is loaded, and the model might "hallucinate" typos in legal text.

Instead, offload the static text to a plain text file in a resources/ folder. The skill instructs the agent to read this file only when needed.


license-header-adder/
├── SKILL.md
└── resources/
    └── HEADER_TEMPLATE.txt (The heavy text)

The SKILL.md file is shown below:

---

name: license-header-adder

description: Adds the standard open-source license header to new source files. Use this when creating new code files that require copyright attribution.

---

# License Header Adder Skill

This skill ensures that all new source files have the correct copyright header.

## Instructions

1. **Read the Template**:

First, read the content of the header template file located at `resources/HEADER_TEMPLATE.txt`.

2. **Prepend to File**:

When creating a new file (e.g., `.py`, `.java`, `.js`, `.ts`, `.go`), prepend the `target_file` content with the template content.

3. **Modify Comment Syntax**:

- For C-style languages (Java, JS, TS, C++), keep the `/* ... */` block as is.

- For Python, Shell, or YAML, convert the block to use `#` comments.

- For HTML/XML, use `<!-- ... -->`.

How to Run This Example:

- Create a new dummy Python file: touch my_script.py

- Type: Add the license header to my_script.py.

- The agent will read license-header-adder/resources/HEADER_TEMPLATE.txt.

- It will paste the template text verbatim into your file, converting the comment syntax to # comments because the target is a Python file.
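Step 3 of the skill (adapting the comment syntax) is mechanical enough to sketch in code. The sketch assumes, as the skill does, that HEADER_TEMPLATE.txt holds a /* ... */ block; the function names and the extension tables are illustrative, it needs Python 3.9+ for str.removeprefix, and the skill itself leaves this transformation to the agent.

```python
from pathlib import Path

HASH_STYLE = {".py", ".sh", ".yaml", ".yml"}  # Python, Shell, YAML
HTML_STYLE = {".html", ".xml"}                # HTML/XML


def header_for(template: str, filename: str) -> str:
    """Adapt a /* ... */ license block to the comment syntax of the target file."""
    suffix = Path(filename).suffix.lower()
    if suffix not in HASH_STYLE | HTML_STYLE:
        return template  # C-style languages keep the block as is
    # Strip the C-style delimiters and leading '*' decorations to get plain text lines.
    text = []
    for line in template.strip().splitlines():
        s = line.strip()
        if s in ("/*", "/**", "*/"):
            continue
        s = s.removeprefix("/*").removesuffix("*/").strip()
        text.append(s.lstrip("*").strip())
    if suffix in HASH_STYLE:
        return "\n".join(f"# {t}".rstrip() for t in text) + "\n"
    return "<!--\n" + "\n".join(text) + "\n-->\n"


def prepend_header(template: str, filename: str, source: str) -> str:
    """Return the new file content with the adapted header on top."""
    return header_for(template, filename) + "\n" + source
```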

Level 3: Learning by Example (json-to-pydantic)

The "Few-Shot" pattern.

Converting loose data (like a JSON API response) to strict code (like Pydantic models) involves dozens of decisions. How should we name the classes? Should we use Optional? snake_case or camelCase? Writing out these 50 rules in English is tedious and error-prone.

LLMs are pattern-matching engines. Showing them a golden example (Input -> Output) is often more effective than verbose instructions.

json-to-pydantic/
├── SKILL.md
└── examples/
    ├── input_data.json (The Before State)
    └── output_model.py (The After State)

The SKILL.md file is shown below:

---

name: json-to-pydantic

description: Converts JSON data snippets into Python Pydantic data models.

---

# JSON to Pydantic Skill

This skill helps convert raw JSON data or API responses into structured, strongly-typed Python classes using Pydantic.

## Instructions

1. **Analyze the Input**: Look at the JSON object provided by the user.

2. **Infer Types**:

   - `string` -> `str`
   - `number` -> `int` or `float`
   - `boolean` -> `bool`
   - `array` -> `List[Type]`
   - `null` -> `Optional[Type]`
   - Nested Objects -> Create a separate sub-class.

3. **Follow the Example**:

   Review `examples/` to see how to structure the output code. Notice how nested dictionaries like `preferences` are extracted into their own class.

   - Input: `examples/input_data.json`
   - Output: `examples/output_model.py`

## Style Guidelines

- Use `PascalCase` for class names.

- Use type hints (`List`, `Optional`) from the `typing` module.

- If a field can be missing or null, default it to `None`.

The examples/ folder contains the input JSON file and the expected output Python file. Both are shown below:

input_data.json

{
  "user_id": 12345,
  "username": "jdoe_88",
  "is_active": true,
  "preferences": {
    "theme": "dark",
    "notifications": [
      "email",
      "push"
    ]
  },
  "last_login": "2024-03-15T10:30:00Z",
  "meta_tags": null
}

output_model.py

from pydantic import BaseModel, Field
from typing import List, Optional


class Preferences(BaseModel):
    theme: str
    notifications: List[str]


class User(BaseModel):
    user_id: int
    username: str
    is_active: bool
    preferences: Preferences
    last_login: Optional[str] = None
    meta_tags: Optional[List[str]] = None

How to Run This Example:

- Provide the agent with a snippet of JSON (paste it in chat or point to a file):

  { "product": "Widget", "cost": 10.99, "stock": null }

- Type: Convert this JSON to a Pydantic model.

- The agent looks at the examples pair in the skill folder.

- It generates a Python class that mimics the coding style, imports, and structure of output_model.py, including handling the null stock as Optional.

A sample output (product_model.py) is shown below:

from pydantic import BaseModel
from typing import Optional


class Product(BaseModel):
    product: str
    cost: float
    stock: Optional[int] = None
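The type-mapping rules in this skill are deterministic enough to sketch as a small generator. This only illustrates the same mapping; it is not what the agent runs, since the skill relies on the model plus the example pair. `json_to_pydantic` and its helpers are hypothetical, JSON keys are assumed to be valid Python identifiers, lists are typed from their first element, and a bare null becomes `Optional[Any]` because the value alone does not reveal the intended type (in the sample above, the agent chose `Optional[int]` from context).

```python
import json
from typing import Any, List


def _pascal(key: str) -> str:
    # "meta_tags" -> "MetaTags"
    return "".join(part.capitalize() for part in key.split("_"))


def _hint(key: str, value: Any, classes: List[str]) -> str:
    """Map one JSON value to a type hint, emitting classes for nested objects."""
    if isinstance(value, bool):  # test bool first: bool is a subclass of int
        return "bool"
    if isinstance(value, int):
        return "int"
    if isinstance(value, float):
        return "float"
    if isinstance(value, str):
        return "str"
    if value is None:
        return "Optional[Any]"  # a bare null does not reveal the real type
    if isinstance(value, list):
        return f"List[{_hint(key, value[0], classes) if value else 'Any'}]"
    if isinstance(value, dict):
        name = _pascal(key)
        _emit_class(name, value, classes)  # nested object -> separate class
        return name
    return "Any"


def _emit_class(name: str, obj: dict, classes: List[str]) -> None:
    lines = [f"class {name}(BaseModel):"]
    for key, value in obj.items():
        hint = _hint(key, value, classes)  # nested classes are appended first
        default = " = None" if hint.startswith("Optional") else ""
        lines.append(f"    {key}: {hint}{default}")
    if len(lines) == 1:
        lines.append("    pass")  # empty JSON object
    classes.append("\n".join(lines))


def json_to_pydantic(raw: str, root_name: str) -> str:
    classes: List[str] = []
    _emit_class(root_name, json.loads(raw), classes)
    header = "from typing import Any, List, Optional\n\nfrom pydantic import BaseModel\n"
    return header + "\n\n" + "\n\n\n".join(classes) + "\n"
```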

Level 4: Procedural Logic (database-schema-validator)

This is the "Tool Use" Pattern.

If you ask an LLM "Is this schema safe?", it might say all is well, even if a critical primary key is missing, simply because the SQL looks correct.

Let's delegate this check to a deterministic script. We use the Skill to route the agent to run a Python script that we wrote. The script gives a definitive pass/fail answer through its exit code (0 or 1).

database-schema-validator/
├── SKILL.md
└── scripts/
    └── validate_schema.py (The Validator)

The SKILL.md file is shown below:

---

name: database-schema-validator

description: Validates SQL schema files for compliance with internal safety and naming policies.

---

# Database Schema Validator Skill

This skill ensures that all SQL files provided by the user comply with our strict database standards.

## Policies Enforced

1. **Safety**: No `DROP TABLE` statements.

2. **Naming**: All tables must use `snake_case`.

3. **Structure**: Every table must have an `id` column as PRIMARY KEY.

## Instructions

1. **Do not read the file manually** to check for errors. The rules are complex and easily missed by eye.

2. **Run the Validation Script**:

   Use the `run_command` tool to execute the Python script provided in the `scripts/` folder against the user's file.

   `python scripts/validate_schema.py <path_to_user_file>`

3. **Interpret Output**:

   - If the script returns **exit code 0**: Tell the user the schema looks good.

   - If the script returns **exit code 1**: Report the specific error messages printed by the script to the user and suggest fixes.

The validate_schema.py file is shown below:

import sys
import re


def validate_schema(filename):
    """
    Validates a SQL schema file against internal policy:
    1. Table names must be snake_case.
    2. Every table must have a primary key named 'id'.
    3. No 'DROP TABLE' statements allowed (safety).
    """
    try:
        with open(filename, 'r') as f:
            content = f.read()

        errors = []

        # Check 1: No DROP TABLE
        if re.search(r'DROP TABLE', content, re.IGNORECASE):
            errors.append("ERROR: 'DROP TABLE' statements are forbidden.")

        # Check 2 & 3: CREATE TABLE checks
        table_defs = re.finditer(r'CREATE TABLE\s+(?P<name>\w+)\s*\((?P<body>.*?)\);', content, re.DOTALL | re.IGNORECASE)

        for match in table_defs:
            table_name = match.group('name')
            body = match.group('body')

            # Snake case check
            if not re.match(r'^[a-z][a-z0-9_]*$', table_name):
                errors.append(f"ERROR: Table '{table_name}' must be snake_case.")

            # Primary key check
            if not re.search(r'\bid\b.*PRIMARY KEY', body, re.IGNORECASE):
                errors.append(f"ERROR: Table '{table_name}' is missing a primary key named 'id'.")

        if errors:
            for err in errors:
                print(err)
            sys.exit(1)
        else:
            print("Schema validation passed.")
            sys.exit(0)

    except FileNotFoundError:
        print(f"Error: File '{filename}' not found.")
        sys.exit(1)


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("Usage: python validate_schema.py <schema_file>")
        sys.exit(1)

    validate_schema(sys.argv[1])

How to Run This Example:

- Create a bad SQL file, bad_schema.sql, containing: CREATE TABLE users (name TEXT);

- Type: Validate bad_schema.sql.

- The agent does not guess. It invokes the script, which fails with exit code 1, and reports that the validation failed because the table 'users' is missing a primary key.
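Because validate_schema.py reports through sys.exit, testing its rules means spawning a process. If you want to unit-test the policies directly, one option is to move the checks into a pure function that returns the error list and keep the exit code in a thin command-line wrapper. The sketch below applies the same three checks with the same regular expressions; `find_schema_errors` and `main` are hypothetical names.

```python
import re
import sys
from typing import List

TABLE_RE = re.compile(r"CREATE TABLE\s+(?P<name>\w+)\s*\((?P<body>.*?)\);", re.DOTALL | re.IGNORECASE)


def find_schema_errors(sql: str) -> List[str]:
    """Apply the three schema policies to SQL text and return any violations."""
    errors = []
    if re.search(r"DROP TABLE", sql, re.IGNORECASE):
        errors.append("ERROR: 'DROP TABLE' statements are forbidden.")
    for match in TABLE_RE.finditer(sql):
        name, body = match.group("name"), match.group("body")
        if not re.match(r"^[a-z][a-z0-9_]*$", name):
            errors.append(f"ERROR: Table '{name}' must be snake_case.")
        if not re.search(r"\bid\b.*PRIMARY KEY", body, re.IGNORECASE):
            errors.append(f"ERROR: Table '{name}' is missing a primary key named 'id'.")
    return errors


def main(argv: List[str]) -> int:
    """Command-line wrapper: print results and return the exit code (0 pass, 1 fail)."""
    if len(argv) != 2:
        print("Usage: python validate_schema.py <schema_file>")
        return 1
    try:
        with open(argv[1]) as f:
            errors = find_schema_errors(f.read())
    except FileNotFoundError:
        print(f"Error: File '{argv[1]}' not found.")
        return 1
    for err in errors:
        print(err)
    if not errors:
        print("Schema validation passed.")
    return 1 if errors else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```

The script's observable behavior (messages and exit codes) stays the same, so the SKILL.md instructions do not need to change.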

Level 5: The Architect (adk-tool-scaffold)

This pattern covers most of the features available in Skills.

Complex tasks often require a sequence of operations that combine everything we've seen: creating files, following templates, and writing logic. Creating a new Tool for the ADK (Agent Development Kit) requires all of this.

We combine:

- Script (to handle the file creation/scaffolding)

- Template (to handle boilerplate in resources)

- An Example (to guide the logic generation).

adk-tool-scaffold/
├── SKILL.md
├── resources/
│   └── ToolTemplate.py.hbs (Jinja2 Template)
├── scripts/
│   └── scaffold_tool.py (Generator Script)
└── examples/
    └── WeatherTool.py (Reference Implementation)

The SKILL.md file is shown below. The files in the scripts, resources, and examples folders are in the skills repository, under the adk-tool-scaffold directory.

---

name: adk-tool-scaffold

description: Scaffolds a new custom Tool class for the Agent Development Kit (ADK).

---

# ADK Tool Scaffold Skill

This skill automates the creation of standard `BaseTool` implementations for the Agent Development Kit.

## Instructions

1. **Identify the Tool Name**:

   Extract the name of the tool the user wants to build (e.g., "StockPrice", "EmailSender").

2. **Review the Example**:

   Check `examples/WeatherTool.py` to understand the expected structure of an ADK tool (imports, inheritance, schema).

3. **Run the Scaffolder**:

   Execute the Python script to generate the initial file.

   `python scripts/scaffold_tool.py <ToolName>`

4. **Refine**:

   After generation, you must edit the file to:

   - Update the `execute` method with real logic.

   - Define the JSON schema in `get_schema`.

## Example Usage

User: "Create a tool to search Wikipedia."

Agent:

1. Runs `python scripts/scaffold_tool.py WikipediaSearch`

2. Edits `WikipediaSearchTool.py` to add the `requests` logic and `query` argument schema.

How to Run This Example:

- Type: Create a new ADK tool called StockPrice to fetch data from an API.

- Step 1 (Scaffolding): The agent runs the Python script. This instantly creates StockPriceTool.py with the correct class structure, imports, and class name StockPriceTool.

- Step 2 (Implementation): The agent "reads" the file it just made. It sees # TODO: Implement logic.

- Step 3 (Guidance): It is not sure how to define the JSON schema for the tool arguments, so it checks examples/WeatherTool.py.

- Completion: It edits the file to add requests.get(...) and defines the ticker argument in the schema, following the ADK style of the reference implementation.
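The repository's scaffold_tool.py renders resources/ToolTemplate.py.hbs and is not reproduced in this codelab. For a rough idea of what such a generator does, here is a hypothetical, dependency-free sketch that uses Python's string.Template in place of the template file. The generated method names (execute, get_schema) and the BaseTool base class follow the SKILL.md description, but their signatures here are placeholders; the real imports come from examples/WeatherTool.py, so the sketch leaves them as a TODO instead of guessing an ADK import path.

```python
import re
import sys
from pathlib import Path
from string import Template

# Hypothetical stand-in for resources/ToolTemplate.py.hbs.
TOOL_TEMPLATE = Template('''\
# TODO: add the BaseTool import used in examples/WeatherTool.py


class ${class_name}(BaseTool):
    """${tool_name} tool (scaffolded)."""

    def get_schema(self):
        # TODO: Define the JSON schema for the tool arguments.
        return {"type": "object", "properties": {}}

    def execute(self, **kwargs):
        # TODO: Implement logic.
        raise NotImplementedError
''')


def scaffold_tool(tool_name: str, out_dir: Path = Path(".")) -> Path:
    """Create <ToolName>Tool.py from the template and return its path."""
    if not re.fullmatch(r"[A-Z][A-Za-z0-9]*", tool_name):
        raise ValueError("Tool name must be PascalCase, e.g. StockPrice")
    class_name = f"{tool_name}Tool"
    target = out_dir / f"{class_name}.py"
    if target.exists():
        raise FileExistsError(f"{target} already exists; refusing to overwrite")
    target.write_text(TOOL_TEMPLATE.substitute(class_name=class_name, tool_name=tool_name))
    return target


if __name__ == "__main__":
    # Usage: python scripts/scaffold_tool.py StockPrice
    print(f"Created {scaffold_tool(sys.argv[1])}")
```

Keeping file creation in a script makes the boilerplate identical every time, which leaves the agent to spend its effort on the parts the SKILL.md asks it to refine.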

6. Congratulations

You have successfully completed the lab on Antigravity Skills and built the following Skills:

- Git commit formatter.

- License header adder.

- JSON to Pydantic.

- Database schema validator.

- ADK Tool scaffolding.

Agent Skills are a great way to get Antigravity to write code your way, follow your rules, and use your tools.

Reference docs

- Codelab: Getting Started with Google Antigravity

- Official Site: https://antigravity.google/

- Documentation: https://antigravity.google/docs

- Download: https://antigravity.google/download

- Antigravity Skills documentation: https://antigravity.google/docs/skills