1. 📖 Introduction

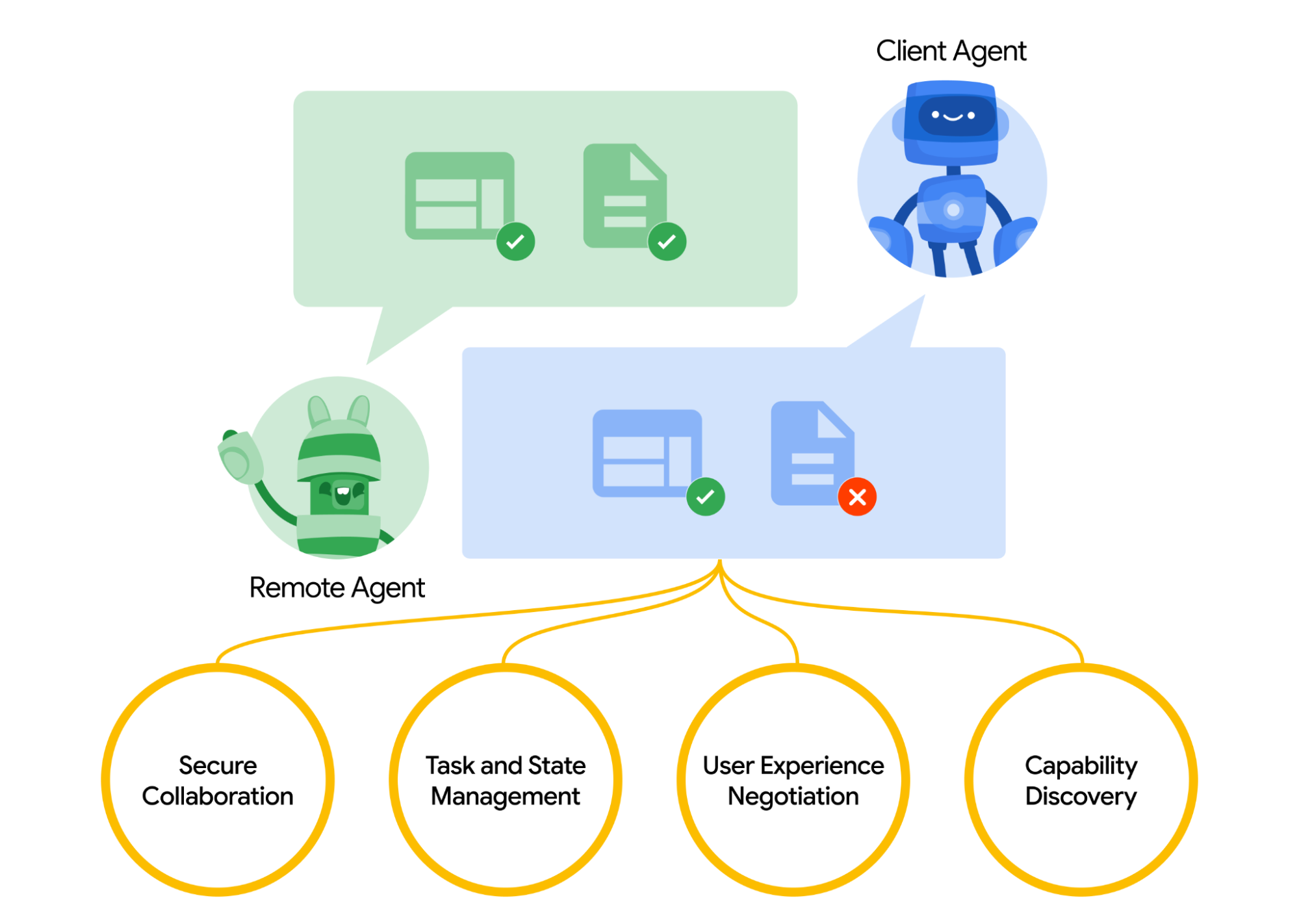

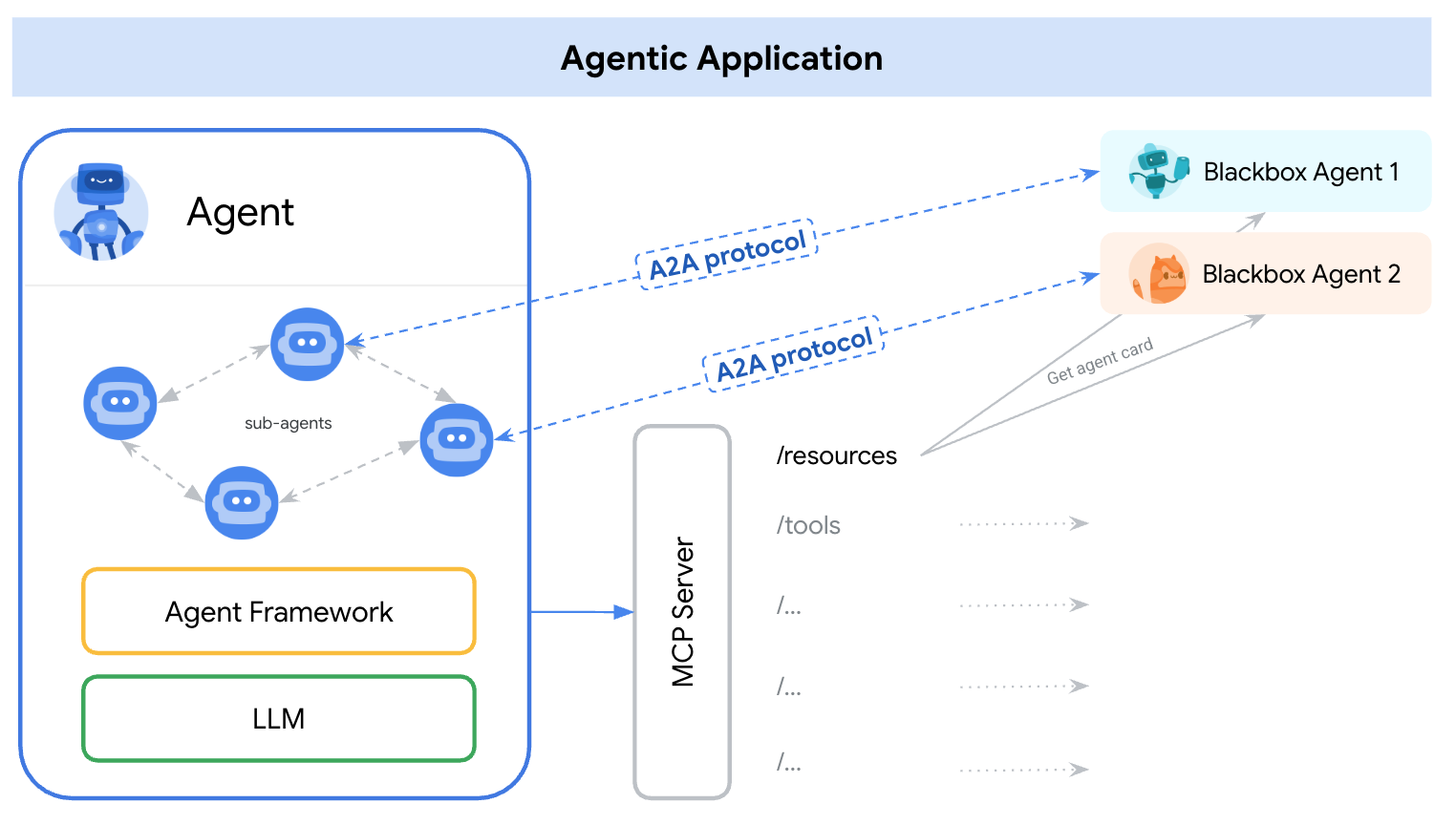

The Agent2Agent (A2A) protocol is designed to standardize communication between AI agents, particularly agents deployed in external systems. A similar standard already exists for tools: the Model Context Protocol (MCP), an emerging standard for connecting LLMs with data and resources. A2A complements MCP by addressing a different problem. While MCP focuses on lowering the complexity of connecting agents with tools and data, A2A focuses on enabling agents to collaborate in their natural modalities. It allows agents to communicate as agents (or as users) instead of as tools; for example, it enables back-and-forth communication when you want to order something.

Because A2A is positioned to complement MCP, the official documentation recommends that applications use MCP for tools and A2A for agents, which are represented by an AgentCard (we will discuss this later on). Frameworks can then use A2A to communicate with their users, remote agents, and other agents.

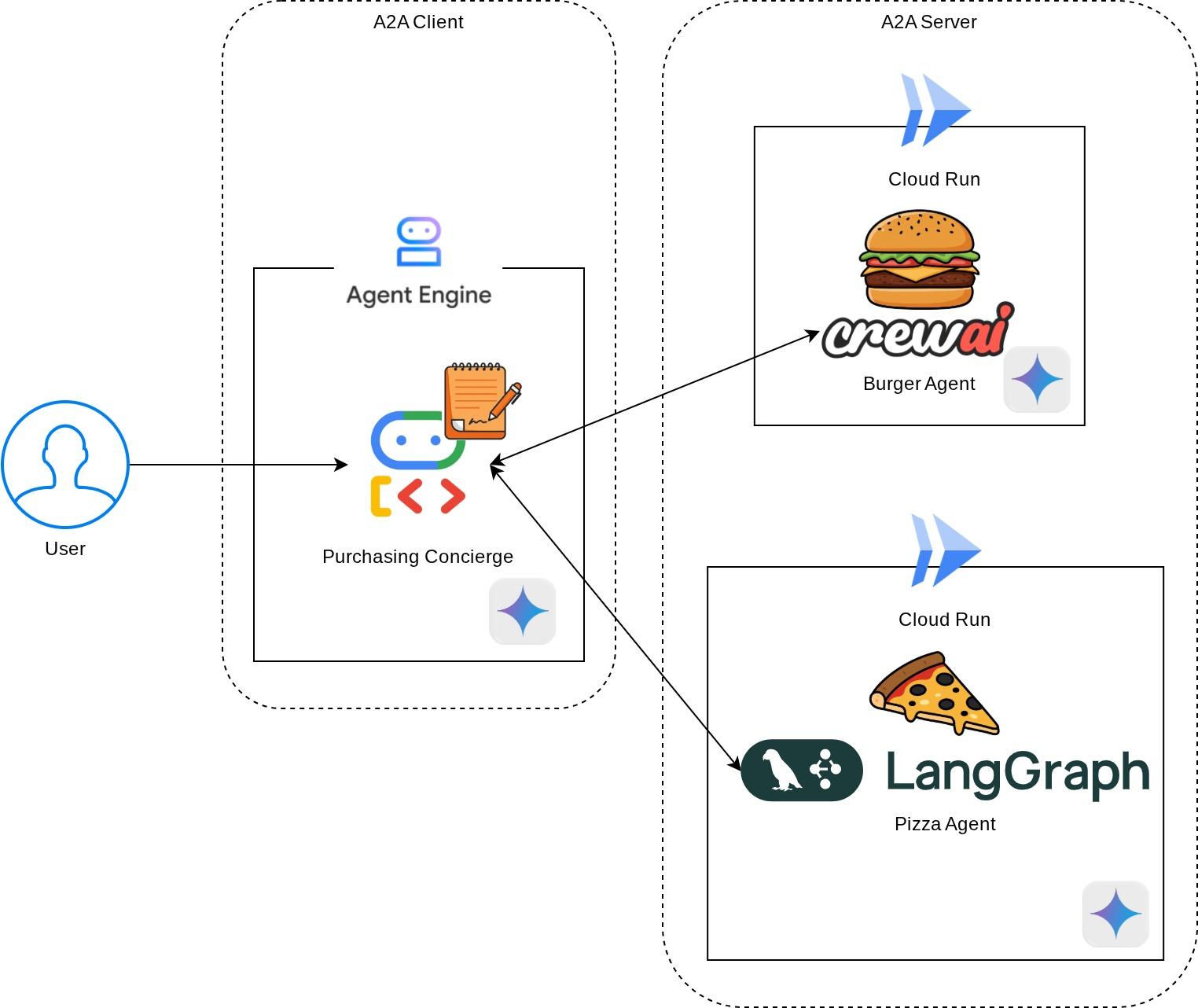

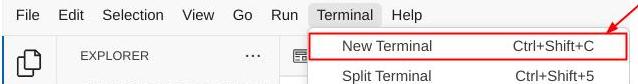

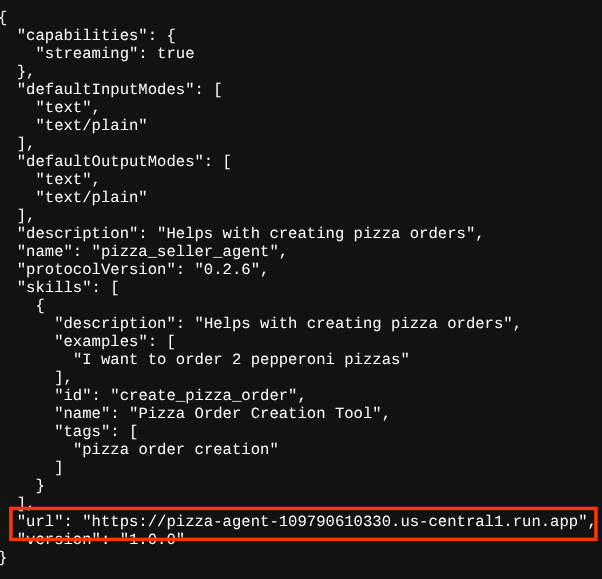

In this demo, we will start with an implementation of A2A using its Python SDK. We will explore a use case in which a personal purchasing concierge helps us communicate with burger and pizza seller agents to handle our order.

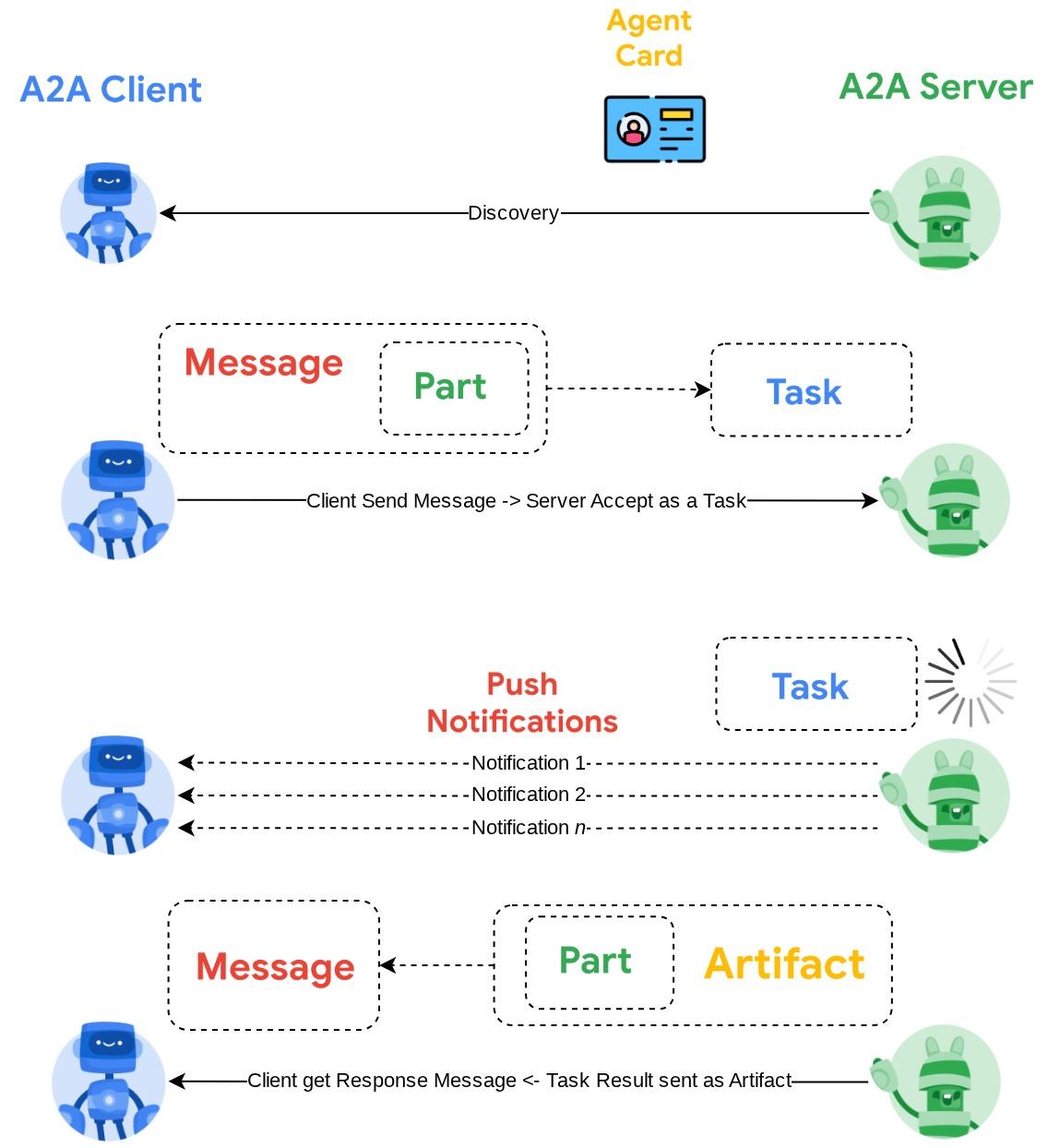

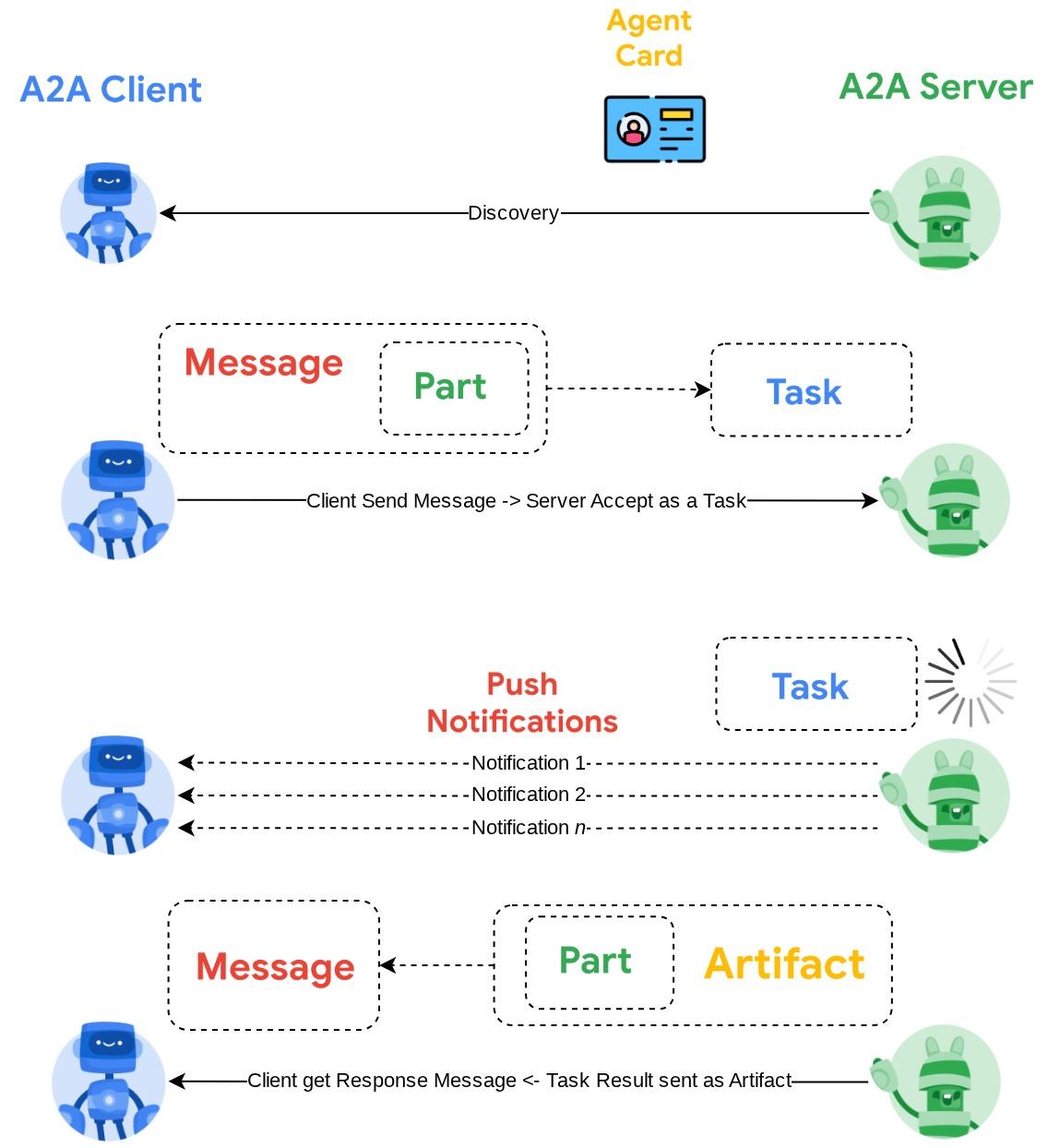

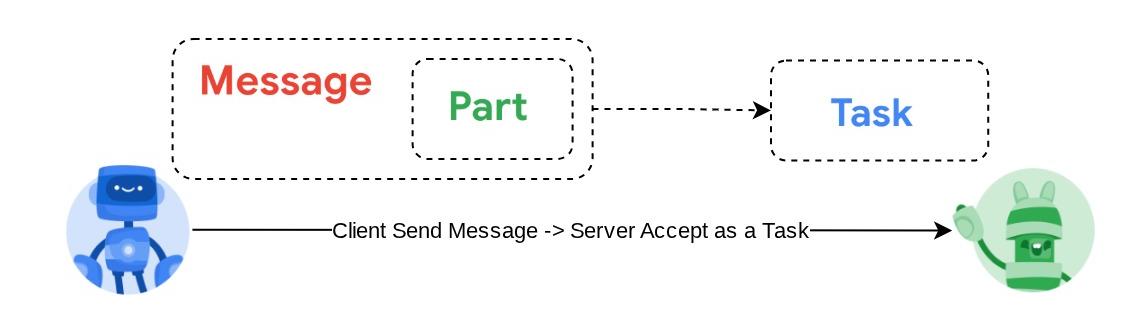

A2A follows a client-server model. Here is the typical A2A flow that you can expect in this tutorial:

- The A2A Client first performs discovery on every accessible A2A Server agent card and uses that information to build a connection client

- When required, the A2A Client sends a message to the A2A Server, and the server treats it as a Task to be completed. If a push notification receiver URL is configured on the A2A Client and supported by the A2A Server, the server can also publish the state of the task's progress to the receiving endpoint on the client

- After the task is finished, the A2A Server sends the response artifact to the A2A Client

Through the codelab, you will employ a step by step approach as follows:

- Prepare Google Cloud project

- Setup work directory for coding environment

- Deploy burger agent to Cloud Run

- Deploy pizza agent to Cloud Run

- Deploy purchasing concierge to Agent Engine

- Interact with the purchasing concierge via local interface

Architecture Overview

You will deploy the following service architecture

You will deploy 2 services that act as A2A servers: the Burger agent (backed by the CrewAI agent framework) and the Pizza agent (backed by the LangGraph agent framework). The user interacts directly only with the Purchasing concierge, which runs on the Agent Development Kit (ADK) framework and acts as the A2A client.

Each of these agents has its own environment and is deployed independently.

Prerequisites

- Comfortable working with Python

- An understanding of basic full-stack architecture using HTTP service

What you'll learn

- Core structure of A2A Server

- Core structure of A2A Client

- Deploying agent service to Cloud Run

- Deploying agent service to Agent Engine

- How an A2A Client connects to an A2A Server

- Request and Response structure on a non-streaming connection

What you'll need

- Chrome web browser

- A Gmail account

- A Cloud Project with billing account enabled

This codelab, designed for developers of all levels (including beginners), uses Python in its sample application. However, Python knowledge isn't required for understanding the concepts presented.

2. 🚀 Preparing Workshop Development Setup

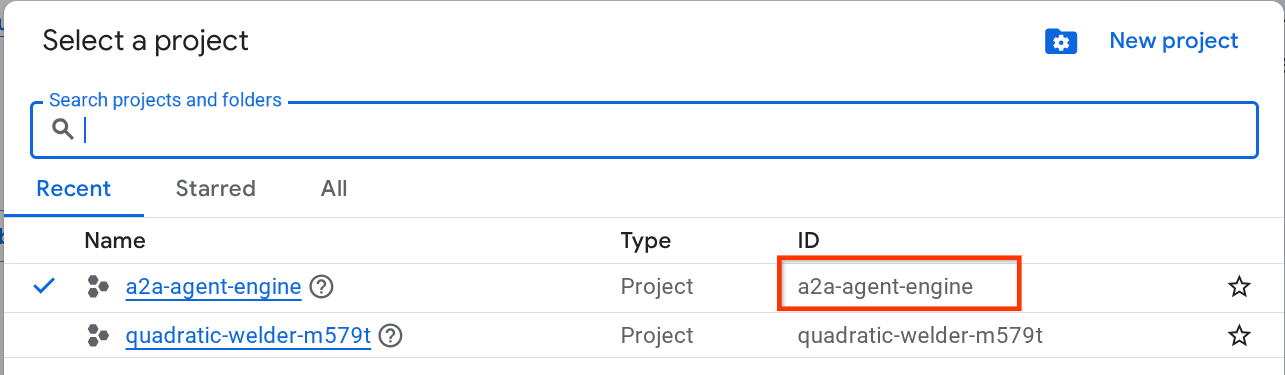

Step 1: Select Active Project in the Cloud Console

In the Google Cloud Console, on the project selector page, select or create a Google Cloud project (see top left section of your console)

Click on it, and you will see a list of all your projects, like this example.

The value that is indicated by the red box is the PROJECT ID and this value will be used throughout the tutorial.

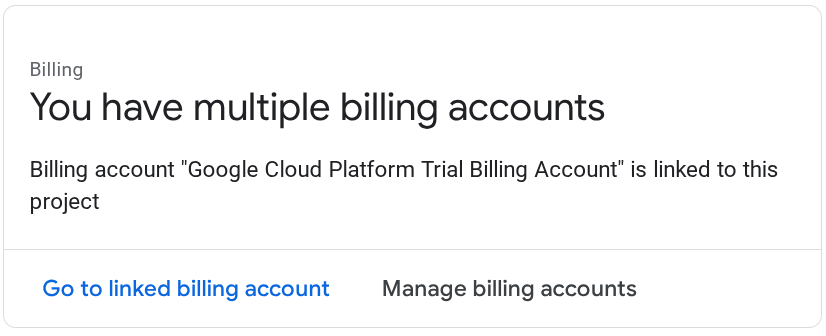

Make sure that billing is enabled for your Cloud project. To check this, click on the burger icon ☰ on your top left bar which shows the Navigation Menu and find the Billing menu

If you see the "Google Cloud Platform Trial Billing Account" is linked, your project is ready to be utilized for this tutorial. If not, go back to the start of this tutorial and redeem the billing account

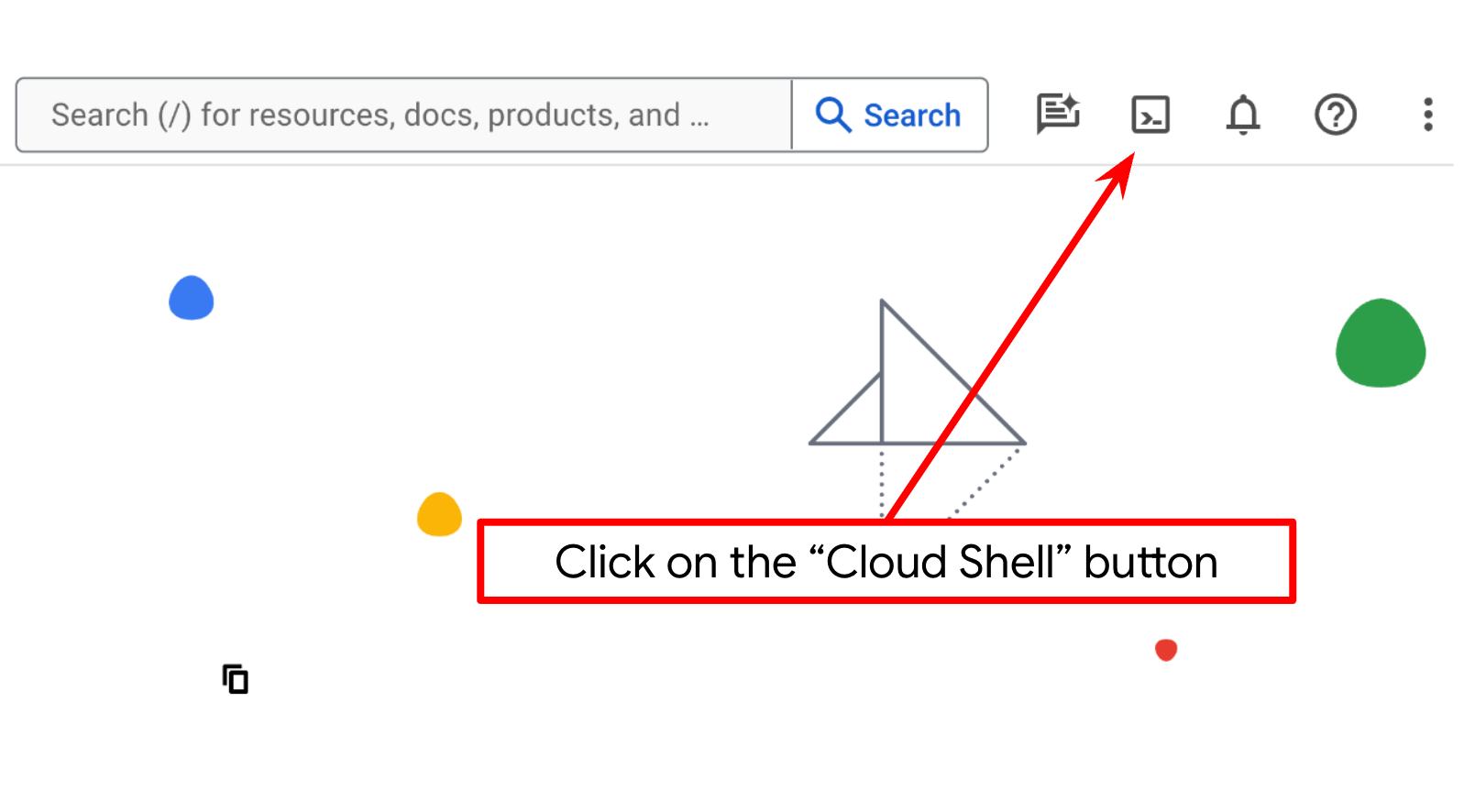

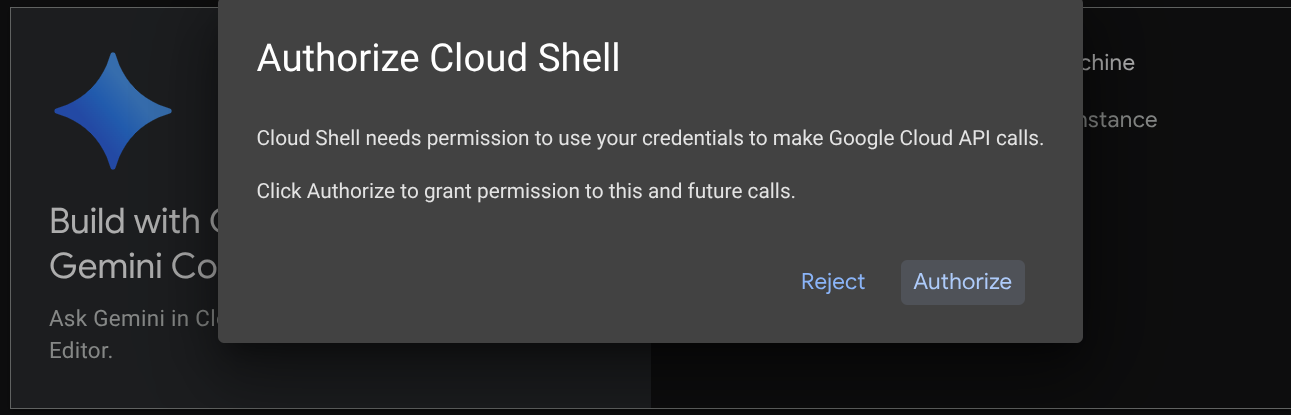

Step 2: Familiarize with Cloud Shell

You'll use Cloud Shell for most of this tutorial. Click Activate Cloud Shell at the top of the Google Cloud console. If it prompts you to authorize, click Authorize

Once connected to Cloud Shell, we need to check whether the shell (or terminal) is already authenticated with our account

gcloud auth list

If you see your personal Gmail account, as in the example output below, all is good

Credentialed Accounts

ACTIVE: *

ACCOUNT: alvinprayuda@gmail.com

To set the active account, run:

$ gcloud config set account `ACCOUNT`

If not, try refreshing your browser and make sure you click Authorize when prompted (the prompt might be interrupted by a connection issue)

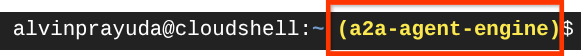

Next, we need to check whether the shell is already configured with the correct PROJECT ID. If there is a value inside ( ) before the $ sign in the terminal (in the screenshot, the value is "a2a-agent-engine"), that value is the project configured for your active shell session.

If the shown value is already correct, you can skip the next command. However, if it is incorrect or missing, run the following command

gcloud config set project <YOUR_PROJECT_ID>

Then, clone the template working directory for this codelab from GitHub by running the following command. It will create the working directory in the purchasing-concierge-a2a directory

git clone https://github.com/alphinside/purchasing-concierge-intro-a2a-codelab-starter.git purchasing-concierge-a2a

Step 3: Familiarize with Cloud Shell Editor and Setup Application Working Directory

Now, we can set up our code editor. We will use the Cloud Shell Editor for this

Click the Open Editor button to open the Cloud Shell Editor

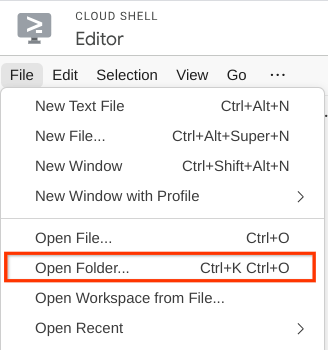

After that, go to the top section of the Cloud Shell Editor and click File->Open Folder, find your username directory, find the purchasing-concierge-a2a directory inside it, and then click the OK button. This makes the chosen directory the main working directory. In this example, the username is alvinprayuda, hence the directory path shown below

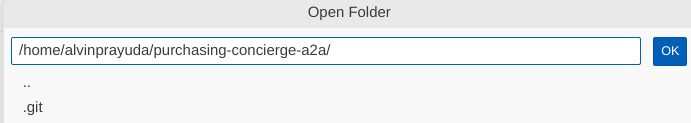

Now, your Cloud Shell Editor should look like this

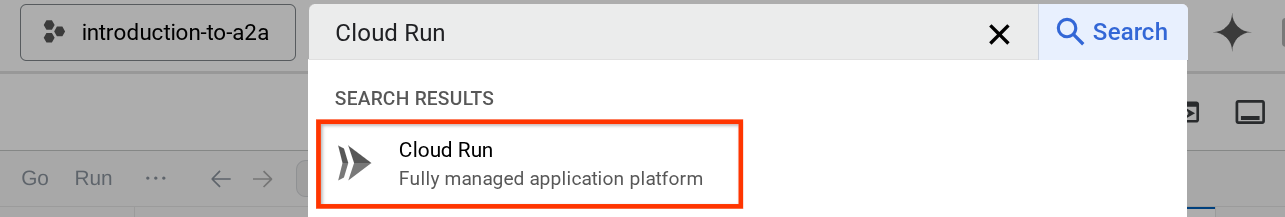

Now, open the terminal for the editor. You can do this by clicking Terminal -> New Terminal on the menu bar, or by pressing Ctrl + Shift + C. A terminal window will open at the bottom of the browser

Your active terminal should now be inside the purchasing-concierge-a2a working directory. We will use Python 3.12 in this codelab, together with the uv Python project manager, which removes the need to create and manage the Python version and virtual environment by hand. The uv package is preinstalled on Cloud Shell.

Run this command to install the required dependencies into the virtual environment in the .venv directory

uv sync --frozen

Check the pyproject.toml to see the declared dependencies for this tutorial which are a2a-sdk, google-adk, and gradio.

Now, we will need to enable the required APIs via the command shown below. This could take a while.

gcloud services enable aiplatform.googleapis.com \

run.googleapis.com \

cloudbuild.googleapis.com \

cloudresourcemanager.googleapis.com

On successful execution of the command, you should see a message similar to the one shown below:

Operation "operations/..." finished successfully.

3. 🚀 Deploying A2A Server Remote Seller Agents to Cloud Run

In this step, we will deploy the two remote seller agents marked by the red box. The burger agent is powered by the CrewAI agent framework and the pizza agent is powered by the LangGraph agent framework

4. 🚀 Deploying Burger Seller Agent - A2A Server

The burger agent source code is under the remote_seller_agents/burger_agent directory.

The files under the remote_seller_agents/burger_agent directory are already sufficient to deploy our agent to Cloud Run so that it is accessible as a service. Run the following command to deploy it

gcloud run deploy burger-agent \

--source remote_seller_agents/burger_agent \

--port=8080 \

--allow-unauthenticated \

--min 1 \

--region us-central1 \

--update-env-vars GOOGLE_CLOUD_LOCATION=us-central1 \

--update-env-vars GOOGLE_CLOUD_PROJECT={your-project-id}

If you are prompted that a container repository will be created for deploying from source, answer Y. After successful deployment it will show a log like this.

Service [burger-agent] revision [burger-agent-xxxxx-xxx] has been deployed and is serving 100 percent of traffic. Service URL: https://burger-agent-xxxxxxxxx.us-central1.run.app

The xxxx part here will be a unique identifier when we deploy the service.

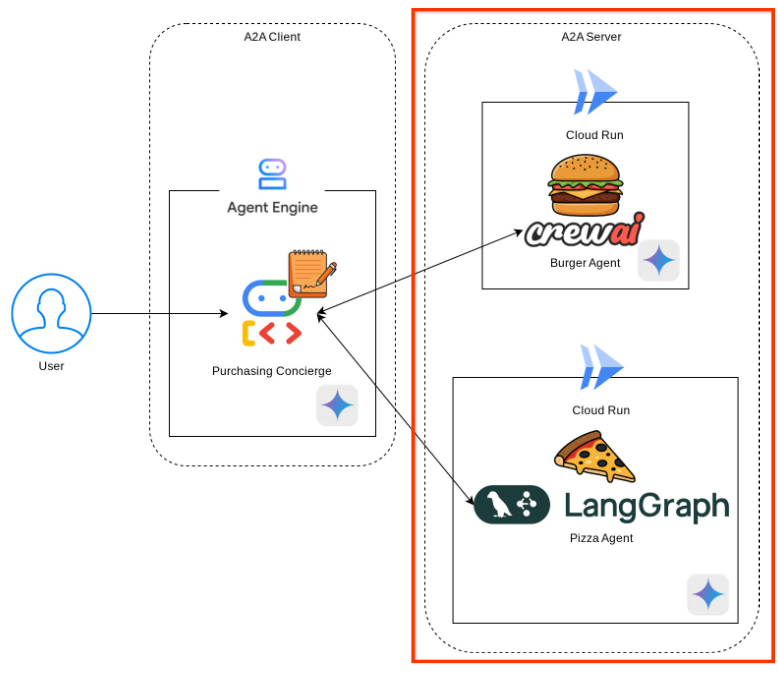

Open a new browser tab and go to the https://burger-agent-xxxxxxxxx.us-central1.run.app/.well-known/agent.json route of the deployed burger agent service. This is the URL for accessing the deployed A2A server's agent card.

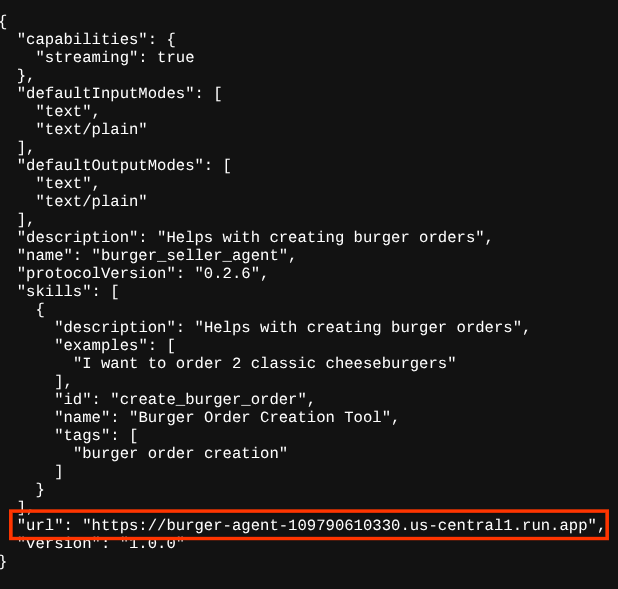

If the deployment succeeded, you will see a response like the one shown below in your browser when accessing the agent card

This is the burger agent card information that should be accessible for discovery purposes.

Notice that the url value is still set to http://0.0.0.0:8080/ here. This url value is the main piece of information an A2A Client uses to send messages from the outside world, and it is not configured properly yet.

We need to update this value to the URL of our burger agent service by adding an additional environment variable HOST_OVERRIDE.
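To make the problem concrete, here is a minimal sketch of a check that a client could run on a fetched agent card. The helper name `card_url_is_reachable_from_outside` and the list of local-only hosts are our own assumptions for illustration; they are not part of the A2A SDK.

```python
from urllib.parse import urlparse

# Hosts that only make sense inside the container or on the local machine.
LOCAL_ONLY_HOSTS = {"0.0.0.0", "127.0.0.1", "localhost"}


def card_url_is_reachable_from_outside(card: dict) -> bool:
    """Return False when the card's `url` points at a local-only address."""
    host = urlparse(card.get("url", "")).hostname
    return host is not None and host not in LOCAL_ONLY_HOSTS


# The card as served right after the first deployment.
default_card = {"name": "burger_seller_agent", "url": "http://0.0.0.0:8080/"}
print(card_url_is_reachable_from_outside(default_card))  # False
```

A card that fails this check can still be discovered, but any client that trusts its `url` field would send messages to an address that does not exist from its point of view.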

Updating the Burger Agent URL Value on Agent Card via Environment Variable

To add HOST_OVERRIDE to burger agent service, do the following steps

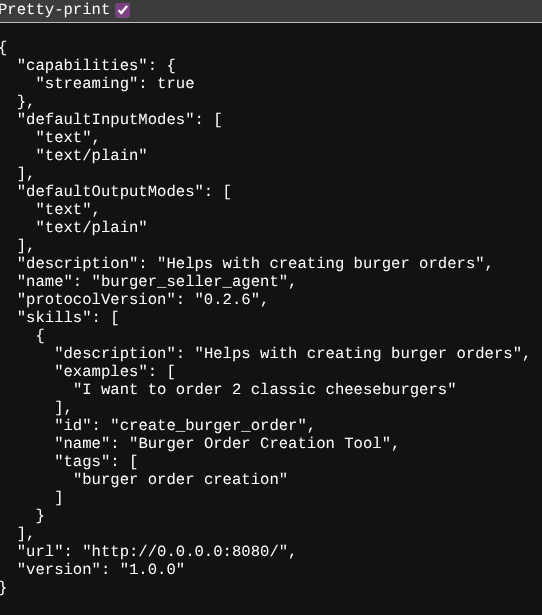

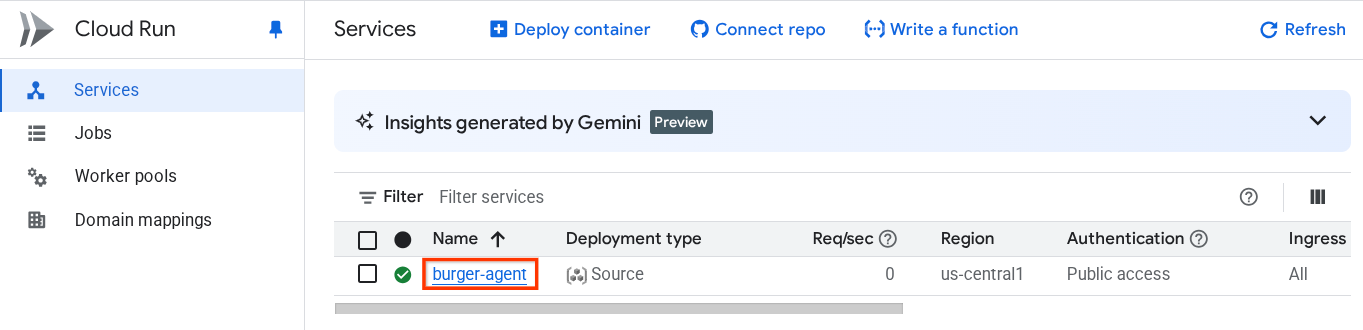

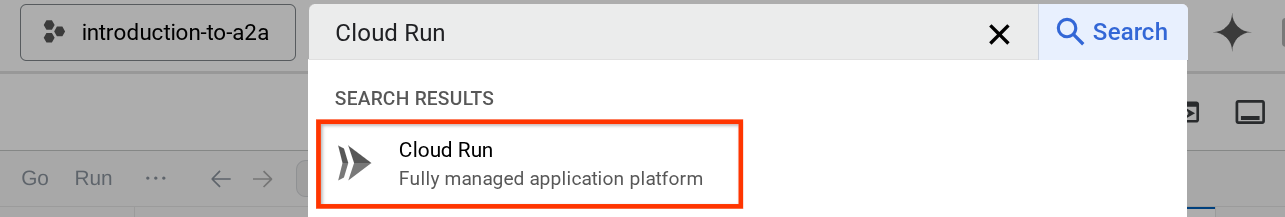

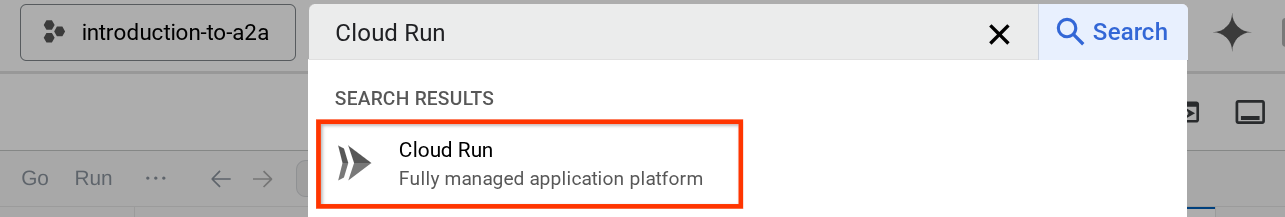

- Search for Cloud Run in the search bar at the top of your Cloud console

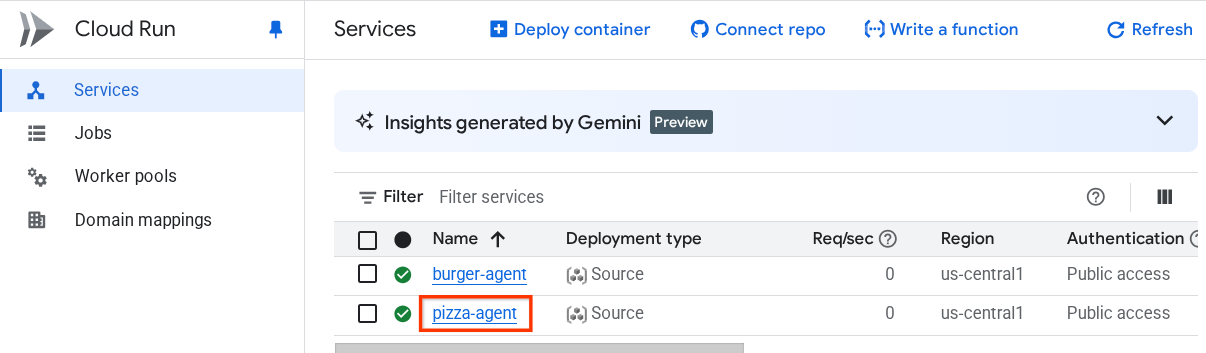

- Click the previously deployed burger-agent Cloud Run service

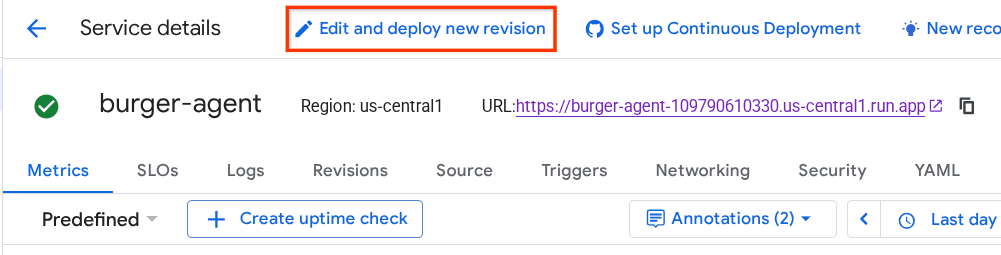

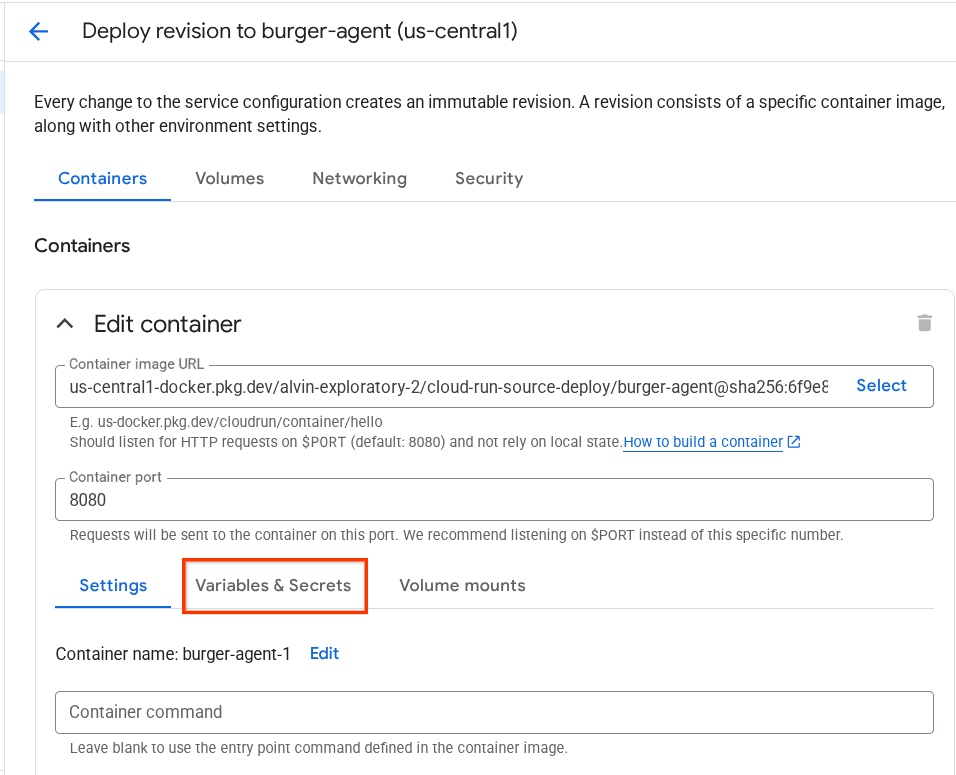

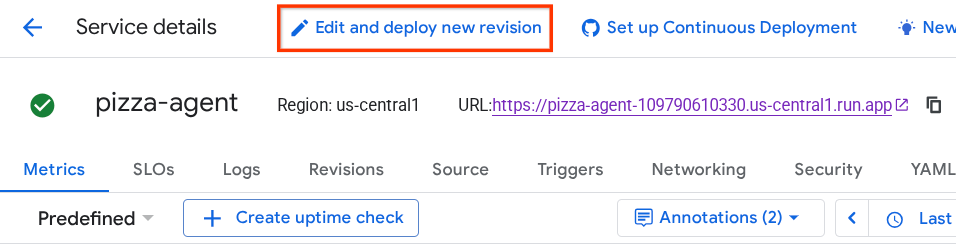

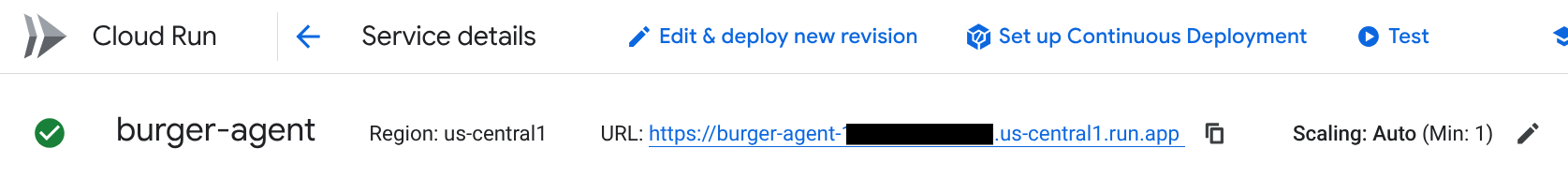

- Copy the burger-agent service URL, then click Edit and deploy new revision

- Then, click the Variables & Secrets section

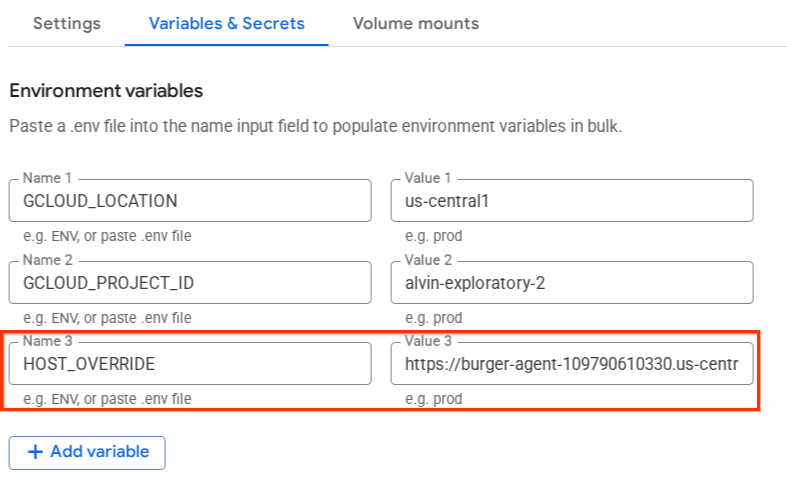

- After that, click Add variable and set HOST_OVERRIDE to the service URL (the one with the https://burger-agent-xxxxxxxxx.us-central1.run.app pattern)

- Finally, click the deploy button to redeploy your service

When you access the burger-agent agent card again in the browser https://burger-agent-xxxxxxxxx.us-central1.run.app/.well-known/agent.json , the url value will already be properly configured

5. 🚀 Deploying Pizza Seller Agent - A2A Server

Similarly, the pizza agent source code is under the remote_seller_agents/pizza_agent directory.

As in the previous burger-agent deployment step, the files under the remote_seller_agents/pizza_agent directory are already sufficient to deploy our agent to Cloud Run so that it is accessible as a service. Run the following command to deploy it

gcloud run deploy pizza-agent \

--source remote_seller_agents/pizza_agent \

--port=8080 \

--allow-unauthenticated \

--min 1 \

--region us-central1 \

--update-env-vars GOOGLE_CLOUD_LOCATION=us-central1 \

--update-env-vars GOOGLE_CLOUD_PROJECT={your-project-id}

After successful deployment it will show a log like this.

Service [pizza-agent] revision [pizza-agent-xxxxx-xxx] has been deployed and is serving 100 percent of traffic. Service URL: https://pizza-agent-xxxxxxxxx.us-central1.run.app

The xxxx part here will be a unique identifier when we deploy the service.

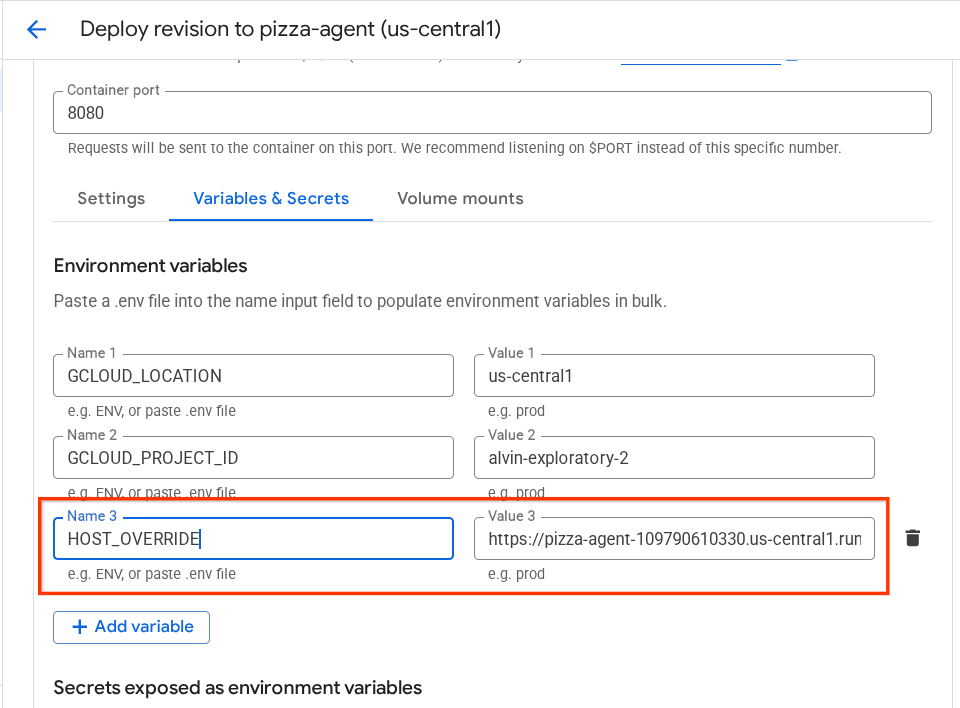

As with the burger agent, when you go to the https://pizza-agent-xxxxxxxxx.us-central1.run.app/.well-known/agent.json route of the deployed pizza agent service in the browser to access the A2A server agent card, the url value on the pizza agent card is not properly configured yet. We also need to add HOST_OVERRIDE to its environment variables

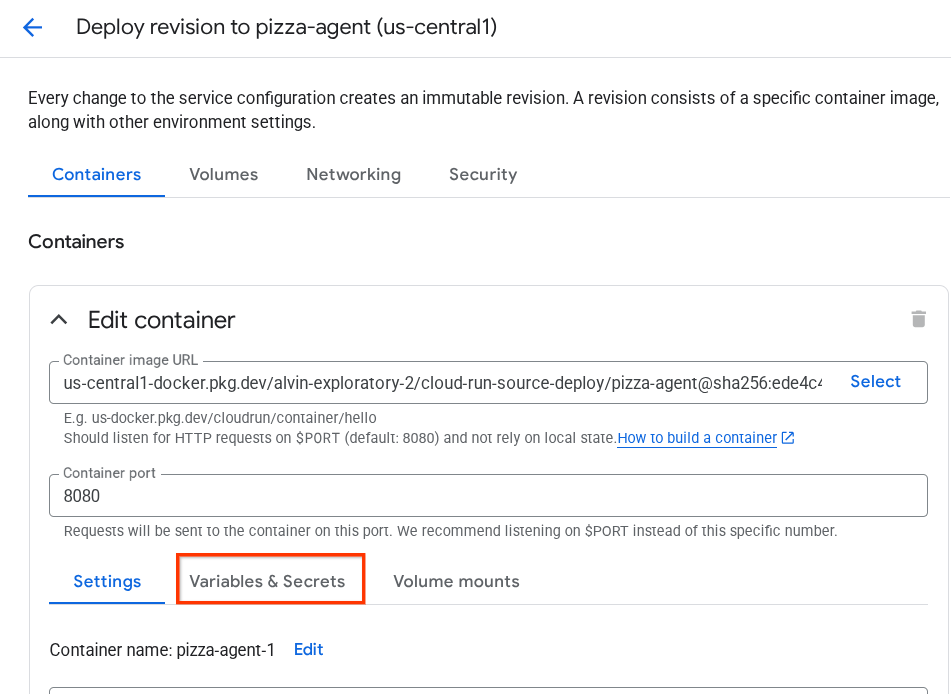

Updating the Pizza Agent URL Value on Agent Card via Environment Variable

To add HOST_OVERRIDE to pizza agent service, do the following steps

- Search for Cloud Run in the search bar at the top of your Cloud console

- Click the previously deployed pizza-agent Cloud Run service

- Click Edit and deploy new revision

- Copy the pizza-agent service URL, then click the Variables & Secrets section

- After that, click Add variable and set HOST_OVERRIDE to the service URL (the one with the https://pizza-agent-xxxxxxxxx.us-central1.run.app pattern)

- Finally, click the deploy button to redeploy your service

Now, when you access the pizza-agent agent card again in the browser https://pizza-agent-xxxxxxxxx.us-central1.run.app/.well-known/agent.json, the url value will already be properly configured

At this point, we have successfully deployed both the burger and pizza services to Cloud Run.

6. 🚀 Deploying the Purchasing Concierge - A2A Client to Agent Engine

In this step, we will deploy the purchasing concierge agent. This agent is the one that we will interact with.

The source code of our purchasing concierge agent is under the purchasing_concierge directory. The agent initialization can be inspected on the purchasing_concierge/purchasing_agent.py script.

Follow these steps to deploy it:

- First, we need to create our staging storage in Cloud Storage

gcloud storage buckets create gs://purchasing-concierge-{your-project-id} --location=us-central1

- Now, we need to prepare the .env file. Let's copy .env.example into a .env file

cp .env.example .env

- Now, open the .env file and you will see the following content

GOOGLE_GENAI_USE_VERTEXAI=TRUE

GOOGLE_CLOUD_PROJECT={your-project-id}

GOOGLE_CLOUD_LOCATION=us-central1

STAGING_BUCKET=gs://purchasing-concierge-{your-project-id}

PIZZA_SELLER_AGENT_URL={your-pizza-agent-url}

BURGER_SELLER_AGENT_URL={your-burger-agent-url}

AGENT_ENGINE_RESOURCE_NAME={your-agent-engine-resource-name}

This agent will communicate with the burger and pizza agents, so we need to provide the correct URLs for both of them. Update PIZZA_SELLER_AGENT_URL and BURGER_SELLER_AGENT_URL with the Cloud Run URLs from the previous steps.

If you forget about this, visit the Cloud Run console. Type "Cloud Run" on the search bar on top of your console and right click on the Cloud Run icon to open it in a new tab

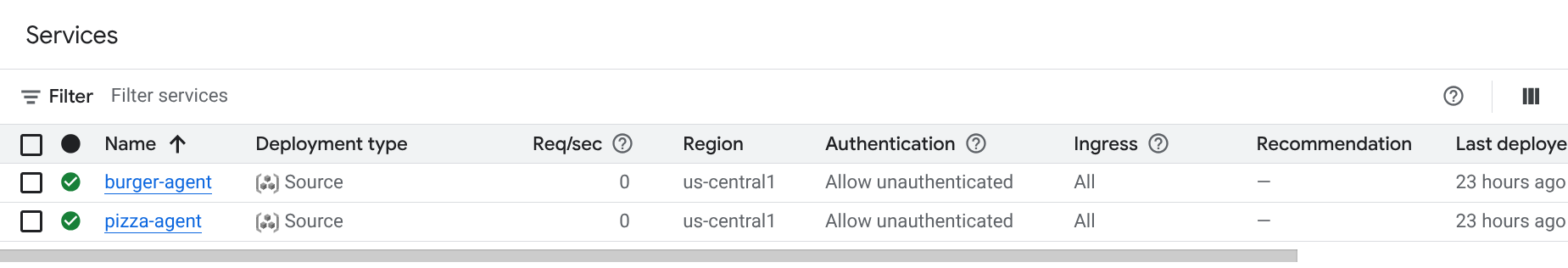

You should see our previously deployed remote seller agent services, as shown below

To see the public URL of a service, click on it and you will be taken to the Service details page. The URL is in the top area, right beside the Region information

The final environment variable should look similar to this one

GOOGLE_GENAI_USE_VERTEXAI=TRUE

GOOGLE_CLOUD_PROJECT={your-project-id}

GOOGLE_CLOUD_LOCATION=us-central1

STAGING_BUCKET=gs://purchasing-concierge-{your-project-id}

PIZZA_SELLER_AGENT_URL=https://pizza-agent-xxxxx.us-central1.run.app

BURGER_SELLER_AGENT_URL=https://burger-agent-xxxxx.us-central1.run.app

AGENT_ENGINE_RESOURCE_NAME={your-agent-engine-resource-name}

- Now, we are ready to deploy our purchasing concierge agent. We will deploy it to Agent Engine, and the deployment code is inside the deploy_to_agent_engine.py script.

We can deploy it by running the script:

uv run deploy_to_agent_engine.py

After successful deployment it will show a log like this. It will show you the Agent Engine resource name as "projects/xxxx/locations/us-central1/reasoningEngines/yyyy"

AgentEngine created. Resource name: projects/xxxx/locations/us-central1/reasoningEngines/yyyy

To use this AgentEngine in another session:

agent_engine = vertexai.agent_engines.get('projects/xxxx/locations/us-central1/reasoningEngines/yyyy')

Deployed remote app resource: projects/xxxx/locations/us-central1/reasoningEngines/yyyy

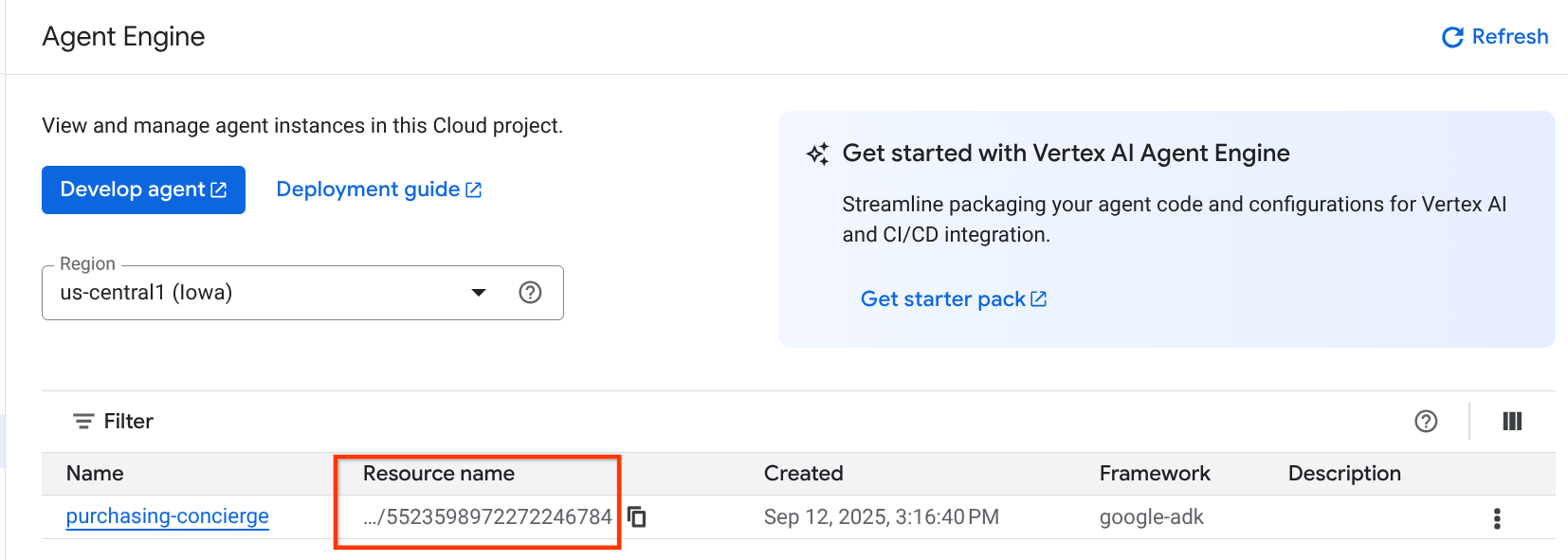

When we inspect the Agent Engine dashboard (search for "agent engine" in the search bar), it will show our previous deployment

You can also see the Agent Engine resource name displayed there. We can use this resource name to test the deployment

After that, update the AGENT_ENGINE_RESOURCE_NAME in the .env file with this value. Make sure you provide the correct Agent Engine resource name. Your .env file should look like this:

GOOGLE_GENAI_USE_VERTEXAI=TRUE

GOOGLE_CLOUD_PROJECT={your-project-id}

GOOGLE_CLOUD_LOCATION=us-central1

STAGING_BUCKET=gs://purchasing-concierge-{your-project-id}

PIZZA_SELLER_AGENT_URL=https://pizza-agent-xxxxx.us-central1.run.app

BURGER_SELLER_AGENT_URL=https://burger-agent-xxxxx.us-central1.run.app

AGENT_ENGINE_RESOURCE_NAME=projects/xxxx/locations/us-central1/reasoningEngines/yyyy
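Since a leftover placeholder in .env is an easy mistake to make, a small sanity check can help before moving on. The sketch below is a hypothetical helper (`find_env_problems` is not part of the starter repository); it assumes the simple KEY=VALUE format shown above and the resource name pattern printed by the deployment script.

```python
import re

# Shape of the resource name printed after deployment.
RESOURCE_NAME_PATTERN = re.compile(
    r"^projects/[^/]+/locations/[^/]+/reasoningEngines/[^/]+$"
)


def find_env_problems(env_text: str) -> list[str]:
    """Return human-readable problems found in the text of a .env file."""
    values = {}
    for line in env_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()

    problems = []
    for key, value in values.items():
        # Braces mean a {your-...} placeholder was never replaced.
        if "{" in value or "}" in value:
            problems.append(f"{key} still contains a placeholder: {value}")
    resource_name = values.get("AGENT_ENGINE_RESOURCE_NAME", "")
    if not RESOURCE_NAME_PATTERN.match(resource_name):
        problems.append("AGENT_ENGINE_RESOURCE_NAME does not look like a resource name")
    return problems


sample = """\
PIZZA_SELLER_AGENT_URL=https://pizza-agent-xxxxx.us-central1.run.app
AGENT_ENGINE_RESOURCE_NAME={your-agent-engine-resource-name}
"""
for problem in find_env_problems(sample):
    print(problem)
```

To check your real file, you could pass it the result of `open(".env").read()`; an empty list means no problem was detected by these two checks.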

Testing the Deployed Agent on Agent Engine

You can interact with Agent Engine via a curl command or the SDK. To check whether the agent was deployed successfully, run the following test_agent_engine.sh script

bash test_agent_engine.sh

If you inspect the script, you will see that we ask the agent "List available burger menu please"

If successful, it will show several response events streamed to your console like this

{

"content": {

"parts": [

{

"text": "Here is our burger menu:\n- Classic Cheeseburger: IDR 85K\n- Double Cheeseburger: IDR 110K\n- Spicy Chicken Burger: IDR 80K\n- Spicy Cajun Burger: IDR 85K"

}

],

"role": "model"

},

"usage_metadata": {

"candidates_token_count": 51,

"candidates_tokens_details": [

{

"modality": "TEXT",

"token_count": 51

}

],

"prompt_token_count": 907,

"prompt_tokens_details": [

{

"modality": "TEXT",

"token_count": 907

}

],

"total_token_count": 958,

"traffic_type": "ON_DEMAND"

},

"invocation_id": "e-14679918-af68-45f1-b942-cf014368a733",

"author": "purchasing_agent",

"actions": {

"state_delta": {},

"artifact_delta": {},

"requested_auth_configs": {}

},

"id": "dbe7fc43-b82a-4f3e-82aa-dd97afa8f15b",

"timestamp": 1754287348.941454

}
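If you want to pull just the answer text out of such events, a helper along the following lines is enough. This is a minimal sketch that assumes each event has the shape shown above (`content.parts[].text`); `extract_text` is our own name, and events without text parts (for example tool calls) simply yield an empty string.

```python
import json


def extract_text(event: dict) -> str:
    """Concatenate every text part found in one response event."""
    parts = event.get("content", {}).get("parts", [])
    return "".join(part["text"] for part in parts if "text" in part)


# A trimmed-down event with the same shape as the output above.
raw_event = (
    '{"content": {"parts": [{"text": "Here is our burger menu:"}], "role": "model"},'
    ' "author": "purchasing_agent"}'
)
print(extract_text(json.loads(raw_event)))
```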

We will try the UI in the next step, and afterwards discuss the core components and typical flow of A2A

7. 🚀 Integration Testing and Payload Inspection

Now let's inspect the interaction between our purchasing concierge and the remote agents using a web UI. Run the following command to start a Gradio app. Running this app requires the .env file to be filled in correctly

uv run purchasing_concierge_ui.py

It will show the following output if it succeeds

* Running on local URL: http://0.0.0.0:8080

* To create a public link, set `share=True` in `launch()`.

Then, Ctrl + click the http://0.0.0.0:8080 URL in the terminal, or click the web preview button, to open the web UI

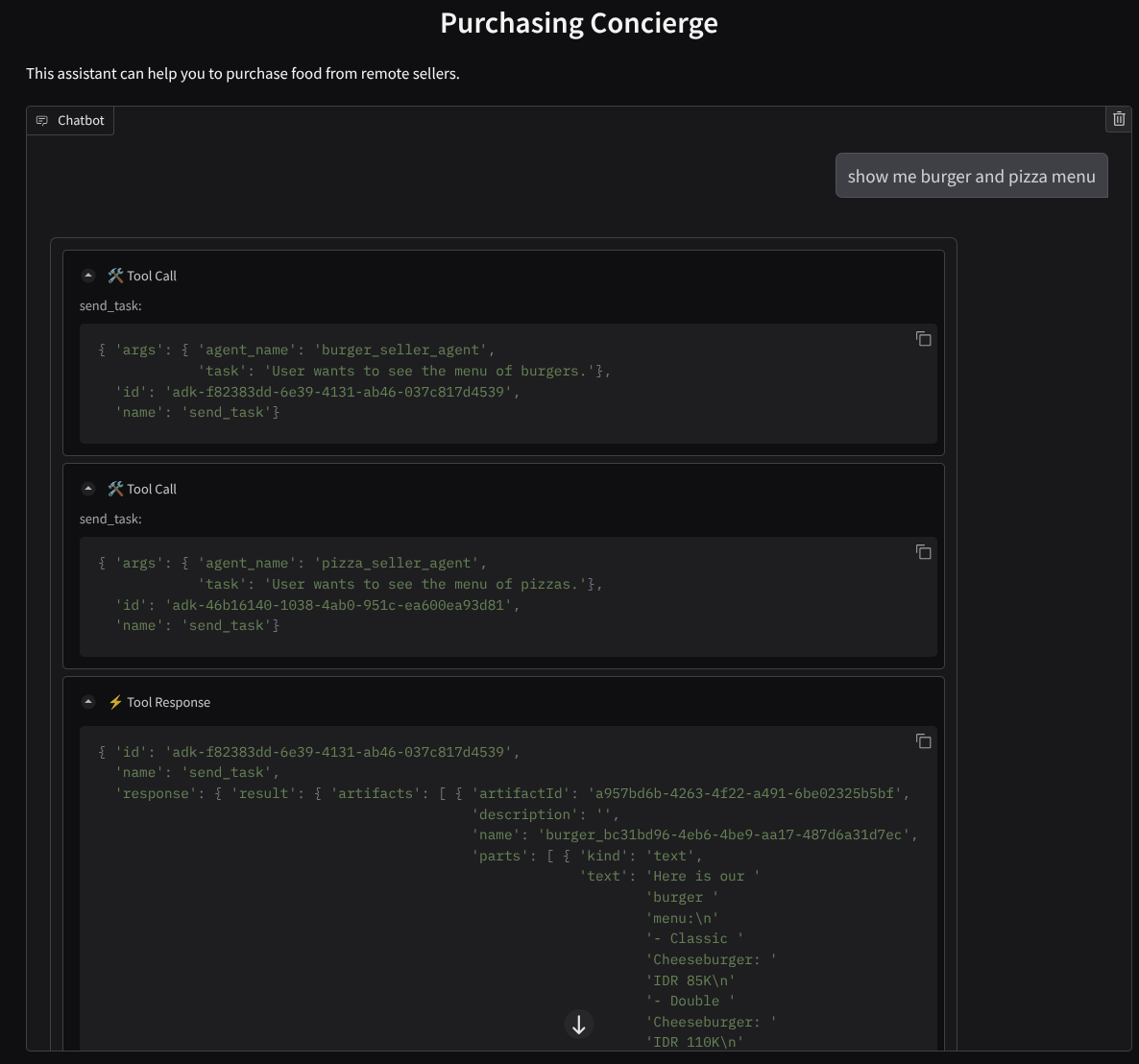

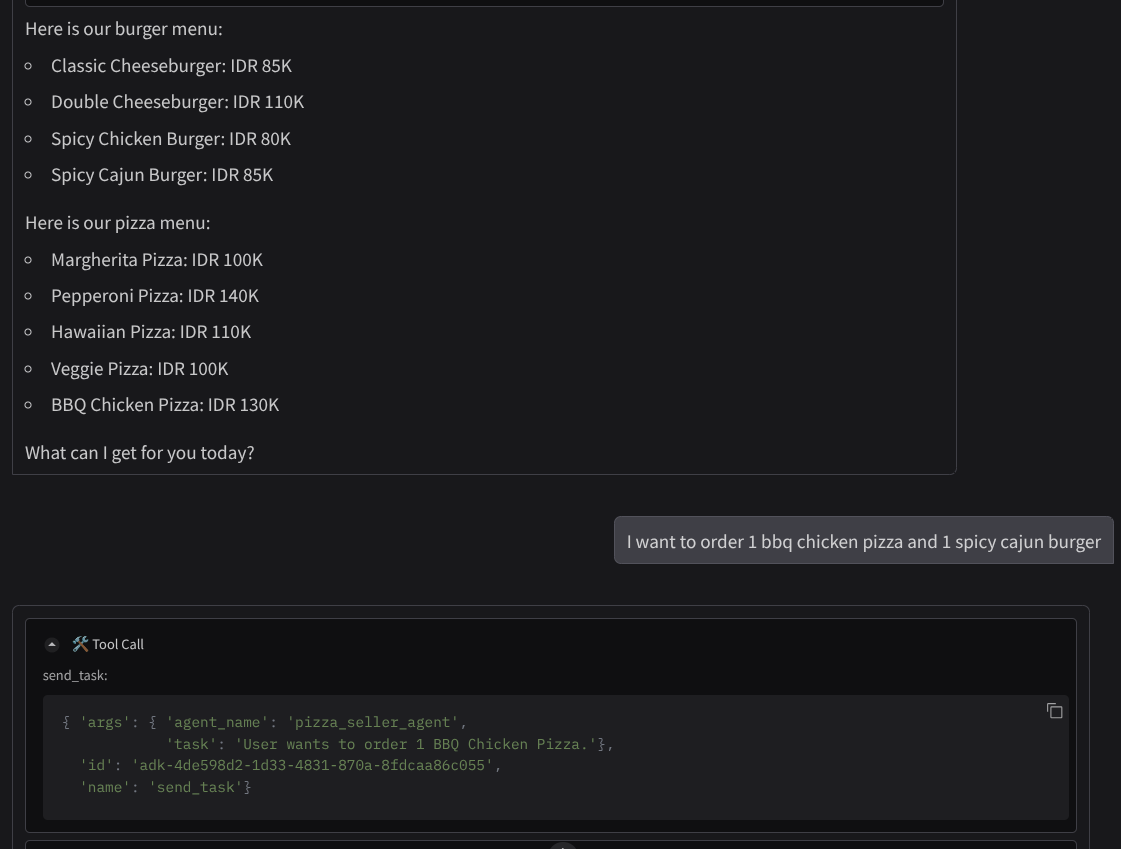

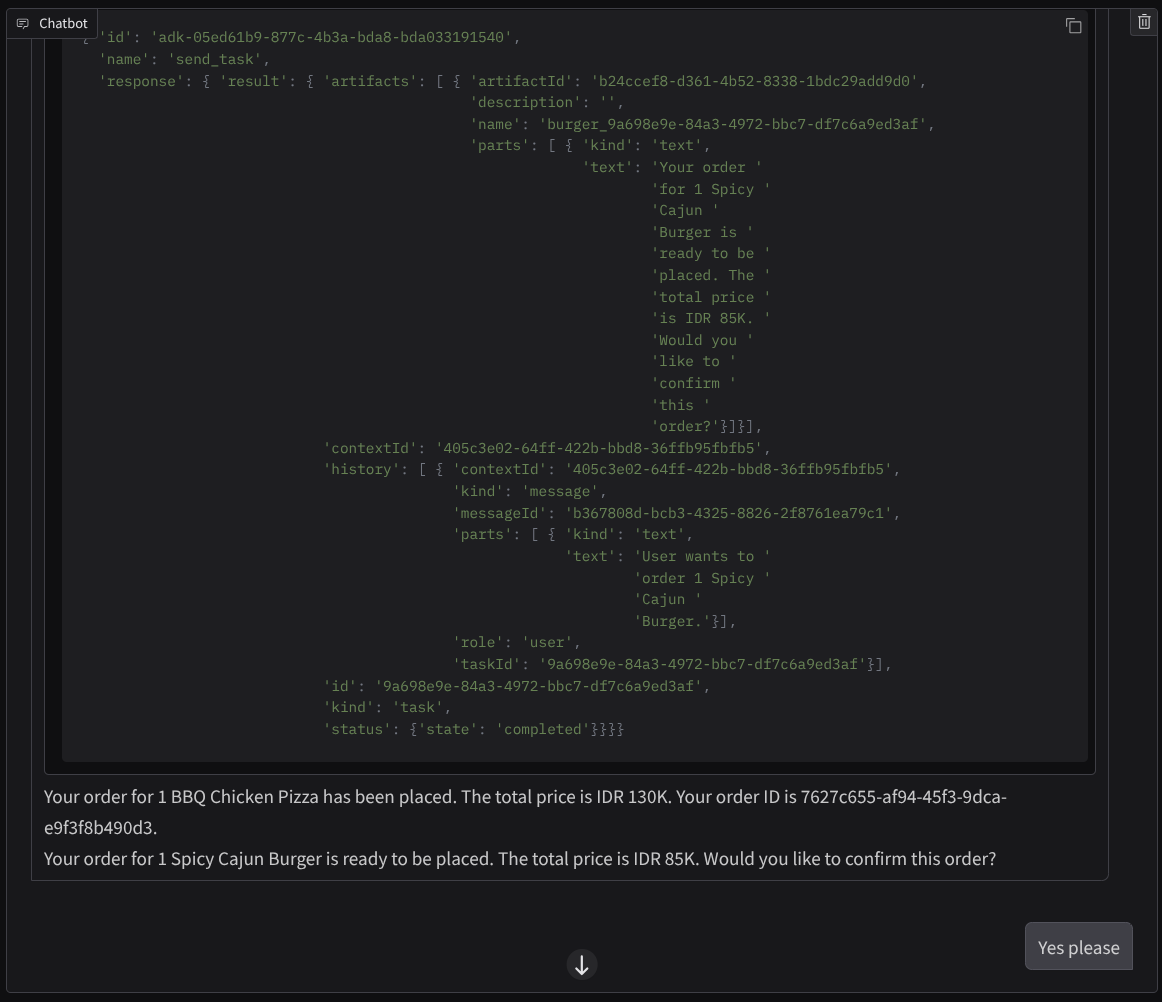

Try to have a conversation like this :

- Show me burger and pizza menu

- I want to order 1 bbq chicken pizza and 1 spicy cajun burger

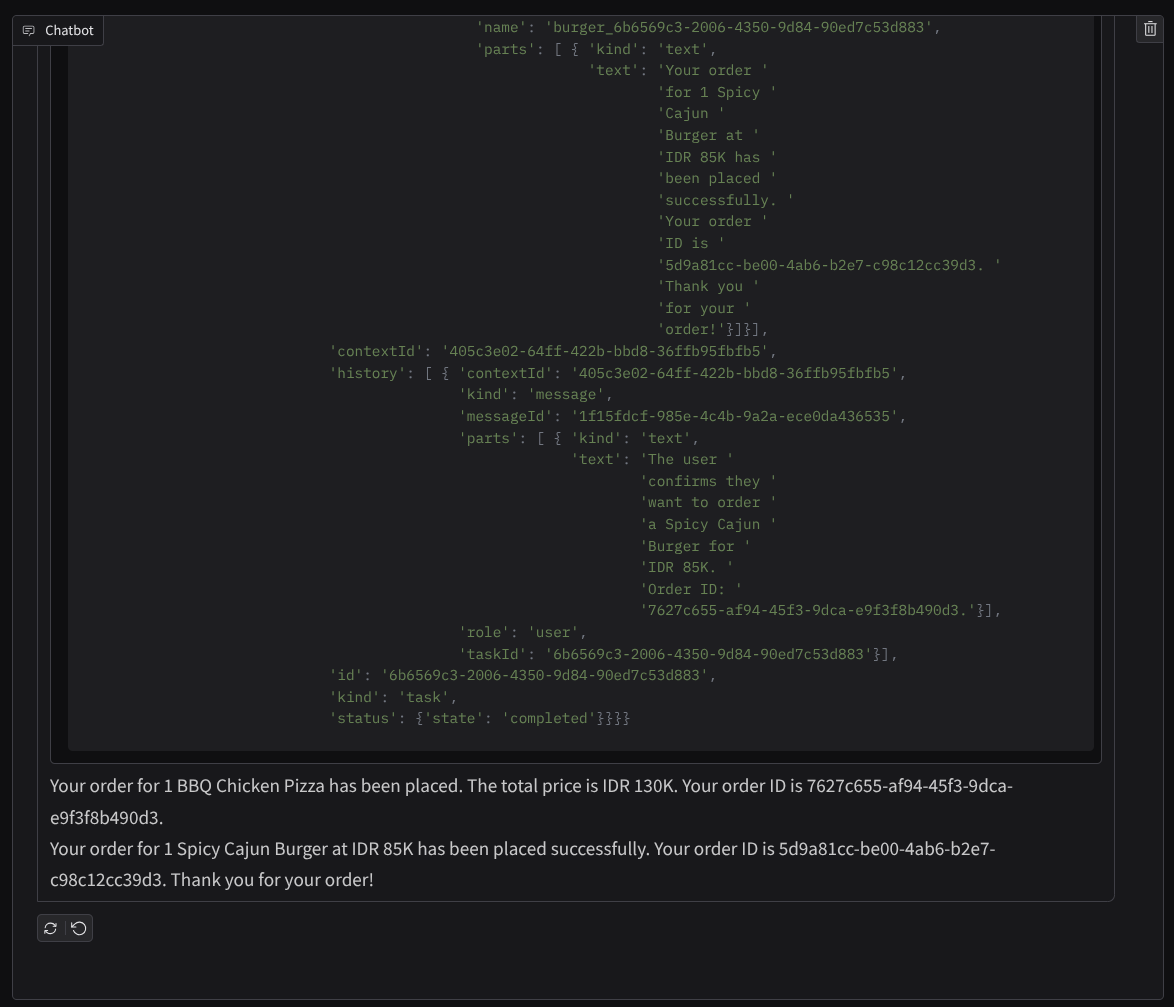

Continue the conversation until you finish the order. Inspect how the interaction goes, and what the tool calls and responses are. The following image is an example of the interaction result.

We can see that communicating with 2 different agents yields 2 different behaviors, and A2A handles this well. The pizza seller agent accepts our purchasing agent's request directly, whereas the burger agent needs our confirmation before proceeding. After we confirm, the purchasing agent can relay the confirmation to the burger agent

Now we have covered the basic concepts of A2A and seen how it is implemented as a client-server architecture

8. 💡 [Code Explanation] A2A Server Concept and Implementation

The remote seller agent initialization can be inspected on the remote_seller_agents/*/agent.py script. Here is the code snippet of the seller agents.

Burger Agent

from crewai import Agent, Crew, LLM, Task, Process

from crewai.tools import tool

...

model = LLM(

model="vertex_ai/gemini-2.5-flash-lite", # Model name with the "vertex_ai/" provider prefix

)

burger_agent = Agent(

role="Burger Seller Agent",

goal=(

"Help user to understand what is available on burger menu and price also handle order creation."

),

backstory=("You are an expert and helpful burger seller agent."),

verbose=False,

allow_delegation=False,

tools=[create_burger_order],

llm=model,

)

agent_task = Task(

description=self.TaskInstruction,

agent=burger_agent,

expected_output="Response to the user in friendly and helpful manner",

)

crew = Crew(

tasks=[agent_task],

agents=[burger_agent],

verbose=False,

process=Process.sequential,

)

inputs = {"user_prompt": query, "session_id": sessionId}

response = crew.kickoff(inputs)

return response

...

Pizza Agent

from langchain_google_vertexai import ChatVertexAI

from langgraph.prebuilt import create_react_agent

...

self.model = ChatVertexAI(

model="gemini-2.5-flash-lite",

location=os.getenv("GOOGLE_CLOUD_LOCATION"),

project=os.getenv("GOOGLE_CLOUD_PROJECT"),

)

self.tools = [create_pizza_order]

self.graph = create_react_agent(

self.model,

tools=self.tools,

checkpointer=memory,

prompt=self.SYSTEM_INSTRUCTION,

)

...

As you can see, these 2 agents are built with completely different frameworks (CrewAI and LangGraph) from the client agent (ADK). With A2A this is not a problem: the agents don't need to share their internal code to communicate with each other, and it doesn't matter which frameworks they use, which language they are written in, or where they are deployed.

Core Components of A2A Server

Now let's discuss the core concept and components of the A2A server

Agent Card

Each A2A Server must have an agent card that is accessible at the /.well-known/agent.json resource. This supports the discovery phase on the A2A Client: the card should give complete information and context on how to access the agent and what its capabilities are. It is similar to well-documented API documentation written with Swagger or Postman.

This is the content of our deployed burger agent's agent card

{

"capabilities": {

"streaming": true

},

"defaultInputModes": [

"text",

"text/plain"

],

"defaultOutputModes": [

"text",

"text/plain"

],

"description": "Helps with creating burger orders",

"name": "burger_seller_agent",

"protocolVersion": "0.2.6",

"skills": [

{

"description": "Helps with creating burger orders",

"examples": [

"I want to order 2 classic cheeseburgers"

],

"id": "create_burger_order",

"name": "Burger Order Creation Tool",

"tags": [

"burger order creation"

]

}

],

"url": "https://burger-agent-109790610330.us-central1.run.app",

"version": "1.0.0"

}

The agent card highlights many important components, such as agent skills, streaming capabilities, supported modalities, and protocol version.

All of this information can be used to build a proper communication mechanism for the A2A client. The supported modalities and authentication mechanism ensure that communication can be established properly, and the agent skills information can be embedded into the A2A client's system prompt to give the client's agent context about the remote agent's capabilities and the skills it can invoke. More detailed fields for the agent card can be found in the A2A documentation.

In our code, the agent card is implemented using the A2A Python SDK. See the remote_seller_agents/burger_agent/__main__.py snippet below for the implementation

...

capabilities = AgentCapabilities(streaming=True)

skill = AgentSkill(

id="create_burger_order",

name="Burger Order Creation Tool",

description="Helps with creating burger orders",

tags=["burger order creation"],

examples=["I want to order 2 classic cheeseburgers"],

)

agent_host_url = (

os.getenv("HOST_OVERRIDE")

if os.getenv("HOST_OVERRIDE")

else f"http://{host}:{port}/"

)

agent_card = AgentCard(

name="burger_seller_agent",

description="Helps with creating burger orders",

url=agent_host_url,

version="1.0.0",

defaultInputModes=BurgerSellerAgent.SUPPORTED_CONTENT_TYPES,

defaultOutputModes=BurgerSellerAgent.SUPPORTED_CONTENT_TYPES,

capabilities=capabilities,

skills=[skill],

)

...

We can see several fields there, such as:

- AgentCapabilities: Declaration of additional optional functions that are supported by the agent service, such as streaming and/or push notification support

- AgentSkill: Tools or functions that are supported by the agent

- Input/OutputModes: Input/output modalities that are supported

- Url: Address used to communicate with the agent

In this configuration we build the agent host URL dynamically so that it is easier to switch between local testing and cloud deployment. This is why we needed to add the HOST_OVERRIDE variable in the previous step.
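The selection logic can be isolated into a small function to see both cases side by side. This is a sketch equivalent in behavior to the conditional expression in the snippet above; `resolve_agent_url` and its `env` parameter are hypothetical names introduced here so the example does not depend on the real process environment.

```python
def resolve_agent_url(host: str, port: int, env: dict[str, str]) -> str:
    """Prefer HOST_OVERRIDE when it is set and non-empty, else use host:port."""
    override = env.get("HOST_OVERRIDE")
    return override if override else f"http://{host}:{port}/"


# Local run: no override, so the card advertises the bind address.
print(resolve_agent_url("0.0.0.0", 8080, {}))

# Cloud Run: the override carries the public service URL.
cloud_env = {"HOST_OVERRIDE": "https://burger-agent-xxxxxxxxx.us-central1.run.app"}
print(resolve_agent_url("0.0.0.0", 8080, cloud_env))
```

Note that an empty HOST_OVERRIDE value falls back to the host and port, just like an unset one.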

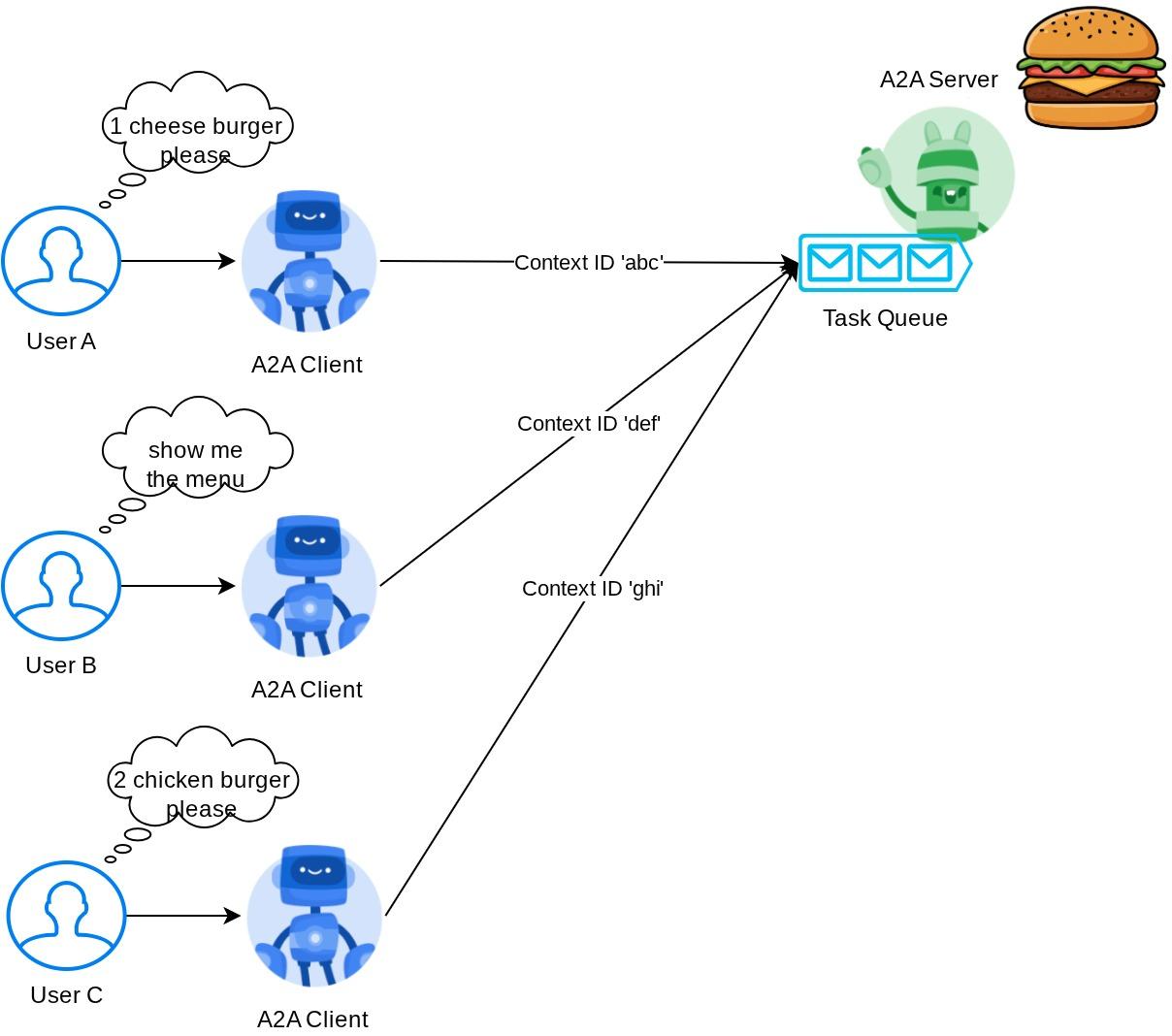

Task Queue and Agent Executor

An A2A server may handle requests from different agents or users, and it must be able to isolate each task from the others. To better visualize this, you can inspect the image below

Thus, each A2A server should be able to track incoming tasks and store the proper information about them. The A2A SDK provides modules to address this challenge in the A2A server. First, we define the logic for handling an incoming request. By inheriting from the AgentExecutor abstract class, we can control how task execution and cancellation are managed. This example implementation can be inspected in the remote_seller_agents/burger_agent/agent_executor.py module (the path is similar for the pizza seller)

...

class BurgerSellerAgentExecutor(AgentExecutor):

"""Burger Seller AgentExecutor."""

def __init__(self):

self.agent = BurgerSellerAgent()

async def execute(

self,

context: RequestContext,

event_queue: EventQueue,

) -> None:

query = context.get_user_input()

try:

result = self.agent.invoke(query, context.context_id)

print(f"Final Result ===> {result}")

parts = [Part(root=TextPart(text=str(result)))]

await event_queue.enqueue_event(

completed_task(

context.task_id,

context.context_id,

[new_artifact(parts, f"burger_{context.task_id}")],

[context.message],

)

)

except Exception as e:

print(f"Error invoking agent: {e}")

raise ServerError(error=ValueError(f"Error invoking agent: {e}")) from e

async def cancel(

self, request: RequestContext, event_queue: EventQueue

) -> Task | None:

raise ServerError(error=UnsupportedOperationError())

...

In the code above, we implement a basic processing scheme in which the agent is invoked directly when a request comes in, and a completed task event is sent once the invocation finishes. We did not implement the cancellation method here because the operation is considered short-running.
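The same invoke-then-enqueue pattern can be shown without the SDK. The sketch below is a framework-free analogy, not the SDK's API: `CompletedTaskEvent` and `SimpleExecutor` are hypothetical stand-ins, and a plain `asyncio.Queue` plays the role of the event queue.

```python
import asyncio
from dataclasses import dataclass
from typing import Callable


@dataclass
class CompletedTaskEvent:
    """Stand-in for the SDK's completed-task event (hypothetical type)."""
    task_id: str
    context_id: str
    artifact_text: str


class SimpleExecutor:
    """Invoke the agent once per request, then emit one completed event."""

    def __init__(self, invoke: Callable[[str, str], str]):
        self._invoke = invoke

    async def execute(self, task_id: str, context_id: str, user_input: str,
                      event_queue: asyncio.Queue) -> None:
        # Synchronous call to the agent, as in the executor above.
        result = self._invoke(user_input, context_id)
        await event_queue.put(CompletedTaskEvent(task_id, context_id, str(result)))


async def demo() -> CompletedTaskEvent:
    queue: asyncio.Queue = asyncio.Queue()
    executor = SimpleExecutor(lambda query, _ctx: f"echo: {query}")
    await executor.execute("task-1", "ctx-1", "list the menu", queue)
    return await queue.get()


event = asyncio.run(demo())
print(event)
```

Because the agent call is synchronous and short, there is no intermediate state to report and nothing meaningful to cancel, which matches the design choice described above.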

After we build the executor, we can use the built-in DefaultRequestHandler, InMemoryTaskStore, and A2AStarletteApplication directly to spin up the HTTP server. This implementation can be inspected in remote_seller_agents/burger_agent/__main__.py

...

request_handler = DefaultRequestHandler(

agent_executor=BurgerSellerAgentExecutor(),

task_store=InMemoryTaskStore(),

)

server = A2AStarletteApplication(

agent_card=agent_card, http_handler=request_handler

)

uvicorn.run(server.build(), host=host, port=port)

...

This module provides the implementation of the /.well-known/agent.json route for accessing the agent card, as well as the POST endpoint that supports the A2A protocol

Summary

In short, our A2A Server deployed with the Python SDK supports the 2 functionalities below:

- Publishing the agent card on the /.well-known/agent.json route

- Handling JSON-RPC requests with in-memory task queueing

The entry point for starting these functionalities can be inspected in the __main__.py script (in remote_seller_agents/burger_agent or remote_seller_agents/pizza_agent).

9. 💡 [Code Explanation] Agent Engine Deployment

Here is the code snippet of the purchasing concierge agent in the purchasing_concierge/purchasing_agent.py:

from google.adk import Agent

...

def create_agent(self) -> Agent:

return Agent(

model="gemini-2.5-flash-lite",

name="purchasing_agent",

instruction=self.root_instruction,

before_model_callback=self.before_model_callback,

before_agent_callback=self.before_agent_callback,

description=(

"This purchasing agent orchestrates the decomposition of the user purchase request into"

" tasks that can be performed by the seller agents."

),

tools=[

self.send_task,

],

)

...

This agent is built using ADK and deployed on Agent Engine.

Vertex AI Agent Engine is a set of services that enables developers to deploy, manage, and scale AI agents in production. It handles the infrastructure needed to scale agents in production so that we can focus on creating applications. You can read more about this in the Vertex AI Agent Engine documentation. Previously, we had to prepare the files needed to deploy our agent service (such as the main server script and a Dockerfile). In this case, by combining ADK and Agent Engine, we can deploy our agent directly from a Python script without developing our own backend service.

In this tutorial we deploy using the deploy_to_agent_engine.py script, whose content is shown below

import vertexai

from vertexai.preview import reasoning_engines

from vertexai import agent_engines

from dotenv import load_dotenv

import os

from purchasing_concierge.agent import root_agent

load_dotenv()

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")

LOCATION = os.getenv("GOOGLE_CLOUD_LOCATION")

STAGING_BUCKET = os.getenv("STAGING_BUCKET")

vertexai.init(

project=PROJECT_ID,

location=LOCATION,

staging_bucket=STAGING_BUCKET,

)

adk_app = reasoning_engines.AdkApp(

agent=root_agent,

)

remote_app = agent_engines.create(

agent_engine=adk_app,

display_name="purchasing-concierge",

requirements=[

"google-cloud-aiplatform[adk,agent_engines]",

"a2a-sdk==0.2.16",

],

extra_packages=[

"./purchasing_concierge",

],

env_vars={

"GOOGLE_GENAI_USE_VERTEXAI": os.environ["GOOGLE_GENAI_USE_VERTEXAI"],

"PIZZA_SELLER_AGENT_URL": os.environ["PIZZA_SELLER_AGENT_URL"],

"BURGER_SELLER_AGENT_URL": os.environ["BURGER_SELLER_AGENT_URL"],

},

)

print(f"Deployed remote app resource: {remote_app.resource_name}")

These are the steps needed to deploy our ADK agent to Agent Engine. First, we create an AdkApp object from our ADK root_agent. Then we call the agent_engines.create method, passing the adk_app object, listing the requirements in the requirements field, giving the agent directory path in extra_packages (you can also provide other directories and files here if needed), and providing the necessary environment variables.
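The script reads each variable with `os.environ[...]`, which raises a bare KeyError if one is missing. As an optional refinement, a helper like the one below could report all missing variables at once before deployment starts. This is a sketch under our own assumptions: `collect_env_vars` is a hypothetical function that is not in the starter repository, and the dictionary stands in for `os.environ`.

```python
def collect_env_vars(required: list[str], environ: dict[str, str]) -> dict[str, str]:
    """Return the required variables, failing early if any is missing or empty."""
    missing = [key for key in required if not environ.get(key)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {key: environ[key] for key in required}


REQUIRED = [
    "GOOGLE_GENAI_USE_VERTEXAI",
    "PIZZA_SELLER_AGENT_URL",
    "BURGER_SELLER_AGENT_URL",
]

# Stand-in for os.environ after load_dotenv() has run.
fake_environ = {
    "GOOGLE_GENAI_USE_VERTEXAI": "TRUE",
    "PIZZA_SELLER_AGENT_URL": "https://pizza-agent-xxxxx.us-central1.run.app",
    "BURGER_SELLER_AGENT_URL": "https://burger-agent-xxxxx.us-central1.run.app",
}
print(collect_env_vars(REQUIRED, fake_environ))
```

The returned dictionary has the same shape as the `env_vars` argument in the deployment script.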

10. 💡 [Code Explanation] A2A Client Concept and Implementation

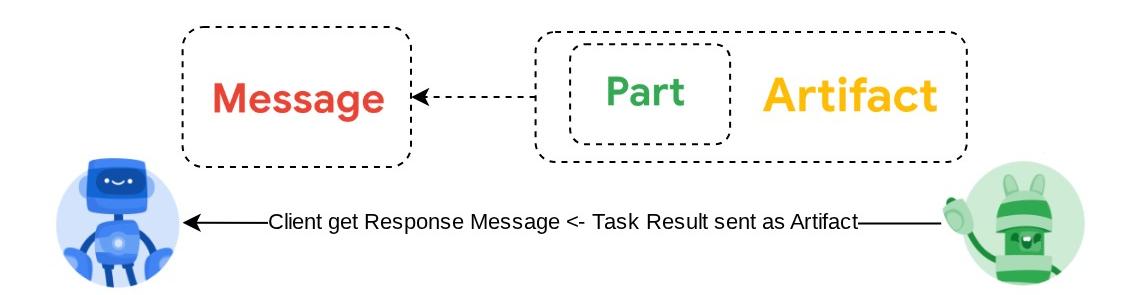

The image above shows the typical flow of A2A interactions:

- The client tries to find a published agent card at the provided remote agent URL, in the route /.well-known/agent.json

- Then, when necessary, it sends a Message to that agent containing the message content and the necessary metadata parameters (e.g. session ID, historical context, etc.). The server perceives this Message as a Task to be completed

- The A2A server processes the request. If the server supports push notifications, it is also capable of publishing notifications throughout the task processing (this functionality is out of scope for this codelab)

- When finished, the A2A server sends the response artifact back to the client
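The discovery step in the flow above boils down to requesting a well-known path under the remote agent's base URL. The following is a minimal sketch of how that URL can be derived; the function name agent_card_url is an assumption for illustration, and in the codelab this lookup is handled by the SDK's A2ACardResolver:

```python
from urllib.parse import urljoin

# Route named in this codelab for published agent cards.
AGENT_CARD_PATH = ".well-known/agent.json"


def agent_card_url(base_url: str) -> str:
    """Return the well-known agent card URL for a remote agent base URL."""
    # A trailing slash makes urljoin append the path instead of
    # replacing the last segment of the base URL.
    if not base_url.endswith("/"):
        base_url += "/"
    return urljoin(base_url, AGENT_CARD_PATH)
```

A plain HTTP GET on the resulting URL would return the agent card as JSON, assuming the server publishes one at that route.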

Some of the core objects in the above interactions are the following (more details can be read here):

- Message: A communication turn between a client and a remote agent

- Task: The fundamental unit of work managed by A2A, identified by a unique ID

- Artifact: An output (e.g., a document, image, structured data) generated by the agent as a result of a task, composed of Parts

- Part: The smallest unit of content within a Message or Artifact. A Part can be text, an image, a video, a file, etc.
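To make the relationship between these objects concrete, here is a simplified sketch using plain dataclasses. These classes are illustrative stand-ins, not the actual a2a-sdk types, and the field names (kind, content, history) are assumptions chosen for readability:

```python
import uuid
from dataclasses import dataclass, field
from typing import List


@dataclass
class Part:
    kind: str     # simplified: "text", "file", ...
    content: str


@dataclass
class Message:
    role: str     # who produced this turn, e.g. "user" or "agent"
    parts: List[Part]


@dataclass
class Artifact:
    name: str
    parts: List[Part]


@dataclass
class Task:
    # Each task is identified by a unique ID.
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    history: List[Message] = field(default_factory=list)
    artifacts: List[Artifact] = field(default_factory=list)


def artifact_text(task: Task) -> str:
    """Concatenate the text parts of every artifact produced by a task."""
    return "\n".join(
        part.content
        for artifact in task.artifacts
        for part in artifact.parts
        if part.kind == "text"
    )
```

In this sketch a Message and an Artifact are both containers of Parts, while a Task groups the messages exchanged and the artifacts produced under one ID.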

Card Discovery

When the A2A Client service is spun up, the typical process is to fetch the agent card information and store it so that it can be accessed easily when needed. In this codelab, we implement this in before_agent_callback. You can see the implementation in purchasing_concierge/purchasing_agent.py; see the code snippet below

...

async def before_agent_callback(self, callback_context: CallbackContext):
    if not self.a2a_client_init_status:
        httpx_client = httpx.AsyncClient(timeout=httpx.Timeout(timeout=30))
        for address in self.remote_agent_addresses:
            card_resolver = A2ACardResolver(
                base_url=address, httpx_client=httpx_client
            )
            try:
                card = await card_resolver.get_agent_card()
                remote_connection = RemoteAgentConnections(
                    agent_card=card, agent_url=card.url
                )
                self.remote_agent_connections[card.name] = remote_connection
                self.cards[card.name] = card
            except httpx.ConnectError:
                print(f"ERROR: Failed to get agent card from : {address}")
        agent_info = []
        for ra in self.list_remote_agents():
            agent_info.append(json.dumps(ra))
        self.agents = "\n".join(agent_info)

...

Here, we try to access all available agent cards using the A2A client's built-in A2ACardResolver module, then we set up the connection needed to send messages to each agent. After that, we also list all available agents and their specifications in the prompt so that our agent is aware that it can communicate with these agents.
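The last step, turning the discovered agents into text for the prompt, can be sketched in isolation. The body of list_remote_agents is not shown in this codelab section, so the function below is a hypothetical stand-in that assumes each card is available as a dictionary with name and description keys:

```python
import json


def describe_agents(cards):
    """Render one JSON line per agent, mirroring the join step in before_agent_callback."""
    agent_info = []
    for card in cards:
        # Assumption: only the name and description are exposed to the prompt.
        agent_info.append(
            json.dumps({"name": card["name"], "description": card["description"]})
        )
    return "\n".join(agent_info)
```

The resulting string plays the role of self.agents, which is later interpolated into the agent's instruction.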

Prompt and Send Task Tool

This is the prompt and the tool we provide to our ADK agent:

...

def root_instruction(self, context: ReadonlyContext) -> str:
    current_agent = self.check_active_agent(context)
    return f"""You are an expert purchasing delegator that can delegate the user product inquiry and purchase request to the
appropriate seller remote agents.

Execution:
- For actionable tasks, you can use `send_task` to assign tasks to remote agents to perform.
- When the remote agent is repeatedly asking for user confirmation, assume that the remote agent doesn't have access to user's conversation context.
    So improve the task description to include all the necessary information related to that agent
- Never ask user permission when you want to connect with remote agents. If you need to make connection with multiple remote agents, directly
    connect with them without asking user permission or asking user preference
- Always show the detailed response information from the seller agent and propagate it properly to the user.
- If the remote seller is asking for confirmation, rely the confirmation question to the user if the user haven't do so.
- If the user already confirmed the related order in the past conversation history, you can confirm on behalf of the user
- Do not give irrelevant context to remote seller agent. For example, ordered pizza item is not relevant for the burger seller agent
- Never ask order confirmation to the remote seller agent

Please rely on tools to address the request, and don't make up the response. If you are not sure, please ask the user for more details.
Focus on the most recent parts of the conversation primarily.

If there is an active agent, send the request to that agent with the update task tool.

Agents:
{self.agents}

Current active seller agent: {current_agent["active_agent"]}
"""

...

async def send_task(self, agent_name: str, task: str, tool_context: ToolContext):
    """Sends a task to remote seller agent

    This will send a message to the remote agent named agent_name.

    Args:
        agent_name: The name of the agent to send the task to.
        task: The comprehensive conversation context summary
            and goal to be achieved regarding user inquiry and purchase request.
        tool_context: The tool context this method runs in.

    Returns:
        The resulting Task, or None if the response is not a successful Task.
    """
    if agent_name not in self.remote_agent_connections:
        raise ValueError(f"Agent {agent_name} not found")
    state = tool_context.state
    state["active_agent"] = agent_name
    client = self.remote_agent_connections[agent_name]
    if not client:
        raise ValueError(f"Client not available for {agent_name}")
    session_id = state["session_id"]
    task: Task
    message_id = ""
    metadata = {}
    # Reuse the incoming message ID when the session state carries one
    if "input_message_metadata" in state:
        metadata.update(**state["input_message_metadata"])
        if "message_id" in state["input_message_metadata"]:
            message_id = state["input_message_metadata"]["message_id"]
    if not message_id:
        message_id = str(uuid.uuid4())

    payload = {
        "message": {
            "role": "user",
            "parts": [
                {"type": "text", "text": task}
            ],  # Use the 'task' argument here
            "messageId": message_id,
            "contextId": session_id,
        },
    }

    message_request = SendMessageRequest(
        id=message_id, params=MessageSendParams.model_validate(payload)
    )
    send_response: SendMessageResponse = await client.send_message(
        message_request=message_request
    )
    print(
        "send_response",
        send_response.model_dump_json(exclude_none=True, indent=2),
    )

    # Only a successful response that wraps a Task is returned to the agent
    if not isinstance(send_response.root, SendMessageSuccessResponse):
        print("received non-success response. Aborting get task ")
        return None

    if not isinstance(send_response.root.result, Task):
        print("received non-task response. Aborting get task ")
        return None

    return send_response.root.result

...

In the prompt, we give our purchasing concierge agent the names and descriptions of all available remote agents, and in the tool self.send_task we provide a mechanism to retrieve the appropriate client to connect to the agent and send the required metadata using the SendMessageRequest object.
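The payload construction inside send_task can be isolated into a small pure function, which makes the message ID fallback easier to see. This is a sketch only: the function name build_message_params is an assumption, and it returns the plain dictionary that send_task validates with MessageSendParams rather than an SDK object:

```python
import uuid


def build_message_params(task_text, session_id, input_metadata=None):
    """Build the dictionary that send_task validates into MessageSendParams."""
    # Reuse an incoming message ID when one exists, otherwise generate a new one.
    message_id = (input_metadata or {}).get("message_id") or str(uuid.uuid4())
    return {
        "message": {
            "role": "user",
            "parts": [{"type": "text", "text": task_text}],
            "messageId": message_id,
            # The session ID doubles as the A2A context ID, as in send_task.
            "contextId": session_id,
        },
    }
```

Keeping this logic free of network calls means it can be checked without a running seller agent.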

The Communication Protocols

The Task definition is a domain owned by the A2A server. From the perspective of the A2A client, however, it is simply a Message sent to the server. It is up to the server to decide which task an incoming message belongs to and whether completing the task requires further interaction from the client. You can read more details about the task lifecycle in this documentation. The higher-level concept can be visualized below:

This message -> task interchange is implemented as a payload format on top of the JSON-RPC standard, as shown in the example of the message/send method below:

{
    # identifier for this request
    "id": "abc123",
    # version of JSON-RPC protocol
    "jsonrpc": "2.0",
    # method name
    "method": "message/send",
    # parameters/arguments of the method
    "params": {
        "message": "hi, what can you help me with?"
    }
}

Various methods are available, for example to support different types of communication (e.g. sync, streaming, async) or to configure notifications for the task status. The A2A server can be flexibly configured to handle these task definition standards. Details of these methods can be read in this document.
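Because every A2A method shares the same JSON-RPC 2.0 envelope, the wrapping and unwrapping can be sketched with the standard library alone. The helper names jsonrpc_request and unwrap_response are assumptions for illustration; in the codelab, the SDK's SendMessageRequest and SendMessageResponse objects take care of this:

```python
import json


def jsonrpc_request(request_id, method, params):
    """Wrap method parameters in a JSON-RPC 2.0 request envelope."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


def unwrap_response(raw):
    """Return the 'result' member of a JSON-RPC 2.0 response, raising on 'error'."""
    response = json.loads(raw)
    # A JSON-RPC 2.0 response carries either a result or an error member.
    if "error" in response:
        err = response["error"]
        raise RuntimeError(f"JSON-RPC error {err.get('code')}: {err.get('message')}")
    return response["result"]
```

For example, jsonrpc_request("abc123", "message/send", params) produces an envelope with the same top-level keys as the message/send example above, and only the method name changes for other methods.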

11. 🎯 Challenge

Now, can you prepare the necessary files and deploy the Gradio app to Cloud Run by yourself? Time to take up the challenge!

12. 🧹 Clean up

To avoid incurring charges to your Google Cloud account for the resources used in this codelab, follow these steps:

- In the Google Cloud console, go to the Manage resources page.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.

- Alternatively, you can go to Cloud Run and Agent Engine in the console, select the services you just deployed, and delete them.