1. Introduction

Static custom routes influence the default routing behavior in a VPC. IPv6 custom routes now support the next-hop-ilb next-hop attribute. This codelab describes how to use IPv6 custom routes with next-hop-ilb, using two VPCs connected through internal passthrough load balancers (ILBs) in front of a group of multi-NIC gateway instances. You will configure the next hop first by referencing the ILB's IPv6 address and then by referencing the ILB's name.

What you'll learn

- How to create an IPv6 custom route with a next-hop-ilb next-hop by specifying the ILB's name

- How to create an IPv6 custom route with a next-hop-ilb next-hop by specifying the ILB's IPv6 address

What you'll need

- Google Cloud Project

2. Before you begin

Update the project to support the codelab

This codelab uses shell variables (for example, $projectname) to simplify gcloud configuration in Cloud Shell.

Inside Cloud Shell, perform the following:

gcloud config list project

gcloud config set project [YOUR-PROJECT-NAME]

export projectname=$(gcloud config list --format="value(core.project)")
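Later steps reuse several shell variables: $projectname here, and $client_subnet, $server_subnet, $fraddress_client and $fraddress_server further on. As an optional safeguard, the sketch below defines a hypothetical helper, require_vars (not part of gcloud or of this codelab), that reports any of them that are empty or unset before you run a step that depends on them. It assumes bash, which is the default shell in Cloud Shell.

```shell
# Hypothetical helper (not part of gcloud): report empty or unset variables.
# Usage: require_vars projectname client_subnet server_subnet
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable whose
    # name is stored in $name (empty if that variable is unset).
    if [[ -z "${!name:-}" ]]; then
      echo "variable '${name}' is empty or unset" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_vars projectname && echo ready` prints ready once the export above has succeeded.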

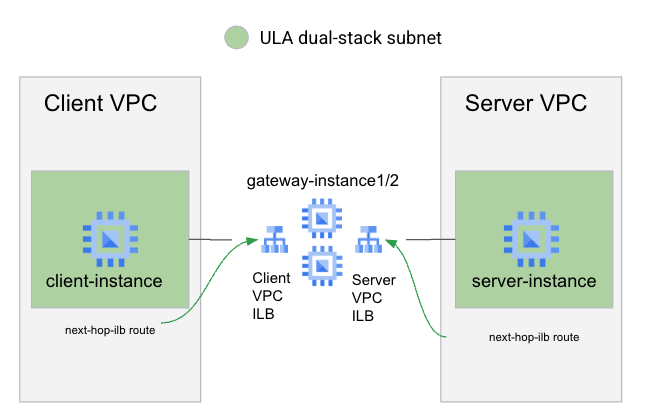

Overall Lab Architecture

To demonstrate both ways of specifying a next-hop-ilb next hop (by address and by name), you will create two VPCs, a client VPC and a server VPC, both of which use ULA addressing.

For the client VPC to reach the server, you will create a custom route in the client VPC whose next-hop-ilb points at an ILB in front of a group of multi-NIC gateway instances. These gateway instances are sandwiched between two ILBs, one in each VPC. To provide the return path to the client instance, you will create a custom route in the server VPC whose next-hop-ilb points at the server-side ILB. You will first reference each ILB by its IPv6 address, then delete the routes and recreate them referencing each ILB by name.

3. Client VPC Setup

Create the Client VPC

Inside Cloud Shell, perform the following:

gcloud compute networks create client-vpc \
--project=$projectname \
--subnet-mode=custom --mtu=1500 \
--bgp-routing-mode=regional \
--enable-ula-internal-ipv6

Create the Client subnet

Inside Cloud Shell, perform the following:

gcloud compute networks subnets create client-subnet \
--network=client-vpc \
--project=$projectname \
--range=192.168.1.0/24 \
--stack-type=IPV4_IPV6 \
--ipv6-access-type=internal \
--region=us-central1

Record the assigned IPv6 subnet in an environment variable using this command

export client_subnet=$(gcloud compute networks subnets \
describe client-subnet \
--project $projectname \
--format="value(internalIpv6Prefix)" \
--region us-central1)
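An empty or malformed value here would otherwise only surface later, as a gcloud error in the firewall or route commands. The sketch below is a loose shape check, assuming the recorded value is an IPv6 CIDR prefix such as fd20:3df:8d5c::/64; is_ipv6_prefix is a hypothetical helper name, and the check is not full IPv6 address validation. The same call works for $server_subnet once it is recorded.

```shell
# Hypothetical helper (not part of gcloud): succeed only if the argument
# looks like an IPv6 CIDR prefix, e.g. fd20:3df:8d5c::/64.
# Loose shape check only: hex digits and colons, then /<length 0-128>.
is_ipv6_prefix() {
  local re='^[0-9A-Fa-f:]+/([0-9]{1,3})$'
  [[ "$1" =~ $re ]] || return 1
  # Force base 10 so a value like "064" is not read as octal.
  (( 10#${BASH_REMATCH[1]} <= 128 ))
}

# Usage with the variable recorded above:
#   is_ipv6_prefix "$client_subnet" || echo "client_subnet looks wrong: '$client_subnet'"
```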

Launch client instance

Inside Cloud Shell, perform the following:

gcloud compute instances create client-instance \
--subnet client-subnet \
--stack-type IPV4_IPV6 \
--zone us-central1-a \
--project=$projectname

Add a firewall rule to allow client VPC traffic

Inside Cloud Shell, perform the following:

gcloud compute firewall-rules create allow-gateway-client \
--direction=INGRESS --priority=1000 \
--network=client-vpc --action=ALLOW \
--rules=tcp --source-ranges=$client_subnet \
--project=$projectname

Add firewall rule to allow IAP for the client instance

Inside Cloud Shell, perform the following:

gcloud compute firewall-rules create allow-iap-client \
--direction=INGRESS --priority=1000 \
--network=client-vpc --action=ALLOW \
--rules=tcp:22 --source-ranges=35.235.240.0/20 \
--project=$projectname

Confirm SSH access into the client instance

Inside Cloud Shell, log into the client-instance:

gcloud compute ssh client-instance \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

If successful, you'll see a terminal window from the client instance. Exit from the SSH session to continue on with the codelab.

4. Server VPC Setup

Create the server VPC

Inside Cloud Shell, perform the following:

gcloud compute networks create server-vpc \
--project=$projectname \
--subnet-mode=custom --mtu=1500 \
--bgp-routing-mode=regional \
--enable-ula-internal-ipv6

Create the server subnets

Inside Cloud Shell, perform the following:

gcloud compute networks subnets create server-subnet \
--network=server-vpc \
--project=$projectname \
--range=192.168.0.0/24 \
--stack-type=IPV4_IPV6 \
--ipv6-access-type=internal \
--region=us-central1

Record the assigned IPv6 subnet in an environment variable using this command

export server_subnet=$(gcloud compute networks subnets \
describe server-subnet \
--project $projectname \
--format="value(internalIpv6Prefix)" \
--region us-central1)

Launch server VM

Inside Cloud Shell, perform the following:

gcloud compute instances create server-instance \
--subnet server-subnet \
--stack-type IPV4_IPV6 \
--zone us-central1-a \
--project=$projectname

Add firewall rule to allow access to the server from client

Inside Cloud Shell, perform the following:

gcloud compute firewall-rules create allow-client-server \
--direction=INGRESS --priority=1000 \
--network=server-vpc --action=ALLOW \
--rules=tcp --source-ranges=$client_subnet \
--project=$projectname

Add firewall rule to allow IAP

Inside Cloud Shell, perform the following:

gcloud compute firewall-rules create allow-iap-server \
--direction=INGRESS --priority=1000 \
--network=server-vpc --action=ALLOW \
--rules=tcp:22 \
--source-ranges=35.235.240.0/20 \
--project=$projectname

Install Apache on the ULA server instance

Inside Cloud Shell, log into the server-instance:

gcloud compute ssh server-instance \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

Inside the server VM shell, run the following command:

sudo apt update && sudo apt -y install apache2

Verify that Apache is running

sudo systemctl status apache2

Overwrite the default web page

echo '<!doctype html><html><body><h1>Hello World! From Server Instance!</h1></body></html>' | sudo tee /var/www/html/index.html

Exit from the SSH session to continue on with the codelab.

5. Create Gateway Instances

Create multi-NIC gateway instance template

Inside Cloud Shell, perform the following:

gcloud compute instance-templates create gateway-instance-template \
--project=$projectname \
--instance-template-region=us-central1 \
--region=us-central1 \
--network-interface=stack-type=IPV4_IPV6,subnet=client-subnet,no-address \
--network-interface=stack-type=IPV4_IPV6,subnet=server-subnet,no-address \
--can-ip-forward \
--metadata=startup-script='#! /bin/bash
sudo sysctl -w net.ipv6.conf.ens4.accept_ra=2
sudo sysctl -w net.ipv6.conf.ens5.accept_ra=2
sudo sysctl -w net.ipv6.conf.ens4.accept_ra_defrtr=1
sudo sysctl -w net.ipv6.conf.all.forwarding=1'

Create multi-NIC gateway instance group

Inside Cloud Shell, perform the following:

gcloud compute instance-groups managed create gateway-instance-group \
--project=$projectname \
--base-instance-name=gateway-instance \
--template=projects/$projectname/regions/us-central1/instanceTemplates/gateway-instance-template \
--size=2 \
--zone=us-central1-a

Verify gateway instances

To verify that the startup script was applied correctly and that the IPv6 routing table is correct, SSH to one of the gateway instances.

Inside Cloud Shell, list the gateway instances by running the following:

gcloud compute instances list \
--project=$projectname \
--zones=us-central1-a \
--filter name~gateway \
--format 'csv(name)'

Note one of the instance names and use it in the next command to SSH to the instance.

Inside Cloud Shell, log into one of the gateway instances

gcloud compute ssh gateway-instance-<suffix> \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

Inside the gateway VM shell, run the following command to check IPv6 forwarding

sudo sysctl net.ipv6.conf.all.forwarding

The command should return net.ipv6.conf.all.forwarding = 1, indicating that IPv6 forwarding is enabled.
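The startup script sets four kernel parameters: accept_ra=2 lets ens4 and ens5 keep accepting IPv6 router advertisements even though forwarding is enabled, accept_ra_defrtr=1 lets ens4 learn a default route from those advertisements, and all.forwarding=1 turns on IPv6 forwarding. Still inside the gateway instance, the sketch below checks all four in one pass by reading them from /proc/sys. The function name check_gateway_sysctls and the SYSCTL_ROOT override (there only so the function can be exercised away from the VM) are hypothetical, not part of the codelab.

```shell
# Sketch: compare the IPv6 sysctls set by the gateway startup script with the
# values the script is expected to apply. Reads /proc/sys directly;
# SYSCTL_ROOT (hypothetical) can point at another directory for testing.
check_gateway_sysctls() {
  local root="${SYSCTL_ROOT:-/proc/sys}" pair key want got rc=0
  for pair in net.ipv6.conf.ens4.accept_ra=2 \
              net.ipv6.conf.ens5.accept_ra=2 \
              net.ipv6.conf.ens4.accept_ra_defrtr=1 \
              net.ipv6.conf.all.forwarding=1; do
    key="${pair%%=*}"   # text before the first "="
    want="${pair#*=}"   # text after the first "="
    # A sysctl key maps to a /proc/sys path by turning dots into slashes.
    got="$(cat "${root}/${key//.//}" 2>/dev/null || echo missing)"
    if [[ "$got" == "$want" ]]; then
      echo "OK   ${key} = ${got}"
    else
      echo "FAIL ${key} = ${got} (expected ${want})"
      rc=1
    fi
  done
  return "$rc"
}
```

Running check_gateway_sysctls prints one OK or FAIL line per setting and returns non-zero if any value differs.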

Verify the IPv6 routing table on the instance

ip -6 route show

Sample output showing a subnet route for each NIC, with the default route pointing at ens4 (nic0), the interface on which the startup script enabled accept_ra_defrtr. The prefixes in your output will differ: because both subnets in this codelab use internal IPv6 access in ULA-enabled VPCs, expect ULA prefixes (starting with fd) on both interfaces rather than the GUA prefix (starting with 2600) shown on ens4 here.

::1 dev lo proto kernel metric 256 pref medium
2600:1900:4000:7a7f:0:1:: dev ens4 proto kernel metric 256 expires 83903sec pref medium
2600:1900:4000:7a7f::/65 via fe80::4001:c0ff:fea8:101 dev ens4 proto ra metric 1024 expires 88sec pref medium
fd20:3df:8d5c::1:0:0 dev ens5 proto kernel metric 256 expires 83904sec pref medium
fd20:3df:8d5c::/64 via fe80::4001:c0ff:fea8:1 dev ens5 proto ra metric 1024 expires 84sec pref medium
fe80::/64 dev ens5 proto kernel metric 256 pref medium
fe80::/64 dev ens4 proto kernel metric 256 pref medium
default via fe80::4001:c0ff:fea8:101 dev ens4 proto ra metric 1024 expires 88sec pref medium
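To check the default route without reading the table by eye, the awk filter below prints the interface named after dev on the first default route. On a correctly configured gateway you would expect ens4, since the startup script enables accept_ra_defrtr only on that interface. The wrapper name default_route_dev is hypothetical.

```shell
# Hypothetical helper: read `ip -6 route show` output on stdin and print the
# interface ("dev <name>") of the first default route, if there is one.
default_route_dev() {
  awk '$1 == "default" {
         for (i = 2; i < NF; i++)
           if ($i == "dev") { print $(i + 1); exit }
       }'
}

# Usage on the gateway instance:
#   ip -6 route show | default_route_dev
```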

Exit from the SSH session to continue on with the codelab.

6. Create Load Balancer Components

Before we can create routes in both VPCs, we will need to create internal passthrough load balancers on both sides of the gateway instances to forward traffic.

Load balancers created in this codelab consist of the following components:

- Health check: in this codelab, we create a simple TCP health check that targets port 22 and is shared by both backend services. Note that the health check will not work as deployed (making it work would involve adding firewall rules to allow health checks and creating special routes on the gateway instances). Since this codelab is focused on IPv6 forwarding, we rely on the default traffic distribution behavior of internal passthrough load balancers when all backends are unhealthy, namely, to forward to all backends as a last resort.

- Backend service: we use protocol TCP for the backend service. However, because the load balancers are created for routing purposes, all protocols are forwarded regardless of the backend service protocol.

- Forwarding rule: we create one forwarding rule per VPC.

- Internal IPv6 address: in this codelab, we let each forwarding rule allocate its IPv6 address automatically from the subnet.

Create Health Check

Inside Cloud Shell, perform the following:

gcloud compute health-checks create tcp tcp-hc-22 \
--project=$projectname \
--region=us-central1 \
--port=22

Create Backend Services

Inside Cloud Shell, perform the following:

gcloud compute backend-services create bes-ilb-clientvpc \
--project=$projectname \
--load-balancing-scheme=internal \
--protocol=tcp \
--network=client-vpc \
--region=us-central1 \
--health-checks=tcp-hc-22 \
--health-checks-region=us-central1

gcloud compute backend-services create bes-ilb-servervpc \
--project=$projectname \
--load-balancing-scheme=internal \
--protocol=tcp \
--network=server-vpc \
--region=us-central1 \
--health-checks=tcp-hc-22 \
--health-checks-region=us-central1

Add Instance Group to Backend Service

Inside Cloud Shell, perform the following:

gcloud compute backend-services add-backend bes-ilb-clientvpc \
--project=$projectname \
--region=us-central1 \
--instance-group=gateway-instance-group \
--instance-group-zone=us-central1-a

gcloud compute backend-services add-backend bes-ilb-servervpc \
--project=$projectname \
--region=us-central1 \
--instance-group=gateway-instance-group \
--instance-group-zone=us-central1-a

Create Forwarding Rules

Inside Cloud Shell, perform the following:

gcloud compute forwarding-rules create fr-ilb-clientvpc \
--project=$projectname \
--region=us-central1 \
--load-balancing-scheme=internal \
--network=client-vpc \
--subnet=client-subnet \
--ip-protocol=TCP \
--ip-version=IPV6 \
--ports=ALL \
--backend-service=bes-ilb-clientvpc \
--backend-service-region=us-central1

gcloud compute forwarding-rules create fr-ilb-servervpc \
--project=$projectname \
--region=us-central1 \
--load-balancing-scheme=internal \
--network=server-vpc \
--subnet=server-subnet \
--ip-protocol=TCP \
--ip-version=IPV6 \
--ports=ALL \
--backend-service=bes-ilb-servervpc \
--backend-service-region=us-central1

Record the IPv6 addresses of both forwarding rules by issuing the following commands in Cloud Shell:

export fraddress_client=$(gcloud compute forwarding-rules \
describe fr-ilb-clientvpc \
--project $projectname \
--format="value(IPAddress)" \
--region us-central1)

export fraddress_server=$(gcloud compute forwarding-rules \
describe fr-ilb-servervpc \
--project $projectname \
--format="value(IPAddress)" \
--region us-central1)

7. Create and test routes to load balancers (using the load balancer address)

In this section, you will add routes to both the client and server VPCs by using the load balancers' IPv6 addresses as the next-hops.

Make note of server addresses

Inside Cloud Shell, perform the following:

gcloud compute instances list \
--project $projectname \
--zones us-central1-a \
--filter="name~server-instance" \
--format='value[separator=","](name,networkInterfaces[0].ipv6Address)'

This should output the server instance name and its IPv6 address. Sample output:

server-instance,fd20:3df:8d5c:0:0:0:0:0

Make note of the server address as you will use it later in curl commands from the client instance. Unfortunately environment variables cannot be easily used to store these as they don't transfer over SSH sessions.
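One way around this is to keep the address in a Cloud Shell variable and run curl on client-instance through the --command flag of gcloud compute ssh, so the value never has to cross into an interactive session. The sketch below is an optional convenience, not a codelab step: curl_from_client and DRY_RUN are hypothetical names, and it reuses the zone, IAP tunneling and curl options from the steps in this section.

```shell
# Hypothetical helper: run the codelab's curl test on client-instance from
# Cloud Shell. DRY_RUN=1 prints the gcloud command instead of executing it.
curl_from_client() {
  local server_addr="$1"
  local cmd=(gcloud compute ssh client-instance
             "--project=${projectname}"
             --zone=us-central1-a
             --tunnel-through-iap
             "--command=curl -m 5.0 -g -6 'http://[${server_addr}]:80/'")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Usage (assumes the server has a single NIC, as in this codelab):
#   server_addr=$(gcloud compute instances describe server-instance \
#     --zone=us-central1-a --project="$projectname" \
#     --format='value(networkInterfaces[0].ipv6Address)')
#   curl_from_client "$server_addr"
```

Running it in dry-run mode first (DRY_RUN=1 curl_from_client "$server_addr") shows exactly which command would be sent.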

Run curl command from client to ULA server instance

To see the behavior before adding any new routes, run a curl command from the client instance towards server-instance.

Inside Cloud Shell, log into the client-instance:

gcloud compute ssh client-instance \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

Inside the client instance, perform a curl using the ULA IPv6 address of server-instance (the command sets a short timeout of 5s to avoid curl waiting for too long):

curl -m 5.0 -g -6 'http://[ULA-ipv6-address-of-server1]:80/'

This curl command should time out because the client VPC doesn't have a route towards the server VPC yet.

Let's try to fix that! Exit from the SSH session for now.

Add custom route in client VPC

The client VPC is missing a route towards the server's ULA prefix. Add it now by creating a route that points at the client-side ILB by address.

Note: IPv6 internal passthrough load balancers are assigned /96 addresses, so the IPAddress value recorded earlier includes a /96 suffix. Strip the /96 suffix before passing the address to --next-hop-ilb; the command below does this in place with bash pattern substitution.

Inside Cloud Shell, perform the following:

gcloud compute routes create client-to-server-route \
--project=$projectname \
--destination-range=$server_subnet \
--network=client-vpc \
--next-hop-ilb=${fraddress_client//\/96}
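The ${fraddress_client//\/96} expansion above is plain bash pattern substitution. The sketch below runs it against a made-up sample value (fr_sample is hypothetical; in the codelab the value comes from the forwarding rule's IPAddress field) and shows ${var%/*} as an alternative that strips any prefix length, not only /96.

```shell
# Illustration only: a hypothetical forwarding-rule value in the same form
# as the one stored in fraddress_client.
fr_sample='fd20:3df:8d5c:0:0:1:0:0/96'

# ${var//\/96} deletes every occurrence of the literal text "/96"
# (the replacement is empty, so the trailing "/replacement" part is omitted).
echo "${fr_sample//\/96}"    # prints fd20:3df:8d5c:0:0:1:0:0

# ${var%/*} removes the shortest trailing match of "/*", so it would also
# work if the prefix length were something other than 96.
echo "${fr_sample%/*}"       # prints fd20:3df:8d5c:0:0:1:0:0
```

Either form leaves the variable itself unchanged; only the expanded text passed to gcloud loses its suffix.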

SSH back to the client instance:

gcloud compute ssh client-instance \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

Inside the client instance, attempt the curl to the server instance again. (the command sets a short timeout of 5s to avoid curl waiting for too long)

curl -m 5.0 -g -6 'http://[ULA-ipv6-address-of-server1]:80/'

This curl command still times out because the server VPC doesn't have a route back towards the client VPC through the gateway instances yet.

Exit from the SSH session to continue on with the codelab.

Add custom route in Server VPC

Inside Cloud Shell, perform the following:

gcloud compute routes create server-to-client-route \
--project=$projectname \
--destination-range=$client_subnet \
--network=server-vpc \
--next-hop-ilb=${fraddress_server//\/96}

SSH back to the client instance:

gcloud compute ssh client-instance \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

Inside the client instance, attempt the curl to the server instance one more time.

curl -m 5.0 -g -6 'http://[ULA-ipv6-address-of-server1]:80/'

This curl command now succeeds, showing that you have end-to-end reachability from the client instance to the ULA server instance. This connectivity is possible only because of the IPv6 custom routes that use next-hop-ilb as their next hop.

Sample Output

<user id>@client-instance:~$ curl -m 5.0 -g -6 'http://[fd20:3df:8d5c:0:0:0:0:0]:80/'

<!doctype html><html><body><h1>Hello World! From Server Instance!</h1></body></html>

Exit from the SSH session to continue on with the codelab.

8. Create and test routes to load balancers (using the load balancer name)

next-hop-ilb can also reference the load balancer's forwarding rule name instead of its IPv6 address. This section walks through that procedure and verifies that connectivity between client and server is restored.

Delete previous routes

Restore the environment to its state before any custom routes were added by deleting the custom routes that reference the ILB addresses.

Inside Cloud Shell, perform the following:

gcloud compute routes delete client-to-server-route --quiet --project=$projectname

gcloud compute routes delete server-to-client-route --quiet --project=$projectname

Run curl command from client to ULA server instance

To confirm that the previous routes have been deleted successfully, run a curl command from the client instance towards server-instance.

Inside Cloud Shell, log into the client-instance:

gcloud compute ssh client-instance \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

Inside the client instance, perform a curl using the ULA IPv6 address of server-instance (the command sets a short timeout of 5s to avoid curl waiting for too long):

curl -m 5.0 -g -6 'http://[ULA-ipv6-address-of-server1]:80/'

This curl command should time out because the client VPC no longer has a route towards the server VPC.

Exit from the SSH session to continue on with the codelab.

Add custom routes in client and server VPCs

Re-add the custom routes in both client and server VPCs, but this time reference each ILB by its forwarding rule's name and region instead of its address.

Inside Cloud Shell, perform the following:

gcloud compute routes create client-to-server-route \
--project=$projectname \
--destination-range=$server_subnet \
--network=client-vpc \
--next-hop-ilb=fr-ilb-clientvpc \
--next-hop-ilb-region=us-central1

gcloud compute routes create server-to-client-route \
--project=$projectname \
--destination-range=$client_subnet \
--network=server-vpc \
--next-hop-ilb=fr-ilb-servervpc \
--next-hop-ilb-region=us-central1
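Compared with the previous section, the name-based routes differ only in the --next-hop-ilb value and the extra --next-hop-ilb-region flag. The sketch below makes that difference explicit with a hypothetical helper, route_create_cmd, that prints (but does not run) the route command for either form. It assumes a value containing a colon is an IPv6 address and anything else is a forwarding rule name in us-central1.

```shell
# Hypothetical helper: print a `gcloud compute routes create` command whose
# next hop is an ILB given either as an IPv6 address or as a forwarding rule
# name. It only prints the command; nothing is created.
route_create_cmd() {
  local name="$1" network="$2" dest="$3" next_hop="$4"
  local cmd=(gcloud compute routes create "$name"
             "--project=${projectname}"
             "--destination-range=${dest}"
             "--network=${network}")
  if [[ "$next_hop" == *:* ]]; then
    # Contains a colon: treat it as an IPv6 address and drop any /NN suffix.
    cmd+=("--next-hop-ilb=${next_hop%/*}")
  else
    # Otherwise treat it as a forwarding rule name, which also needs a region.
    cmd+=("--next-hop-ilb=${next_hop}" --next-hop-ilb-region=us-central1)
  fi
  printf '%s\n' "${cmd[*]}"
}

# Usage:
#   route_create_cmd client-to-server-route client-vpc "$server_subnet" "$fraddress_client"
#   route_create_cmd client-to-server-route client-vpc "$server_subnet" fr-ilb-clientvpc
```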

SSH back to the client instance:

gcloud compute ssh client-instance \
--project=$projectname \
--zone=us-central1-a \
--tunnel-through-iap

Inside the client instance, attempt the curl to the server instance again. (the command sets a short timeout of 5s to avoid curl waiting for too long)

curl -m 5.0 -g -6 'http://[ULA-ipv6-address-of-server1]:80/'

This curl command now succeeds, showing that you have end-to-end reachability from the client instance to the ULA server instance. Exit from the SSH session to continue on with the codelab.

9. Clean up

Clean up custom routes

Inside Cloud Shell, perform the following:

gcloud compute routes delete client-to-server-route --quiet --project=$projectname

gcloud compute routes delete server-to-client-route --quiet --project=$projectname

Clean up LB components

Inside Cloud Shell, perform the following:

gcloud compute forwarding-rules delete fr-ilb-clientvpc --region us-central1 --quiet --project=$projectname

gcloud compute forwarding-rules delete fr-ilb-servervpc --region us-central1 --quiet --project=$projectname

gcloud compute backend-services delete bes-ilb-clientvpc --region us-central1 --quiet --project=$projectname

gcloud compute backend-services delete bes-ilb-servervpc --region us-central1 --quiet --project=$projectname

gcloud compute health-checks delete tcp-hc-22 --region us-central1 --quiet --project=$projectname

Clean up instances and instance template

Inside Cloud Shell, perform the following:

gcloud compute instances delete client-instance --zone us-central1-a --quiet --project=$projectname

gcloud compute instances delete server-instance --zone us-central1-a --quiet --project=$projectname

gcloud compute instance-groups managed delete gateway-instance-group --zone us-central1-a --quiet --project=$projectname

gcloud compute instance-templates delete gateway-instance-template --region us-central1 --quiet --project=$projectname

Clean up subnets

Inside Cloud Shell, perform the following:

gcloud compute networks subnets delete client-subnet --region=us-central1 --quiet --project=$projectname

gcloud compute networks subnets delete server-subnet --region=us-central1 --quiet --project=$projectname

Clean up firewall rules

Inside Cloud Shell, perform the following:

gcloud compute firewall-rules delete allow-iap-client --quiet --project=$projectname

gcloud compute firewall-rules delete allow-iap-server --quiet --project=$projectname

gcloud compute firewall-rules delete allow-gateway-client --quiet --project=$projectname

gcloud compute firewall-rules delete allow-client-server --quiet --project=$projectname

Clean up VPCs

Inside Cloud Shell, perform the following:

gcloud compute networks delete client-vpc --quiet --project=$projectname

gcloud compute networks delete server-vpc --quiet --project=$projectname
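If you prefer to run the cleanup in one go, the sketch below wraps the commands of this section in a function that keeps their order, so each resource is removed before the resources it refers to, and stops at the first failed command. run and cleanup_codelab are hypothetical helper names; setting DRY_RUN=1 prints the commands instead of deleting anything.

```shell
# Sketch: run the cleanup commands from this section in the same order,
# stopping at the first failure. DRY_RUN=1 prints each command instead.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

cleanup_codelab() {
  local p="--project=${projectname}" r="--region=us-central1" z="--zone=us-central1-a"
  # Custom routes (they point at the ILB forwarding rules)
  run gcloud compute routes delete client-to-server-route --quiet "$p" || return 1
  run gcloud compute routes delete server-to-client-route --quiet "$p" || return 1
  # Load balancer components: forwarding rules, backend services, health check
  run gcloud compute forwarding-rules delete fr-ilb-clientvpc "$r" --quiet "$p" || return 1
  run gcloud compute forwarding-rules delete fr-ilb-servervpc "$r" --quiet "$p" || return 1
  run gcloud compute backend-services delete bes-ilb-clientvpc "$r" --quiet "$p" || return 1
  run gcloud compute backend-services delete bes-ilb-servervpc "$r" --quiet "$p" || return 1
  run gcloud compute health-checks delete tcp-hc-22 "$r" --quiet "$p" || return 1
  # Instances, the gateway instance group, and its template
  run gcloud compute instances delete client-instance "$z" --quiet "$p" || return 1
  run gcloud compute instances delete server-instance "$z" --quiet "$p" || return 1
  run gcloud compute instance-groups managed delete gateway-instance-group "$z" --quiet "$p" || return 1
  run gcloud compute instance-templates delete gateway-instance-template "$r" --quiet "$p" || return 1
  # Subnets and firewall rules
  run gcloud compute networks subnets delete client-subnet "$r" --quiet "$p" || return 1
  run gcloud compute networks subnets delete server-subnet "$r" --quiet "$p" || return 1
  run gcloud compute firewall-rules delete allow-iap-client --quiet "$p" || return 1
  run gcloud compute firewall-rules delete allow-iap-server --quiet "$p" || return 1
  run gcloud compute firewall-rules delete allow-gateway-client --quiet "$p" || return 1
  run gcloud compute firewall-rules delete allow-client-server --quiet "$p" || return 1
  # Finally, the VPCs themselves
  run gcloud compute networks delete client-vpc --quiet "$p" || return 1
  run gcloud compute networks delete server-vpc --quiet "$p" || return 1
}
```

Stopping at the first failure keeps later deletions from failing in confusing ways, but it also means a resource you already deleted by hand will stop the function; in that case, run the remaining commands individually.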

10. Congratulations

You have successfully used static custom IPv6 routes with next-hops set to next-hop-ilb. You also validated end-to-end IPv6 communication using those routes.

What's next?

Check out some of these codelabs...

- Access Google APIs from on-premises hosts using IPv6 addresses

- IP addressing options IPv4 and IPv6

- Using IPv6 Static Routes next hop instance, next hop address and next hop gateway