1. Before you begin

One of the most exciting recent advances in machine learning is the large language model (LLM). LLMs can generate text, translate languages, and answer questions in a comprehensive and informative way. LLMs such as Google's LaMDA and PaLM are trained on massive amounts of text data, which lets them learn the statistical patterns and relationships between words and phrases. As a result, they can generate text that resembles human-written text and translate languages with high accuracy.

LLMs take up a lot of storage and generally need a lot of computing power to run, so they are usually deployed in the cloud. The limited compute available on mobile devices makes them quite challenging for on-device machine learning (ODML). Even so, you can run a smaller LLM (such as GPT-2) on a modern Android device and still get impressive results.
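To make the storage point concrete, the sketch below estimates the size of a model's weights as parameter count times bytes per parameter. The figure of roughly 124 million parameters for the base GPT-2 checkpoint, the hypothetical 1.5-billion-parameter model, and the helper name `weight_size_mb` are assumptions brought in for illustration. Real model files also carry metadata and may keep some tensors at higher precision.

```python
# Rough weight-size estimate: parameters x bytes per parameter.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_size_mb(num_params: int, dtype: str) -> float:
    """Return the approximate size of the weights in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


GPT2_BASE_PARAMS = 124_000_000      # assumed size of the base GPT-2 checkpoint
LARGE_MODEL_PARAMS = 1_500_000_000  # hypothetical 1.5-billion-parameter model

for name, n in [("GPT-2 base", GPT2_BASE_PARAMS), ("1.5B model", LARGE_MODEL_PARAMS)]:
    for dtype in BYTES_PER_PARAM:
        print(f"{name:10s} {dtype:8s} ~{weight_size_mb(n, dtype):,.0f} MB")
```

Under these assumptions, the float32 weights of a 1.5-billion-parameter model come to about 6,000 MB, while an int8 copy of the base GPT-2 weights is closer to 124 MB, which is why smaller and quantized models are the practical choice on a phone.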

Here is a demo of running a version of Google's PaLM model with 1.5 billion parameters on a Google Pixel 7 Pro. The recording is not sped up.

In this codelab, you learn the techniques and tools for building an LLM-powered app (using GPT-2 as the example model) with:

- KerasNLP to load a pre-trained LLM

- KerasNLP to fine-tune an LLM

- TensorFlow Lite to convert, optimize, and deploy the LLM on Android

Prerequisites

- Intermediate knowledge of Keras and TensorFlow Lite

- Basic knowledge of Android development

What you'll learn

- How to use KerasNLP to load a pre-trained LLM and fine-tune it

- How to quantize an LLM and convert it to TensorFlow Lite

- How to run inference on the converted TensorFlow Lite model
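As a rough illustration of what the quantization step listed above does, the sketch below maps float weights to 8-bit integers with a single symmetric scale and then maps them back. It is a simplified stand-in, not TensorFlow Lite's actual implementation. The function names and the sample weights are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.03]  # hypothetical weight values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four, and the rounding error per weight is bounded by half the scale. That trade of a little precision for a much smaller model is what makes quantization attractive for on-device use.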

What you'll need

- Access to Colab

- The latest version of Android Studio

- A modern Android device with more than 4 GB of RAM

2. Get set up

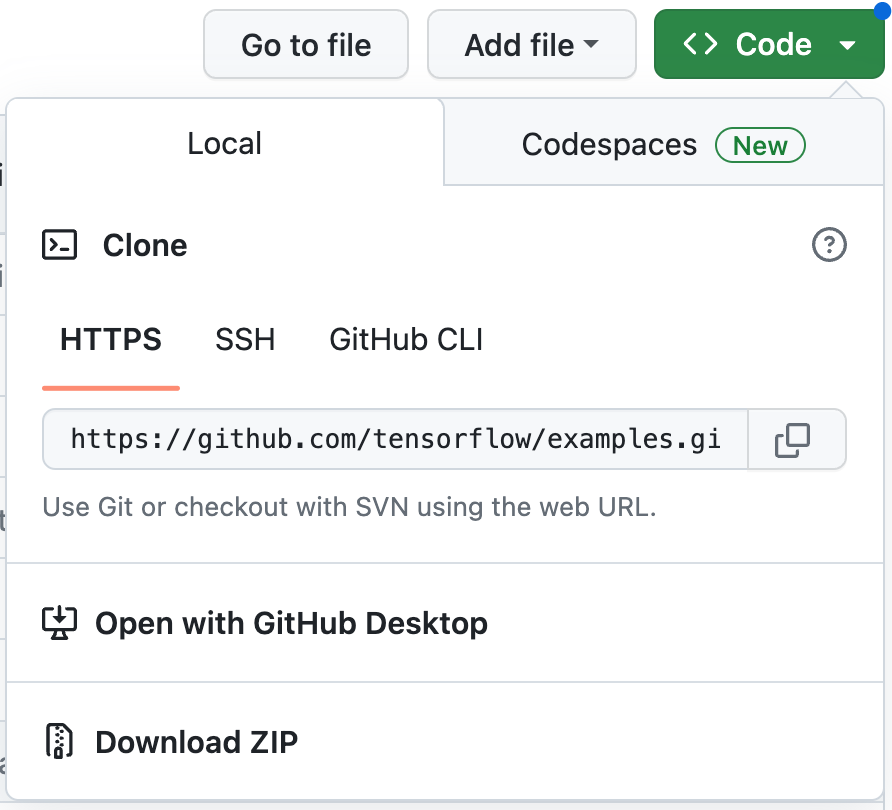

To download the code for this codelab:

- Go to the GitHub repository for this codelab.

- Click Code > Download ZIP to download all the code for this codelab.

- Unzip the downloaded ZIP file to unpack an `examples` root folder with all the resources that you need.

3. Run the starter app

- Import the `examples/lite/examples/generative_ai/android` folder into Android Studio.

- Start the Android Emulator, and then click Run in the navigation menu.

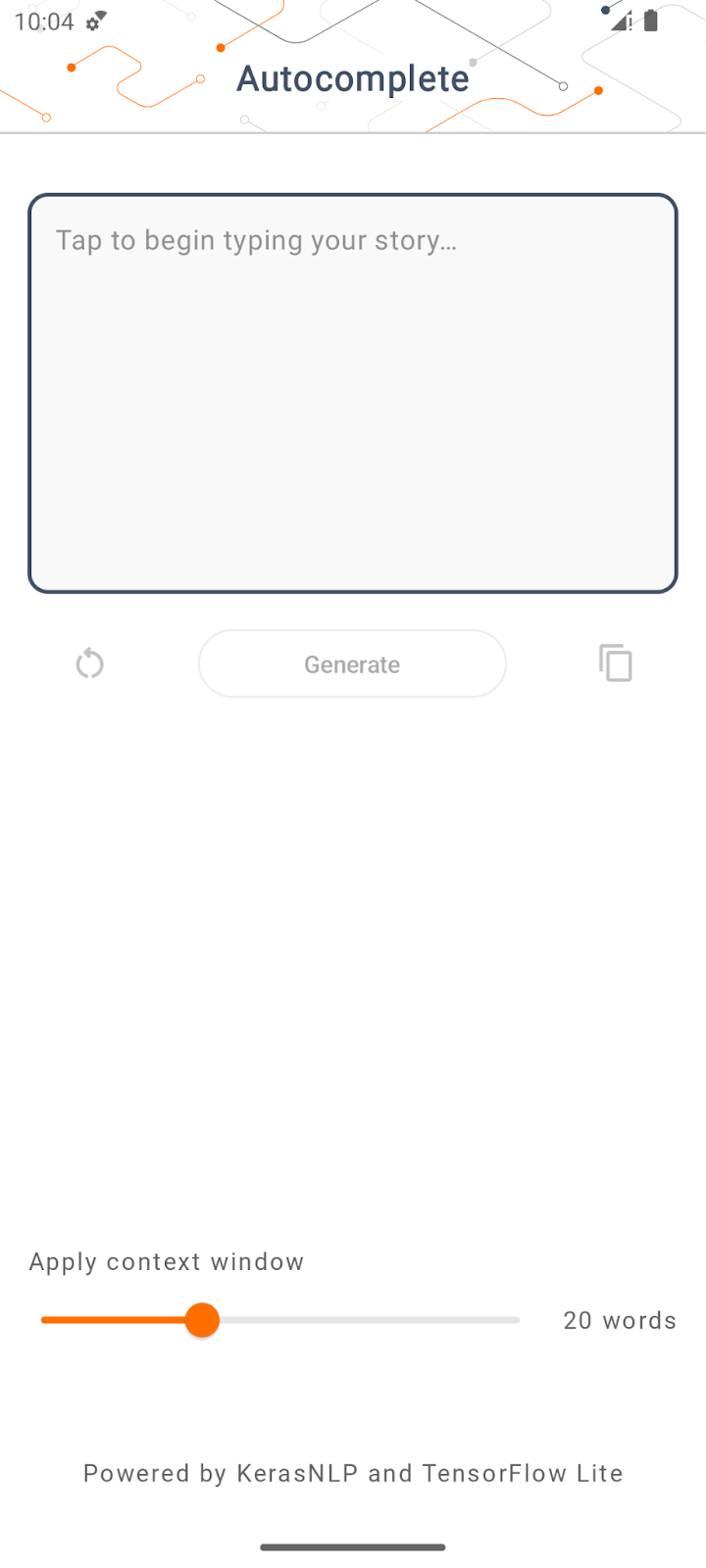

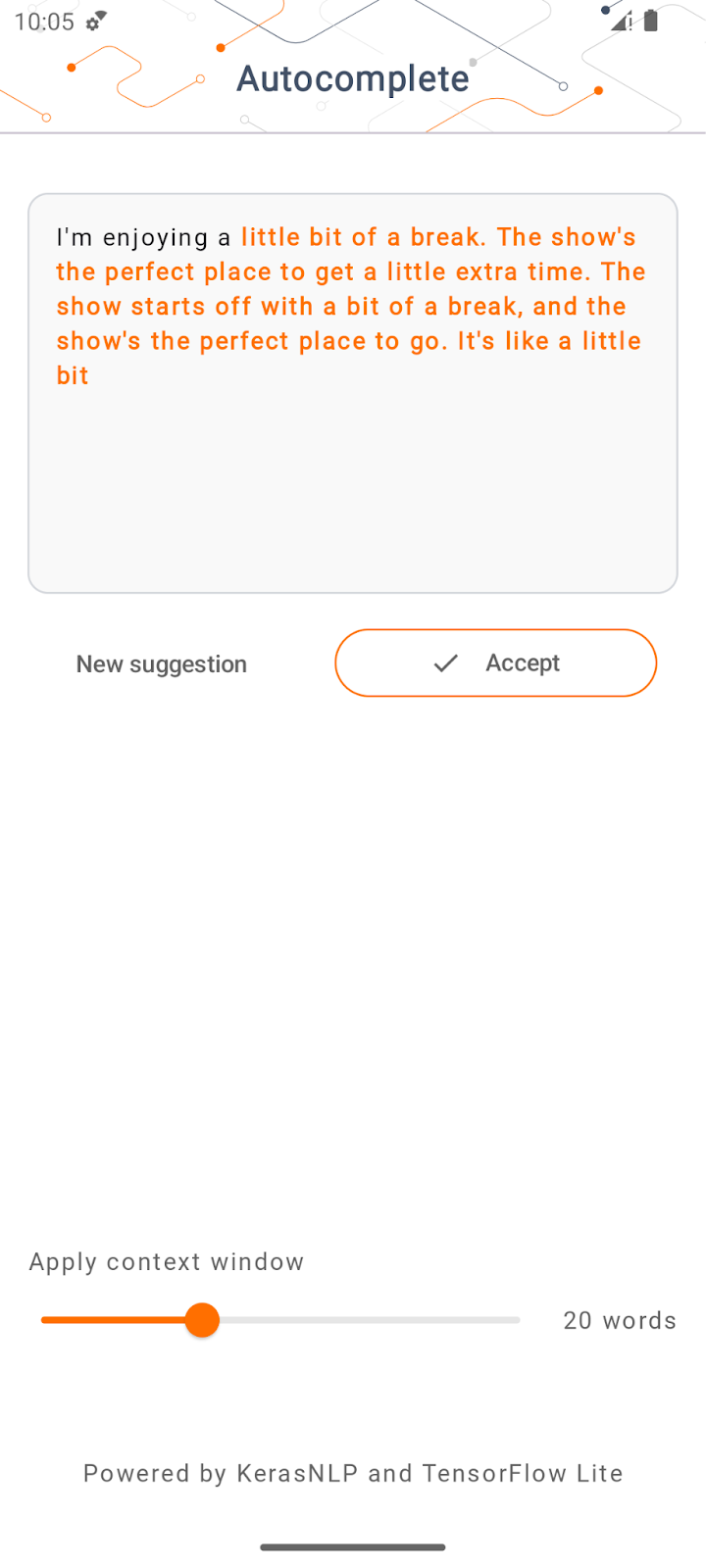

Run and explore the app

The app should launch on your Android device. The app is called "Auto-complete". The UI is straightforward: type some seed words in the text box and tap Generate. The app then runs inference on an LLM and generates additional text based on your input.

Right now, if you tap Generate after typing some words, nothing happens, because the app is not running an LLM yet.

4. Prepare the LLM for on-device deployment

- Open Colab and run through the notebook (hosted in the TensorFlow Codelabs GitHub repository).
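For orientation, here is a minimal sketch of the kind of workflow the notebook walks through: load GPT-2 with KerasNLP, wrap text generation in a `tf.function`, and convert it with the TensorFlow Lite converter using default optimizations (which applies quantization). The function names, the `max_length` default, and the exact converter settings are assumptions. The notebook remains the authoritative version and may set additional converter options, and behavior can vary with the installed TensorFlow and KerasNLP versions.

```python
PRESET = "gpt2_base_en"              # KerasNLP preset for the base GPT-2 model
OUTPUT_PATH = "autocomplete.tflite"  # file name used later in the Android app


def convert_gpt2_to_tflite(preset: str = PRESET, max_length: int = 100) -> bytes:
    """Load GPT-2 with KerasNLP and convert its text generation to TensorFlow Lite."""
    # Imported lazily so the heavy dependencies load only when conversion runs.
    import tensorflow as tf
    import keras_nlp

    gpt2_lm = keras_nlp.models.GPT2CausalLM.from_preset(preset)
    # Optional fine-tuning with gpt2_lm.fit(...) would go here.

    @tf.function
    def generate(prompt):
        return gpt2_lm.generate(prompt, max_length=max_length)

    # Trace the function for a single scalar string input.
    concrete_func = generate.get_concrete_function(tf.TensorSpec([], tf.string))

    gpt2_lm.jit_compile = False  # assumption: XLA is turned off before conversion
    converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_func], gpt2_lm)
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,  # regular TensorFlow Lite ops
        tf.lite.OpsSet.SELECT_TF_OPS,    # fall back to TensorFlow ops where needed
    ]
    converter.allow_custom_ops = True
    converter.optimizations = [tf.lite.Optimize.DEFAULT]  # enables quantization
    return converter.convert()


def save_model(model_bytes: bytes, path: str = OUTPUT_PATH) -> int:
    """Write the converted model to disk and return the number of bytes written."""
    with open(path, "wb") as f:
        return f.write(model_bytes)
```

In a Colab cell, `save_model(convert_gpt2_to_tflite())` would run the whole pipeline. The first call downloads the pre-trained weights, so expect it to take a while.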

5. Complete the Android app

Now that you have converted the GPT-2 model to TensorFlow Lite, you can finally deploy it in the app.

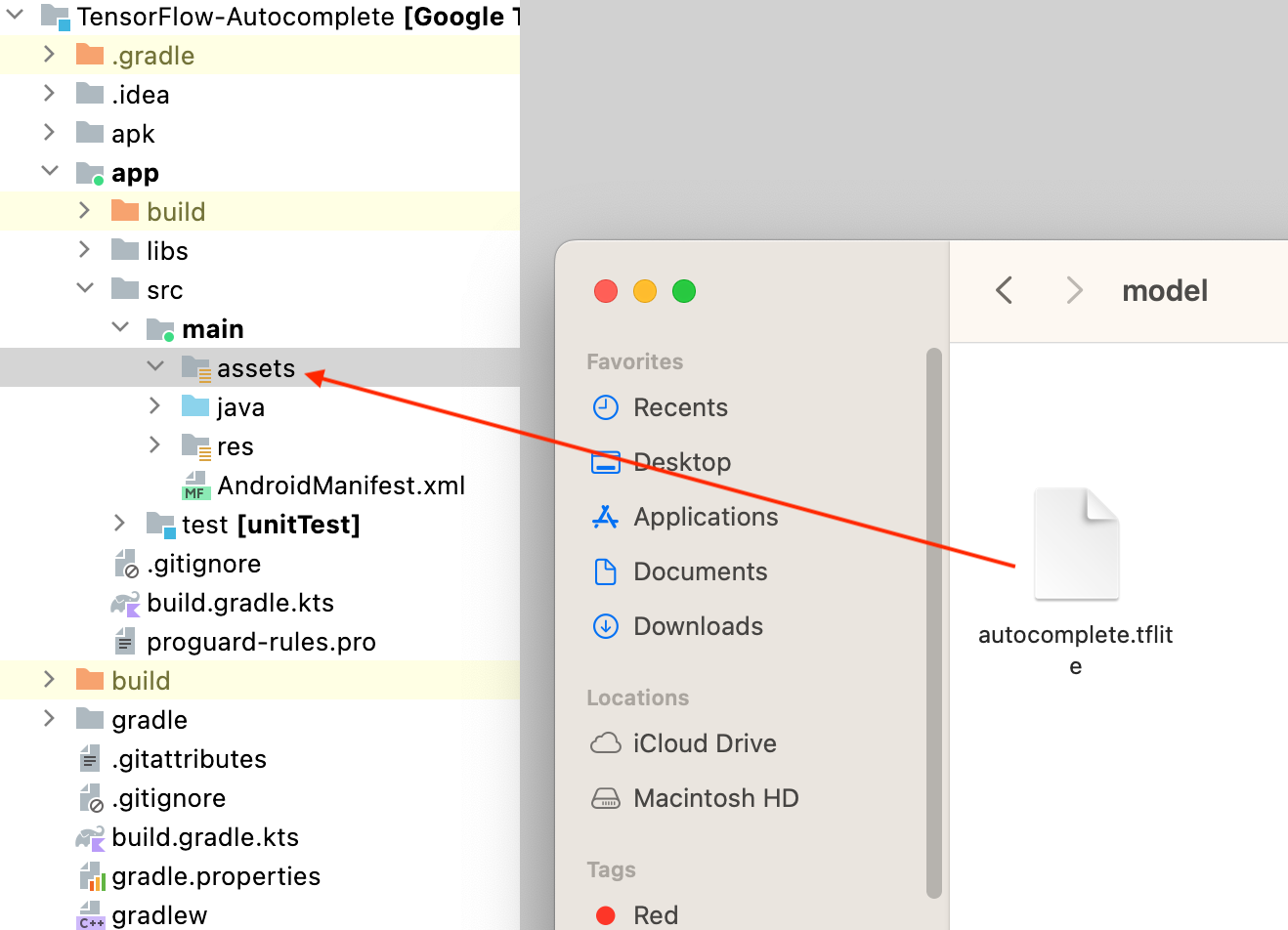

Run the app

- Drag the `autocomplete.tflite` model file that you downloaded in the last step into the `app/src/main/assets/` folder in Android Studio.

- Click Run in the navigation menu, and then wait for the app to load.

- Type some seed words in the text field, and then tap Generate.
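If the app fails to load the model, one quick check is whether the downloaded file is really a TensorFlow Lite model rather than a truncated or mislabeled download. The sketch below looks for the `TFL3` FlatBuffers file identifier at bytes 4 to 8 of the file. The helper name `looks_like_tflite` is hypothetical, and the demo writes a synthetic header rather than a real model.

```python
import os
import tempfile

TFLITE_FILE_IDENTIFIER = b"TFL3"  # FlatBuffers file identifier used by .tflite files


def looks_like_tflite(path: str) -> bool:
    """Return True if the file exists and carries the TFLite identifier at bytes 4..8."""
    if not os.path.isfile(path):
        return False
    with open(path, "rb") as f:
        header = f.read(8)
    return len(header) == 8 and header[4:8] == TFLITE_FILE_IDENTIFIER


# Demo with a synthetic file: 4-byte root offset, then the identifier, then padding.
with tempfile.TemporaryDirectory() as tmp:
    fake = os.path.join(tmp, "autocomplete.tflite")
    with open(fake, "wb") as f:
        f.write(b"\x1c\x00\x00\x00" + TFLITE_FILE_IDENTIFIER + b"\x00" * 16)
    demo_ok = looks_like_tflite(fake)
    demo_missing = looks_like_tflite(os.path.join(tmp, "missing.tflite"))

print(demo_ok, demo_missing)
```

On a real download, calling `looks_like_tflite("autocomplete.tflite")` before copying the file into the assets folder catches the most common mix-ups. It does not validate the model beyond the header.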

6. Notes on Responsible AI

As noted in the original OpenAI GPT-2 announcement, the GPT-2 model comes with notable caveats and limitations. In fact, LLMs today generally have well-known challenges such as hallucinations, offensive output, fairness, and bias. This is because these models are trained on real-world data, which makes them reflect real-world issues.

This codelab is created only to demonstrate how to build an app powered by LLMs with TensorFlow tooling. The model produced in this codelab is for educational purposes only and is not intended for production use.

Using an LLM in production requires thoughtful selection of training datasets and comprehensive safety mitigations. To learn more about Responsible AI in the context of LLMs, watch the Safe and Responsible Development with Generative Language Models technical session at Google I/O 2023 and check out the Responsible AI Toolkit.

7. Conclusion

Congratulations! You built an app that generates coherent text from user input by running a pre-trained large language model entirely on-device.