1. Introduction

Overview

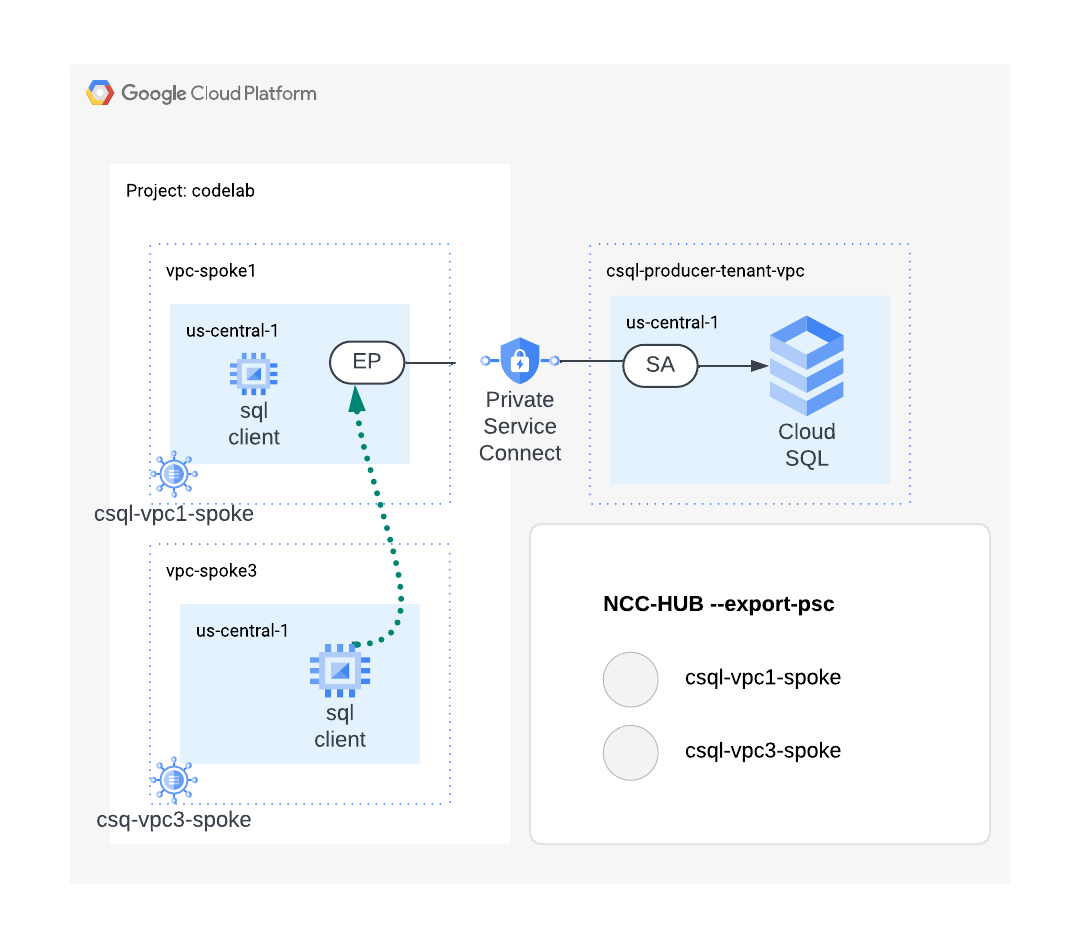

In this lab, users will explore how Network Connectivity Center's hub propagates a Private Service Connect endpoint to VPC spokes.

The hub resource provides a centralized connectivity management model that connects traffic from VPC spokes to PSC endpoints.

What you'll build

In this codelab, you'll build an NCC network that propagates a Private Service Connect endpoint for a Cloud SQL instance.

What you'll learn

- Use Private Service Connect to connect to a Cloud SQL instance

- Use NCC hub to propagate the PSC subnet to all VPC spokes to allow network connectivity from multiple VPC networks.

What you'll need

- Knowledge of GCP Cloud Networking

- Basic Knowledge of Cloud SQL

- Google Cloud Project

- Check your Networks quota and request additional networks if required (see the screenshot below):

Objectives

- Set up the GCP Environment

- Set up a Cloud SQL instance for MySQL with Private Service Connect

- Configure Network Connectivity Center Hub to propagate PSC endpoints

- Configure Network Connectivity Center with VPC as spoke

- Validate Data Path

- Explore NCC serviceability features

- Clean up resources

Before you begin

Google Cloud Console and Cloud Shell

To interact with GCP, we will use both the Google Cloud Console and Cloud Shell throughout this lab.

NCC Hub Project Google Cloud Console

The Cloud Console can be reached at https://console.cloud.google.com.

Set up the following items in Google Cloud to make it easier to configure Network Connectivity Center:

In the Google Cloud Console, on the project selector page, select or create a Google Cloud project.

Launch the Cloud Shell. This Codelab makes use of $variables to aid gcloud configuration implementation in Cloud Shell.

gcloud auth list

gcloud config list project

project=[YOUR-PROJECT-NAME]

gcloud config set project $project

echo $project
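Every later command expands $project, so an empty value or a leftover placeholder tends to fail in confusing ways. The guard below is a minimal sketch of an early check; require_project is a hypothetical helper name and is not part of gcloud.

```shell
# Hypothetical helper: fail early when the project variable is empty
# or still holds a bracketed placeholder such as [YOUR-PROJECT-NAME].
require_project() {
  case "$1" in
    ""|"["*"]")
      echo "project is not set; run: project=<your-project-id>" >&2
      return 1
      ;;
  esac
  echo "using project: $1"
}

# Usage in Cloud Shell (commented out so the sketch has no side effects):
# require_project "$project" && gcloud config set project "$project"
```

If the guard fails, assign the variable again before continuing with the lab.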

IAM Roles

NCC requires IAM roles to access specific APIs. Be sure to configure your user with the NCC IAM roles as required.

| Role/Description | Permissions |
| --- | --- |
| networkconnectivity.networkAdmin - Allows network administrators to manage hub and spokes. | networkconnectivity.hubs.*, networkconnectivity.spokes.* |
| networkconnectivity.networkSpokeManager - Allows adding and managing spokes in a hub. To be used in Shared VPC where the host project owns the hub, but admins in other projects can add spokes for their attachments to the hub. | networkconnectivity.spokes.* |
| networkconnectivity.networkUser, networkconnectivity.networkViewer - Allows network users to view different attributes of hub and spokes. | networkconnectivity.hubs.get, networkconnectivity.hubs.list, networkconnectivity.spokes.get, networkconnectivity.spokes.list, networkconnectivity.spokes.aggregatedList |

2. Set Up the Network Environment

Overview

In this section, we'll deploy two VPC networks and their firewall rules in a single project. The logical diagram illustrates the network environment that will be set up in this step.

Create VPC1 and a Subnet

The VPC network contains the subnet in which you'll install a GCE VM for data path validation.

vpc_spoke_network_name="vpc1-spoke"

vpc_spoke_subnet_name="subnet1"

vpc_spoke_subnet_ip_range="10.0.1.0/24"

region="us-central1"

zone="us-central1-a"

gcloud compute networks create "${vpc_spoke_network_name}" \

--subnet-mode=custom

gcloud compute networks subnets create "${vpc_spoke_subnet_name}" \

--network="${vpc_spoke_network_name}" \

--range="${vpc_spoke_subnet_ip_range}" \

--region="${region}"

Create a PSC subnet in VPC1

Use the command below to create a subnet in the VPC spoke that will be allocated to the PSC-EP.

vpc_spoke_network_name="vpc1-spoke"

vpc_spoke_subnet_name="csql-psc-subnet"

region="us-central1"

vpc_spoke_subnet_ip_range="192.168.0.0/24"

gcloud compute networks subnets create "${vpc_spoke_subnet_name}" \

--network="${vpc_spoke_network_name}" \

--range="${vpc_spoke_subnet_ip_range}" \

--region="${region}"

Create VPC3 and a Subnet

vpc_spoke_network_name="vpc3-spoke"

vpc_spoke_subnet_name="subnet3"

vpc_spoke_subnet_ip_range="10.0.3.0/24"

region="us-central1"

zone="us-central1-a"

gcloud compute networks create "${vpc_spoke_network_name}" \

--subnet-mode=custom

gcloud compute networks subnets create "${vpc_spoke_subnet_name}" \

--network="${vpc_spoke_network_name}" \

--range="${vpc_spoke_subnet_ip_range}" \

--region="${region}"
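Both VPCs will later join the same hub, so it is worth confirming that the three subnet ranges chosen above do not overlap. The sketch below is plain shell arithmetic with no gcloud calls; ip_to_int and cidrs_overlap are hypothetical helper names introduced here for illustration.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Return 0 when two CIDR ranges overlap, 1 otherwise.
# Two ranges overlap exactly when they agree on the shorter prefix.
cidrs_overlap() (
  ip1=${1%/*}; len1=${1#*/}
  ip2=${2%/*}; len2=${2#*/}
  min=$(( len1 < len2 ? len1 : len2 ))
  mask=$(( min == 0 ? 0 : (0xFFFFFFFF << (32 - min)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
)

# Check the ranges used in this lab pairwise.
for pair in "10.0.1.0/24 10.0.3.0/24" \
            "10.0.1.0/24 192.168.0.0/24" \
            "10.0.3.0/24 192.168.0.0/24"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then
    echo "overlap: $1 and $2"
  else
    echo "ok: $1 and $2"
  fi
done
```

If you substitute your own ranges, rerun the loop before creating the spokes.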

Configure VPC1's Firewall Rules

These rules allow ingress traffic from the RFC 1918 ranges and from the Identity-Aware Proxy (IAP) range.

vpc_spoke_network_name="vpc1-spoke"

gcloud compute firewall-rules create vpc1-allow-all \

--network="${vpc_spoke_network_name}" \

--allow=all \

--source-ranges=10.0.0.0/8,172.16.0.0/12,192.168.0.0/16

gcloud compute firewall-rules create vpc1-allow-iap \

--network="${vpc_spoke_network_name}" \

--allow all \

--source-ranges 35.235.240.0/20

Configure VPC3's Firewall Rules

vpc_spoke_network_name="vpc3-spoke"

gcloud compute firewall-rules create vpc3-allow-all \

--network="${vpc_spoke_network_name}" \

--allow=all \

--source-ranges=10.0.0.0/8,172.16.0.0/12,192.168.0.0/16

gcloud compute firewall-rules create vpc3-allow-iap \

--network="${vpc_spoke_network_name}" \

--allow all \

--source-ranges 35.235.240.0/20

Configure GCE VM in VPC1

You'll need temporary internet access to install packages, so configure the instance to use an external IP address.

vm_vpc1_spoke_name="csql-vpc1-vm"

vpc_spoke_network_name="vpc1-spoke"

vpc_spoke_subnet_name="subnet1"

region="us-central1"

zone="us-central1-a"

gcloud compute instances create "${vm_vpc1_spoke_name}" \

--machine-type="e2-medium" \

--subnet="${vpc_spoke_subnet_name}" \

--zone="${zone}" \

--image-family=debian-11 \

--image-project=debian-cloud \

--metadata=startup-script='#!/bin/bash

sudo apt-get update

sudo apt-get install -y default-mysql-client'

Configure GCE VM in VPC3

You'll need temporary internet access to install packages, so configure the instance to use an external IP address.

vm_vpc_spoke_name="csql-vpc3-vm"

vpc_spoke_network_name="vpc3-spoke"

vpc_spoke_subnet_name="subnet3"

region="us-central1"

zone="us-central1-a"

gcloud compute instances create "${vm_vpc_spoke_name}" \

--machine-type="e2-medium" \

--subnet="${vpc_spoke_subnet_name}" \

--zone="${zone}" \

--image-family=debian-11 \

--image-project=debian-cloud \

--metadata=startup-script='#!/bin/bash

sudo apt-get update

sudo apt-get install -y default-mysql-client'

3. Create the Cloud SQL Instance

Use the commands below to create an instance and enable Private Service Connect.

This will take a few minutes.

gcloud config set project ${project}

gcloud sql instances create mysql-instance \

--project="${project}" \

--region=us-central1 \

--enable-private-service-connect \

--allowed-psc-projects="${project}" \

--availability-type=zonal \

--no-assign-ip \

--tier=db-f1-micro \

--database-version=MYSQL_8_0 \

--enable-bin-log

Identify the Cloud SQL instance's service attachment URI

Use the gcloud sql instances describe command to view information about an instance with Private Service Connect enabled. Take note of the pscServiceAttachmentLink field which displays the URI that points to the service attachment of the instance. We'll need this in the next section.

gcloud sql instances describe mysql-instance \

--format='value(pscServiceAttachmentLink)'
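The service attachment link is expected to look like projects/PROJECT/regions/REGION/serviceAttachments/NAME. The sketch below splits such a string so you can confirm that the region matches the one planned for the endpoint. parse_service_attachment is a hypothetical helper, and the URI in the usage comment is a made-up example, not output from this lab.

```shell
# Hypothetical helper: split a service attachment URI of the form
# projects/<project>/regions/<region>/serviceAttachments/<name>.
parse_service_attachment() (
  IFS=/
  set -- $1
  if [ "$#" -ne 6 ] || [ "$1" != "projects" ] || \
     [ "$3" != "regions" ] || [ "$5" != "serviceAttachments" ]; then
    echo "unexpected service attachment URI" >&2
    return 1
  fi
  echo "project=$2 region=$4 name=$6"
)

# Usage with a made-up URI (replace it with the value printed above):
# parse_service_attachment "projects/example-tp/regions/us-central1/serviceAttachments/example-sa"
```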

4. PSC endpoint to Cloud SQL

Reserve an Internal IP address for the PSC Endpoint

Use the command below to reserve an internal IP address for the Private Service Connect endpoint.

region="us-central1"

vpc_spoke_subnet_name="csql-psc-subnet"

gcloud compute addresses create csql-psc-ip \

--subnet="${vpc_spoke_subnet_name}" \

--region="${region}" \

--addresses=192.168.0.253

Look up the NAME associated with the reserved IP address. This will be used in the forwarding rule configuration.

gcloud compute addresses list \

--filter="name=csql-psc-ip"

Create the Private Service Connect Forwarding Rule in VPC1

Use the command below to create the Private Service Connect endpoint and point it to the Cloud SQL service attachment.

vpc_spoke_network_name="vpc1-spoke"

vpc_spoke_subnet_name="csql-psc-subnet"

region="us-central1"

csql_psc_ep_name="csql-psc-ep"

sa_uri=$(gcloud sql instances describe mysql-instance \

--format='value(pscServiceAttachmentLink)')

echo "$sa_uri"

gcloud compute forwarding-rules create "${csql_psc_ep_name}" \

--address=csql-psc-ip \

--region="${region}" \

--network="${vpc_spoke_network_name}" \

--target-service-attachment="${sa_uri}" \

--allow-psc-global-access

Use the command below to verify that the Cloud SQL service attachment has accepted the endpoint.

gcloud compute forwarding-rules describe csql-psc-ep \

--region=us-central1 \

--format='value(pscConnectionStatus)'
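The status may not read ACCEPTED immediately after the forwarding rule is created. The sketch below polls the same describe command until it does; wait_for_psc_accepted is a hypothetical helper, and the attempt count and delay are arbitrary defaults chosen for illustration.

```shell
# Hypothetical helper: poll a forwarding rule until its PSC connection
# status is ACCEPTED.
# Arguments: rule name, region, max attempts (default 10),
# delay between attempts in seconds (default 15).
wait_for_psc_accepted() (
  name=$1; region=$2; attempts=${3:-10}; delay=${4:-15}
  i=1
  while [ "$i" -le "$attempts" ]; do
    status=$(gcloud compute forwarding-rules describe "$name" \
      --region="$region" --format='value(pscConnectionStatus)')
    echo "attempt $i: ${status:-unknown}"
    if [ "$status" = "ACCEPTED" ]; then
      return 0
    fi
    # REJECTED and CLOSED will not recover by waiting, so stop early.
    case "$status" in
      REJECTED|CLOSED) return 1 ;;
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
)

# Usage (commented out so the sketch has no side effects):
# wait_for_psc_accepted csql-psc-ep us-central1
```

A REJECTED status usually points back at the instance's allowed PSC projects, which were set with --allowed-psc-projects when the instance was created.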

Verify the datapath to MySQL from VPC1

When you create a new Cloud SQL instance, you must set a password for the default user account before you can connect to the instance.

gcloud sql users set-password root \

--host=% \

--instance=mysql-instance \

--prompt-for-password

Use the command below to locate the IP address of the PSC endpoint that is associated with Cloud SQL's service attachment.

gcloud compute addresses describe csql-psc-ip \

--region=us-central1 \

--format='value(address)'

Connect to the Cloud SQL Instance from a VM in VPC1

Open an SSH session to csql-vpc1-vm.

gcloud compute ssh csql-vpc1-vm \

--zone=us-central1-a \

--tunnel-through-iap

Use the command below to connect to the Cloud SQL instance. When prompted, enter the password that was created in the step above.

mysql -h 192.168.0.253 -u root -p

The output below is displayed upon a successful login:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 8350

Server version: 8.0.31-google (Google)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]>

Use the show databases; command to list the databases that are created by default on MySQL.

MySQL [(none)]> show databases;

Connect to the Cloud SQL Instance from a VM in VPC3

Open an SSH session to csql-vpc3-vm.

gcloud compute ssh csql-vpc3-vm \

--zone=us-central1-a \

--tunnel-through-iap

Use the command below to connect to the Cloud SQL instance. When prompted, enter the password that was created in the step above.

mysql -h 192.168.0.253 -u root -p

The session from the VM residing in VPC3 fails because there is no datapath from VPC3 to the Private Service Connect endpoint. Use the keystroke below to break out of the session.

Ctrl + C
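Rather than waiting for the mysql client to hang, a bounded TCP probe can show whether the endpoint is reachable at all. The sketch below assumes bash and the coreutils timeout command are available on the VM; probe_tcp is a hypothetical helper name.

```shell
# Hypothetical helper: return 0 if a TCP connection to host:port opens
# within the timeout (default 5 seconds), non-zero otherwise.
# Uses bash's /dev/tcp redirection, so no extra packages are needed.
probe_tcp() {
  timeout "${3:-5}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Usage from csql-vpc3-vm (commented out so the sketch has no side effects):
# if probe_tcp 192.168.0.253 3306; then
#   echo "PSC endpoint reachable"
# else
#   echo "no datapath to PSC endpoint"
# fi
```

At this point in the lab the probe from csql-vpc3-vm is expected to fail, and it should succeed once both VPCs are spokes of the hub.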

5. Network Connectivity Center Hub

Overview

In this section, we'll configure an NCC hub using gcloud commands. The NCC hub serves as the control plane responsible for building the datapath from VPC spokes to the Private Service Connect endpoint.

Enable API Services

Enable the network connectivity API in case it is not yet enabled:

gcloud services enable networkconnectivity.googleapis.com

Create NCC Hub

Use the gcloud command below to create an NCC hub. The "--export-psc" flag instructs the NCC hub to propagate known PSC endpoints to all VPC spokes.

hub_name="ncc-hub"

gcloud network-connectivity hubs create "${hub_name}" \

--export-psc

Describe the newly created NCC Hub. Note the name and associated path.

gcloud network-connectivity hubs describe ncc-hub

Configure VPC1 as an NCC spoke

hub_name="ncc-hub"

vpc_spoke_name="sql-vpc1-spoke"

vpc_spoke_network_name="vpc1-spoke"

gcloud network-connectivity spokes linked-vpc-network create "${vpc_spoke_name}" \

--hub="${hub_name}" \

--vpc-network="${vpc_spoke_network_name}" \

--global

Configure VPC3 as an NCC spoke

hub_name="ncc-hub"

vpc_spoke_name="sql-vpc3-spoke"

vpc_spoke_network_name="vpc3-spoke"

gcloud network-connectivity spokes linked-vpc-network create "${vpc_spoke_name}" \

--hub="${hub_name}" \

--vpc-network="${vpc_spoke_network_name}" \

--global

Use the below command to check NCC Hub's route table for a route to the PSC subnet.

gcloud network-connectivity hubs route-tables routes list \

--route_table=default \

--hub=ncc-hub
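To script this check, the route list can be reduced to its prefixes and searched for the PSC subnet. The sketch below assumes that each route exposes its prefix in a field named ipCidrRange. Confirm the field name against the full output of the command above before relying on it. hub_has_route is a hypothetical helper.

```shell
# Hypothetical helper: return 0 when the hub's default route table
# contains a route whose prefix exactly matches the given CIDR.
# Assumption: the route resource exposes the prefix as ipCidrRange.
hub_has_route() (
  hub=$1; prefix=$2
  gcloud network-connectivity hubs route-tables routes list \
    --hub="$hub" --route_table=default \
    --format='value(ipCidrRange)' | grep -Fxq "$prefix"
)

# Usage (commented out so the sketch has no side effects):
# hub_has_route ncc-hub 192.168.0.0/24 && echo "PSC subnet is in the hub route table"
```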

6. Verify the NCC data path

In this step, we'll validate the data path from the VPC3 spoke to the PSC endpoint in VPC1.

Verify the NCC configured datapath to the Cloud SQL instance's PSC endpoint

Use the output from this gcloud command to locate the VM in VPC3.

gcloud compute instances list --filter="name=csql-vpc3-vm"

Log on to the VM instance residing in VPC3.

gcloud compute ssh csql-vpc3-vm \

--zone=us-central1-a \

--tunnel-through-iap

Use the mysql command below to connect to the Cloud SQL instance. When prompted, enter the password that was created in the step above.

mysql -h 192.168.0.253 -u root -p

The output below is displayed upon a successful login:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 8501

Server version: 8.0.31-google (Google)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]>

Use the show databases; command to list the databases that are created by default on MySQL.

MySQL [(none)]> show databases;

+--------------------+

| Database           |

+--------------------+

| information_schema |

| mysql              |

| performance_schema |

| sys                |

+--------------------+

4 rows in set (0.005 sec)

7. Clean Up

Log in to Cloud Shell and delete the GCP resources.

Delete the Cloud SQL PSC Endpoint

gcloud compute forwarding-rules delete csql-psc-ep \

--region=us-central1 \

--quiet

gcloud compute addresses delete csql-psc-ip \

--region=us-central1 \

--quiet

gcloud compute networks subnets delete csql-psc-subnet \

--region=us-central1 \

--quiet

Delete the Cloud SQL Instance

gcloud sql instances delete mysql-instance --quiet

Delete Firewall Rules

gcloud compute firewall-rules delete vpc3-allow-all --quiet

gcloud compute firewall-rules delete vpc3-allow-iap --quiet

gcloud compute firewall-rules delete vpc1-allow-all --quiet

gcloud compute firewall-rules delete vpc1-allow-iap --quiet

Delete GCE Instances in VPC1 and VPC3

vm_vpc1_spoke_name="csql-vpc1-vm"

zone="us-central1-a"

gcloud compute instances delete "${vm_vpc1_spoke_name}" \

--zone="${zone}" \

--quiet

vm_vpc_spoke_name="csql-vpc3-vm"

zone="us-central1-a"

gcloud compute instances delete "${vm_vpc_spoke_name}" \

--zone="${zone}" --quiet

Delete the NCC spokes

vpc_spoke_name="sql-vpc1-spoke"

gcloud network-connectivity spokes delete "${vpc_spoke_name}" \

--global \

--quiet

vpc_spoke_name="sql-vpc3-spoke"

gcloud network-connectivity spokes delete "${vpc_spoke_name}" \

--global \

--quiet

Delete NCC Hub

hub_name="ncc-hub"

gcloud network-connectivity hubs delete "${hub_name}" \

--project=${project}

Delete the remaining subnets in VPC1 and VPC3

The PSC subnet (csql-psc-subnet) was already deleted together with the PSC endpoint above, so only subnet1 and subnet3 remain.

vpc_spoke_subnet_name="subnet1"

region="us-central1"

gcloud compute networks subnets delete "${vpc_spoke_subnet_name}" \

--region="${region}" \

--quiet

vpc_spoke_subnet_name="subnet3"

region="us-central1"

gcloud compute networks subnets delete "${vpc_spoke_subnet_name}" \

--region="${region}" \

--quiet

Delete VPC1 and VPC3

gcloud compute networks delete vpc1-spoke vpc3-spoke
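After the last delete, a quick listing can confirm that neither lab network remains. The sketch below is one possible check; cleanup_complete is a hypothetical helper, and it inspects only the two VPC networks, not every resource created in this lab.

```shell
# Hypothetical helper: return 0 when neither lab VPC network remains.
cleanup_complete() (
  left=$(gcloud compute networks list \
    --filter='name=(vpc1-spoke vpc3-spoke)' --format='value(name)')
  if [ -n "$left" ]; then
    echo "still present: $(echo $left)" >&2
    return 1
  fi
  echo "no lab networks remain"
)

# Usage (commented out so the sketch has no side effects):
# cleanup_complete
```

A network that is still listed usually has a dependent resource, such as a subnet, firewall rule, or spoke, that has not been deleted yet.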

8. Congratulations!

You have completed the Private Service Connect propagation with Network Connectivity Center Lab!

What you covered

- Private Service Connect endpoint propagation with Network Connectivity Center

Next Steps