1. Introduction

Overview

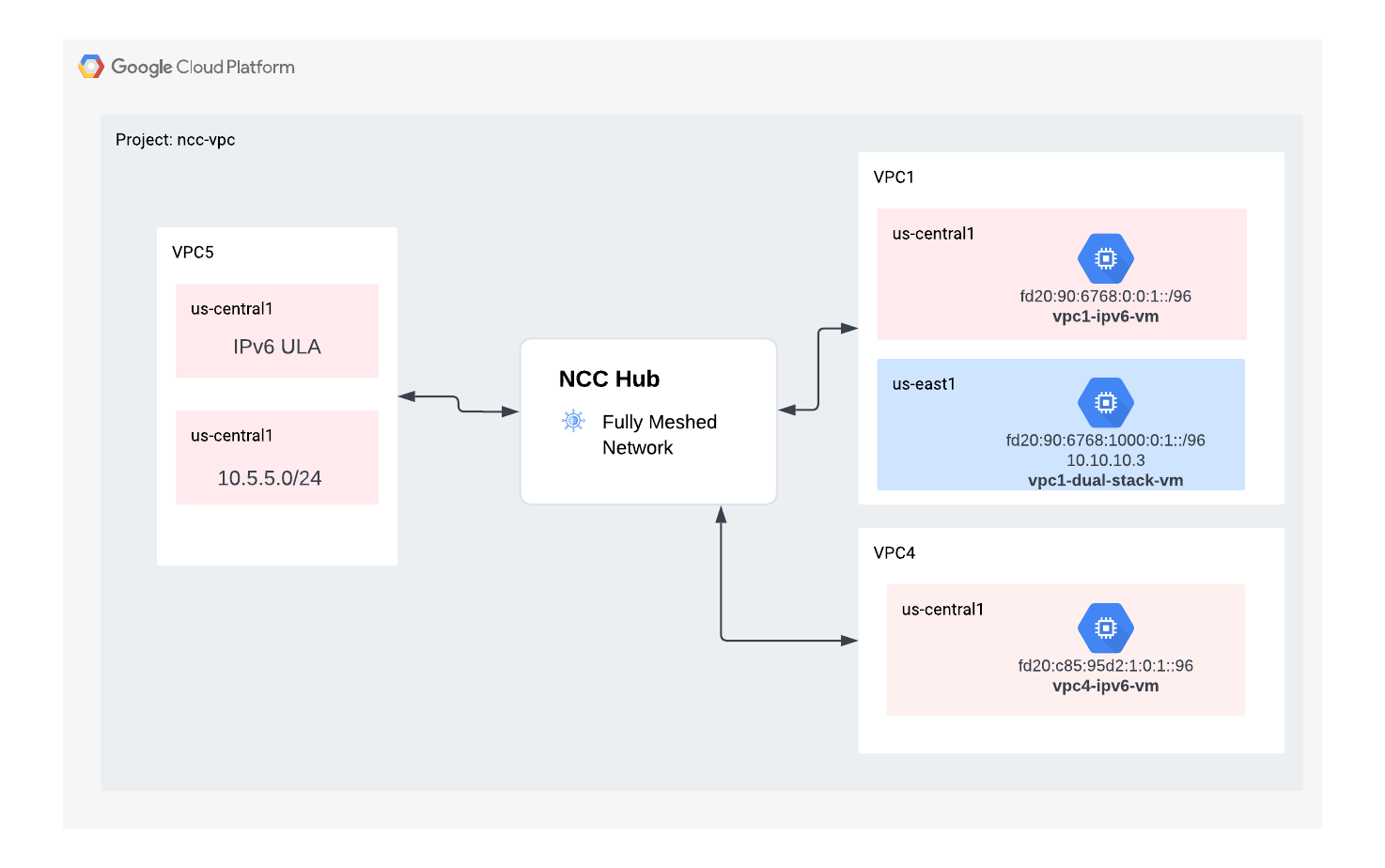

In this lab, users will explore how Network Connectivity Center (NCC) can be used to establish inter-VPC connectivity at scale through support for VPC spokes. Defining a VPC as a VPC spoke lets users connect it to multiple other VPC networks through the NCC hub. NCC with a VPC spoke configuration reduces the operational complexity of managing pairwise inter-VPC connectivity via VPC peering by using a centralized connectivity management model instead.

Recall that Network Connectivity Center (NCC) is a hub-and-spoke control plane model for network connectivity management in Google Cloud. The hub resource provides a centralized connectivity management model to interconnect spokes.
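To make the scaling argument concrete, the sketch below compares how many pairwise relationships a full mesh needs with how many spoke attachments a single hub needs. It is plain arithmetic rather than an NCC API call, and the function names are illustrative only.

```python
def full_mesh_links(vpc_count: int) -> int:
    """Pairwise links needed to connect every network to every other network."""
    return vpc_count * (vpc_count - 1) // 2


def hub_spoke_attachments(vpc_count: int) -> int:
    """Spoke attachments needed when every network joins one hub."""
    return vpc_count


# Compare both models for a few network counts.
for n in (3, 5, 10):
    print(f"{n} VPCs: {full_mesh_links(n)} pairwise links, "
          f"{hub_spoke_attachments(n)} spoke attachments")
```

The pairwise count grows quadratically while the spoke count grows linearly, which is the gap the hub-and-spoke model is meant to close.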

What you'll build

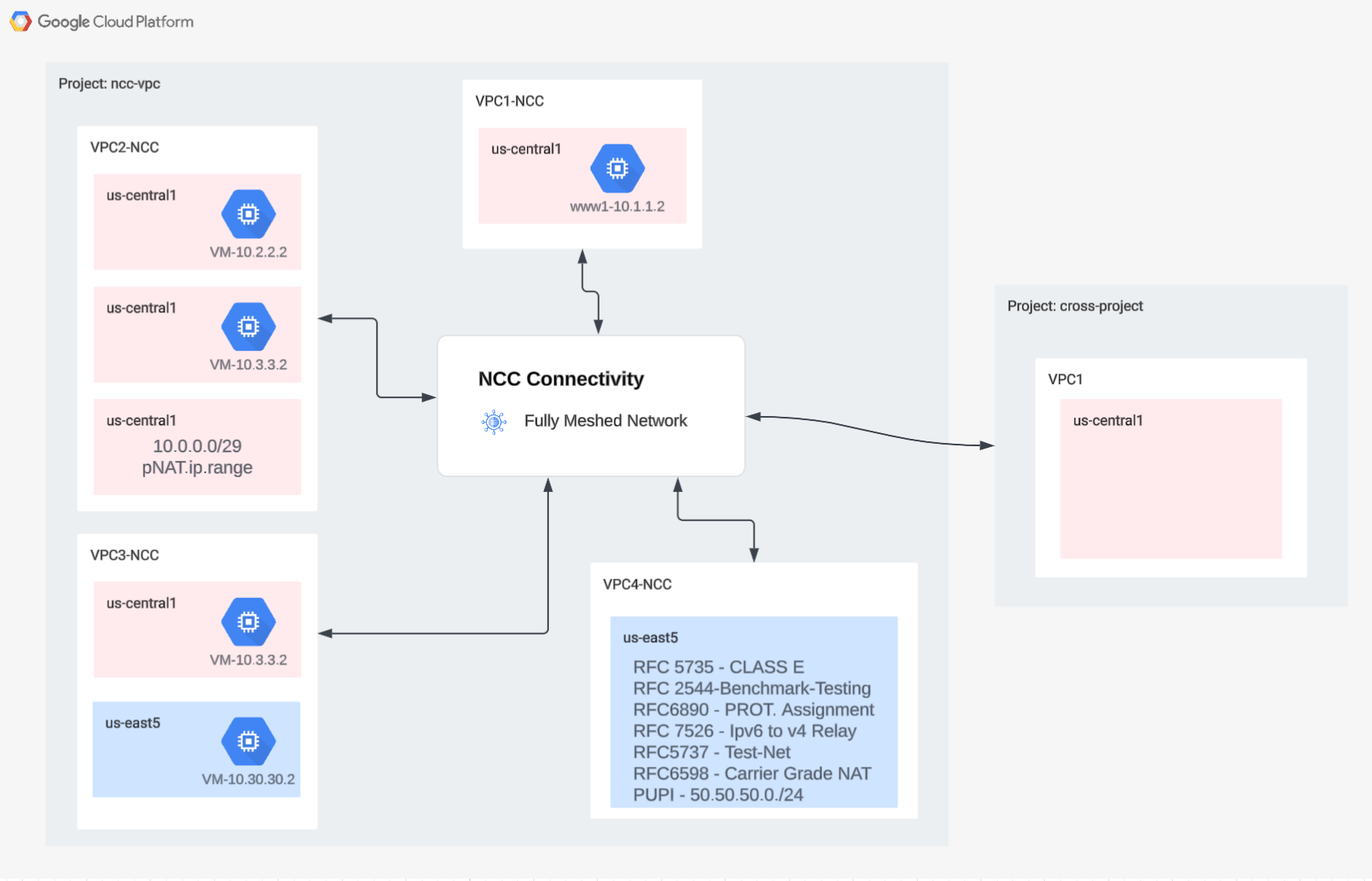

In this codelab, you'll build a logical hub and spoke topology with the NCC hub that will implement a fully meshed VPC connectivity fabric across three distinct VPCs.

What you'll learn

- Full Mesh VPC Connectivity with NCC

- Private NAT across VPC

What you'll need

- Knowledge of GCP VPC network

- Knowledge of Cloud Router and BGP routing

- Two separate GCP projects

- This codelab requires 5 VPCs. One of those VPCs must reside in a different project from the NCC hub

- Check your Networks quota and request additional networks if required

Objectives

- Set up the GCP environment

- Configure Network Connectivity Center with VPC as spoke

- Validate Data Path

- Explore NCC serviceability features

- Clean up used resources

Before you begin

Google Cloud Console and Cloud Shell

To interact with GCP, we will use both the Google Cloud Console and Cloud Shell throughout this lab.

NCC Hub Project Google Cloud Console

The Cloud Console can be reached at https://console.cloud.google.com.

Set up the following items in Google Cloud to make it easier to configure Network Connectivity Center:

In the Google Cloud Console, on the project selector page, select or create a Google Cloud project.

Launch Cloud Shell. This codelab uses $variables to simplify gcloud configuration in Cloud Shell.

gcloud auth list

gcloud config list project

gcloud config set project [HUB-PROJECT-NAME]

projectname=[HUB-PROJECT-NAME]

echo $projectname

gcloud config set compute/zone us-central1-a

gcloud config set compute/region us-central1

IAM Roles

NCC requires IAM roles to access specific APIs. Be sure to configure your user with the NCC IAM roles as required.

| Role/Description | Permissions |
|---|---|
| networkconnectivity.networkAdmin - Allows network administrators to manage hub and spokes. | networkconnectivity.hubs.*, networkconnectivity.spokes.* |
| networkconnectivity.networkSpokeManager - Allows adding and managing spokes in a hub. To be used in Shared VPC where the host-project owns the Hub, but other admins in other projects can add spokes for their attachments to the Hub. | networkconnectivity.spokes.* |
| networkconnectivity.networkUser, networkconnectivity.networkViewer - Allows network users to view different attributes of hub and spokes. | networkconnectivity.hubs.get, networkconnectivity.hubs.list, networkconnectivity.spokes.get, networkconnectivity.spokes.list, networkconnectivity.spokes.aggregatedList |

2. Setup the Network Environment

Overview

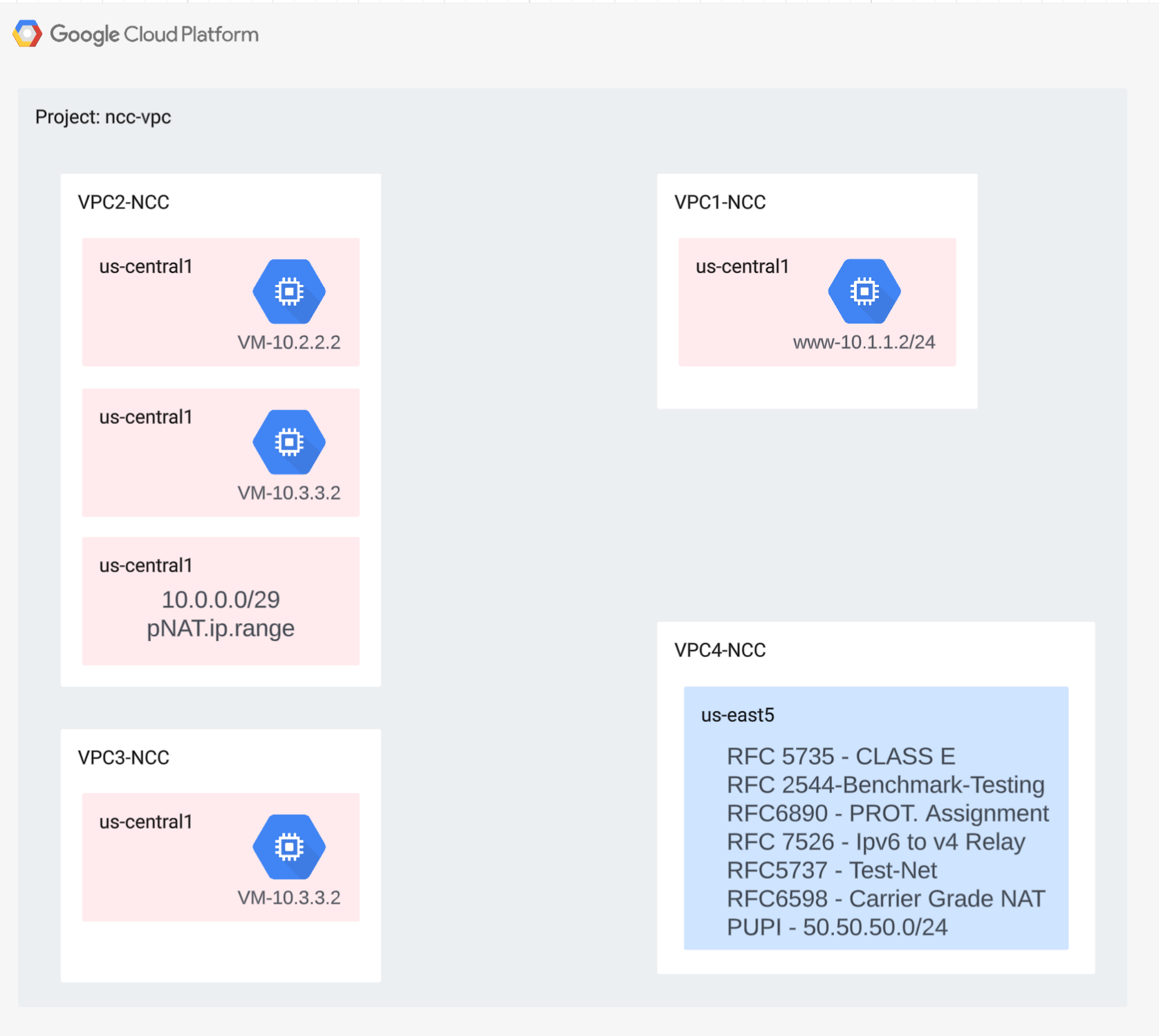

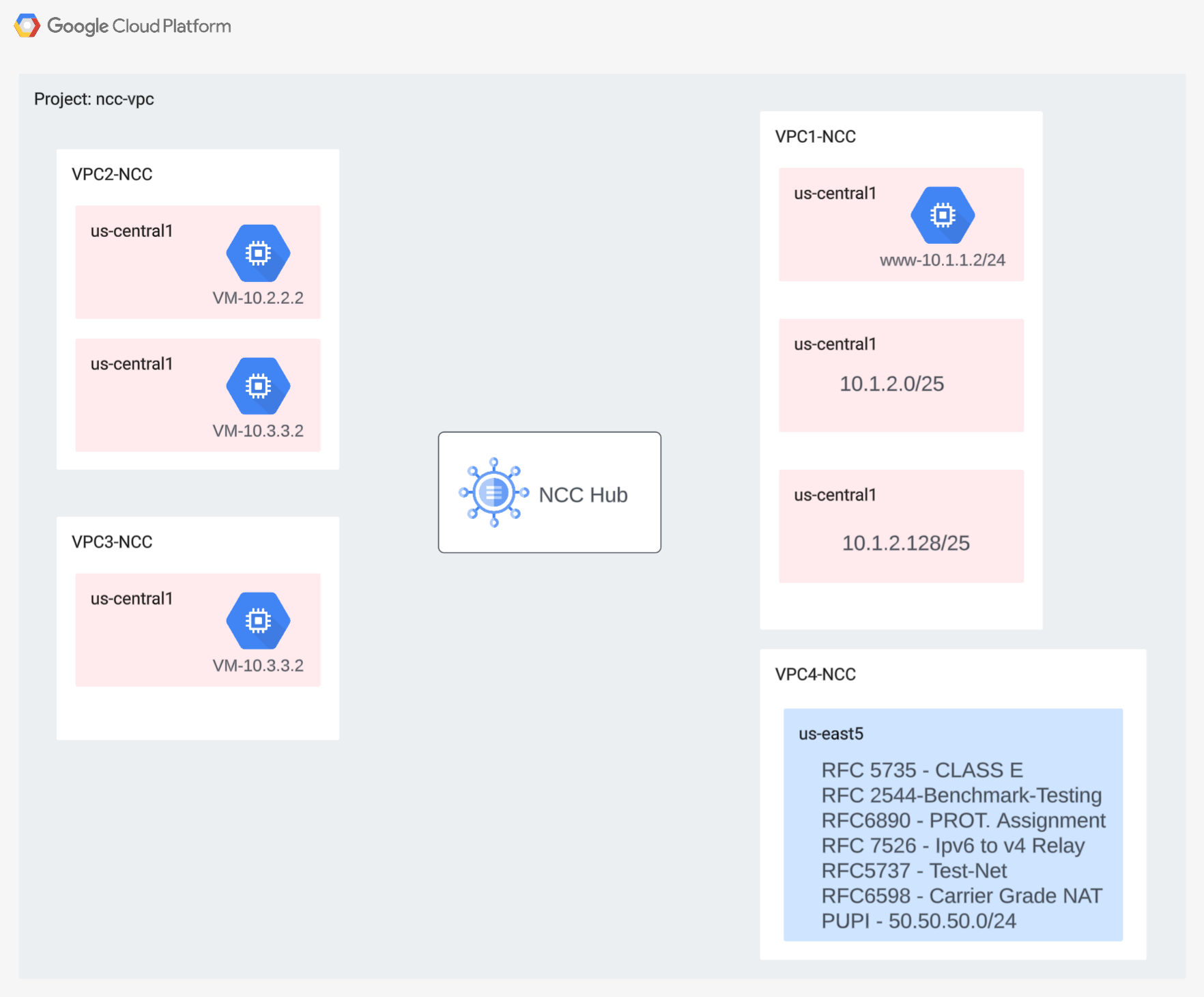

In this section, we'll deploy the VPC networks and firewall rules in a single project. The logical diagram illustrates the network environment that will be set up in this step.

To demonstrate cross project spoke support, in a later step, we'll deploy a VPC and firewall rules in a different project.

Create the VPCs and the Subnets

Each VPC network contains subnets in which you'll install GCE VMs for data path validation.

gcloud compute networks create vpc1-ncc --subnet-mode custom

gcloud compute networks create vpc2-ncc --subnet-mode custom

gcloud compute networks create vpc3-ncc --subnet-mode custom

gcloud compute networks create vpc4-ncc --subnet-mode custom

gcloud compute networks subnets create vpc1-ncc-subnet1 \

--network vpc1-ncc --range 10.1.1.0/24 --region us-central1

gcloud compute networks subnets create vpc1-ncc-subnet2 \

--network vpc1-ncc --range 10.1.2.0/25 --region us-central1

gcloud compute networks subnets create vpc1-ncc-subnet3 \

--network vpc1-ncc --range 10.1.2.128/25 --region us-central1

gcloud compute networks subnets create vpc2-ncc-subnet1 \

--network vpc2-ncc --range 10.2.2.0/24 --region us-central1

VPC Supported Subnet Ranges

NCC supports all valid IPv4 subnet ranges including privately used public IP addresses. In this step, create valid IP ranges in VPC4 that will be imported into the hub route table.

gcloud compute networks subnets create benchmark-testing-rfc2544 \

--network vpc4-ncc --range 198.18.0.0/15 --region us-east1

gcloud compute networks subnets create class-e-rfc5735 \

--network vpc4-ncc --range 240.0.0.0/4 --region us-east1

gcloud compute networks subnets create ietf-protcol-assignment-rfc6890 \

--network vpc4-ncc --range 192.0.0.0/24 --region us-east1

gcloud compute networks subnets create ipv6-4-relay-rfc7526 \

--network vpc4-ncc --range 192.88.99.0/24 --region us-east1

gcloud compute networks subnets create pupi \

--network vpc4-ncc --range 50.50.50.0/24 --region us-east1

gcloud compute networks subnets create test-net-1-rfc5737 \

--network vpc4-ncc --range 192.0.2.0/24 --region us-east1

gcloud compute networks subnets create test-net-2-rfc5737 \

--network vpc4-ncc --range 198.51.100.0/24 --region us-east1

gcloud compute networks subnets create test-net-3-rfc5737 \

--network vpc4-ncc --range 203.0.113.0/24 --region us-east1

Create Overlapping Subnet Ranges

NCC will not import overlapping IP ranges into the hub route table. Users will work around this restriction in a later step. For now, create two overlapping IP ranges for VPC2 and VPC3.

gcloud compute networks subnets create overlapping-vpc2 \

--network vpc3-ncc --range 10.3.3.0/24 --region us-central1

gcloud compute networks subnets create overlapping-vpc3 \

--network vpc2-ncc --range 10.3.3.0/24 --region us-central1

Configure VPC Firewall Rules

Configure firewall rules on each VPC to allow

- SSH

- Internal IAP

- 10.0.0.0/8 range

gcloud compute firewall-rules create ncc1-vpc-internal \

--network vpc1-ncc \

--allow all \

--source-ranges 10.0.0.0/8

gcloud compute firewall-rules create ncc2-vpc-internal \

--network vpc2-ncc \

--allow all \

--source-ranges 10.0.0.0/8

gcloud compute firewall-rules create ncc3-vpc-internal \

--network vpc3-ncc \

--allow all \

--source-ranges 10.0.0.0/8

gcloud compute firewall-rules create ncc4-vpc-internal \

--network vpc4-ncc \

--allow all \

--source-ranges 10.0.0.0/8

gcloud compute firewall-rules create ncc1-vpc-iap \

--network vpc1-ncc \

--allow all \

--source-ranges 35.235.240.0/20

gcloud compute firewall-rules create ncc2-vpc-iap \

--network vpc2-ncc \

--allow=tcp:22 \

--source-ranges 35.235.240.0/20

gcloud compute firewall-rules create ncc3-vpc-iap \

--network vpc3-ncc \

--allow=tcp:22 \

--source-ranges 35.235.240.0/20

gcloud compute firewall-rules create ncc4-vpc-iap \

--network vpc4-ncc \

--allow=tcp:22 \

--source-ranges 35.235.240.0/20
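As a quick sanity check on the source ranges above, the following sketch tests which of the two ranges a given source address falls into. It uses only Python's standard `ipaddress` module; the rule labels and the sample addresses are illustrative and are not taken from a real deployment.

```python
import ipaddress

# Source ranges copied from the firewall rules in this section.
RULES = {
    "internal": ipaddress.ip_network("10.0.0.0/8"),
    "iap": ipaddress.ip_network("35.235.240.0/20"),
}


def matching_rules(source_ip: str) -> list:
    """Return the labels of all source ranges that contain source_ip."""
    addr = ipaddress.ip_address(source_ip)
    return [name for name, net in RULES.items() if addr in net]


print(matching_rules("10.2.2.5"))      # sample address inside vpc2-ncc-subnet1
print(matching_rules("35.235.241.7"))  # sample address inside the IAP range
print(matching_rules("240.0.0.5"))     # sample address inside class-e-rfc5735
```

A source address from one of VPC4's special-purpose subnets matches neither range, so traffic originating there would presumably need its own rule if you want to test that path.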

Configure GCE VM in Each VPC

You'll need temporary internet access to install packages on "vm1-vpc1-ncc."

Create four virtual machines. Each VM will be assigned to one of the VPCs previously created.

gcloud compute instances create vm1-vpc1-ncc \

--subnet vpc1-ncc-subnet1 \

--metadata=startup-script='#!/bin/bash

apt-get update

apt-get install apache2 -y

apt-get install tcpdump -y

service apache2 restart

echo "<h3>Web Server: www-vm1</h3>" | tee /var/www/html/index.html'

gcloud compute instances create vm2-vpc2-ncc \

--zone us-central1-a \

--subnet vpc2-ncc-subnet1 \

--no-address

gcloud compute instances create pnat-vm-vpc2 \

--zone us-central1-a \

--subnet overlapping-vpc3 \

--no-address

gcloud compute instances create vm1-vpc4-ncc \

--zone us-east1-b \

--subnet class-e-rfc5735 \

--no-address

3. Network Connectivity Center Hub

Overview

In this section, we'll configure an NCC hub using gcloud commands. The NCC hub will serve as the control plane responsible for building routing configuration between each VPC spoke.

Enable API Services

Enable the network connectivity API in case it is not yet enabled:

gcloud services enable networkconnectivity.googleapis.com

Create NCC Hub

Create an NCC hub using the gcloud command:

gcloud network-connectivity hubs create ncc-hub

Example output

Create request issued for: [ncc-hub]

Waiting for operation [projects/user-3p-dev/locations/global/operations/operation-1668793629598-5edc24b7ee3ce-dd4c765b-5ca79556] to complete...done.

Created hub [ncc-hub]

Describe the newly created NCC Hub. Note the name and associated path.

gcloud network-connectivity hubs describe ncc-hub

createTime: '2023-11-02T02:28:34.890423230Z'

name: projects/user-3p-dev/locations/global/hubs/ncc-hub

routeTables:

- projects/user-3p-dev/locations/global/hubs/ncc-hub/routeTables/default

state: ACTIVE

uniqueId: de749c4c-0ef8-4888-8622-1ea2d67450f8

updateTime: '2023-11-02T02:28:48.613853463Z'

The NCC hub introduces a route table that defines the control plane for creating data connectivity. Find the name of the NCC hub's route table:

gcloud network-connectivity hubs route-tables list --hub=ncc-hub

NAME: default

HUB: ncc-hub

DESCRIPTION:

Find the URI of the NCC default route table.

gcloud network-connectivity hubs route-tables describe default --hub=ncc-hub

createTime: '2023-02-24T17:32:58.786269098Z'

name: projects/user-3p-dev/locations/global/hubs/ncc-hub/routeTables/default

state: ACTIVE

uid: eb1fdc35-2209-46f3-a8d6-ad7245bfae0b

updateTime: '2023-02-24T17:33:01.852456186Z'

List the contents of the NCC hub's default route table. Note: the NCC hub's route table will be empty until spokes are attached.

gcloud network-connectivity hubs route-tables routes list --hub=ncc-hub --route_table=default

The NCC Hub's route table should be empty.

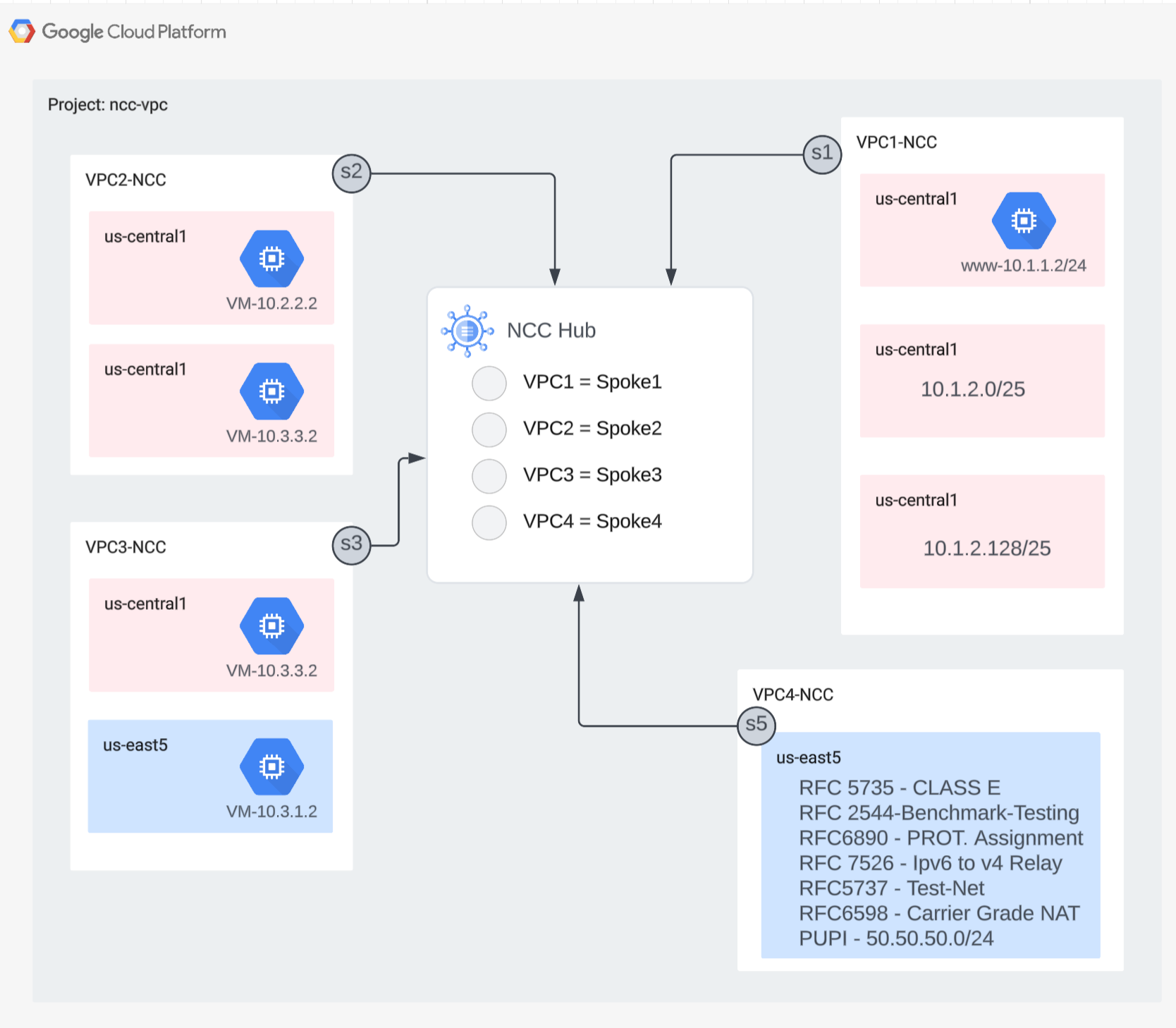

4. NCC with VPC Spokes

Overview

In this section, you'll configure four VPCs as NCC spokes using gcloud commands.

Configure VPCs as NCC Spokes

Configure the following VPCs as NCC spokes in this order:

- VPC4

- VPC1

- VPC2

- VPC3

Configure VPC4 as an NCC spoke and assign it to the NCC hub that was previously created. NCC spoke API calls require a location to be specified. The "--global" flag simplifies the gcloud syntax by allowing the user to avoid specifying a full URI path when configuring a new NCC spoke.

gcloud network-connectivity spokes linked-vpc-network create vpc4-spoke4 \

--hub=ncc-hub \

--vpc-network=vpc4-ncc \

--global

Configure VPC1 as an NCC spoke.

Administrators can exclude subnet routes from being exported from a VPC spoke into the NCC hub's route table. In this part of the codelab, create an exclude export rule based on a summary prefix to prevent VPC1's subnet from exporting into the NCC Hub route table.

Use this gcloud command to list all subnets belonging to VPC1.

gcloud config set accessibility/screen_reader false

gcloud compute networks subnets list --network=vpc1-ncc

Note the pair of /25 subnets previously created in the setup section.

NAME REGION NETWORK RANGE STACK_TYPE

vpc1-ncc-subnet1 us-central1 vpc1-ncc 10.1.1.0/24 IPV4_ONLY

vpc1-ncc-subnet2 us-central1 vpc1-ncc 10.1.2.0/25 IPV4_ONLY

vpc1-ncc-subnet3 us-central1 vpc1-ncc 10.1.2.128/25 IPV4_ONLY

Configure VPC1 as an NCC spoke and exclude the pair of /25 subnets from being imported into the hub route table. Use the "--exclude-export-ranges" flag with the /24 summary route that covers both /25 subnets.

gcloud network-connectivity spokes linked-vpc-network create vpc1-spoke1 \

--hub=ncc-hub \

--vpc-network=vpc1-ncc \

--exclude-export-ranges=10.1.2.0/24 \

--global

Note* Users can filter up to 16 unique IP ranges per NCC spoke.

List the contents of the NCC Hub's default routing table. What happened to the pair of /25 subnets on NCC Hub's routing table?

gcloud network-connectivity hubs route-tables routes list --hub=ncc-hub --route_table=default --filter="NEXT_HOP:vpc1-ncc"

IP_CIDR_RANGE STATE TYPE NEXT_HOP HUB ROUTE_TABLE

10.1.1.0/24 ACTIVE VPC_PRIMARY_SUBNET vpc1-ncc ncc-hub default
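The result above can be reproduced offline. The sketch below checks that the two /25 subnets collapse into the 10.1.2.0/24 summary, then applies a simple model of the exclude filter. The model assumes a subnet is dropped when it is fully contained in an excluded range, which matches the output shown here but is not taken from the NCC implementation.

```python
import ipaddress

# Subnets of vpc1-ncc as listed by the gcloud command above.
vpc1_subnets = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.1.1.0/24", "10.1.2.0/25", "10.1.2.128/25")
]
exclude_ranges = [ipaddress.ip_network("10.1.2.0/24")]


def exported(subnets, excludes):
    """Keep subnets not contained in any excluded range (assumed semantics)."""
    return [s for s in subnets if not any(s.subnet_of(x) for x in excludes)]


# The two adjacent /25 subnets summarize to a single /24.
summary = list(ipaddress.collapse_addresses(vpc1_subnets[1:]))
print([str(n) for n in summary])

# Only the subnet outside the excluded summary remains exportable.
print([str(n) for n in exported(vpc1_subnets, exclude_ranges)])
```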

Configure VPC2 as an NCC spoke

gcloud network-connectivity spokes linked-vpc-network create vpc2-spoke2 \

--hub=ncc-hub \

--vpc-network=vpc2-ncc \

--global

Configure VPC3 as an NCC spoke and assign it to the NCC hub that was previously created.

gcloud network-connectivity spokes linked-vpc-network create vpc3-spoke3 \

--hub=ncc-hub \

--vpc-network=vpc3-ncc \

--global

What happened?

ERROR: (gcloud.network-connectivity.spokes.linked-vpc-network.create) Invalid resource state for "https://www.googleapis.com/compute/v1/projects/xxxxxxxx/global/networks/vpc3-ncc": 10.3.3.0/24 (SUBNETWORK) overlaps with 10.3.3.0/24 (SUBNETWORK) from "projects/user-3p-dev/global/networks/vpc2-ncc" (peer)

The NCC hub detected an IP range that overlaps with VPC2. Recall that VPC2 and VPC3 were both set up with the same 10.3.3.0/24 IP subnet.
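The same kind of overlap can be spotted before attaching a spoke. The following sketch compares the subnet ranges of the two VPCs with Python's standard `ipaddress` module. The dictionaries simply restate the subnets created earlier in this codelab, and `find_overlaps` is an illustrative helper, not part of gcloud or NCC.

```python
import ipaddress
from itertools import product

# Subnet name -> range, as created in the setup section.
vpc2 = {"vpc2-ncc-subnet1": "10.2.2.0/24", "overlapping-vpc3": "10.3.3.0/24"}
vpc3 = {"overlapping-vpc2": "10.3.3.0/24"}


def find_overlaps(a: dict, b: dict) -> list:
    """Return (name_a, name_b) pairs whose ranges share at least one address."""
    hits = []
    for (name_a, cidr_a), (name_b, cidr_b) in product(a.items(), b.items()):
        if ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b)):
            hits.append((name_a, name_b))
    return hits


print(find_overlaps(vpc2, vpc3))
```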

Filtering Overlapping IP ranges with Exclude Export

Use the gcloud command to update the VPC2 spoke to exclude the overlapping IP range.

gcloud network-connectivity spokes linked-vpc-network update vpc2-spoke2 \

--hub=ncc-hub \

--vpc-network=vpc2-ncc \

--exclude-export-ranges=10.3.3.0/24 \

--global

Because the earlier attempt to create the VPC3 spoke failed, use the gcloud command to create the NCC spoke for VPC3 again, this time excluding the overlapping subnet range.

gcloud network-connectivity spokes linked-vpc-network create vpc3-spoke3 \

--hub=ncc-hub \

--vpc-network=vpc3-ncc \

--exclude-export-ranges=10.3.3.0/24 \

--global

List the contents of the NCC Hub's default routing table and examine the output.

gcloud network-connectivity hubs route-tables routes list --hub=ncc-hub --route_table=default

The overlapping IP ranges from VPC2 and VPC3 are excluded. The NCC hub route table supports all valid IPv4 range types.

Privately used public IP address (PUPI) subnet ranges are NOT exported from the VPC spoke to the NCC hub route table by default.

Use the gcloud command to confirm the network 50.50.50.0/24 is NOT in the NCC Hub's routing table.

gcloud network-connectivity hubs route-tables routes list --hub=ncc-hub --route_table=default --filter="NEXT_HOP:vpc4-ncc"

Use the gcloud command below to update VPC4-Spoke to enable PUPI address exchange across NCC VPCs.

gcloud network-connectivity spokes linked-vpc-network update vpc4-spoke4 \

--include-export-ranges=ALL_IPV4_RANGES \

--global

Use the gcloud command to find the network 50.50.50.0/24 in the NCC Hub's routing table.

gcloud network-connectivity hubs route-tables routes list --hub=ncc-hub --route_table=default | grep 50.50.50.0/24
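To see why 50.50.50.0/24 is the odd one out among VPC4's subnets, the sketch below tests each range against RFC 1918 and the special-purpose blocks that the other subnets are named after. Treating everything outside those blocks as PUPI is an assumption made for illustration; it is not an authoritative list of what NCC exports by default.

```python
import ipaddress

# RFC 1918 plus the special-purpose blocks named in the VPC4 subnet names.
NON_PUPI_BLOCKS = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16",       # RFC 1918
        "198.18.0.0/15",                                       # RFC 2544
        "240.0.0.0/4",                                         # Class E
        "192.0.0.0/24",                                        # RFC 6890
        "192.88.99.0/24",                                      # RFC 7526
        "192.0.2.0/24", "198.51.100.0/24", "203.0.113.0/24",   # RFC 5737
    )
]

# Subnets created in vpc4-ncc earlier in this codelab.
VPC4_SUBNETS = {
    "benchmark-testing-rfc2544": "198.18.0.0/15",
    "class-e-rfc5735": "240.0.0.0/4",
    "ietf-protcol-assignment-rfc6890": "192.0.0.0/24",
    "ipv6-4-relay-rfc7526": "192.88.99.0/24",
    "pupi": "50.50.50.0/24",
    "test-net-1-rfc5737": "192.0.2.0/24",
    "test-net-2-rfc5737": "198.51.100.0/24",
    "test-net-3-rfc5737": "203.0.113.0/24",
}


def is_pupi(cidr: str) -> bool:
    """True when the range sits outside every block listed above (assumed rule)."""
    net = ipaddress.ip_network(cidr)
    return not any(net.subnet_of(block) for block in NON_PUPI_BLOCKS)


pupi_subnets = [name for name, cidr in VPC4_SUBNETS.items() if is_pupi(cidr)]
print(pupi_subnets)
```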

5. NCC with Cross Project Spokes

Overview

So far, you've configured NCC spokes that belong to the same project as the hub. In this section, you'll use gcloud commands to configure a VPC in a separate project as an NCC spoke.

This allows project owners who manage their own VPCs to participate in network connectivity with the NCC hub.

Cross Project: Google Cloud Console and Cloud Shell

To interact with GCP, we will use both the Google Cloud Console and Cloud Shell throughout this lab.

Cross Project Spoke Google Cloud Console

The Cloud Console can be reached at https://console.cloud.google.com.

Set up the following items in Google Cloud to make it easier to configure Network Connectivity Center:

In the Google Cloud Console, on the project selector page, select or create a Google Cloud project.

Launch Cloud Shell. This codelab uses $variables to simplify gcloud configuration in Cloud Shell.

gcloud auth list

gcloud config list project

gcloud config set project [YOUR-CROSSPROJECT-NAME]

xprojname=[YOUR-CROSSPROJECT-NAME]

echo $xprojname

gcloud config set compute/zone us-central1-a

gcloud config set compute/region us-central1

IAM Roles

NCC requires IAM roles to access specific APIs. Be sure to configure your user with the NCC IAM roles as required.

At a minimum, the cross project spoke administrator must be granted the IAM role "networkconnectivity.networkSpokeManager".

The table below lists the IAM role required for NCC Hub and Spoke admin for reference.

| Role/Description | Permissions |
|---|---|
| networkconnectivity.networkAdmin - Allows network administrators to manage hub and spokes. | networkconnectivity.hubs.*, networkconnectivity.spokes.* |
| networkconnectivity.networkSpokeManager - Allows adding and managing spokes in a hub. To be used in Shared VPC where the host-project owns the Hub, but other admins in other projects can add spokes for their attachments to the Hub. | networkconnectivity.spokes.* |
| networkconnectivity.networkUser, networkconnectivity.networkViewer - Allows network users to view different attributes of hub and spokes. | networkconnectivity.hubs.get, networkconnectivity.hubs.list, networkconnectivity.spokes.get, networkconnectivity.spokes.list, networkconnectivity.spokes.aggregatedList |

Create the VPCs and the Subnets in the Cross Project

The VPC network contains subnets in which you'll install GCE VMs for data path validation.

gcloud compute networks create xproject-vpc \

--subnet-mode custom

gcloud compute networks subnets create xprj-net-1 \

--network xproject-vpc \

--range 10.100.1.0/24 \

--region us-central1

gcloud compute networks subnets create xprj-net-2 \

--network xproject-vpc \

--range 10.100.2.0/24 \

--region us-central1

NCC Hub Project URI

Use this gcloud command to find the NCC Hub URI. You'll need the URI path to configure the cross project NCC spoke in the next step.

gcloud network-connectivity hubs describe ncc-hub

Cross Project Spoke VPC

Log in to the other project, where the VPC is NOT part of the NCC hub project. In Cloud Shell, use this command to configure a VPC as an NCC spoke.

- HUB_URI should be the URI of a hub in a different project.

- VPC_URI should be in the same project as the spoke

- VPC-network specifies the VPC in this cross project that will join the NCC hub in another project

gcloud network-connectivity spokes linked-vpc-network create xproj-spoke \

--hub=projects/[YOUR-PROJECT-NAME]/locations/global/hubs/ncc-hub \

--global \

--vpc-network=xproject-vpc

Create request issued for: [xproj-spoke]

Waiting for operation [projects/xproject/locations/global/operations/operation-1689790411247-600dafd351158-2b862329-19b747f1] to complete...done.

Created spoke [xproj-spoke].

createTime: '2023-07-19T18:13:31.388500663Z'

hub: projects/[YOUR-PROJECT-NAME]/locations/global/hubs/ncc-hub

linkedVpcNetwork:

uri: https://www.googleapis.com/compute/v1/projects/xproject/global/networks/xproject-vpc

name: projects/xproject/locations/global/spokes/xproj-spoke

reasons:

- code: PENDING_REVIEW

message: Spoke is Pending Review

spokeType: VPC_NETWORK

state: INACTIVE

uniqueId: 46b4d091-89e2-4760-a15d-c244dcb7ad69

updateTime: '2023-07-19T18:13:38.652800902Z'

What is the state of the cross project NCC spoke? Why?

6. Rejecting or Accepting a Cross Project Spoke

Overview

NCC hub admins must explicitly accept a cross project spoke before it can join the hub. This prevents project owners from attaching rogue NCC spokes to the NCC global routing table. Once a spoke has been accepted or rejected, it can subsequently be rejected or accepted as many times as desired by running the commands below.

Return to the project where the NCC hub is located by logging into cloud shell.

Identify the Cross Project Spokes to Review

gcloud network-connectivity hubs list-spokes ncc-hub \

--filter="reason:PENDING_REVIEW"

Accepting a spoke

gcloud network-connectivity hubs accept-spoke ncc-hub --spoke=xproj-spoke

Optional: Rejecting a spoke

gcloud network-connectivity hubs reject-spoke ncc-hub --spoke=xproj-spoke \

--details="some reason to reject"

Listing the Active Spokes on Hub

gcloud network-connectivity hubs list-spokes ncc-hub \

--filter="state:ACTIVE"

NAME PROJECT LOCATION TYPE STATE STATE REASON

xproj-spoke xproj global VPC_NETWORK ACTIVE

vpc4-spoke4 user-3p-dev global VPC_NETWORK ACTIVE

vpc1-spoke1 user-3p-dev global VPC_NETWORK ACTIVE

vpc2-spoke2 user-3p-dev global VPC_NETWORK ACTIVE

vpc3-spoke3 user-3p-dev global VPC_NETWORK ACTIVE

List subnet routes on the Hub

From the output, can you see the subnet routes from the cross project VPC spoke?

gcloud network-connectivity hubs route-tables routes list \

--route_table=default \

--hub=ncc-hub \

--filter="NEXT_HOP:xproject-vpc"

IP_CIDR_RANGE STATE TYPE NEXT_HOP HUB ROUTE_TABLE

10.100.1.0/24 ACTIVE VPC_PRIMARY_SUBNET xprj-vpc ncc-hub default

Update Cross Project Spoke VPC with Include-Export filter

Log in to the project where the VPC is NOT part of the NCC hub project. In Cloud Shell, use this command to update the cross project VPC spoke.

- HUB_URI should be the URI of a hub in a different project.

- VPC_URI should be in the same project as the spoke

- VPC-network specifies the VPC in this cross project will join the NCC Hub in another project

- Import only the subnet range 10.100.2.0/24 into the NCC Hub Route Table

- Note the "ETAG" value from the output. This value is generated by NCC and you'll need to provide this value to the NCC hub administrator. The NCC hub admin will need to reference this value when accepting the cross project spoke's request to join the hub.

gcloud network-connectivity spokes linked-vpc-network update xproj-spoke \

--hub=projects/[YOUR-PROJECT-NAME]/locations/global/hubs/ncc-hub \

--global \

--include-export-ranges=10.100.2.0/24

Update request issued for: [xprj-vpc]

Waiting for operation [projects/xproject/locations/global/operations/operation-1742936388803-6313100521cae-020ac5d2-5852fbba] to complete...done.

Updated spoke [xprj-vpc].

createTime: '2025-02-14T14:25:41.996129250Z'

etag: '4'

fieldPathsPendingUpdate:

- linked_vpc_network.include_export_ranges

group: projects/xxxxxxxx/locations/global/hubs/ncc-hub/groups/default

hub: projects/xxxxxxxx/locations/global/hubs/ncc-hub

linkedVpcNetwork:

includeExportRanges:

- 10.100.2.0/24

uri: https://www.googleapis.com/compute/v1/projects/xproject/global/networks/vpc1-spoke

name: projects/xproject/locations/global/spokes/xprj-vpc

reasons:

- code: UPDATE_PENDING_REVIEW

message: Spoke update is Pending Review

spokeType: VPC_NETWORK

state: ACTIVE

uniqueId: 182e0f8f-91cf-481c-a081-ea6f7e40fb0a

updateTime: '2025-03-25T20:59:51.995734879Z'

Identify the Updated Cross Project Spokes to Review

Log in to the project that hosts the NCC hub. In Cloud Shell, use this command to check the status of the cross project VPC spoke update.

- What is the ETAG value? This value should match the output from the vpc spoke update.

gcloud network-connectivity hubs list-spokes ncc-hub \

--filter="reasons:UPDATE_PENDING_REVIEW" \

--format=yaml

Accept the updated changes from the cross project spoke

Use this command to accept the cross project spoke's pending update.

gcloud network-connectivity hubs accept-spoke-update ncc-hub \

--spoke=https://www.googleapis.com/networkconnectivity/v1/projects/xproject/locations/global/spokes/xproj-spoke \

--spoke-etag={etag value}
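The role of the etag can be pictured as a version check: the hub admin approves one specific revision of the spoke, and an approval that quotes an outdated etag should not apply. The toy model below illustrates that idea only. `SpokeModel` and its methods are hypothetical names, and the behavior is an assumption about the intent of "--spoke-etag", not a description of the NCC service.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SpokeModel:
    """Hypothetical stand-in for a spoke; not an NCC client object."""
    etag: int = 1
    active_ranges: list = field(default_factory=list)
    pending_ranges: Optional[list] = None

    def propose_update(self, ranges: list) -> str:
        """Spoke admin proposes new include-export ranges; returns the new etag."""
        self.pending_ranges = list(ranges)
        self.etag += 1
        return str(self.etag)

    def accept_update(self, etag: str) -> bool:
        """Hub admin accepts the update only if the etag matches the latest one."""
        if self.pending_ranges is None or etag != str(self.etag):
            return False
        self.active_ranges, self.pending_ranges = self.pending_ranges, None
        return True


spoke = SpokeModel()
stale_etag = spoke.propose_update(["10.100.1.0/24"])
fresh_etag = spoke.propose_update(["10.100.2.0/24"])

print(spoke.accept_update(stale_etag))  # approval refers to an older revision
print(spoke.accept_update(fresh_etag))  # approval refers to the current revision
print(spoke.active_ranges)
```

In this model, an approval only takes effect when it names the revision that is currently pending, which is why the spoke administrator needs to pass the etag along to the hub administrator.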

Optionally, reject the updated changes from the cross project spoke

Use this command to reject the cross project spoke's pending update.

gcloud network-connectivity hubs reject-spoke-update ncc-hub \

--spoke=https://www.googleapis.com/networkconnectivity/v1/projects/xproject/locations/global/spokes/xproj-spoke \

--details="not today" \

--spoke-etag={etag value}

Verify the cross project spoke has joined the NCC hub

gcloud network-connectivity hubs list-spokes ncc-hub --filter="name:xproj-spoke"

7. Private NAT Between VPC(s)

Overview

In this section, you'll configure private NAT for overlapping subnet ranges between two VPCs. Note that private NAT between VPC(s) requires NCC.

In a previous section, VPC2 and VPC3 were configured with an overlapping subnet range of "10.3.3.0/24". Both VPCs are configured as NCC spokes that exclude the overlapping subnet from the NCC hub route table, which means there is no layer 3 data path to reach hosts that reside on that subnet.

Use these commands in the NCC hub project to find the overlapping subnet range(s).

gcloud compute networks subnets list --network vpc2-ncc

gcloud compute networks subnets list --network vpc3-ncc

On vpc2-ncc, what is the subnet name that contains the overlapping IP Range?

*Note and save the subnet name somewhere. You'll configure source NAT for this range.

Configure Private NAT

Dedicate a routable subnet range to source NAT traffic from VPC2's overlapping subnet by creating a non-overlapping subnet range with the "--purpose=PRIVATE_NAT" flag.

gcloud beta compute networks subnets create ncc2-spoke-nat \

--network=vpc2-ncc \

--region=us-central1 \

--range=10.10.10.0/29 \

--purpose=PRIVATE_NAT

Create a dedicated cloud router to perform private NAT

gcloud compute routers create private-nat-cr \

--network vpc2-ncc \

--region us-central1

Configure cloud router to source NAT the overlapping range of 10.3.3.0/24 from vpc2-ncc. In the example configuration below, "overlapping-vpc3" is the name of the overlapping subnet. The "ALL" keyword specifies that all IP ranges in the subnet will be source NAT'd.

gcloud beta compute routers nats create ncc2-nat \

--router=private-nat-cr \

--type=PRIVATE \

--nat-custom-subnet-ip-ranges=overlapping-vpc3:ALL \

--router-region=us-central1

The previous steps created a pool of NAT IP ranges and the specific subnet that will be translated. In this step, create NAT Rule "1" that translates network packets matching traffic sourced from the overlapping subnet range if the destination network takes a path from the NCC hub routing table.

gcloud beta compute routers nats rules create 1 \

--router=private-nat-cr \

--region=us-central1 \

--match="nexthop.hub == \"//networkconnectivity.googleapis.com/projects/$projectname/locations/global/hubs/ncc-hub\"" \

--source-nat-active-ranges=ncc2-spoke-nat \

--nat=ncc2-nat

Verify the Data Path for Private NAT

gcloud beta compute routers nats describe ncc2-nat --router=private-nat-cr

Example output

enableDynamicPortAllocation: true

enableEndpointIndependentMapping: false

endpointTypes:

- ENDPOINT_TYPE_VM

name: ncc2-nat

rules:

- action:

sourceNatActiveRanges:

- https://www.googleapis.com/compute/beta/projects/xxxxxxxx/regions/us-central1/subnetworks/ncc2-spoke-nat

match: nexthop.hub == "//networkconnectivity.googleapis.com/projects/xxxxxxxx/locations/global/hubs/ncc-hub"

ruleNumber: 1

sourceSubnetworkIpRangesToNat: LIST_OF_SUBNETWORKS

subnetworks:

- name: https://www.googleapis.com/compute/beta/projects/xxxxxxxx/regions/us-central1/subnetworks/overlapping-vpc3

sourceIpRangesToNat:

- ALL_IP_RANGES

type: PRIVATE

Optionally,

- Switch to the web console

- navigate to "Network Services > Cloud NAT > ncc2-nat"

Verify that dynamic port allocation is enabled by default.

Next, you'll verify the data path that uses the private NAT path configured for VPC2.

Open an SSH session to "vm1-vpc1-ncc" and use the tcpdump command below to capture packets sourced from the NAT pool range "10.10.10.0/29".

vm1-vpc1-ncc

sudo tcpdump -i any net 10.10.10.0/29 -n

At the time of writing this codelab, private NAT does not support ICMP packets. Open an SSH session to "pnat-vm-vpc2" and use the curl command shown below to connect to "vm1-vpc1-ncc" on TCP port 80.

pnat-vm-vpc2

curl 10.1.1.2 -v

Examine tcpdump's output on "vm1-vpc1-ncc". What is the source IP address that originated the TCP session to our web server on "vm1-vpc1-ncc"?

tcpdump: data link type LINUX_SLL2

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

19:05:27.504761 ens4 In IP 10.10.10.2.1024 > 10.1.1.2:80: Flags [S], seq 2386228656, win 65320, options [mss 1420,sackOK,TS val 3955849029 ecr 0,nop,wscale 7], length 0

19:05:27.504805 ens4 Out IP 10.1.1.2:80 > 10.10.10.2.1024: Flags [S.], seq 48316785, ack 2386228657, win 64768, options [mss 1420,sackOK,TS val 1815983704 ecr 3955849029,nop,wscale 7], length 0

<output snipped>
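The capture can be checked against the ranges configured in this section. The sketch below confirms that the observed source address belongs to the private NAT pool rather than to the overlapping subnet, and shows how many addresses a /29 range contains. It uses only Python's standard `ipaddress` module, with values copied from this codelab.

```python
import ipaddress

nat_pool = ipaddress.ip_network("10.10.10.0/29")      # ncc2-spoke-nat
overlapping = ipaddress.ip_network("10.3.3.0/24")     # overlapping-vpc3
observed_source = ipaddress.ip_address("10.10.10.2")  # source seen in tcpdump

# Total number of addresses in the /29 range.
print(nat_pool.num_addresses)

# The web server sees a NAT pool address, not an address from 10.3.3.0/24.
print(observed_source in nat_pool)
print(observed_source in overlapping)
```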

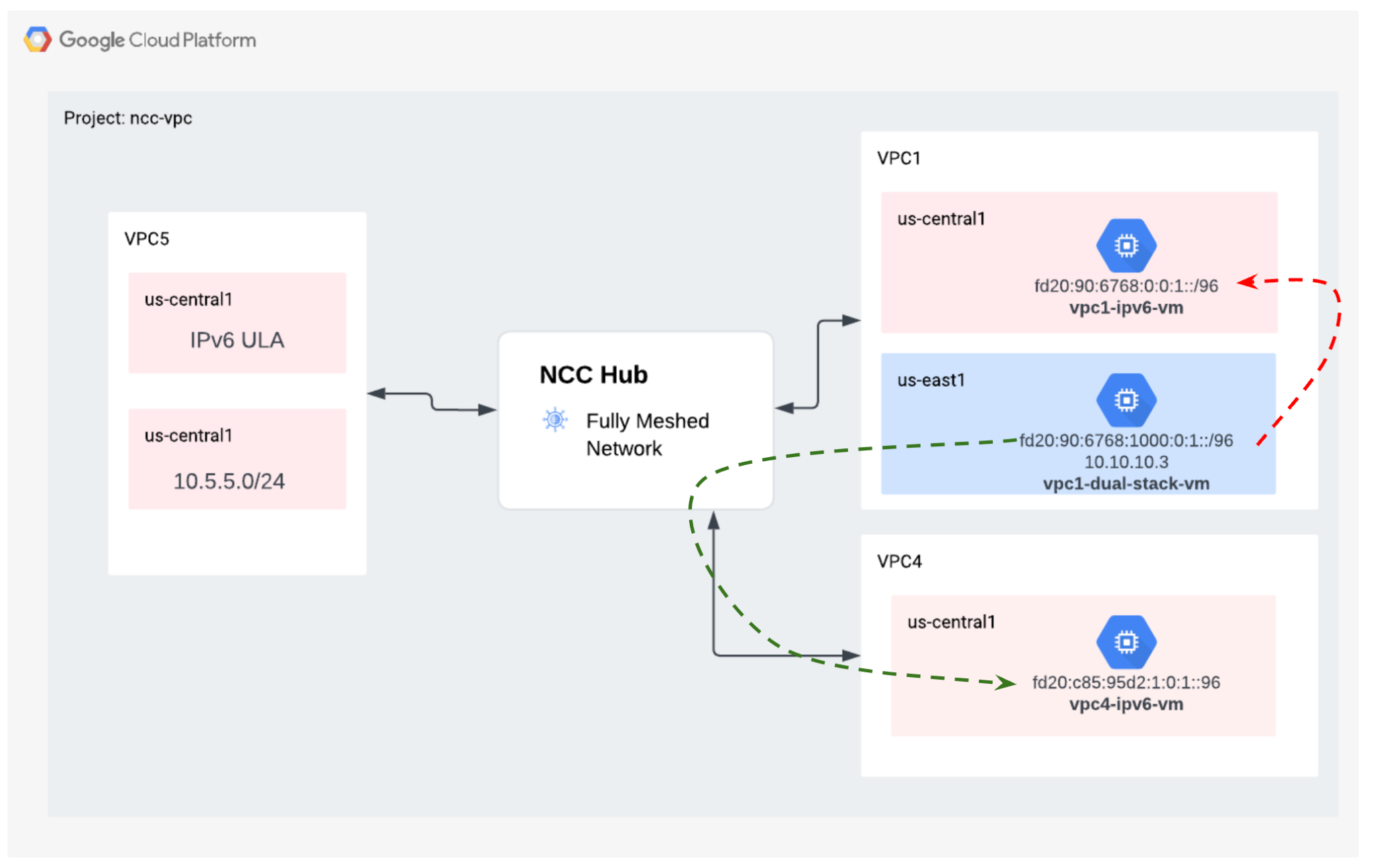

8. NCC Support for IPv6 Subnets

Network Connectivity Center supports IPv6 subnet exchange and dynamic route exchange between NCC VPC spokes and hybrid spokes. In this section, configure NCC to support IPv6-only and dual-stack (IPv4 and IPv6) subnet route exchange modes.

Create a new VPC for IPv6 that will join NCC-Hub as a VPC spoke. GCP will automatically assign all ULA addresses from the fd20::/20 range.

gcloud compute networks create vpc5-ncc \

--subnet-mode custom \

--enable-ula-internal-ipv6

gcloud compute networks subnets create vpc5-ext-ipv6 \

--network=vpc5-ncc \

--stack-type=IPV6_ONLY \

--ipv6-access-type=EXTERNAL \

--region=us-central1

gcloud compute networks subnets create vpc5-ipv4-subnet1 \

--network vpc5-ncc \

--range 10.5.5.0/24 \

--region us-central1

Use this command to configure VPC5 as an NCC spoke and exclude the IPv4 subnet route from being exported into the hub route table. Export the IPv6 ULA network into the NCC hub route table.

gcloud network-connectivity spokes linked-vpc-network create vpc5-spoke5 \

--hub=ncc-hub \

--vpc-network=vpc5-ncc \

--exclude-export-ranges=10.5.5.0/24 \

--global

Enable VPC1 and VPC4 for private IPv6 Unique Local Addresses (ULAs). GCP will automatically assign all ULA addresses from the fd20::/20 range.

gcloud compute networks update vpc4-ncc \

--enable-ula-internal-ipv6

gcloud compute networks update vpc1-ncc \

--enable-ula-internal-ipv6

Create an IPv6-only subnet and a dual-stack (IPv4 and IPv6) subnet in VPC1

gcloud compute networks subnets create vpc1-ipv6-sn1 \

--network=vpc1-ncc \

--stack-type=IPV6_ONLY \

--ipv6-access-type=INTERNAL \

--region=us-central1

gcloud compute networks subnets create vpc1-ipv64-sn2 \

--network=vpc1-ncc \

--range=10.10.10.0/24 \

--stack-type=IPV4_IPV6 \

--ipv6-access-type=INTERNAL \

--region=us-east1

Create an IPv6-only subnet and a dual-stack (IPv4 and IPv6) subnet in VPC4

gcloud compute networks subnets create vpc4-ipv6-sn1 \

--network=vpc4-ncc \

--stack-type=IPV6_ONLY \

--ipv6-access-type=INTERNAL \

--region=us-central1

gcloud compute networks subnets create vpc4-ipv64-sn2 \

--network=vpc4-ncc \

--range=10.40.40.0/24 \

--stack-type=IPV4_IPV6 \

--ipv6-access-type=INTERNAL \

--region=us-east1

On VPC1, create an IPv6 VPC firewall rule to permit traffic sourced from IPv6 ULA range.

gcloud compute firewall-rules create allow-icmpv6-ula-ncc1 \

--network=vpc1-ncc \

--action=allow \

--direction=ingress \

--rules=all \

--source-ranges=fd20::/20

On VPC4, create an IPv6 VPC firewall rule to permit traffic sourced from IPv6 ULA range.

gcloud compute firewall-rules create allow-icmpv6-ula-ncc4 \

--network=vpc4-ncc \

--action=allow \

--direction=ingress \

--rules=all \

--source-ranges=fd20::/20

Create three GCE IPv6 instances to verify data path connectivity in the next section. Note: "vpc1-dual-stack-vm" will be used as a bastion host to gain out-of-band access to the IPv6-only GCE VMs.

gcloud compute instances create vpc4-ipv6-vm \

--zone us-central1-a \

--subnet=vpc4-ipv6-sn1 \

--stack-type=IPV6_ONLY

gcloud compute instances create vpc1-ipv6-vm \

--zone us-central1-a \

--subnet=vpc1-ipv6-sn1 \

--stack-type=IPV6_ONLY

gcloud compute instances create vpc1-dual-stack-vm \

--zone us-east1-b \

--network=vpc1-ncc \

--subnet=vpc1-ipv64-sn2 \

--stack-type=IPV4_IPV6

Check NCC Hub for IPv6 subnets

Check the NCC hub route table for IPv6 ULA subnets.

gcloud network-connectivity hubs route-tables routes list --route_table=default \

--hub=ncc-hub \

--filter="IP_CIDR_RANGE:fd20"

Note that the output of the command above does not list any IPv6 subnets. By default, IPv6 subnets from VPC spokes are not exported to the NCC hub route table.

Listed 0 items.

Use the gcloud commands below to update the VPC1, VPC4, and VPC5 spokes to export IPv6 subnets into the NCC hub route table.

gcloud network-connectivity spokes linked-vpc-network update vpc1-spoke1 \

--global \

--include-export-ranges=ALL_IPV6_RANGES

gcloud network-connectivity spokes linked-vpc-network update vpc4-spoke4 \

--global \

--include-export-ranges=ALL_IPV6_RANGES

gcloud network-connectivity spokes linked-vpc-network update vpc5-spoke5 \

--global \

--include-export-ranges=ALL_IPV6_RANGES

Once again, check the NCC hub route table for IPv6 ULA subnets.

gcloud network-connectivity hubs route-tables routes list --route_table=default \

--hub=ncc-hub \

--filter="IP_CIDR_RANGE:fd20"

Example output

IP_CIDR_RANGE PRIORITY LOCATION STATE TYPE SITE_TO_SITE NEXT_HOP HUB ROUTE_TABLE

fd20:c95:95d2:1000:0:0:0:0/64 us-east1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc4 ncc-hub default

fd20:c95:95d2:1:0:0:0:0/64 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc4 ncc-hub default

fd20:670:3823:0:0:0:0:0/64 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc5 ncc-hub default

fd20:90:6768:1000:0:0:0:0/64 us-east1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc2 ncc-hub default

fd20:90:6768:0:0:0:0:0/64 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc2 ncc-hub default

Filter IPv6 subnets with NCC VPC spokes

Check the NCC hub route table for external IPv6 subnet routes.

gcloud network-connectivity hubs route-tables routes list --route_table=default \

--hub=ncc-hub \

--filter="NEXT_HOP:vpc-ncc5"

Example output. The NCC hub route table has learned the external IPv6 range (2600:1900:4001:ce6::/64).

IP_CIDR_RANGE PRIORITY LOCATION STATE TYPE SITE_TO_SITE NEXT_HOP HUB ROUTE_TABLE

10.5.5.0/24 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc5 ncc-hub default

2600:1900:4001:ce6:0:0:0:0/64 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc5 ncc-hub default

fd20:670:3823:0:0:0:0:0/64 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc5 ncc-hub default

10.50.10.0/24 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc5 ncc-hub default

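Use the gcloud command below to update the VPC5 spoke so that it exports only the internal IPv6 range fd20:670:3823::/48, excluding two specific internal IPv6 subnets. The external IPv6 subnet falls outside the include range, so it is no longer injected into the NCC hub route table.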

gcloud network-connectivity spokes linked-vpc-network update vpc5-spoke5 \

--global \

--include-export-ranges=fd20:670:3823:0:0:0:0:0/48 \

--exclude-export-ranges=fd20:670:3823:1::/64,fd20:670:3823:2::/64
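To reason about what this filter should leave in the hub route table, here is a minimal sketch of include/exclude evaluation using Python's standard `ipaddress` module. It assumes a simplified model in which a subnet is exported only if it lies entirely within an include range and not within any exclude range; the actual NCC filter semantics may differ in edge cases such as partial overlaps. The function name `exported` is hypothetical, and the subnet list is copied from the example output for vpc-ncc5 above.

```python
import ipaddress


def exported(subnets, include, exclude):
    """Return the subnets that pass a simplified include/exclude export filter."""
    include_nets = [ipaddress.ip_network(r) for r in include]
    exclude_nets = [ipaddress.ip_network(r) for r in exclude]

    def covered(net, ranges):
        # subnet_of() raises TypeError on mixed IPv4/IPv6, so compare versions first.
        return any(net.version == r.version and net.subnet_of(r) for r in ranges)

    result = []
    for subnet in subnets:
        net = ipaddress.ip_network(subnet)
        if covered(net, include_nets) and not covered(net, exclude_nets):
            result.append(str(net))
    return result


# Subnets listed for vpc-ncc5 in the example output (illustrative values).
vpc5_subnets = [
    "10.5.5.0/24",
    "2600:1900:4001:ce6:0:0:0:0/64",
    "fd20:670:3823:0:0:0:0:0/64",
    "10.50.10.0/24",
]

print(exported(
    vpc5_subnets,
    include=["fd20:670:3823:0:0:0:0:0/48"],
    exclude=["fd20:670:3823:1::/64", "fd20:670:3823:2::/64"],
))
```

Under this model only fd20:670:3823::/64 remains. The IPv4 and external IPv6 subnets drop out because they are not covered by the include range, not because they are explicitly excluded.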

Use the gcloud command below to verify the VPC5 spoke's export filters.

gcloud network-connectivity spokes describe vpc5-spoke5 \

--global

Check the NCC hub route table for external IPv6 subnet routes

gcloud network-connectivity hubs route-tables routes list --route_table=default \

--hub=ncc-hub \

--filter="NEXT_HOP:vpc-ncc5"

Example output. The external IPv6 range is no longer present; only the internal IPv6 subnet that matches the export filter remains.

IP_CIDR_RANGE PRIORITY LOCATION STATE TYPE SITE_TO_SITE NEXT_HOP HUB ROUTE_TABLE

fd20:670:3823:0:0:0:0:0/64 us-central1 ACTIVE VPC_PRIMARY_SUBNET N/A vpc-ncc5 demo-mesh-hub default

9. Verify Data Path Connectivity

IPv4 Data path Connectivity

Referring to the diagram, verify the IPv4 data path between each virtual machine.

SSH to "vm1-vpc1-ncc" and start tcpdump to trace ICMP packets from "vm2-vpc2-ncc". As a reminder, this VM resides on VPC2.

vm1-vpc1-ncc

sudo tcpdump -i any icmp -v -e -n

Establish an SSH session to "vm2-vpc2-ncc" and ping the IP address of "vm1-vpc1-ncc".

vm2-vpc2-ncc

ping 10.1.1.2

From the same SSH session on "vm2-vpc2-ncc", ping the IP address of "vm1-vpc4-ncc".

vm2-vpc2-ncc

ping 240.0.0.2

IPv6 Data path Connectivity

Referring to the diagram, verify the IPv6 data path between each virtual machine.

Use the gcloud command below to list the IP address of each IPv6-enabled instance.

gcloud compute instances list --filter="INTERNAL_IP:fd20"

Sample output

NAME ZONE MACHINE_TYPE PREEMPTIBLE INTERNAL_IP EXTERNAL_IP STATUS

vpc1-ipv6-vm us-central1-a n1-standard-1 fd20:90:6768:0:0:1:0:0/96 RUNNING

vpc4-ipv6-vm us-central1-a n1-standard-1 fd20:c95:95d2:1:0:1:0:0/96 RUNNING

vpc1-dual-stack-vm us-east1-b n1-standard-1 10.10.10.3 XXX.196.137.107 RUNNING

fd20:90:6768:1000:0:1:0:0/96

Establish an SSH session to "vpc1-dual-stack-vm" and ping the IPv6 address of "vpc1-ipv6-vm" to validate IPv6 connectivity within a global VPC.

ping fd20:90:6768:0:0:1::

Establish an SSH session to "vpc1-dual-stack-vm" and ping the IPv6 address of "vpc4-ipv6-vm" to validate IPv6 connectivity across an NCC connection.

ping fd20:c95:95d2:1:0:1::
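The INTERNAL_IP column in the instance list includes a /96 prefix length, which must be dropped before the value is used as a ping target. The sketch below shows one way to derive the target with Python's standard `ipaddress` module. The helper name `ping_target` is hypothetical, and the addresses are the illustrative values from the sample output above.

```python
import ipaddress


def ping_target(listed: str) -> str:
    """Strip the prefix length from an INTERNAL_IP value and return the compressed address."""
    return str(ipaddress.ip_interface(listed).ip)


# Illustrative values copied from the sample instance list.
for name, listed in [
    ("vpc1-ipv6-vm", "fd20:90:6768:0:0:1:0:0/96"),
    ("vpc4-ipv6-vm", "fd20:c95:95d2:1:0:1:0:0/96"),
]:
    print(name, ping_target(listed))
```

Note that an IPv6 address can be abbreviated in more than one way: fd20:90:6768::1:0:0 and fd20:90:6768:0:0:1:: are the same address, so either form works with ping.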

10. Clean Up

Log in to Cloud Shell and delete the resources created in this lab, starting with the NAT configuration and ending with the VPC networks.

Delete the Private NAT configuration

gcloud beta compute routers nats rules delete 1 \

--nat=ncc2-nat \

--router=private-nat-cr \

--region=us-central1 \

--quiet

gcloud beta compute routers nats delete ncc2-nat \

--router=private-nat-cr \

--router-region=us-central1 \

--quiet

gcloud compute routers delete private-nat-cr \

--region=us-central1 \

--quiet

Delete ncc spokes

gcloud network-connectivity spokes delete vpc1-spoke1 --global --quiet

gcloud network-connectivity spokes delete vpc2-spoke2 --global --quiet

gcloud network-connectivity spokes delete vpc3-spoke3 --global --quiet

gcloud network-connectivity spokes delete vpc4-spoke4 --global --quiet

gcloud network-connectivity spokes delete vpc5-spoke5 --global --quiet

Reject cross project spoke

Reject the cross project VPC spoke from the NCC hub.

gcloud network-connectivity spokes reject projects/$xprojname/locations/global/spokes/xproj-spoke \

--details="cleanup" \

--global

Delete NCC Hub

gcloud network-connectivity hubs delete ncc-hub --quiet

Delete Firewall Rules

gcloud compute firewall-rules delete ncc1-vpc-internal --quiet

gcloud compute firewall-rules delete ncc2-vpc-internal --quiet

gcloud compute firewall-rules delete ncc3-vpc-internal --quiet

gcloud compute firewall-rules delete ncc4-vpc-internal --quiet

gcloud compute firewall-rules delete ncc1-vpc-iap --quiet

gcloud compute firewall-rules delete ncc2-vpc-iap --quiet

gcloud compute firewall-rules delete ncc3-vpc-iap --quiet

gcloud compute firewall-rules delete ncc4-vpc-iap --quiet

gcloud compute firewall-rules delete allow-icmpv6-ula-ncc1 --quiet

gcloud compute firewall-rules delete allow-icmpv6-ula-ncc4 --quiet

Delete GCE Instances

gcloud compute instances delete vm1-vpc1-ncc --zone=us-central1-a --quiet

gcloud compute instances delete vm2-vpc2-ncc --zone=us-central1-a --quiet

gcloud compute instances delete pnat-vm-vpc2 --zone=us-central1-a --quiet

gcloud compute instances delete vm1-vpc4-ncc --zone=us-east1-b --quiet

gcloud compute instances delete vpc4-ipv6-vm --zone us-central1-a --quiet

gcloud compute instances delete vpc1-dual-stack-vm --zone us-east1-b --quiet

gcloud compute instances delete vpc1-ipv6-vm --zone us-central1-a --quiet

Delete VPC Subnets

gcloud compute networks subnets delete ncc2-spoke-nat --region us-central1 --quiet

gcloud compute networks subnets delete vpc1-ncc-subnet1 --region us-central1 --quiet

gcloud compute networks subnets delete vpc1-ncc-subnet2 --region us-central1 --quiet

gcloud compute networks subnets delete vpc1-ncc-subnet3 --region us-central1 --quiet

gcloud compute networks subnets delete vpc2-ncc-subnet1 --region us-central1 --quiet

gcloud compute networks subnets delete overlapping-vpc2 --region us-central1 --quiet

gcloud compute networks subnets delete overlapping-vpc3 --region us-central1 --quiet

gcloud compute networks subnets delete benchmark-testing-rfc2544 --region us-east1 --quiet

gcloud compute networks subnets delete class-e-rfc5735 --region us-east1 --quiet

gcloud compute networks subnets delete ietf-protcol-assignment-rfc6890 --region us-east1 --quiet

gcloud compute networks subnets delete ipv6-4-relay-rfc7526 --region us-east1 --quiet

gcloud compute networks subnets delete pupi --region us-east1 --quiet

gcloud compute networks subnets delete test-net-1-rfc5737 --region us-east1 --quiet

gcloud compute networks subnets delete test-net-2-rfc5737 --region us-east1 --quiet

gcloud compute networks subnets delete test-net-3-rfc5737 --region us-east1 --quiet

gcloud compute networks subnets delete vpc1-ipv64-sn2 --region=us-east1 --quiet

gcloud compute networks subnets delete vpc1-ipv6-sn1 --region=us-central1 --quiet

gcloud compute networks subnets delete vpc4-ipv64-sn2 --region=us-east1 --quiet

gcloud compute networks subnets delete vpc4-ipv6-sn1 --region=us-central1 --quiet

gcloud compute networks subnets delete vpc5-ext-ipv6 --region=us-central1 --quiet

Delete VPC(s)

gcloud compute networks delete vpc1-ncc vpc2-ncc vpc3-ncc vpc4-ncc vpc5-ncc --quiet

11. Congratulations!

You have completed the Network Connectivity Center Lab!

What you covered

- Configured Full Mesh VPC Peering network with NCC Hub

- NCC Spoke Exclude Filter

- Cross Project Spoke Support

- Private NAT between VPC

Next Steps

©Google, LLC or its affiliates. All rights reserved. Do not distribute.