1. Before you begin

In this codelab, you'll review code that uses TensorFlow and TensorFlow Lite Model Maker to build a model from a dataset of comment spam. The original data is available on Kaggle. It has been gathered into a single CSV file and cleaned up by removing broken text, markup, repeated words, and more, so that you can focus on the model instead of the text.

The code you'll be reviewing has been supplied here, but it's highly recommended that you follow along with the code in Google Colab.

Prerequisites

- This codelab was written for experienced developers who are new to machine learning.

- This codelab is part of the Get Started with Text Classification for Mobile pathway. If you haven't yet completed the previous activities, please stop and do so now.

What you'll learn

- How to install TensorFlow Lite Model Maker using Google Colab

- How to download the data from the Cloud server to your device

- How to use a data loader

- How to build the model

What you'll need

- Access to Google Colab

2. Install TensorFlow Lite Model Maker

Open the Colab notebook. The first cell in the notebook installs TensorFlow Lite Model Maker for you:

```
!pip install -q tflite-model-maker
```

Once it has completed, move on to the next cell.

3. Import the code

The next cell has a number of imports that the code in the notebook will need to use:

```python
import numpy as np
import os

from tflite_model_maker import configs
from tflite_model_maker import ExportFormat
from tflite_model_maker import model_spec
from tflite_model_maker import text_classifier
from tflite_model_maker.text_classifier import DataLoader

import tensorflow as tf
assert tf.__version__.startswith('2')
tf.get_logger().setLevel('ERROR')
```

The assert also checks that you're running TensorFlow 2.x, which Model Maker requires.

4. Download the data

Next, download the data from the Cloud server to the machine running your notebook, and set data_file to point at the local file:

```python
data_file = tf.keras.utils.get_file(fname='comment-spam.csv',
    origin='https://storage.googleapis.com/laurencemoroney-blog.appspot.com/lmblog_comments.csv',
    extract=False)
```

Model Maker can train models from simple CSV files like this one. You just need to specify which columns hold the text and which hold the labels. You'll see how to do that later on in the codelab.
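To illustrate what "specifying the columns" means, here is a minimal sketch that uses Python's standard csv module rather than Model Maker. The two sample rows are invented for illustration. The column names commenttext and spam are the ones this dataset uses. Mapping the string "True" to spam (1) is an assumption based on the label values described later in this codelab.

```python
import csv
import io

# Hypothetical sample rows; the real file has the same two columns but many more rows.
SAMPLE_CSV = """commenttext,spam
Check out my channel for free stuff,True
Great video thanks for sharing,False
"""

def load_text_and_labels(csv_text, text_column="commenttext", label_column="spam"):
    """Return parallel lists of texts and integer labels (1 = spam, 0 = not spam)."""
    texts, labels = [], []
    for row in csv.DictReader(io.StringIO(csv_text), delimiter=","):
        texts.append(row[text_column])
        # Assumption: the label column holds the strings "True" or "False".
        labels.append(1 if row[label_column].strip() == "True" else 0)
    return texts, labels

texts, labels = load_text_and_labels(SAMPLE_CSV)
print(texts)
print(labels)  # [1, 0]
```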

5. Pre-learned embeddings

Generally, when using Model Maker, you don't build models from scratch. You use existing models that you customize to your needs.

For language models like this one, that means using pre-learned embeddings. First, words are converted into numbers, with each word in your overall corpus given its own number. An embedding then represents each word as a vector, and the "direction" of that vector captures the word's sentiment. For example, words that are used frequently in comment spam messages will end up with vectors that point in a similar direction, and words that aren't will tend to point in the opposite direction.
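"Pointing in a similar direction" is usually measured with cosine similarity: the cosine of the angle between two vectors, which is close to 1 for vectors that point the same way and close to -1 for vectors that point in opposite directions. The sketch below uses made-up 2-D vectors for three words. Real embeddings have more dimensions and learned values, so these numbers are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, -1 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 2-D embeddings, invented for illustration only.
embeddings = {
    "subscribe": [0.9, 0.8],  # spam-like word
    "free": [0.8, 0.9],       # spam-like word
    "thanks": [-0.7, -0.6],   # non-spam word
}

print(round(cosine_similarity(embeddings["subscribe"], embeddings["free"]), 3))
print(round(cosine_similarity(embeddings["subscribe"], embeddings["thanks"]), 3))
```

The first value is close to 1 and the second is close to -1. This matches the idea that spam-like words cluster in one direction and other words point away from them.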

By using pre-learned embeddings, you start with a corpus, or collection, of words whose sentiment has already been learned from a large body of text. This gets you to a solution much faster than starting from zero.

Model Maker provides several pre-learned embeddings you can use, but the simplest and quickest to begin with is average_word_vec.

Here's the code:

```python
spec = model_spec.get('average_word_vec')
spec.num_words = 2000
spec.seq_len = 20
spec.wordvec_dim = 7
```

The num_words parameter

You will also specify the number of words you want your model to use.

You might think "the more the better," but there's generally a right number based on how often each word is used. If you use every word in the entire corpus, the model could end up trying to learn and establish the direction of words that are only used once. In any text corpus, many words are used only once or twice, and it's generally not worth including them in your model because they have negligible impact on the overall sentiment. You can tune how many words your model uses with the num_words parameter.

A smaller number here might give a smaller and quicker model, but it could be less accurate, because it recognizes fewer words. A larger number gives a larger and slower model. Finding the sweet spot is key!
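The idea behind num_words can be sketched with collections.Counter: count every word, keep only the num_words most frequent, and treat everything else as unknown. This is a sketch, not Model Maker's implementation. The reserved <PAD> and <UNKNOWN> ids and the simple whitespace tokenization are assumptions, and Model Maker's own tokenizer and vocabulary layout may differ.

```python
from collections import Counter

def build_vocab(texts, num_words):
    """Keep only the num_words most frequent words, mapping each to an integer id."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # Assumption for this sketch: id 0 is padding and id 1 is for unknown words.
    vocab = {"<PAD>": 0, "<UNKNOWN>": 1}
    for word, _count in counts.most_common(num_words):
        vocab[word] = len(vocab)
    return vocab

texts = ["free free money", "free video", "great video"]
vocab = build_vocab(texts, num_words=2)
print(vocab)  # {'<PAD>': 0, '<UNKNOWN>': 1, 'free': 2, 'video': 3}
```

The rare words "money" and "great" are left out. Any later occurrence of them would map to the unknown id.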

The wordvec_dim parameter

The wordvec_dim parameter is the number of dimensions to use for each word's vector. The rule of thumb determined from research is that it should be the fourth root of the number of words. For example, if you're using 2000 words, the fourth root is about 6.7, so a good starting point is 7. If you change the number of words you use, you can also change this.
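The rule of thumb is a single line of arithmetic. The helper name suggested_wordvec_dim is hypothetical and is only used here for illustration:

```python
def suggested_wordvec_dim(num_words):
    """Rule-of-thumb embedding size: the fourth root of the vocabulary size, rounded."""
    return max(1, round(num_words ** 0.25))

for n in (2000, 10000):
    print(n, suggested_wordvec_dim(n))  # 2000 -> 7, 10000 -> 10
```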

The seq_len parameter

Models are generally very rigid when it comes to input values. For a language model, this means that the language model can classify sentences of a particular, static, length. That's determined by the seq_len parameter, or sequence length.

When you convert words into numbers (or tokens), a sentence then becomes a sequence of these tokens. So your model will be trained (in this case) to classify and recognize sentences that have 20 tokens. If the sentence is longer than this, it will be truncated. If it's shorter, it will be padded. You'll see a dedicated <PAD> token in the corpus that will be used for this.
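Truncating and padding to a fixed seq_len can be sketched as follows. This assumes that padding is added at the end of the sequence and that the <PAD> token has id 0. Both are assumptions made for illustration, not guarantees about how Model Maker lays out its sequences.

```python
PAD_ID = 0  # Assumption: the <PAD> token has id 0.

def to_fixed_length(token_ids, seq_len=20, pad_id=PAD_ID):
    """Truncate sequences longer than seq_len; pad shorter ones at the end."""
    trimmed = token_ids[:seq_len]
    return trimmed + [pad_id] * (seq_len - len(trimmed))

print(to_fixed_length([5, 6, 7], seq_len=5))      # [5, 6, 7, 0, 0]
print(len(to_fixed_length(list(range(1, 26)))))  # 20
```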

6. Use a data loader

Earlier you downloaded the CSV file. Now it's time to use a data loader to turn this into training data that the model can recognize:

```python
data = DataLoader.from_csv(
    filename=data_file,
    text_column='commenttext',
    label_column='spam',
    model_spec=spec,
    delimiter=',',
    shuffle=True,
    is_training=True)

train_data, test_data = data.split(0.9)
```

If you open the CSV file in an editor, you'll see that each line has just two values, and that the first line of the file contains text describing them. Each of these values is treated as a column.

You'll see that the descriptor for the first column is commenttext, and that the first entry on each line is the text of the comment. Similarly, the descriptor for the second column is spam, and the second entry on each line is True or False, denoting whether that text is considered comment spam. The remaining parameters pass in the model_spec you created earlier and set the delimiter character, which is a comma because the file is comma-separated. You will use this data for training the model, so is_training is set to True.

You'll want to hold back a portion of the data for testing the model, so split the data, with 90% of it for training and the other 10% for testing and evaluation. Because you're doing this, you want to make sure that the test data is chosen at random and isn't just the "bottom" 10% of the dataset. That's why you use shuffle=True when loading the data to randomize it.
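Model Maker handles this for you with shuffle=True and data.split(0.9). The sketch below only illustrates the idea using Python's random module. It shuffles a copy of the rows, then takes the first 90% for training and the rest for testing. The fixed seed is an assumption added so that the split is reproducible.

```python
import random

def shuffle_and_split(examples, fraction=0.9, seed=42):
    """Shuffle a copy of the examples, then split them into (train, test) by fraction."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # randomize so test isn't the "bottom" rows
    cut = int(len(shuffled) * fraction)
    return shuffled[:cut], shuffled[cut:]

rows = list(range(100))  # stand-in for 100 labelled comments
train, test = shuffle_and_split(rows)
print(len(train), len(test))  # 90 10
```

Every row ends up in exactly one of the two sets, and which rows land in the test set depends on the shuffle rather than on their position in the file.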

7. Build the model

The next cell simply builds the model, and it's a single line of code:

```python
# Build the model
model = text_classifier.create(train_data, model_spec=spec, epochs=50,
                               validation_data=test_data)
```

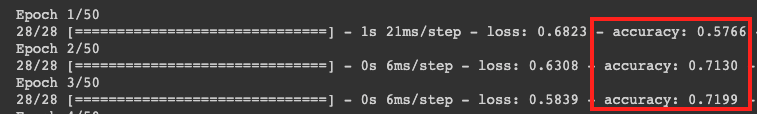

This creates a text classifier model with Model Maker. You specify the training data you want to use (as set up in step 6), the model specification (as set up in step 5), and a number of epochs, in this case 50. The test data is passed as validation_data so that the model can be checked against it during training.

The basic principle of machine learning is that it is a form of pattern matching. Initially, the model loads the pre-trained weights for the words and attempts to predict which groupings of words indicate spam and which don't. The first time around, it's likely to be close to 50:50, because the model is only getting started.

It then measures the results, runs optimization code to tweak its predictions, and tries again. Each pass through the training data is called an epoch. So, by specifying epochs=50, it goes through that "loop" 50 times.
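To make the idea of an epoch concrete, here is a deliberately tiny stand-in: a two-feature logistic regression trained with plain gradient descent on six invented examples. This is not the network that Model Maker builds. The features, data, and learning rate are all hypothetical. The example only shows the "measure, adjust, try again" loop that each epoch represents. Before training, every prediction is 0.5, so accuracy starts at 50%.

```python
import math

# Hypothetical toy data: two made-up features per comment (say, counts of two
# "spammy" words) and a label: 1 = spam, 0 = not spam.
X = [[2.0, 1.0], [1.0, 2.0], [3.0, 2.0], [0.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
y = [1, 1, 1, 0, 0, 0]

def predict_proba(w, b, x):
    """Logistic regression: probability that x is spam."""
    z = b + sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))

def accuracy(w, b):
    """Fraction of examples where thresholding at 0.5 matches the label."""
    correct = sum((predict_proba(w, b, x) >= 0.5) == bool(t) for x, t in zip(X, y))
    return correct / len(X)

def log_loss(w, b):
    """Average cross-entropy loss; lower means better predictions."""
    eps = 1e-12
    total = 0.0
    for x, t in zip(X, y):
        p = predict_proba(w, b, x)
        total -= t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
    return total / len(X)

def train(epochs=50, lr=0.5):
    w, b = [0.0, 0.0], 0.0  # start knowing nothing: every prediction is 0.5
    history = []
    for epoch in range(1, epochs + 1):
        # 1. Measure how wrong the current predictions are (gradient of the loss).
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, t in zip(X, y):
            err = predict_proba(w, b, x) - t
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        # 2. Nudge the weights against the gradient, then go round again.
        w = [wi - lr * g / len(X) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
        history.append((epoch, log_loss(w, b), accuracy(w, b)))
    return w, b, history

w, b, history = train()
for epoch, loss, acc in history[::10] + [history[-1]]:
    print(f"epoch {epoch:2d}  loss {loss:.3f}  accuracy {acc:.2f}")
```

The loss shrinks from epoch to epoch, which is the same trend you see in the Model Maker training log, though at a much smaller scale.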

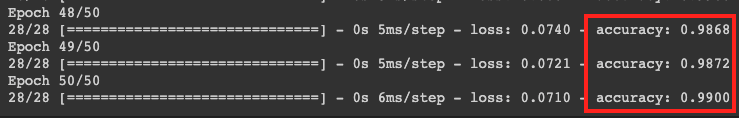

By the time you reach the 50th epoch, the model will report a much higher level of accuracy, in this case 99%!

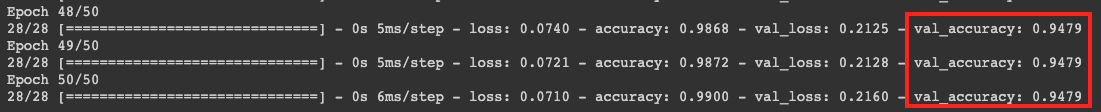

On the right-hand side, you'll see validation accuracy figures. These will typically be a bit lower than the training accuracy, because they indicate how the model classifies data it hasn't previously "seen". They are measured on the 10% of test data that you set aside earlier.
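Validation accuracy uses the same calculation as training accuracy: the fraction of predictions that match the true labels. The difference is that it is computed on held-out examples. The predictions below are hypothetical and were chosen only to illustrate why the two figures can differ.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must be the same length")
    return sum(p == t for p, t in zip(predictions, labels)) / len(labels)

# Hypothetical classifier outputs (1 = spam, 0 = not spam).
train_acc = accuracy([1, 0, 1, 1, 0, 0, 1, 0, 1, 0], [1, 0, 1, 1, 0, 0, 1, 0, 1, 0])
val_acc = accuracy([1, 0, 0, 1], [1, 0, 1, 1])  # held-out data the model hasn't seen
print(train_acc, val_acc)  # 1.0 0.75
```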

8. Export the model

Once your training is done, you can then export the model.

TensorFlow trains a model in its own format, and the model needs to be converted to TensorFlow Lite (.tflite) format to be used within a mobile app. Model Maker handles this complexity for you.

Simply export the model, specifying a directory:

```python
model.export(export_dir='/mm_spam')
```

Within that directory, you'll see a model.tflite file. Download it. You'll need it in the next codelab, where you add it to your Android app!

iOS Considerations

The .tflite model you just exported works well for Android, because metadata about the model is embedded within it, and Android Studio can read that metadata.

This metadata is very important because it includes a dictionary of tokens representing words as the model recognizes them. Remember earlier when you learned that words become tokens, and these tokens are then given vectors for their sentiment? Your mobile app will need to know these tokens. For example, if "dog" was tokenized to 42, and your users type "dog" into a sentence, your app will need to convert "dog" to 42 for the model to understand it.

As an Android developer, you can use the TensorFlow Lite Task Library, which makes this easier. On iOS, however, you'll need to process the vocabulary yourself, so you'll need to have it available. Model Maker can export it for you when you specify the export_format parameter. To get the labels and the vocab for your model, you can use this:

```python
model.export(export_dir='/mm_spam/',
             export_format=[ExportFormat.LABEL, ExportFormat.VOCAB])
```
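On iOS, your app has to do the word-to-token lookup itself. The Python sketch below shows that logic, though an app would implement it in Swift. It assumes that each line of the vocab file holds a token and its integer id separated by a space. It also assumes that out-of-vocabulary words map to <UNKNOWN> and that short inputs are padded at the end with <PAD>. Check these assumptions against the file you export. The vocabulary contents here are hypothetical, reusing the "dog" to 42 example above.

```python
import re

# Hypothetical vocab file contents; assumed format: one "token id" pair per line.
VOCAB_TEXT = """<PAD> 0
<START> 1
<UNKNOWN> 2
dog 42
"""

def load_vocab(text):
    """Parse 'token id' lines into a dict mapping token -> id."""
    vocab = {}
    for line in text.splitlines():
        if line.strip():
            token, index = line.rsplit(" ", 1)
            vocab[token] = int(index)
    return vocab

def tokenize(sentence, vocab, seq_len=20):
    """Lowercase, split into words, map to ids, then truncate or pad to seq_len."""
    words = re.findall(r"[\w']+", sentence.lower())
    unknown = vocab["<UNKNOWN>"]
    ids = [vocab.get(word, unknown) for word in words][:seq_len]
    return ids + [vocab["<PAD>"]] * (seq_len - len(ids))

vocab = load_vocab(VOCAB_TEXT)
print(tokenize("My dog likes walks", vocab, seq_len=6))  # [2, 42, 2, 2, 0, 0]
```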

9. Congratulations

This codelab took you through the Python code for building and exporting your model. You'll have a .tflite file at the end of it.

In the next codelab, you'll see how to edit your Android app to use this model so you can begin classifying spam comments.