1. Objectives

Overview

This codelab focuses on building an end-to-end Vertex AI Vision application that sends events using the event management feature. We will use the built-in features of the pretrained Occupancy analytics specialized model to generate events based on the following:

- Counting the number of vehicles and people crossing a road at a certain line.

- Counting the number of vehicles or people in any fixed region of the road.

- Detecting congestion in any part of the road.

What you'll learn

- How to ingest videos for streaming

- How to create an application in Vertex AI Vision

- Different features available in Occupancy Analytics and how to use them

- How to deploy the app

- How to search for videos in your storage, Vertex AI Vision's Media Warehouse.

- How to create a Cloud Function that processes the Occupancy Analytics model's data.

- How to create a Pub/Sub topic and subscription.

- How to set up event management to send events through a Pub/Sub topic.

2. Before You Begin

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project. Note: If you don't plan to keep the resources that you create in this procedure, create a project instead of selecting an existing project. After you finish these steps, you can delete the project, removing all resources associated with the project. Go to project selector

- Make sure that billing is enabled for your Cloud project. Learn how to check if billing is enabled on a project.

- Enable the Compute Engine and Vision AI APIs. Enable the APIs

Create a service account:

- In the Google Cloud console, go to the Create service account page. Go to Create service account

- Select your project.

- In the Service account name field, enter a name. The Google Cloud console fills in the Service account ID field based on this name. In the Service account description field, enter a description. For example, Service account for quickstart.

- Click Create and continue.

- To provide access to your project, grant the following role(s) to your service account: Vision AI > Vision AI Editor, Compute Engine > Compute Instance Admin (beta), Storage > Storage Object Viewer † . In the Select a role list, select a role. For additional roles, click Add another role and add each additional role. Note: The Role field affects which resources your service account can access in your project. You can revoke these roles or grant additional roles later. In production environments, do not grant the Owner, Editor, or Viewer roles. Instead, grant a predefined role or custom role that meets your needs.

- Click Continue.

- Click Done to finish creating the service account. Do not close your browser window. You will use it in the next step.

Create a service account key:

- In the Google Cloud console, click the email address for the service account that you created.

- Click Keys.

- Click Add key, and then click Create new key.

- Click Create. A JSON key file is downloaded to your computer.

- Click Close.

- Install and initialize the Google Cloud CLI.

† Role only needed if you copy a sample video file from a Cloud Storage bucket.

3. Ingest a video file for streaming

You can use vaictl to stream the video data to your occupancy analytics app.

Begin by enabling the Vision AI API in the Cloud console.

Register a new stream

- Click the Streams tab in the left panel of Vertex AI Vision.

- Click Register.

- In the Stream name field, enter 'traffic-stream'.

- In the Region field, enter 'us-central1'.

- Click Register.

The stream will take a couple of minutes to get registered.

Prepare a sample video

- You can copy a sample video with the following gsutil cp command. Replace the following variable:

- SOURCE: The location of a video file to use. You can use your own video file source (for example, gs://BUCKET_NAME/FILENAME.mp4), or use the sample video gs://cloud-samples-data/vertex-ai-vision/street_vehicles_people.mp4 (video with people and vehicles, source).

```bash
export SOURCE=gs://cloud-samples-data/vertex-ai-vision/street_vehicles_people.mp4
gsutil cp $SOURCE .
```

Ingest data into your stream

- To send this local video file to the app input stream, use the following command. You must make the following variable substitutions:

- PROJECT_ID: Your Google Cloud project ID.

- LOCATION_ID: Your location ID. For example, us-central1. For more information, see Cloud locations.

- LOCAL_FILE: The filename of a local video file. For example, street_vehicles_people.mp4.

- --loop flag: Optional. Loops file data to simulate streaming.

```bash
export PROJECT_ID=<Your Google Cloud project ID>
export LOCATION_ID=us-central1
export LOCAL_FILE=street_vehicles_people.mp4
```

- This command streams a video file to a stream. If you use the --loop flag, the video is looped into the stream until you stop the command. We run this command as a background job so that it keeps streaming.

- (Add nohup at the beginning and & at the end to run it as a background job.)

```bash
nohup vaictl -p $PROJECT_ID \
  -l $LOCATION_ID \
  -c application-cluster-0 \
  --service-endpoint visionai.googleapis.com \
  send video-file to streams 'traffic-stream' --file-path $LOCAL_FILE --loop &
```
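If you would rather start the ingestion from a Node.js script than with nohup, the sketch below assembles the same vaictl arguments as the command above and launches the process detached from its parent, which has a similar effect to `nohup ... &`. It assumes vaictl is installed and on your PATH and that PROJECT_ID, LOCATION_ID, and LOCAL_FILE are exported as shown earlier; buildVaictlArgs and startIngestion are hypothetical helper names, not part of any Google tool.

```javascript
// Sketch: start the same vaictl ingestion as the shell command, detached.
// ASSUMPTIONS: vaictl is on PATH; buildVaictlArgs/startIngestion are hypothetical helpers.
const { spawn } = require("node:child_process");

function buildVaictlArgs(env, streamId = "traffic-stream") {
  // Fail early if a variable from the export step is missing.
  for (const name of ["PROJECT_ID", "LOCATION_ID", "LOCAL_FILE"]) {
    if (!env[name]) {
      throw new Error(`Missing environment variable: ${name}`);
    }
  }
  return [
    "-p", env.PROJECT_ID,
    "-l", env.LOCATION_ID,
    "-c", "application-cluster-0",
    "--service-endpoint", "visionai.googleapis.com",
    "send", "video-file", "to", "streams", streamId,
    "--file-path", env.LOCAL_FILE,
    "--loop", // keep re-sending the file to simulate a live stream
  ];
}

function startIngestion(env = process.env) {
  const child = spawn("vaictl", buildVaictlArgs(env), {
    detached: true, // run in its own process group, like a background job
    stdio: "ignore",
  });
  child.on("error", (err) => console.error(`Failed to start vaictl: ${err.message}`));
  child.unref(); // let this script exit while vaictl keeps streaming
  return child.pid;
}
```

Call `startIngestion()` to launch the stream, and stop it later by killing the returned process ID.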

It might take ~100 seconds between starting the vaictl ingest operation and the video appearing in the dashboard.

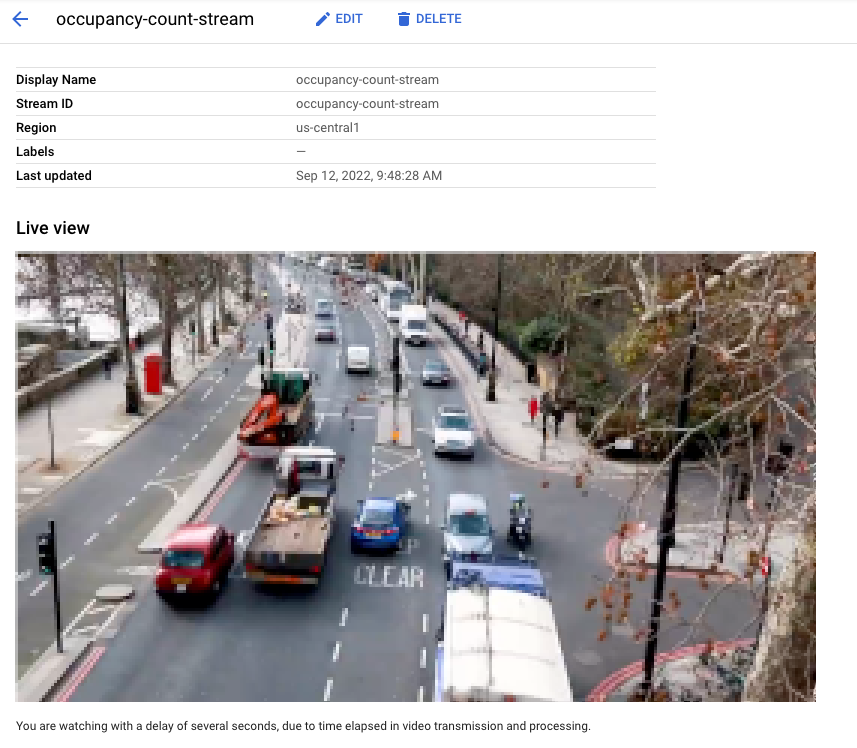

After the stream ingestion is available, you can see the video feed in the Streams tab of the Vertex AI Vision dashboard by selecting the traffic-stream stream.

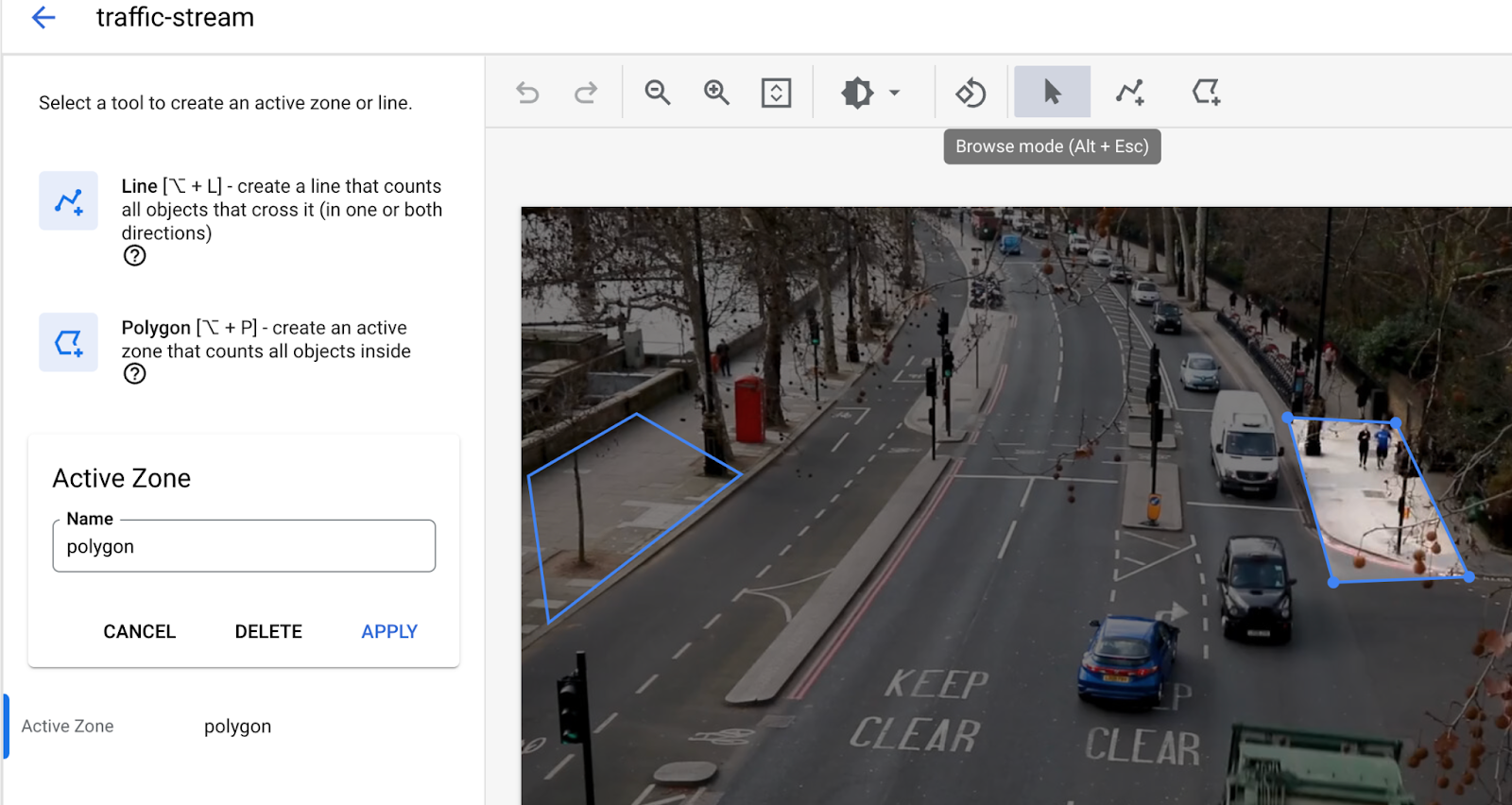

Live view of video being ingested into the stream in the Google Cloud console. Video credit: Elizabeth Mavor on Pixabay (pixelation added).

4. Create A Cloud Function

We need a Cloud Function to process the model's output and generate events that will later be sent through the event channel.

You can learn more about Cloud Functions here.

Create a Cloud Function that listens to your model

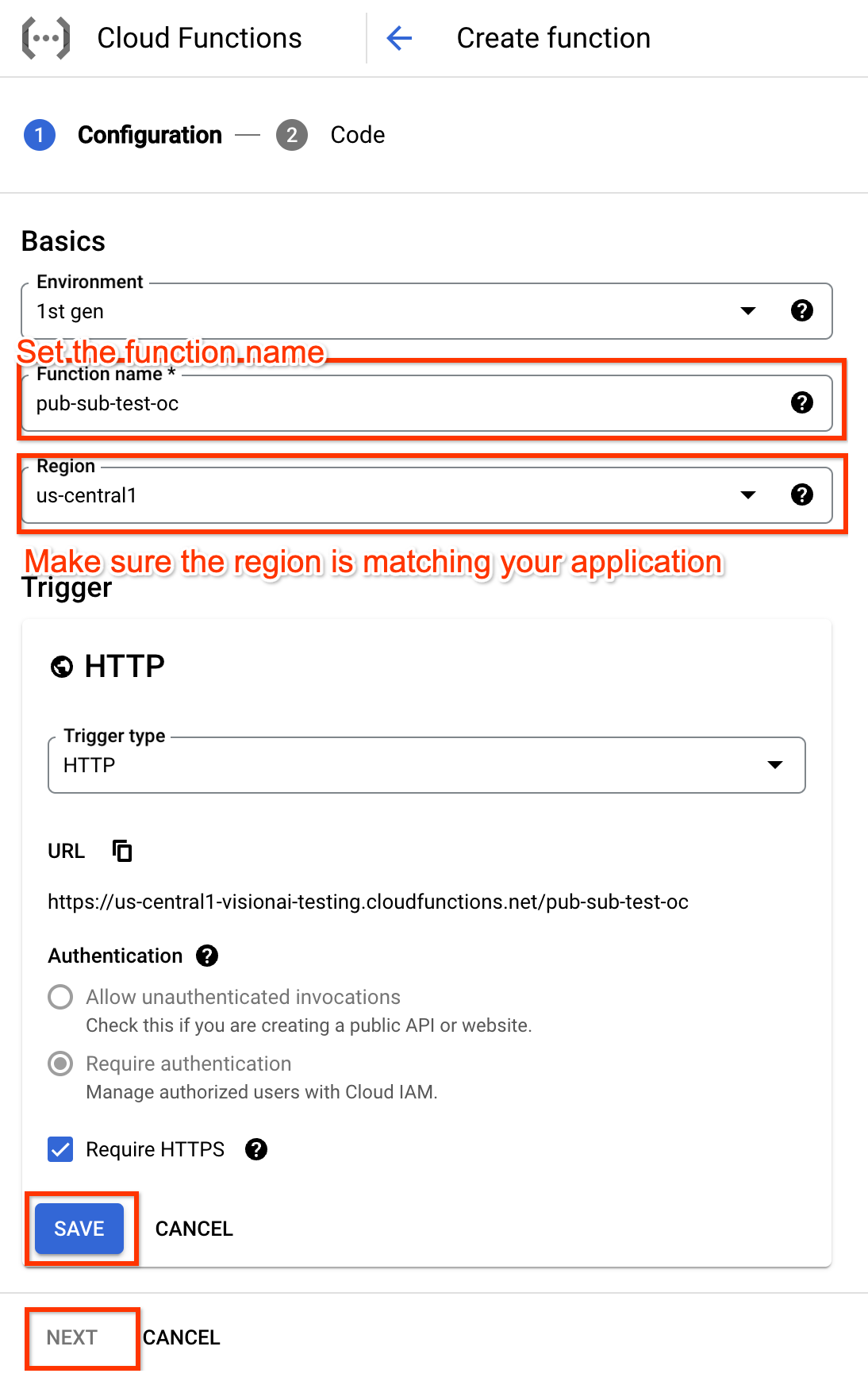

- Navigate to the Cloud Function UI creation page.

- Set the function name. You will later use this name to refer to the Cloud Function in the Event Management settings.

- Make sure the region matches your application's region.

- Adjust the trigger settings, and then save them.

- Click Next to go to the Code step.

- Edit your Cloud Function. Here's an example using the Node.js runtime.

```javascript
/**
 * Responds to any HTTP request.
 *
 * @param {!express:Request} req HTTP request context.
 * @param {!express:Response} res HTTP response context.
 */
exports.hello_http = (req, res) => {
  // Logging statements can be read with `gcloud functions logs read FUNCTION_NAME`.
  // For more about logging, see https://cloud.google.com/functions/docs/monitoring
  // The processor output is stored in req.body.
  const messageString = constructMessage(req.body);
  // Send your message to the operator output with the res HTTP response context.
  res.status(200).send(messageString);
};

function constructMessage(data = {}) {
  /**
   * Typically, your processor output contains appPlatformMetadata and its designed output.
   * For example, if your output is of type OccupancyCountingPredictionResult, you need
   * to construct the return annotation accordingly.
   */
  // Access appPlatformMetadata.
  const appPlatformMetadata = data.appPlatformMetadata;
  // Access annotations (fall back to an empty list if none are present).
  const annotations = (data.annotations || []).map(annotation => {
    // This is a mock OccupancyCountingPredictionResult annotation.
    return {"annotation": {"track_info": {"track_id": "12345"}}};
  });
  const events = [];
  for (const annotation of annotations) {
    events.push({
      "event_message": "Detection event",
      "payload": {
        "description": "object detected"
      },
      "event_id": "track_id_12345"
    });
  }
  /**
   * Typically, your Cloud Function should return a string representing a JSON object with two fields:
   * "annotations" must follow the specification of the target model.
   * "events" should be of type "AppPlatformEventBody".
   */
  const messageJson = {
    "annotations": annotations,
    "events": events,
  };
  return JSON.stringify(messageJson);
}
```

- Click Deploy to deploy the function.

5. Create Pub/Sub Topic & subscription

We need to give the application a Pub/Sub topic to send events to. To receive the events, a Pub/Sub subscription needs to subscribe to the configured topic.

You can learn more about Pub/Sub topic here and subscription here.

Create A Pub/Sub Topic

To create a Pub/Sub topic, you can use the gcloud CLI (replace TOPIC_ID with the real value from your setup):

```bash
gcloud pubsub topics create TOPIC_ID
```

Alternatively, you can use the Pub/Sub UI.

Create A Pub/Sub Subscription

To create a Pub/Sub subscription, you can use the gcloud CLI (replace SUBSCRIPTION_ID and TOPIC_ID with the real values from your setup):

```bash
gcloud pubsub subscriptions create SUBSCRIPTION_ID \
  --topic=TOPIC_ID
```

Alternatively, you can use the Pub/Sub UI.
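Before running the commands above, it can help to check that your TOPIC_ID and SUBSCRIPTION_ID follow Pub/Sub's naming rules and to see the fully qualified names (projects/PROJECT_ID/topics/TOPIC_ID and projects/PROJECT_ID/subscriptions/SUBSCRIPTION_ID). The sketch encodes the rules as documented at the time of writing (3 to 255 characters, starting with a letter, using only letters, digits, and `- . _ ~ + %`, and not starting with "goog"); re-check them against the current Pub/Sub documentation. isValidPubSubId, fullName, and the sample IDs are hypothetical.

```javascript
// Sketch: validate Pub/Sub IDs and build fully qualified resource names.
// The rules below mirror the Pub/Sub resource-naming guidelines; verify them.
const ID_PATTERN = /^[A-Za-z][A-Za-z0-9\-._~+%]{2,254}$/;

function isValidPubSubId(id) {
  // Starts with a letter, 3-255 allowed characters, no reserved "goog" prefix.
  return ID_PATTERN.test(id) && !id.startsWith("goog");
}

function fullName(projectId, kind, id) {
  if (kind !== "topics" && kind !== "subscriptions") {
    throw new Error(`Unknown resource kind: ${kind}`);
  }
  if (!isValidPubSubId(id)) {
    throw new Error(`Invalid Pub/Sub ID: ${id}`);
  }
  return `projects/${projectId}/${kind}/${id}`;
}

console.log(fullName("my-project", "topics", "traffic-events"));
// projects/my-project/topics/traffic-events
```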

6. Create an application

The first step is to create an app that processes your data. An app can be thought of as an automated pipeline that connects the following:

- Data ingestion: A video feed is ingested into a stream.

- Data analysis: An AI (computer vision) model can be added after ingestion.

- Data storage: The two versions of the video feed (the original stream and the stream processed by the AI model) can be stored in a media warehouse.

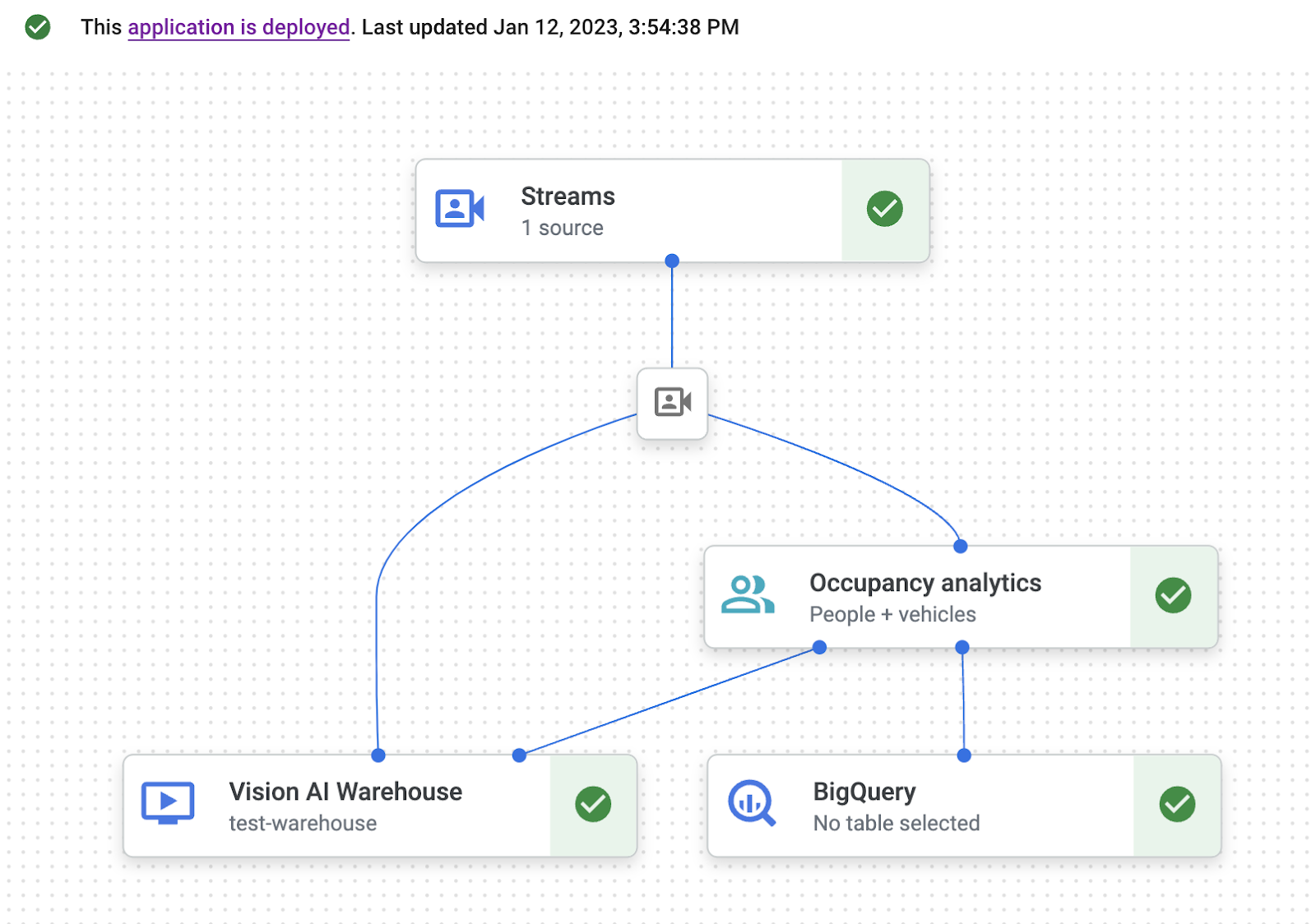

In the Google Cloud console an app is represented as a graph.

Create an empty app

Before you can populate the app graph, you must first create an empty app.

Create an app in the Google Cloud console.

- Go to Google Cloud console.

- Open the Applications tab of the Vertex AI Vision dashboard.

- Click the Create button.

- Enter traffic-app as the app name and choose your region.

- Click Create.

Add app component nodes

After you have created the empty application, you can then add the three nodes to the app graph:

- Ingestion node: The stream resource that ingests data.

- Processing node: The occupancy analytics model that acts on ingested data.

- Storage node: The media warehouse that stores processed videos and serves as a metadata store. The metadata store includes analytics information about ingested video data and information inferred by the AI models.

Add component nodes to your app in the console.

- Open the Applications tab of the Vertex AI Vision dashboard. Go to the Applications tab

- In the traffic-app line, select View graph. This takes you to the graph visualization of the processing pipeline.

Add a data ingestion node

- To add an input stream node, select the Streams option in the Connectors section of the side menu.

- In the Source section of the Stream menu that opens, select Add streams.

- In the Add streams menu, choose Register new streams and add traffic-stream as the stream name.

- To add the stream to the app graph, click Add streams.

Add a data processing node

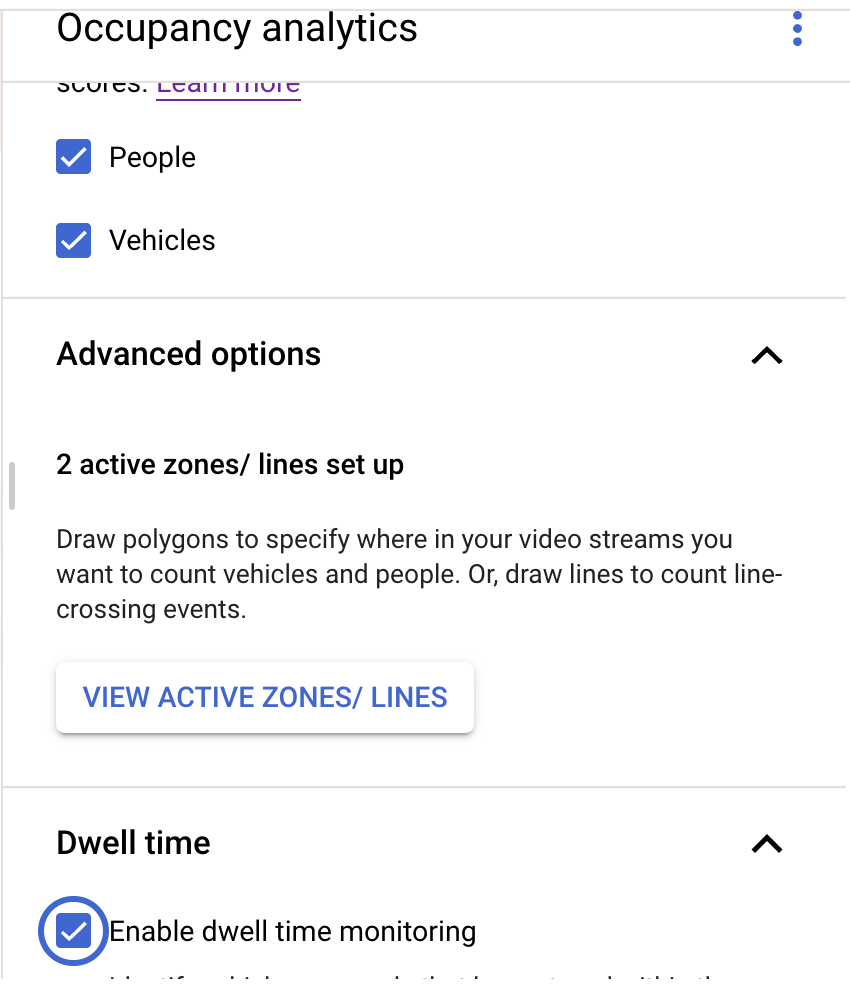

- To add the occupancy count model node, select the occupancy analytics option in the Specialized models section of the side menu.

- Leave the default selections People and Vehicles.

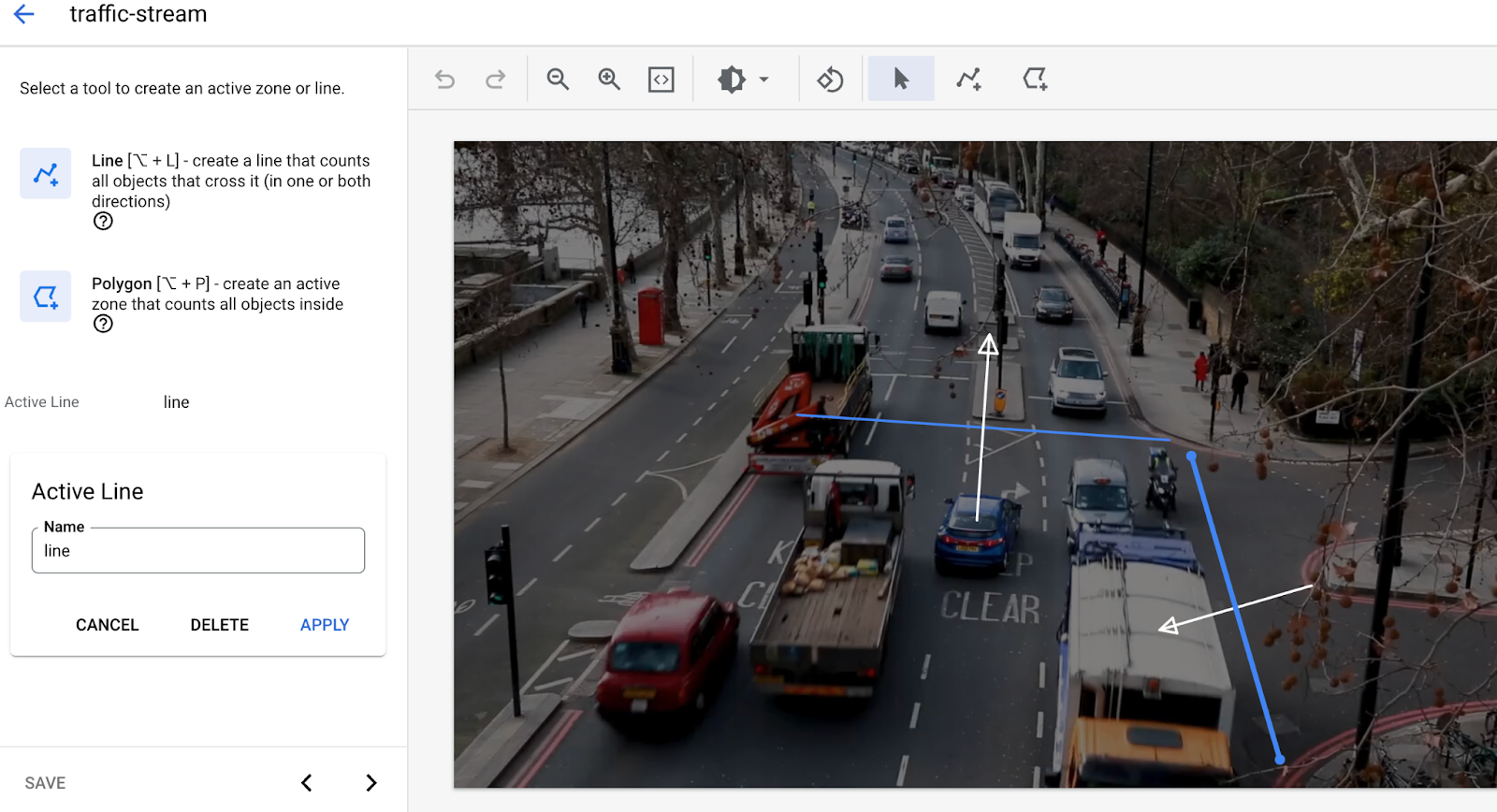

- In Line crossing, add lines. Use the multi-point line tool to draw the lines where you need to detect cars or people leaving or entering.

- Draw active zones to count people or vehicles in each zone.

- If you drew an active zone, add dwell time settings to detect congestion.

- (Currently, active zones and line crossing are not supported simultaneously. Use only one feature at a time.)
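To build intuition for what the line-crossing and dwell-time settings measure, here is a simplified sketch, not Vertex AI Vision's actual implementation. A crossing is counted when an object's movement between two frames intersects the drawn line segment (tested with 2D cross products), and an object is flagged as dwelling when it stays inside a polygonal zone for at least a threshold number of seconds. The normalized coordinates, function names, and the single-segment line are illustrative assumptions.

```javascript
// Simplified illustration (NOT the model's implementation) of line crossing
// and dwell-time checks on normalized (0..1) frame coordinates.

// Cross product of (b - a) and (c - a): > 0 if c is left of a->b, < 0 if right.
function orient(a, b, c) {
  return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// Returns +1 or -1 if the move prev->curr crosses segment lineA-lineB
// (the sign tells which side the object ended on), or 0 if it does not.
function crossingDirection(lineA, lineB, prev, curr) {
  const o1 = orient(lineA, lineB, prev);
  const o2 = orient(lineA, lineB, curr);
  const o3 = orient(prev, curr, lineA);
  const o4 = orient(prev, curr, lineB);
  if (o1 * o2 < 0 && o3 * o4 < 0) return Math.sign(o2);
  return 0;
}

// Ray-casting point-in-polygon test for an active zone.
function insideZone(point, polygon) {
  let inside = false;
  for (let i = 0, j = polygon.length - 1; i < polygon.length; j = i++) {
    const a = polygon[i];
    const b = polygon[j];
    const intersects =
      (a.y > point.y) !== (b.y > point.y) &&
      point.x < ((b.x - a.x) * (point.y - a.y)) / (b.y - a.y) + a.x;
    if (intersects) inside = !inside;
  }
  return inside;
}

// Remembers when each track entered the zone and reports true once it has
// stayed inside for at least thresholdSec (a rough congestion signal).
function createDwellTracker(polygon, thresholdSec) {
  const enteredAt = new Map();
  return function update(trackId, point, timeSec) {
    if (!insideZone(point, polygon)) {
      enteredAt.delete(trackId); // left the zone: reset its timer
      return false;
    }
    if (!enteredAt.has(trackId)) enteredAt.set(trackId, timeSec);
    return timeSec - enteredAt.get(trackId) >= thresholdSec;
  };
}
```

Summing the +1 and -1 results per line gives separate "entering" and "leaving" counts, which is the kind of information the line-crossing feature reports.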

Add a data storage node

- To add the output destination (storage) node, select the Vertex AI Vision's Media Warehouse option in the Connectors section of the side menu.

- In the Vertex AI Vision's Media Warehouse menu, click Connect warehouse.

- In the Connect warehouse menu, select Create new warehouse. Name the warehouse traffic-warehouse, and leave the TTL duration at 14 days.

- Click the Create button to add the warehouse.

7. Configure Event Management


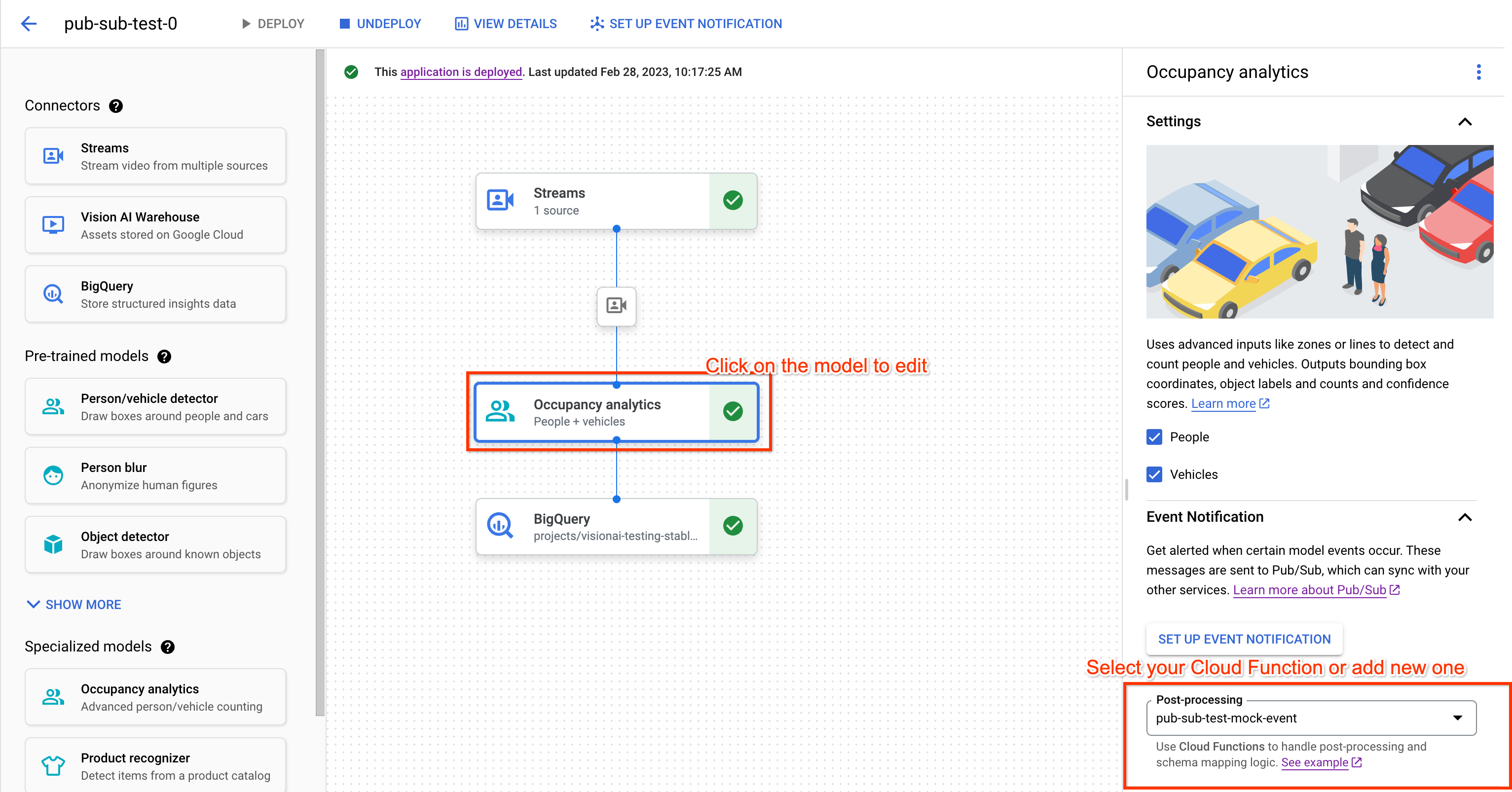

We'll connect the model to the previously created Cloud Function for post-processing, where the Cloud Function can freely process the model's output and generate events to suit your needs. Then we'll configure the event channel by setting the previously created Pub/Sub topic as its target. You can also set a minimal interval, which helps keep your event channel from being flooded by the same event in a short period of time.
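The minimal interval is configured in the console and applied by the service; the sketch below only illustrates the idea. It assumes "the same event" means the same event_id and forwards an event only if that event_id has not been allowed through within the last minIntervalMs milliseconds. createThrottle is a hypothetical helper, not part of any Google Cloud API.

```javascript
// Illustrative throttle: suppress repeats of the same event_id that arrive
// within minIntervalMs of the last one that was allowed through.
function createThrottle(minIntervalMs) {
  const lastSent = new Map(); // event_id -> timestamp (ms) of last allowed event
  return function shouldPublish(event, nowMs = Date.now()) {
    const last = lastSent.get(event.event_id);
    if (last !== undefined && nowMs - last < minIntervalMs) {
      return false; // same event too soon: drop it
    }
    lastSent.set(event.event_id, nowMs);
    return true;
  };
}

const shouldPublish = createThrottle(10_000); // 10-second minimal interval
const event = { event_id: "track_id_12345", event_message: "Detection event" };
console.log([0, 4000, 12000].map((t) => shouldPublish(event, t)));
// [ true, false, true ]
```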

Select Cloud Function for post-processing

- Click the data processing node (occupancy analytics) on your application graph to open the side menu.

- In the Post-processing drop-down, select your Cloud Function (identified by its function name).

- The application graph auto-saves your changes.

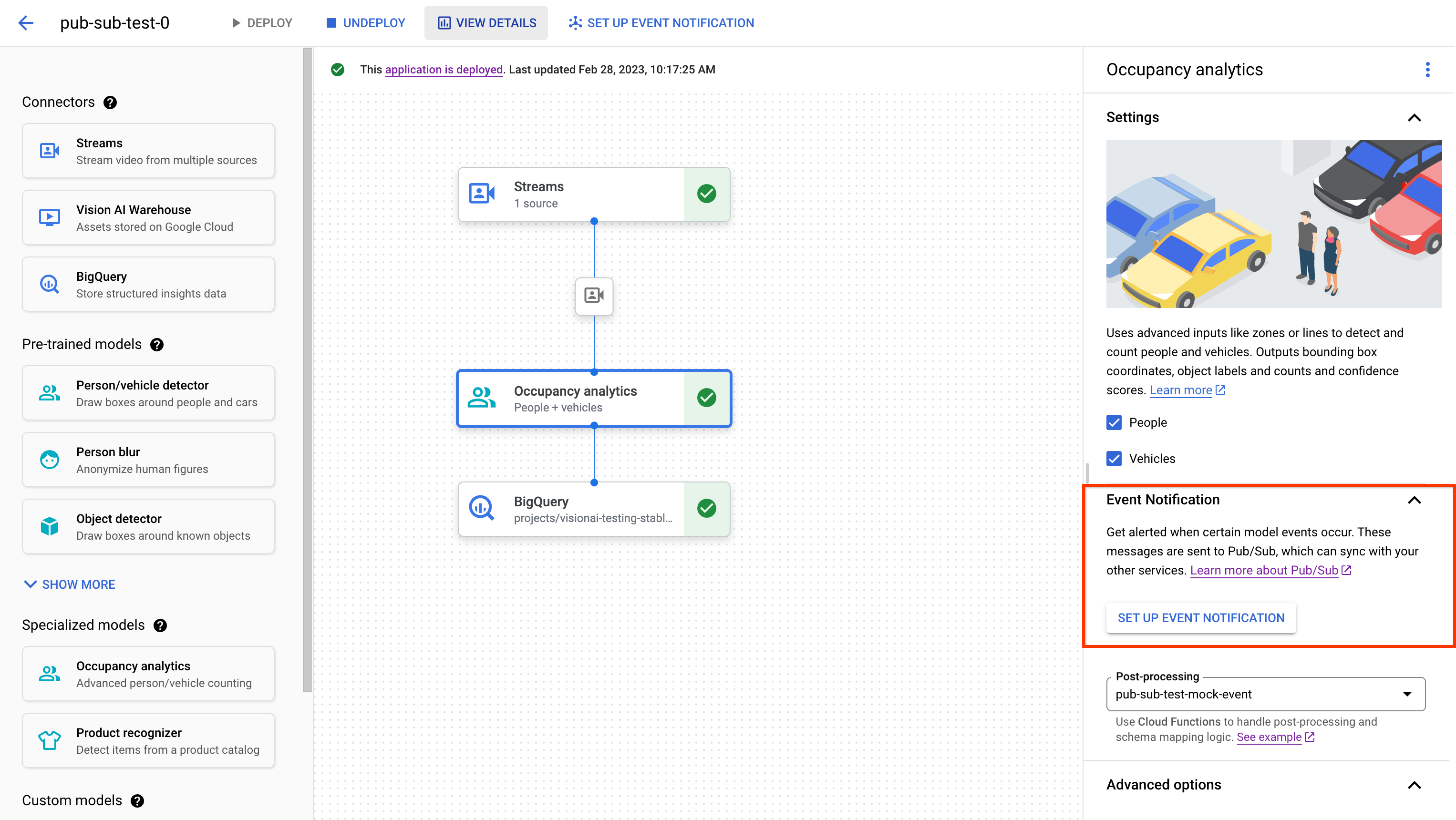

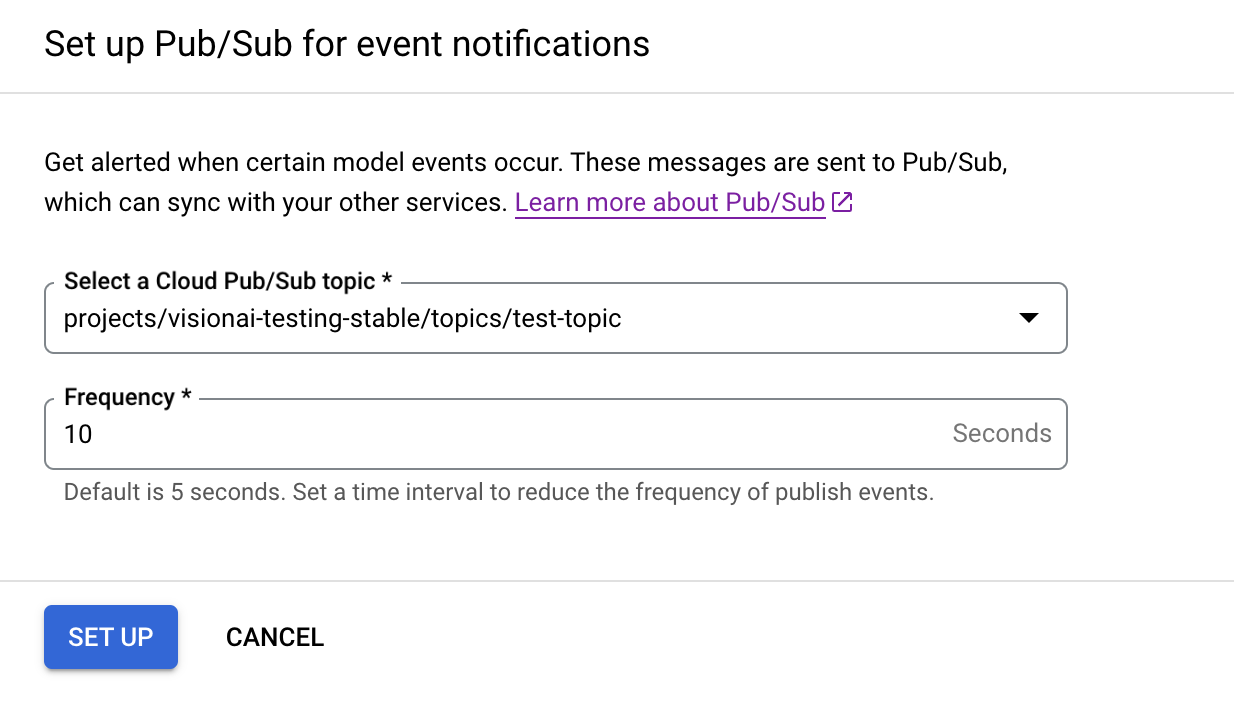

Configure Event Channel

- Click the data processing node (occupancy analytics) on your application graph to open the side menu.

- In the Event Notification section, click SET UP EVENT NOTIFICATION.

- Select your Pub/Sub topic in the drop-down.

- (Optional) Set the minimal interval (frequency) for event publishing.

8. Deploy your app for use

After you have built your end-to-end app with all the necessary components, the last step to using the app is to deploy it.

- Open the Applications tab of the Vertex AI Vision dashboard. Go to the Applications tab

- Select View graph next to the traffic-app app in the list.

- From the application graph builder page, click the Deploy button.

- In the following confirmation dialog, select Deploy. The deploy operation might take several minutes to complete. After deployment finishes, green check marks appear next to the nodes.

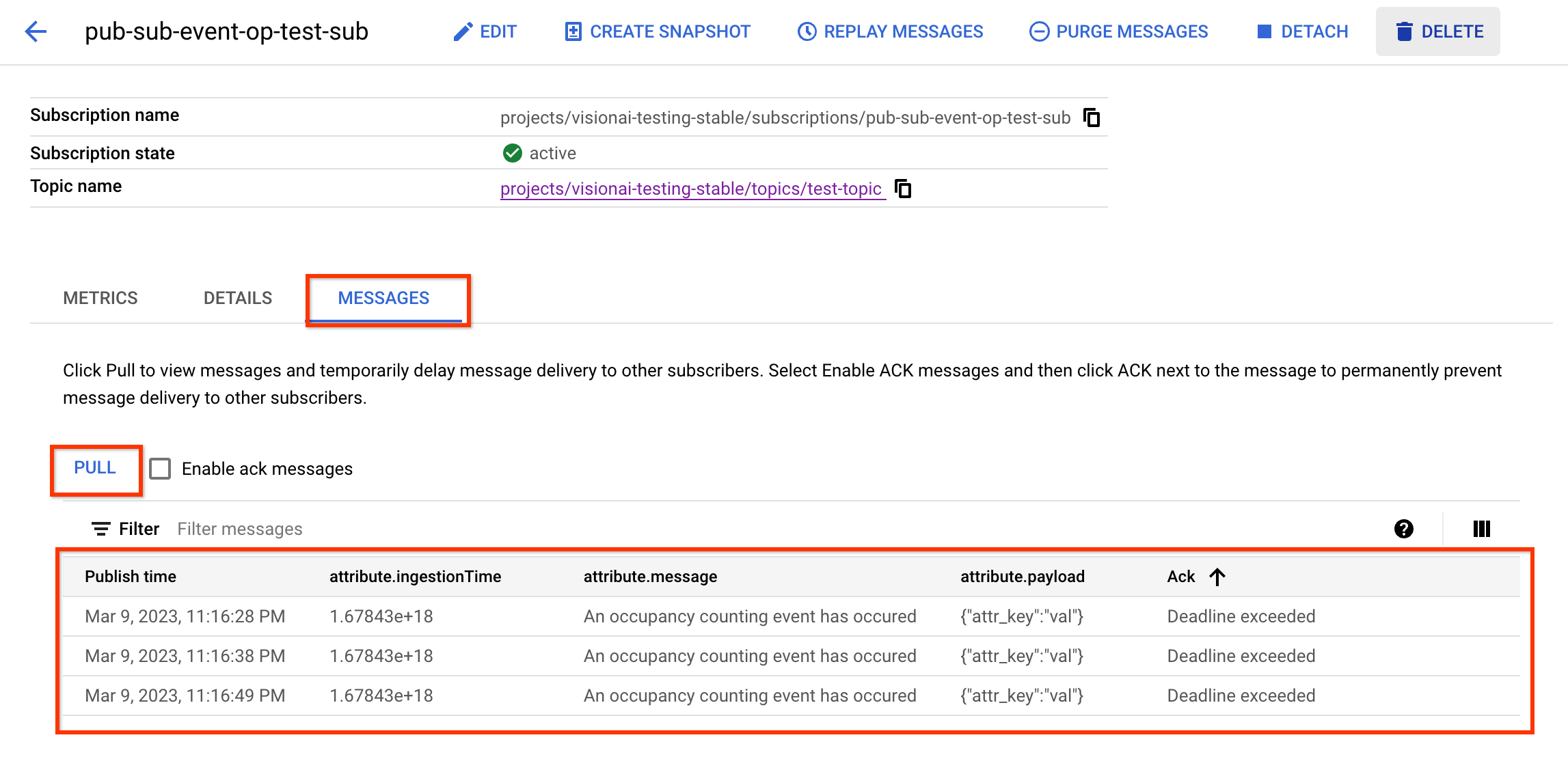

9. Verify Events/Messages in Pub/Sub subscription

After you ingest video data into your processing app, the Cloud Function should generate events once the occupancy analytics model outputs annotations. Those events should then be published as messages through your Pub/Sub topic and received by your subscription.

The following steps assume you have a pull subscription.

- Open the Pub/Sub subscription list in your project and find the corresponding subscription. Go to Pub/Sub subscription list page

- Go to the Messages tab.

- Click the Pull button.

- View your messages in the table.
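If you pull messages outside the console, for example through the Pub/Sub REST API, the data field of each received message is base64-encoded. The sketch below decodes it and tries to parse the result as JSON, falling back to the raw string. The exact structure of the Vertex AI Vision event payload is not described here, so the sample response and its fields are made-up placeholders; decodePulledMessages is a hypothetical helper.

```javascript
// Sketch: decode messages from a Pub/Sub REST pull response body.
// message.data is base64-encoded; the decoded payload is ASSUMED to be JSON,
// with a fallback to the raw string.
function decodePulledMessages(pullResponse) {
  const received = pullResponse.receivedMessages || [];
  return received.map(({ ackId, message }) => {
    const text = Buffer.from(message.data || "", "base64").toString("utf8");
    let payload;
    try {
      payload = JSON.parse(text);
    } catch {
      payload = text; // not JSON: keep the raw string
    }
    return { ackId, messageId: message.messageId, attributes: message.attributes || {}, payload };
  });
}

// Hypothetical sample response; real event contents will differ.
const sample = {
  receivedMessages: [
    {
      ackId: "ack-1",
      message: {
        data: Buffer.from(JSON.stringify({ event_id: "track_id_12345" })).toString("base64"),
        messageId: "1",
      },
    },
  ],
};
console.log(decodePulledMessages(sample));
```

Keep the ackId values: a pull subscriber must acknowledge each message, or Pub/Sub redelivers it after the acknowledgement deadline.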

Alternatively, you can learn how to receive messages without the UI. Go to subscription page

10. Congratulations

Congratulations, you finished the lab!

Clean up

To avoid incurring charges to your Google Cloud account for the resources used in this tutorial, either delete the project that contains the resources, or keep the project and delete the individual resources.

Delete the project

Delete individual resources

Resources

https://cloud.google.com/vision-ai/docs/overview

https://cloud.google.com/vision-ai/docs/occupancy-count-tutorial
