1. The Mission

You've identified yourself to the emergency AI, and your beacon is now pulsing on the planetary map, but it is barely visible, lost in the static. Rescue teams scanning from orbit can see something at your coordinates, but they can't get a lock. The signal is too weak.

To boost your beacon to full strength, you need to confirm your exact location. The pod's navigation system is fried, but the crash scattered salvageable evidence across the landing site: soil samples, strange flora, and a clear view of the alien night sky.

If you can analyze this evidence and determine which region of the planet you're in, the AI can triangulate your position and amplify the beacon signal. Then, maybe, someone will find you.

Time to put the pieces together.

Prerequisites

⚠️ This level requires completing Level 0.

Before starting, make sure you have:

- [ ] config.json in the project root with your participant ID and coordinates

- [ ] Your avatar visible on the world map

- [ ] Your beacon showing (dim) at your coordinates

If you haven't completed Level 0, start there first.

Yang Akan Anda Buat

Di level ini, Anda akan membuat sistem AI multi-agen yang menganalisis bukti lokasi kecelakaan menggunakan pemrosesan paralel:

Tujuan Pembelajaran

Konsep | Yang Akan Anda Pelajari |

Sistem Multi-Agen | Membangun agen khusus dengan tanggung jawab tunggal |

ParallelAgent | Menyusun agen independen untuk dijalankan secara bersamaan |

before_agent_callback | Mengambil konfigurasi dan menetapkan status sebelum agen berjalan |

ToolContext | Mengakses nilai status dalam fungsi alat |

Server MCP Kustom | Membangun alat dengan pola imperatif (kode Python di Cloud Run) |

OneMCP BigQuery | Menghubungkan ke MCP terkelola Google untuk akses BigQuery |

AI multimodal | Menganalisis gambar dan video+audio dengan Gemini |

Orkestrasi Agen | Mengoordinasikan beberapa agen dengan orkestrator root |

Cloud Deployment | Men-deploy server dan agen MCP ke Cloud Run |

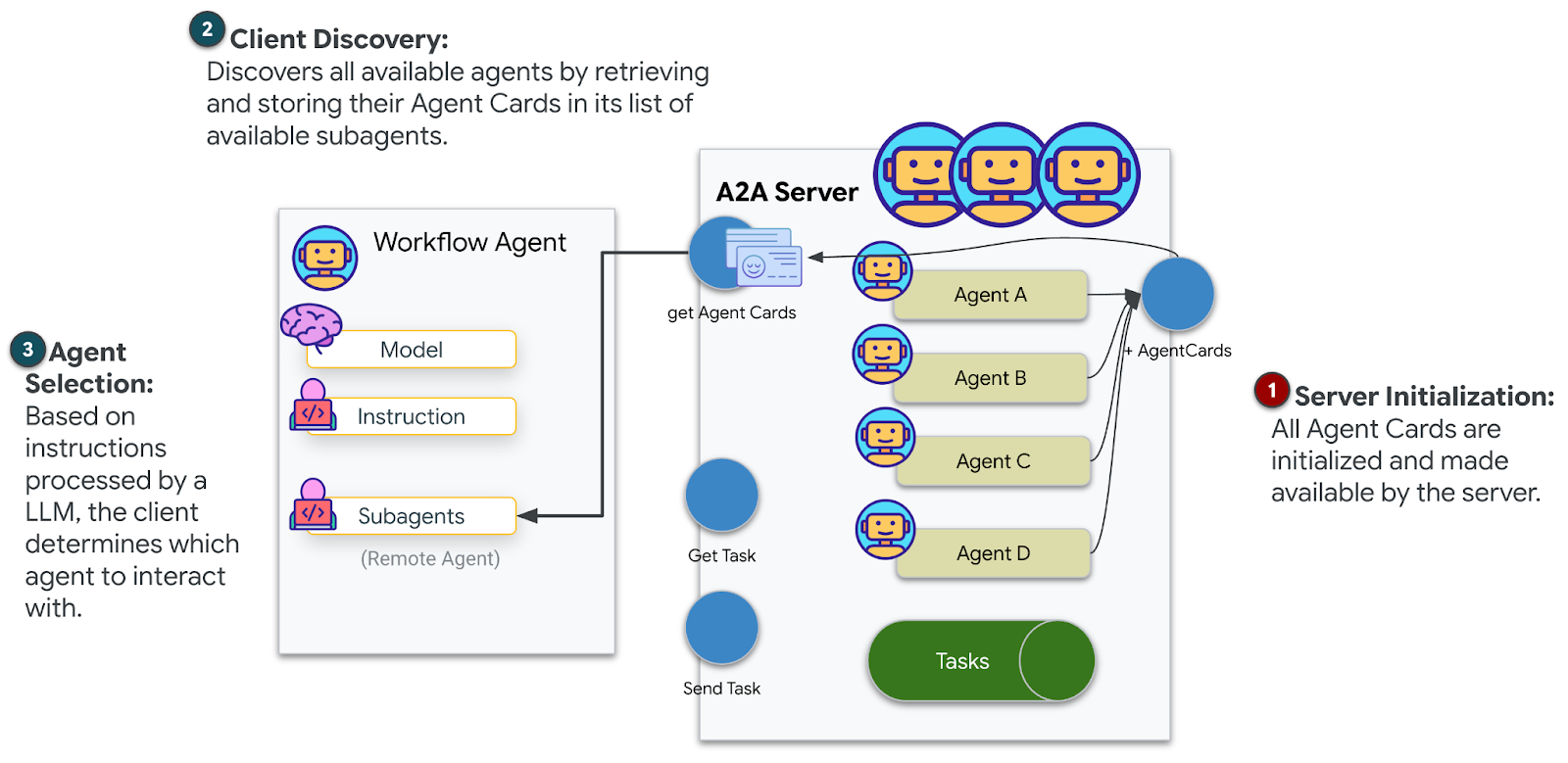

Persiapan A2A | Menyusun agen untuk komunikasi antar-agen di masa mendatang |

The Planet's Biomes

The planet's surface is divided into four distinct biomes, each with unique characteristics:

Your coordinates determine which biome you crashed in. The evidence at your crash site reflects that biome's characteristics:

| Biome | Quadrant | Geological Evidence | Botanical Evidence | Astronomical Evidence |
|-------|----------|---------------------|--------------------|-----------------------|
| 🧊 CRYO | NW (x<50, y≥50) | Frozen methane, ice crystals | Frost ferns, cryogenic flora | Blue giant star |
| 🌋 VOLCANIC | NE (x≥50, y≥50) | Obsidian deposits | Fire blooms, heat-resistant flora | Red dwarf binary |
| 💜 BIOLUMINESCENT | SW (x<50, y<50) | Phosphorescent soil | Glowing fungi, luminescent plants | Green pulsar |
| 🦴 FOSSILIZED | SE (x≥50, y<50) | Amber deposits, ite minerals | Petrified trees, ancient flora | Yellow sun |

Your job: build AI agents that can analyze the evidence and deduce which biome you're in.
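The quadrant rules in the table above can be sketched as a small function. This is only an illustration of the mapping, assuming integer coordinates split at 50 on both axes as the table states; the name `biome_for` is hypothetical and is not part of the workshop code.

```python
def biome_for(x: int, y: int) -> tuple[str, str]:
    """Return (quadrant, biome) for a coordinate pair, following the biome table."""
    north = y >= 50  # y >= 50 is the northern half
    east = x >= 50   # x >= 50 is the eastern half
    if north and not east:
        return "NW", "CRYO"
    if north and east:
        return "NE", "VOLCANIC"
    if not north and not east:
        return "SW", "BIOLUMINESCENT"
    return "SE", "FOSSILIZED"


print(biome_for(23, 67))  # ('NW', 'CRYO')
```

In the workshop itself you don't compute the biome from coordinates; the agents have to infer it from the evidence, and the coordinates only serve as the ground truth.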

2. Set Up Your Environment

Need Google Cloud Credits?

• If you're attending an instructor-led workshop: your instructor will give you a credit code. Use the one they provide.

• If you're working through this Codelab on your own: you can redeem free Google Cloud credits to cover the workshop cost. Click this link to get the credit and follow the steps in the video guide below to apply it to your account.

Run the Environment Setup Script

Before generating evidence, you need to enable the required Google Cloud APIs, including OneMCP for BigQuery, which provides managed MCP access to BigQuery.

👉💻 Run the environment setup script:

cd $HOME/way-back-home/level_1

chmod +x setup/setup_env.sh

./setup/setup_env.sh

You should see output like:

================================================================

Level 1: Environment Setup

================================================================

Project: your-project-id

[1/6] Enabling core Google Cloud APIs...

✓ Vertex AI API enabled

✓ Cloud Run API enabled

✓ Cloud Build API enabled

✓ BigQuery API enabled

✓ Artifact Registry API enabled

✓ IAM API enabled

[2/6] Enabling OneMCP BigQuery (Managed MCP)...

✓ OneMCP BigQuery enabled

[3/6] Setting up service account and IAM permissions...

✓ Service account 'way-back-home-sa' created

✓ Vertex AI User role granted

✓ Cloud Run Invoker role granted

✓ BigQuery User role granted

✓ BigQuery Data Viewer role granted

✓ Storage Object Viewer role granted

[4/6] Configuring Cloud Build IAM for deployments...

✓ Cloud Build can now deploy services as way-back-home-sa

✓ Cloud Run Admin role granted to Compute SA

[5/6] Creating Artifact Registry repository...

✓ Repository 'way-back-home' created

[6/6] Creating environment variables file...

Found PARTICIPANT_ID in config.json: abc123...

✓ Created ../set_env.sh

================================================================

✅ Environment Setup Complete!

================================================================

Source the Environment Variables

👉💻 Source the environment variables:

source $HOME/way-back-home/set_env.sh

Install Dependencies

👉💻 Install the Level 1 Python dependencies:

cd $HOME/way-back-home/level_1

uv sync

Set Up the Star Catalog

👉💻 Set up the star catalog in BigQuery:

uv run python setup/setup_star_catalog.py

You should see:

Setting up star catalog in project: your-project-id

==================================================

✓ Dataset way_back_home already exists

✓ Created table star_catalog

✓ Inserted 12 rows into star_catalog

📊 Star Catalog Summary:

----------------------------------------

NE (VOLCANIC): 3 stellar patterns

NW (CRYO): 3 stellar patterns

SE (FOSSILIZED): 3 stellar patterns

SW (BIOLUMINESCENT): 3 stellar patterns

----------------------------------------

✓ Star catalog is ready for triangulation queries

==================================================

✅ Star catalog setup complete!

3. Generate Crash Site Evidence

Now generate personalized crash site evidence based on your coordinates.

Run the Evidence Generator

👉💻 From the level_1 directory, run:

cd $HOME/way-back-home/level_1

uv run python generate_evidence.py

You should see output like:

✓ Welcome back, Explorer_Aria!

Coordinates: (23, 67)

Ready to analyze your crash site.

📍 Crash site analysis initiated...

Generating evidence for your location...

🔬 Generating soil sample...

✓ Soil sample captured: outputs/soil_sample.png

✨ Capturing star field...

✓ Star field captured: outputs/star_field.png

🌿 Recording flora activity...

(This may take 1-2 minutes for video generation)

Generating video...

Generating video...

Generating video...

✓ Flora recorded: outputs/flora_recording.mp4

📤 Uploading evidence to Mission Control...

✓ Config updated with evidence URLs

==================================================

✅ Evidence generation complete!

==================================================

Review Your Evidence

👉 Take a moment to look at the generated evidence files in the outputs/ folder. Each file reflects the characteristics of your crash site's biome, although you won't know which biome that is until your AI agents analyze it.

Your generated evidence might look something like this, depending on your location:

4. Build the Custom MCP Server

The onboard analysis systems in your escape pod are fried, but the raw sensor data survived the crash. You'll build an MCP server with FastMCP that provides geological and botanical analysis tools.

Create the Geological Analysis Tool

This tool analyzes soil sample images to identify mineral composition.

👉✏️ Open $HOME/way-back-home/level_1/mcp-server/main.py and find #REPLACE-GEOLOGICAL-TOOL. Replace it with:

GEOLOGICAL_PROMPT = """Analyze this alien soil sample image.

Classify the PRIMARY characteristic (choose exactly one):

1. CRYO - Frozen/icy minerals, crystalline structures, frost patterns,

blue-white coloration, permafrost indicators

2. VOLCANIC - Volcanic rock, basalt, obsidian, sulfur deposits,

red-orange minerals, heat-formed crystite structures

3. BIOLUMINESCENT - Glowing particles, phosphorescent minerals,

organic-mineral hybrids, purple-green luminescence

4. FOSSILIZED - Ancient compressed minerals, amber deposits,

petrified organic matter, golden-brown stratification

Respond ONLY with valid JSON (no markdown, no explanation):

{

"biome": "CRYO|VOLCANIC|BIOLUMINESCENT|FOSSILIZED",

"confidence": 0.0-1.0,

"minerals_detected": ["mineral1", "mineral2"],

"description": "Brief description of what you observe"

}

"""

@mcp.tool()
def analyze_geological(
    image_url: Annotated[
        str,
        Field(description="Cloud Storage URL (gs://...) of the soil sample image")
    ]
) -> dict:
    """
    Analyzes a soil sample image to identify mineral composition and classify the planetary biome.

    Args:
        image_url: Cloud Storage URL of the soil sample image (gs://bucket/path/image.png)

    Returns:
        dict with biome, confidence, minerals_detected, and description
    """
    logger.info(f">>> 🔬 Tool: 'analyze_geological' called for '{image_url}'")

    try:
        response = client.models.generate_content(
            model="gemini-2.5-flash",
            contents=[
                GEOLOGICAL_PROMPT,
                genai_types.Part.from_uri(file_uri=image_url, mime_type="image/png")
            ]
        )

        result = parse_json_response(response.text)
        logger.info(f" ✓ Geological analysis complete: {result.get('biome', 'UNKNOWN')}")
        return result

    except Exception as e:
        logger.error(f" ✗ Geological analysis failed: {str(e)}")
        return {"error": str(e), "biome": "UNKNOWN", "confidence": 0.0}

Create the Botanical Analysis Tool

This tool analyzes flora video recordings, including the audio track.

👉✏️ In the same file ($HOME/way-back-home/level_1/mcp-server/main.py), find #REPLACE-BOTANICAL-TOOL and replace it with:

BOTANICAL_PROMPT = """Analyze this alien flora video recording.

Pay attention to BOTH:

1. VISUAL elements: Plant appearance, movement patterns, colors, bioluminescence

2. AUDIO elements: Ambient sounds, rustling, organic noises, frequencies

Classify the PRIMARY biome (choose exactly one):

1. CRYO - Crystalline ice-plants, frost-covered vegetation,

crackling/tinkling sounds, slow brittle movements, blue-white flora

2. VOLCANIC - Heat-resistant plants, sulfur-adapted species,

hissing/bubbling sounds, smoke-filtering vegetation, red-orange flora

3. BIOLUMINESCENT - Glowing plants, pulsing light patterns,

humming/resonating sounds, reactive to stimuli, purple-green flora

4. FOSSILIZED - Ancient petrified plants, amber-preserved specimens,

deep resonant sounds, minimal movement, golden-brown flora

Respond ONLY with valid JSON (no markdown, no explanation):

{

"biome": "CRYO|VOLCANIC|BIOLUMINESCENT|FOSSILIZED",

"confidence": 0.0-1.0,

"species_detected": ["species1", "species2"],

"audio_signatures": ["sound1", "sound2"],

"description": "Brief description of visual and audio observations"

}

"""

@mcp.tool()
def analyze_botanical(
    video_url: Annotated[
        str,
        Field(description="Cloud Storage URL (gs://...) of the flora video recording")
    ]
) -> dict:
    """
    Analyzes a flora video recording (visual + audio) to identify plant species and classify the biome.

    Args:
        video_url: Cloud Storage URL of the flora video (gs://bucket/path/video.mp4)

    Returns:
        dict with biome, confidence, species_detected, audio_signatures, and description
    """
    logger.info(f">>> 🌿 Tool: 'analyze_botanical' called for '{video_url}'")

    try:
        response = client.models.generate_content(
            model="gemini-2.5-flash",
            contents=[
                BOTANICAL_PROMPT,
                genai_types.Part.from_uri(file_uri=video_url, mime_type="video/mp4")
            ]
        )

        result = parse_json_response(response.text)
        logger.info(f" ✓ Botanical analysis complete: {result.get('biome', 'UNKNOWN')}")
        return result

    except Exception as e:
        logger.error(f" ✗ Botanical analysis failed: {str(e)}")
        return {"error": str(e), "biome": "UNKNOWN", "confidence": 0.0}

Test the MCP Server Locally

👉💻 Test the MCP server:

cd $HOME/way-back-home/level_1/mcp-server

pip install -r requirements.txt

python main.py

You should see:

[INFO] Initialized Gemini client for project: your-project-id

[INFO] 🚀 Location Analyzer MCP Server starting on port 8080

[INFO] 📍 MCP endpoint: http://0.0.0.0:8080/mcp

[INFO] 🔧 Tools: analyze_geological, analyze_botanical

The FastMCP server is now running with HTTP transport. Press Ctrl+C to stop it.

Deploy the MCP Server to Cloud Run

👉💻 Deploy:

cd $HOME/way-back-home/level_1/mcp-server

source $HOME/way-back-home/set_env.sh

gcloud builds submit . \
  --config=cloudbuild.yaml \
  --substitutions=_REGION="$REGION",_REPO_NAME="$REPO_NAME",_SERVICE_ACCOUNT="$SERVICE_ACCOUNT"

Save the Service URL

👉💻 Save the service URL:

export MCP_SERVER_URL=$(gcloud run services describe location-analyzer \
  --region=$REGION --format='value(status.url)')

echo "MCP Server URL: $MCP_SERVER_URL"

# Add to set_env.sh for later use

echo "export MCP_SERVER_URL=\"$MCP_SERVER_URL\"" >> $HOME/way-back-home/set_env.sh

5. Build the Specialist Agents

Now you'll create three specialist agents, each with a single responsibility.

Create the Geological Analyst Agent

👉✏️ Open agent/agents/geological_analyst.py and find #REPLACE-GEOLOGICAL-AGENT. Replace it with:

from google.adk.agents import Agent

from agent.tools.mcp_tools import get_geological_tool

geological_analyst = Agent(

name="GeologicalAnalyst",

model="gemini-2.5-flash",

description="Analyzes soil samples to classify planetary biome based on mineral composition.",

instruction="""You are a geological specialist analyzing alien soil samples.

## YOUR EVIDENCE TO ANALYZE

Soil sample URL: {soil_url}

## YOUR TASK

1. Call the analyze_geological tool with the soil sample URL above

2. Examine the results for mineral composition and biome indicators

3. Report your findings clearly

The four possible biomes are:

- CRYO: Frozen, icy minerals, blue/white coloring

- VOLCANIC: Magma, obsidian, volcanic rock, red/orange coloring

- BIOLUMINESCENT: Glowing, phosphorescent minerals, purple/green

- FOSSILIZED: Amber, ancient preserved matter, golden/brown

## REPORTING FORMAT

Always report your classification clearly:

"GEOLOGICAL ANALYSIS: [BIOME] (confidence: X%)"

Include a brief description of what you observed in the sample.

## IMPORTANT

- You do NOT synthesize with other evidence

- You do NOT confirm locations

- Just analyze the soil sample and report what you find

- Call the tool immediately with the URL provided above""",

tools=[get_geological_tool()]

)

Create the Botanical Analyst Agent

👉✏️ Open agent/agents/botanical_analyst.py and find #REPLACE-BOTANICAL-AGENT. Replace it with:

from google.adk.agents import Agent

from agent.tools.mcp_tools import get_botanical_tool

botanical_analyst = Agent(

name="BotanicalAnalyst",

model="gemini-2.5-flash",

description="Analyzes flora recordings to classify planetary biome based on plant life and ambient sounds.",

instruction="""You are a botanical specialist analyzing alien flora recordings.

## YOUR EVIDENCE TO ANALYZE

Flora recording URL: {flora_url}

## YOUR TASK

1. Call the analyze_botanical tool with the flora recording URL above

2. Pay attention to BOTH visual AND audio elements in the recording

3. Report your findings clearly

The four possible biomes are:

- CRYO: Frost ferns, crystalline plants, cold wind sounds, crackling ice

- VOLCANIC: Fire blooms, heat-resistant flora, crackling/hissing sounds

- BIOLUMINESCENT: Glowing fungi, luminescent plants, ethereal hum, chiming

- FOSSILIZED: Petrified trees, ancient formations, deep resonant sounds

## REPORTING FORMAT

Always report your classification clearly:

"BOTANICAL ANALYSIS: [BIOME] (confidence: X%)"

Include descriptions of what you SAW and what you HEARD.

## IMPORTANT

- You do NOT synthesize with other evidence

- You do NOT confirm locations

- Just analyze the flora recording and report what you find

- Call the tool immediately with the URL provided above""",

tools=[get_botanical_tool()]

)

Create the Astronomical Analyst Agent

This agent takes a different approach and combines two tool patterns:

- Local FunctionTool: Gemini Vision to extract stellar features

- OneMCP BigQuery: Querying the star catalog through Google's managed MCP

👉✏️ Open agent/agents/astronomical_analyst.py and find #REPLACE-ASTRONOMICAL-AGENT. Replace it with:

from google.adk.agents import Agent

from agent.tools.star_tools import (

extract_star_features_tool,

get_bigquery_mcp_toolset,

)

# Get the BigQuery MCP toolset

bigquery_toolset = get_bigquery_mcp_toolset()

astronomical_analyst = Agent(

name="AstronomicalAnalyst",

model="gemini-2.5-flash",

description="Analyzes star field images and queries the star catalog via OneMCP BigQuery.",

instruction="""You are an astronomical specialist analyzing alien night skies.

## YOUR EVIDENCE TO ANALYZE

Star field URL: {stars_url}

## YOUR TWO TOOLS

### TOOL 1: extract_star_features (Local Gemini Vision)

Call this FIRST with the star field URL above.

Returns: "primary_star": "...", "nebula_type": "...", "stellar_color": "..."

### TOOL 2: BigQuery MCP (execute_query)

Call this SECOND with the results from Tool 1.

Use this exact SQL query (replace the placeholders with values from Step 1):

SELECT quadrant, biome, primary_star, nebula_type

FROM `{project_id}.way_back_home.star_catalog`

WHERE LOWER(primary_star) = LOWER('PRIMARY_STAR_FROM_STEP_1')

AND LOWER(nebula_type) = LOWER('NEBULA_TYPE_FROM_STEP_1')

LIMIT 1

## YOUR WORKFLOW

1. Call extract_star_features with: {stars_url}

2. Get the primary_star and nebula_type from the result

3. Call execute_query with the SQL above (replacing placeholders)

4. Report the biome and quadrant from the query result

## BIOME REFERENCE

| Biome | Quadrant | Primary Star | Nebula Type |

|-------|----------|--------------|-------------|

| CRYO | NW | blue_giant | ice_blue |

| VOLCANIC | NE | red_dwarf_binary | fire |

| BIOLUMINESCENT | SW | green_pulsar | purple_magenta |

| FOSSILIZED | SE | yellow_sun | golden |

## REPORTING FORMAT

"ASTRONOMICAL ANALYSIS: [BIOME] in [QUADRANT] quadrant (confidence: X%)"

Include a description of the stellar features you observed.

## IMPORTANT

- You do NOT synthesize with other evidence

- You do NOT confirm locations

- Just analyze the stars and report what you find

- Start by calling extract_star_features with the URL above""",

tools=[extract_star_features_tool, bigquery_toolset]

)

6. Build the MCP Tool Connections

Now you'll create the Python wrappers that let your ADK agents talk to the MCP servers. These wrappers handle the connection lifecycle: creating sessions, calling tools, and parsing responses.
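One part of that lifecycle is making sure the connection object is built only once and then reused. The sketch below illustrates the idea with `functools.lru_cache`; the workshop code that follows uses a module-level global instead, which behaves the same way. `StubToolset`, `get_toolset`, and the example URL are hypothetical stand-ins, not ADK classes.

```python
from functools import lru_cache


class StubToolset:
    """Stand-in for a real toolset object; counts how often it is constructed."""

    created = 0

    def __init__(self, url: str) -> None:
        StubToolset.created += 1
        self.url = url


@lru_cache(maxsize=1)
def get_toolset(base_url: str = "https://example.invalid") -> StubToolset:
    # Built on the first call only; later calls return the cached instance.
    return StubToolset(f"{base_url.rstrip('/')}/mcp")


first = get_toolset()
second = get_toolset()
print(first is second, StubToolset.created)  # True 1
```

Sharing one instance means several agents can request "their" tool without each one opening a separate connection.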

Create the MCP Tool Connection (Custom MCP)

This connects to your custom FastMCP server deployed on Cloud Run.

👉✏️ Open agent/tools/mcp_tools.py and find #REPLACE-MCP-TOOL-CONNECTION. Replace it with:

import os

import logging

from google.adk.tools.mcp_tool.mcp_toolset import MCPToolset

from google.adk.tools.mcp_tool.mcp_session_manager import StreamableHTTPConnectionParams

logger = logging.getLogger(__name__)

MCP_SERVER_URL = os.environ.get("MCP_SERVER_URL")

_mcp_toolset = None

def get_mcp_toolset():
    """Get the MCPToolset connected to the location-analyzer server."""
    global _mcp_toolset

    if _mcp_toolset is not None:
        return _mcp_toolset

    if not MCP_SERVER_URL:
        raise ValueError(
            "MCP_SERVER_URL not set. Please run:\n"
            " export MCP_SERVER_URL='https://location-analyzer-xxx.a.run.app'"
        )

    # FastMCP exposes MCP protocol at /mcp endpoint
    mcp_endpoint = f"{MCP_SERVER_URL}/mcp"
    logger.info(f"[MCP Tools] Connecting to: {mcp_endpoint}")

    _mcp_toolset = MCPToolset(
        connection_params=StreamableHTTPConnectionParams(
            url=mcp_endpoint,
            timeout=120,  # 2 minutes for Gemini analysis
        )
    )

    return _mcp_toolset

def get_geological_tool():
    """Get the geological analysis tool from the MCP server."""
    return get_mcp_toolset()

def get_botanical_tool():
    """Get the botanical analysis tool from the MCP server."""
    return get_mcp_toolset()

Create the Star Analysis Tools (OneMCP BigQuery)

The star catalog you loaded into BigQuery earlier contains stellar patterns for each biome. Rather than writing BigQuery client code to query it, we connect to Google's OneMCP BigQuery server, which exposes BigQuery's execute_query capability as an MCP tool that any ADK agent can use directly.
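To make the catalog lookup concrete, here is a rough sketch of how the SQL used by the astronomical agent could be assembled from extracted star features. In the workshop the agent fills the placeholders itself from its prompt; `build_star_query` is a hypothetical helper, and the check that values look like `blue_giant` (lowercase letters, digits, underscores) is an assumption based on the catalog values shown in this codelab.

```python
import re

# Assumed shape of catalog values such as "blue_giant" or "ice_blue".
_SAFE_VALUE = re.compile(r"[a-z0-9_]+")


def build_star_query(project_id: str, primary_star: str, nebula_type: str) -> str:
    """Build the star catalog lookup, rejecting values that could break the SQL."""
    values = [primary_star.strip().lower(), nebula_type.strip().lower()]
    for value in values:
        if not _SAFE_VALUE.fullmatch(value):
            raise ValueError(f"Unexpected catalog value: {value!r}")
    star, nebula = values
    return (
        "SELECT quadrant, biome, primary_star, nebula_type\n"
        f"FROM `{project_id}.way_back_home.star_catalog`\n"
        f"WHERE LOWER(primary_star) = '{star}'\n"
        f"  AND LOWER(nebula_type) = '{nebula}'\n"
        "LIMIT 1"
    )


print(build_star_query("your-project-id", "Blue_Giant", "ice_blue"))
```

Validating the values before interpolating them matters because they come from model output rather than from a trusted source.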

👉✏️ Open agent/tools/star_tools.py and find #REPLACE-STAR-TOOLS. Replace it with:

import os

import json

import logging

from google import genai

from google.genai import types as genai_types

from google.adk.tools import FunctionTool

from google.adk.tools.mcp_tool.mcp_toolset import MCPToolset

from google.adk.tools.mcp_tool.mcp_session_manager import StreamableHTTPConnectionParams

import google.auth

import google.auth.transport.requests

logger = logging.getLogger(__name__)

# =============================================================================

# CONFIGURATION - Environment variables only

# =============================================================================

PROJECT_ID = os.environ.get("GOOGLE_CLOUD_PROJECT", "")

if not PROJECT_ID:
    logger.warning("[Star Tools] GOOGLE_CLOUD_PROJECT not set")

# Initialize Gemini client for star feature extraction

genai_client = genai.Client(

vertexai=True,

project=PROJECT_ID or "placeholder",

location=os.environ.get("GOOGLE_CLOUD_LOCATION", "us-central1")

)

logger.info(f"[Star Tools] Initialized for project: {PROJECT_ID}")

# =============================================================================

# OneMCP BigQuery Connection

# =============================================================================

BIGQUERY_MCP_URL = "https://bigquery.googleapis.com/mcp"

_bigquery_toolset = None

def get_bigquery_mcp_toolset():
    """
    Get the MCPToolset connected to Google's BigQuery MCP server.

    This uses OAuth 2.0 authentication with Application Default Credentials.
    The toolset provides access to BigQuery's pre-built MCP tools like:
    - execute_query: Run SQL queries
    - list_datasets: List available datasets
    - get_table_schema: Get table structure
    """
    global _bigquery_toolset

    if _bigquery_toolset is not None:
        return _bigquery_toolset

    logger.info("[Star Tools] Connecting to OneMCP BigQuery...")

    # Get OAuth credentials
    credentials, project_id = google.auth.default(
        scopes=["https://www.googleapis.com/auth/bigquery"]
    )

    # Refresh to get a valid token
    credentials.refresh(google.auth.transport.requests.Request())
    oauth_token = credentials.token

    # Configure headers for BigQuery MCP
    headers = {
        "Authorization": f"Bearer {oauth_token}",
        "x-goog-user-project": project_id or PROJECT_ID
    }

    # Create MCPToolset with StreamableHTTP connection
    _bigquery_toolset = MCPToolset(
        connection_params=StreamableHTTPConnectionParams(
            url=BIGQUERY_MCP_URL,
            headers=headers
        )
    )

    logger.info("[Star Tools] Connected to BigQuery MCP")
    return _bigquery_toolset

# =============================================================================

# Local FunctionTool: Star Feature Extraction

# =============================================================================

# This is a LOCAL tool that calls Gemini directly - demonstrating that

# you can mix local FunctionTools with MCP tools in the same agent.

STAR_EXTRACTION_PROMPT = """Analyze this alien night sky image and extract stellar features.

Identify:

1. PRIMARY STAR TYPE: blue_giant, red_dwarf, red_dwarf_binary, green_pulsar, yellow_sun, etc.

2. NEBULA TYPE: ice_blue, fire, purple_magenta, golden, etc.

3. STELLAR COLOR: blue_white, red_orange, green_purple, yellow_gold, etc.

Respond ONLY with valid JSON:

{"primary_star": "...", "nebula_type": "...", "stellar_color": "...", "description": "..."}

"""

def _parse_json_response(text: str) -> dict:
    """Parse JSON from Gemini response, handling markdown formatting."""
    cleaned = text.strip()
    if cleaned.startswith("```json"):
        cleaned = cleaned[7:]
    elif cleaned.startswith("```"):
        cleaned = cleaned[3:]
    if cleaned.endswith("```"):
        cleaned = cleaned[:-3]
    cleaned = cleaned.strip()

    try:
        return json.loads(cleaned)
    except json.JSONDecodeError as e:
        logger.error(f"Failed to parse JSON: {e}")
        return {"error": f"Failed to parse response: {str(e)}"}

def extract_star_features(image_url: str) -> dict:
    """
    Extract stellar features from a star field image using Gemini Vision.

    This is a LOCAL FunctionTool - we call Gemini directly, not through MCP.
    The agent will use this alongside the BigQuery MCP tools.
    """
    logger.info(f"[Stars] Extracting features from: {image_url}")

    response = genai_client.models.generate_content(
        model="gemini-2.5-flash",
        contents=[
            STAR_EXTRACTION_PROMPT,
            genai_types.Part.from_uri(file_uri=image_url, mime_type="image/png")
        ]
    )

    result = _parse_json_response(response.text)
    logger.info(f"[Stars] Extracted: primary_star={result.get('primary_star')}")
    return result

# Create the local FunctionTool
extract_star_features_tool = FunctionTool(extract_star_features)

7. Build the Orchestrator

Now create the parallel crew and the root orchestrator that coordinates everything.

Create the Parallel Analysis Crew

Why run the three specialists in parallel? Because they are completely independent: the geological analyst doesn't need to wait for the botanical analyst's results, and vice versa. Each specialist analyzes different evidence using different tools. ParallelAgent runs all three concurrently, cutting total analysis time from ~30 s (sequential) to ~10 s (parallel).
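The time saving comes from overlapping independent waits. The sketch below shows the effect with plain `asyncio`; it is not how ParallelAgent is implemented, and `fake_specialist` with its 0.1-second delay is a hypothetical stand-in for a real model or tool call.

```python
import asyncio
import time

SPECIALISTS = ("geological", "botanical", "astronomical")


async def fake_specialist(name: str, seconds: float) -> str:
    """Pretend to analyze evidence by waiting, as an I/O-bound call would."""
    await asyncio.sleep(seconds)
    return f"{name} done"


async def run_sequential(delay: float) -> float:
    start = time.perf_counter()
    for name in SPECIALISTS:
        await fake_specialist(name, delay)  # each call waits for the previous one
    return time.perf_counter() - start


async def run_parallel(delay: float) -> float:
    start = time.perf_counter()
    # All three coroutines are started together and awaited as a group.
    await asyncio.gather(*(fake_specialist(name, delay) for name in SPECIALISTS))
    return time.perf_counter() - start


sequential = asyncio.run(run_sequential(0.1))
parallel = asyncio.run(run_parallel(0.1))
print(f"sequential: {sequential:.2f}s, parallel: {parallel:.2f}s")
```

The sequential run takes roughly the sum of the three delays, while the parallel run takes roughly the longest single delay, which is the same ratio as the ~30 s versus ~10 s estimate above.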

👉✏️ Open agent/agent.py and find #REPLACE-PARALLEL-CREW. Replace it with:

import os

import logging

import httpx

from google.adk.agents import Agent, ParallelAgent

from google.adk.agents.callback_context import CallbackContext

# Import specialist agents

from agent.agents.geological_analyst import geological_analyst

from agent.agents.botanical_analyst import botanical_analyst

from agent.agents.astronomical_analyst import astronomical_analyst

# Import confirmation tool

from agent.tools.confirm_tools import confirm_location_tool

logger = logging.getLogger(__name__)

# =============================================================================

# BEFORE AGENT CALLBACK - Fetches config and sets state

# =============================================================================

async def setup_participant_context(callback_context: CallbackContext) -> None:
    """
    Fetch participant configuration and populate state for all agents.

    This callback:
    1. Reads PARTICIPANT_ID and BACKEND_URL from environment
    2. Fetches participant data from the backend API
    3. Sets state values: soil_url, flora_url, stars_url, username, x, y, etc.
    4. Returns None to continue normal agent execution
    """
    participant_id = os.environ.get("PARTICIPANT_ID", "")
    backend_url = os.environ.get("BACKEND_URL", "https://api.waybackhome.dev")
    project_id = os.environ.get("GOOGLE_CLOUD_PROJECT", "")

    logger.info(f"[Callback] Setting up context for participant: {participant_id}")

    # Set project_id and backend_url in state immediately
    callback_context.state["project_id"] = project_id
    callback_context.state["backend_url"] = backend_url
    callback_context.state["participant_id"] = participant_id

    if not participant_id:
        logger.warning("[Callback] No PARTICIPANT_ID set - using placeholder values")
        callback_context.state["username"] = "Explorer"
        callback_context.state["x"] = 0
        callback_context.state["y"] = 0
        callback_context.state["soil_url"] = "Not available - set PARTICIPANT_ID"
        callback_context.state["flora_url"] = "Not available - set PARTICIPANT_ID"
        callback_context.state["stars_url"] = "Not available - set PARTICIPANT_ID"
        return None

    # Fetch participant data from backend API
    try:
        url = f"{backend_url}/participants/{participant_id}"
        logger.info(f"[Callback] Fetching from: {url}")

        async with httpx.AsyncClient(timeout=30.0) as client:
            response = await client.get(url)
            response.raise_for_status()
            data = response.json()

        # Extract evidence URLs
        evidence_urls = data.get("evidence_urls", {})

        # Set all state values for sub-agents to access
        callback_context.state["username"] = data.get("username", "Explorer")
        callback_context.state["x"] = data.get("x", 0)
        callback_context.state["y"] = data.get("y", 0)
        callback_context.state["soil_url"] = evidence_urls.get("soil", "Not available")
        callback_context.state["flora_url"] = evidence_urls.get("flora", "Not available")
        callback_context.state["stars_url"] = evidence_urls.get("stars", "Not available")

        logger.info(f"[Callback] State populated for {data.get('username')}")

    except Exception as e:
        logger.error(f"[Callback] Error fetching participant config: {e}")
        callback_context.state["username"] = "Explorer"
        callback_context.state["x"] = 0
        callback_context.state["y"] = 0
        callback_context.state["soil_url"] = f"Error: {e}"
        callback_context.state["flora_url"] = f"Error: {e}"
        callback_context.state["stars_url"] = f"Error: {e}"

    return None

# =============================================================================

# PARALLEL ANALYSIS CREW

# =============================================================================

evidence_analysis_crew = ParallelAgent(

name="EvidenceAnalysisCrew",

description="Runs geological, botanical, and astronomical analysis in parallel.",

sub_agents=[geological_analyst, botanical_analyst, astronomical_analyst]

)

Create the Root Orchestrator

Now create the root agent that coordinates everything and uses the callback.

👉✏️ In the same file (agent/agent.py), find #REPLACE-ROOT-ORCHESTRATOR. Replace it with:

root_agent = Agent(

name="MissionAnalysisAI",

model="gemini-2.5-flash",

description="Coordinates crash site analysis to confirm explorer location.",

instruction="""You are the Mission Analysis AI coordinating a rescue operation.

## Explorer Information

- Name: {username}

- Coordinates: ({x}, {y})

## Evidence URLs (automatically provided to specialists via state)

- Soil sample: {soil_url}

- Flora recording: {flora_url}

- Star field: {stars_url}

## Your Workflow

### STEP 1: DELEGATE TO ANALYSIS CREW

Tell the EvidenceAnalysisCrew to analyze all the evidence.

The evidence URLs are already available to the specialists.

### STEP 2: COLLECT RESULTS

Each specialist will report:

- "GEOLOGICAL ANALYSIS: [BIOME] (confidence: X%)"

- "BOTANICAL ANALYSIS: [BIOME] (confidence: X%)"

- "ASTRONOMICAL ANALYSIS: [BIOME] in [QUADRANT] quadrant (confidence: X%)"

### STEP 3: APPLY 2-OF-3 AGREEMENT RULE

- If 2 or 3 specialists agree → that's the answer

- If all 3 disagree → use judgment based on confidence

### STEP 4: CONFIRM LOCATION

Call confirm_location with the determined biome.

## Biome Reference

| Biome | Quadrant | Key Characteristics |

|-------|----------|---------------------|

| CRYO | NW | Frozen, blue, ice crystals |

| VOLCANIC | NE | Magma, red/orange, obsidian |

| BIOLUMINESCENT | SW | Glowing, purple/green |

| FOSSILIZED | SE | Amber, golden, ancient |

## Response Style

Be encouraging and narrative! Celebrate when the beacon activates!

""",

sub_agents=[evidence_analysis_crew],

tools=[confirm_location_tool],

before_agent_callback=setup_participant_context

)

Create the Location Confirmation Tool

This is the final piece: the tool that actually confirms your location with Mission Control and activates your beacon. Once the root orchestrator has determined which biome you're in (using the 2-of-3 agreement rule), it calls this tool to send the result to the backend API.
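In the workshop the orchestrator applies the 2-of-3 agreement rule through its prompt, so the decision is made by the model. As a deterministic illustration of the same rule, here is a minimal sketch; `decide_biome` is a hypothetical helper, and falling back to the single most confident report when all three disagree is a simplification of "use judgment based on confidence".

```python
from collections import Counter


def decide_biome(reports: list[tuple[str, float]]) -> str:
    """Pick a biome from (biome, confidence) reports, one per specialist."""
    counts = Counter(biome for biome, _ in reports)
    biome, votes = counts.most_common(1)[0]
    if votes >= 2:
        return biome  # two or three specialists agree
    # No agreement: fall back to the most confident single report.
    return max(reports, key=lambda report: report[1])[0]


print(decide_biome([("CRYO", 0.90), ("CRYO", 0.60), ("VOLCANIC", 0.95)]))  # CRYO
```

Note that agreement wins over confidence: two moderately confident CRYO votes outweigh one highly confident VOLCANIC vote.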

This tool uses ToolContext, which gives it access to the state values (such as participant_id and backend_url) that were set earlier by the before_agent_callback.
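The state hand-off can be pictured as a callback writing into a shared dictionary that a tool later reads. The sketch below uses a plain stand-in class rather than the real ADK types; `FakeContext`, `populate_state`, `confirm`, and the example URL are all hypothetical, and in ADK the callback and the tool receive different context objects backed by the same session state.

```python
from dataclasses import dataclass, field


@dataclass
class FakeContext:
    """Stand-in for a context object: just a shared state dictionary."""

    state: dict = field(default_factory=dict)


def populate_state(ctx: FakeContext) -> None:
    # Plays the role of the before-agent callback: runs first, writes state.
    ctx.state["participant_id"] = "abc123"
    ctx.state["backend_url"] = "https://api.example.invalid"


def confirm(biome: str, ctx: FakeContext) -> dict:
    # Plays the role of the tool: reads what the callback stored.
    participant_id = ctx.state.get("participant_id", "")
    if not participant_id:
        return {"success": False, "message": "No participant ID available."}
    url = f"{ctx.state['backend_url']}/participants/{participant_id}/location"
    return {"success": True, "biome": biome.upper(), "would_patch": url}


ctx = FakeContext()
print(confirm("cryo", ctx)["success"])  # False, state not populated yet
populate_state(ctx)
print(confirm("cryo", ctx)["would_patch"])
```

Keeping these values in state means the model never has to pass the participant ID or backend URL as tool arguments; it only supplies the biome.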

👉✏️ In agent/tools/confirm_tools.py, find #REPLACE-CONFIRM-TOOL. Replace it with:

import os
import logging

import requests
from google.adk.tools import FunctionTool
from google.adk.tools.tool_context import ToolContext

logger = logging.getLogger(__name__)

BIOME_TO_QUADRANT = {
    "CRYO": "NW",
    "VOLCANIC": "NE",
    "BIOLUMINESCENT": "SW",
    "FOSSILIZED": "SE"
}


def _get_actual_biome(x: int, y: int) -> tuple[str, str]:
    """Determine actual biome and quadrant from coordinates."""
    if x < 50 and y >= 50:
        return "NW", "CRYO"
    elif x >= 50 and y >= 50:
        return "NE", "VOLCANIC"
    elif x < 50 and y < 50:
        return "SW", "BIOLUMINESCENT"
    else:
        return "SE", "FOSSILIZED"


def confirm_location(biome: str, tool_context: ToolContext) -> dict:
    """
    Confirm the explorer's location and activate the rescue beacon.

    Uses ToolContext to read state values set by before_agent_callback.
    """
    # Read from state (set by before_agent_callback)
    participant_id = tool_context.state.get("participant_id", "")
    x = tool_context.state.get("x", 0)
    y = tool_context.state.get("y", 0)
    backend_url = tool_context.state.get("backend_url", "https://api.waybackhome.dev")

    # Fallback to environment variables
    if not participant_id:
        participant_id = os.environ.get("PARTICIPANT_ID", "")
    if not backend_url:
        backend_url = os.environ.get("BACKEND_URL", "https://api.waybackhome.dev")

    if not participant_id:
        return {"success": False, "message": "❌ No participant ID available."}

    biome_upper = biome.upper().strip()
    if biome_upper not in BIOME_TO_QUADRANT:
        return {"success": False, "message": f"❌ Unknown biome: {biome}"}

    # Get actual biome from coordinates
    actual_quadrant, actual_biome = _get_actual_biome(x, y)

    if biome_upper != actual_biome:
        return {
            "success": False,
            "message": f"❌ Mismatch! Analysis: {biome_upper}, Actual: {actual_biome}"
        }

    quadrant = BIOME_TO_QUADRANT[biome_upper]

    try:
        response = requests.patch(
            f"{backend_url}/participants/{participant_id}/location",
            params={"x": x, "y": y},
            timeout=10
        )
        response.raise_for_status()
        return {
            "success": True,
            "message": f"🔦 BEACON ACTIVATED!\n\nLocation: {biome_upper} in {quadrant}\nCoordinates: ({x}, {y})"
        }
    except requests.exceptions.ConnectionError:
        return {
            "success": True,
            "message": f"🔦 BEACON ACTIVATED! (Local)\n\nLocation: {biome_upper} in {quadrant}",
            "simulated": True
        }
    except Exception as e:
        return {"success": False, "message": f"❌ Failed: {str(e)}"}


confirm_location_tool = FunctionTool(confirm_location)
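The tool relies on two pieces of knowledge agreeing with each other: the BIOME_TO_QUADRANT table and the coordinate checks in _get_actual_biome. A quick way to convince yourself they are consistent is a small standalone check like the sketch below. It copies the quadrant logic into a local function (renamed `get_actual_biome`) so it can run without ADK installed. The probe coordinates are arbitrary values chosen for this sketch, not limits taken from the codelab.

```python
BIOME_TO_QUADRANT = {
    "CRYO": "NW",
    "VOLCANIC": "NE",
    "BIOLUMINESCENT": "SW",
    "FOSSILIZED": "SE",
}


def get_actual_biome(x: int, y: int) -> tuple[str, str]:
    """Local copy of the tool's quadrant logic; returns (quadrant, biome)."""
    if x < 50 and y >= 50:
        return "NW", "CRYO"
    if x >= 50 and y >= 50:
        return "NE", "VOLCANIC"
    if x < 50 and y < 50:
        return "SW", "BIOLUMINESCENT"
    return "SE", "FOSSILIZED"


def mapping_is_consistent() -> bool:
    """Check that every probed point maps to a biome whose table entry matches."""
    # Arbitrary probe points, including both sides of the x=50 and y=50 boundaries.
    probes = [(0, 99), (49, 50), (50, 50), (99, 99), (0, 0), (49, 49), (50, 0), (50, 49)]
    return all(
        BIOME_TO_QUADRANT[biome] == quadrant
        for quadrant, biome in (get_actual_biome(x, y) for x, y in probes)
    )


print(mapping_is_consistent())
print(get_actual_biome(50, 50))
```

Note the boundary behavior: a coordinate of exactly 50 counts as east (for x) or north (for y), so (50, 50) falls in the NE quadrant.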

8. Testing with the ADK Web UI

Now let's test the complete multi-agent system locally.

Start the ADK Web Server

👉💻 Set the environment variables and start the ADK web server:

cd $HOME/way-back-home/level_1

source $HOME/way-back-home/set_env.sh

# Verify environment is set

echo "PARTICIPANT_ID: $PARTICIPANT_ID"

echo "MCP Server: $MCP_SERVER_URL"

# Start ADK web server

uv run adk web

You should see:

+-----------------------------------------------------------------------------+

| ADK Web Server started |

| |

| For local testing, access at http://localhost:8000. |

+-----------------------------------------------------------------------------+

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

Mengakses UI Web

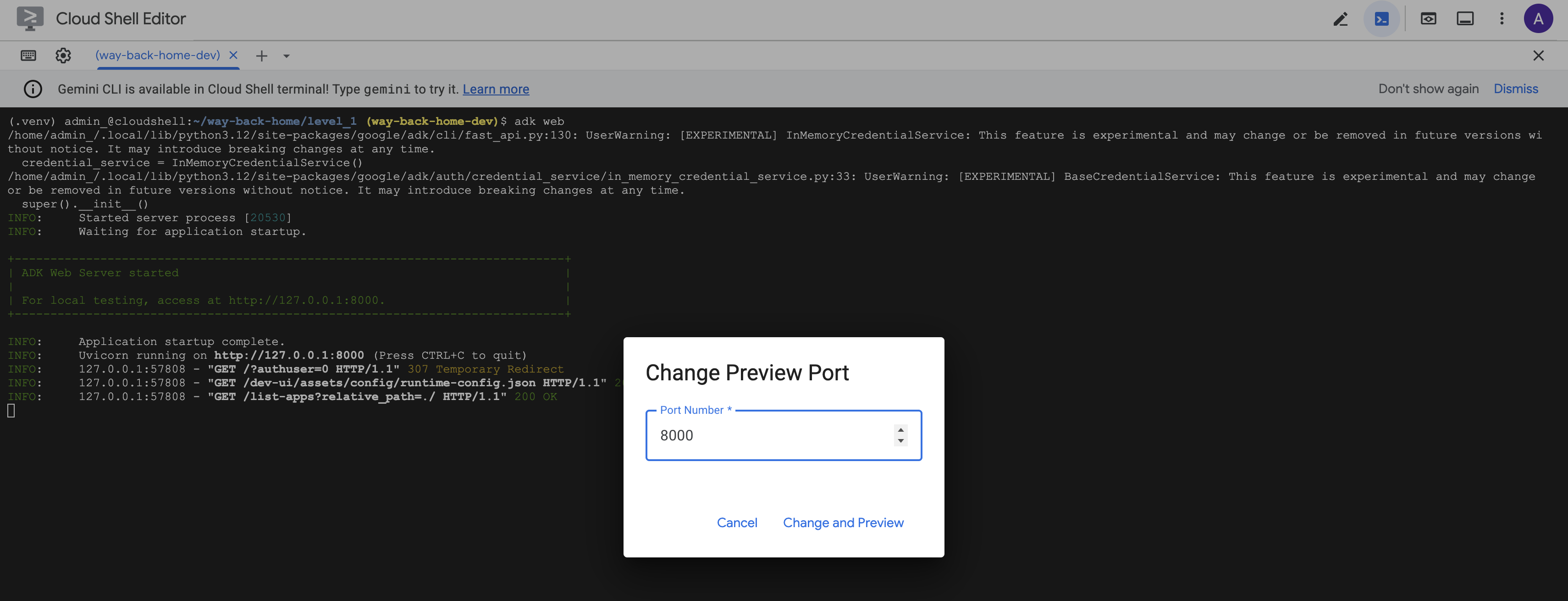

👉 Dari ikon Pratinjau web di toolbar Cloud Shell (kanan atas), pilih Ubah port.

![]()

👉 Tetapkan port ke 8000 dan klik "Change and Preview".

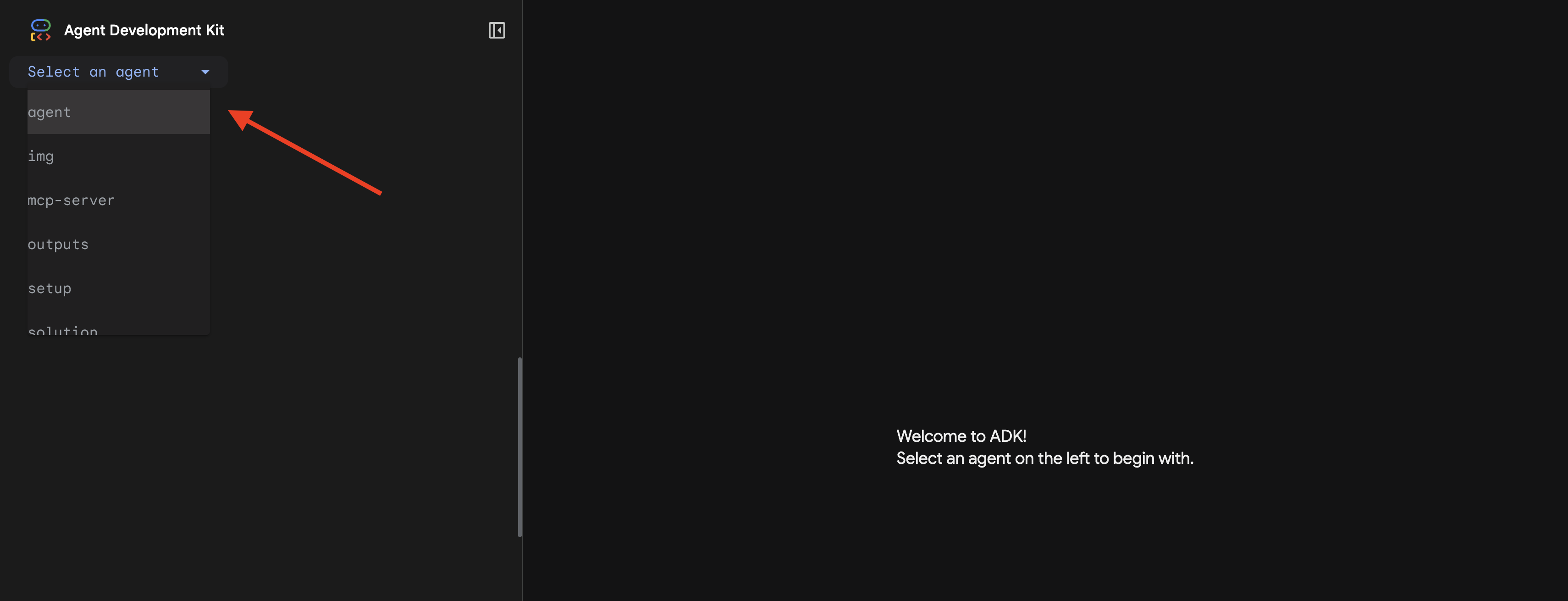

👉 UI Web ADK akan terbuka. Pilih agen dari menu dropdown.

Menjalankan Analisis

👉 Di antarmuka chat, ketik:

Analyze the evidence from my crash site and confirm my location to activate the beacon.

Watch the multi-agent system at work:

👉 After all three agents have finished their analysis, type:

Where am I?

How the system processes your request:

The trace panel on the right shows every agent interaction and tool call.

👉 Press Ctrl+C in the terminal to stop the server when you have finished testing.

9. Deploying to Cloud Run

Now deploy your multi-agent system to Cloud Run so that it is A2A-ready.

Deploying the Agent

👉💻 Deploy to Cloud Run using the ADK CLI:

cd $HOME/way-back-home/level_1

source $HOME/way-back-home/set_env.sh

uv run adk deploy cloud_run \
  --project=$GOOGLE_CLOUD_PROJECT \
  --region=$REGION \
  --service_name=mission-analysis-ai \
  --with_ui \
  --a2a \
  ./agent

When prompted with Do you want to continue (Y/n) and Allow unauthenticated invocations to [mission-analysis-ai] (Y/n)?, enter Y for both to deploy and to allow public access to your A2A agent.

You should see output like:

Building and deploying agent to Cloud Run...

✓ Container built successfully

✓ Deploying to Cloud Run...

✓ Service deployed: https://mission-analysis-ai-abc123-uc.a.run.app

Setting Environment Variables on Cloud Run

The deployed agent needs access to the environment variables. Update the service:

👉💻 Set the required environment variables:

gcloud run services update mission-analysis-ai \
  --region=$REGION \
  --set-env-vars="GOOGLE_CLOUD_PROJECT=$GOOGLE_CLOUD_PROJECT,GOOGLE_CLOUD_LOCATION=$REGION,MCP_SERVER_URL=$MCP_SERVER_URL,BACKEND_URL=$BACKEND_URL,PARTICIPANT_ID=$PARTICIPANT_ID,GOOGLE_GENAI_USE_VERTEXAI=True"
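If any of the shell variables in that command is empty when it runs, the service receives an empty value for it. A small check like the sketch below can catch this, either locally before you run the update or at agent startup. The variable names come from the command above; the helper `missing_env_vars` is hypothetical and not part of the codelab.

```python
import os
from collections.abc import Mapping

# Names taken from the --set-env-vars flag above.
REQUIRED_VARS = (
    "GOOGLE_CLOUD_PROJECT",
    "GOOGLE_CLOUD_LOCATION",
    "MCP_SERVER_URL",
    "BACKEND_URL",
    "PARTICIPANT_ID",
    "GOOGLE_GENAI_USE_VERTEXAI",
)


def missing_env_vars(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required variable names that are unset or blank in env."""
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]


# Report against the current process environment.
missing = missing_env_vars()
if missing:
    print("Missing or empty:", ", ".join(missing))
else:
    print("All required variables are set.")
```

Passing the environment as a parameter keeps the helper easy to test with a plain dictionary.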

Saving the Agent URL

👉💻 Get the deployed URL:

export AGENT_URL=$(gcloud run services describe mission-analysis-ai \
  --region=$REGION --format='value(status.url)')

echo "Agent URL: $AGENT_URL"

# Add to set_env.sh

echo "export LEVEL1_AGENT_URL=\"$AGENT_URL\"" >> $HOME/way-back-home/set_env.sh

Verifying the Deployment

👉💻 Test the deployed agent by opening the URL in your browser (the --with_ui flag deploys the ADK web interface), or test it with curl:

curl -X GET "$AGENT_URL/list-apps"

You should see a response that lists your agent.
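The same check can be scripted in Python, for example as part of a smoke test. The sketch below uses only the standard library. The helper names `list_apps_url` and `fetch_apps` are hypothetical, and the sketch assumes the /list-apps response body is JSON, which this section does not state explicitly.

```python
import json
import urllib.request


def list_apps_url(agent_url: str) -> str:
    """Build the /list-apps URL, tolerating a trailing slash on the base URL."""
    return agent_url.rstrip("/") + "/list-apps"


def fetch_apps(agent_url: str, timeout: float = 10.0):
    """GET /list-apps and decode the body, assuming it is JSON."""
    with urllib.request.urlopen(list_apps_url(agent_url), timeout=timeout) as response:
        return json.load(response)


# Example usage (performs a network call, so it is left commented out):
# import os
# print(fetch_apps(os.environ["LEVEL1_AGENT_URL"]))
```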

10. Conclusion

🎉 Level 1 Complete!

Your rescue beacon is now broadcasting at full strength. The triangulated signal cuts through the atmospheric interference, a steady pulse that says "I'm here. I survived. Come find me."

But you are not the only one on this planet. As your beacon activates, you notice other lights flickering on the horizon: other survivors, other crash sites, other explorers who made it through.

In Level 2, you will learn how to process incoming SOS signals and coordinate with other survivors. Rescue is not only about being found; it is also about finding each other.

Troubleshooting

"MCP_SERVER_URL not set"

export MCP_SERVER_URL=$(gcloud run services describe location-analyzer \
  --region=$REGION --format='value(status.url)')

"PARTICIPANT_ID not set"

source $HOME/way-back-home/set_env.sh

echo $PARTICIPANT_ID

"BigQuery table not found"

uv run python setup/setup_star_catalog.py

"Specialists asking for URLs" This means the {key} templating is not working. Check:

- Is before_agent_callback set on the root agent?
- Does the callback set the state values correctly?
- Do the sub-agents use {soil_url} in a plain string (not an f-string)?

"All three analyses disagree" Regenerate the evidence: uv run python generate_evidence.py

"Agent does not respond in adk web"

- Make sure port 8000 is the one being previewed
- Verify that MCP_SERVER_URL and PARTICIPANT_ID are set
- Check the terminal for error messages
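The "Specialists asking for URLs" item above comes down to when a placeholder is filled in. An f-string is evaluated at the point where it is written, typically at import time and before any callback has populated state. A plain string keeps the {soil_url} placeholder so it can be resolved later from state. The sketch below illustrates only that timing difference: it uses `str.format_map` and a dictionary as stand-ins, and is not how ADK performs the substitution internally. The URL is a made-up example.

```python
# Stand-in for session state as populated by before_agent_callback.
state = {"soil_url": "gs://example-bucket/soil.png"}  # hypothetical URL

# Plain string: the placeholder survives until state is available.
TEMPLATE = "Analyze the soil sample at {soil_url}."


def render(template: str, values: dict) -> str:
    """Fill {key} placeholders from values (simplified stand-in for templating)."""
    return template.format_map(values)


# f-string: evaluated immediately, using whatever the variable holds right now.
soil_url = ""  # nothing has been fetched yet when the module is imported
EAGER = f"Analyze the soil sample at {soil_url}."

print(render(TEMPLATE, state))
print(EAGER)
```

The second line printed has no URL in it, which is what a specialist would see (and then ask about) if its instruction had been built with an f-string too early.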

Architecture Summary

| Component | Type | Pattern | Purpose |
|-----------|------|---------|---------|
| setup_participant_context | Callback | before_agent_callback | Fetches config, sets state |
| GeologicalAnalyst | Agent | {soil_url} templating | Soil classification |
| BotanicalAnalyst | Agent | {flora_url} templating | Flora classification |
| AstronomicalAnalyst | Agent | {stars_url}, {project_id} | Star triangulation |
| confirm_location | Tool | ToolContext state access | Activates the beacon |
| EvidenceAnalysisCrew | ParallelAgent | Sub-agent composition | Runs the specialists concurrently |
| MissionAnalysisAI | Agent (Root) | Orchestrator + callback | Coordinates + synthesizes |
| location-analyzer | FastMCP server | Custom MCP | Geological + botanical analysis |
| bigquery.googleapis.com/mcp | OneMCP | Managed MCP | BigQuery access |

Key Concepts Mastered

✓ before_agent_callback: Fetch configuration before the agent runs

✓ {key} State Templating: Access state values in agent instructions

✓ ToolContext: Access state values in tool functions

✓ State Sharing: Parent state is automatically available to sub-agents through InvocationContext

✓ Multi-Agent Architecture: Specialized agents with a single responsibility

✓ ParallelAgent: Concurrent execution of independent tasks

✓ Custom MCP Server: Your own MCP server on Cloud Run

✓ OneMCP BigQuery: Managed MCP pattern for database access

✓ Cloud Deployment: Stateless deployment using environment variables

✓ A2A Preparation: Agent ready for agent-to-agent communication

For Non-Gamers: Real-World Applications

"Determining your location" represents Parallel Expert Analysis with Consensus: running several specialized AI analyses at the same time and synthesizing their results.

Enterprise Applications

| Use Case | Parallel Experts | Synthesis Rule |
|----------|------------------|----------------|
| Medical Diagnosis | Image analyst, symptom analyst, lab analyst | 2-of-3 confidence threshold |
| Fraud Detection | Transaction analyst, behavior analyst, network analyst | Any 1 flag = review |
| Document Processing | OCR agent, classification agent, extraction agent | All must agree |
| Quality Control | Visual inspector, sensor analyst, spec checker | 2-of-3 pass |

Key Architectural Insights

- before_agent_callback for configuration: Fetch the configuration once at the start and populate state for all sub-agents. No sub-agent reads a config file.

- {key} State Templating: Declarative, clean, idiomatic. No f-strings, no imports, no sys.path manipulation.

- Consensus mechanism: 2-of-3 agreement handles ambiguity without requiring unanimous agreement.

- ParallelAgent for independent tasks: If the analyses do not depend on each other, run them concurrently to save time.

- Two MCP patterns: Custom (build your own) vs. OneMCP (Google-hosted). Both use StreamableHTTP.

- Stateless deployment: The same code works locally and when deployed. Environment variables + backend API = no config files in the container.
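The benefit of running independent analyses concurrently can be seen in a toy example built on Python's asyncio. This is only an illustration of the idea, not how ParallelAgent is implemented. The `analyst` coroutine, its simulated delay, and the hard-coded biome results are all made up for the sketch.

```python
import asyncio


async def analyst(name: str, biome: str, delay: float) -> tuple[str, str]:
    """Simulated specialist: waits for `delay` seconds, then reports a biome."""
    await asyncio.sleep(delay)  # stands in for independent I/O-bound work
    return name, biome


async def run_crew() -> dict[str, str]:
    """Run three independent analysts concurrently and collect their reports."""
    results = await asyncio.gather(
        analyst("geological", "CRYO", 0.05),
        analyst("botanical", "CRYO", 0.05),
        analyst("astronomical", "CRYO", 0.05),
    )
    return dict(results)


print(asyncio.run(run_crew()))
```

Because the three coroutines wait at the same time, the total wall-clock time is close to the slowest single analyst rather than the sum of all three. `asyncio.gather` returns results in the order the coroutines were passed in, regardless of which finishes first.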

What's Next?

Level 2: SOS Signal Processing →

Learn how to process incoming distress signals from other survivors using event-driven patterns and more advanced agent coordination.