1. Introduction

Thanks to recent progress in machine learning, it has become relatively easy for computers to recognize objects in images. In this codelab, we will walk you through an end-to-end journey of building an image classification model that can recognize different types of objects, then deploying the model in an Android and iOS app. ML Kit and AutoML let you build and deploy the model at scale without any machine learning expertise.

What is ML Kit?

ML Kit is a mobile SDK that brings Google's machine learning expertise to Android and iOS apps in a powerful yet easy-to-use package. Whether you are new to machine learning or experienced in it, you can implement the functionality you need in just a few lines of code. Several ready-to-use APIs recognize text, faces, and more. However, if you need to recognize objects that these APIs do not support, such as different types of flowers in an image, you need to train your own model. This is where AutoML can help.

What is AutoML?

Cloud AutoML is a suite of machine learning products that lets developers with limited machine learning expertise train high-quality models specific to their business needs, using Google's state-of-the-art transfer learning and neural architecture search technology.

In this codelab, we will use AutoML Vision Edge in ML Kit to train a flower classification model. The model is trained in the cloud, but the app then bundles or downloads it so that inference runs entirely on the device.

What you'll learn

- How to train an image classification model with AutoML Vision Edge in ML Kit.

- How to run it in a sample Android or iOS app with the ML Kit SDK.

What you'll need

For the Android app

- A recent version of Android Studio (v3.4 or later)

- Android Studio Emulator with Play Store, or a physical Android device (version 5.0 or later)

- Basic knowledge of Android development in Kotlin

For the iOS app

- A recent version of Xcode (v10.2 or later)

- iOS Simulator or a physical iOS device (version 9.0 or later)

- CocoaPods

- Basic knowledge of iOS development in Swift

2. Setup

Download the code and training dataset

Download a ZIP archive that contains the source code for this codelab and a training dataset. Extract the archive on your local machine.

Create a Firebase project

- Go to the Firebase console.

- Select Create New Project and name the project “ML Kit Codelab”.

Configure the Android app

- Add the Android app to the Firebase project. Android package name: com.google.firebase.codelab.mlkit.automl

- Download the google-services.json config file and place it in the Android app at android/mlkit-automl/app/google-services.json.
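As a quick sanity check on the placement step, the following minimal Kotlin sketch tests whether a config file exists and mentions the expected package name. The helper name configMentionsPackage is hypothetical, and the check is a plain substring search rather than a JSON parse, so treat it as a rough aid only.

```kotlin
import java.io.File

// Hypothetical helper (not part of any SDK): returns true when the file exists
// and its text contains the given package name somewhere.
fun configMentionsPackage(configFile: File, packageName: String): Boolean =
    configFile.isFile && configFile.readText().contains(packageName)

fun main() {
    // Path and package name are the ones given in this codelab;
    // run from the folder where the codelab archive was extracted.
    val config = File("android/mlkit-automl/app/google-services.json")
    val ok = configMentionsPackage(config, "com.google.firebase.codelab.mlkit.automl")
    println(if (ok) "Config file looks right" else "Config file is missing or belongs to another package")
}
```

The same helper can be pointed at any other text-based config file, since it does not depend on the file format.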

Configure the iOS app

- Add the iOS app to the Firebase project. iOS bundle ID: com.google.firebase.codelab.mlkit.automl

- Download the GoogleService-Info.plist config file and follow the instructions to add it to the iOS app at ios/mlkit-automl/GoogleService-Info.plist.

3. Prepare the training dataset

To train a model to recognize different types of objects, you need to prepare a set of images and label each of them. For this codelab, we have prepared an archive of Creative Commons-licensed flower photos for you to use.

The dataset is packaged as a ZIP file named flower_photos.zip, which is included in the ZIP archive you downloaded in the previous step.

Explore the dataset

If you extract flower_photos.zip, you will see that the dataset contains images of 5 flower types: dandelion, daisy, tulips, sunflowers, and roses, organized into folders named after the flowers. This is a handy way to create a training dataset to feed to AutoML and train an image classification model.

This training dataset has 200 images for each flower type. You need a minimum of only 10 images per class to train a model. However, more training images generally lead to better models.
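The folder-per-class layout makes it easy to check a dataset before uploading it. The following Kotlin sketch counts the images in each class folder and reports any class below the 10-image minimum. The function names, the list of image extensions, and the assumption that the archive extracts to a folder named flower_photos are illustrative and not part of the codelab.

```kotlin
import java.io.File

// Hypothetical helper: maps each class folder name to the number of image files in it.
fun countImagesPerClass(datasetRoot: File): Map<String, Int> {
    // Assumed set of image extensions; adjust to match your files.
    val imageExtensions = setOf("jpg", "jpeg", "png")
    return datasetRoot.listFiles().orEmpty()
        .filter { it.isDirectory }
        .sortedBy { it.name }
        .associate { dir ->
            val images = dir.listFiles().orEmpty()
                .count { it.isFile && it.extension.lowercase() in imageExtensions }
            dir.name to images
        }
}

// Returns the classes that have fewer images than the required minimum.
fun classesBelowMinimum(counts: Map<String, Int>, minimum: Int = 10): List<String> =
    counts.filterValues { it < minimum }.keys.toList()

fun main(args: Array<String>) {
    // Assumed default folder name; pass another path as the first argument.
    val root = File(args.firstOrNull() ?: "flower_photos")
    val counts = countImagesPerClass(root)
    counts.forEach { (label, n) -> println("$label: $n images") }
    val tooSmall = classesBelowMinimum(counts)
    if (tooSmall.isNotEmpty()) println("Classes with fewer than 10 images: $tooSmall")
}
```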

4. Train a model

Upload the training dataset

- In the Firebase console, open the project you just created.

- Select ML Kit > AutoML.

- You may see some welcome screens. Select Get Started where applicable.

- After the setup finishes, select Add dataset and name it “Flowers”.

- For Model objective, choose Single-label classification, because the training data contains only one label per image.

- Select Create.

- Upload the flower_photos.zip file that you downloaded in the previous step to import the flower training dataset.

- Wait a few minutes for the import task to finish.

- You can now confirm that the dataset was imported correctly.

- Because all images in the training dataset are labeled, you can proceed to train a model.

Train an image classification model

Because our model will run on a mobile device with limited computing power and storage, we have to consider not only the model's accuracy but also its size and speed. There is always a trade-off between model accuracy, latency (that is, how long it takes to classify an image), and model size. Generally, a model with higher accuracy is also larger and takes longer to classify an image.

AutoML gives you several options: you can optimize for accuracy, optimize for latency and model size, or balance between the two. You can also choose how long the model is allowed to train. Larger datasets need to train longer.
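To make the latency side of this trade-off concrete, here is a minimal Kotlin sketch that averages the wall-clock time of repeated calls. The helper averageLatencyMs is hypothetical, and Thread.sleep stands in for the call that classifies one image; measured numbers will vary by device.

```kotlin
import kotlin.system.measureNanoTime

// Hypothetical helper: runs `classify` once as an untimed warm-up, then `runs`
// more times, and returns the average wall-clock time per call in milliseconds.
fun averageLatencyMs(runs: Int, classify: () -> Unit): Double {
    require(runs > 0) { "runs must be positive" }
    classify() // warm-up call, not timed
    var totalNanos = 0L
    repeat(runs) { totalNanos += measureNanoTime { classify() } }
    return totalNanos.toDouble() / runs / 1_000_000.0
}

fun main() {
    // Stand-in workload; in an app this would be the call that classifies one image.
    val latency = averageLatencyMs(runs = 20) { Thread.sleep(5) }
    println("Average latency: %.1f ms".format(latency))
}
```

Comparing this number across models trained with different optimization options is one way to see the trade-off on a real device.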

Follow these steps if you want to train the model yourself.

- Select Train model.

- Select the General purpose option and a training time of 1 compute hour.

- Wait a while (probably several hours) for the training task to finish.

- After the training task finishes, you will see evaluation metrics on how the trained model performs.
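The codelab does not list which evaluation metrics are shown. Precision and recall are two metrics commonly reported for classifiers, and the Kotlin sketch below shows how they are computed from per-class counts. The ClassCounts type and the sample numbers are hypothetical and used only for illustration.

```kotlin
// Hypothetical container for one class's evaluation counts.
data class ClassCounts(val truePositives: Int, val falsePositives: Int, val falseNegatives: Int)

// Precision: of the images predicted as this class, the fraction that really belong to it.
fun precision(c: ClassCounts): Double {
    val predicted = c.truePositives + c.falsePositives
    return if (predicted == 0) 0.0 else c.truePositives.toDouble() / predicted
}

// Recall: of the images that really belong to this class, the fraction the model found.
fun recall(c: ClassCounts): Double {
    val actual = c.truePositives + c.falseNegatives
    return if (actual == 0) 0.0 else c.truePositives.toDouble() / actual
}

fun main() {
    // Made-up counts for a single class, for illustration only.
    val roses = ClassCounts(truePositives = 18, falsePositives = 2, falseNegatives = 6)
    println("precision = ${precision(roses)}, recall = ${recall(roses)}")
}
```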

5. Use the model in mobile apps

Preparation

- This codelab includes Android and iOS sample apps that show how to use the image classification model we trained earlier in a mobile app. The two apps have similar features. Choose the platform you are more familiar with.

- Before you proceed, make sure that you have downloaded the sample apps and configured them in step 2.

- Also make sure that your local environment is set up to build apps for the platform you chose (Android or iOS).

Download the image classification model

- If you trained a model in the previous step, select Download to get the model.

- If you did not train a model, or your training task has not finished yet, you can use the model included in the sample apps.

Add the model to the sample apps

You only need to add the model to the sample apps and they will work out of the box. For a full guide on integrating ML Kit AutoML into your app, see our documentation (Android, iOS). The code that interacts with the ML Kit SDK is in ImageClassifier.kt and ImageClassifier.swift, respectively, so you can start there to explore how the apps work.

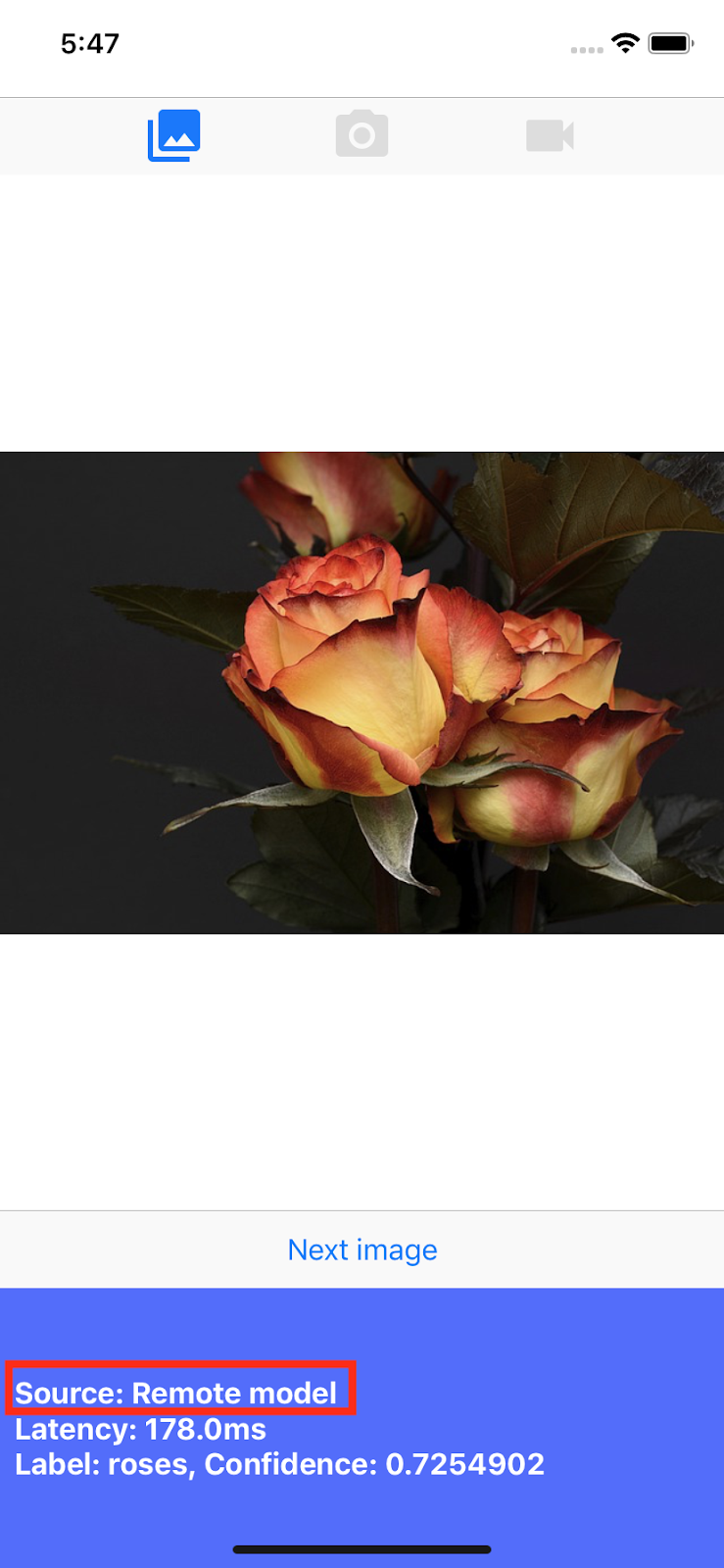

There are two options for deploying the model: local and remote.

- A local model is mainly used to bundle the image classification model in your app binary, although you can also provide a model saved in local storage. With bundling, the model is available to users immediately after they download your app from the App Store or Play Store, and it works without an internet connection.

- A remote model is hosted on Firebase and is downloaded to the user's device only when it is first needed. After that, the model also works offline.

Android app

- Open Android Studio.

- Import the Android app from android/mlkit-automl/.

- (Optional) Extract the model you downloaded and copy its contents over the model included in the sample app.

- Now click Run in the Android Studio toolbar and verify that the app can recognize different types of flowers.

iOS app

- Open Terminal and go to the ios/mlkit-automl/ folder.

- Run pod install to download the dependencies via CocoaPods.

- Run open MLVisionExample.xcworkspace/ to open the project workspace in Xcode.

- (Optional) Extract the model you downloaded and copy its contents over the model included in the sample app at ios/mlkit-automl/Resources/automl/.

- Now click Run in the Xcode toolbar and verify that the app can recognize different types of flowers.

6. Use the remote model (optional)

ML Kit's remote model feature lets you leave TensorFlow Lite models out of your app binary and download them on demand from Firebase when needed. Remote models have several benefits over local models:

- Smaller app binary

- Ability to update models without updating the app

- A/B testing with multiple versions of a model

In this step, we will publish a remote model and use it in the sample apps. Make sure that you have finished training your model in step 4 of this codelab.

Publish the model

- Go to the Firebase console.

- Select the “ML Kit Codelab” project you created earlier.

- Select ML Kit > AutoML.

- Select the “Flowers” dataset you created earlier.

- Confirm that the training task has completed, then select the model.

- Select Publish and name it “mlkit_flowers”.

Recognize flowers with the remote model

The sample apps are configured to use the remote model if it is available. After you publish the remote model, you only need to rerun the apps to trigger the model download. You can verify that the app is using the remote model by checking the “Source” value in the footer of the app screen. If it does not work, see the “Troubleshooting” section below.
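The "remote if available, otherwise local" behavior can be pictured with a minimal Kotlin sketch. The ModelSource enum and chooseModelSource function are hypothetical and not part of the ML Kit SDK; the sample apps' actual logic lives in ImageClassifier.kt and ImageClassifier.swift and may differ.

```kotlin
// Hypothetical types for illustration only; they are not part of the ML Kit SDK.
enum class ModelSource { REMOTE, LOCAL }

// Prefers the remote model once it has been downloaded, otherwise falls back
// to the model bundled with the app.
fun chooseModelSource(remoteModelDownloaded: Boolean): ModelSource =
    if (remoteModelDownloaded) ModelSource.REMOTE else ModelSource.LOCAL

fun main() {
    println(chooseModelSource(remoteModelDownloaded = false)) // prints LOCAL
    println(chooseModelSource(remoteModelDownloaded = true))  // prints REMOTE
}
```

A fallback like this keeps the app usable on first launch or offline, before any remote model has been downloaded.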

Troubleshooting

If the sample app is still using the local model, verify that the remote model name is set correctly in the code.

Android app

- Go to ImageClassifier.kt and find this block.

    val remoteModel = FirebaseRemoteModel.Builder(REMOTE_MODEL_NAME).build()

- Verify that the model name set in the code matches the name of the model you published earlier in the Firebase console.

- Now click Run in the Android Studio toolbar to rerun the app.

iOS app

- Go to ImageClassifier.swift and find this block.

    return RemoteModel(
      name: Constant.remoteAutoMLModelName,
      allowsModelUpdates: true,
      initialConditions: initialConditions,
      updateConditions: updateConditions
    )

- Verify that the model name set in the code matches the name of the model you published earlier in the Firebase console.

- Now click Run in the Xcode toolbar to rerun the app.

7. Congratulations!

You have gone through an end-to-end journey of training an image classification model with your own training data using AutoML, and then used the model in a mobile app with ML Kit.

See our documentation to learn how to integrate AutoML Vision Edge in ML Kit into your own app.

You can also try our ML Kit sample apps to see other features of Firebase ML Kit.

Android samples

iOS samples