1. Before you begin

This codelab builds on the end result of the previous codelab in this series, which detected comment spam using TensorFlow.js.

In the last codelab you created a fully functioning web page for a fictional video blog. You were able to filter comments for spam before they were sent to the server for storage, or to other connected clients, using a pre-trained comment spam detection model powered by TensorFlow.js in the browser.

The end result of that codelab is shown below:

While this worked very well, there are edge cases it was unable to detect. You can retrain the model to account for the situations it was unable to handle.

This codelab focuses on natural language processing (the art of understanding human language with a computer) and shows you how to modify the existing web app you created (it is highly recommended that you take the codelabs in order) to tackle the very real problem of comment spam, which many web developers will surely encounter as they work on one of the ever-growing number of popular web apps that exist today.

In this codelab you will go one step further by retraining your ML model to account for changes in spam message content that may evolve over time, based on current trends or popular topics of discussion, allowing you to keep the model up to date.

Prerequisites

- Completed the first codelab in this series.

- Basic knowledge of web technologies, including HTML, CSS, and JavaScript.

What you'll build

You will reuse the previously built website for a fictional video blog with a real-time comment section, and upgrade it to load a custom-trained version of the spam detection model using TensorFlow.js, so that it performs better on the edge cases it previously failed on. Of course, as web developers and engineers, you could change this hypothetical UX for reuse on any website you may be working on in your day-to-day roles and adapt the solution to fit any client use case: maybe it is a blog, a forum, or some form of CMS, such as Drupal.

Let's get hacking...

What you'll learn

You will:

- Identify edge cases the pre-trained model was failing on.

- Retrain the spam classification model that was created using Model Maker.

- Export this Python-based model to the TensorFlow.js format for use in browsers.

- Update the hosted model and its dictionary with the newly trained one and check the results.

Familiarity with HTML5, CSS, and JavaScript is assumed for this codelab. You will also run some Python code in a Colab notebook to retrain the model that was created using Model Maker, but no familiarity with Python is required to do this.

2. Get set up to code

Once again you will use Glitch.com to host and modify the web application. If you have not already completed the prerequisite codelab, you can clone the end result here as your starting point. If you have questions about how the code works, it is strongly encouraged that you complete the previous codelab, which walks through how to make this working web app, before continuing.

On Glitch, simply click the "remix" button to fork it and make a new set of files you can edit.

3. Discover edge cases in the prior solution

If you open the completed website you just cloned and try typing some comments, you will notice that most of the time it works as intended, blocking comments that sound like spam and allowing through legitimate responses.

However, if you get crafty and try phrasing things to break the model, you will probably succeed at some point. With a bit of trial and error you can manually create examples like the ones shown below. Try pasting these into the existing web app, check the console, and see the probability that comes back for whether the comment is spam:

Legitimate comments posted without issue (true negatives):

- "Wow, I love that video, amazing work." Probability spam: 47.91854%

- "Totally loved these demos! Got any more details?" Probability spam: 47.15898%

- "What website can I go to learn more?" Probability spam: 15.32495%

This is great: the probabilities for all of the above are fairly low, so they pass the default SPAM_THRESHOLD, which requires a minimum probability of 75% before action is taken (defined in the script.js code from the previous codelab).

Now let's try writing some edgier comments that get marked as spam even though they are not...

Legitimate comments marked as spam (false positives):

- "Can someone link the website for the mask he is wearing?" Probability spam: 98.46466%

- "Can I buy this song on Spotify? Someone please let me know!" Probability spam: 94.40953%

- "Can someone contact me with details on how to download TensorFlow.js?" Probability spam: 83.20084%

Oh no! It seems these legitimate comments are being marked as spam when they should be allowed. How can you fix that?

One simple option is to increase SPAM_THRESHOLD so that the model must be over 98.5% confident. In that case these misclassified comments would be posted. With that in mind, let's continue with the other possible outcomes below...

Spam comments marked as spam (true positives):

- "This is cool but check out the download links on my website that are better!" Probability spam: 99.77873%

- "I know some people who can get you some medicines, just see my profile for details." Probability spam: 98.46955%

- "See my profile to download even more amazing videos that are even better! http://example.com" Probability spam: 96.26383%

OK, so this works as expected with the original 75% threshold. But given that in the previous step you changed SPAM_THRESHOLD to require over 98.5% confidence, 2 of the examples here would be let through, so maybe the threshold is too high. Maybe 96% is better? But if you do that, then one of the comments in the previous section (false positives) would be marked as spam even though it was legitimate, as it scored 98.46466%.

In this case it is probably best to capture all of these real spam comments and simply retrain for the failures above. With the threshold set to 96%, all of the true positives are still captured and you eliminate 2 of the false positives above. Not too bad for just changing one number.

Let's continue...

Spam comments that were allowed to be posted (false negatives):

- "See my profile to download even more amazing videos that are even better!" Probability spam: 7.54926%

- "Get a discount on our gym training classes, see pr0file!" Probability spam: 17.49849%

- "OMG, GOOG stock just shot right up! Hurry before it's too late!" Probability spam: 20.42894%

For these comments there is nothing you can do by simply changing the SPAM_THRESHOLD value further. Lowering the threshold from 96% far enough to catch all three (below about 7.5%) would cause genuine comments to be marked as spam: the legitimate comments listed earlier score as high as about 48%. The only way to deal with comments like these is to retrain the model with such edge cases included in the training data, so that it learns to adjust its view of what is spam and what is not.

While the only option left right now is to retrain the model, you also saw how you can refine the threshold at which you decide to call something spam in order to improve performance. To a human, 75% seems quite confident, but for this model you needed to raise it closer to 96% to be more effective with the example inputs.

There is no single magic value that works well across different models. The threshold needs to be set on a per-model basis, after experimenting with real-world data to see what works well.

In some situations a false positive (or false negative) may have serious consequences (for example, in the medical industry), so you may set a very high threshold and request more manual reviews for those that do not meet it. This is your choice as a developer and requires some experimentation.
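The threshold trade-off described in this section can be checked with a few lines of Python. This is only a sketch: the scores are the twelve probabilities listed above, and it assumes a comment is blocked when its score is strictly greater than the threshold, which may differ from the exact comparison in your script.js.

```python
# Spam probabilities (in percent) copied from the examples in this section.
LEGIT_SCORES = [47.91854, 47.15898, 15.32495, 98.46466, 94.40953, 83.20084]
SPAM_SCORES = [99.77873, 98.46955, 96.26383, 7.54926, 17.49849, 20.42894]


def confusion_counts(threshold):
    """Count outcomes, assuming a comment is blocked if its score exceeds threshold."""
    false_pos = sum(score > threshold for score in LEGIT_SCORES)
    true_pos = sum(score > threshold for score in SPAM_SCORES)
    return {
        "true_positive": true_pos,
        "false_positive": false_pos,
        "true_negative": len(LEGIT_SCORES) - false_pos,
        "false_negative": len(SPAM_SCORES) - true_pos,
    }


# Compare the three thresholds discussed in the text.
for threshold in (75.0, 96.0, 98.5):
    print(threshold, confusion_counts(threshold))
```

Whatever threshold you pick, the three false negatives stay false negatives until the threshold drops below their scores, which is why retraining is needed.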

4. Retrain the comment spam detection model

In the previous section you identified a number of edge cases the model failed on, where the only option was to retrain the model to account for these situations. In a production system, you could find these over time as people manually flag a comment as spam that was let through, or as moderators reviewing flagged comments realize some are not actually spam and mark them for retraining. Assuming you have gathered a batch of new data for these edge cases (for best results, you should have some variations of these new sentences if you can), we will now show you how to retrain the model with those edge cases in mind.

Pre-made model recap

The pre-made model you used was created by a third party via Model Maker, and it uses an "average word embedding" model to function.

As the model was built with Model Maker, you will need to switch briefly to Python to retrain it, and then export the resulting model to the TensorFlow.js format so you can use it in the browser. Thankfully Model Maker makes its models very easy to use, so this should be fairly easy to follow, and we will guide you through the process, so don't worry if you have never used Python before!

Colabs

As you are not too concerned in this codelab with setting up a Linux server with all the various Python utilities installed, you can simply execute code via the web browser using a "Colab notebook". These notebooks can connect to a "backend", which is simply a server with some software preinstalled, from which you can then execute arbitrary code within the web browser and see the results. This is very useful for quick prototyping or for use in tutorials like this.

Simply head over to colab.research.google.com and you will be presented with a welcome screen as shown:

Now click the New notebook button at the bottom right of the pop-up window and you should see a blank colab like this:

Great! The next step is to connect the frontend colab to a backend server so you can execute the Python code you will write. Do that by clicking Connect at the top right and selecting Connect to hosted runtime.

Once connected, you should see RAM and disk icons appear in its place, like this:

Good job! You can now start coding in Python to retrain the Model Maker model. Simply follow the steps below.

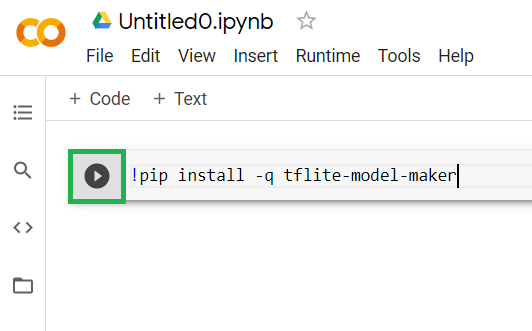

Step 1

In the first cell, which is currently empty, copy the code below. It will install TensorFlow Lite Model Maker for you using Python's package manager called "pip" (it is similar to npm, which most readers of this codelab may be more familiar with from the JS ecosystem):

!apt-get install libasound-dev portaudio19-dev libportaudio2 libportaudiocpp0

!pip install -q tflite-model-maker

However, pasting code into the cell does not execute it. Next, hover your mouse over the gray cell where you pasted the code above, and a small "play" icon will appear on the left of the cell as shown below:

Press the play button to execute the code you just typed in the cell.

You will now see Model Maker being installed:

Once execution of this cell has finished, as shown, move on to the next step below.

Step 2

Next, add a new code cell as shown so you can paste in some more code after the first cell and execute it separately:

The next cell executed will have a number of imports that the code in the rest of the notebook will need to use. Copy and paste the below into the new cell you created:

import numpy as np
import os

from tflite_model_maker import configs
from tflite_model_maker import ExportFormat
from tflite_model_maker import model_spec
from tflite_model_maker import text_classifier
from tflite_model_maker.text_classifier import DataLoader

import tensorflow as tf
assert tf.__version__.startswith('2')
tf.get_logger().setLevel('ERROR')

Pretty standard stuff, even if you are not familiar with Python. You are just importing some utilities and the Model Maker functions needed for the spam classifier. This will also check that you are running TensorFlow 2.x, which is a requirement for using Model Maker.

Finally, just as before, execute the cell by pressing the "play" icon when you hover over the cell, and then add a new code cell for the next step.

Step 3

Next you will download the data from a remote server to the Colab runtime, and set the data_file variable to the path of the resulting local file:

data_file = tf.keras.utils.get_file(fname='comment-spam-extras.csv', origin='https://storage.googleapis.com/jmstore/TensorFlowJS/EdX/code/6.5/jm_blog_comments_extras.csv', extract=False)

Model Maker can train models from simple CSV files like the one downloaded. You just need to specify which column holds the text and which holds the labels. You will see how to do that in Step 5. Feel free to download the CSV file directly yourself to see what it contains.

The keen-eyed among you will notice that the name of this file is jm_blog_comments_extras.csv. This file is simply the original training data used to generate the first comment spam model, combined with the new edge case data you discovered, so it is all in one file. You need the original training data as well as the new sentences you want the model to learn from.

Optional: if you download this CSV file and check the last few lines, you will see examples of the edge cases that were not working correctly before. They have simply been appended to the end of the existing training data that the pre-made model used to train itself.
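If you would rather inspect the tail of the file from Python than in a text editor, a minimal sketch could look like the following. The sample rows are made up for illustration; only the column names (commenttext and spam, as used in Step 5) and the TRUE/FALSE labels come from this codelab.

```python
import csv
import io

# Hypothetical stand-in for the downloaded file: same two columns, made-up rows.
SAMPLE_CSV = """commenttext,spam
Great video thanks for sharing,FALSE
Check out my profile for free downloads,TRUE
Can I buy this song on Spotify,FALSE
"""


def last_rows(csv_file, count):
    """Return the last `count` data rows of an open CSV file as dictionaries."""
    rows = list(csv.DictReader(csv_file))
    return rows[-count:]


for row in last_rows(io.StringIO(SAMPLE_CSV), 2):
    print(row["spam"], row["commenttext"])
```

In the notebook you could pass the real file instead, for example `last_rows(open(data_file, newline=""), 5)`.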

Execute this cell, then once it has finished executing, add a new cell and head to Step 4.

Step 4

When using Model Maker, you do not build models from scratch. You generally use existing models that you then customize to your needs.

Model Maker provides several pre-learned model embeddings you can use, but the simplest and quickest to begin with is average_word_vec, which is what you used in the earlier codelab to build your website. Here's the code:

spec = model_spec.get('average_word_vec')
spec.num_words = 2000
spec.seq_len = 20
spec.wordvec_dim = 7

Go ahead and run that once you have pasted it into the new cell.

Understanding the num_words parameter

This is the number of words you want the model to use. You might think the more the better, but there is generally a sweet spot based on the frequency with which each word is used. If you use every word in the entire corpus, you could end up with the model trying to learn and balance the weights of words that are only used once, which is not very useful. In any text corpus you will find that many words are only used once or twice, and it is generally not worth using them in your model as they have a negligible impact on the overall sentiment. So you can tune your model to the number of words you want using the num_words parameter. A smaller number here gives a smaller and quicker model, but it could be less accurate as it recognizes fewer words. A larger number here gives a larger and potentially slower model. Finding the sweet spot is key, and it is up to you as a machine learning engineer to figure out what works best for your use case.
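To make the idea of keeping only the most frequent words concrete, here is a small sketch. It is not Model Maker's actual tokenizer; the whitespace splitting, the lowercasing, and the function name build_vocabulary are assumptions made for illustration.

```python
from collections import Counter


def build_vocabulary(sentences, num_words):
    """Keep only the `num_words` most frequent lowercase words."""
    counts = Counter(
        word for sentence in sentences for word in sentence.lower().split()
    )
    return [word for word, _ in counts.most_common(num_words)]


corpus = [
    "check my profile",
    "check my website",
    "love my cat",
]

# With num_words=2, words that appear only once are dropped.
print(build_vocabulary(corpus, 2))
```

Words outside the kept vocabulary would then be treated as unknown by the model, which is the accuracy cost of choosing a small num_words.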

Understanding the wordvec_dim parameter

The wordvec_dim parameter is the number of dimensions you want to use for the vector for each word. These dimensions are essentially the different characteristics (created by the machine learning algorithm when training) by which any given word can be measured, and which the program will use to try to best associate words that are similar in some meaningful way.

For example, if you had a dimension for how "medical" a word is, a word like "pills" may score high in this dimension and be associated with other high-scoring words like "x-ray", but "cat" would score low in this dimension. It may turn out that a "medical dimension" is useful for determining spam when combined with other potential dimensions that prove significant.

In the case of words that score highly in the "medical dimension", a second dimension that correlates words to the human body may be useful. Words like "leg", "arm", and "neck" may score high here and also fairly high in the medical dimension.

The model can use these dimensions to then detect words that are more likely to be associated with spam. Maybe spam messages are more likely to contain words that are both medical and human body part words.

The rule of thumb determined from research is that the fourth root of the number of words works well for this parameter. So if you are using 2000 words, a good starting point is 7 dimensions (the fourth root of 2000 is roughly 6.7). If you change the number of words you use, you can also change this.
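The fourth-root rule of thumb is easy to compute for other vocabulary sizes. The helper name below is hypothetical, and rounding to the nearest whole number is an assumption about how to turn the root into a dimension count.

```python
def suggested_wordvec_dim(num_words):
    """Round the fourth root of the vocabulary size to the nearest integer."""
    return round(num_words ** 0.25)


print(suggested_wordvec_dim(2000))
```

Treat the result as a starting point for experimentation rather than a fixed requirement.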

Understanding the seq_len parameter

Models are generally very rigid when it comes to input values. For a language model, this means that the model can classify sentences of a particular, static length. That is determined by the seq_len parameter, which stands for "sequence length". When you convert words into numbers (or tokens), a sentence becomes a sequence of these tokens. So your model will be trained (in this case) to classify and recognize sentences that have 20 tokens. If the sentence is longer than this, it will be truncated. If it is shorter, it will be padded, just like in the first codelab in this series.
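Truncating and padding to a fixed length can be sketched as follows. The padding id of 0 and padding on the right are assumptions for illustration; check the vocab file and your existing tokenization code for the values your model actually expects.

```python
SEQ_LEN = 20
PAD_ID = 0  # Assumption: 0 is the id of the padding token.


def fit_to_length(token_ids, seq_len=SEQ_LEN, pad_id=PAD_ID):
    """Truncate or right-pad a list of token ids to exactly seq_len items."""
    trimmed = token_ids[:seq_len]
    return trimmed + [pad_id] * (seq_len - len(trimmed))


short_sentence = [5, 6, 7]
long_sentence = list(range(1, 31))  # 30 made-up token ids

print(fit_to_length(short_sentence))
print(fit_to_length(long_sentence))
```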

Step 5 - Load the training data

Earlier you downloaded the CSV file. Now it is time to use a data loader to turn this into training data that the model can recognize.

data = DataLoader.from_csv(
    filename=data_file,
    text_column='commenttext',
    label_column='spam',
    model_spec=spec,
    delimiter=',',
    shuffle=True,
    is_training=True)

train_data, test_data = data.split(0.9)

If you open the CSV file in an editor, you will see that each line has just two values, and these are described with text in the first line of the file. Typically each entry is then deemed to be a "column". You will see that the descriptor for the first column is commenttext, and that the first entry on each line is the text of the comment.

Similarly, the descriptor for the second column is spam, and you will see that the second entry on each line is TRUE or FALSE to denote whether that text is considered comment spam or not. The other properties set the model spec that you created in Step 4, along with a delimiter character, which in this case is a comma as the file is comma separated. You also set the shuffle parameter to randomly rearrange the training data, so that items that may have been similar or collected together are spread out randomly through the data set.

You then use data.split() to split the data into training and test data. The 0.9 indicates that 90% of the dataset will be used for training, and the rest for testing.
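The effect of shuffling and then splitting 90/10 can be illustrated with plain Python lists. This is a sketch of the idea only, not how Model Maker implements data.split(); the function name and the fixed seed are assumptions.

```python
import random


def shuffle_and_split(examples, fraction, seed=42):
    """Shuffle a copy of the examples, then cut it into two parts."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * fraction)
    return shuffled[:cut], shuffled[cut:]


# 100 made-up example ids stand in for the rows of the CSV file.
train, test = shuffle_and_split(range(100), 0.9)
print(len(train), len(test))
```

Holding back the test portion lets you measure accuracy on comments the model never saw during training.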

Step 6 - Build the model

Add another cell where you will add the code to build the model:

model = text_classifier.create(train_data, model_spec=spec, epochs=50)

This creates a text classifier model with Model Maker. You specify the training data you want to use (defined in Step 5), the model specification (set up in Step 4), and the number of epochs, in this case 50.

The basic principle of machine learning is that it is a form of pattern matching. Initially, it loads the pre-trained weights for the words and attempts to group them together with a "prediction" of which ones, when grouped together, indicate spam and which do not. The first time around it is likely to be close to 50:50, as the model is only getting started, as shown below:

It then measures the results of this, changes the weights of the model to tweak its prediction, and tries again. This is an epoch. So, by specifying epochs=50, it will go through that "loop" 50 times, as shown:

So by the time you reach the 50th epoch, the model will report a much higher level of accuracy. In this case it shows 99.1%!
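To see what "measure, adjust the weights, try again" means in miniature, here is a toy training loop in plain Python. It trains a one-input logistic model on four made-up points and is not what Model Maker runs internally; the data, learning rate, and function names are all assumptions for illustration.

```python
import math

# Toy 1-D data set: negative inputs are class 0, positive inputs are class 1.
INPUTS = [-2.0, -1.0, 1.0, 2.0]
LABELS = [0, 0, 1, 1]


def predict(weight, bias, x):
    """Logistic model: probability that x belongs to class 1."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))


def train(epochs, learning_rate=0.1):
    """Run full-batch gradient descent and return the loss seen in each epoch."""
    weight, bias, losses = 0.0, 0.0, []
    count = len(INPUTS)
    for _ in range(epochs):
        probs = [predict(weight, bias, x) for x in INPUTS]
        # Measure how wrong the current predictions are (cross-entropy loss).
        loss = -sum(
            y * math.log(p) + (1 - y) * math.log(1 - p)
            for p, y in zip(probs, LABELS)
        ) / count
        losses.append(loss)
        # Nudge the weights in the direction that reduces the loss.
        grad_w = sum((p - y) * x for p, y, x in zip(probs, LABELS, INPUTS)) / count
        grad_b = sum(p - y for p, y in zip(probs, LABELS)) / count
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias, losses


weight, bias, losses = train(epochs=50)
print(f"first epoch loss: {losses[0]:.3f}, last epoch loss: {losses[-1]:.3f}")
```

With zero initial weights every prediction starts at 0.5, which mirrors the "close to 50:50" behavior described above, and the loss shrinks as the epochs go by.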

Step 7 - Export the model

Once training is done, you can export the model. TensorFlow trains a model in its own format, which needs to be converted to the TensorFlow.js format for use on a web page. Simply paste the following in a new cell and execute it:

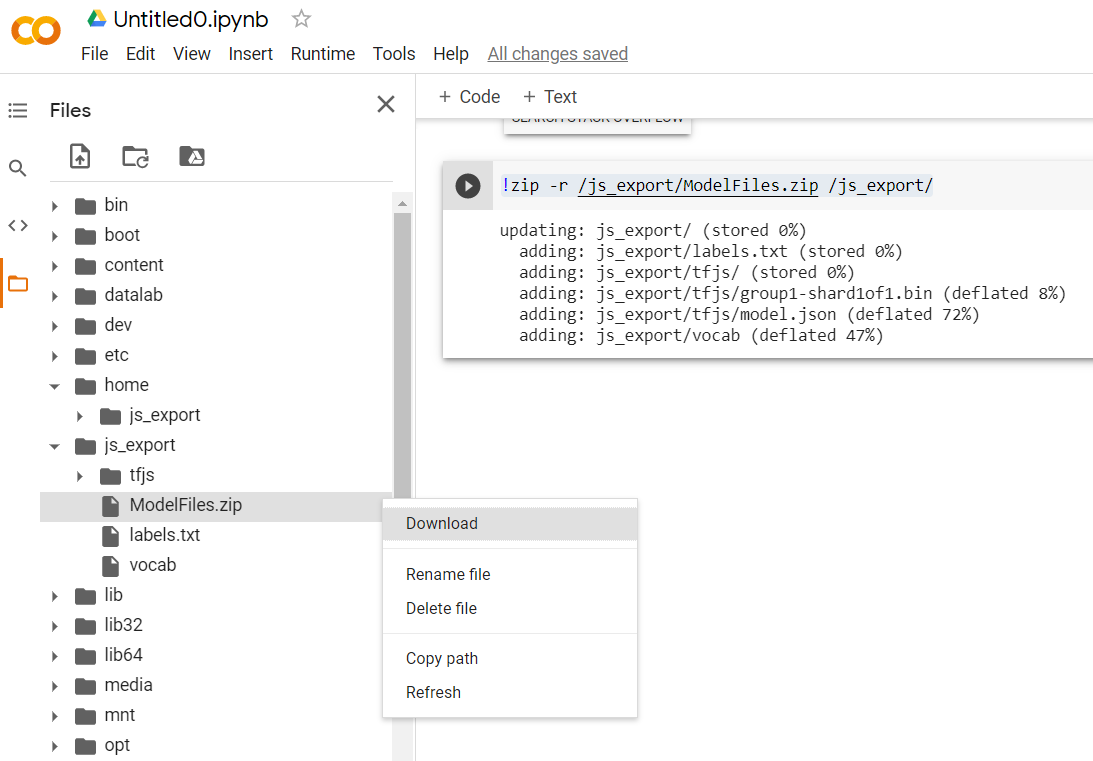

model.export(export_dir="/js_export/", export_format=[ExportFormat.TFJS, ExportFormat.LABEL, ExportFormat.VOCAB])

!zip -r /js_export/ModelFiles.zip /js_export/

After executing this code, if you click the small folder icon on the left of Colab, you can navigate to the folder you exported to above (in the root directory; you may need to go up a level) and find the zip bundle of the exported files, ModelFiles.zip.

Download this zip file to your computer now, as you will use those files just like in the first codelab:

Great! The Python part is over; you can now return to the JavaScript land you know and love. Phew!

5. Serving the new machine learning model

You are now almost ready to load the model. Before you can do that, you must upload the new model files you downloaded earlier in this codelab so that they are hosted and usable within your code.

First, if you have not done so already, unzip the model files you just downloaded from the Model Maker Colab notebook you just ran. You should see the following files contained within its various folders:

What do you have here?

- model.json: this is one of the files that make up the trained TensorFlow.js model. You will reference this specific file in the JS code.

- group1-shard1of1.bin: this is a binary file containing much of the saved data for the exported TensorFlow.js model. It will need to be hosted somewhere on your server for download, in the same directory as model.json above.

- vocab: this strange file with no extension comes from Model Maker and shows how to encode words in the sentences so the model understands how to use them. You will dive more into this in the next section.

- labels.txt: this simply contains the resulting class names that the model will predict. For this model, if you open this file in your text editor, it simply lists "false" and "true", indicating "not spam" or "spam" as its prediction output.
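The reason the .bin file must sit next to model.json is that model.json names its weight files by relative path. A hedged way to check which shard files your export expects is to read that list from the JSON. The sample content below is a trimmed, hypothetical stand-in for a real model.json, and shard_paths is an illustrative helper, not part of any library.

```python
import json

# Trimmed, hypothetical model.json content; a real file has many more fields.
SAMPLE_MODEL_JSON = """
{
  "format": "layers-model",
  "weightsManifest": [
    {"paths": ["group1-shard1of1.bin"], "weights": []}
  ]
}
"""


def shard_paths(model_json_text):
    """List the weight shard files that a TensorFlow.js model.json refers to."""
    manifest = json.loads(model_json_text).get("weightsManifest", [])
    return [path for group in manifest for path in group["paths"]]


print(shard_paths(SAMPLE_MODEL_JSON))
```

If you rename a shard file on the server, the name listed in model.json has to match it, otherwise the browser cannot find the weights.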

Host the TensorFlow.js model files

First, place the model.json and *.bin files that were generated on a web server so you can access them from your web page.

Delete the existing model files

As you are building upon the end result of the first codelab in this series, you must first delete the existing model files that were uploaded. If you are using Glitch.com, simply check the files panel on the left for model.json and group1-shard1of1.bin, click the three-dot menu dropdown for each file, and select Delete, as shown:

Uploading the new files to Glitch

Great! Now upload the new ones:

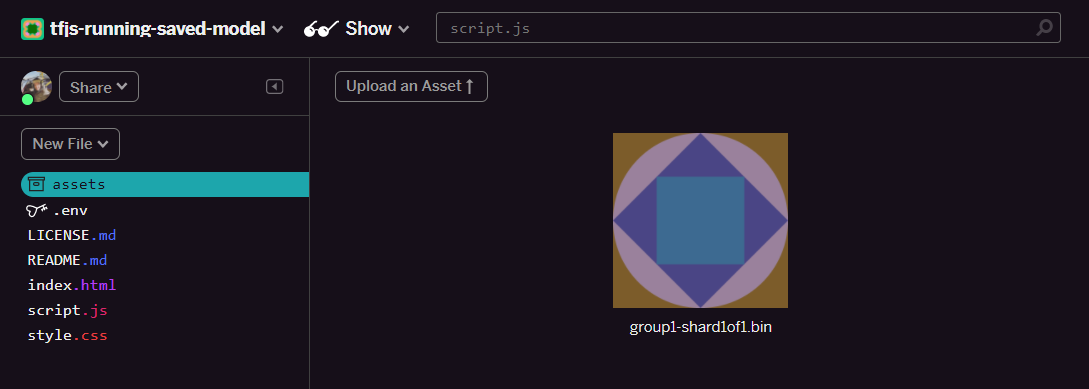

- Open the assets folder in the left-hand panel of your Glitch project, and delete any old assets that were uploaded if they have the same names.

- Click Upload an asset and select group1-shard1of1.bin to upload into this folder. Once uploaded, it should look like this:

- Great! Now do the same for the model.json file too, so that 2 files are in your assets folder, like this:

- If you click on the group1-shard1of1.bin file you just uploaded, you will be able to copy the URL to its location. Copy this path now, as shown:

- Now, at the bottom left of the screen, click Tools > Terminal. Wait for the terminal window to load.

- Once loaded, type the following and then press Enter to change directory to the www folder:

Terminal:

cd www

- Next, use wget to download the 2 files you just uploaded, replacing the URLs below with the URLs you generated for the files in the assets folder on Glitch (check the assets folder for each file's custom URL).

Note the space between the two URLs, and that the URLs you will need to use will be different from the ones shown but will look similar:

Terminal:

wget https://cdn.glitch.com/1cb82939-a5dd-42a2-9db9-0c42cab7e407%2Fmodel.json?v=1616111344958 https://cdn.glitch.com/1cb82939-a5dd-42a2-9db9-0c42cab7e407%2Fgroup1-shard1of1.bin?v=1616017964562

Super! You have now made a copy of the uploaded files in the www folder.

However, right now they will have been downloaded with strange names. If you type ls in the terminal and press Enter, you will see something like this:

- Using the mv command, rename the files. Type the following into the console and press Enter after each line:

Terminal:

mv *group1-shard1of1.bin* group1-shard1of1.bin

mv *model.json* model.json

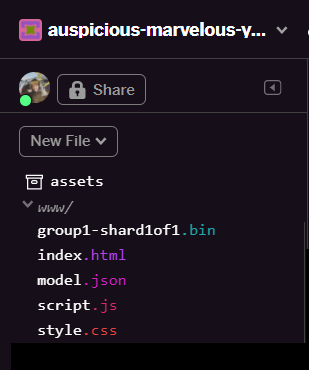

- Finally, refresh the Glitch project by typing refresh into the terminal and pressing Enter:

Terminal:

refresh

Once refreshed, you should now see model.json and group1-shard1of1.bin in the www folder of the user interface:

Great! The last step is to update the dictionary.js file.

- Convert your newly downloaded vocab file to the correct JS format, either manually in your text editor or using this tool, and save the resulting output as dictionary.js within your www folder. If you already have a dictionary.js file, you can simply copy and paste the new contents over it and save the file.
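If you prefer to script the conversion, a rough Python sketch follows. It rests on two assumptions you should verify against your own files: that each line of vocab has the form "word index", and that your existing dictionary.js exports a single lookup object (the name LOOKUP used here is a guess, so match whatever your current file uses).

```python
import json


def vocab_to_dictionary_js(vocab_lines, export_name="LOOKUP"):
    """Turn 'word index' lines into the text of a JS module exporting a lookup object."""
    lookup = {}
    for line in vocab_lines:
        if not line.strip():
            continue  # Skip blank lines.
        # Split on the last run of whitespace: the word first, its index second.
        word, index = line.rsplit(maxsplit=1)
        lookup[word] = int(index)
    return f"export const {export_name} = {json.dumps(lookup, indent=2)};\n"


# Made-up sample lines in the assumed format.
sample_vocab = ["<PAD> 0", "<START> 1", "<UNKNOWN> 2", "i 3", "check 4"]
print(vocab_to_dictionary_js(sample_vocab))
```

To use it on the real file you could pass `open("vocab", encoding="utf-8")` as vocab_lines and write the returned text to dictionary.js.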

Woohoo! You have successfully updated all the changed files, and if you now try the website you will notice how the retrained model is able to account for the edge cases that were discovered and learned from, as shown:

As you can see, the first 6 inputs are now correctly classified as not spam, and the second batch of 6 are all identified as spam. Perfect!

Let's try some variations too, to see if it generalizes well. Originally there was a failing sentence such as:

"OMG, GOOG stock just shot right up! Hurry before it's too late!"

This is now correctly classified as spam, but what happens if you change it to:

"So, XYZ stock just increased in value! Buy some right now before it's too late!"

Here you get a prediction of 98% likely to be spam, which is correct, even though you changed the stock symbol and the wording slightly.

Of course, if you really try to break this new model, you will be able to, and it will come down to gathering even more training data to have the best chance of capturing more unique variations for the common situations you are likely to encounter online. In a future codelab we will show you how to continuously improve your model with live data as it is flagged.

6. Congratulations!

Congratulations, you have managed to retrain an existing machine learning model so that it works for the edge cases you found, and deployed those changes to the browser with TensorFlow.js for a real-world application.

Recap

In this codelab you:

- Discovered edge cases that were not working when using the pre-made comment spam model.

- Retrained the Model Maker model to take into account the edge cases you discovered.

- Exported the newly trained model to the TensorFlow.js format.

- Updated your web app to use the new files.

What's next?

So this update works great, but as with any web app, changes will happen over time. It would be much better if the app continuously improved itself over time instead of you having to do this manually each time. Can you think of how you could automate these steps to retrain the model automatically after you have, for example, 100 new comments marked as incorrectly classified? Put on your regular web engineering hat and you can probably figure out how to create a pipeline that does this automatically. If not, no worries: look out for the next codelab in the series, which will show you how.
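As a starting point for thinking about such a pipeline, the trigger logic alone might be sketched like this. Everything here is hypothetical: the class name, the in-memory list, and what you do with a full batch (for example, appending it to the CSV file and rerunning the notebook steps) are left open.

```python
class RetrainQueue:
    """Collect corrected comments and signal when a retraining batch is ready."""

    def __init__(self, batch_size=100):
        self.batch_size = batch_size
        self.pending = []

    def add(self, comment_text, is_spam):
        """Store one corrected example; return a full batch when ready, else None."""
        self.pending.append((comment_text, is_spam))
        if len(self.pending) < self.batch_size:
            return None
        # Hand over the full batch and start collecting a new one.
        batch, self.pending = self.pending, []
        return batch


# Small batch size so the example is easy to follow.
queue = RetrainQueue(batch_size=3)
for text in ["comment a", "comment b", "comment c"]:
    batch = queue.add(text, is_spam=True)

print(len(batch), len(queue.pending))
```

A real system would also need persistent storage and some review of the flagged comments before they are used as training data.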

Share what you make with us

You can easily extend what you made today for other creative use cases too, and we encourage you to think outside the box and keep hacking.

Remember to tag us on social media using the #MadeWithTFJS hashtag for a chance for your project to be featured on the TensorFlow blog or even at future events. We would love to see what you make.

More TensorFlow.js codelabs to go deeper

- Use Firebase hosting to deploy and host a TensorFlow.js model at scale.

- Make a smart webcam using a pre-made object detection model with TensorFlow.js.

Websites to check out

- TensorFlow.js official website

- TensorFlow.js pre-made models

- TensorFlow.js API

- TensorFlow.js Show & Tell: get inspired and see what others have made.