1. Overview

Introduction

Gemini 2.5 Pro is Google's strongest model for coding and world knowledge.

With the 2.5 series, Gemini models are now hybrid reasoning models. Gemini 2.5 Pro can apply extended thinking across tasks and use tools to maximize response accuracy.

Gemini 2.5 Pro is:

- A significant improvement over previous models across capabilities including coding, reasoning, and multimodality.

- Industry-leading in reasoning, with state-of-the-art performance on Math & STEM benchmarks.

- An excellent model for code, with particularly strong web development capabilities.

- Particularly good at complex prompts while remaining well-rounded, ranking #1 on the LMSys leaderboard.

What you'll learn

In this tutorial, you will learn how to use the Gemini API and the Google Gen AI SDK for Python with the Gemini 2.5 Pro model.

You will complete the following tasks:

- Generate text from text prompts

- Generate streaming text

- Start multi-turn chats

- Use asynchronous methods

- Configure model parameters

- Set system instructions

- Use safety filters

- Use controlled generation

- Count tokens

- Process multimodal (audio, code, documents, images, video) data

- Use automatic and manual function calling

- Use code execution

- Explore thinking mode examples

2. Before you begin

Prerequisites

Before you start, you need a Google Cloud project with billing enabled. Select the Google Cloud project that you want to use.

To run the codelab, we will use Colab Enterprise, a collaborative, managed notebook environment with the security and compliance capabilities of Google Cloud.

Enable the required APIs

Enable the APIs that this codelab needs in your Google Cloud project: Vertex AI, Dataform, and Compute Engine.

Copy the Colab notebook into Google Cloud

Open the tutorial notebook in Colab Enterprise. This creates a copy of the notebook in your current Google Cloud project, which you can then run.

Let's get started!

3. Initialise the Environment

Now that the Colab notebook is created, you can execute the code it provides. The first few steps install the dependencies and import the necessary libraries.

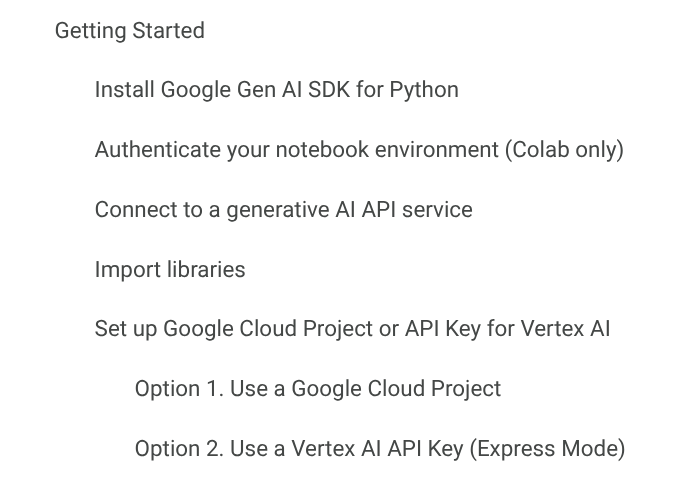

Run the steps in Getting started

First, run the cells in the Getting Started section in order.

Note: To run a cell, hold the mouse pointer over the code cell that you want to run, and then click the Run cell icon.

By the end of this section, you will have done the following:

- Installed the Google Gen AI SDK for Python

- Imported the necessary libraries for the lab

- Set up a Google Cloud project to use Vertex AI
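Under the hood, the project setup comes down to telling the SDK which project and region to use. The sketch below is one way to do that, assuming you configure the client through the environment variables the Google Gen AI SDK reads (`GOOGLE_GENAI_USE_VERTEXAI`, `GOOGLE_CLOUD_PROJECT`, `GOOGLE_CLOUD_LOCATION`). The helper `configure_vertex_ai`, the placeholder project ID and the `us-central1` default are illustrative assumptions, not the notebook's own code.

```python
import os


def configure_vertex_ai(project_id: str, location: str = "us-central1") -> dict:
    """Hypothetical helper: set the environment variables that the Google Gen AI
    SDK checks when a client is created without explicit arguments."""
    if not project_id:
        raise ValueError("project_id must be a non-empty Google Cloud project ID")
    settings = {
        "GOOGLE_GENAI_USE_VERTEXAI": "True",  # send requests to Vertex AI
        "GOOGLE_CLOUD_PROJECT": project_id,   # project that owns and pays for the calls
        "GOOGLE_CLOUD_LOCATION": location,    # region of the Vertex AI endpoint
    }
    os.environ.update(settings)
    return settings


# Placeholder project ID: replace it with your own.
settings = configure_vertex_ai("your-project-id")
```

With these variables set, `genai.Client()` (after `from google import genai`) should target Vertex AI in that project; passing `vertexai=True, project=..., location=...` to `genai.Client` is the equivalent explicit form.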

Now let's use Gemini 2.5 Pro to generate text.

4. Generate text with Gemini

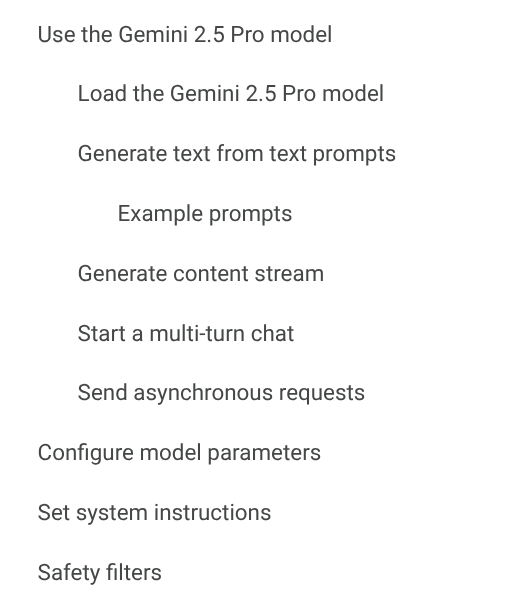

In this section of the Notebook, you will use Gemini 2.5 Pro to generate text completions.

Go ahead and execute the next set of cells in the notebook, taking the time to read through the code to understand how to use the Google Gen AI SDK.
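As a rough picture of what these cells do, here is a minimal sketch of non-streaming, streaming and multi-turn calls. It assumes `client` is a `genai.Client` created as in the Getting Started section; the helper names `generate_text`, `stream_text` and `chat` are hypothetical, and the notebook's cells may be structured differently.

```python
MODEL_ID = "gemini-2.5-pro"  # model used throughout this sketch


def generate_text(client, prompt: str) -> str:
    """Non-streaming: wait for the complete response, then return its text."""
    response = client.models.generate_content(model=MODEL_ID, contents=prompt)
    return response.text


def stream_text(client, prompt: str) -> str:
    """Streaming: print each chunk as it arrives and return the joined text."""
    pieces = []
    for chunk in client.models.generate_content_stream(model=MODEL_ID, contents=prompt):
        if chunk.text:  # a chunk can carry no text, so skip empty ones
            print(chunk.text, end="", flush=True)
            pieces.append(chunk.text)
    print()
    return "".join(pieces)


def chat(client, messages: list[str]) -> list[str]:
    """Multi-turn chat: the chat session keeps the history between turns."""
    session = client.chats.create(model=MODEL_ID)
    return [session.send_message(message).text for message in messages]
```

Streaming produces the same kind of text as a single call, but lets you display it while it is being generated; the chat session sends the accumulated history with each turn so you do not have to manage it yourself.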

By the end of this section, you will have learnt the following.

- How to specify the model to use.

- Non-streaming vs. streaming output generation.

- Using the multi-turn chat capability of the SDK.

- Calling the SDK asynchronously.

- Configuring the model parameters.

- Setting system instructions to customise model behaviour.

- Configuring content safety filters.
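Model parameters, the system instruction and safety filters are all passed through the request config. The sketch below assumes the SDK accepts a plain dict with the same snake_case field names as `types.GenerateContentConfig` (the notebook may use the typed classes instead); `build_config` and `generate_with_config` are hypothetical helpers, and the parameter values are illustrative rather than recommendations.

```python
MODEL_ID = "gemini-2.5-pro"


def build_config(system_instruction: str, temperature: float = 0.4,
                 top_p: float = 0.95, max_output_tokens: int = 8192) -> dict:
    """Return a generation config as a plain dict (field names follow
    types.GenerateContentConfig)."""
    return {
        "system_instruction": system_instruction,  # persona and rules applied to every turn
        "temperature": temperature,                # lower values give less varied output
        "top_p": top_p,                            # nucleus-sampling probability cutoff
        "max_output_tokens": max_output_tokens,    # upper bound on generated tokens
        "safety_settings": [
            # Each entry sets a blocking threshold for one harm category.
            {"category": "HARM_CATEGORY_DANGEROUS_CONTENT", "threshold": "BLOCK_LOW_AND_ABOVE"},
            {"category": "HARM_CATEGORY_HARASSMENT", "threshold": "BLOCK_MEDIUM_AND_ABOVE"},
        ],
    }


def generate_with_config(client, prompt: str, config: dict) -> str:
    """Send one prompt with the given config; `client` is assumed to be a genai.Client."""
    response = client.models.generate_content(model=MODEL_ID, contents=prompt, config=config)
    return response.text


config = build_config("You are a concise assistant. Answer in at most two sentences.")
```

Keeping the config in one place makes it easy to compare runs: change a single value, such as `temperature`, and rerun the same prompt.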

Next, we will see how to send multimodal prompts to Gemini.

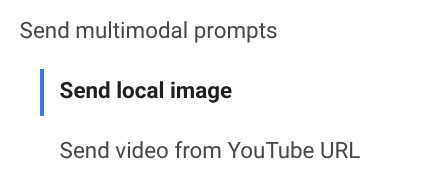

5. Multimodal prompts

In this section of the Notebook, you will use Gemini 2.5 Pro to process images and video.

Go ahead and execute the following cells in the notebook.

By the end of this section, you will have learnt how to:

- Send a prompt consisting of an image and text.

- Process a video from a URL.
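A multimodal prompt is a single user turn whose parts mix media and text. This sketch assumes the SDK accepts plain-dict `Content` and `Part` values (`inline_data` for bytes sent with the request, `file_data` for a file referenced by URI); the helper names, the `gs://your-bucket/your-video.mp4` URI and the truncated PNG bytes are placeholders, and the notebook may use a different video URL.

```python
MODEL_ID = "gemini-2.5-pro"


def image_and_text_contents(image_bytes: bytes, mime_type: str, prompt: str) -> list:
    """One user turn: inline image bytes followed by a text instruction."""
    return [{
        "role": "user",
        "parts": [
            {"inline_data": {"data": image_bytes, "mime_type": mime_type}},
            {"text": prompt},
        ],
    }]


def video_uri_contents(video_uri: str, prompt: str, mime_type: str = "video/mp4") -> list:
    """One user turn: a video referenced by URI (not uploaded inline) plus text."""
    return [{
        "role": "user",
        "parts": [
            {"file_data": {"file_uri": video_uri, "mime_type": mime_type}},
            {"text": prompt},
        ],
    }]


def describe(client, contents: list) -> str:
    """Send a multimodal prompt; `client` is assumed to be a genai.Client."""
    return client.models.generate_content(model=MODEL_ID, contents=contents).text


# Placeholder inputs: replace with real image bytes and a video you can access.
image_contents = image_and_text_contents(b"\x89PNG...", "image/png", "Describe this image.")
video_contents = video_uri_contents("gs://your-bucket/your-video.mp4", "Summarize this video.")
```

In practice you would read real image bytes, for example with `pathlib.Path("photo.png").read_bytes()`, and pass either result to `describe(client, ...)`.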

Next, we will generate well-defined, structured outputs.

6. Structured outputs

When you use a model's response in code, you need consistent, reliable output. Controlled generation lets you define a response schema that specifies the structure of the model's output: the field names and the expected data type of each field.
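To make the idea concrete, the sketch below defines a response schema for a hypothetical recipe list and checks the returned JSON on the client side. It assumes the dict-based schema format with uppercase type names (`OBJECT`, `STRING`, `INTEGER`, `ARRAY`); the field names and the `parse_recipes` helper are illustrative, not taken from the notebook.

```python
import json

# Response schema in the SDK's dict form; the recipe fields are illustrative.
RECIPE_SCHEMA = {
    "type": "ARRAY",
    "items": {
        "type": "OBJECT",
        "properties": {
            "recipe_name": {"type": "STRING"},
            "prep_minutes": {"type": "INTEGER"},
            "ingredients": {"type": "ARRAY", "items": {"type": "STRING"}},
        },
        "required": ["recipe_name", "prep_minutes", "ingredients"],
    },
}

STRUCTURED_CONFIG = {
    "response_mime_type": "application/json",  # ask for JSON instead of prose
    "response_schema": RECIPE_SCHEMA,          # constrain field names and types
}

# Map schema type names to the Python types json.loads produces.
_PY_TYPES = {"STRING": str, "INTEGER": int, "ARRAY": list, "OBJECT": dict}


def parse_recipes(response_text: str) -> list[dict]:
    """Parse the JSON text and double-check required fields and their types."""
    recipes = json.loads(response_text)
    item_schema = RECIPE_SCHEMA["items"]
    for recipe in recipes:
        for field in item_schema["required"]:
            expected = _PY_TYPES[item_schema["properties"][field]["type"]]
            if not isinstance(recipe.get(field), expected):
                raise ValueError(f"field {field!r} is missing or not {expected.__name__}")
    return recipes


# The kind of text a schema-constrained response might contain.
sample = '[{"recipe_name": "Pancakes", "prep_minutes": 20, "ingredients": ["flour", "milk", "eggs"]}]'
recipes = parse_recipes(sample)
```

Pass `config=STRUCTURED_CONFIG` to `client.models.generate_content` and feed `response.text` to `parse_recipes`. The extra check is defensive; if you supply a Pydantic model as the schema instead, the SDK can also expose the parsed result as `response.parsed`.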

Go ahead and execute the following cells in the notebook.

Next, we will see how to ground the model's outputs.

7. Grounding

If you want the model to draw on existing knowledge bases or real-time information, consider grounding the model's outputs.

With Gemini and Vertex AI, you can ground the output in Google Search, in function responses, and in code execution. Code execution lets the model generate and run code, learn from the results, and iterate until it reaches the final output.
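Grounding and code execution are both enabled through the `tools` entry of the request config. A minimal sketch, assuming the dict forms `{"google_search": {}}` and `{"code_execution": {}}` mirror the SDK's `types.Tool` fields; the helpers are hypothetical, and the two tools are configured separately here because support for combining them in one request may vary.

```python
MODEL_ID = "gemini-2.5-pro"

# Tool configs as plain dicts, each mirroring a types.Tool field.
SEARCH_CONFIG = {"tools": [{"google_search": {}}]}
CODE_EXECUTION_CONFIG = {"tools": [{"code_execution": {}}]}


def ask_with_tools(client, prompt: str, config: dict):
    """Send one prompt with a tool config; `client` is assumed to be a genai.Client."""
    return client.models.generate_content(model=MODEL_ID, contents=prompt, config=config)


def grounding_sources(response) -> list[str]:
    """List the web URIs that a search-grounded answer cites, if any."""
    metadata = response.candidates[0].grounding_metadata
    if metadata is None or not metadata.grounding_chunks:
        return []
    return [chunk.web.uri for chunk in metadata.grounding_chunks if getattr(chunk, "web", None)]


def summarize_parts(parts) -> list[tuple[str, str]]:
    """Label each response part as model text, generated code, or execution output."""
    summary = []
    for part in parts:
        if getattr(part, "executable_code", None):
            summary.append(("code", part.executable_code.code))
        elif getattr(part, "code_execution_result", None):
            summary.append(("result", part.code_execution_result.output))
        elif getattr(part, "text", None):
            summary.append(("text", part.text))
    return summary
```

Calling `summarize_parts(response.candidates[0].content.parts)` on a code-execution response shows the code the model wrote and the output it observed, which is the generate, run and iterate loop described above.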

Go ahead and execute the following cells in the notebook.
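For the function-calling cells, the manual flow is: declare the function, let the model reply with a function call, run it in your own code, and send the result back. The sketch below assumes a hypothetical local `get_weather` function and dict-based declarations; because only a declaration (not a Python callable) is passed, the SDK does not execute anything automatically. Passing the Python function itself in `tools` is the automatic variant.

```python
MODEL_ID = "gemini-2.5-pro"


def get_weather(location: str) -> dict:
    """Hypothetical local function; a real one would call a weather service."""
    return {"location": location, "forecast": "sunny", "temperature_c": 24}


WEATHER_TOOL = {
    "function_declarations": [{
        "name": "get_weather",
        "description": "Get the current weather for a location.",
        "parameters": {
            "type": "OBJECT",
            "properties": {"location": {"type": "STRING"}},
            "required": ["location"],
        },
    }]
}

LOCAL_FUNCTIONS = {"get_weather": get_weather}


def run_function_calls(function_calls) -> list[dict]:
    """Run each requested call locally and wrap the results as function_response parts."""
    parts = []
    for call in function_calls:
        result = LOCAL_FUNCTIONS[call.name](**(call.args or {}))
        parts.append({"function_response": {"name": call.name, "response": result}})
    return parts


def ask_with_manual_functions(client, prompt: str) -> str:
    """One round of manual function calling; `client` is assumed to be a genai.Client."""
    config = {"tools": [WEATHER_TOOL]}  # declarations only, so nothing runs automatically
    contents = [{"role": "user", "parts": [{"text": prompt}]}]
    response = client.models.generate_content(model=MODEL_ID, contents=contents, config=config)
    if not response.function_calls:
        return response.text  # the model answered without calling the function
    # Add the model's turn (containing the calls), then our results, and ask again.
    contents.append(response.candidates[0].content)
    contents.append({"role": "user", "parts": run_function_calls(response.function_calls)})
    final = client.models.generate_content(model=MODEL_ID, contents=contents, config=config)
    return final.text
```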

Next, we will explore the thinking capabilities of Gemini 2.5 Pro.

8. Thinking

Thinking mode is especially useful for complex tasks that require multiple rounds of strategizing and iterative problem solving. Gemini 2.5 models are thinking models: they reason through their thoughts before responding, which improves performance and accuracy.

Go ahead and execute the following cells in the notebook. As you do, notice the model's thinking output, which appears before its final answer.
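To work with the thinking output programmatically, you can request thought summaries and separate them from the answer. A minimal sketch, assuming the `thinking_config` fields `include_thoughts` and `thinking_budget` and that thought parts are flagged with `part.thought`; the 2048-token budget and the helper names are illustrative.

```python
MODEL_ID = "gemini-2.5-pro"

THINKING_CONFIG = {
    "thinking_config": {
        "include_thoughts": True,  # return thought summaries alongside the answer
        "thinking_budget": 2048,   # illustrative cap on tokens spent thinking
    }
}


def split_thoughts(response) -> tuple[str, str]:
    """Separate thought-summary parts from the final-answer parts."""
    thoughts, answer = [], []
    for part in response.candidates[0].content.parts:
        if not part.text:
            continue
        (thoughts if part.thought else answer).append(part.text)
    return "\n".join(thoughts), "".join(answer)


def think_and_answer(client, prompt: str) -> tuple[str, str]:
    """Ask one question with thoughts enabled; `client` is assumed to be a genai.Client."""
    response = client.models.generate_content(model=MODEL_ID, contents=prompt, config=THINKING_CONFIG)
    return split_thoughts(response)
```

Leaving `include_thoughts` unset does not stop the model from thinking; it only omits the summaries from the response.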

9. Conclusion

Congratulations! You've learned how to use Gemini 2.5 Pro with the Google Gen AI SDK for Python, covering text generation, multimodal prompts, structured outputs, grounding, and its advanced thinking capabilities. You now have the foundational knowledge to start building your own applications with the SDK. With its thinking and reasoning capabilities, Gemini 2.5 Pro opens up new possibilities across a wide range of use cases.

Additional references

- See the Google Gen AI SDK reference docs.

- Explore other notebooks in the Google Cloud Generative AI GitHub repository.

- Explore AI models in Model Garden.