1. Before you begin

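In the previous codelab, you built an app for Android and iOS that used a basic image labelling model to recognize several hundred classes of image. It recognized a picture of a flower only in very generic terms, seeing petals, flower, plant, and sky.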

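To update the app to recognize specific flowers, such as daisies or roses, you need a custom model trained on many examples of each type of flower you want to recognize.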

Prerequisites

- The previous codelab in this learning path.

What you'll build and learn

- How to train a custom image classifier model using TensorFlow Lite Model Maker.

What you'll need

- No particular hardware is needed. Everything can be completed using Google Colab in the browser.

2. Get started

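All of the code to follow along has been prepared for you and can be run in Google Colab. If you don't have access to Google Colab, you can clone the repo and use the notebook called CustomImageClassifierModel.ipynb, found in the ImageClassificationMobile->colab directory.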

If you have many examples of particular flowers, it is relatively easy to train a model with TensorFlow Lite Model Maker to recognize them.

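The easiest way to do this is to create a .zip or .tgz file containing the images, sorted into directories, one directory per class. For example, if you use images of daisies, dandelions, roses, sunflowers, and tulips, you can organize them into one directory for each of those five flowers.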
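The directory layout described above can be sketched with the Python standard library. This is only an illustration: the folder names, the placeholder file name `example_001.jpg`, and the helper names `make_layout` and `pack_tgz` are assumptions made for this example, and the placeholder files are empty rather than real images.

```python
import tarfile
import tempfile
from pathlib import Path

# Illustrative class names; each one becomes a folder, and the folder name
# is what later serves as the label for every image inside it.
FLOWER_CLASSES = ["daisy", "dandelion", "roses", "sunflowers", "tulips"]


def make_layout(root: Path, classes=FLOWER_CLASSES) -> Path:
    """Create root/flower_photos/<class>/ with one placeholder file per class."""
    top = root / "flower_photos"
    for name in classes:
        class_dir = top / name
        class_dir.mkdir(parents=True, exist_ok=True)
        # Empty placeholder standing in for real photos (not a valid image).
        (class_dir / "example_001.jpg").write_bytes(b"")
    return top


def pack_tgz(top: Path, archive: Path) -> Path:
    """Pack the folder into a .tgz, keeping the top-level folder name."""
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(top, arcname=top.name)
    return archive


with tempfile.TemporaryDirectory() as tmp:
    top = make_layout(Path(tmp))
    archive = pack_tgz(top, Path(tmp) / "flower_photos.tgz")
    with tarfile.open(archive) as tar:
        archived_dirs = sorted(m.name for m in tar.getmembers() if m.isdir())
    # Print the directory tree stored in the archive.
    print("\n".join(archived_dirs))
```

With real photos in place of the placeholders, the resulting archive has one sub-folder per flower class under a single top-level folder.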

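Once you have created the archive and hosted it on a server, you can use it to train models. For the rest of this lab, you'll use one that has already been prepared for you.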

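This lab assumes that you are using Google Colab to train the model. You can find Colab at colab.research.google.com. If you use another environment, you may need to install a number of dependencies, not least TensorFlow.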

3. Install and import dependencies

- Install TensorFlow Lite Model Maker. You can do this with pip. The &> /dev/null at the end just suppresses the output. Model Maker prints a lot of output that isn't immediately relevant, so it is hidden here to let you focus on the task at hand.

# Install Model maker

!pip install -q tflite-model-maker &> /dev/null

- Next, import the libraries you need and make sure you are using TensorFlow 2.x:

# Imports and check that we are using TF2.x

import numpy as np

import os

from tflite_model_maker import configs

from tflite_model_maker import ExportFormat

from tflite_model_maker import model_spec

from tflite_model_maker import image_classifier

from tflite_model_maker.image_classifier import DataLoader

import tensorflow as tf

assert tf.__version__.startswith('2')

tf.get_logger().setLevel('ERROR')

Now that the environment is ready, it's time to start creating your model!

4. Download and prepare your data

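If your images are organized into folders and those folders are packed into an archive, then once you download and decompress the archive, the images are automatically labelled with the name of the folder they are in. This directory is referenced as data_path.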

data_path = tf.keras.utils.get_file(

'flower_photos',

'https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz',

untar=True)

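The data at this path can then be loaded for training a neural network model with TensorFlow Lite Model Maker's ImageClassifierDataLoader class (imported above as DataLoader). Just point it at the folder and you're good to go.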
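The idea of labelling images by their containing folder can be illustrated in a few lines of plain Python. This is a sketch of the concept only, not Model Maker's actual loader; the function name `labels_from_folders` and the sample file names are hypothetical.

```python
import tempfile
from pathlib import Path


def labels_from_folders(data_dir: Path) -> dict:
    """Map each file (as a path relative to data_dir) to its parent folder's name."""
    labelled = {}
    for class_dir in sorted(p for p in data_dir.iterdir() if p.is_dir()):
        for image_path in sorted(class_dir.iterdir()):
            if image_path.is_file():
                key = image_path.relative_to(data_dir).as_posix()
                labelled[key] = class_dir.name
    return labelled


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    # Two hypothetical classes, each with one empty placeholder file.
    for folder, filename in [("roses", "a.jpg"), ("tulips", "b.jpg")]:
        (root / folder).mkdir()
        (root / folder / filename).write_bytes(b"")
    folder_labels = labels_from_folders(root)
    print(folder_labels)
```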

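One important element of training models with machine learning is to not use all of your data for training. Hold a little back so that you can test the model on data it has not previously seen. This is easy to do with the split method of the dataset returned by ImageClassifierDataLoader. By passing 0.9 to it, you get 90% of the data as training data and 10% as test data: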

data = DataLoader.from_folder(data_path)

train_data, test_data = data.split(0.9)
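To make the effect of a 0.9 split concrete, here is a minimal sketch on a plain Python list. It is not how Model Maker implements `split`; the function `split_dataset`, the fixed seed, and the file names are assumptions made for this illustration.

```python
import random


def split_dataset(items, fraction, seed=0):
    """Shuffle a copy of items, then cut it into two parts at `fraction`."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    cut = int(len(shuffled) * fraction)
    return shuffled[:cut], shuffled[cut:]


# 100 hypothetical image names standing in for a real dataset.
examples = [f"image_{i:03d}.jpg" for i in range(100)]
train_items, test_items = split_dataset(examples, 0.9)
print(len(train_items), len(test_items))
```

The two parts never overlap, which is the property that makes the test set a fair check on data the model has not seen.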

Now that your data is prepared, you can use it to create a model.

5. Create the image classifier model

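Model Maker abstracts away many of the specifics of designing the neural network, so you don't have to deal with network design or with things like convolutions, dense layers, ReLU, flatten, loss functions, and optimizers. For a default model, a single line of code creates the model by training a neural network on the data you provide: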

model = image_classifier.create(train_data)

When you run this, you'll see output that looks something like the following:

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

hub_keras_layer_v1v2_2 (HubK (None, 1280) 3413024

_________________________________________________________________

dropout_2 (Dropout) (None, 1280) 0

_________________________________________________________________

dense_2 (Dense) (None, 5) 6405

=================================================================

Total params: 3,419,429

Trainable params: 6,405

Non-trainable params: 3,413,024

_________________________________________________________________

None

Epoch 1/5

103/103 [===] - 15s 129ms/step - loss: 1.1169 - accuracy: 0.6181

Epoch 2/5

103/103 [===] - 13s 126ms/step - loss: 0.6595 - accuracy: 0.8911

Epoch 3/5

103/103 [===] - 13s 127ms/step - loss: 0.6239 - accuracy: 0.9133

Epoch 4/5

103/103 [===] - 13s 128ms/step - loss: 0.5994 - accuracy: 0.9287

Epoch 5/5

103/103 [===] - 13s 126ms/step - loss: 0.5836 - accuracy: 0.9385

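The first part shows your model architecture. What Model Maker is doing behind the scenes is called transfer learning: it uses an existing pre-trained model as a starting point, takes what that model has already learned about how images are constructed, and applies it to understanding these 5 flower classes. You can see this in the first line of the summary, which reads: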

hub_keras_layer_v1v2_2 (HubK (None, 1280) 3413024

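The key is the word "Hub", which tells you that this model came from TensorFlow Hub. By default, TensorFlow Lite Model Maker uses a model called "MobileNet", which is designed to recognize 1000 types of image. The logic here is that the "features" it learned in order to distinguish between 1000 classes can be reused. The same features can be mapped to our 5 classes of flowers, so they don't have to be learned from scratch.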
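The parameter counts in the model summary can be checked by hand, which shows how little is actually trained. A dense layer has one weight per input-output pair plus one bias per output. The numbers below are taken from the summary above; the variable names are chosen for this example.

```python
FEATURE_DIM = 1280          # output size of the pre-trained Hub layer
NUM_CLASSES = 5             # one output per flower class
FROZEN_PARAMS = 3_413_024   # parameters in the Hub layer (non-trainable)

# Dense layer: a weight for every (feature, class) pair plus a bias per class.
dense_params = FEATURE_DIM * NUM_CLASSES + NUM_CLASSES
total_params = FROZEN_PARAMS + dense_params

print(dense_params)   # trainable parameters
print(total_params)   # total parameters
```

Only the final dense layer is trained; the dropout layer contributes no parameters, and the pre-trained layer is reused unchanged.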

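The model went through 5 epochs, where an epoch is one full cycle of training in which the neural network tries to match the images to their labels. By the time it had completed 5 epochs, in about 1 minute, it was 93.85% accurate on the training data. Given that there are 5 classes, a random guess would be 20% accurate, so that's real progress! (It also reports a "loss" number, but you can safely ignore that for now.)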
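The figures quoted above can be read straight off the training log. The following sketch parses the five progress lines copied from the sample output; the regular expression and variable names are assumptions made for this example and are tied to this particular log format.

```python
import re

# Progress lines copied from the sample training output above.
TRAINING_LOG = """\
103/103 [===] - 15s 129ms/step - loss: 1.1169 - accuracy: 0.6181
103/103 [===] - 13s 126ms/step - loss: 0.6595 - accuracy: 0.8911
103/103 [===] - 13s 127ms/step - loss: 0.6239 - accuracy: 0.9133
103/103 [===] - 13s 128ms/step - loss: 0.5994 - accuracy: 0.9287
103/103 [===] - 13s 126ms/step - loss: 0.5836 - accuracy: 0.9385
"""

# Capture the per-epoch duration in seconds and the reported accuracy.
EPOCH_LINE = re.compile(r"- (\d+)s .*accuracy: ([0-9.]+)")

epochs = [
    (int(m.group(1)), float(m.group(2)))
    for m in map(EPOCH_LINE.search, TRAINING_LOG.splitlines())
    if m
]

total_seconds = sum(seconds for seconds, _ in epochs)
final_accuracy = epochs[-1][1]
random_baseline = 1 / 5  # chance of guessing one of 5 classes correctly

print(f"{len(epochs)} epochs, {total_seconds}s total")
print(f"final training accuracy {final_accuracy:.2%} vs. baseline {random_baseline:.0%}")
```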

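Earlier you split the data into training and test data so that you could gauge how the network performs on data it has not previously seen. This is a better indicator of how it might perform in the real world. To measure it, call model.evaluate on the test data: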

loss, accuracy = model.evaluate(test_data)

The output should look something like this:

12/12 [===] - 5s 115ms/step - loss: 0.6622 - accuracy: 0.8801

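Note the accuracy here: it's 88.01%, so you can expect roughly that level of accuracy when using the default model in the real world. That's not bad for a default model trained in about a minute. Of course, you could probably do a lot of tweaking to improve the model, and that's a science unto itself!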

6. Export the model

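Now that the model is trained, the next step is to export it in the .tflite format that a mobile application can use. Model Maker provides an easy export method for this; you only need to specify the output directory.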

Here's the code:

model.export(export_dir='/mm_flowers')

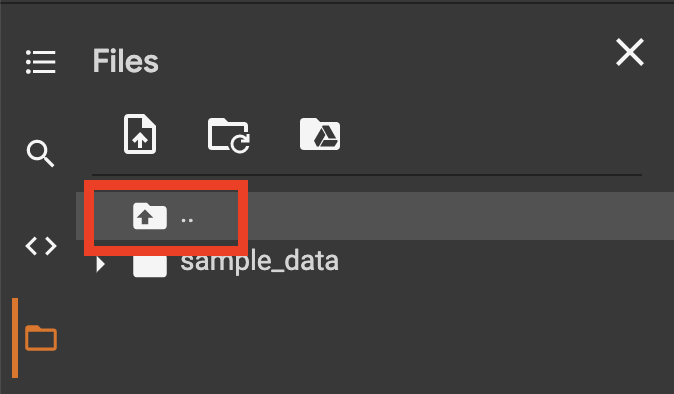

如果您在 Google Colab 中執行此工具,點選畫面左側的資料夾圖示即可查看模型:

這裡會列出目前目錄的清單。使用指定的按鈕向上移動目錄:

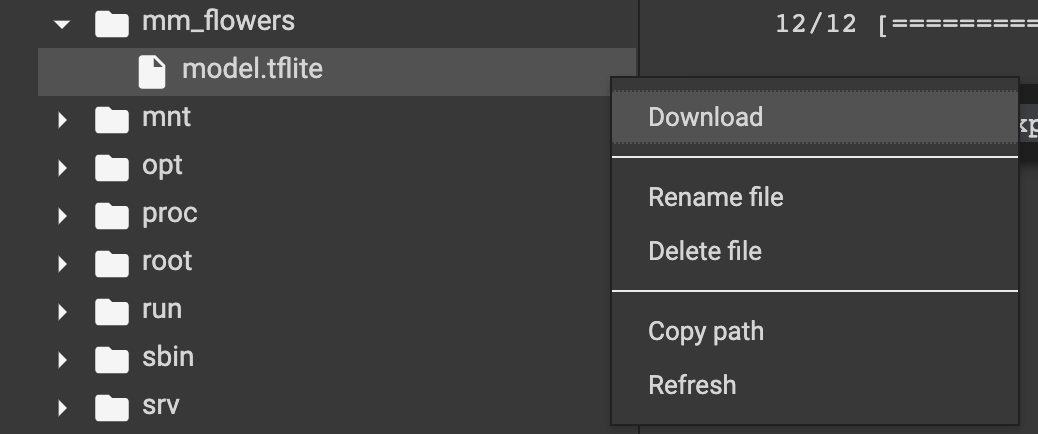

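In your code, you specified that the model should be exported to the mm_flowers directory. Open that directory and you'll see a file called "model.tflite". This is your trained model.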

Select the file and 3 dots appear on the right. Click them to open a context menu, from which you can download the model.

After a few moments, the model is downloaded to your downloads folder.
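After downloading, a quick sanity check can confirm that the file looks like a TensorFlow Lite model. TensorFlow Lite files are FlatBuffers that carry the file identifier `TFL3` in bytes 4 to 7. The sketch below checks only that identifier, so it is a heuristic rather than a full validation; the function name `looks_like_tflite` and the fake test files are assumptions made for this example.

```python
import tempfile
from pathlib import Path

# FlatBuffers file identifier carried by .tflite files at byte offset 4.
TFLITE_IDENTIFIER = b"TFL3"


def looks_like_tflite(path: Path) -> bool:
    """Return True if bytes 4..7 of the file hold the TFLite identifier."""
    with open(path, "rb") as f:
        header = f.read(8)
    return len(header) == 8 and header[4:8] == TFLITE_IDENTIFIER


with tempfile.TemporaryDirectory() as tmp:
    # Fake files for demonstration only; neither is a usable model.
    good = Path(tmp) / "model.tflite"
    good.write_bytes(b"\x1c\x00\x00\x00" + TFLITE_IDENTIFIER + b"\x00" * 16)
    bad = Path(tmp) / "not_a_model.tflite"
    bad.write_bytes(b"this is plain text")

    good_result = looks_like_tflite(good)
    bad_result = looks_like_tflite(bad)
    print(good_result, bad_result)
```

To check a real download, pass the path of the downloaded model.tflite to `looks_like_tflite`.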

7. Congratulations

You're now ready to integrate the model into your mobile app! You'll do that in the next lab.