1. Introduction

In this codelab, you will build out an agent using the Agent Development Kit (ADK) that utilizes the MCP Toolbox for Databases.

Throughout the codelab, you will take a step-by-step approach as follows:

- Provision a Cloud SQL for PostgreSQL database that will have the hotels database and sample data.

- Set up MCP Toolbox for Databases, which provides access to the data.

- Design and develop an Agent using the Agent Development Kit (ADK) that uses the MCP Toolbox to answer queries from the user.

- Explore options to test the Agent and MCP Toolbox for Databases locally and on Google Cloud via the Cloud Run service.

What you'll do

- Design, Build and Deploy an Agent that will answer user queries on hotels in a location or search for hotels by name.

What you'll learn

- Provisioning and populating a Cloud SQL for PostgreSQL database with sample data.

- Setting up MCP Toolbox for Databases for the Cloud SQL for PostgreSQL database instance.

- Designing and developing an Agent using Agent Development Kit (ADK) to answer user queries.

- Testing the Agent and MCP Toolbox for Databases in the local environment.

- (Optionally) Deploying the Agent and MCP Toolbox for Databases in Google Cloud.

What you'll need

- Chrome web browser

- A Gmail account

- A Cloud Project with billing enabled

This codelab, designed for developers of all levels (including beginners), uses Python in its sample application. However, Python knowledge isn't required and basic code reading capability will be sufficient to understand the concepts presented.

2. Before you begin

Create a project

- In the Google Cloud Console, on the project selector page, select or create a Google Cloud project.

- Make sure that billing is enabled for your Cloud project. Learn how to check if billing is enabled on a project.

- You'll use Cloud Shell, a command-line environment running in Google Cloud that comes preloaded with bq. Click Activate Cloud Shell at the top of the Google Cloud console.

- Once connected to Cloud Shell, check that you're already authenticated and that the project is set to your project ID using the following command:

gcloud auth list

- Run the following command in Cloud Shell to confirm that the gcloud command knows about your project.

gcloud config list project

- If your project is not set, use the following command to set it:

gcloud config set project <YOUR_PROJECT_ID>

- Enable the required APIs via the command shown below. This could take a few minutes, so please be patient.

gcloud services enable cloudresourcemanager.googleapis.com \
    servicenetworking.googleapis.com \
    run.googleapis.com \
    cloudbuild.googleapis.com \
    cloudfunctions.googleapis.com \
    aiplatform.googleapis.com \
    sqladmin.googleapis.com \
    compute.googleapis.com

On successful execution of the command, you should see a message similar to the one shown below:

Operation "operations/..." finished successfully.

As an alternative to the gcloud command, you can enable each API from the console by searching for the product or using this link.

If you miss any API, you can always enable it later during the implementation.

Refer to the documentation for gcloud commands and usage.

3. Create a Cloud SQL instance

We will be using a Google Cloud SQL for PostgreSQL instance to store our hotels data. Cloud SQL for PostgreSQL is a fully-managed database service that helps you set up, maintain, manage, and administer your PostgreSQL relational databases on Google Cloud Platform.

Run the following command in Cloud Shell to create the instance:

gcloud sql instances create hoteldb-instance \
    --database-version=POSTGRES_15 \
    --tier db-g1-small \
    --region=us-central1 \
    --edition=ENTERPRISE \
    --root-password=postgres

This command takes about 3-5 minutes to execute. Once the command is successfully executed, you should see an output that indicates that the command is done, along with your Cloud SQL instance information like NAME, DATABASE_VERSION, LOCATION, etc.

4. Prepare the Hotels database

Our task now will be to create some sample data for our Hotel Agent.

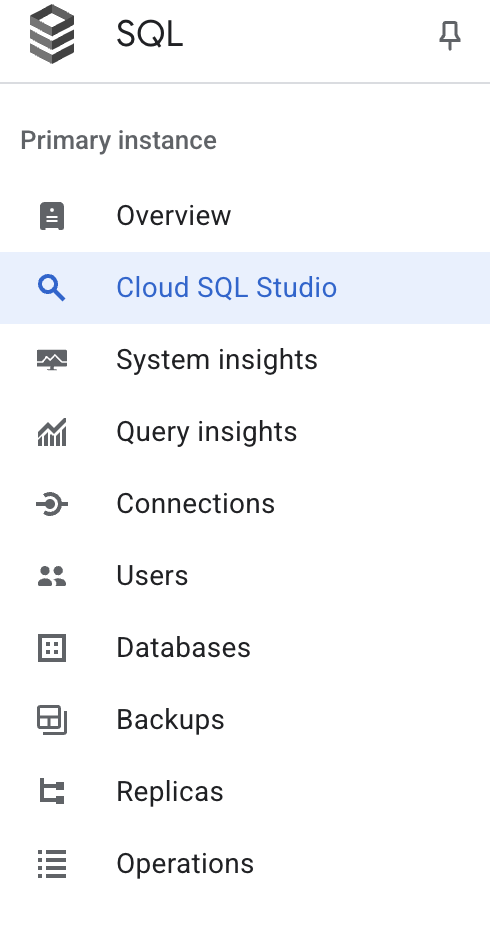

Visit the Cloud SQL page in the Cloud console. You should see the hoteldb-instance ready and created. Click on the name of the instance (hoteldb-instance) as shown below:

From the Cloud SQL left menu, visit the Cloud SQL Studio menu option as shown below:

This will ask you to sign in to Cloud SQL Studio, where we will run a few SQL commands. Select postgres for the Database option and use postgres for both User and Password. Click on AUTHENTICATE.

Let us first create the hotels table as per the schema given below. In one of the Editor panes in Cloud SQL Studio, execute the following SQL:

CREATE TABLE hotels(

id INTEGER NOT NULL PRIMARY KEY,

name VARCHAR NOT NULL,

location VARCHAR NOT NULL,

price_tier VARCHAR NOT NULL,

checkin_date DATE NOT NULL,

checkout_date DATE NOT NULL,

booked BIT NOT NULL

);

Now, let's populate the hotels table with sample data. Execute the following SQL:

INSERT INTO hotels(id, name, location, price_tier, checkin_date, checkout_date, booked)

VALUES

(1, 'Hilton Basel', 'Basel', 'Luxury', '2024-04-20', '2024-04-22', B'0'),

(2, 'Marriott Zurich', 'Zurich', 'Upscale', '2024-04-14', '2024-04-21', B'0'),

(3, 'Hyatt Regency Basel', 'Basel', 'Upper Upscale', '2024-04-02', '2024-04-20', B'0'),

(4, 'Radisson Blu Lucerne', 'Lucerne', 'Midscale', '2024-04-05', '2024-04-24', B'0'),

(5, 'Best Western Bern', 'Bern', 'Upper Midscale', '2024-04-01', '2024-04-23', B'0'),

(6, 'InterContinental Geneva', 'Geneva', 'Luxury', '2024-04-23', '2024-04-28', B'0'),

(7, 'Sheraton Zurich', 'Zurich', 'Upper Upscale', '2024-04-02', '2024-04-27', B'0'),

(8, 'Holiday Inn Basel', 'Basel', 'Upper Midscale', '2024-04-09', '2024-04-24', B'0'),

(9, 'Courtyard Zurich', 'Zurich', 'Upscale', '2024-04-03', '2024-04-13', B'0'),

(10, 'Comfort Inn Bern', 'Bern', 'Midscale', '2024-04-04', '2024-04-16', B'0');

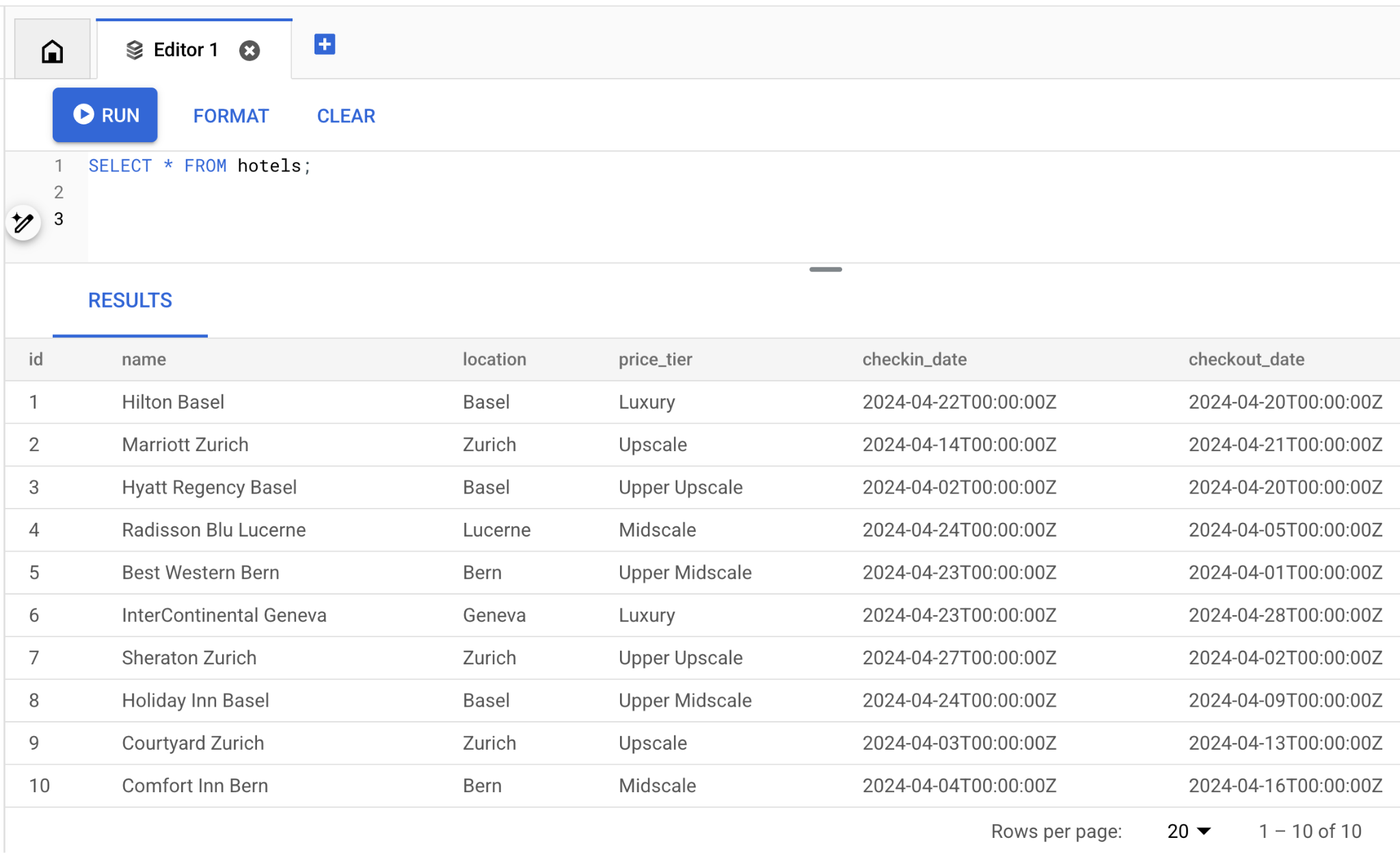

Let's validate the data by running a SELECT SQL as shown below:

SELECT * FROM hotels;

You should see a number of records in the hotels table as shown below:

We have completed the process of setting up a Cloud SQL instance and have created our sample data. In the next section, we shall be setting up the MCP Toolbox for Databases.
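If you would like to experiment with the schema without touching the Cloud SQL instance, the following is a minimal local sketch. It is a stand-in only: it uses Python's built-in sqlite3 module rather than PostgreSQL, stores booked as an integer because SQLite has no BIT type, loads just a subset of the sample rows, and uses LIKE (case-insensitive for ASCII text in SQLite) for an illustrative substring search. The helper name hotels_in is hypothetical.

```python
import sqlite3

# Local stand-in only: SQLite in memory, not Cloud SQL for PostgreSQL.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE hotels(
        id INTEGER NOT NULL PRIMARY KEY,
        name VARCHAR NOT NULL,
        location VARCHAR NOT NULL,
        price_tier VARCHAR NOT NULL,
        checkin_date DATE NOT NULL,
        checkout_date DATE NOT NULL,
        booked INTEGER NOT NULL  -- SQLite has no BIT type; 0 stands in for B'0'
    )
    """
)

# A subset of the sample rows from the INSERT statement above.
rows = [
    (1, "Hilton Basel", "Basel", "Luxury", "2024-04-20", "2024-04-22", 0),
    (2, "Marriott Zurich", "Zurich", "Upscale", "2024-04-14", "2024-04-21", 0),
    (3, "Hyatt Regency Basel", "Basel", "Upper Upscale", "2024-04-02", "2024-04-20", 0),
    (8, "Holiday Inn Basel", "Basel", "Upper Midscale", "2024-04-09", "2024-04-24", 0),
]
conn.executemany("INSERT INTO hotels VALUES (?, ?, ?, ?, ?, ?, ?)", rows)


def hotels_in(location: str) -> list:
    """Return the names of hotels whose location contains the given text."""
    cursor = conn.execute(
        "SELECT name FROM hotels WHERE location LIKE '%' || ? || '%' ORDER BY id",
        (location,),
    )
    return [name for (name,) in cursor]


print(hotels_in("basel"))
```

The parameter is passed separately from the SQL text, which is the same idea as a positional placeholder in a parameterized PostgreSQL statement.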

5. Setup MCP Toolbox for Databases

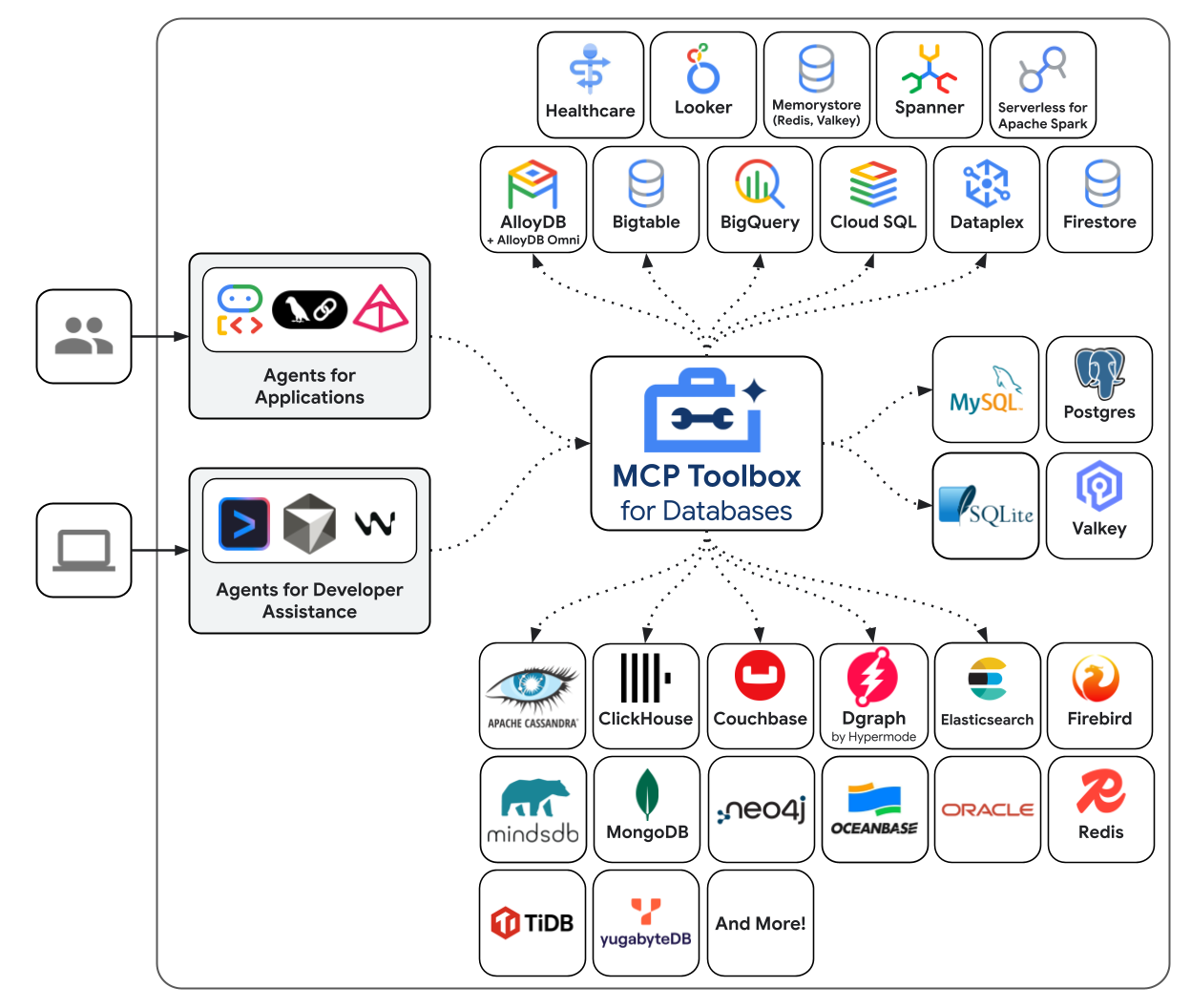

MCP Toolbox for Databases is an open source MCP server for databases. It was designed with enterprise-grade, production-quality use in mind. It enables you to develop tools more easily, quickly, and securely by handling complexities such as connection pooling, authentication, and more.

Toolbox helps you build Gen AI tools that let your agents access data in your database. Toolbox provides:

- Simplified development: Integrate tools to your agent in less than 10 lines of code, reuse tools between multiple agents or frameworks, and deploy new versions of tools more easily.

- Better performance: Best practices such as connection pooling, authentication, and more.

- Enhanced security: Integrated auth for more secure access to your data

- End-to-end observability: Out of the box metrics and tracing with built-in support for OpenTelemetry.

Toolbox sits between your application's orchestration framework and your database, providing a control plane that is used to modify, distribute, or invoke tools. It simplifies the management of your tools by providing you with a centralized location to store and update tools, allowing you to share tools between agents and applications and update those tools without necessarily redeploying your application.

You can see that one of the databases supported by MCP Toolbox for Databases is Cloud SQL and we have provisioned that in the previous section.

Installing the Toolbox

Open Cloud Shell Terminal and create a folder named mcp-toolbox.

mkdir mcp-toolbox

Go to the mcp-toolbox folder via the command shown below:

cd mcp-toolbox

Install the binary version of the MCP Toolbox for Databases via the script given below. The command given below is for Linux; if you are on Mac or Windows, check the releases page for your operating system and architecture and download the correct binary.

export VERSION=0.23.0

curl -O https://storage.googleapis.com/genai-toolbox/v$VERSION/linux/amd64/toolbox

chmod +x toolbox

We now have the binary version of the toolbox ready for our use. Let's validate that we have set up the toolbox binary correctly and it does indicate the correct version.

Give the following command to determine the version of the Toolbox:

./toolbox -v

This should print an output similar to the following:

toolbox version 0.23.0+binary.linux.amd64.466aef0

The next step is to configure the toolbox with our data sources and other configurations.

Configuring tools.yaml

The primary way to configure Toolbox is through the tools.yaml file. Create a file named tools.yaml in the same folder (mcp-toolbox) with the contents shown below.

You can use the nano editor that is available in Cloud Shell. The nano command is as follows: "nano tools.yaml".

Remember to replace the YOUR_PROJECT_ID value with your Google Cloud Project Id.

sources:
  my-cloud-sql-source:
    kind: cloud-sql-postgres
    project: YOUR_PROJECT_ID
    region: us-central1
    instance: hoteldb-instance
    database: postgres
    user: postgres
    password: "postgres"
tools:
  search-hotels-by-name:
    kind: postgres-sql
    source: my-cloud-sql-source
    description: Search for hotels based on name.
    parameters:
      - name: name
        type: string
        description: The name of the hotel.
    statement: SELECT * FROM hotels WHERE name ILIKE '%' || $1 || '%';
  search-hotels-by-location:
    kind: postgres-sql
    source: my-cloud-sql-source
    description: Search for hotels based on location. Result is sorted by price from least to most expensive.
    parameters:
      - name: location
        type: string
        description: The location of the hotel.
    statement: |
      SELECT *
      FROM hotels
      WHERE location ILIKE '%' || $1 || '%'
      ORDER BY
        CASE price_tier
          WHEN 'Midscale' THEN 1
          WHEN 'Upper Midscale' THEN 2
          WHEN 'Upscale' THEN 3
          WHEN 'Upper Upscale' THEN 4
          WHEN 'Luxury' THEN 5
          ELSE 99 -- Handle any unexpected values, place them at the end
        END;
toolsets:
  my_first_toolset:
    - search-hotels-by-name
    - search-hotels-by-location

Let us understand the file in brief:

- Sources represent the different data sources that a tool can interact with. You can define Sources as a map in the sources section of your tools.yaml file. Typically, a source configuration will contain any information needed to connect with and interact with the database. In our case, we have configured a single source that points to our Cloud SQL for PostgreSQL instance with the credentials. For more information, refer to the Sources reference.

- Tools define actions an agent can take, such as reading from and writing to a source or running a SQL statement. You can define Tools as a map in the tools section of your tools.yaml file. Typically, a tool will require a source to act on. In our case, we are defining two tools, search-hotels-by-name and search-hotels-by-location, and specifying the source that each acts on, along with the SQL and the parameters. For more information, refer to the Tools reference.

- Finally, we have the Toolset, which allows you to define groups of tools that you want to be able to load together. This can be useful for defining different groups based on agent or application. In our case, we have a single toolset called my_first_toolset, which contains the two tools that we have defined.
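The relationships described above (each tool points at a source, each toolset lists tools) can be checked mechanically. The following is a minimal sketch, assuming the configuration has already been loaded into a plain Python dictionary with the same three top-level sections. The dictionary below is a hand-written, abbreviated mirror of tools.yaml, and find_dangling_references is a hypothetical helper, not part of the Toolbox.

```python
# Hypothetical, abbreviated in-memory mirror of the tools.yaml structure.
config = {
    "sources": {
        "my-cloud-sql-source": {"kind": "cloud-sql-postgres"},
    },
    "tools": {
        "search-hotels-by-name": {
            "kind": "postgres-sql",
            "source": "my-cloud-sql-source",
        },
        "search-hotels-by-location": {
            "kind": "postgres-sql",
            "source": "my-cloud-sql-source",
        },
    },
    "toolsets": {
        "my_first_toolset": [
            "search-hotels-by-name",
            "search-hotels-by-location",
        ],
    },
}


def find_dangling_references(cfg: dict) -> list:
    """Report tools that use an undefined source and toolsets that list an undefined tool."""
    problems = []
    sources = cfg.get("sources", {})
    tools = cfg.get("tools", {})
    for tool_name, tool in tools.items():
        source_name = tool.get("source")
        if source_name not in sources:
            problems.append(f"tool {tool_name!r} uses unknown source {source_name!r}")
    for toolset_name, members in cfg.get("toolsets", {}).items():
        for member in members:
            if member not in tools:
                problems.append(f"toolset {toolset_name!r} lists unknown tool {member!r}")
    return problems


print(find_dangling_references(config))  # an empty list means every reference resolves
```

A check like this is only a convenience before starting the server; the Toolbox performs its own validation when it loads the file.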

Save the tools.yaml file in the nano editor via the following steps:

- Press Ctrl + O (the "Write Out" command).

- It will ask you to confirm the "File Name to Write". Just press Enter.

- Now press Ctrl + X to exit.

Run the MCP Toolbox for Databases Server

Run the following command (from the mcp-toolbox folder) to start the server:

./toolbox --tools-file "tools.yaml"

Ideally you should see an output that the Server has been able to connect to our data sources and has loaded the toolset and tools. A sample output is given below:

2025-12-17T04:02:19.18028278Z INFO "Initialized 1 sources: my-cloud-sql-source"

2025-12-17T04:02:19.1804012Z INFO "Initialized 0 authServices: "

2025-12-17T04:02:19.180466127Z INFO "Initialized 2 tools: search-hotels-by-name, search-hotels-by-location"

2025-12-17T04:02:19.180520492Z INFO "Initialized 2 toolsets: my_first_toolset, default"

2025-12-17T04:02:19.180534152Z INFO "Initialized 0 prompts: "

2025-12-17T04:02:19.180554658Z INFO "Initialized 1 promptsets: default"

2025-12-17T04:02:19.18058529Z WARN "wildcard (`*`) allows all origin to access the resource and is not secure. Use it with cautious for public, non-sensitive data, or during local development. Recommended to use `--allowed-origins` flag to prevent DNS rebinding attacks"

2025-12-17T04:02:19.185746495Z INFO "Server ready to serve!"

The MCP Toolbox Server runs on port 5000 by default. If port 5000 is already in use, you can pick another port (say 7000) with the command shown below, and then use 7000 instead of 5000 in the subsequent commands.

./toolbox --tools-file "tools.yaml" --port 7000
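If you are unsure whether a port is already taken, a quick probe can tell you before you start the server. The following is a minimal sketch using only the Python standard library; port_in_use is a hypothetical helper name, and the probe only checks whether something accepts TCP connections on the local loopback address.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(1.0)
        # connect_ex returns 0 on success instead of raising an exception.
        return probe.connect_ex((host, port)) == 0


# Check the default port and the alternate port mentioned above.
for candidate in (5000, 7000):
    state = "in use" if port_in_use(candidate) else "free"
    print(f"port {candidate}: {state}")
```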

Let us use Cloud Shell to test this out.

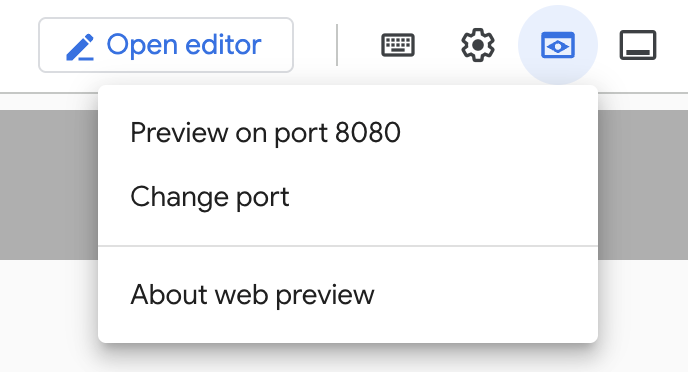

Click on Web Preview in Cloud Shell as shown below:

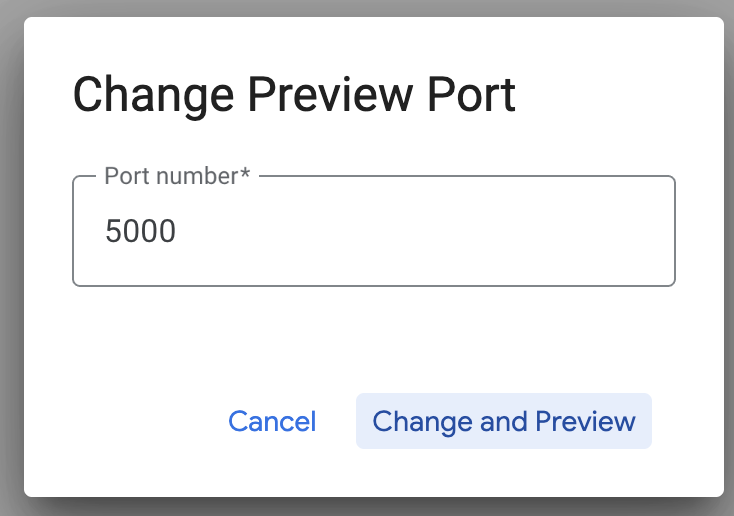

Click on Change port and set the port to 5000 as shown below and click on Change and Preview.

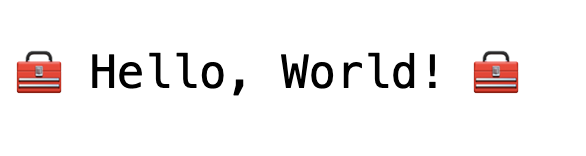

This should bring the following output:

In the browser URL, add the following to the end of the URL:

/api/toolset

This should bring up the tools that are currently configured. A sample output is shown below:

{

"serverVersion": "0.23.0+binary.linux.amd64.466aef0",

"tools": {

"search-hotels-by-location": {

"description": "Search for hotels based on location. Result is sorted by price from least to most expensive.",

"parameters": [

{

"name": "location",

"type": "string",

"required": true,

"description": "The location of the hotel.",

"authSources": []

}

],

"authRequired": []

},

"search-hotels-by-name": {

"description": "Search for hotels based on name.",

"parameters": [

{

"name": "name",

"type": "string",

"required": true,

"description": "The name of the hotel.",

"authSources": []

}

],

"authRequired": []

}

}

}
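The response above is plain JSON, so it is easy to inspect programmatically. The following is a minimal sketch that works on a copy of the sample response embedded as a string (it does not contact the server) and lists the required parameters of each tool. The helper name required_parameters is hypothetical.

```python
import json

# Copy of the sample /api/toolset response shown above, embedded for illustration.
manifest_text = """
{
  "serverVersion": "0.23.0+binary.linux.amd64.466aef0",
  "tools": {
    "search-hotels-by-location": {
      "description": "Search for hotels based on location. Result is sorted by price from least to most expensive.",
      "parameters": [
        {"name": "location", "type": "string", "required": true,
         "description": "The location of the hotel.", "authSources": []}
      ],
      "authRequired": []
    },
    "search-hotels-by-name": {
      "description": "Search for hotels based on name.",
      "parameters": [
        {"name": "name", "type": "string", "required": true,
         "description": "The name of the hotel.", "authSources": []}
      ],
      "authRequired": []
    }
  }
}
"""


def required_parameters(manifest: dict) -> dict:
    """Map each tool name to the names of its required parameters."""
    summary = {}
    for tool_name, tool in manifest.get("tools", {}).items():
        summary[tool_name] = [
            param["name"]
            for param in tool.get("parameters", [])
            if param.get("required")
        ]
    return summary


summary = required_parameters(json.loads(manifest_text))
for tool_name in sorted(summary):
    print(tool_name, summary[tool_name])
```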

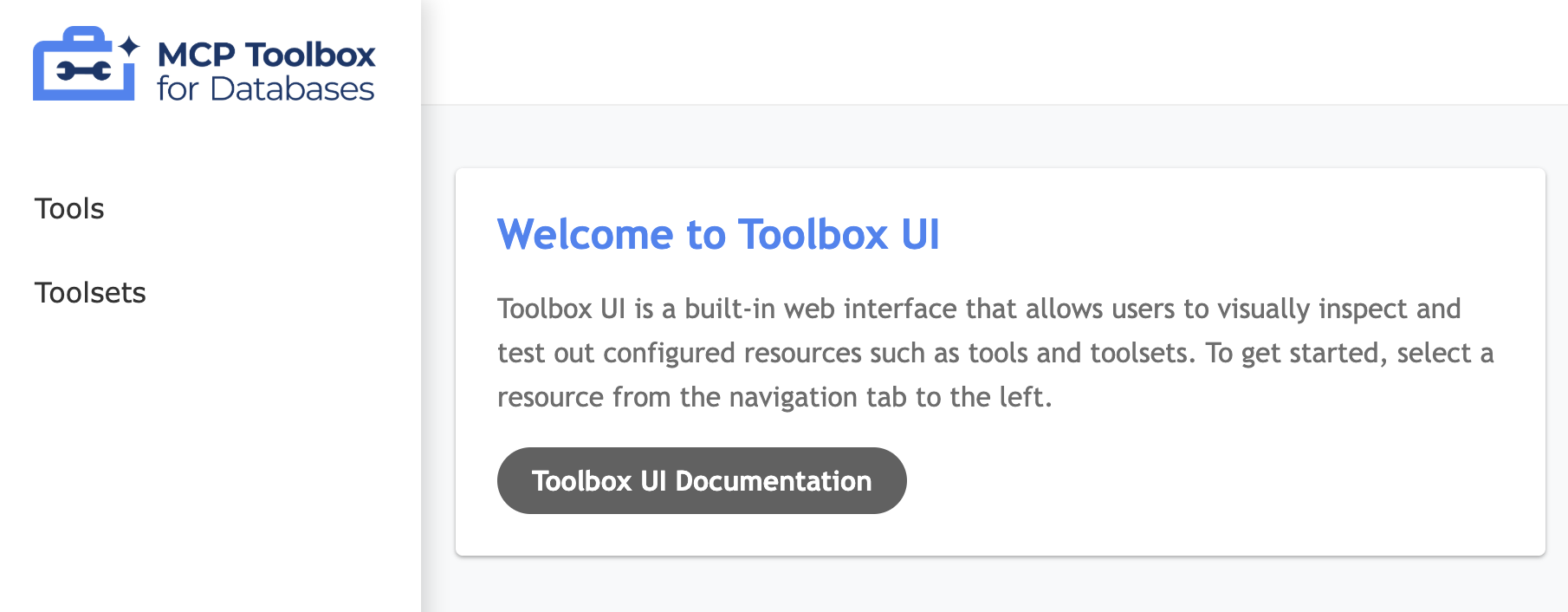

Test the Tools via MCP Toolbox for Databases UI

The Toolbox provides a visual interface (Toolbox UI) to directly interact with tools by modifying parameters, managing headers, and executing calls, all within a simple web UI.

If you would like to test that out, you can run the previous command that we used to launch the Toolbox Server with a --ui option.

To do that, shut down any instance of the MCP Toolbox for Databases Server that you may have running and run the following command:

./toolbox --tools-file "tools.yaml" --ui

Ideally you should see an output that the Server has been able to connect to our data sources and has loaded the toolset and tools. A sample output is given below and you will notice that it will mention that the Toolbox UI is up and running.

2025-12-17T04:03:56.582589201Z INFO "Initialized 1 sources: my-cloud-sql-source"

2025-12-17T04:03:56.582724914Z INFO "Initialized 0 authServices: "

2025-12-17T04:03:56.582772914Z INFO "Initialized 2 tools: search-hotels-by-name, search-hotels-by-location"

2025-12-17T04:03:56.582803723Z INFO "Initialized 2 toolsets: default, my_first_toolset"

2025-12-17T04:03:56.582815576Z INFO "Initialized 0 prompts: "

2025-12-17T04:03:56.582832887Z INFO "Initialized 1 promptsets: default"

2025-12-17T04:03:56.582870495Z WARN "wildcard (`*`) allows all origin to access the resource and is not secure. Use it with cautious for public, non-sensitive data, or during local development. Recommended to use `--allowed-origins` flag to prevent DNS rebinding attacks"

2025-12-17T04:03:56.588485105Z INFO "Server ready to serve!"

2025-12-17T04:03:56.588520956Z INFO "Toolbox UI is up and running at: http://127.0.0.1:5000/ui"

Click on the UI URL and ensure that you have /ui at the end of the URL (if you are running this in Cloud Shell, the browser redirection drops the trailing /ui). This will display a UI as shown below:

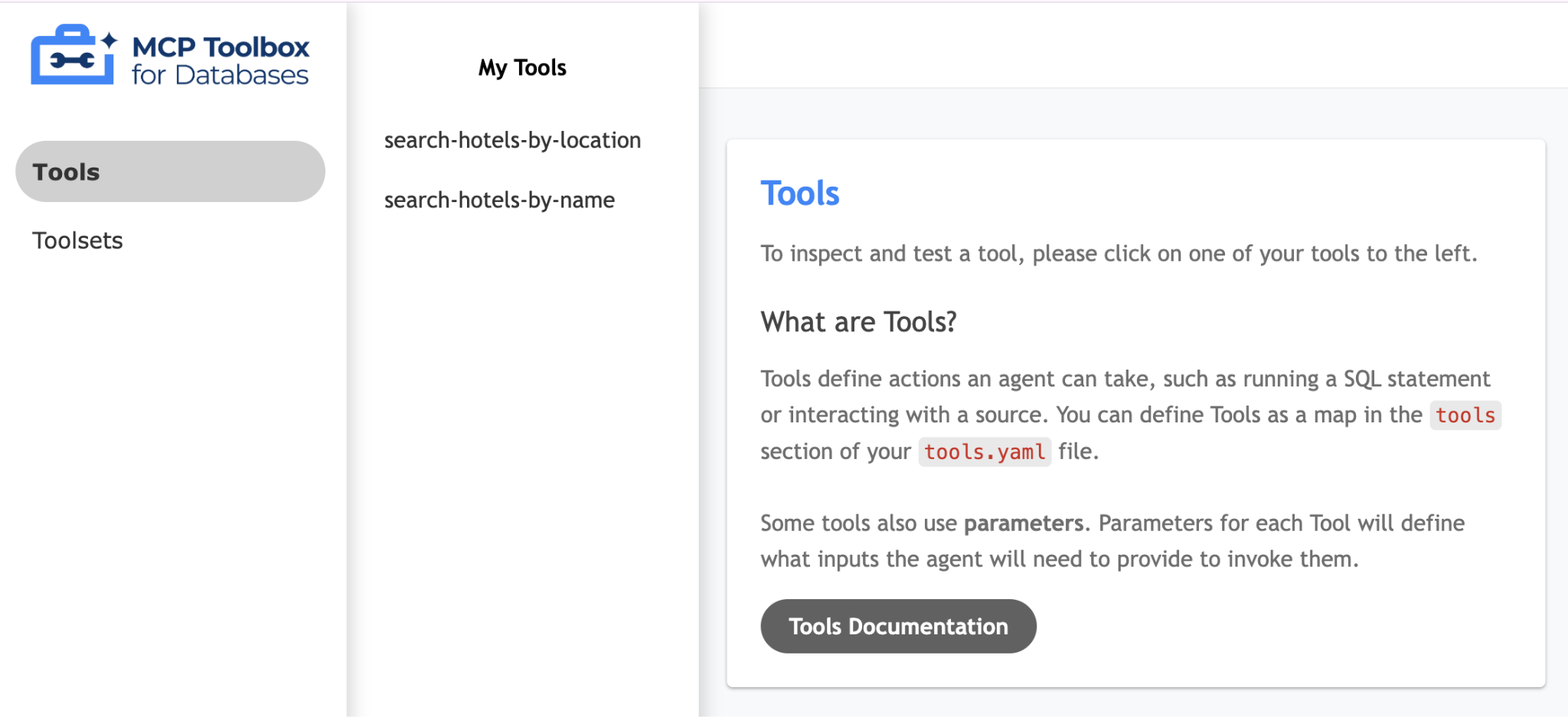

Click on the Tools option on the left to view the tools that have been configured. In our case, there should be two of them, search-hotels-by-name and search-hotels-by-location, as shown below:

Simply click on one of the tools (search-hotels-by-location) and it should bring up a page for you to test out the tool by providing required parameter values and then click on Run Tool to see the result. A sample run is shown below:

The MCP Toolbox for Databases also describes a Pythonic way for you to validate and test out the tools, which is documented here.

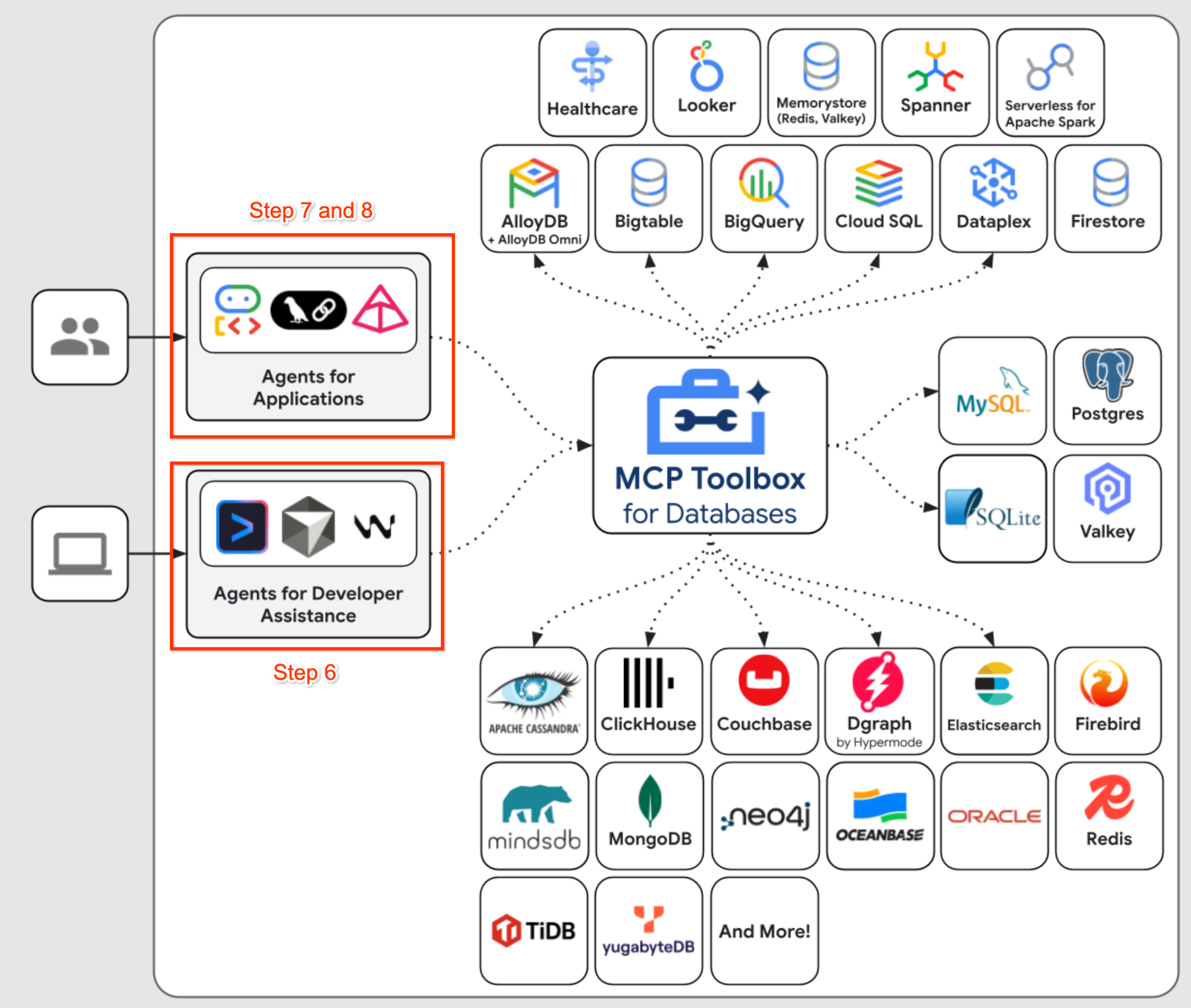

If we revisit the diagram from earlier (as shown below), we have now completed setting up the database and the MCP Server and have two paths in front of us:

- To understand how you can configure the MCP Server in an AI-assisted terminal or IDE, go to Step 6. This covers how we integrate our MCP Toolbox Server into Gemini CLI.

- To understand how to use the Agent Development Kit (ADK) with Python to write your own agents that use the MCP Toolbox Server as a tool to answer questions related to the dataset, go to Steps 7 and 8.

6. Integrating MCP Toolbox in Gemini CLI

Gemini CLI is an open-source AI agent that brings the power of Gemini directly into your terminal. You can use it for both coding and non-coding tasks. It comes integrated with various tools, plus support for MCP servers.

Since we have a working MCP Server, our goal in this section is going to be to configure the MCP Toolbox for Databases Server in Gemini CLI and then use Gemini CLI to talk to our data.

Our first step will be to confirm if you have the Toolbox up and running in one of the Cloud Shell terminals. Assuming that you are running it on the default port 5000, the MCP Server interface is available at the following endpoint: http://localhost:5000/mcp.

Open a new terminal and create a folder named my-gemini-cli-project as follows. Navigate to the my-gemini-cli-project folder too.

mkdir my-gemini-cli-project

cd my-gemini-cli-project

Give the following command to add the MCP Server to the list of MCP Servers configured in Gemini CLI.

gemini mcp add --scope="project" --transport="http" "MCPToolbox" "http://localhost:5000/mcp"

You can check the current list of MCP Servers configured in Gemini CLI via the following command:

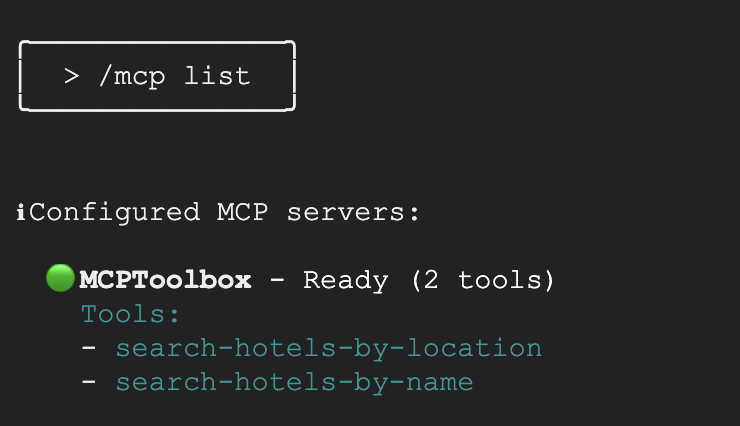

gemini mcp list

You should ideally see the MCPToolbox that we configured with a green tick next to it, which indicates that the Gemini CLI has been able to connect to the MCP Server.

Configured MCP servers:

✓ MCPToolbox: http://localhost:5000/mcp (http) - Connected

From the same terminal, ensure that you are in the my-gemini-cli-project folder. Launch Gemini CLI via the command gemini.

This will bring up the Gemini CLI interface and you will see that it now has 1 MCP Server configured. You can use the /mcp list command to see the list of MCP Servers and the tools. For example, here is a sample output:

You can now give any of the prompts:

- Which hotels are there in Basel?

- Tell me more about the Hyatt Regency?

You will find that the above queries will result in Gemini CLI selecting the appropriate tool from the MCPToolbox. It will ask you for your permission to execute the tool. Give it the necessary permission and you will notice that the results are returned from the database.
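Behind the scenes, an MCP client talks to the server with JSON-RPC 2.0 messages. The following is a minimal sketch of what the tool discovery and tool invocation messages could look like, assuming the Toolbox endpoint follows the standard MCP method names tools/list and tools/call. It only builds the messages: it does not contact the server, and it leaves out the initialization handshake that a real client performs first. The helper name jsonrpc_request is hypothetical.

```python
import itertools
import json
from typing import Optional

# Simple counter for request ids; each JSON-RPC request needs its own id.
_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request message as a plain dictionary."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invoke one of the tools configured in tools.yaml with its parameter.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search-hotels-by-location", "arguments": {"location": "Basel"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

This is roughly what Gemini CLI does for you when it asks for permission to execute a tool: it has chosen the tool name and filled in the arguments from your prompt.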

7. Writing our Agent with Agent Development Kit (ADK)

Install the Agent Development Kit (ADK)

Open a new terminal tab in Cloud Shell and create a folder named my-agents as follows. Navigate to the my-agents folder too.

mkdir my-agents

cd my-agents

Now, let's create a virtual Python environment using venv as follows:

python -m venv .venv

Activate the virtual environment as follows:

source .venv/bin/activate

Install the ADK and the MCP Toolbox for Databases client packages as follows:

pip install google-adk toolbox-core

You will now be able to invoke the adk utility as follows.

adk

It will show you a list of commands.

$ adk

Usage: adk [OPTIONS] COMMAND [ARGS]...

Agent Development Kit CLI tools.

Options:

--version Show the version and exit.

--help Show this message and exit.

Commands:

api_server Starts a FastAPI server for agents.

conformance Conformance testing tools for ADK.

create Creates a new app in the current folder with prepopulated agent template.

deploy Deploys agent to hosted environments.

eval Evaluates an agent given the eval sets.

eval_set Manage Eval Sets.

run Runs an interactive CLI for a certain agent.

web Starts a FastAPI server with Web UI for agents.

Creating our first Agent Application

We are now going to use adk to create a scaffolding for our Hotel Agent Application via the adk create command with an app name (hotel_agent_app) as given below.

adk create hotel_agent_app

Follow the steps and select the following:

- Choose the Gemini model for the root agent.

- Choose Vertex AI for the backend.

- Your default Google Cloud project ID and region will be displayed. Accept the defaults.

Choose a model for the root agent:

1. gemini-2.5-flash

2. Other models (fill later)

Choose model (1, 2): 1

1. Google AI

2. Vertex AI

Choose a backend (1, 2): 2

You need an existing Google Cloud account and project, check out this link for details:

https://google.github.io/adk-docs/get-started/quickstart/#gemini---google-cloud-vertex-ai

Enter Google Cloud project ID [YOUR_PROJECT_ID]:

Enter Google Cloud region [us-central1]:

Agent created in <YOUR_HOME_FOLDER>/my-agents/hotel_agent_app:

- .env

- __init__.py

- agent.py

Observe the folder in which a default template and required files for the Agent have been created. You can see the files via the ls -al command in the terminal in the <YOUR_HOME_FOLDER>/my-agents/hotel_agent_app directory.

First up is the .env file. This file is already created as mentioned earlier and you can just view the contents of the file that are shown below:

GOOGLE_GENAI_USE_VERTEXAI=1

GOOGLE_CLOUD_PROJECT=YOUR_GOOGLE_PROJECT_ID

GOOGLE_CLOUD_LOCATION=YOUR_GOOGLE_PROJECT_REGION

The values indicate that we will be using Gemini via Vertex AI along with the respective values for the Google Cloud Project Id and location.
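The .env file is a simple list of KEY=VALUE lines. You do not need to parse it yourself for this codelab, but if you want to see how such a file maps to settings, the following is a minimal sketch. The parse_env helper is hypothetical and handles only plain KEY=VALUE lines, blank lines, and # comments.

```python
def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


# Same placeholder contents as the .env file shown above.
sample = """\
GOOGLE_GENAI_USE_VERTEXAI=1
GOOGLE_CLOUD_PROJECT=YOUR_GOOGLE_PROJECT_ID
GOOGLE_CLOUD_LOCATION=YOUR_GOOGLE_PROJECT_REGION
"""

settings = parse_env(sample)
print(settings["GOOGLE_GENAI_USE_VERTEXAI"])  # prints 1
```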

Then we have the __init__.py file that marks the folder as a module and has a single statement that imports the agent from the agent.py file.

from . import agent

Finally, let's take a look at the agent.py file. The contents are shown below:

from google.adk.agents import Agent

root_agent = Agent(

model='gemini-2.5-flash',

name='root_agent',

description='A helpful assistant for user questions.',

instruction='Answer user questions to the best of your knowledge',

)

This is the simplest Agent that you can write with ADK. From the ADK documentation page, an Agent is a self-contained execution unit designed to act autonomously to achieve specific goals. Agents can perform tasks, interact with users, utilize external tools, and coordinate with other agents.

Specifically, an LlmAgent, commonly aliased as Agent, uses a Large Language Model (LLM) as its core engine to understand natural language, reason, plan, generate responses, and dynamically decide how to proceed or which tools to use, making it ideal for flexible, language-centric tasks. Learn more about LLM Agents here.

Let's modify the code for the agent.py as follows:

from google.adk.agents import Agent

root_agent = Agent(

model='gemini-2.5-flash',

name='hotel_agent',

description='A helpful assistant that answers questions about a specific city.',

instruction='Answer user questions about a specific city to the best of your knowledge. Do not answer questions outside of this.',

)

Test the Agent App locally

From the existing terminal window, run the following command. Ensure that you are in the parent folder (my-agents) containing the hotel_agent_app folder.

adk web

A sample execution is shown below:

INFO: Started server process [1478]

INFO: Waiting for application startup.

+-----------------------------------------------------------------------------+

| ADK Web Server started |

| |

| For local testing, access at http://127.0.0.1:8000. |

+-----------------------------------------------------------------------------+

INFO: Application startup complete.

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

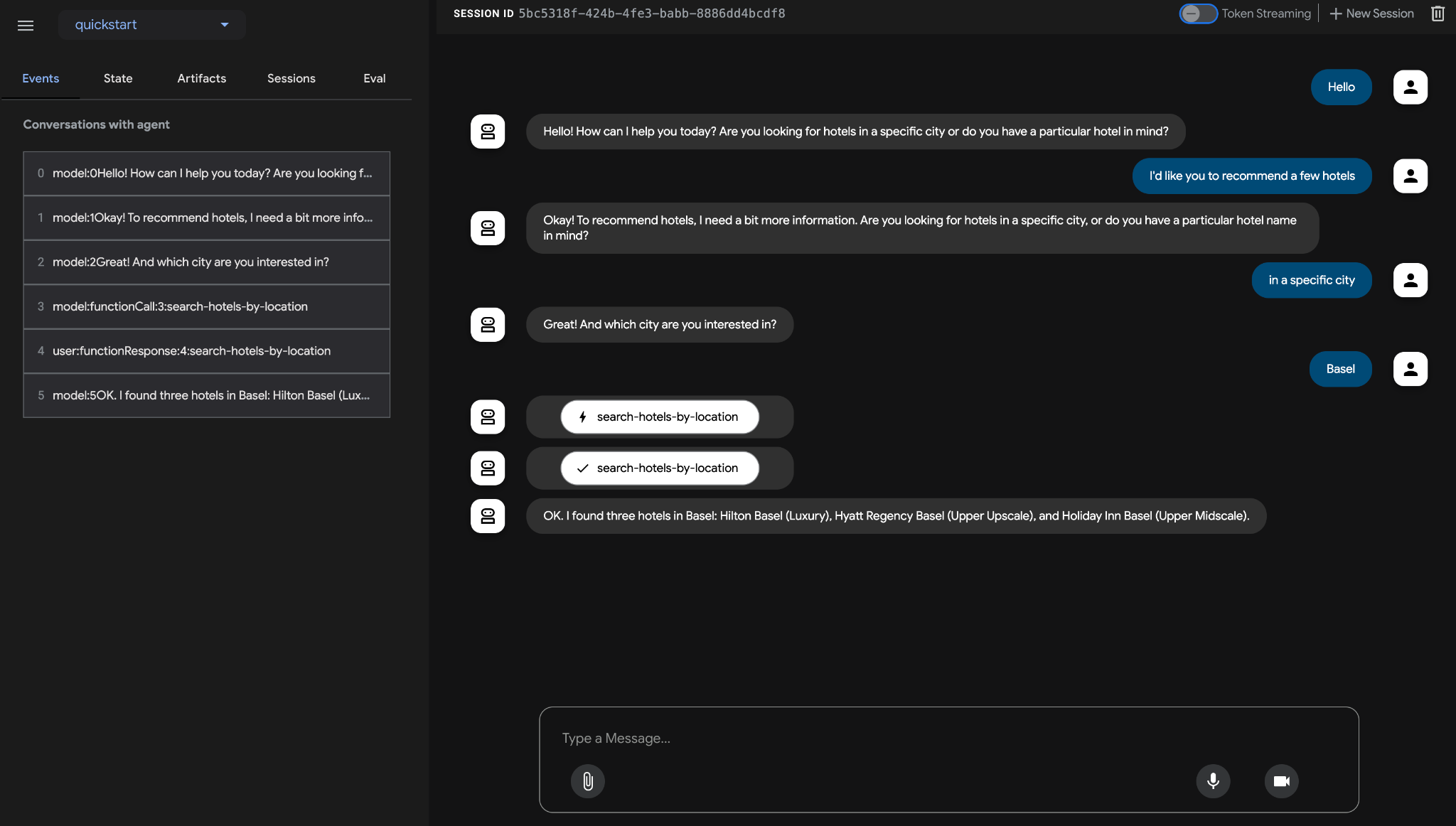

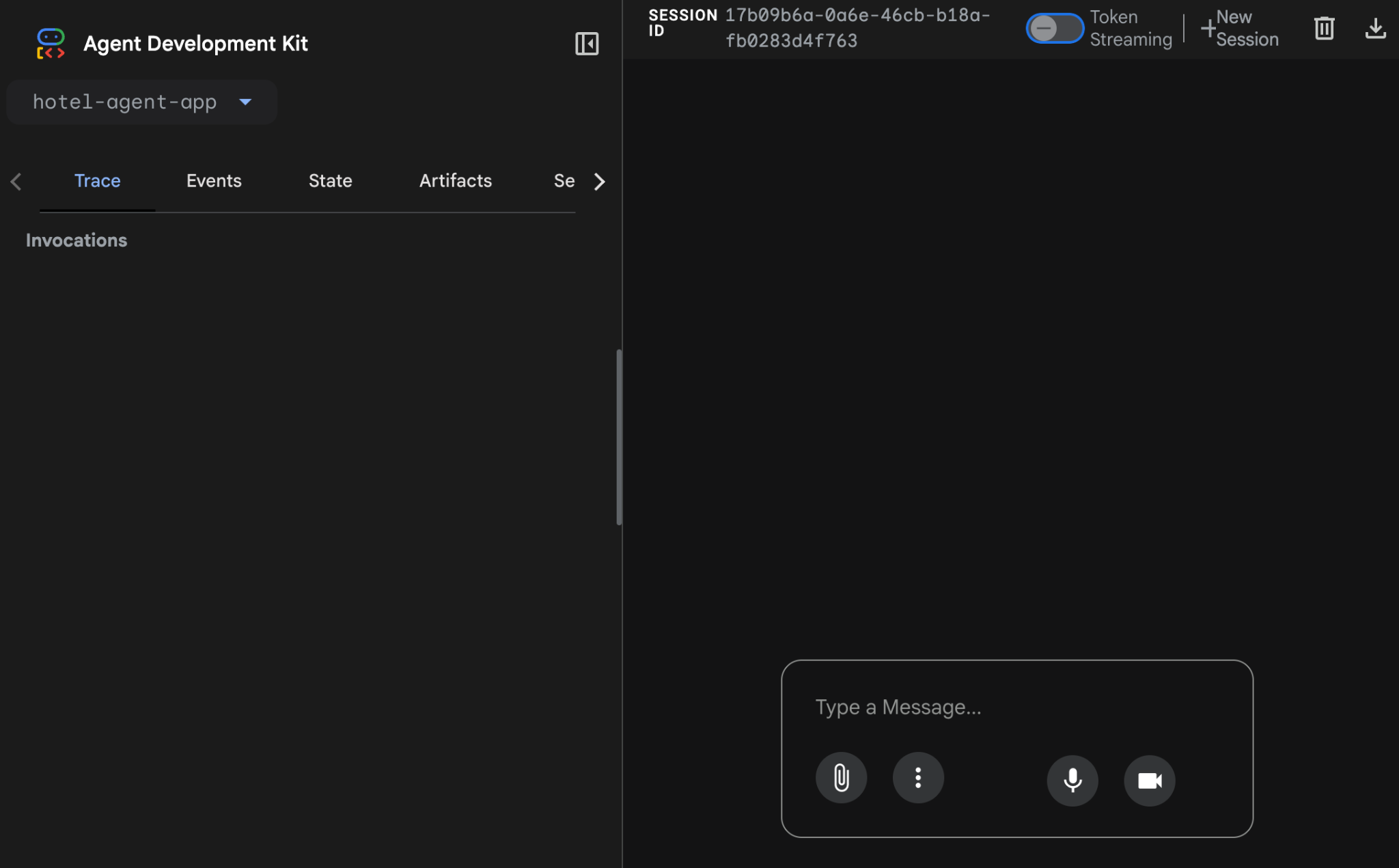

Click on the last link and it should bring up a web console to test out the Agent. You should see the following launched in the browser as shown below:

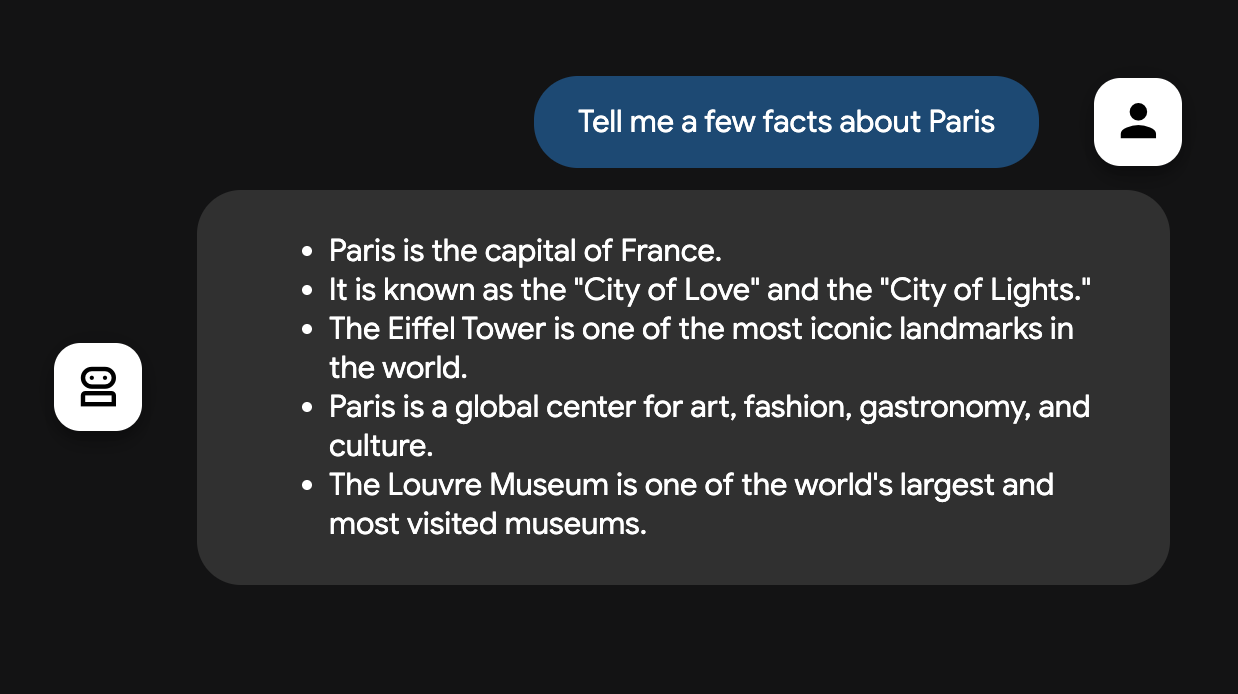

Notice that in the top left, the hotel_agent_app has been identified. You can now start conversing with the Agent. Provide a few prompts inquiring about cities. An example conversation is shown below:

You can shut down the process running in the Cloud Shell terminal (Ctrl-C).

An alternate way of testing out the Agent is via the adk run command as given below from the my-agents folder.

adk run hotel_agent_app

Try out the command and you can converse with the Agent via the command line (terminal). Type exit to close the conversation.

8. Connecting our Agent to Tools

Now that we know how to write an Agent and test it locally, we are going to connect this Agent to Tools. In the context of ADK, a Tool represents a specific capability provided to an AI agent, enabling it to perform actions and interact with the world beyond its core text generation and reasoning abilities.

In our case, we are going to equip our Agent now with the Tools that we have configured in the MCP Toolbox for Databases.

Modify the agent.py file with the following code. Notice that we are using the default port 5000 in the code, but if you are using an alternate port number, please use that.

from google.adk.agents import Agent

from toolbox_core import ToolboxSyncClient

toolbox = ToolboxSyncClient("http://127.0.0.1:5000")

# Load single tool

# tools = toolbox.load_tool('search-hotels-by-location')

# Load all the tools

tools = toolbox.load_toolset('my_first_toolset')

root_agent = Agent(

name="hotel_agent",

model="gemini-2.5-flash",

description=(

"Agent to answer questions about hotels in a city or hotels by name."

),

instruction=(

"You are a helpful agent who can answer user questions about the hotels in a specific city or hotels by name. Use the tools to answer the question"

),

tools=tools,

)

We can now test the Agent that will fetch real data from our PostgreSQL database that has been configured with the MCP Toolbox for Databases.

To do this, follow this sequence:

In one terminal of Cloud Shell, launch the MCP Toolbox for Databases. You might already have it running locally on port 5000 as we tested earlier. If not, run the following command (from the mcp-toolbox folder) to start the server:

./toolbox --tools-file "tools.yaml"

Ideally you should see an output that the Server has been able to connect to our data sources and has loaded the toolset and tools.

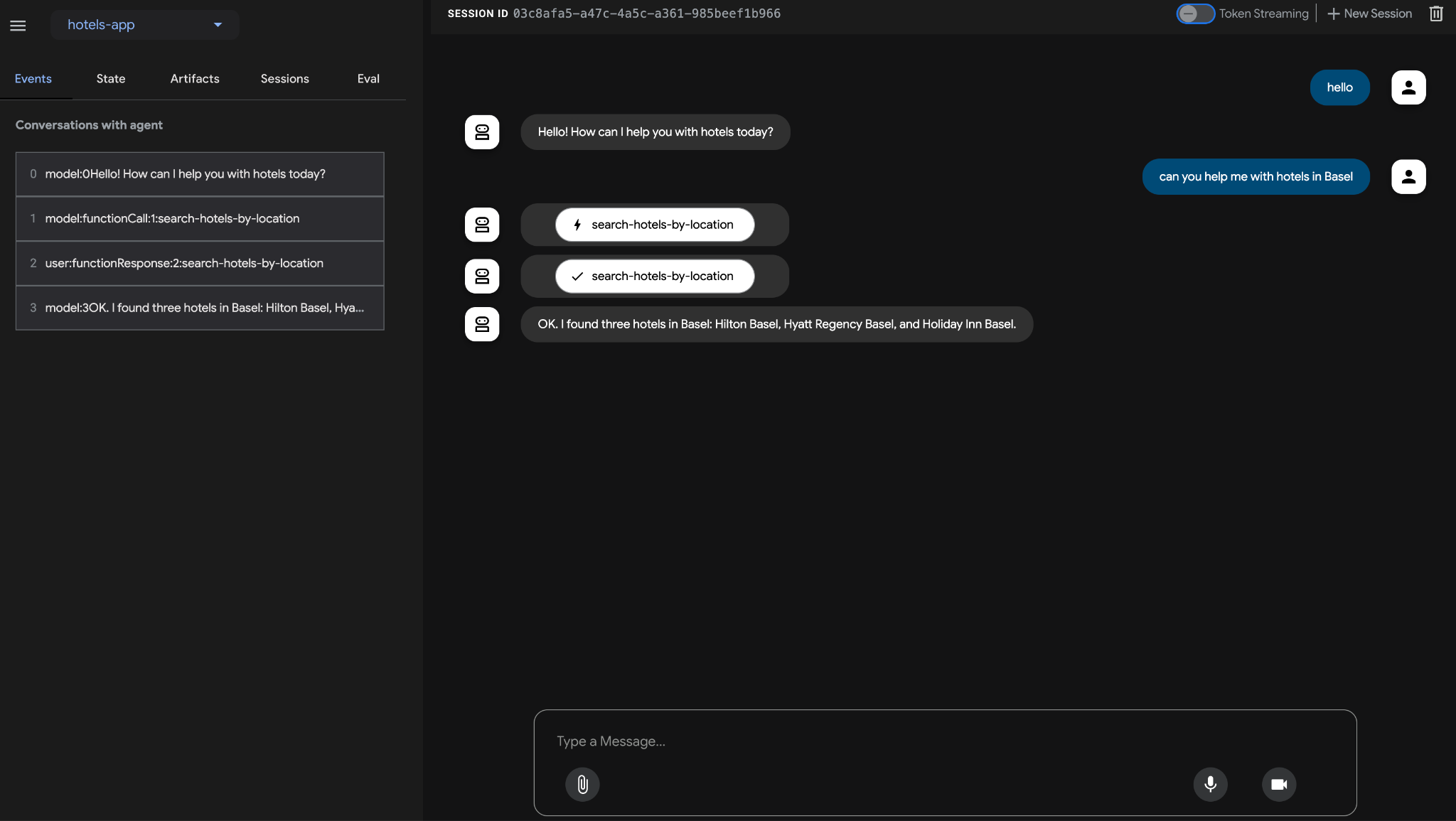

Once the MCP server has started successfully, in another terminal, launch the Agent as we have done earlier via the adk run (from the my-agents folder) command shown below. You could also use the adk web command if you like.

$ adk run hotel_agent_app/

...

Running agent hotel_agent, type exit to exit.

[user]: what can you do for me ?

[hotel_agent]: I can help you find hotels by location or by name.

[user]: I would like to search for hotels?

[hotel_agent]: Great! Do you want to search by location or by hotel name?

[user]: I'd like to search in Basel

[hotel_agent]: Here are some hotels in Basel:

* Holiday Inn Basel (Upper Midscale)

* Hyatt Regency Basel (Upper Upscale)

* Hilton Basel (Luxury)

[user]:

Notice that the Agent is now utilizing the two tools that we have configured in the MCP Toolbox for Databases (search-hotels-by-name and search-hotels-by-location) and providing the correct options to us. It is then able to seamlessly retrieve the data from the PostgreSQL instance database and format the response accordingly.
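The order of the hotels in the reply follows the CASE expression in the search-hotels-by-location statement, which ranks the price tiers from least to most expensive. The following is a minimal Python sketch of the same ranking, for illustration only; in the codelab the database does the sorting. The names TIER_RANK and sort_by_price_tier are hypothetical.

```python
# Same ranks as the CASE expression in tools.yaml; unknown tiers go last.
TIER_RANK = {
    "Midscale": 1,
    "Upper Midscale": 2,
    "Upscale": 3,
    "Upper Upscale": 4,
    "Luxury": 5,
}


def sort_by_price_tier(hotels: list) -> list:
    """Sort (name, price_tier) pairs from least to most expensive tier."""
    return sorted(hotels, key=lambda hotel: TIER_RANK.get(hotel[1], 99))


# The three Basel rows from the sample data, in id order.
basel = [
    ("Hilton Basel", "Luxury"),
    ("Hyatt Regency Basel", "Upper Upscale"),
    ("Holiday Inn Basel", "Upper Midscale"),
]

for name, tier in sort_by_price_tier(basel):
    print(f"{name} ({tier})")
```

Running this prints Holiday Inn Basel, Hyatt Regency Basel, and Hilton Basel in that order, which matches the agent's reply.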

This completes the local development and testing of our Hotel Agent that we built using the Agent Development Kit (ADK) and which was powered by tools that we configured in the MCP Toolbox for Databases.

9. (Optional) Deploying MCP Toolbox for Databases and Agent to Cloud Run

In the previous section, we used Cloud Shell terminal to launch the MCP Toolbox server and tested the tools with the Agent. This was running locally in the Cloud Shell environment.

You have the option of deploying both MCP Toolbox server and the Agent to Google Cloud services that can host these applications for us.

Hosting MCP Toolbox server on Cloud Run

First up, we can start with the MCP Toolbox server and host it on Cloud Run. This gives us a public endpoint that we can integrate with any other application, including the Agent applications. The instructions for hosting this on Cloud Run are given here. We shall go through the key steps now.

Launch a new Cloud Shell Terminal or use an existing Cloud Shell Terminal. Go to the mcp-toolbox folder, in which the toolbox binary and tools.yaml are present.

Run the following commands (an explanation is provided for each command):

Set the PROJECT_ID variable to point to your Google Cloud Project Id.

export PROJECT_ID="YOUR_GOOGLE_CLOUD_PROJECT_ID"

Next, verify that the following Google Cloud services are enabled in the project.

gcloud services enable run.googleapis.com \
    cloudbuild.googleapis.com \
    artifactregistry.googleapis.com \
    iam.googleapis.com \
    secretmanager.googleapis.com

Let's create a separate service account that will be acting as the identity for the Toolbox service that we will be deploying on Google Cloud Run. We are also ensuring that this service account has the correct roles i.e. ability to access Secret Manager and talk to Cloud SQL.

gcloud iam service-accounts create toolbox-identity

gcloud projects add-iam-policy-binding $PROJECT_ID \
    --member serviceAccount:toolbox-identity@$PROJECT_ID.iam.gserviceaccount.com \
    --role roles/secretmanager.secretAccessor

gcloud projects add-iam-policy-binding $PROJECT_ID \
    --member serviceAccount:toolbox-identity@$PROJECT_ID.iam.gserviceaccount.com \
    --role roles/cloudsql.client

We will upload the tools.yaml file as a secret and since we have to install the Toolbox in Cloud Run, we are going to use the latest Container image for the toolbox and set that in the IMAGE variable.

gcloud secrets create tools --data-file=tools.yaml

export IMAGE=us-central1-docker.pkg.dev/database-toolbox/toolbox/toolbox:latest

The last step is the familiar deployment command to Cloud Run:

gcloud run deploy toolbox \
    --image $IMAGE \
    --service-account toolbox-identity \
    --region us-central1 \
    --set-secrets "/app/tools.yaml=tools:latest" \
    --args="--tools-file=/app/tools.yaml","--address=0.0.0.0","--port=8080" \
    --allow-unauthenticated

This should start the process of deploying the Toolbox Server with our configured tools.yaml to Cloud Run. On successful deployment, you should see a message similar to the following:

Deploying container to Cloud Run service [toolbox] in project [YOUR_PROJECT_ID] region [us-central1]

OK Deploying new service... Done.

OK Creating Revision...

OK Routing traffic...

OK Setting IAM Policy...

Done.

Service [toolbox] revision [toolbox-00001-zsk] has been deployed and is serving 100 percent of traffic.

Service URL: https://toolbox-<SOME_ID>.us-central1.run.app

You can now visit the Service URL listed above in the browser. It should display the "Hello World" message that we saw earlier. You can also append /api/toolset to the Service URL to see the tools that are available:

SERVICE_URL/api/toolset
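The same check can be done from a script instead of the browser. The sketch below uses only the Python standard library. The function names are hypothetical, and it assumes that the /api/toolset endpoint returns a JSON document and that the service allows unauthenticated requests, as deployed above.

```python
import json
import urllib.request
from typing import Any


def toolset_url(service_url: str) -> str:
    """Build the toolset listing URL from the Cloud Run Service URL."""
    return service_url.rstrip("/") + "/api/toolset"


def fetch_toolset(service_url: str, timeout: float = 10.0) -> Any:
    """Fetch and decode the toolset listing.

    Assumption: the endpoint responds with JSON; the exact shape of the
    response is not specified here.
    """
    with urllib.request.urlopen(toolset_url(service_url), timeout=timeout) as response:
        return json.load(response)
```

For example, `print(fetch_toolset("https://toolbox-<SOME_ID>.us-central1.run.app"))`, with the placeholder replaced by your own Service URL, should print the tools defined in tools.yaml.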

You can also visit Cloud Run from the Google Cloud console and you will see the Toolbox service available in the list of services in Cloud Run.

Note: If you would still like to run your Hotel Agent locally while connecting to the newly deployed Cloud Run service, you only need to make one change in the my-agents/hotel_agent_app/agent.py file.

Instead of the following:

toolbox = ToolboxSyncClient("http://127.0.0.1:5000")

Change it to the Service URL of the Cloud Run service as given below:

toolbox = ToolboxSyncClient("CLOUD_RUN_SERVICE_URL")
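If you expect to switch between the local Toolbox and the Cloud Run service often, one option is to read the URL from an environment variable rather than editing the file each time. This is only a sketch: the variable name TOOLBOX_URL and the helper `resolve_toolbox_url` are hypothetical and not something the codelab or the Toolbox library defines.

```python
import os

# Address of the Toolbox server started locally earlier in the codelab.
LOCAL_TOOLBOX_URL = "http://127.0.0.1:5000"


def resolve_toolbox_url(env=None) -> str:
    """Return TOOLBOX_URL if it is set and non-empty, else the local address.

    TOOLBOX_URL is a hypothetical variable name chosen for this sketch.
    """
    env = os.environ if env is None else env
    url = env.get("TOOLBOX_URL", "").strip()
    return url.rstrip("/") if url else LOCAL_TOOLBOX_URL


# In agent.py, the client could then be created as:
#   toolbox = ToolboxSyncClient(resolve_toolbox_url())
```

With this approach, running `export TOOLBOX_URL=<your Service URL>` before `adk run` or `adk web` would point the local agent at Cloud Run. Note that the variable would also have to be set on any environment where the agent itself is deployed; otherwise the sketch falls back to the local address.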

Test out the Agent Application using adk run or adk web as we saw earlier.

Deploying Hotel Agent App on Cloud Run

The first step is to ensure that you have made the change in the my-agents/hotel_agent_app/agent.py as instructed above to point to the Toolbox service URL that is running on Cloud Run and not the local host.

In a new Cloud Shell Terminal or existing Terminal session, ensure that you are in the correct Python virtual environment that we set up earlier.

First up, let us create a requirements.txt file in the my-agents/hotel_agent_app folder as shown below:

google-adk

toolbox-core

Navigate to the my-agents folder and let's set the following environment variables first:

export GOOGLE_CLOUD_PROJECT=YOUR_GOOGLE_CLOUD_PROJECT_ID

export GOOGLE_CLOUD_LOCATION=us-central1

export AGENT_PATH="hotel_agent_app/"

export SERVICE_NAME="hotels-service"

export APP_NAME="hotels-app"

export GOOGLE_GENAI_USE_VERTEXAI=True
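A common slip at this point is to leave the YOUR_GOOGLE_CLOUD_PROJECT_ID placeholder unchanged or to miss one of the exports. The following sketch checks the variables before you deploy. The helper name `find_problems` is hypothetical, and treating any value that starts with "YOUR_" as a placeholder is an assumption made for this example.

```python
import os

# Variables exported in the step above.
REQUIRED_VARS = [
    "GOOGLE_CLOUD_PROJECT",
    "GOOGLE_CLOUD_LOCATION",
    "AGENT_PATH",
    "SERVICE_NAME",
    "APP_NAME",
    "GOOGLE_GENAI_USE_VERTEXAI",
]


def find_problems(env) -> list[str]:
    """List variables that are unset, empty, or still hold a placeholder."""
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name, "")
        if not value:
            problems.append(f"{name} is not set")
        elif value.startswith("YOUR_"):
            problems.append(f"{name} still holds the placeholder {value!r}")
    return problems
```

Running `print(find_problems(os.environ))` in the same terminal session should print an empty list when everything is set.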

Finally, let's deploy the Agent Application to Cloud Run via the adk deploy cloud_run command as given below. If you are asked to allow unauthenticated invocations to the service, please provide "y" as the value for now.

adk deploy cloud_run \
  --project=$GOOGLE_CLOUD_PROJECT \
  --region=$GOOGLE_CLOUD_LOCATION \
  --service_name=$SERVICE_NAME \
  --app_name=$APP_NAME \
  --with_ui \
  $AGENT_PATH

This will begin the process of deploying the Hotel Agent Application to Cloud Run. It will upload the sources, package them into a container image, push that image to the Artifact Registry, and then deploy the service on Cloud Run. This could take a few minutes, so please be patient.

You should see a message similar to the one below:

Start generating Cloud Run source files in /tmp/cloud_run_deploy_src/20250905_132636

Copying agent source code...

Copying agent source code completed.

Creating Dockerfile...

Creating Dockerfile complete: /tmp/cloud_run_deploy_src/20250905_132636/Dockerfile

Deploying to Cloud Run...

Building using Dockerfile and deploying container to Cloud Run service [hotels-service] in project [YOUR_PROJECT_ID] region [us-central1]

- Building and deploying... Uploading sources.

- Uploading sources...

. Building Container...

OK Building and deploying... Done.

OK Uploading sources...

OK Building Container... Logs are available at [https://console.cloud.google.com/cloud-build/builds;region=us-central1/d1f7e76b-0587-4bb6-b9c0-bb4360c07aa0?project=415458962931].

OK Creating Revision...

OK Routing traffic...

Done.

Service [hotels-service] revision [hotels-service-00003-hrl] has been deployed and is serving 100 percent of traffic.

Service URL: <YOUR_CLOUDRUN_APP_URL>

INFO: Display format: "none"

Cleaning up the temp folder: /tmp/cloud_run_deploy_src/20250905_132636

On successful deployment, you will be provided a value for the Service URL, which you can then access in the browser to view the same Web Application that allowed you to chat with the Hotel Agent, as we saw earlier in the local setup.

10. Cleanup

To avoid ongoing charges to your Google Cloud account, it's important to delete the resources we created during this codelab. We will delete the Cloud SQL instance and, if you deployed the Toolbox and Hotels App to Cloud Run, those services too.

Ensure that the following environment variables are set correctly, as per your project and region:

export PROJECT_ID="YOUR_PROJECT_ID"

export REGION="YOUR_REGION"

The following two commands delete the Cloud Run services that we have deployed:

gcloud run services delete toolbox --platform=managed --region=${REGION} --project=${PROJECT_ID} --quiet

gcloud run services delete hotels-service --platform=managed --region=${REGION} --project=${PROJECT_ID} --quiet

The following command deletes the Cloud SQL instance:

gcloud sql instances delete hoteldb-instance

11. Congratulations

Congratulations, you've successfully built an agent using the Agent Development Kit (ADK) that utilizes the MCP Toolbox for Databases.