1. Introduction

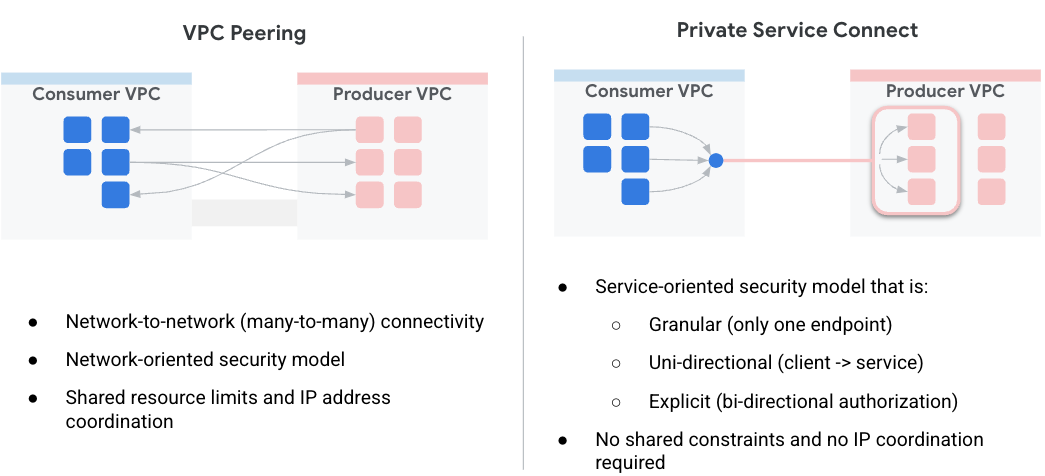

Private Service Connect (PSC) simplifies how services are consumed securely and privately. This model makes the network architecture much simpler by allowing service consumers to connect privately to service producers across organizations, and it eliminates the need for Virtual Private Cloud (VPC) peering. Figure 1 illustrates VPC peering and PSC attributes.

Figure 1.

As a service consumer, PSC gives you the flexibility to choose how to allocate your private IPs to services, while removing the burden of managing subnet ranges for producer VPCs. You can simply assign a virtual IP of your choice from your VPC to such a service by using Private Service Connect.

In this codelab, you're going to build a comprehensive Private Service Connect architecture that illustrates the use of PSC global access with MongoDB Atlas.

Global access allows clients to connect to Private Service Connect (PSC) endpoints across regional boundaries. This is useful for achieving high availability across managed services hosted in multiple regions, or for allowing clients to access a service that is not in the same region as the client.

2. Enabling Global Access

Global access is an optional feature that is configured on the consumer-side forwarding rule. The following command shows how it is configured:

gcloud beta compute forwarding-rules create psc-west \
    --region=us-west1 \
    --network=consumer-vpc \
    --address=psc-west-address \
    --target-service-attachment=projects/.../serviceAttachments/sa-west \
    --allow-psc-global-access

- The --allow-psc-global-access flag enables global access on a Private Service Connect endpoint.

- Global access allows the client to be in a different region from the Private Service Connect forwarding rule, but the forwarding rule must still be in the same region as the service attachment it is connected to.

- There is no configuration required on the producer's service attachment to enable global access. It is purely a consumer-side option.

Global access can also be toggled on or off at any time for existing endpoints. Enabling global access on an existing endpoint does not disrupt traffic on active connections. Global access is enabled on an existing forwarding rule with the following command:

gcloud beta compute forwarding-rules update psc-west --region=us-west1 --allow-psc-global-access

Disabling Global Access

Global access can also be disabled on existing forwarding rules with the --no-allow-psc-global-access flag. Note that any active inter-regional traffic will be terminated after this command is run.

gcloud beta compute forwarding-rules update psc-west --region=us-west1 --no-allow-psc-global-access

3. What you'll build

- A multi-region MongoDB Atlas cluster (topology shown in Figure 2) with one node in us-west1 and two nodes in us-west2.

- A consumer VPC and an associated VM to access the MongoDB cluster nodes in us-west1 and us-west2.

- A VPC with two subnets, one in us-west1 and one in us-west2, each with at least 64 free IP addresses (create the subnets with a prefix length of /26 or shorter).
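As a quick check of the sizing requirement above, the total number of addresses in an IPv4 range follows from the prefix length as 2^(32 - prefix). The sketch below is only illustrative; the function name addresses_in_prefix is hypothetical and not part of the codelab. Note that Google Cloud reserves a few addresses in every subnet, so the usable count is slightly lower than the raw size.

```shell
# Hypothetical helper: total IPv4 addresses in a CIDR range with the given prefix length.
addresses_in_prefix() {
  prefix="$1"
  # 2^(32 - prefix), computed with POSIX shell arithmetic
  echo $(( 1 << (32 - prefix) ))
}

addresses_in_prefix 26   # the /26 used for each PSC endpoint subnet; prints 64
addresses_in_prefix 29   # the /29 used for vm-subnet later in the codelab; prints 8
```

A shorter prefix (for example /25) yields a larger range, which is why "/26 or shorter" satisfies the requirement.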

The MongoDB client will be installed on vm1 in the consumer VPC. When the primary node fails in us-west1, the client will be able to read and write through the new primary node in us-west2.

Figure 2.

What you'll learn

- How to create a VPC and subnets deployed in two regions

- How to deploy a multi-region MongoDB Atlas cluster

- How to create a private endpoint

- How to connect to MongoDB

- How to perform and validate a multi-region MongoDB failover

What you'll need

- Google Cloud Project

- Provide a /26 subnet per region

- Project owner or Organization owner access to MongoDB Atlas to create a MongoDB cluster with cluster tier M10 or higher. (Please use GETATLAS to get free credits for running the PoV)

4. Before you begin

Update the project to support the codelab

This codelab uses $variables to simplify gcloud configuration in Cloud Shell.

Inside Cloud Shell, perform the following:

gcloud config list project

gcloud config set project [YOUR-PROJECT-NAME]

projectname=YOUR-PROJECT-NAME

echo $projectname

5. Consumer Setup

Create the consumer VPC

Inside Cloud Shell, perform the following:

gcloud compute networks create consumer-vpc --project=$projectname --subnet-mode=custom

Create the consumer subnets

Inside Cloud Shell, perform the following:

gcloud compute networks subnets create vm-subnet --project=$projectname --range=10.10.10.0/29 --network=consumer-vpc --region=us-west1

Inside Cloud Shell, create the consumer endpoint subnet for us-west1:

gcloud compute networks subnets create psc-endpoint-us-west1 --project=$projectname --range=192.168.10.0/26 --network=consumer-vpc --region=us-west1

Inside Cloud Shell, create the consumer endpoint subnet for us-west2:

gcloud compute networks subnets create psc-endpoint-us-west2 --project=$projectname --range=172.16.10.0/26 --network=consumer-vpc --region=us-west2

Cloud Router and NAT configuration

Cloud NAT is used in the codelab for software package installation since the VM instances do not have an external IP address.

Inside Cloud Shell, create the Cloud Router.

gcloud compute routers create consumer-cr --network consumer-vpc --region us-west1

Inside Cloud Shell, create the NAT gateway.

gcloud compute routers nats create consumer-nat --router=consumer-cr --auto-allocate-nat-external-ips --nat-all-subnet-ip-ranges --region us-west1

Instance vm1 configuration

In the following section, you'll create the Compute Engine instance, vm1.

Inside Cloud Shell, create instance vm1.

gcloud compute instances create vm1 \
    --project=$projectname \
    --zone=us-west1-a \
    --machine-type=e2-micro \
    --network-interface=subnet=vm-subnet,no-address \
    --maintenance-policy=MIGRATE \
    --provisioning-model=STANDARD \
    --create-disk=auto-delete=yes,boot=yes,device-name=vm1,image=projects/ubuntu-os-cloud/global/images/ubuntu-2004-focal-v20230213,mode=rw,size=10,type=projects/$projectname/zones/us-west1-a/diskTypes/pd-balanced \
    --metadata startup-script="#! /bin/bash
sudo apt-get update
sudo apt-get install tcpdump -y
sudo apt-get install dnsutils -y"

To allow IAP to connect to your VM instances, create a firewall rule that:

- Applies to all VM instances that you want to be accessible by using IAP.

- Allows ingress traffic from the IP range 35.235.240.0/20. This range contains all IP addresses that IAP uses for TCP forwarding.

Inside Cloud Shell, create the IAP firewall rule.

gcloud compute firewall-rules create ssh-iap-consumer-vpc \
    --network consumer-vpc \
    --allow tcp:22 \
    --source-ranges=35.235.240.0/20
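To make the source range in the rule above concrete, the following sketch tests whether a given IPv4 address falls inside a CIDR block. The helper names ip_to_int and in_cidr are hypothetical and exist only for this illustration; the sample addresses were picked to sit just inside and just outside 35.235.240.0/20.

```shell
# Hypothetical helpers (not part of the codelab): test whether an IPv4
# address falls inside a CIDR range, using POSIX shell arithmetic.
ip_to_int() {
  old_ifs="$IFS"
  IFS=.
  set -- $1            # split the dotted quad into four positional parameters
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")     # network part before the slash
  bits="${2#*/}"                 # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

for ip in 35.235.240.1 35.235.255.255 35.236.0.1; do
  if in_cidr "$ip" 35.235.240.0/20; then
    echo "$ip is inside the IAP range"
  else
    echo "$ip is outside the IAP range"
  fi
done
```

A /20 fixes the first 20 bits of the address, so the range spans 35.235.240.0 through 35.235.255.255.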

6. Create the multi-region MongoDB Atlas cluster

- You need to set up an Atlas cluster before starting the PSC setup. You can subscribe to MongoDB Atlas in one of two ways:

- Through the Google Cloud Marketplace if you have a Google Cloud account. Refer to the documentation to set up your subscription.

- With the Atlas registration page.

- Once subscribed to Atlas, click the Build a Database button.

- Create new cluster → Dedicated

- Cloud provider & region → Google Cloud

- Multi-cloud, multi-region & workload isolation → Selected (blue check)

- Electable nodes → us-west1 (1 node), us-west2 (2 nodes)

- Cluster tier → M10; leave all other settings as default

- Cluster name → psc-mongodb-uswest1-uswest2

- Select → Create Cluster

- Database creation takes 7-10 minutes

View of the cluster once deployed

7. Private endpoint creation for us-west1

- Log in to your Atlas account and navigate to your project.

Create a new user to allow read/write access to any database

Under Security → Database Access, select Add New Database User. In the following example, both the username and the password are set to codelab. Be sure to select the built-in role Read and write to any database.

- Under Security → Network Access, the IP Access List does not require an entry.

Prepare Private Endpoints in MongoDB Atlas

- Select Network access → Private Endpoints → Dedicated cluster → Add private endpoint

Cloud provider

- Select Google Cloud, then next

Service attachment

- Select the region, us-west1, then next

Endpoints

- To create a private service connect endpoint provide the following:

- Google Cloud project ID: Select 'show instructions' for details

- VPC name: consumer-vpc

- Subnet name: psc-endpoint-us-west1

- Private service connect endpoint prefix: psc-endpoint-us-west1

Set up endpoints

In the following section, Atlas generates a shell script that you should save as setup_psc.sh. Once saved, edit the shell script to allow PSC global access. You can perform these actions in Cloud Shell in your Google Cloud project.

- The shell script below is an example; your output will have different values.

- Copy the shell script from the MongoDB console and save its contents in the Google Cloud Cloud Shell terminal. Be sure to save the script as setup_psc.sh.

Example before update:

#!/bin/bash

gcloud config set project yourprojectname

for i in {0..49}

do

gcloud compute addresses create psc-endpoint-us-west1-ip-$i --region=us-west1 --subnet=psc-endpoint-us-west1

done

for i in {0..49}

do

if [ $(gcloud compute addresses describe psc-endpoint-us-west1-ip-$i --region=us-west1 --format="value(status)") != "RESERVED" ]; then

echo "psc-endpoint-us-west1-ip-$i is not RESERVED";

exit 1;

fi

done

for i in {0..49}

do

gcloud compute forwarding-rules create psc-endpoint-us-west1-$i --region=us-west1 --network=consumer-vpc --address=psc-endpoint-us-west1-ip-$i --target-service-attachment=projects/p-npwsmzelxznmaejhj2vn1q0q/regions/us-west1/serviceAttachments/sa-us-west1-61485ec2ae9d2e48568bf84f-$i

done

if [ $(gcloud compute forwarding-rules list --regions=us-west1 --format="csv[no-heading](name)" --filter="(name:psc-endpoint-us-west1*)" | wc -l) -gt 50 ]; then

echo "Project has too many forwarding rules that match prefix psc-endpoint-us-west1. Either delete the competing resources or choose another endpoint prefix."

exit 2;

fi

gcloud compute forwarding-rules list --regions=us-west1 --format="json(IPAddress,name)" --filter="name:(psc-endpoint-us-west1*)" > atlasEndpoints-psc-endpoint-us-west1.json

Update the shell script to support global access

Use nano or vi editor to identify and update the shell script with the syntax below:

gcloud beta compute forwarding-rules create psc-endpoint-us-west1-$i --region=us-west1 --network=consumer-vpc --address=psc-endpoint-us-west1-ip-$i --target-service-attachment=projects/p-npwsmzelxznmaejhj2vn1q0q/regions/us-west1/serviceAttachments/sa-us-west1-61485ec2ae9d2e48568bf84f-$i --allow-psc-global-access
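As an alternative to editing by hand, the same change can be sketched with sed. This is a minimal sketch, assuming the generated script contains the literal text "gcloud compute forwarding-rules create " on each line to be changed, as in the example above; the function name enable_global_access is hypothetical. It leaves the forwarding-rules list lines untouched and keeps a .bak copy of the original.

```shell
# Hypothetical helper: rewrite every "forwarding-rules create" line of an
# Atlas-generated script to use the beta track and enable PSC global access.
enable_global_access() {
  script="$1"
  # Keep a backup of the original, then write the patched version in its place
  cp "$script" "$script.bak"
  # 1st expression: append the flag to each matching create line
  # 2nd expression: switch those lines to "gcloud beta compute"
  sed -e '/gcloud compute forwarding-rules create /s/$/ --allow-psc-global-access/' \
      -e 's/gcloud compute forwarding-rules create /gcloud beta compute forwarding-rules create /' \
      "$script.bak" > "$script"
}

# Usage (run in the directory that holds the saved script):
#   enable_global_access setup_psc.sh
```

Because an already patched line no longer contains "gcloud compute forwarding-rules create", running the helper a second time does not add the flag twice.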

Example after update:

#!/bin/bash

gcloud config set project yourprojectname

for i in {0..49}

do

gcloud compute addresses create psc-endpoint-us-west1-ip-$i --region=us-west1 --subnet=psc-endpoint-us-west1

done

for i in {0..49}

do

if [ $(gcloud compute addresses describe psc-endpoint-us-west1-ip-$i --region=us-west1 --format="value(status)") != "RESERVED" ]; then

echo "psc-endpoint-us-west1-ip-$i is not RESERVED";

exit 1;

fi

done

for i in {0..49}

do

gcloud beta compute forwarding-rules create psc-endpoint-us-west1-$i --region=us-west1 --network=consumer-vpc --address=psc-endpoint-us-west1-ip-$i --target-service-attachment=projects/p-npwsmzelxznmaejhj2vn1q0q/regions/us-west1/serviceAttachments/sa-us-west1-61485ec2ae9d2e48568bf84f-$i --allow-psc-global-access

done

if [ $(gcloud compute forwarding-rules list --regions=us-west1 --format="csv[no-heading](name)" --filter="(name:psc-endpoint-us-west1*)" | wc -l) -gt 50 ]; then

echo "Project has too many forwarding rules that match prefix psc-endpoint-us-west1. Either delete the competing resources or choose another endpoint prefix."

exit 2;

fi

gcloud compute forwarding-rules list --regions=us-west1 --format="json(IPAddress,name)" --filter="name:(psc-endpoint-us-west1*)" > atlasEndpoints-psc-endpoint-us-west1.json

Run the shell script

Navigate to and execute the script setup_psc.sh. Once it completes, a file called atlasEndpoints-psc-endpoint-us-west1.json is created. The JSON file contains the list of IP addresses and Private Service Connect endpoint names required for the next step of the deployment.

Inside Cloud Shell perform the following:

bash setup_psc.sh

Once the script completes, use Cloud Shell editor to download atlasEndpoints-psc-endpoint-us-west1.json locally.
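Before uploading, it can be worth confirming that the JSON file lists the expected number of endpoints (the script above loops over 50 of them). The sketch below assumes the pretty-printed layout that gcloud's json format produces, with one key per line; the function name count_endpoints is hypothetical.

```shell
# Hypothetical helper: count the endpoint entries in an atlasEndpoints JSON file
# by counting lines that carry an "IPAddress" key (one per endpoint).
count_endpoints() {
  grep -c '"IPAddress"' "$1"
}

# Usage, after the script has run:
#   count_endpoints atlasEndpoints-psc-endpoint-us-west1.json
```

A count lower than expected suggests that some forwarding rules were not created and the script output should be reviewed.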

Upload the JSON file

Upload the previously saved JSON file atlasEndpoints-psc-endpoint-us-west1.json

Select Create

Validate Private Service Connect endpoints

In the MongoDB UI, navigate to your project, then Security → Network access → Private endpoint. On the Dedicated cluster tab, the endpoint takes about 10 minutes to transition to Available.

Available status

In the Google Cloud console, navigate to Network services → Private Service Connect and select the Connected endpoints tab, which displays the consumer endpoints transitioning from Pending to Accepted, example below:

8. Private endpoint creation for us-west2

- Log in to your Atlas account and navigate to your project.

Prepare Private Endpoints in MongoDB Atlas

- Select Network access → Private Endpoints → Dedicated cluster → Add private endpoint

Cloud provider

- Select Google Cloud, then next

Service attachment

- Select the region, us-west2, then next

Endpoints

- To create a private service connect endpoint provide the following:

- Google Cloud project ID: Select 'show instructions' for details

- VPC name: consumer-vpc

- Subnet name: psc-endpoint-us-west2

- Private service connect endpoint prefix: psc-endpoint-us-west2

Set up endpoints

In the following section, Atlas generates a shell script that you should save as setup_psc.sh. Once saved, edit the shell script to allow PSC global access. You can perform these actions in Cloud Shell in your Google Cloud project.

- The shell script below is an example; your output will have different values.

- Copy the shell script from the MongoDB console and save its contents in the Google Cloud Cloud Shell terminal. Be sure to save the script as setup_psc.sh.

Example before update:

#!/bin/bash

gcloud config set project yourprojectname

for i in {0..49}

do

gcloud compute addresses create psc-endpoint-us-west2-ip-$i --region=us-west2 --subnet=psc-endpoint-us-west2

done

for i in {0..49}

do

if [ $(gcloud compute addresses describe psc-endpoint-us-west2-ip-$i --region=us-west2 --format="value(status)") != "RESERVED" ]; then

echo "psc-endpoint-us-west2-ip-$i is not RESERVED";

exit 1;

fi

done

for i in {0..49}

do

gcloud compute forwarding-rules create psc-endpoint-us-west2-$i --region=us-west2 --network=consumer-vpc --address=psc-endpoint-us-west2-ip-$i --target-service-attachment=projects/p-npwsmzelxznmaejhj2vn1q0q/regions/us-west2/serviceAttachments/sa-us-west2-61485ec2ae9d2e48568bf84f-$i

done

if [ $(gcloud compute forwarding-rules list --regions=us-west2 --format="csv[no-heading](name)" --filter="(name:psc-endpoint-us-west2*)" | wc -l) -gt 50 ]; then

echo "Project has too many forwarding rules that match prefix psc-endpoint-us-west2. Either delete the competing resources or choose another endpoint prefix."

exit 2;

fi

gcloud compute forwarding-rules list --regions=us-west2 --format="json(IPAddress,name)" --filter="name:(psc-endpoint-us-west2*)" > atlasEndpoints-psc-endpoint-us-west2.json

Update the shell script to support global access

Use nano or vi editor to identify and update the shell script with the syntax below:

gcloud beta compute forwarding-rules create psc-endpoint-us-west2-$i --region=us-west2 --network=consumer-vpc --address=psc-endpoint-us-west2-ip-$i --target-service-attachment=projects/p-npwsmzelxznmaejhj2vn1q0q/regions/us-west2/serviceAttachments/sa-us-west2-61485ec2ae9d2e48568bf84f-$i --allow-psc-global-access

Example after update:

#!/bin/bash

gcloud config set project yourprojectname

for i in {0..49}

do

gcloud compute addresses create psc-endpoint-us-west2-ip-$i --region=us-west2 --subnet=psc-endpoint-us-west2

done

for i in {0..49}

do

if [ $(gcloud compute addresses describe psc-endpoint-us-west2-ip-$i --region=us-west2 --format="value(status)") != "RESERVED" ]; then

echo "psc-endpoint-us-west2-ip-$i is not RESERVED";

exit 1;

fi

done

for i in {0..49}

do

gcloud beta compute forwarding-rules create psc-endpoint-us-west2-$i --region=us-west2 --network=consumer-vpc --address=psc-endpoint-us-west2-ip-$i --target-service-attachment=projects/p-npwsmzelxznmaejhj2vn1q0q/regions/us-west2/serviceAttachments/sa-us-west2-61485ec2ae9d2e48568bf84f-$i --allow-psc-global-access

done

if [ $(gcloud compute forwarding-rules list --regions=us-west2 --format="csv[no-heading](name)" --filter="(name:psc-endpoint-us-west2*)" | wc -l) -gt 50 ]; then

echo "Project has too many forwarding rules that match prefix psc-endpoint-us-west2. Either delete the competing resources or choose another endpoint prefix."

exit 2;

fi

gcloud compute forwarding-rules list --regions=us-west2 --format="json(IPAddress,name)" --filter="name:(psc-endpoint-us-west2*)" > atlasEndpoints-psc-endpoint-us-west2.json

Run the shell script

Navigate to and execute the script setup_psc.sh. Once it completes, a file called atlasEndpoints-psc-endpoint-us-west2.json is created. The JSON file contains the list of IP addresses and Private Service Connect endpoint names required for the next step of the deployment.

Inside Cloud Shell perform the following:

bash setup_psc.sh

Once the script completes, use Cloud Shell editor to download atlasEndpoints-psc-endpoint-us-west2.json locally.

Upload the JSON file

Upload the previously saved JSON file atlasEndpoints-psc-endpoint-us-west2.json

Select Create

Validate Private Service Connect endpoints

In the MongoDB UI, navigate to your project, then Security → Network access → Private endpoint. On the Dedicated cluster tab, the endpoint transitions to Available after about 10 minutes.

Available status:

In the Google Cloud console, navigate to Network services → Private Service Connect and select the Connected endpoints tab, which displays the consumer endpoints transitioning from Pending to Accepted, example below. A total of 100 endpoints are deployed in the consumer VPC and must transition to Accepted before you move to the next step.
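With 100 endpoints, checking the status from the command line may be quicker than scrolling the console. The sketch below counts how many status lines are not yet ACCEPTED. The function name count_not_accepted is hypothetical, and the gcloud invocation in the usage comment assumes the forwarding rule resource exposes a pscConnectionStatus field and that the name filter from the generated scripts also matches both regions; verify both against your gcloud version.

```shell
# Hypothetical helper: read one connection status per line on stdin and
# print how many of them are anything other than ACCEPTED.
count_not_accepted() {
  grep -v -c '^ACCEPTED$'
}

# Usage (assumed field and filter, see the note above):
#   gcloud compute forwarding-rules list \
#       --filter="name:(psc-endpoint-us-west*)" \
#       --format="value(pscConnectionStatus)" | count_not_accepted
```

A result of 0 indicates that every listed endpoint has been accepted.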

9. Connect to MongoDB atlas from private endpoints

Once the Private Service Connect connections are Accepted, additional time (10-15 minutes) is required to update the MongoDB cluster. In the MongoDB UI, a gray outline around the cluster indicates that the update is in progress, so connecting through the private endpoint is not yet available.

Identify the deployment and select Connect (note the gray box is no longer present)

Choose connection type → Private endpoint, select Choose a connection method

Select Connect with the MongoDB Shell

Select I do not have the MongoDB Shell installed, choose Ubuntu 20.04, and be sure to copy the contents of step 1 and step 3 to a notepad.

10. Install mongosh application

Before the installation, you'll need to create a command string based on the values copied in steps 1 and 3. You will then SSH into vm1 using Cloud Shell, install the mongosh application, and validate connectivity to the primary (us-west1) database. The Ubuntu 20.04 image was installed when vm1 was created in consumer-vpc.

Choose a connection method: Step 1, Copy download URL

Example command string, replace with your custom values:

https://downloads.mongodb.com/compass/mongodb-mongosh_1.7.1_amd64.deb

Choose a connection method, step 3.

Example command string, replace with your custom values:

mongosh "mongodb+srv://psc-mongodb-uswest1-uswest2-pl-0.2wqno.mongodb.net/psc-mongodb-uswest1-uswest2" --apiVersion 1 --username codelab
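A mongodb+srv connection string is resolved through a DNS SRV record named _mongodb._tcp.<hostname>. The sketch below derives that record name from a connection string so it can be looked up with dig, which the vm1 startup script installs through dnsutils. The function name srv_record_name is hypothetical, and the hostname is the example value from this codelab; yours will differ.

```shell
# Hypothetical helper: derive the DNS SRV record name behind a mongodb+srv URI.
srv_record_name() {
  uri="$1"
  host="${uri#mongodb+srv://}"   # drop the scheme
  host="${host%%/*}"             # drop /database and anything after it
  host="${host%%\?*}"            # drop ?options when no path is present
  host="${host##*@}"             # drop optional user:password@
  echo "_mongodb._tcp.$host"
}

srv_record_name "mongodb+srv://psc-mongodb-uswest1-uswest2-pl-0.2wqno.mongodb.net/psc-mongodb-uswest1-uswest2"

# Usage on vm1 (requires dig from dnsutils):
#   dig +short SRV "$(srv_record_name 'mongodb+srv://<your-host>/<your-db>')"
```

The SRV answers list the hostnames and ports the client connects to, which is a convenient way to confirm that the private endpoint names resolve from the consumer VPC.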

Log into vm1

Inside Cloud Shell, perform the following:

gcloud config list project

gcloud config set project [YOUR-PROJECT-NAME]

projectname=YOUR-PROJECT-NAME

echo $projectname

Log into vm1 using IAP in Cloud Shell, retry if there is a timeout.

gcloud compute ssh vm1 --project=$projectname --zone=us-west1-a --tunnel-through-iap
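If the first attempt times out, the retry can be scripted rather than repeated by hand. The following is a minimal sketch of a generic retry wrapper; the function name retry and its argument order (attempts, delay in seconds, then the command) are choices made for this illustration.

```shell
# Hypothetical helper: run a command up to N times, pausing between attempts.
# Usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  n=1
  while :; do
    "$@" && return 0                       # success: stop retrying
    [ "$n" -ge "$attempts" ] && return 1   # out of attempts: give up
    n=$((n + 1))
    sleep "$delay"
  done
}

# Usage:
#   retry 3 10 gcloud compute ssh vm1 --project=$projectname --zone=us-west1-a --tunnel-through-iap
```

Note that the wrapper retries on any non-zero exit status, including the status returned when an interactive SSH session ends with an error, so it is best suited to the initial connection attempt.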

Perform the installation from the OS

Perform the installation from the vm1 OS login session in Cloud Shell. Update the syntax below with your custom string.

wget -qO - https://www.mongodb.org/static/pgp/server-6.0.asc | sudo apt-key add -

The operation should respond with OK.

echo "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu focal/mongodb-org/6.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-6.0.list

sudo apt-get update -y

wget https://downloads.mongodb.com/compass/mongodb-mongosh_1.7.1_amd64.deb

sudo dpkg -i mongodb-mongosh_1.7.1_amd64.deb

Connect to the MongoDB deployment

Inside the vm1 OS login session, perform the following. The username and password are both codelab.

mongosh

mongosh "mongodb+srv://psc-mongodb-uswest1-uswest2-pl-0.2wqno.mongodb.net/psc-mongodb-uswest1-uswest2" --apiVersion 1 --username codelab

Example below:

Execute commands against the database

Inside the Cloud Shell os login perform the following.

show dbs

use Company

db.Employee.insertOne({"Name":"cosmo","dept":"devops"})

db.Employee.findOne({"Name":"cosmo"})

11. Failover active MongoDB region, us-west1

Before we perform the failover, let's validate that us-west1 is primary and us-west2 has two secondary nodes.

Navigate to Database → psc-mongodb-uswest1-uswest2 → Overview

In the following section, you log into vm1 located in us-west1, fail over the primary MongoDB cluster region us-west1, and verify that the database is still reachable through the MongoDB cluster nodes in us-west2.

You can test both primary and regional failover from the Atlas UI.

- Log in to the Atlas UI.

- Click on [...] beside your cluster name, psc-mongodb-uswest1-uswest2 → Test Outage.

- Select Regional Outage → Select regions.

- Select the primary region, us-west1 → Simulate Regional Outage.

Once selected, the cluster will display the outage simulation after 3-4 minutes.

Close the window

Verify that us-west1 is down and us-west2 has now taken over as primary

Navigate to Database → psc-mongodb-uswest1-uswest2 → Overview

Validate connectivity to the cluster through the new primary, us-west2

Log into vm1 located in us-west1 and access MongoDB in us-west2, validating Private Service Connect global access.

If your Cloud Shell session terminated, perform the following inside Cloud Shell:

gcloud config list project

gcloud config set project [YOUR-PROJECT-NAME]

projectname=YOUR-PROJECT-NAME

echo $projectname

Log into vm1 using IAP in Cloud Shell, retry if there is a timeout.

gcloud compute ssh vm1 --project=$projectname --zone=us-west1-a --tunnel-through-iap

Connect to the MongoDB deployment

Inside the Cloud Shell os login perform the following.

mongosh

mongosh "mongodb+srv://psc-mongodb-uswest1-uswest2-pl-0.2wqno.mongodb.net/psc-mongodb-uswest1-uswest2" --apiVersion 1 --username codelab

Example below:

Execute commands against the database

Inside the Cloud Shell os login perform the following.

show dbs

use Company

db.Employee.insertOne({"Name":"cosmo","dept":"devops"})

db.Employee.findOne()

exit

Success: You have validated that PSC global access allows seamless consumer endpoint connectivity across regions, aiding high availability during regional outages. In the codelab, a MongoDB regional failover was triggered for the primary node located in us-west1, so the secondary region us-west2 took over as primary. Although the cluster experienced a regional outage, the consumer vm1 located in us-west1 successfully reached the new primary in us-west2.

12. Cleanup

From Cloud Console delete the consumer endpoints

Navigate to Network services → Private Service Connect → CONNECTED ENDPOINTS

Use the filter psc-endpoint to eliminate potential deletion of non-lab consumer endpoints. Select all endpoints → DELETE

Delete the static internal IP addresses associated with the consumer endpoints

Navigate to VPC network → consumer-vpc → STATIC INTERNAL IP ADDRESSES

Use the filter psc-endpoint to avoid deleting non-lab IP addresses, and increase rows per page to 100. Select all addresses → RELEASE

From Cloud Shell, delete codelab components.

gcloud compute instances delete vm1 --zone=us-west1-a --quiet

gcloud compute networks subnets delete psc-endpoint-us-west1 vm-subnet --region=us-west1 --quiet

gcloud compute networks subnets delete psc-endpoint-us-west2 --region=us-west2 --quiet

gcloud compute firewall-rules delete ssh-iap-consumer-vpc --quiet

gcloud compute routers delete consumer-cr --region=us-west1 --quiet

gcloud compute networks delete consumer-vpc --quiet

From the Atlas UI, identify cluster psc-mongodb-uswest1-uswest2 → End simulation

Select End outage simulation → Exit

The cluster now reverts to us-west1 as primary; this process takes 3-4 minutes. Once completed, terminate the cluster. Note the gray outline indicating a status change.

Insert the cluster name → Terminate

Delete the private endpoint associated with us-west1 and us-west2

From the Atlas UI navigate to Security → Network Access → Private Endpoint → Select Terminate

13. Congratulations

Congratulations, you've successfully configured and validated a Private Service Connect endpoint with global access to MongoDB across regions. You successfully created a consumer VPC, multi-region MongoDB and consumer endpoints. A VM located in us-west1 successfully connected to MongoDB in both us-west1 and us-west2 upon regional failover.

Cosmopup thinks codelabs are awesome!!

What's next?

Check out some of these codelabs...

- Using Private Service Connect to publish and consume services with GKE

- Using Private Service Connect to publish and consume services

- Connect to on-prem services over Hybrid Networking using Private Service Connect and an internal TCP Proxy load balancer

- Using Private Service Connect with automatic DNS configuration