1. Introduction

GCP has long supported multiple network interfaces at the VM instance level: a VM can have up to seven additional interfaces (eight in total, the default interface plus seven), each connected to a different VPC. GKE Networking now extends this capability to the Pods running on the Nodes. Before this feature, every NodePool in a GKE cluster had only a single interface and was therefore mapped to a single VPC. With the Multi-Network on Pods feature, you can enable more than one interface on the Nodes and Pods of a GKE cluster.

What you'll build

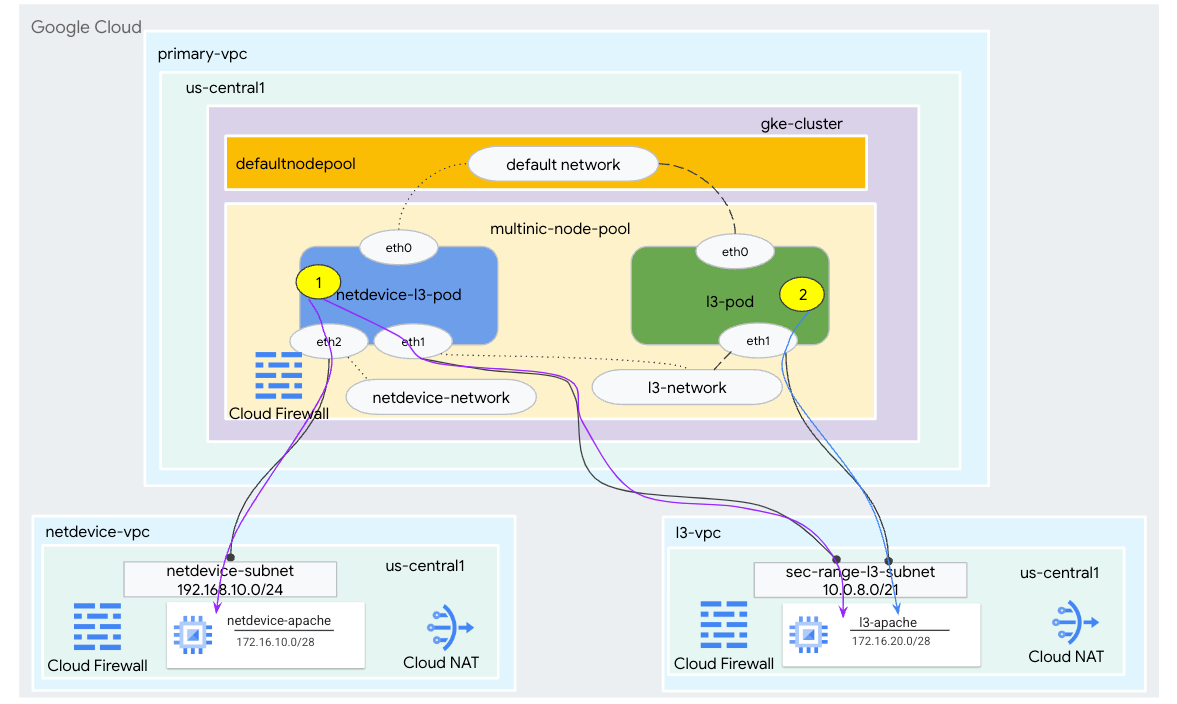

In this tutorial, you'll build a comprehensive GKE multi-NIC environment that implements the use cases shown in Figure 1.

- Create netdevice-l3-pod, based on busybox, to:

  - Perform a ping and wget -S to the netdevice-apache instance in the netdevice-vpc over eth2

  - Perform a ping and wget -S to the l3-apache instance in the l3-vpc over eth1

- Create l3-pod, based on busybox, to perform a ping and wget -S to the l3-apache instance over eth1

In both use cases, the pod's eth0 interface is connected to the default network.

Figure 1

What you'll learn

- How to create an L3-type subnet

- How to create a NetDevice-type subnet

- How to create a multi-NIC GKE node pool

- How to create a pod with NetDevice and L3 capabilities

- How to create a pod with L3 capabilities

- How to create and validate GKE Network objects

- How to validate connectivity to remote Apache servers using ping, wget and firewall logs

What you'll need

- Google Cloud Project

2. Terminology and Concepts

Primary VPC: The primary VPC is a pre-configured VPC that comes with a set of default settings and resources. The GKE cluster is created in this VPC.

Subnet: In Google Cloud, a subnet defines an IP address range, in Classless Inter-Domain Routing (CIDR) notation, within a VPC. A subnet has a single primary IP address range, which is assigned to the nodes, and can have multiple secondary ranges that can be used by Pods and Services.

Node-network: A node-network is a dedicated VPC and subnet pair. Within this node-network, the nodes in the node pool are allocated IP addresses from the subnet's primary IP address range.

Secondary range: A Google Cloud secondary range is a CIDR and netmask belonging to a region in a VPC. GKE uses this as a Layer 3 Pod-network. A VPC can have multiple secondary ranges, and a Pod can connect to multiple Pod-networks.

Network (L3 or Device): A Network object serves as a connection point for Pods. In this tutorial the networks are l3-network and netdevice-network; a Device network can be either of type netdevice or DPDK. The default network is mandatory and is created when the cluster is created, based on the default node pool subnet.

Layer 3 networks correspond to a secondary range on a subnet, represented as:

VPC -> Subnet Name -> Secondary Range Name

Device networks correspond to a subnet on a VPC, represented as:

VPC -> Subnet Name

Default Pod-network: Google Cloud creates a default Pod-network during cluster creation. The default Pod-network uses the primary VPC as the node-network. The default Pod-network is available on all cluster nodes and Pods, by default.

Pods with multiple interfaces: Each interface of a multi-interface Pod in GKE must connect to a different Pod-network; two interfaces of the same Pod cannot connect to the same Pod-network.

Update the project to support the codelab

This codelab uses shell variables such as $projectid to simplify the gcloud commands you run in Cloud Shell.

Inside Cloud Shell, perform the following:

gcloud config list project
gcloud config set project [YOUR-PROJECT-ID]
projectid=YOUR-PROJECT-ID
echo $projectid

3. Primary VPC setup

Create the primary VPC

Inside Cloud Shell, perform the following:

gcloud compute networks create primary-vpc --project=$projectid --subnet-mode=custom

Create the node and secondary subnets

Inside Cloud Shell, perform the following:

gcloud compute networks subnets create primary-node-subnet --project=$projectid --range=192.168.0.0/24 --network=primary-vpc --region=us-central1 --enable-private-ip-google-access --secondary-range=sec-range-primay-vpc=10.0.0.0/21
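To get a feel for the address space these two ranges provide, the POSIX shell sketch below computes the size of a CIDR block. It assumes Google Cloud's reservation of four addresses in every subnet's primary IPv4 range; the helper names cidr_size and usable_primary are hypothetical and not part of gcloud.

```shell
#!/bin/sh
# Number of IPv4 addresses in a CIDR block: 2^(32 - prefix length).
cidr_size() {
  local prefix=${1#*/}
  echo $(( 1 << (32 - prefix) ))
}

# Assumption: Google Cloud reserves four addresses in each primary IPv4 range,
# so fewer addresses than cidr_size reports can be assigned to nodes.
usable_primary() {
  echo $(( $(cidr_size "$1") - 4 ))
}

echo "primary-node-subnet: $(usable_primary 192.168.0.0/24) usable node addresses"
echo "sec-range-primay-vpc: $(cidr_size 10.0.0.0/21) Pod addresses"
```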

4. GKE cluster creation

Create the private GKE cluster, using the primary-vpc subnet for the default node pool. The flags --enable-multi-networking and --enable-dataplane-v2 are required to support multi-NIC node pools.

Inside Cloud Shell, create the GKE cluster:

gcloud container clusters create multinic-gke \
  --zone "us-central1-a" \
  --enable-dataplane-v2 \
  --enable-ip-alias \
  --enable-multi-networking \
  --network "primary-vpc" --subnetwork "primary-node-subnet" \
  --num-nodes=2 \
  --max-pods-per-node=32 \
  --cluster-secondary-range-name=sec-range-primay-vpc \
  --no-enable-master-authorized-networks \
  --release-channel "regular" \
  --enable-private-nodes --master-ipv4-cidr "100.100.10.0/28"

Validate the multinic-gke cluster

Inside Cloud Shell, authenticate with the cluster:

gcloud container clusters get-credentials multinic-gke --zone us-central1-a --project $projectid

Inside Cloud Shell, verify that the default-pool created two nodes:

kubectl get nodes

Example:

user@$ kubectl get nodes
NAME                                          STATUS   ROLES    AGE    VERSION
gke-multinic-gke-default-pool-3d419e48-1k2p   Ready    <none>   2m4s   v1.27.3-gke.100
gke-multinic-gke-default-pool-3d419e48-xckb   Ready    <none>   2m4s   v1.27.3-gke.100

5. netdevice-vpc setup

Create the netdevice-vpc network

Inside Cloud Shell, perform the following:

gcloud compute networks create netdevice-vpc --project=$projectid --subnet-mode=custom

Create the netdevice-vpc subnets

Inside Cloud Shell, create the subnet used for the multinic netdevice-network:

gcloud compute networks subnets create netdevice-subnet --project=$projectid --range=192.168.10.0/24 --network=netdevice-vpc --region=us-central1 --enable-private-ip-google-access

Inside Cloud Shell, create a subnet for the netdevice-apache instance:

gcloud compute networks subnets create netdevice-apache --project=$projectid --range=172.16.10.0/28 --network=netdevice-vpc --region=us-central1 --enable-private-ip-google-access

Cloud Router and NAT configuration

Cloud NAT is used in the tutorial for software package installation since the VM instance does not have an external IP address.

Inside Cloud Shell, create the cloud router.

gcloud compute routers create netdevice-cr --network netdevice-vpc --region us-central1

Inside Cloud Shell, create the NAT gateway.

gcloud compute routers nats create cloud-nat-netdevice --router=netdevice-cr --auto-allocate-nat-external-ips --nat-all-subnet-ip-ranges --region us-central1

Create the netdevice-apache instance

In the following section, you'll create the netdevice-apache instance.

Inside Cloud Shell, create the instance:

gcloud compute instances create netdevice-apache \
  --project=$projectid \
  --machine-type=e2-micro \
  --image-family debian-11 \
  --no-address \
  --image-project debian-cloud \
  --zone us-central1-a \
  --subnet=netdevice-apache \
  --metadata startup-script='#! /bin/bash
sudo apt-get update
sudo apt-get install apache2 -y
sudo service apache2 restart
echo "Welcome to the netdevice-apache instance !!" | tee /var/www/html/index.html'

6. l3-vpc setup

Create the l3-vpc network

Inside Cloud Shell, perform the following:

gcloud compute networks create l3-vpc --project=$projectid --subnet-mode=custom

Create the l3-vpc subnets

Inside Cloud Shell, create a subnet with a primary range and a secondary range. The secondary range (sec-range-l3-subnet) is used for the multinic l3-network:

gcloud compute networks subnets create l3-subnet --project=$projectid --range=192.168.20.0/24 --network=l3-vpc --region=us-central1 --enable-private-ip-google-access --secondary-range=sec-range-l3-subnet=10.0.8.0/21

Inside Cloud Shell, create a subnet for the l3-apache instance:

gcloud compute networks subnets create l3-apache --project=$projectid --range=172.16.20.0/28 --network=l3-vpc --region=us-central1 --enable-private-ip-google-access

Cloud Router and NAT configuration

Cloud NAT is used in the tutorial for software package installation since the VM instance does not have an external IP address.

Inside Cloud Shell, create the cloud router.

gcloud compute routers create l3-cr --network l3-vpc --region us-central1

Inside Cloud Shell, create the NAT gateway.

gcloud compute routers nats create cloud-nat-l3 --router=l3-cr --auto-allocate-nat-external-ips --nat-all-subnet-ip-ranges --region us-central1

Create the l3-apache instance

In the following section, you'll create the l3-apache instance.

Inside Cloud Shell, create the instance:

gcloud compute instances create l3-apache \
  --project=$projectid \
  --machine-type=e2-micro \
  --image-family debian-11 \
  --no-address \
  --image-project debian-cloud \
  --zone us-central1-a \
  --subnet=l3-apache \
  --metadata startup-script='#! /bin/bash
sudo apt-get update
sudo apt-get install apache2 -y
sudo service apache2 restart
echo "Welcome to the l3-apache instance !!" | tee /var/www/html/index.html'

7. Create the multinic nodepool

In the following section, you'll create a multinic node pool using the following flags:

--additional-node-network (required for Device-type interfaces)

Example:

--additional-node-network network=netdevice-vpc,subnetwork=netdevice-subnet

--additional-node-network and --additional-pod-network (required for L3-type interfaces)

Example:

--additional-node-network network=l3-vpc,subnetwork=l3-subnet --additional-pod-network subnetwork=l3-subnet,pod-ipv4-range=sec-range-l3-subnet,max-pods-per-node=8

Machine type: When deploying the node pool, take the machine type into account, because the number of network interfaces a VM supports depends on its vCPU count. For example, e2-standard-4, with 4 vCPUs, supports up to 4 network interfaces and therefore at most 4 VPCs. In this tutorial, netdevice-l3-pod uses a total of 3 interfaces (default, netdevice and l3), so each multinic node needs 3 interfaces and the tutorial uses e2-standard-4.
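The machine type check can be sketched in shell. It assumes the commonly documented Compute Engine rule (VMs with 2 or fewer vCPUs get up to 2 vNICs; larger VMs get one vNIC per vCPU, capped at 8) and that each --additional-node-network adds one NIC to the node. Confirm both against current Compute Engine documentation, since some machine series use different limits. max_nics and required_nics are hypothetical helpers.

```shell
#!/bin/sh
# Assumed vNIC limit by vCPU count: 2 up to 2 vCPUs, then one per vCPU, max 8.
max_nics() {
  if [ "$1" -le 2 ]; then
    echo 2
  elif [ "$1" -ge 8 ]; then
    echo 8
  else
    echo "$1"
  fi
}

# NICs a multinic node needs: the primary NIC plus one per
# --additional-node-network flag on the node pool (assumption).
required_nics() {
  echo $(( 1 + $1 ))
}

need=$(required_nics 2)   # netdevice-vpc and l3-vpc
have=$(max_nics 4)        # e2-standard-4 has 4 vCPUs
if [ "$need" -le "$have" ]; then
  echo "e2-standard-4 fits: needs $need NICs, supports $have"
else
  echo "choose a larger machine type: needs $need NICs, supports $have"
fi
```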

Inside Cloud Shell, create the node pool with one Device-type and one L3-type additional network:

gcloud container --project "$projectid" node-pools create "multinic-node-pool" --cluster "multinic-gke" --zone "us-central1-a" --additional-node-network network=netdevice-vpc,subnetwork=netdevice-subnet --additional-node-network network=l3-vpc,subnetwork=l3-subnet --additional-pod-network subnetwork=l3-subnet,pod-ipv4-range=sec-range-l3-subnet,max-pods-per-node=8 --machine-type "e2-standard-4"
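The max-pods-per-node=8 setting determines how much of sec-range-l3-subnet each node consumes. A minimal sketch, assuming GKE's per-node sizing rule of the smallest block that holds at least twice max-pods-per-node addresses (8 gives /28, 32 gives /26, 110 gives /24). node_pod_prefix and nodes_per_range are hypothetical helpers.

```shell
#!/bin/sh
# Smallest prefix whose block holds at least 2 * max-pods-per-node addresses
# (assumed GKE sizing rule).
node_pod_prefix() {
  local size=1 prefix=32
  while [ "$size" -lt $(( 2 * $1 )) ]; do
    size=$(( size * 2 ))
    prefix=$(( prefix - 1 ))
  done
  echo "$prefix"
}

# How many nodes a secondary range can serve when each node gets a
# /node_prefix slice of it.
nodes_per_range() {
  local range_prefix=${1#*/}
  echo $(( 1 << ($2 - range_prefix) ))
}

p=$(node_pod_prefix 8)
echo "max-pods-per-node=8: /$p per node, $(nodes_per_range 10.0.8.0/21 "$p") nodes fit in sec-range-l3-subnet"
```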

8. Validate the multinic-node-pool

Inside Cloud Shell, verify that the multinic-node-pool created three nodes:

kubectl get nodes

Example:

user@$ kubectl get nodes
NAME                                                STATUS   ROLES    AGE     VERSION
gke-multinic-gke-default-pool-3d419e48-1k2p         Ready    <none>   15m     v1.27.3-gke.100
gke-multinic-gke-default-pool-3d419e48-xckb         Ready    <none>   15m     v1.27.3-gke.100
gke-multinic-gke-multinic-node-pool-135699a1-0tfx   Ready    <none>   3m51s   v1.27.3-gke.100
gke-multinic-gke-multinic-node-pool-135699a1-86gz   Ready    <none>   3m51s   v1.27.3-gke.100
gke-multinic-gke-multinic-node-pool-135699a1-t66p   Ready    <none>   3m51s   v1.27.3-gke.100
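If you prefer a scripted check over reading the table, the sketch below counts Ready nodes per pool from kubectl get nodes output. It relies on the node naming pattern visible above (gke-<cluster>-<pool>-...), which is a heuristic; on a live cluster, kubectl get nodes -l cloud.google.com/gke-nodepool=multinic-node-pool filters on GKE's node pool label instead. count_ready_nodes is a hypothetical helper.

```shell
#!/bin/sh
# Count Ready nodes of one pool in `kubectl get nodes` output read from stdin.
# Matching "-<pool>-" in the NAME column is a naming heuristic, not a guarantee.
count_ready_nodes() {
  awk -v pat="-$1-" 'NR > 1 && index($1, pat) > 0 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on a live cluster (requires kubectl credentials):
#   kubectl get nodes | count_ready_nodes multinic-node-pool
```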

9. Create the netdevice-network

In the following steps, you'll create the Network and GKENetworkParamSet Kubernetes objects that define the netdevice-network, which Pods are attached to in later steps.

Create the netdevice-network object

Inside Cloud Shell, create the Network object manifest netdevice-network.yaml using vi or nano. Note that the routes "to" entry is the subnet 172.16.10.0/28 (netdevice-apache) in the netdevice-vpc.

apiVersion: networking.gke.io/v1
kind: Network
metadata:
  name: netdevice-network
spec:
  type: "Device"
  parametersRef:
    group: networking.gke.io
    kind: GKENetworkParamSet
    name: "netdevice"
  routes:
  - to: "172.16.10.0/28"

Inside Cloud Shell, apply netdevice-network.yaml:

kubectl apply -f netdevice-network.yaml

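Inside Cloud Shell, check the status of netdevice-network. Because the GKENetworkParamSet it references has not been created yet, the Ready condition is expected to be False with reason ParamsNotReady: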

kubectl describe networks netdevice-network

Example:

user@$ kubectl describe networks netdevice-network
Name:         netdevice-network
Namespace:
Labels:       <none>
Annotations:  networking.gke.io/in-use: false
API Version:  networking.gke.io/v1
Kind:         Network
Metadata:
  Creation Timestamp:  2023-07-30T22:37:38Z
  Generation:          1
  Resource Version:    1578594
  UID:                 46d75374-9fcc-42be-baeb-48e074747052
Spec:
  Parameters Ref:
    Group:  networking.gke.io
    Kind:   GKENetworkParamSet
    Name:   netdevice
  Routes:
    To:  172.16.10.0/28
  Type:  Device
Status:
  Conditions:
    Last Transition Time:  2023-07-30T22:37:38Z
    Message:               GKENetworkParamSet resource was deleted: netdevice
    Reason:                GNPDeleted
    Status:                False
    Type:                  ParamsReady
    Last Transition Time:  2023-07-30T22:37:38Z
    Message:               Resource referenced by params is not ready
    Reason:                ParamsNotReady
    Status:                False
    Type:                  Ready
Events:  <none>

Create the GKENetworkParamSet

Inside Cloud Shell, create the GKENetworkParamSet manifest netdevice-network-parm.yaml using vi or nano. The spec maps to the netdevice-vpc subnet deployment.

apiVersion: networking.gke.io/v1
kind: GKENetworkParamSet
metadata:
  name: "netdevice"
spec:
  vpc: "netdevice-vpc"
  vpcSubnet: "netdevice-subnet"
  deviceMode: "NetDevice"

Inside Cloud Shell, apply netdevice-network-parm.yaml

kubectl apply -f netdevice-network-parm.yaml

Inside Cloud Shell, validate that the netdevice-network status reasons are now GNPParamsReady and NetworkReady:

kubectl describe networks netdevice-network

Example:

user@$ kubectl describe networks netdevice-network
Name:         netdevice-network
Namespace:
Labels:       <none>
Annotations:  networking.gke.io/in-use: false
API Version:  networking.gke.io/v1
Kind:         Network
Metadata:
  Creation Timestamp:  2023-07-30T22:37:38Z
  Generation:          1
  Resource Version:    1579791
  UID:                 46d75374-9fcc-42be-baeb-48e074747052
Spec:
  Parameters Ref:
    Group:  networking.gke.io
    Kind:   GKENetworkParamSet
    Name:   netdevice
  Routes:
    To:  172.16.10.0/28
  Type:  Device
Status:
  Conditions:
    Last Transition Time:  2023-07-30T22:39:44Z
    Message:
    Reason:                GNPParamsReady
    Status:                True
    Type:                  ParamsReady
    Last Transition Time:  2023-07-30T22:39:44Z
    Message:
    Reason:                NetworkReady
    Status:                True
    Type:                  Ready
Events:  <none>
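The Ready check can also be scripted. The sketch below reads Type=Status pairs, such as those printed by the kubectl JSONPath query in its usage comment, and succeeds only when at least one condition was read and every condition is True. all_conditions_true is a hypothetical helper.

```shell
#!/bin/sh
# Succeed only if every non-empty "Type=Status" line on stdin has Status True
# and at least one condition was read.
all_conditions_true() {
  local seen=0 line
  while IFS= read -r line; do
    [ -z "$line" ] && continue
    [ "${line#*=}" = "True" ] || return 1
    seen=1
  done
  [ "$seen" -eq 1 ]
}

# Usage on a live cluster (assumes kubectl access to the Network object):
#   kubectl get networks netdevice-network \
#     -o jsonpath='{range .status.conditions[*]}{.type}={.status}{"\n"}{end}' \
#     | all_conditions_true && echo "netdevice-network is ready"
```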

Inside Cloud Shell, confirm that the netdevice GKENetworkParamSet reports the CIDR block 192.168.10.0/24, which is used for the Pod interface in a later step.

kubectl describe gkenetworkparamsets.networking.gke.io netdevice

Example:

user@$ kubectl describe gkenetworkparamsets.networking.gke.io netdevice
Name:         netdevice
Namespace:
Labels:       <none>
Annotations:  <none>
API Version:  networking.gke.io/v1
Kind:         GKENetworkParamSet
Metadata:
  Creation Timestamp:  2023-07-30T22:39:43Z
  Finalizers:
    networking.gke.io/gnp-controller
    networking.gke.io/high-perf-finalizer
  Generation:        1
  Resource Version:  1579919
  UID:               6fe36b0c-0091-4b6a-9d28-67596cbce845
Spec:
  Device Mode:  NetDevice
  Vpc:          netdevice-vpc
  Vpc Subnet:   netdevice-subnet
Status:
  Conditions:
    Last Transition Time:  2023-07-30T22:39:43Z
    Message:
    Reason:                GNPReady
    Status:                True
    Type:                  Ready
  Network Name:            netdevice-network
  Pod CID Rs:
    Cidr Blocks:
      192.168.10.0/24
Events:  <none>

10. Create the l3-network

In the following steps, you'll create the Network and GKENetworkParamSet Kubernetes objects that define the l3-network, which Pods are attached to in later steps.

Create the l3 network object

Inside Cloud Shell, create the Network object manifest l3-network.yaml using vi or nano. Note that the routes "to" entry is the subnet 172.16.20.0/28 (l3-apache) in the l3-vpc.

apiVersion: networking.gke.io/v1
kind: Network
metadata:
  name: l3-network
spec:
  type: "L3"
  parametersRef:
    group: networking.gke.io
    kind: GKENetworkParamSet
    name: "l3-network"
  routes:
  - to: "172.16.20.0/28"

Inside Cloud Shell, apply l3-network.yaml:

kubectl apply -f l3-network.yaml

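Inside Cloud Shell, check the status of l3-network. Because the GKENetworkParamSet it references has not been created yet, the Ready condition is expected to be False with reason ParamsNotReady: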

kubectl describe networks l3-network

Example:

user@$ kubectl describe networks l3-network
Name:         l3-network
Namespace:
Labels:       <none>
Annotations:  networking.gke.io/in-use: false
API Version:  networking.gke.io/v1
Kind:         Network
Metadata:
  Creation Timestamp:  2023-07-30T22:43:54Z
  Generation:          1
  Resource Version:    1582307
  UID:                 426804be-35c9-4cc5-bd26-00b94be2ef9a
Spec:
  Parameters Ref:
    Group:  networking.gke.io
    Kind:   GKENetworkParamSet
    Name:   l3-network
  Routes:
    To:  172.16.20.0/28
  Type:  L3
Status:
  Conditions:
    Last Transition Time:  2023-07-30T22:43:54Z
    Message:               GKENetworkParamSet resource was deleted: l3-network
    Reason:                GNPDeleted
    Status:                False
    Type:                  ParamsReady
    Last Transition Time:  2023-07-30T22:43:54Z
    Message:               Resource referenced by params is not ready
    Reason:                ParamsNotReady
    Status:                False
    Type:                  Ready
Events:  <none>

Create the GKENetworkParamSet

Inside Cloud Shell, create the GKENetworkParamSet manifest l3-network-parm.yaml using vi or nano. Note that the spec maps to the l3-vpc subnet deployment.

apiVersion: networking.gke.io/v1
kind: GKENetworkParamSet
metadata:
  name: "l3-network"
spec:
  vpc: "l3-vpc"
  vpcSubnet: "l3-subnet"
  podIPv4Ranges:
    rangeNames:
    - "sec-range-l3-subnet"

Inside Cloud Shell, apply l3-network-parm.yaml

kubectl apply -f l3-network-parm.yaml

Inside Cloud Shell, validate that the l3-network status reasons are now GNPParamsReady and NetworkReady:

kubectl describe networks l3-network

Example:

user@$ kubectl describe networks l3-network
Name:         l3-network
Namespace:
Labels:       <none>
Annotations:  networking.gke.io/in-use: false
API Version:  networking.gke.io/v1
Kind:         Network
Metadata:
  Creation Timestamp:  2023-07-30T22:43:54Z
  Generation:          1
  Resource Version:    1583647
  UID:                 426804be-35c9-4cc5-bd26-00b94be2ef9a
Spec:
  Parameters Ref:
    Group:  networking.gke.io
    Kind:   GKENetworkParamSet
    Name:   l3-network
  Routes:
    To:  172.16.20.0/28
  Type:  L3
Status:
  Conditions:
    Last Transition Time:  2023-07-30T22:46:14Z
    Message:
    Reason:                GNPParamsReady
    Status:                True
    Type:                  ParamsReady
    Last Transition Time:  2023-07-30T22:46:14Z
    Message:
    Reason:                NetworkReady
    Status:                True
    Type:                  Ready
Events:  <none>

Inside Cloud Shell, confirm that the l3-network GKENetworkParamSet reports the CIDR 10.0.8.0/21, which is used to create the Pod interface.

kubectl describe gkenetworkparamsets.networking.gke.io l3-network

Example:

user@$ kubectl describe gkenetworkparamsets.networking.gke.io l3-network
Name:         l3-network
Namespace:
Labels:       <none>
Annotations:  <none>
API Version:  networking.gke.io/v1
Kind:         GKENetworkParamSet
Metadata:
  Creation Timestamp:  2023-07-30T22:46:14Z
  Finalizers:
    networking.gke.io/gnp-controller
  Generation:        1
  Resource Version:  1583656
  UID:               4c1f521b-0088-4005-b000-626ca5205326
Spec:
  podIPv4Ranges:
    Range Names:
      sec-range-l3-subnet
  Vpc:         l3-vpc
  Vpc Subnet:  l3-subnet
Status:
  Conditions:
    Last Transition Time:  2023-07-30T22:46:14Z
    Message:
    Reason:                GNPReady
    Status:                True
    Type:                  Ready
  Network Name:            l3-network
  Pod CID Rs:
    Cidr Blocks:
      10.0.8.0/21
Events:  <none>
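Inside a multi-network Pod, routes for the default Pod range (10.0.0.0/21) and the l3-network range (10.0.8.0/21) sit side by side on different interfaces, so keeping the ranges disjoint, as this tutorial does, plausibly avoids ambiguous routing. A small POSIX shell sketch to check two CIDR blocks for overlap; ip_to_int and cidrs_overlap are hypothetical helpers.

```shell
#!/bin/sh
# Pack a dotted-quad IPv4 address into one integer.
ip_to_int() {
  local ip=$1 a b c d
  a=${ip%%.*}; ip=${ip#*.}
  b=${ip%%.*}; ip=${ip#*.}
  c=${ip%%.*}; d=${ip#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "overlap" if two IPv4 CIDR blocks share any address, else "disjoint".
cidrs_overlap() {
  local a_len=${1#*/} b_len=${2#*/} a_lo a_hi b_lo b_hi
  a_lo=$(( $(ip_to_int "${1%/*}") & (0xFFFFFFFF ^ ((1 << (32 - a_len)) - 1)) ))
  a_hi=$(( a_lo + (1 << (32 - a_len)) - 1 ))
  b_lo=$(( $(ip_to_int "${2%/*}") & (0xFFFFFFFF ^ ((1 << (32 - b_len)) - 1)) ))
  b_hi=$(( b_lo + (1 << (32 - b_len)) - 1 ))
  if [ "$a_lo" -le "$b_hi" ] && [ "$b_lo" -le "$a_hi" ]; then
    echo overlap
  else
    echo disjoint
  fi
}

echo "default vs l3-network Pod ranges: $(cidrs_overlap 10.0.0.0/21 10.0.8.0/21)"
```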

11. Create the netdevice-l3-pod

In the following section, you'll create netdevice-l3-pod running busybox, known as a "Swiss Army knife" because it supports more than 300 common commands. The Pod is configured to reach the l3-vpc using eth1 and the netdevice-vpc using eth2.

Inside Cloud Shell, create the busybox Pod manifest netdevice-l3-pod.yaml using vi or nano:

apiVersion: v1
kind: Pod
metadata:
  name: netdevice-l3-pod
  annotations:
    networking.gke.io/default-interface: 'eth0'
    networking.gke.io/interfaces: |
      [
      {"interfaceName":"eth0","network":"default"},
      {"interfaceName":"eth1","network":"l3-network"},
      {"interfaceName":"eth2","network":"netdevice-network"}
      ]
spec:
  containers:
  - name: netdevice-l3-pod
    image: busybox
    command: ["sleep", "10m"]
    ports:
    - containerPort: 80
  restartPolicy: Always

Inside Cloud Shell, apply netdevice-l3-pod.yaml

kubectl apply -f netdevice-l3-pod.yaml

Validate netdevice-l3-pod creation

Inside Cloud Shell, validate netdevice-l3-pod is running:

kubectl get pods netdevice-l3-pod

Example:

user@$ kubectl get pods netdevice-l3-pod
NAME               READY   STATUS    RESTARTS   AGE
netdevice-l3-pod   1/1     Running   0          74s

Inside Cloud Shell, validate the IP addresses assigned to the Pod interfaces.

kubectl get pods netdevice-l3-pod -o yaml

In the example below, the networking.gke.io/pod-ips annotation lists the IP addresses assigned to the Pod interfaces from the l3-network and netdevice-network. The default network IP address, 10.0.1.22, appears under podIPs:

user@$ kubectl get pods netdevice-l3-pod -o yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{"networking.gke.io/default-interface":"eth0","networking.gke.io/interfaces":"[\n{\"interfaceName\":\"eth0\",\"network\":\"default\"},\n{\"interfaceName\":\"eth1\",\"network\":\"l3-network\"},\n{\"interfaceName\":\"eth2\",\"network\":\"netdevice-network\"}\n]\n"},"name":"netdevice-l3-pod","namespace":"default"},"spec":{"containers":[{"command":["sleep","10m"],"image":"busybox","name":"netdevice-l3-pod","ports":[{"containerPort":80}]}],"restartPolicy":"Always"}}
    networking.gke.io/default-interface: eth0
    networking.gke.io/interfaces: |
      [
      {"interfaceName":"eth0","network":"default"},
      {"interfaceName":"eth1","network":"l3-network"},
      {"interfaceName":"eth2","network":"netdevice-network"}
      ]
    networking.gke.io/pod-ips: '[{"networkName":"l3-network","ip":"10.0.8.4"},{"networkName":"netdevice-network","ip":"192.168.10.2"}]'
  creationTimestamp: "2023-07-30T22:49:27Z"
  name: netdevice-l3-pod
  namespace: default
  resourceVersion: "1585567"
  uid: d9e43c75-e0d1-4f31-91b0-129bc53bbf64
spec:
  containers:
  - command:
    - sleep
    - 10m
    image: busybox
    imagePullPolicy: Always
    name: netdevice-l3-pod
    ports:
    - containerPort: 80
      protocol: TCP
    resources:
      limits:
        networking.gke.io.networks/l3-network.IP: "1"
        networking.gke.io.networks/netdevice-network: "1"
        networking.gke.io.networks/netdevice-network.IP: "1"
      requests:
        networking.gke.io.networks/l3-network.IP: "1"
        networking.gke.io.networks/netdevice-network: "1"
        networking.gke.io.networks/netdevice-network.IP: "1"
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-f2wpb
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: gke-multinic-gke-multinic-node-pool-135699a1-86gz
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  - effect: NoSchedule
    key: networking.gke.io.networks/l3-network.IP
    operator: Exists
  - effect: NoSchedule
    key: networking.gke.io.networks/netdevice-network
    operator: Exists
  - effect: NoSchedule
    key: networking.gke.io.networks/netdevice-network.IP
    operator: Exists
  volumes:
  - name: kube-api-access-f2wpb
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T22:49:28Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T22:49:33Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T22:49:33Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T22:49:28Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: containerd://dcd9ead2f69824ccc37c109a47b1f3f5eb7b3e60ce3865e317dd729685b66a5c
    image: docker.io/library/busybox:latest
    imageID: docker.io/library/busybox@sha256:3fbc632167424a6d997e74f52b878d7cc478225cffac6bc977eedfe51c7f4e79
    lastState: {}
    name: netdevice-l3-pod
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2023-07-30T22:49:32Z"
  hostIP: 192.168.0.4
  phase: Running
  podIP: 10.0.1.22
  podIPs:
  - ip: 10.0.1.22
  qosClass: BestEffort
  startTime: "2023-07-30T22:49:28Z"
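To pull a single interface address out of the networking.gke.io/pod-ips annotation, for example in a test script, a grep/sed sketch like the following works on the compact JSON shown above. It assumes exactly this formatting (no spaces, "networkName" before "ip"); a real JSON parser such as jq is the more robust choice. pod_ip_for_network is a hypothetical helper.

```shell
#!/bin/sh
# Print the IP that a pod-ips annotation value lists for one network name.
# Assumes compact JSON with "networkName" immediately followed by "ip".
pod_ip_for_network() {
  printf '%s\n' "$1" \
    | grep -o "\"networkName\":\"$2\",\"ip\":\"[0-9.]*\"" \
    | sed 's/.*"ip":"\([0-9.]*\)"/\1/'
}

# Usage on a live cluster:
#   ips=$(kubectl get pod netdevice-l3-pod \
#     -o jsonpath='{.metadata.annotations.networking\.gke\.io/pod-ips}')
#   pod_ip_for_network "$ips" netdevice-network
```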

Validate netdevice-l3-pod routes

Inside Cloud Shell, open a shell in netdevice-l3-pod to validate its routes to the netdevice-vpc and l3-vpc:

kubectl exec --stdin --tty netdevice-l3-pod -- /bin/sh

From the pod shell, validate the pod interfaces:

ifconfig

In the example, eth0 is connected to the default network, eth1 is connected to the l3-network, and eth2 is connected to the netdevice-network.

/ # ifconfig
eth0      Link encap:Ethernet  HWaddr 26:E3:1B:14:6E:0C
          inet addr:10.0.1.22  Bcast:10.0.1.255  Mask:255.255.255.0
          UP BROADCAST RUNNING MULTICAST  MTU:1460  Metric:1
          RX packets:5 errors:0 dropped:0 overruns:0 frame:0
          TX packets:7 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:446 (446.0 B)  TX bytes:558 (558.0 B)

eth1      Link encap:Ethernet  HWaddr 92:78:4E:CB:F2:D4
          inet addr:10.0.8.4  Bcast:0.0.0.0  Mask:255.255.255.255
          UP BROADCAST RUNNING MULTICAST  MTU:1460  Metric:1
          RX packets:5 errors:0 dropped:0 overruns:0 frame:0
          TX packets:6 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:446 (446.0 B)  TX bytes:516 (516.0 B)

eth2      Link encap:Ethernet  HWaddr 42:01:C0:A8:0A:02
          inet addr:192.168.10.2  Bcast:0.0.0.0  Mask:255.255.255.255
          UP BROADCAST RUNNING MULTICAST  MTU:1460  Metric:1
          RX packets:73 errors:0 dropped:0 overruns:0 frame:0
          TX packets:50581 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:26169 (25.5 KiB)  TX bytes:2148170 (2.0 MiB)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

From netdevice-l3-pod, validate the routes to the netdevice-vpc (172.16.10.0/28) and the l3-vpc (172.16.20.0/28).

From the pod shell, validate the pod routes:

ip route

Example:

/ # ip route
default via 10.0.1.1 dev eth0 #primary-vpc
10.0.1.0/24 via 10.0.1.1 dev eth0 src 10.0.1.22
10.0.1.1 dev eth0 scope link src 10.0.1.22
10.0.8.0/21 via 10.0.8.1 dev eth1 #l3-vpc (sec-range-l3-subnet)
10.0.8.1 dev eth1 scope link
172.16.10.0/28 via 192.168.10.1 dev eth2 #netdevice-vpc (netdevice-apache subnet)
172.16.20.0/28 via 10.0.8.1 dev eth1 #l3-vpc (l3-apache subnet)
192.168.10.0/24 via 192.168.10.1 dev eth2 #pod interface subnet
192.168.10.1 dev eth2 scope link
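The kernel picks the most specific route (longest prefix match) for each destination, which is why traffic to 172.16.20.0/28 leaves through eth1, traffic to 172.16.10.0/28 leaves through eth2, and everything else uses the default route on eth0. The sketch below reproduces that selection over the network routes copied from the output above; the link-scope host routes are omitted, and route_dev is a hypothetical helper that only illustrates the rule, not a replacement for the kernel's lookup.

```shell
#!/bin/sh
# Pack a dotted-quad IPv4 address into one integer.
ip_to_int() {
  local ip=$1 a b c d
  a=${ip%%.*}; ip=${ip#*.}
  b=${ip%%.*}; ip=${ip#*.}
  c=${ip%%.*}; d=${ip#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Longest-prefix match: print the device of the most specific route that
# contains the destination. Each route argument is "CIDR DEVICE".
route_dev() {
  local dst best_len=-1 best_dev= route cidr dev len mask net
  dst=$(ip_to_int "$1")
  shift
  for route in "$@"; do
    cidr=${route%% *}
    dev=${route##* }
    len=${cidr#*/}
    mask=$(( 0xFFFFFFFF ^ ((1 << (32 - len)) - 1) ))
    net=$(ip_to_int "${cidr%/*}")
    if [ $(( (dst & mask) == (net & mask) )) -eq 1 ] && [ "$len" -gt "$best_len" ]; then
      best_len=$len
      best_dev=$dev
    fi
  done
  echo "$best_dev"
}

# Network routes from the netdevice-l3-pod output ("default" as 0.0.0.0/0).
netdevice_l3_pod_route() {
  route_dev "$1" "0.0.0.0/0 eth0" "10.0.1.0/24 eth0" "10.0.8.0/21 eth1" \
    "172.16.10.0/28 eth2" "172.16.20.0/28 eth1" "192.168.10.0/24 eth2"
}

echo "l3-apache subnet: $(netdevice_l3_pod_route 172.16.20.2)"
echo "netdevice-apache subnet: $(netdevice_l3_pod_route 172.16.10.2)"
```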

To return to Cloud Shell, exit the pod shell:

exit

12. Create the l3-pod

In the following section, you'll create l3-pod running busybox, known as a "Swiss Army knife" because it supports more than 300 common commands. The Pod is configured to reach the l3-vpc using eth1 only.

Inside Cloud Shell, create the busybox Pod manifest l3-pod.yaml using vi or nano:

apiVersion: v1
kind: Pod
metadata:
  name: l3-pod
  annotations:
    networking.gke.io/default-interface: 'eth0'
    networking.gke.io/interfaces: |
      [
      {"interfaceName":"eth0","network":"default"},
      {"interfaceName":"eth1","network":"l3-network"}
      ]
spec:
  containers:
  - name: l3-pod
    image: busybox
    command: ["sleep", "10m"]
    ports:
    - containerPort: 80
  restartPolicy: Always

Inside Cloud Shell, apply l3-pod.yaml

kubectl apply -f l3-pod.yaml

Validate l3-pod creation

Inside Cloud Shell, validate that l3-pod is running:

kubectl get pods l3-pod

Example:

user@$ kubectl get pods l3-pod
NAME     READY   STATUS    RESTARTS   AGE
l3-pod   1/1     Running   0          52s

Inside Cloud Shell, validate the IP addresses assigned to the Pod interfaces.

kubectl get pods l3-pod -o yaml

In the example below, the networking.gke.io/pod-ips annotation lists the IP address assigned to the Pod interface from the l3-network. The default network IP address, 10.0.2.12, appears under podIPs:

user@$ kubectl get pods l3-pod -o yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{"networking.gke.io/default-interface":"eth0","networking.gke.io/interfaces":"[\n{\"interfaceName\":\"eth0\",\"network\":\"default\"},\n{\"interfaceName\":\"eth1\",\"network\":\"l3-network\"}\n]\n"},"name":"l3-pod","namespace":"default"},"spec":{"containers":[{"command":["sleep","10m"],"image":"busybox","name":"l3-pod","ports":[{"containerPort":80}]}],"restartPolicy":"Always"}}
    networking.gke.io/default-interface: eth0
    networking.gke.io/interfaces: |
      [
      {"interfaceName":"eth0","network":"default"},
      {"interfaceName":"eth1","network":"l3-network"}
      ]
    networking.gke.io/pod-ips: '[{"networkName":"l3-network","ip":"10.0.8.22"}]'
  creationTimestamp: "2023-07-30T23:22:29Z"
  name: l3-pod
  namespace: default
  resourceVersion: "1604447"
  uid: 79a86afd-2a50-433d-9d48-367acb82c1d0
spec:
  containers:
  - command:
    - sleep
    - 10m
    image: busybox
    imagePullPolicy: Always
    name: l3-pod
    ports:
    - containerPort: 80
      protocol: TCP
    resources:
      limits:
        networking.gke.io.networks/l3-network.IP: "1"
      requests:
        networking.gke.io.networks/l3-network.IP: "1"
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-w9d24
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: gke-multinic-gke-multinic-node-pool-135699a1-t66p
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  - effect: NoSchedule
    key: networking.gke.io.networks/l3-network.IP
    operator: Exists
  volumes:
  - name: kube-api-access-w9d24
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T23:22:29Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T23:22:35Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T23:22:35Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2023-07-30T23:22:29Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: containerd://1d5fe2854bba0a0d955c157a58bcfd4e34cecf8837edfd7df2760134f869e966
    image: docker.io/library/busybox:latest
    imageID: docker.io/library/busybox@sha256:3fbc632167424a6d997e74f52b878d7cc478225cffac6bc977eedfe51c7f4e79
    lastState: {}
    name: l3-pod
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2023-07-30T23:22:35Z"
  hostIP: 192.168.0.5
  phase: Running
  podIP: 10.0.2.12
  podIPs:
  - ip: 10.0.2.12
  qosClass: BestEffort
  startTime: "2023-07-30T23:22:29Z"

Validate l3-pod routes

Inside Cloud Shell, open a shell in l3-pod to validate its routes to the l3-vpc:

kubectl exec --stdin --tty l3-pod -- /bin/sh

From the pod shell, validate the pod interfaces:

ifconfig

In the example, eth0 is connected to the default network and eth1 is connected to the l3-network.

/ # ifconfig
eth0      Link encap:Ethernet  HWaddr 22:29:30:09:6B:58
          inet addr:10.0.2.12  Bcast:10.0.2.255  Mask:255.255.255.0
          UP BROADCAST RUNNING MULTICAST  MTU:1460  Metric:1
          RX packets:5 errors:0 dropped:0 overruns:0 frame:0
          TX packets:7 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:446 (446.0 B)  TX bytes:558 (558.0 B)

eth1      Link encap:Ethernet  HWaddr 6E:6D:FC:C3:FF:AF
          inet addr:10.0.8.22  Bcast:0.0.0.0  Mask:255.255.255.255
          UP BROADCAST RUNNING MULTICAST  MTU:1460  Metric:1
          RX packets:5 errors:0 dropped:0 overruns:0 frame:0
          TX packets:6 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:446 (446.0 B)  TX bytes:516 (516.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

From the pod, validate the routes to the l3-vpc (172.16.20.0/28):

ip route

Example:

/ # ip route

default via 10.0.2.1 dev eth0 #primary-vpc

10.0.2.0/24 via 10.0.2.1 dev eth0 src 10.0.2.12

10.0.2.1 dev eth0 scope link src 10.0.2.12

10.0.8.0/21 via 10.0.8.17 dev eth1 #l3-vpc (sec-range-l3-subnet)

10.0.8.17 dev eth1 scope link #pod interface subnet

172.16.20.0/28 via 10.0.8.17 dev eth1 #l3-vpc (l3-apache subnet)

To return to Cloud Shell, exit the pod:

exit
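You can also check the route from Cloud Shell without an interactive session. The sketch below pipes `ip route` output from `kubectl exec` into a small filter. `has_route_via` is a hypothetical helper written for illustration and is not part of kubectl. The sketch assumes the pod name l3-pod and the l3-apache subnet 172.16.20.0/28 shown above.

```shell
# has_route_via PREFIX DEVICE
# Hypothetical helper: reads `ip route` output on stdin and succeeds (exit 0)
# only if a route whose destination is PREFIX leaves through DEVICE.
has_route_via() {
  awk -v p="$1" -v d="$2" '
    # The first field of each route line is the destination prefix.
    $1 == p {
      for (i = 2; i < NF; i++)
        if ($i == "dev" && $(i + 1) == d) found = 1
    }
    # Exit 0 when a matching route was seen, 1 otherwise.
    END { exit !found }'
}

# Example (run from Cloud Shell, not inside the pod):
# kubectl exec l3-pod -- ip route | has_route_via 172.16.20.0/28 eth1 && echo "l3-vpc route via eth1"
```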

13. Firewall updates

Ingress firewall rules are required to allow connectivity from the GKE multi-NIC node pool to the netdevice-vpc and l3-vpc. You will create firewall rules whose source range is the pod network subnet, that is, netdevice-subnet (192.168.10.0/24) and sec-range-l3-subnet (10.0.8.0/21).

For example, the eth1 interface address of the recently created l3-pod, 10.0.8.22 (allocated from sec-range-l3-subnet), is the source IP address when the pod connects to the l3-apache instance in the l3-vpc.
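Because these firewall rules match on source ranges, it can help to confirm that a pod's interface address falls inside the range you plan to allow. The following shell sketch does that arithmetic. `ip_to_int` and `ip_in_cidr` are hypothetical helpers. The sketch assumes dotted-quad IPv4 input without leading zeros and a shell with 64-bit arithmetic and `local` (bash and dash both qualify).

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=.        # split on dots only inside this function
  set -- $1          # $1..$4 now hold the four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# ip_in_cidr IP NETWORK/PREFIXLEN: exit 0 if IP lies inside the range.
ip_in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  # Keep the top $bits bits; relies on 64-bit shell arithmetic, so /0 works too.
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# The l3-pod eth1 address is covered by the l3-vpc rule's source range...
ip_in_cidr 10.0.8.22 10.0.8.0/21 && echo "10.0.8.22 is in 10.0.8.0/21"
# ...but its eth0 address (primary VPC) is not.
ip_in_cidr 10.0.2.12 10.0.8.0/21 || echo "10.0.2.12 is not in 10.0.8.0/21"
```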

netdevice-vpc: Allow from netdevice-subnet to netdevice-apache

Inside Cloud Shell, create the firewall rule in the netdevice-vpc allowing netdevice-subnet access to the netdevice-apache instance.

gcloud compute --project=$projectid firewall-rules create allow-ingress-from-netdevice-network-to-all-vpc-instances --direction=INGRESS --priority=1000 --network=netdevice-vpc --action=ALLOW --rules=all --source-ranges=192.168.10.0/24 --enable-logging

l3-vpc: Allow from sec-range-l3-subnet to l3-apache

Inside Cloud Shell, create the firewall rule in the l3-vpc allowing sec-range-l3-subnet access to the l3-apache instance.

gcloud compute --project=$projectid firewall-rules create allow-ingress-from-l3-network-to-all-vpc-instances --direction=INGRESS --priority=1000 --network=l3-vpc --action=ALLOW --rules=all --source-ranges=10.0.8.0/21 --enable-logging
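Before testing, you could list both rules with their VPC, source ranges, and logging setting to double-check them. This is a sketch: the function name is hypothetical, and it assumes `$projectid` is still set in your Cloud Shell session, as in the commands above.

```shell
# list_multinic_fw_rules: hypothetical helper that shows the two ingress rules
# created above, their VPC, allowed source ranges and whether logging is enabled.
list_multinic_fw_rules() {
  gcloud compute firewall-rules list \
    --project="$projectid" \
    --filter='name~^allow-ingress-from-(l3|netdevice)-network-to-all-vpc-instances$' \
    --format='table(name,network.basename(),sourceRanges.list(),logConfig.enable)'
}

# Usage (from Cloud Shell): list_multinic_fw_rules
```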

14. Validate pod connectivity

In the following section, you will verify connectivity to the Apache instances from the netdevice-l3-pod and l3-pod. To do this, you log in to each pod and run ping and wget -S, which downloads the Apache server's home page. The netdevice-l3-pod is configured with interfaces from both the netdevice-network and the l3-network, so it can reach the Apache servers in both netdevice-vpc and l3-vpc.

In contrast, when performing a wget -S from the l3-pod, connectivity to the Apache server in netdevice-vpc is not possible since the l3-pod is only configured with an interface from the l3-network.

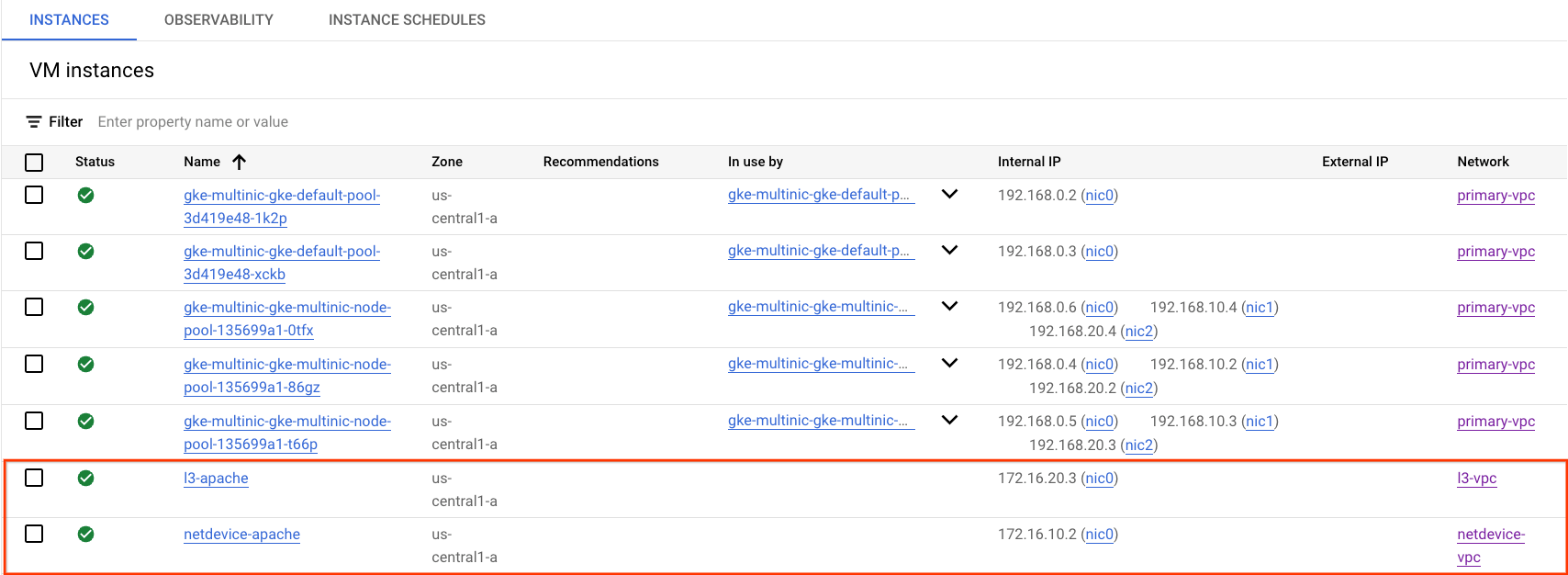

Obtain Apache Server IP Address

From Cloud Console, obtain the IP addresses of the Apache servers by navigating to Compute Engine → VM instances.
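Alternatively, you can read the same internal IP addresses from Cloud Shell. The sketch below has three assumptions. Both VMs are in us-central1-a, the zone used in the clean-up step. Each VM has a single network interface. `$projectid` is set as in the firewall step. `apache_ips` is a hypothetical helper name.

```shell
# apache_ips: hypothetical helper that prints "<vm-name> <internal-ip>"
# for the two Apache servers created earlier in the tutorial.
apache_ips() {
  local vm ip
  for vm in netdevice-apache l3-apache; do
    ip=$(gcloud compute instances describe "$vm" \
      --project="$projectid" --zone=us-central1-a \
      --format='value(networkInterfaces[0].networkIP)') || return 1
    printf '%s %s\n' "$vm" "$ip"
  done
}

# Usage (from Cloud Shell): apache_ips
```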

netdevice-l3-pod to netdevice-apache connectivity test

Inside Cloud Shell, log into netdevice-l3-pod:

kubectl exec --stdin --tty netdevice-l3-pod -- /bin/sh

From the container, perform a ping to netdevice-apache instance based on the IP address obtained from the previous step.

ping <insert-your-ip> -c 4

Example:

/ # ping 172.16.10.2 -c 4

PING 172.16.10.2 (172.16.10.2): 56 data bytes

64 bytes from 172.16.10.2: seq=0 ttl=64 time=1.952 ms

64 bytes from 172.16.10.2: seq=1 ttl=64 time=0.471 ms

64 bytes from 172.16.10.2: seq=2 ttl=64 time=0.446 ms

64 bytes from 172.16.10.2: seq=3 ttl=64 time=0.505 ms

--- 172.16.10.2 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.446/0.843/1.952 ms

/ #

From the container, perform a wget -S to the netdevice-apache instance based on the IP address obtained earlier. A 200 OK response indicates a successful download of the webpage.

wget -S <insert-your-ip>

Example:

/ # wget -S 172.16.10.2

Connecting to 172.16.10.2 (172.16.10.2:80)

HTTP/1.1 200 OK

Date: Mon, 31 Jul 2023 03:12:58 GMT

Server: Apache/2.4.56 (Debian)

Last-Modified: Sat, 29 Jul 2023 00:32:44 GMT

ETag: "2c-6019555f54266"

Accept-Ranges: bytes

Content-Length: 44

Connection: close

Content-Type: text/html

saving to 'index.html'

index.html 100% |********************************| 44 0:00:00 ETA

'index.html' saved

/ #
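As an optional alternative, you can get a pass/fail result from a separate Cloud Shell session (outside the pod) instead of reading the headers by eye. The sketch below extracts the status code from the `wget -S` output. `http_status` is a hypothetical helper. The example assumes that busybox `wget` prints the server response headers to stderr, which is why it redirects `2>&1`. Writing the body to `/dev/null` with `-O` also avoids leaving an index.html file behind in the pod.

```shell
# http_status: hypothetical helper that reads `wget -S` output on stdin and
# prints the code from the first "HTTP/x.y NNN ..." status line
# (prints nothing if no status line is present, e.g. on a timeout).
http_status() {
  awk '$1 ~ /^HTTP\/[0-9.]+$/ { print $2; exit }'
}

# Example (from Cloud Shell; substitute your netdevice-apache IP):
# kubectl exec netdevice-l3-pod -- wget -S -O /dev/null http://172.16.10.2 2>&1 | http_status
```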

netdevice-l3-pod to l3-apache connectivity test

From the container, perform a ping to the l3-apache instance based on the IP address obtained earlier.

ping <insert-your-ip> -c 4

Example:

/ # ping 172.16.20.3 -c 4

PING 172.16.20.3 (172.16.20.3): 56 data bytes

64 bytes from 172.16.20.3: seq=0 ttl=63 time=2.059 ms

64 bytes from 172.16.20.3: seq=1 ttl=63 time=0.533 ms

64 bytes from 172.16.20.3: seq=2 ttl=63 time=0.485 ms

64 bytes from 172.16.20.3: seq=3 ttl=63 time=0.462 ms

--- 172.16.20.3 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.462/0.884/2.059 ms

/ #

From the container, delete the previous index.html file and perform a wget -S to the l3-apache instance based on the IP address obtained earlier. A 200 OK response indicates a successful download of the webpage.

rm index.html

wget -S <insert-your-ip>

Example:

/ # rm index.html

/ # wget -S 172.16.20.3

Connecting to 172.16.20.3 (172.16.20.3:80)

HTTP/1.1 200 OK

Date: Mon, 31 Jul 2023 03:41:32 GMT

Server: Apache/2.4.56 (Debian)

Last-Modified: Mon, 31 Jul 2023 03:24:21 GMT

ETag: "25-601bff76f04b7"

Accept-Ranges: bytes

Content-Length: 37

Connection: close

Content-Type: text/html

saving to 'index.html'

index.html 100% |*******************************************************************************************************| 37 0:00:00 ETA

'index.html' saved

To return to Cloud Shell, exit the pod:

exit
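The ping checks can be scripted from Cloud Shell in a similar way. The sketch below extracts the packet-loss percentage from the busybox ping summary line shown above. `ping_loss` and `pod_ping_loss` are hypothetical helpers. The IP addresses in the usage comment are this tutorial's example addresses, so substitute your own.

```shell
# ping_loss: hypothetical helper that prints N from an
# "... N% packet loss" summary line read on stdin.
ping_loss() {
  sed -n 's/.* \([0-9][0-9]*\)% packet loss.*/\1/p'
}

# pod_ping_loss POD IP: hypothetical helper that pings IP four times
# from inside POD and prints the packet-loss percentage.
pod_ping_loss() {
  kubectl exec "$1" -- ping -c 4 "$2" | ping_loss
}

# Example (from Cloud Shell): both Apache servers should report 0.
# for ip in 172.16.10.2 172.16.20.3; do
#   echo "$ip: $(pod_ping_loss netdevice-l3-pod "$ip")% loss"
# done
```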

l3-pod to netdevice-apache connectivity test

Inside Cloud Shell, log into l3-pod:

kubectl exec --stdin --tty l3-pod -- /bin/sh

From the container, perform a ping to the netdevice-apache instance based on the IP address obtained earlier. Because l3-pod does not have an interface associated with the netdevice-network, the ping will fail.

ping <insert-your-ip> -c 4

Example:

/ # ping 172.16.10.2 -c 4

PING 172.16.10.2 (172.16.10.2): 56 data bytes

--- 172.16.10.2 ping statistics ---

4 packets transmitted, 0 packets received, 100% packet loss

Optional: From the container, perform a wget -S to the netdevice-apache instance based on the IP address obtained earlier. The connection will time out.

wget -S <insert-your-ip>

Example:

/ # wget -S 172.16.10.2

Connecting to 172.16.10.2 (172.16.10.2:80)

wget: can't connect to remote host (172.16.10.2): Connection timed out

l3-pod to l3-apache connectivity test

From the container, perform a ping to the l3-apache instance based on the IP address obtained earlier.

ping <insert-your-ip> -c 4

Example:

/ # ping 172.16.20.3 -c 4

PING 172.16.20.3 (172.16.20.3): 56 data bytes

64 bytes from 172.16.20.3: seq=0 ttl=63 time=1.824 ms

64 bytes from 172.16.20.3: seq=1 ttl=63 time=0.513 ms

64 bytes from 172.16.20.3: seq=2 ttl=63 time=0.482 ms

64 bytes from 172.16.20.3: seq=3 ttl=63 time=0.532 ms

--- 172.16.20.3 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.482/0.837/1.824 ms

/ #

From the container, perform a wget -S to the l3-apache instance based on the IP address obtained earlier. A 200 OK response indicates a successful download of the webpage.

wget -S <insert-your-ip>

Example:

/ # wget -S 172.16.20.3

Connecting to 172.16.20.3 (172.16.20.3:80)

HTTP/1.1 200 OK

Date: Mon, 31 Jul 2023 03:52:08 GMT

Server: Apache/2.4.56 (Debian)

Last-Modified: Mon, 31 Jul 2023 03:24:21 GMT

ETag: "25-601bff76f04b7"

Accept-Ranges: bytes

Content-Length: 37

Connection: close

Content-Type: text/html

saving to 'index.html'

index.html 100% |*******************************************************************************************************| 37 0:00:00 ETA

'index.html' saved

/ #

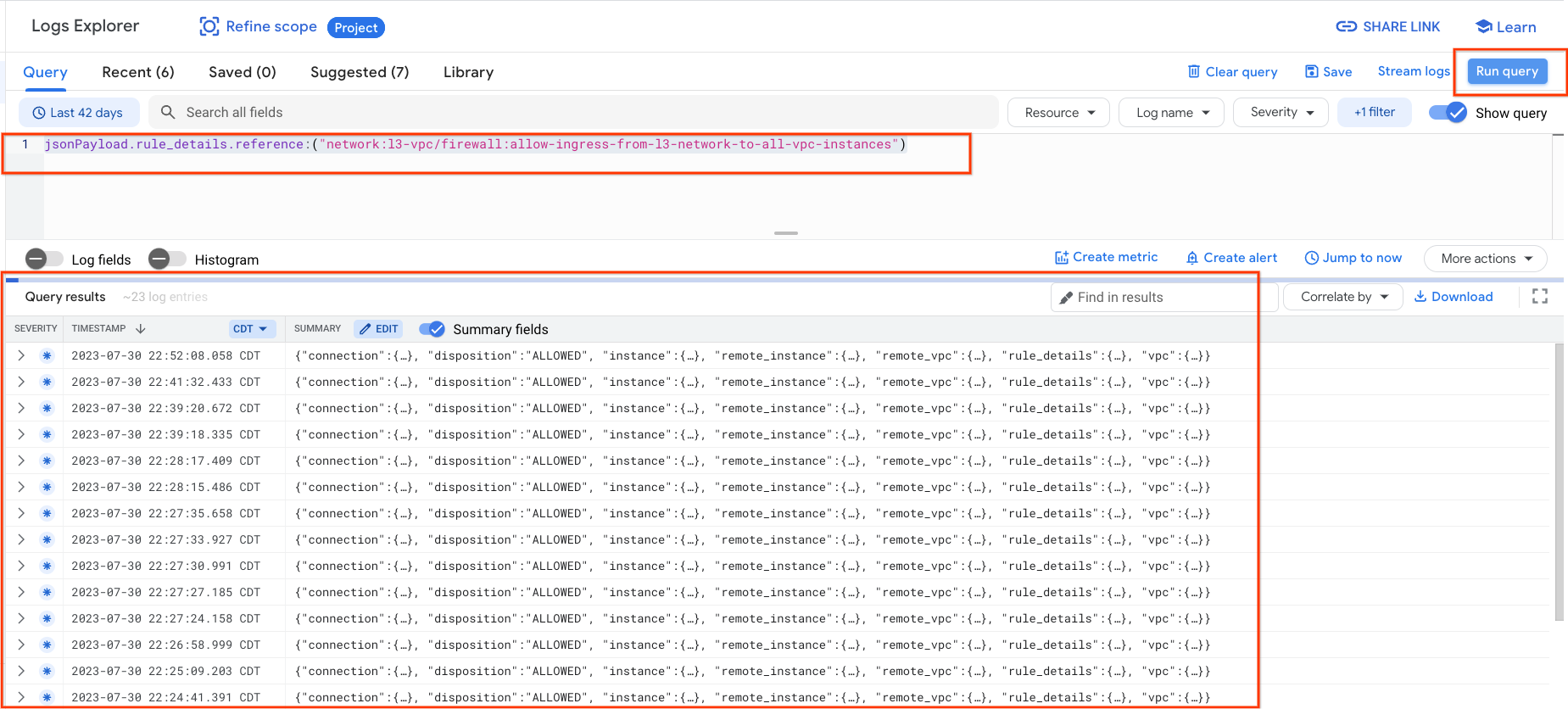
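After you exit the pod, you can rerun the four connectivity tests above from Cloud Shell in one go. The sketch below expresses them as an expected-reachability matrix. It assumes the example Apache addresses: 172.16.10.2 for netdevice-apache and 172.16.20.3 for l3-apache. It also assumes that `kubectl exec` returns a non-zero exit status when the command inside the pod fails, and that busybox ping exits non-zero when no replies arrive. `check_matrix` is a hypothetical helper.

```shell
# check_matrix: hypothetical helper comparing actual ping reachability with
# the results this tutorial expects. Each data line: POD IP EXPECTED(yes|no).
check_matrix() {
  local failures=0 pod ip expected actual
  while read -r pod ip expected; do
    # </dev/null keeps kubectl from consuming the here-document below.
    if kubectl exec "$pod" -- ping -c 2 "$ip" </dev/null >/dev/null 2>&1; then
      actual=yes
    else
      actual=no
    fi
    if [ "$actual" = "$expected" ]; then
      echo "PASS $pod -> $ip ($actual)"
    else
      echo "FAIL $pod -> $ip (expected $expected, got $actual)"
      failures=$((failures + 1))
    fi
  done <<EOF
netdevice-l3-pod 172.16.10.2 yes
netdevice-l3-pod 172.16.20.3 yes
l3-pod 172.16.10.2 no
l3-pod 172.16.20.3 yes
EOF
  [ "$failures" -eq 0 ]
}

# Usage (from Cloud Shell): check_matrix
```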

15. Firewall logs

Firewall Rules Logging lets you audit, verify, and analyze the effects of your firewall rules. For example, you can determine if a firewall rule designed to deny traffic is functioning as intended. Firewall Rules Logging is also useful if you need to determine how many connections are affected by a given firewall rule.

In this tutorial, you enabled firewall logging when creating the ingress firewall rules. Let's take a look at the information obtained from the logs.

From Cloud Console, navigate to Logging → Logs Explorer

Insert the following query, as shown in the screenshot, and select Run query:

jsonPayload.rule_details.reference:("network:l3-vpc/firewall:allow-ingress-from-l3-network-to-all-vpc-instances")

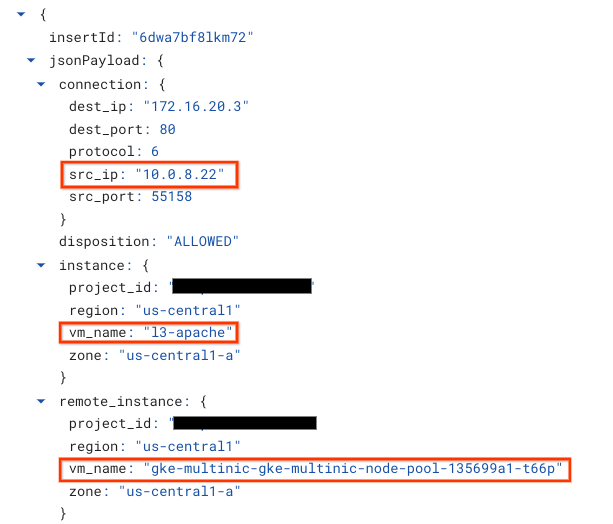

Expanding a log entry shows information that is useful to security administrators. It covers the source and destination IP address, port, and protocol, as well as the node pool name.

To explore further firewall logs, navigate to VPC Network → Firewall → allow-ingress-from-netdevice-network-to-all-vpc-instances then select view in Logs Explorer.
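You can also run the same log query from Cloud Shell with `gcloud logging read`. In this sketch, the helper name and the chosen output columns are assumptions. The `jsonPayload.connection.*` fields follow the Firewall Rules Logging record format. `--freshness=1h` limits the search to the last hour.

```shell
# fw_log_entries RULE VPC: hypothetical helper that shows recent log records
# for one of the tutorial's firewall rules.
fw_log_entries() {
  gcloud logging read \
    "jsonPayload.rule_details.reference:(\"network:$2/firewall:$1\")" \
    --project="$projectid" --freshness=1h --limit=10 \
    --format='table(timestamp,jsonPayload.connection.src_ip,jsonPayload.connection.dest_ip,jsonPayload.connection.dest_port,jsonPayload.connection.protocol)'
}

# Example:
# fw_log_entries allow-ingress-from-l3-network-to-all-vpc-instances l3-vpc
```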

16. Clean up

From Cloud Shell, delete tutorial components.

gcloud compute instances delete l3-apache netdevice-apache --zone=us-central1-a --quiet

gcloud compute routers delete l3-cr netdevice-cr --region=us-central1 --quiet

gcloud container clusters delete multinic-gke --zone=us-central1-a --quiet

gcloud compute firewall-rules delete allow-ingress-from-l3-network-to-all-vpc-instances allow-ingress-from-netdevice-network-to-all-vpc-instances --quiet

gcloud compute networks subnets delete l3-apache l3-subnet netdevice-apache netdevice-subnet primary-node-subnet --region=us-central1 --quiet

gcloud compute networks delete l3-vpc netdevice-vpc primary-vpc --quiet
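To confirm that the clean-up finished, you could check that none of the tutorial VPCs remain. The helper name is hypothetical. Empty output and a zero exit status mean all three networks were deleted.

```shell
# leftover_networks: hypothetical helper that prints any tutorial VPCs that
# still exist and returns non-zero if there are any.
leftover_networks() {
  local remaining
  remaining=$(gcloud compute networks list \
    --filter='name:(l3-vpc netdevice-vpc primary-vpc)' --format='value(name)')
  [ -z "$remaining" ] && return 0
  echo "$remaining"
  return 1
}

# Usage (from Cloud Shell): leftover_networks && echo "all tutorial VPCs deleted"
```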

17. Congratulations

Congratulations, you've successfully created a multi-NIC node pool and created pods running busybox, which you used to validate L3 and Device type connectivity to Apache servers with ping and wget.

You also learned how to leverage firewall logs to inspect source and destination packets between the Pod containers and Apache servers.

Cosmopup thinks tutorials are awesome!!