1. Introduction

Last updated: 2021-09-15

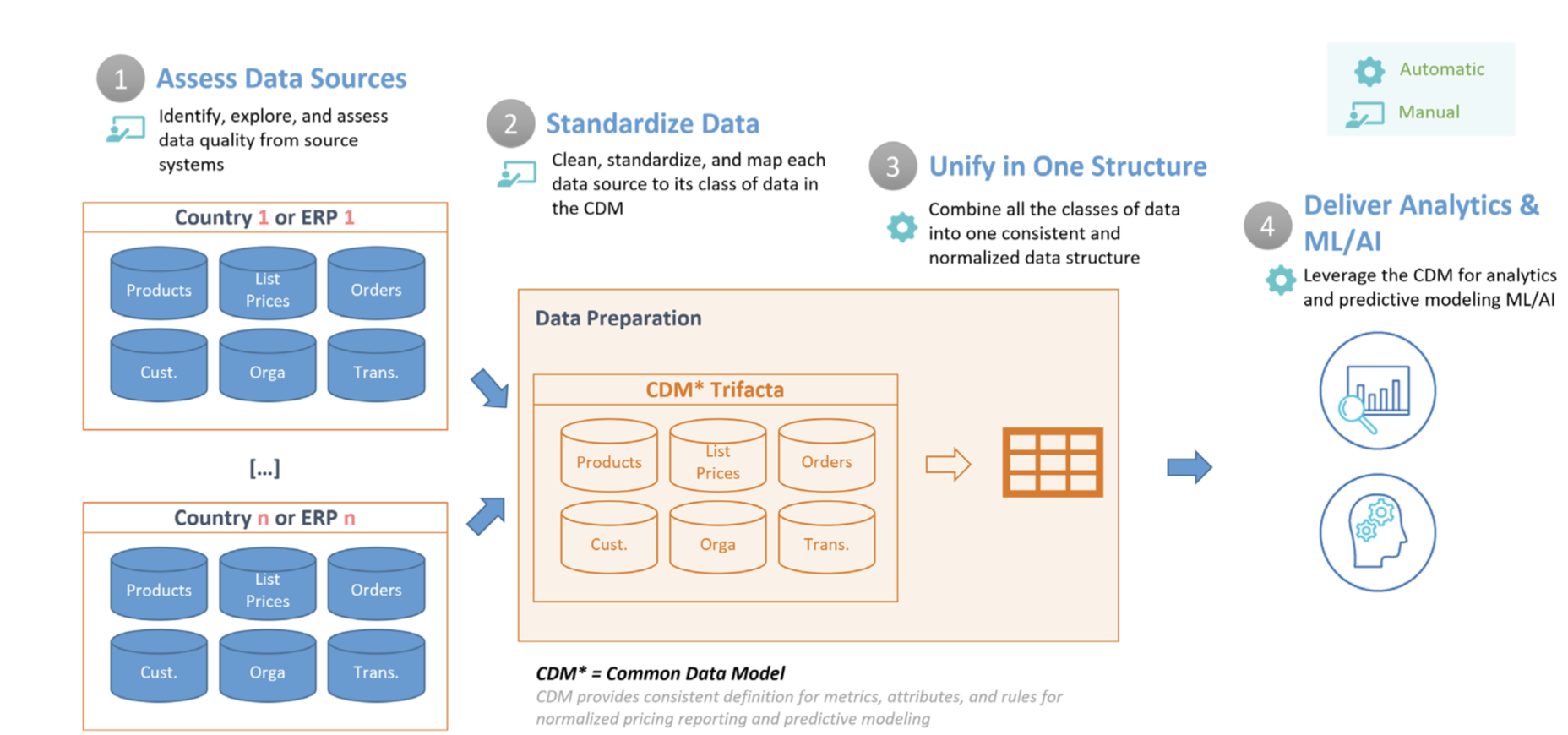

The data needed to drive pricing insights and optimization is disparate by nature (different systems, different local realities, and so on), so it is crucial to build a well-structured, standardized, and clean Common Data Model (CDM) table. It includes the key attributes for pricing optimization, such as transactions, products, prices, and customers. In this document, we walk you through the steps outlined below, giving you a quick start for pricing analytics that you can extend and customize for your own needs. The following diagram outlines the steps covered in this document.

- Assess data sources: First, you must get an inventory of the data sources that will be used to build the CDM. In this step, Dataprep is also used to explore the input data and identify issues in it, for example missing and mismatched values, inconsistent naming conventions, duplicates, data integrity issues, and outliers.

- Standardize data: The issues identified in the previous step are fixed to ensure data accuracy, integrity, consistency, and completeness. This process can involve various transformations in Dataprep, such as date formatting, value standardization, unit conversion, filtering out unnecessary fields and values, and splitting, joining, or deduplicating the source data.

- Unify in one structure: The next step of the pipeline joins each data source into a single, wide table in BigQuery that contains all attributes at the finest level of granularity. This denormalized structure allows efficient analytical queries that don't require joins.

- Deliver analytics and ML/AI: Once the data is clean and formatted for analysis, analysts can explore historical data to understand the impact of prior price changes. In addition, BigQuery ML can be used to build predictive models that estimate future sales. The output of these models can be incorporated into dashboards in Looker to create "what-if scenarios" where business users can analyze what sales might look like with certain price changes.
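The standardization step can be pictured with a small local sketch. The Python below is not Dataprep; it only mirrors some of the transformations named above (date formatting, value standardization, unit conversion, filtering, and deduplication). The source names `erp` and `sheets`, their date formats, the `dozens` unit, the deduplication key, and all field names are hypothetical assumptions for illustration.

```python
from datetime import datetime

# Hypothetical: each source system uses one known date format.
SOURCE_DATE_FORMATS = {"erp": "%Y-%m-%d", "sheets": "%d/%m/%Y"}
# Hypothetical: quantities arrive either in pieces or in dozens.
UNIT_FACTORS = {"pieces": 1, "dozens": 12}


def standardize(row):
    """Return a cleaned copy of one raw row."""
    parsed = datetime.strptime(row["date"], SOURCE_DATE_FORMATS[row["source"]])
    return {
        "sku": row["sku"].strip().upper(),                 # value standardization
        "fiscal_date": parsed.date().isoformat(),          # date formatting
        "pieces": row["qty"] * UNIT_FACTORS[row["unit"]],  # unit conversion
    }


def standardize_all(rows):
    """Drop rows without a SKU, standardize the rest, and remove duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        if not row.get("sku", "").strip():
            continue  # filter out unusable rows
        std = standardize(row)
        key = (std["sku"], std["fiscal_date"], std["pieces"])  # hypothetical dedup key
        if key not in seen:
            seen.add(key)
            cleaned.append(std)
    return cleaned


raw = [
    {"source": "erp", "sku": "A-1", "date": "2021-01-31", "qty": 24, "unit": "pieces"},
    {"source": "sheets", "sku": " a-1", "date": "31/01/2021", "qty": 2, "unit": "dozens"},
    {"source": "sheets", "sku": "", "date": "01/02/2021", "qty": 5, "unit": "pieces"},
]
print(standardize_all(raw))
```

The second row only becomes a visible duplicate of the first after its SKU, date, and unit have been standardized, which is why cleaning runs before deduplication here.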

The following diagram shows the Google Cloud components used to build the pricing optimization analytics pipeline.

What you'll build

Here we'll show you how to design a pricing optimization data warehouse, automate data preparation over time, use machine learning to predict the impact of changes to product prices, and build reports that give your team actionable insights.

What you'll learn

- How to connect Dataprep to the data sources for pricing analytics, which can be stored in relational databases, flat files, Google Sheets, and other supported applications.

- How to build a Dataprep flow that creates a CDM table in your BigQuery data warehouse.

- How to use BigQuery ML to predict future revenue.

- How to build reports in Looker to analyze historical pricing and sales trends and to understand the impact of future price changes.

What you'll need

- A Google Cloud project with billing enabled. Learn how to confirm that billing is enabled for your project.

- BigQuery must be enabled in your project. It is automatically enabled in new projects; otherwise, enable it in an existing project. You can also learn more here about getting started with BigQuery from the Cloud Console.

- Dataprep must also be enabled in your project. Dataprep is enabled from the Google Console, in the left navigation menu under the Big Data section. Follow the sign-up steps to enable it.

- To set up your own Looker dashboards, you need developer access to a Looker instance. Please contact our team here to request a trial, or use our public dashboard to explore the results of the data pipeline on our sample data.

- Experience with Structured Query Language (SQL) and basic knowledge of the following are helpful: Dataprep by Trifacta, BigQuery, and Looker.

2. Create the CDM in BigQuery

In this section, you create the Common Data Model (CDM), which gives you a consolidated view of the information you need to analyze and suggest pricing changes.

- Open the BigQuery console.

- Select the project you want to use to test this reference pattern.

- Use an existing dataset or create a BigQuery dataset. Name the dataset Pricing_CDM.

- Create the table:

```sql
-- Creates the CDM table in the Pricing_CDM dataset created above.
create table `Pricing_CDM.CDM_Pricing`
(
  Fiscal_Date DATETIME,
  Product_ID STRING,
  Client_ID INT64,
  Customer_Hierarchy STRING,
  Division STRING,
  Market STRING,
  Channel STRING,
  Customer_code INT64,
  Customer_Long_Description STRING,
  Key_Account_Manager INT64,
  Key_Account_Manager_Description STRING,
  Structure STRING,
  Invoiced_quantity_in_Pieces FLOAT64,
  Gross_Sales FLOAT64,
  Trade_Budget_Costs FLOAT64,
  Cash_Discounts_and_other_Sales_Deductions INT64,
  Net_Sales FLOAT64,
  Variable_Production_Costs_STD FLOAT64,
  Fixed_Production_Costs_STD FLOAT64,
  Other_Cost_of_Sales INT64,
  Standard_Gross_Margin FLOAT64,
  Transportation_STD FLOAT64,
  Warehouse_STD FLOAT64,
  Gross_Margin_After_Logistics FLOAT64,
  List_Price_Converged FLOAT64
);
```

3. Assess data sources

In this tutorial, you use sample data sources that are stored in Google Sheets and BigQuery:

- The transactions Google Sheet, which contains one row for each transaction. It includes details such as the quantity of each product sold, total gross sales, and the associated costs.

- The product pricing Google Sheet, which contains the price of each product for a given customer for each month.

- The Company_Descriptions BigQuery table, which contains information about individual customers.

The Company_Descriptions BigQuery table can be created with the following statements:

```sql
-- Assumes the Pricing_CDM dataset created earlier.
create table `Pricing_CDM.Company_Descriptions`
(
  Customer_ID INT64,
  Customer_Long_Description STRING
);

insert into `Pricing_CDM.Company_Descriptions` (Customer_ID, Customer_Long_Description)
values
  (15458, 'ENELTEN'),
  (16080, 'NEW DEVICES CORP.'),
  (19913, 'ENELTENGAS'),
  (30108, 'CARTOON NT'),
  (32492, 'Thomas Ed Automobiles');
```

4. Create the flow

In this step, you import a sample Dataprep flow, which you use to transform and unify the example datasets listed in the previous section. A flow represents a pipeline: an object that brings together datasets and recipes, where recipes are used to transform and join the datasets.

- Download the Pricing Optimization Pattern flow package from GitHub, but don't unzip it. This file contains the Pricing Optimization Design Pattern flow used to transform the sample data.

- In Dataprep, click the Flows icon in the left navigation bar. Then, in the Flows view, select Import from the context menu. After you import the flow, you can select it to view and edit it.

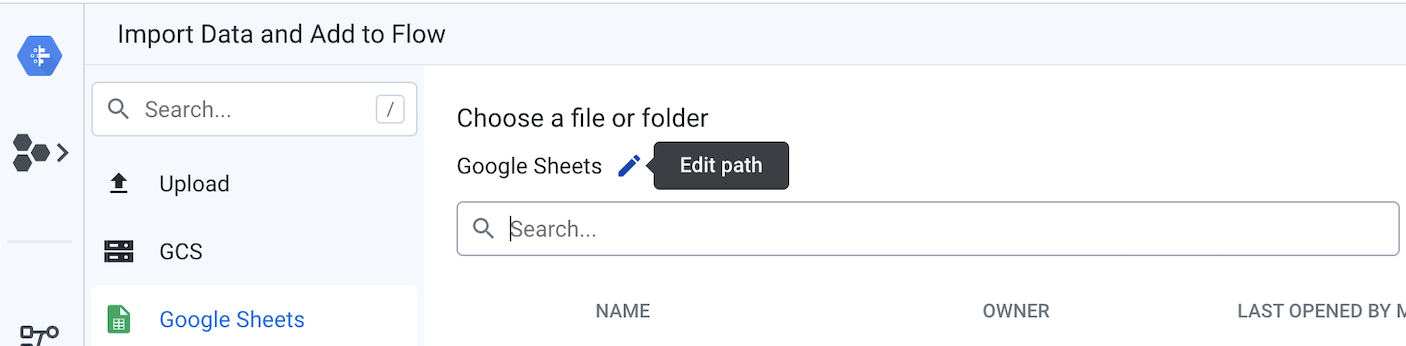

- On the left side of the flow, the product pricing Google Sheet and each of the three transactions Google Sheets must be connected as datasets. To do this, right-click the Google Sheets dataset objects and select Replace. Then click the Import Datasets link. Click the "Edit path" pencil, as shown in the following diagram.

Replace the current value with the path that points to the transactions and product pricing Google Sheets.

When a Google Sheet has several tabs, you can select the tab you want to use from the menu. Click Edit and select the tabs that you want to use as the data source, then click Save, and then click Import & Add to Flow. When you're back in the modal, click Replace. In this flow, each sheet is represented as its own dataset to demonstrate how disparate sources are unified later in a subsequent recipe.

- Define the BigQuery output tables:

In this step, you associate the location of the BigQuery CDM_Pricing output table that is loaded each time you run the Dataprep job.

In the Flow View, click the Schema Mapping output icon, and then in the Details panel, click the Destinations tab. From there, edit both the Manual Destinations output, used for testing, and the Scheduled Destinations output, used when you want to automate your entire flow. Follow these instructions:

- Edit "Manual Destinations": In the Details panel, under the Manual Destinations section, click the Edit button. On the Publishing Settings page, under Publishing Actions, edit the publishing action if one already exists; otherwise, click the Add Action button. From there, navigate the BigQuery datasets to the Pricing_CDM dataset you created in an earlier step and select the CDM_Pricing table. Confirm that Append to this table every run is checked, and then click Add. Click Save Settings.

- Edit "Scheduled Destinations": In the Details panel, under the Scheduled Destinations section, click Edit.

The settings are inherited from the Manual Destinations, so you don't need to make any changes. Click Save Settings.

5. Standardize data

The provided flow unions, formats, and cleans the transaction data, and then joins the result with the company descriptions and the aggregated pricing data for reporting. Here you'll walk through the components of the flow, which can be seen in the image below.

6. Explore the Transactional Data recipe

First, you'll explore what happens in the Transactional Data recipe, which is used to prepare the transaction data. Click the Transaction Data object in the Flow View, and then in the Details panel, click the Edit Recipe button.

The Transformer page opens with the recipe shown in the Details panel. The recipe contains all the transformation steps that are applied to the data. You can navigate the recipe by clicking between the steps to see the state of the data at that particular position in the recipe.

You can also click the More menu for each recipe step and select Go to Selected or Edit to explore how the transformation works.

- Union transactions: The first step of the Transactional Data recipe unions the transactions stored in different sheets, each representing one month.

- Standardize customer descriptions: The next step of the recipe standardizes the customer descriptions. Customer names can refer to the same customer with slight variations, and we want to normalize them to a single name. The recipe demonstrates two possible approaches. First, it uses the Standardization algorithm, which can be configured with different standardization options, such as "Similar strings", where values with characters in common are clustered together, or "Pronunciation", where values that sound alike are clustered together. Alternatively, you can look up the company description in the BigQuery table mentioned above, using the company ID.

You can navigate further in the recipe to discover other techniques applied to clean and format the data: deleting rows, formatting based on patterns, enriching data with lookups, dealing with missing values, and replacing unwanted characters.
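To make the two standardization approaches concrete, here is a rough Python analogue. It is not Dataprep's Standardization algorithm: it assumes a simple greedy clustering based on `difflib.SequenceMatcher` similarity with a hypothetical threshold of 0.9, plus an ID lookup against the sample rows of the `Company_Descriptions` table. The function names are illustrative only.

```python
import re
from difflib import SequenceMatcher

# Sample rows from the Company_Descriptions insert statements above.
COMPANY_DESCRIPTIONS = {
    15458: "ENELTEN",
    16080: "NEW DEVICES CORP.",
    19913: "ENELTENGAS",
    30108: "CARTOON NT",
    32492: "Thomas Ed Automobiles",
}


def normalize(name):
    """Uppercase, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^0-9A-Za-z ]+", "", name).upper()
    return " ".join(cleaned.split())


def cluster_names(names, threshold=0.9):
    """Greedy clustering: map each name to the first earlier name it closely resembles."""
    representatives = []  # (normalized form, canonical original spelling)
    mapping = {}
    for name in names:
        norm = normalize(name)
        for rep_norm, canonical in representatives:
            if SequenceMatcher(None, norm, rep_norm).ratio() >= threshold:
                mapping[name] = canonical
                break
        else:  # no close match: this name starts a new cluster
            representatives.append((norm, name))
            mapping[name] = name
    return mapping


def lookup_description(customer_id, raw_name):
    """Prefer the authoritative description by ID; fall back to the raw name."""
    return COMPANY_DESCRIPTIONS.get(customer_id, raw_name)


names = ["NEW DEVICES CORP.", "New Devices Corp", "ENELTEN",
         "ENELTENGAS", "Thomas Ed Automobiles", "Thomas Ed Automobile"]
print(cluster_names(names))
```

ENELTEN and ENELTENGAS are similar enough that a lower threshold (for example 0.8) would merge them, even though they are different customers (IDs 15458 and 19913). That is one reason the ID lookup is the more reliable option whenever an ID is available.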

7. Explore the Product Pricing Data recipe

Next, you can explore what happens in the Product Pricing Data recipe, which joins the prepared transaction data onto the aggregated pricing data.

Click PRICING OPTIMIZATION DESIGN PATTERN at the top of the page to close the Transformer page and go back to the Flow View. From there, click the Product Pricing Data object and edit the recipe.

- Unpivot monthly price columns: Click the recipe between steps 2 and 3 to see what the data looks like before the Unpivot step. You'll notice that the data contains the transaction value in a distinct column for each month: Jan, Feb, Mar. This format is not convenient for computing aggregations (such as the average transaction) in SQL. The data needs to be unpivoted so that each column becomes a row in the BigQuery table. The recipe uses the unpivot function to transform the 3 columns into one row per month, so that group calculations are easier to apply.

- Calculate the average transaction value by client, product, and date: We want to calculate the average transaction value for each client, product, and date. We can use the Aggregate function and generate a new table (option "Group by as a new table"). In that case, the data is aggregated at the group level, and we lose the details of each individual transaction. Alternatively, we can keep both the details and the aggregated values in the same dataset (option "Group by as new column(s)"), which is very convenient for applying a ratio, such as the % contribution of a product category to overall revenue. You can try this behavior by editing recipe step 7 and selecting either "Group by as a new table" or "Group by as new column(s)" to see the differences.

- Join pricing date: Finally, a join is used to combine multiple datasets into a larger one by adding columns to an initial dataset. In this step, the pricing data is joined with the output of the Transactional Data recipe on 'Pricing Data.Product Code' = 'Transaction Data.SKU' and 'Pricing Data.Price Date' = 'Transaction Data.Fiscal Date'.
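The three Product Pricing Data steps above (unpivot, average per client, product, and date, then join) can be sketched in plain Python to show how the data changes shape. The month-to-date mapping, the field names, and the inner-join semantics are assumptions for illustration; the actual join type is whatever is configured in the Dataprep recipe.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping from month columns to price dates.
MONTH_DATES = {"Jan": "2021-01-01", "Feb": "2021-02-01", "Mar": "2021-03-01"}
GROUP_KEYS = ("client_id", "product_code", "price_date")


def unpivot(rows, month_cols):
    """Turn one column per month into one row per (input row, month)."""
    return [
        {"client_id": r["client_id"], "product_code": r["product_code"],
         "price_date": MONTH_DATES[m], "price": r[m]}
        for r in rows
        for m in month_cols
    ]


def _group_means(rows, keys, value):
    groups = defaultdict(list)
    for r in rows:
        groups[tuple(r[k] for k in keys)].append(r[value])
    return {k: mean(v) for k, v in groups.items()}


def group_as_new_table(rows, keys, value):
    """One row per group; the individual rows are lost."""
    return [{**dict(zip(keys, k)), "avg_" + value: m}
            for k, m in _group_means(rows, keys, value).items()]


def group_as_new_column(rows, keys, value):
    """Keep every row and attach its group's average as a new column."""
    means = _group_means(rows, keys, value)
    return [{**r, "avg_" + value: means[tuple(r[k] for k in keys)]} for r in rows]


def join_pricing(transactions, pricing):
    """Inner join on product_code = sku and price_date = fiscal_date."""
    index = defaultdict(list)
    for p in pricing:
        index[(p["product_code"], p["price_date"])].append(p)
    return [{**t, **p}
            for t in transactions
            for p in index.get((t["sku"], t["fiscal_date"]), [])]


pricing_wide = [
    {"client_id": 15458, "product_code": "P1", "Jan": 10.0, "Feb": 12.0, "Mar": 11.0},
    {"client_id": 15458, "product_code": "P1", "Jan": 14.0, "Feb": 12.0, "Mar": 15.0},
]
long_rows = unpivot(pricing_wide, ["Jan", "Feb", "Mar"])
per_group = group_as_new_table(long_rows, GROUP_KEYS, "price")
transactions = [{"sku": "P1", "fiscal_date": "2021-01-01", "pieces": 100}]
print(join_pricing(transactions, per_group))
```

Joining the "new table" output yields one pricing match per key, whereas joining the "new column(s)" output would return one match per original pricing row. This is why the grouping choice matters before a join.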

To learn more about the transformations you can apply with Dataprep, see the Trifacta Data Wrangling Cheat Sheet.

8. Explore the Schema Mapping recipe

The last recipe, Schema Mapping, ensures that the resulting CDM table matches the schema of the existing BigQuery output table. Here, the RapidTarget functionality is used to reformat the data structure to match the BigQuery table, using fuzzy matching to compare both schemas and apply automatic changes.
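RapidTarget's exact matching logic is not described in this guide, so the following is only an analogy: a Python sketch that maps source column names onto part of the `CDM_Pricing` schema with `difflib.get_close_matches`. The normalization rule, the 0.8 cutoff, and the source column names are assumptions.

```python
import re
from difflib import get_close_matches

# Subset of the CDM_Pricing columns defined in step 2.
TARGET_COLUMNS = ["Fiscal_Date", "Product_ID", "Client_ID",
                  "Invoiced_quantity_in_Pieces", "Net_Sales"]


def _norm(name):
    """Lowercase and replace runs of non-alphanumeric characters with '_'."""
    return re.sub(r"[^0-9a-z]+", "_", name.lower()).strip("_")


def map_columns(source_cols, target_cols, cutoff=0.8):
    """Map each source column to its closest target column, or None if nothing is close."""
    targets = {_norm(t): t for t in target_cols}
    mapping = {}
    for col in source_cols:
        match = get_close_matches(_norm(col), list(targets), n=1, cutoff=cutoff)
        mapping[col] = targets[match[0]] if match else None
    return mapping


source = ["fiscal date", "Product ID", "client_id",
          "invoiced quantity (pieces)", "Net Sales", "Comment"]
print(map_columns(source, TARGET_COLUMNS))
```

Columns that find no close match (here, `Comment`) map to `None`, which in a real flow would be a candidate for dropping or manual review.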

9. Unify in one structure

Now that the sources and destinations are configured and you have explored the steps of the flow, you can run the flow to transform the CDM table and load it into BigQuery.

- Run the Schema Mapping output: In the Flow View, select the Schema Mapping output object and click the "Run" button in the Details panel. Select the "Trifacta Photon" running environment and uncheck Ignore Recipe Errors. Then click the Run button. If the specified BigQuery table exists, Dataprep appends new rows; otherwise, it creates a new table.

- View the job status: Dataprep automatically opens the Run Job page so that you can monitor the job execution. It should take a few minutes to run and load the BigQuery table. When the job is complete, the pricing CDM output is loaded into BigQuery in a clean, structured format, ready for analysis.

10. Deliver analytics and ML/AI

Analytics prerequisites

To run some analytics and a predictive model with interesting results, we created a dataset that is large and relevant enough to surface specific insights. You need to upload this data into your BigQuery dataset before continuing with this guide.

- Download the large dataset from this GitHub repository.

- In the Google Console for BigQuery, navigate to your project and the Pricing_CDM dataset.

- Click the menu and open the dataset. We'll create the table by loading data from a local file.

Click the + Create Table button and define these parameters:

- Create table from: Upload, and select the CDM_Pricing_Large_Table.csv file.

- Schema: check Auto detect (Schema and input parameters).

- Advanced options, Write preference: Overwrite table.

- Click Create Table.

After the table is created and the data is uploaded, you should see the details of the new table in the Google Console for BigQuery, as shown below. With the pricing data in BigQuery, we can ask more comprehensive questions to analyze your pricing data in greater depth.

11. View the effect of price changes

One example of something you might want to analyze is how ordering behavior changed after you previously changed the price of an item.

- First, you create a temporary table that has one row for each time a product's price changed, with information about that product price, such as how many items were ordered at each price and the total net sales associated with that price.

```sql
create temp table price_changes as (
  select
    product_id,
    list_price_converged,
    total_ordered_pieces,
    total_net_sales,
    first_price_date,
    lag(list_price_converged) over(partition by product_id order by first_price_date asc) as previous_list,
    lag(total_ordered_pieces) over(partition by product_id order by first_price_date asc) as previous_total_ordered_pieces,
    lag(total_net_sales) over(partition by product_id order by first_price_date asc) as previous_total_net_sales,
    lag(first_price_date) over(partition by product_id order by first_price_date asc) as previous_first_price_date
  from (
    select
      product_id,
      list_price_converged,
      sum(invoiced_quantity_in_pieces) as total_ordered_pieces,
      sum(net_sales) as total_net_sales,
      min(fiscal_date) as first_price_date
    from `{{my_project}}.{{my_dataset}}.CDM_Pricing` AS cdm_pricing
    group by 1, 2
    order by 1, 2 asc
  )
);

select * from price_changes where previous_list is not null order by product_id, first_price_date desc;
```

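- Next, with the temporary table in place (run this in the same multi-statement query or session, because BigQuery temporary tables exist only for the duration of that script or session), you can calculate the average percentage price change across SKUs:

```sql
select avg((list_price_converged - previous_list) / nullif(previous_list, 0)) * 100 as average_price_change
from price_changes;
```

- Finally, you can analyze what happens after a price change by looking at the relationship between each price change and the total quantity of items ordered: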

```sql
select
  (total_ordered_pieces - previous_total_ordered_pieces) / nullif(previous_total_ordered_pieces, 0)
    as price_changes_percent_ordered_change,
  (list_price_converged - previous_list) / nullif(previous_list, 0)
    as price_changes_percent_price_change
from price_changes;
```
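If you want to check the logic of these three queries without BigQuery, the sketch below reproduces it in Python on a few hypothetical rows. It groups by product and price, orders each product's price periods by first date, takes the previous period (the `LAG ... PARTITION BY product_id` window), and computes percentage changes with a `NULLIF`-style guard. Field names are simplified stand-ins for the `CDM_Pricing` columns, and the data is invented.

```python
from statistics import mean


def price_changes(rows):
    """One row per (product, price) with lagged values, like the price_changes temp table."""
    agg = {}
    for r in rows:
        key = (r["product_id"], r["list_price"])
        a = agg.setdefault(key, {"pieces": 0, "net_sales": 0.0, "first_date": r["fiscal_date"]})
        a["pieces"] += r["pieces"]
        a["net_sales"] += r["net_sales"]
        a["first_date"] = min(a["first_date"], r["fiscal_date"])
    periods = sorted(
        ({"product_id": pid, "list_price": price, **a} for (pid, price), a in agg.items()),
        key=lambda p: (p["product_id"], p["first_date"]),
    )
    out, prev = [], None
    for p in periods:
        same = prev is not None and prev["product_id"] == p["product_id"]  # PARTITION BY product_id
        out.append({**p,
                    "previous_list": prev["list_price"] if same else None,
                    "previous_pieces": prev["pieces"] if same else None})
        prev = p
    return out


def pct_change(new, old):
    """(new - old) / old, or None when old is missing or zero, like NULLIF(old, 0)."""
    if old is None or old == 0:
        return None
    return (new - old) / old


def average_price_change(changes):
    """Average percentage price change over rows that have a previous price (AVG skips NULLs)."""
    values = [pct_change(c["list_price"], c["previous_list"]) for c in changes]
    values = [v for v in values if v is not None]
    return mean(values) * 100 if values else None


history = [
    {"product_id": "P1", "fiscal_date": "2021-01-01", "list_price": 10.0, "pieces": 100, "net_sales": 1000.0},
    {"product_id": "P1", "fiscal_date": "2021-02-01", "list_price": 10.0, "pieces": 80, "net_sales": 800.0},
    {"product_id": "P1", "fiscal_date": "2021-03-01", "list_price": 12.0, "pieces": 70, "net_sales": 840.0},
    {"product_id": "P2", "fiscal_date": "2021-01-01", "list_price": 20.0, "pieces": 50, "net_sales": 1000.0},
    {"product_id": "P2", "fiscal_date": "2021-02-01", "list_price": 18.0, "pieces": 60, "net_sales": 1080.0},
]
changes = price_changes(history)
print(average_price_change(changes))
```

Like the SQL, grouping by (product, price) merges a price that later returns to an earlier value into a single period, so the sketch inherits that simplification.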

12. Build a time series forecasting model

Next, with BigQuery's built-in machine learning capabilities, you can build an ARIMA time series forecasting model to predict the quantity of each item that will be sold.

- First, you create an ARIMA_PLUS model:

```sql
create or replace model `{{my_project}}.{{my_dataset}}.bqml_arima`
options
  (model_type = 'ARIMA_PLUS',
   time_series_timestamp_col = 'fiscal_date',
   time_series_data_col = 'total_quantity',
   time_series_id_col = 'product_id',
   auto_arima = TRUE,
   data_frequency = 'AUTO_FREQUENCY',
   decompose_time_series = TRUE
  ) as
select
  fiscal_date,
  product_id,
  sum(invoiced_quantity_in_pieces) as total_quantity
from
  `{{my_project}}.{{my_dataset}}.CDM_Pricing`
group by 1, 2;
```

- Next, you use the ML.FORECAST function to predict future sales for each product:

```sql
select
  *
from
  ML.FORECAST(model `{{my_project}}.{{my_dataset}}.bqml_arima`,
              struct(30 as horizon, 0.8 as confidence_level));
```

- With these predictions available, you can try to understand what might happen if you raise prices. For example, if you raise the price of every product by 15%, you can estimate the total revenue over the forecast horizon with a query like this:

```sql
select
  sum(forecast_value * list_price * 1.15) as total_revenue  -- 1.15 = 15% price increase
from ml.forecast(model `{{my_project}}.{{my_dataset}}.bqml_arima`,
                 struct(30 as horizon, 0.8 as confidence_level)) forecasts
left join (
  select
    product_id,
    array_agg(list_price_converged order by fiscal_date desc limit 1)[offset(0)] as list_price
  from `{{my_project}}.{{my_dataset}}.CDM_Pricing`
  group by 1
) recent_prices
using (product_id);
```
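The revenue query's logic can be sketched in Python: pick the most recent list price per product, apply the 15% uplift, and sum forecast quantity times price over a left join. The forecast rows below are hypothetical stand-ins for `ML.FORECAST` output (`product_id`, `forecast_value`), not real model results.

```python
def latest_prices(history):
    """Most recent list price per product, like ARRAY_AGG(... ORDER BY fiscal_date DESC LIMIT 1)[OFFSET(0)]."""
    latest = {}
    for r in history:
        seen = latest.get(r["product_id"])
        if seen is None or r["fiscal_date"] > seen[0]:
            latest[r["product_id"]] = (r["fiscal_date"], r["list_price"])
    return {pid: price for pid, (_, price) in latest.items()}


def what_if_revenue(forecasts, prices, price_factor=1.15):
    """SUM(forecast_value * list_price * price_factor) over a LEFT JOIN on product_id."""
    total = 0.0
    for f in forecasts:
        price = prices.get(f["product_id"])
        if price is not None:  # an unmatched row yields NULL, which SUM ignores
            total += f["forecast_value"] * price * price_factor
    return total


history = [
    {"product_id": "P1", "fiscal_date": "2021-01-01", "list_price": 10.0},
    {"product_id": "P1", "fiscal_date": "2021-03-01", "list_price": 12.0},
    {"product_id": "P2", "fiscal_date": "2021-02-01", "list_price": 18.0},
]
forecasts = [  # hypothetical ML.FORECAST output rows
    {"product_id": "P1", "forecast_value": 100.0},
    {"product_id": "P2", "forecast_value": 50.0},
    {"product_id": "P3", "forecast_value": 5.0},
]
print(what_if_revenue(forecasts, latest_prices(history)))
```

This keeps forecast quantities fixed, so it implicitly assumes that demand does not react to the price increase.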

13. Build a report

Now that your denormalized pricing data is centralized in BigQuery and you understand how to run meaningful queries against it, it's time to build a report that lets business users explore and act on this information.

If you already have a Looker instance, you can use the LookML in this GitHub repository to get started analyzing the pricing data for this pattern. Simply create a new Looker project, add the LookML, and replace the connection and table names in each of the view files to match your BigQuery configuration.

In this model, you'll find the derived table (in this view file) that we showed earlier for examining price changes:

```lookml
view: price_changes {
  derived_table: {
    sql: select
        product_id,
        list_price_converged,
        total_ordered_pieces,
        total_net_sales,
        first_price_date,
        lag(list_price_converged) over(partition by product_id order by first_price_date asc) as previous_list,
        lag(total_ordered_pieces) over(partition by product_id order by first_price_date asc) as previous_total_ordered_pieces,
        lag(total_net_sales) over(partition by product_id order by first_price_date asc) as previous_total_net_sales,
        lag(first_price_date) over(partition by product_id order by first_price_date asc) as previous_first_price_date
      from (
        select
          product_id,
          list_price_converged,
          sum(invoiced_quantity_in_pieces) as total_ordered_pieces,
          sum(net_sales) as total_net_sales,
          min(fiscal_date) as first_price_date
        from ${cdm_pricing.SQL_TABLE_NAME} AS cdm_pricing
        group by 1, 2
        order by 1, 2 asc
      )
      ;;
  }
  ...
}
```

You'll also find the BigQuery ML ARIMA model that we showed earlier, used to predict future sales (in this view file):

```lookml
view: arima_model {
  derived_table: {
    persist_for: "24 hours"
    sql_create:
      create or replace model ${SQL_TABLE_NAME}
      options
        (model_type = 'arima_plus',
         time_series_timestamp_col = 'fiscal_date',
         time_series_data_col = 'total_quantity',
         time_series_id_col = 'product_id',
         auto_arima = true,
         data_frequency = 'auto_frequency',
         decompose_time_series = true
        ) as
      select
        fiscal_date,
        product_id,
        sum(invoiced_quantity_in_pieces) as total_quantity
      from
        ${cdm_pricing.SQL_TABLE_NAME}
      group by 1, 2 ;;
  }
  ...
}
```

The LookML also includes a sample dashboard. You can access a demo version of the dashboard here. The first part of the dashboard gives users high-level information about changes in sales, costs, pricing, and margins. As a business user, you might want to create an alert that tells you when sales have dropped below X%, because that might mean you should lower prices.

The next section, shown below, lets users dig into trends around price changes. Here, you can drill into specific products to see the exact list prices and what the prices were changed to, which can help pinpoint specific products for further research.

Finally, at the bottom of the report you have the results of our BigQuery ML model. Using the filters at the top of the Looker dashboard, you can easily enter parameters to simulate different scenarios, similar to the ones described above. For example, you can see what would happen if order volume dropped to 75% of the forecasted value and the prices of all products were raised by 25%, as shown below.

This is driven by parameters in LookML, which are incorporated directly into the measure calculations found here. With this type of reporting, you can find the optimal pricing for all products, or drill into specific products to determine where you should raise prices or apply discounts, and what the resulting gross and net revenue would be.
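The example scenario is simple arithmetic: revenue scales by the volume factor times the price factor, so 75% of forecast volume at prices 25% higher gives 0.75 × 1.25 = 0.9375 of baseline revenue, a 6.25% drop. Here is a minimal Python sketch; the function and parameter names are hypothetical and are not the LookML parameters used in the dashboard.

```python
def scenario_revenue(forecast_qty, list_price, volume_factor=1.0, price_factor=1.0):
    """Revenue if ordered volume is volume_factor x forecast and prices are scaled by price_factor."""
    return forecast_qty * volume_factor * list_price * price_factor


baseline = scenario_revenue(1000, 10.0)
scenario = scenario_revenue(1000, 10.0, volume_factor=0.75, price_factor=1.25)
print(baseline, scenario, scenario / baseline)  # 10000.0 9375.0 0.9375
```

Sweeping both factors over a grid of values is one way to compare scenarios side by side, which is what the dashboard filters let business users do interactively.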

14. Adapt to your pricing systems

Although this tutorial transforms sample data sources, you will face very similar data challenges with the pricing assets that live on your various platforms. Pricing assets come in different export formats (often xls, Sheets, csv, txt, relational databases, and business applications) for summary and detailed results, and each of these can be connected to Dataprep. We recommend that you begin by describing your transformation requirements, similar to the examples provided above. After your specifications are clear and you have identified the types of transformations needed, you can design them with Dataprep.

- Make a copy of the Dataprep flow that you'll customize (click the **...** "More" button on the right of the flow and select the Duplicate option), or start from scratch with a new Dataprep flow.

- Connect to your own pricing datasets. File formats such as Excel, CSV, Google Sheets, and JSON are natively supported by Dataprep. You can also connect to other systems using Dataprep connectors.

- Dispatch your data assets into the transformation categories you identified, and create one recipe for each category. Take inspiration from the flow provided in this design pattern to transform the data and write your own recipes. If you get stuck, don't worry: ask for help in the chat dialog at the bottom left of the Dataprep screen.

- Connect your recipe to your BigQuery instance. You don't need to worry about creating the tables manually in BigQuery; Dataprep takes care of that for you automatically. When you add the output to your flow, we suggest selecting a Manual Destination and dropping the table on every run. Test each recipe individually until it delivers the expected results. After your testing is done, switch the output to append to the table on every run, to avoid deleting prior data.

- You can optionally associate the flow with a schedule. This is useful if your process needs to run continuously. Depending on the freshness you need, you can define a schedule to load the data every day or every hour. If you decide to run the flow on a schedule, you'll need to add a Scheduled Destination output to the flow for each recipe.

Modify the BigQuery machine learning model

This tutorial provides a sample ARIMA model. However, there are additional parameters you can control while developing the model to make sure it fits your data as well as possible. You can see more details in the example in our documentation here. You can also use the BigQuery ML.ARIMA_EVALUATE, ML.ARIMA_COEFFICIENTS, and ML.EXPLAIN_FORECAST functions to get more details about your model and make optimization decisions.

Edit the Looker report

After importing the LookML into your own project as described above, you can edit it directly to add fields, modify calculations or user-entered parameters, and change the visualizations on the dashboards to suit your business needs. You can find details about developing in LookML and visualizing data in Looker here.

15. Congratulations

You now know the key steps required to optimize the pricing of your retail products!

What's next?

Explore other Smart Analytics reference patterns

Further reading

- Read the blog here

- Learn more about Dataprep here

- Learn more about BigQuery Machine Learning here

- Learn more about Looker here